With PixelSync and InstantAccess, are Intel well-armed for a three-way graphics war?

Processor giant Intel has been an unusually active presence at this year's GDC, glad-handing game developers, announcing new graphics technologies and convincing top dev houses to use their proprietary gaming advances. You might say they're acting just like AMD or Nvidia. In fact, when Intel's Haswell CPU hits shelves this summer, they'll be taking those two companies' dominance of the graphics market head-on.

I spoke to their wonderfully titled European Gaming Enablement Manager, Richard Huddy, last week ahead of the announcement of Intel's two latest graphics technologies, InstantAccess and PixelSync: advances that you won't see working with any other GPU tech.

The technologies don't sound particularly exciting when you describe them as (brace yourselves) new API extensions for the DirectX framework, but they could end up being very effective indeed. And we'll be seeing them soon: the new extensions have already been made available to Codemasters for GRID 2 and Creative Assembly for Rome 2.

InstantAccess

The first of the extensions, InstantAccess, is intended to help the CPU and the GPU to share memory. That's going to be particularly important with the way the coming generation of consoles are likely to be built, working with similar ideas to the unified memory of the PlayStation 4 and the AMD-sponsored Heterogeneous System Architecture (HSA) initiative.

With InstantAccess, the CPU and GPU will be able to access and address each other's memory without having to go through the DirectX API itself. Until now, even when the CPU and GPU have shared the same silicon rather than sitting on a discrete card, DirectX has tended to get in the way.
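Intel hasn't published how InstantAccess works under the hood, so the following is only a toy analogy, not Intel's API. It contrasts a copy-based hand-off, where data is duplicated as if it had to cross a bus to a discrete card, with a shared hand-off, where both sides look at the same bytes. The function names `upload_by_copy` and `share_directly` are hypothetical and chosen purely for illustration.

```python
# Toy analogy only: this is NOT Intel's InstantAccess API, which the article
# does not describe. It contrasts copying data to a "GPU" with sharing it.

def upload_by_copy(cpu_buffer: bytearray) -> bytes:
    # Discrete-card style: the data is duplicated into a separate buffer,
    # as if it had been sent across a bus.
    return bytes(cpu_buffer)

def share_directly(cpu_buffer: bytearray) -> memoryview:
    # Shared-memory style: the "GPU" gets a view onto the very same bytes,
    # so no copy is made.
    return memoryview(cpu_buffer)

cpu_data = bytearray(8)           # eight zero bytes owned by the "CPU"
gpu_copy = upload_by_copy(cpu_data)
gpu_view = share_directly(cpu_data)

cpu_data[0] = 255                 # the CPU updates the data after the hand-off
print(gpu_copy[0])                # the copy is stale until it is uploaded again
print(gpu_view[0])                # the shared view sees the update immediately
```

The copy prints 0 and the shared view prints 255: the copying path has to repeat its transfer every time the data changes, which is the kind of overhead a shared-memory design avoids.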

“DirectX was built on the idea that the GPU will probably be a discrete card,” says Huddy. “It will be on the far end of a rather slow bus. That sort of assumption doesn't play particularly well for us, it just disadvantages the platform.”


The benefit in-game is not about raising the average frame rate when you're playing on Haswell hardware - Huddy concedes that you'll only see an improvement of around one or two per cent - but raising the minimum frame rate and eliminating those jarring moments when performance drops sharply.
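The difference between average and minimum frame rate is easy to see from raw frame times. This sketch (the `fps_stats` helper and the frame times are hypothetical, invented purely to illustrate the arithmetic, and are not Intel's measurements) shows how a single slow frame barely moves the average but drags the minimum right down.

```python
def fps_stats(frame_times_ms):
    """Return (average FPS, minimum FPS) for a list of frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    total_ms = sum(frame_times_ms)
    average_fps = 1000.0 * len(frame_times_ms) / total_ms
    minimum_fps = 1000.0 / max(frame_times_ms)  # the slowest frame sets the minimum
    return average_fps, minimum_fps

# Hypothetical runs of 100 frames: one with a single 50 ms hitch, one without.
hitchy = [20.0] * 99 + [50.0]
smooth = [20.0] * 100

for name, run in [("hitchy", hitchy), ("smooth", smooth)]:
    avg, low = fps_stats(run)
    print(f"{name}: average {avg:.1f} FPS, minimum {low:.1f} FPS")
```

Removing the one hitch lifts the average by only about 1.5 per cent (49.3 to 50.0 FPS) but raises the minimum from 20 to 50 FPS, which is the sort of gain Huddy is describing.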

PixelSync

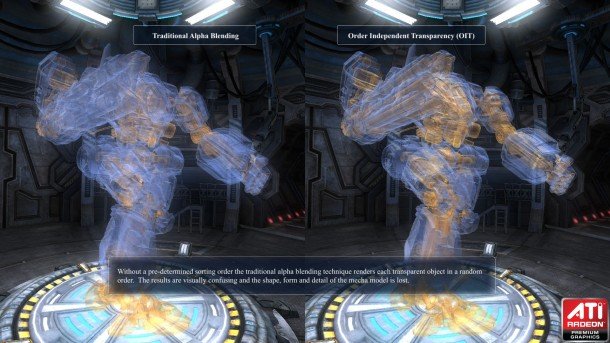

The aim here is to introduce Order-Independent Transparency (OIT) to real-time rendering in-game. It's been called out by graphics coders like DICE's Johan Andersson as one of the greatest problems in getting cinematic-quality rendering in modern games, and of the two API extensions, this is the one that most clearly sets Intel apart from its competitors. Although OIT has been possible since DX11 first shipped, today's GPUs are not built to render realistic transparency without surrendering a huge amount of gaming performance.
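Why does draw order matter for transparency at all? Standard alpha blending composites each surface "over" whatever is already in the pixel, so two panes of tinted glass give different results depending on which is drawn first. The sketch below is a generic, A-buffer-style OIT (collect every fragment for a pixel, sort by depth, then blend far to near); it is not Intel's PixelSync implementation, which the article doesn't detail, and all names in it are hypothetical. Storing and sorting a variable-length fragment list for every pixel is one reason this approach is costly on a GPU.

```python
from typing import List, Tuple

Colour = Tuple[float, ...]
# A fragment is (depth, colour, alpha); a smaller depth is closer to the camera.
Fragment = Tuple[float, Colour, float]

def blend_over(dst: Colour, src: Colour, alpha: float) -> Colour:
    """Standard 'over' blend: draw src with the given alpha on top of dst."""
    return tuple(alpha * s + (1.0 - alpha) * d for s, d in zip(src, dst))

def composite_in_submission_order(background: Colour, fragments: List[Fragment]) -> Colour:
    # Plain alpha blending: fragments are blended in the order they arrive,
    # so the final colour depends on draw order.
    colour = background
    for _depth, frag_colour, alpha in fragments:
        colour = blend_over(colour, frag_colour, alpha)
    return colour

def composite_order_independent(background: Colour, fragments: List[Fragment]) -> Colour:
    # OIT: gather all fragments for the pixel, sort them far-to-near, then blend.
    # Any submission order produces the same result.
    colour = background
    for _depth, frag_colour, alpha in sorted(fragments, key=lambda f: f[0], reverse=True):
        colour = blend_over(colour, frag_colour, alpha)
    return colour

black = (0.0, 0.0, 0.0)
red_glass = (1.0, (1.0, 0.0, 0.0), 0.5)   # nearer pane
blue_glass = (2.0, (0.0, 0.0, 1.0), 0.5)  # farther pane

print(composite_in_submission_order(black, [red_glass, blue_glass]))
print(composite_in_submission_order(black, [blue_glass, red_glass]))
print(composite_order_independent(black, [red_glass, blue_glass]))
```

The two submission orders give (0.25, 0.0, 0.5) and (0.5, 0.0, 0.25), while the sorted version always gives the correct (0.5, 0.0, 0.25), with the nearer red pane dominating.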

Attempting such a thing on today's graphics architectures, Huddy claims, would result in a seventy per cent performance hit. Haswell can do it with a mere ten per cent frame rate drop.

That sort of transparency is vital if you want realistic-looking glass, water, smoke, foliage, hair - y'know, things that crop up in games quite a lot. As Huddy points out, though, they're currently recreated using “cheap and nasty approximations.” Dry your eyes, AMD, I'm sure he wasn't talking about TressFX.

Codemasters' GRID 2 uses PixelSync's version of OIT when it detects a Haswell GPU. With the game currently running on that hardware at around 40-50FPS, turning on OIT only costs a further 4-5FPS.
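As a quick sanity check, the GRID 2 figures can be set against Huddy's ten per cent claim. This sketch pairs the low and high ends of the quoted ranges (40FPS losing 4, 50FPS losing 5); that pairing is an assumption, since the article only gives ranges, and mixing the ends would put the cost anywhere from 8 to 12.5 per cent. The helper names are hypothetical.

```python
def percent_drop(base_fps: float, fps_lost: float) -> float:
    """Frame-rate cost of an effect as a percentage of the starting frame rate."""
    return 100.0 * fps_lost / base_fps

def added_frame_time_ms(base_fps: float, fps_lost: float) -> float:
    """Extra milliseconds spent on each frame when the frame rate falls by fps_lost."""
    return 1000.0 / (base_fps - fps_lost) - 1000.0 / base_fps

# Assumed pairing of the quoted ranges: 40FPS loses 4FPS, 50FPS loses 5FPS.
for base, lost in [(40.0, 4.0), (50.0, 5.0)]:
    print(f"{base:.0f}FPS -> {base - lost:.0f}FPS: "
          f"{percent_drop(base, lost):.0f}% drop, "
          f"+{added_frame_time_ms(base, lost):.2f} ms per frame")
```

Both pairings work out to a 10 per cent drop, costing roughly 2.2 to 2.8 ms of extra rendering time per frame, which lines up with the figure Huddy quotes for Haswell.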

In Rome 2 you can actually use the system-melting original DX11 OIT tech if you're using a discrete GPU, but Huddy expects that option to be removed before launch.

“If you put a high-end discrete card up against Intel graphics, we win,” he says. “If you turn on OIT we win because we can do this with a tiny performance loss; we know how to synchronise pixels. But there's just no way to do it in a discrete card. It's going to be a bit embarrassing having a $400 graphics card struggling to keep up with Haswell.”

You may not yet be convinced by the above screenshots of GRID 2, but the benefit of a proper transparency effect interacting with the lighting model will likely be most palpable in motion.

Fighting talk

I'm pretty sure Intel is looking to release serious gaming laptops that run a Haswell chip for both processing and graphics, probably with the touted beefed-up GT3E version of its graphics architecture. There's a chance we'll see that top SKU in the desktop range too, though that seems unlikely; either way, its mobile performance could worry the discrete mobile divisions of both Nvidia and AMD.

Huddy's been looking at the Steam Hardware Survey with interest, where Intel's HD 3000 stands as the graphics hardware most-used by subscribers. With Haswell, Huddy says, Intel will now be “taking another significant step towards making our graphics the graphics of choice for playing games.”

That's some tough talk. When I ask if that means Intel is expecting Haswell to create a three-way graphics fight, he agrees.

“And we are coming well-armed to this particular fight,” he adds.

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.