Why VR games won’t care which headset you’re using

At Oculus Connect one year ago, the Oculus Rift was, essentially, the only game in town. Oculus was leading the charge on VR, and while their sale to Facebook soured some of the fanbase, they still largely had the faith and support of the community behind them. By early 2015, things were very different. Razer was creating the Open Source VR ecosystem “to abstract the complexities of [VR] game development,” telling me “if we don’t build this, the ecosystem will never survive.” Then Valve’s SteamVR joined the race with hardware that surpassed the best of what Oculus had to offer, with the promise of great touch controllers as part of the package.

At this point, I was getting worried. How were developers supposed to support all these different headsets and controllers? Would multi-platform VR development be overwhelming for small teams new to VR? Would the end result be a messy platform war, burned out displays and trampled controller husks littering the battlefield, where the majority of games work on Oculus or SteamVR but not on both? With so much happening around VR so fast, these seemed like legitimate concerns to me. But after spending a couple of days at Oculus Connect 2 and talking to developers, I’m not so worried anymore.

VR development is going to be just fine.

All of the devs I talked to were predictably bullish on working with Oculus’ hardware. But I also tried to talk to some of them about cross-platform development, particularly with SteamVR. What I heard was good news.

“We want people to focus on making great content, great interactions, and deploy it anywhere. It just works out of the box,” said Nick Whiting, Epic Games’ lead VR engineer. Whiting and I discussed the new Bullet Train demo, but we also talked about how Epic’s been tailoring Unreal Engine 4 to suit the needs of VR developers. “For instance, [our previous demo] Showdown, we’ve shown it on the Vive, we’ve shown it on the Oculus, we’ve shown it on the Morpheus with no content modifications. It works on all of them out of the box with no content modifications, other than for the Vive, for the standing experience it assumes zero is on the ground, and on Oculus it assumes it’s in the head position. So you basically just change one number to set your camera position and everything else works. Very little modification to get it to work on different systems.”
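The “one number” Whiting mentions can be pictured as a per-headset camera rig height. The sketch below is illustrative Python rather than Unreal Engine code: the `Headset` type, the origin labels, and the 1.7 m standing eye height are all assumptions made up for this example.

```python
from dataclasses import dataclass

# Hypothetical labels for where a headset's tracking space puts zero.
FLOOR_ORIGIN = "floor"  # zero is on the ground (Vive standing, per the quote)
EYE_ORIGIN = "eye"      # zero is at the head position (Oculus, per the quote)


@dataclass(frozen=True)
class Headset:
    name: str
    origin: str


def camera_rig_height(headset: Headset, eye_height_m: float = 1.7) -> float:
    """Return how far above the virtual floor to place the camera rig."""
    if headset.origin == FLOOR_ORIGIN:
        # The tracked head height already includes the player's real height.
        return 0.0
    # The tracked head height starts near zero, so lift the rig to eye level.
    return eye_height_m


def eye_height_in_world(headset: Headset, tracked_height_m: float) -> float:
    """Combine the rig offset with the height the headset reports."""
    return camera_rig_height(headset) + tracked_height_m


vive = Headset("Vive", FLOOR_ORIGIN)
rift = Headset("Rift", EYE_ORIGIN)

# A standing player reads roughly 1.7 m on a floor-origin system
# and roughly 0 m on an eye-origin system.
print(eye_height_in_world(vive, 1.7))
print(eye_height_in_world(rift, 0.0))
```

Both calls put the player's eyes at the same height in the virtual world, which is the sense in which only the rig offset differs between platforms.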

That’s a promising degree of cross-platform compatibility already, and the hardware is still months out from release.

Whiting gave me a more detailed example of how supporting different motion controllers—SteamVR’s, the PlayStation Move, and Oculus Touch—works in UE4.

“[For] the control input, the actual buttons on the device, we have an abstraction called the motion controller abstraction. It’s like with a gamepad. With gamepads you have the left thumbstick, right thumbstick, set of buttons. We did the same thing with motion controllers. They all have some sort of touchpad or joystick on top, a grip button, a trigger button. We have an abstraction that says ‘when the left motion controller trigger is pulled, when the right motion controller trigger is pulled…’ so that it doesn’t matter what you have hooked up to it, it’ll work out of the box. For the actual motion controller tracking, there’s a little component that you attach to your actor in there that tracks around, you can say ‘I want to track the left hand, I want to track the right hand.’ If you have a Touch or Vive or Move in there, it all works the same.”
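To make the gamepad comparison concrete, here is a minimal Python sketch of that kind of abstraction. It is not UE4's actual API: the class names, the device keys, and every raw control name in the mapping table are hypothetical stand-ins chosen for illustration.

```python
from enum import Enum
from typing import Callable, Dict, List, Tuple


class Hand(Enum):
    LEFT = "left"
    RIGHT = "right"


class Control(Enum):
    TRIGGER = "trigger"
    GRIP = "grip"
    THUMB = "thumb"  # touchpad or joystick, whichever the device has


# Hypothetical raw control names per device; real SDKs use their own identifiers.
RAW_TO_CONTROL: Dict[str, Dict[str, Control]] = {
    "touch": {"index_trigger": Control.TRIGGER, "hand_trigger": Control.GRIP,
              "thumbstick": Control.THUMB},
    "vive": {"trigger": Control.TRIGGER, "grip": Control.GRIP,
             "trackpad": Control.THUMB},
    "move": {"t_button": Control.TRIGGER, "move_button": Control.GRIP},
}

Handler = Callable[[], None]


class MotionControllerInput:
    """Routes device-specific presses to device-agnostic bindings."""

    def __init__(self) -> None:
        self._handlers: Dict[Tuple[Hand, Control], List[Handler]] = {}

    def bind(self, hand: Hand, control: Control, handler: Handler) -> None:
        """Game code binds to an abstract control, never to a device."""
        self._handlers.setdefault((hand, control), []).append(handler)

    def raw_press(self, device: str, hand: Hand, raw_name: str) -> None:
        """Translate a raw press into its abstract control and dispatch it."""
        control = RAW_TO_CONTROL[device][raw_name]
        for handler in self._handlers.get((hand, control), []):
            handler()


shots: List[str] = []
inputs = MotionControllerInput()
inputs.bind(Hand.RIGHT, Control.TRIGGER, lambda: shots.append("fire"))

# The same binding fires no matter which controller is plugged in.
inputs.raw_press("touch", Hand.RIGHT, "index_trigger")
inputs.raw_press("vive", Hand.RIGHT, "trigger")
inputs.raw_press("move", Hand.RIGHT, "t_button")
inputs.raw_press("vive", Hand.LEFT, "trigger")  # nothing bound to the left hand
print(shots)
```

The game logic only ever mentions "right trigger"; supporting another controller in this sketch means adding one more row to the mapping table.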

But what about accounting for the differences in performance between those controllers? If one tracks more quickly and accurately than another, does that affect its implementation in the engine? Is that something developers will have to account for? According to Whiting, prediction solves that problem.

“[The latency of the tracking] makes a huge difference, but fortunately from the SDK side of it, we can handle prediction mostly equivalently on all the different things, so they behave the same,” he said. “We do what we call a late update. So when we read the input, we read it twice per frame. Once at the very beginning of the frame, so when we do all the gameplay stuff like when you pull the trigger, what direction is it looking, and then right before we render everything we also update the rendering position of all the stuff. So if you have a gun in your hand we’ll update it twice a frame, once before the gameplay interaction and once before the rendering. So it’s moving much more smoothly in the visual field but we’re simulating it.

“There’s more latency on the interaction, but the visual stuff is really what causes the feeling of presence. As long as the visual updating is really really crisp, that makes you feel like you really have something in your hand and presence in the world. Everyone’s a little bit different, so we want to handle the technical details for that so they don’t have to worry about it. They just have this motion controller component, and we’ve handled the latency, adding the late updates and doing all the rendering updates on the backend so it just works out of the box.”
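The late update Whiting describes, reading the controller once for gameplay and again just before rendering, can be sketched in a few lines of Python. This is a toy model, not engine code: `FakeTracker`, `run_frame`, the constant hand speed, and the 10 ms simulation time are all assumptions invented for the example.

```python
from typing import Callable, List, Tuple


class FakeTracker:
    """Stand-in for a tracked hand moving along one axis at constant speed."""

    def __init__(self, speed_m_per_s: float) -> None:
        self.speed = speed_m_per_s

    def position_at(self, time_s: float) -> float:
        return self.speed * time_s


def run_frame(
    tracker: FakeTracker,
    frame_start_s: float,
    sim_duration_s: float,
    gameplay: Callable[[float], None],
) -> Tuple[float, float]:
    """Run one frame and return (gameplay position, render position)."""
    # First read: gameplay logic (aiming, trigger pulls) uses this sample.
    gameplay_pos = tracker.position_at(frame_start_s)
    gameplay(gameplay_pos)

    # Time passes while the frame is simulated. Second read, the late update:
    # only the rendered position is refreshed, gameplay is not re-run.
    render_pos = tracker.position_at(frame_start_s + sim_duration_s)
    return gameplay_pos, render_pos


tracker = FakeTracker(speed_m_per_s=2.0)
seen_by_gameplay: List[float] = []
gameplay_pos, render_pos = run_frame(
    tracker, frame_start_s=1.0, sim_duration_s=0.01, gameplay=seen_by_gameplay.append
)
print(gameplay_pos, render_pos)
```

In this toy frame the rendered hand sits about 2 cm ahead of the position gameplay used, which mirrors the trade-off in the quote: slightly staler interaction, fresher visuals.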

While Unreal Engine 3 earned a reputation as a high-end engine for AAA studios, Epic’s made serious attempts to simplify UE4 and make it more approachable (and far more affordable) for smaller teams. They’ve had to, because indie darling Unity has done so much to democratize game development.

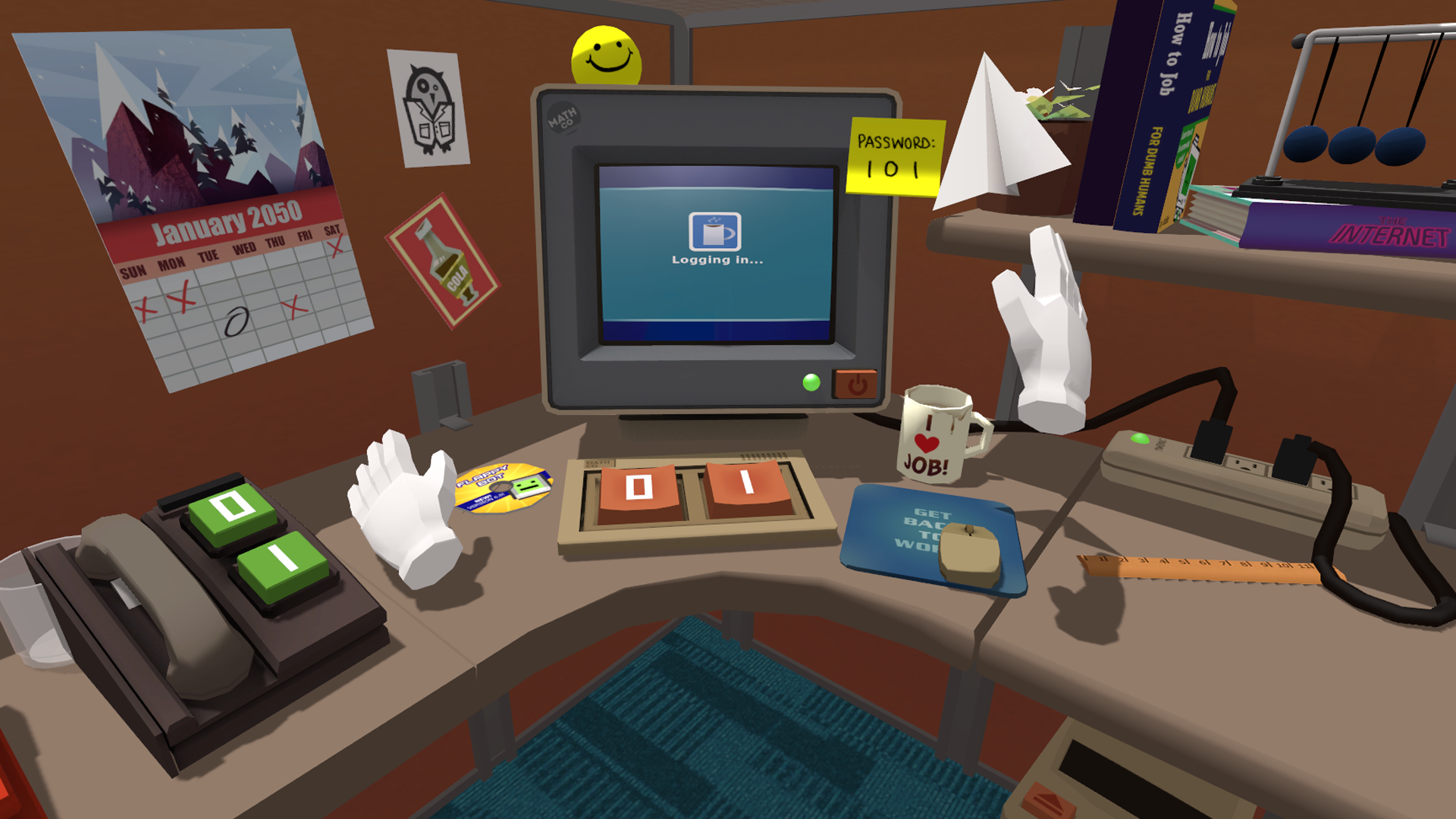

Unity, like Unreal, has built-in VR support, but that doesn’t mean much by itself; the crucial element is how Unity handles developing for multiple VR platforms. “Fantastic,” according to the developers at Owlchemy Labs, who’ve been working on Job Simulator for SteamVR and the Oculus Rift.

“We have to do a little bit of tweaking. Everything’s really early. But it’s been fantastic,” said Owlchemy’s Devin Reimer.

“We’d be out of business if we weren’t using an established engine like Unity or Unreal. If we were trying to write our own stuff we’d be fucked,” added Owlchemy’s Alex Schwartz. “Unity’s been really helpful, and they know VR is a big play, so they’re bringing us into their loop on betas and alphas. We get to try early stuff and give feedback….So yeah, integrating multiple SDKs into the project is great. They’re also going with this concept of ‘VR default multiplatform,’ where built into Unity would be out of the box support for all these platforms.”

VR development will still suffer some growing pains over the next couple years, and it’s true that the volume of competing SDKs and hardware platforms is messy and a bit confusing. It’s still unclear how exactly Razer’s OSVR SDK, Valve’s OpenVR SDK, the Oculus SDK, and every hardware platform will live together. There’s also Nvidia’s GameWorksVR and AMD’s LiquidVR, which both strive to improve VR performance.

There will be some confusion and some fragmentation. But it seems like the major engines, including CryEngine, will enable developers to build multi-platform games with relative ease. And that means that, as gamers, we won’t have to care too much about which headset we’re using. Plenty of games will just work.

And Unreal Engine 4, like Unity, is now a viable option for small teams, who will likely be creating the most interesting VR experiences as the industry feels out what works and what doesn’t. Nick Whiting pointed out that UE4’s Blueprints visual scripting, which he works on in addition to VR, is meant to give designers the tools to create their games without doing any coding.

“One of the things we focus on is how do we make it easier for small teams to make much more detailed worlds. We want Blueprints to be a visual scripting language that people can populate the world with interactables and procedural layout tools and whatnot, completely without the help of a programmer,” Whiting said. “So if somebody wants to shoot a fire hydrant and have it shoot out water, previously someone would have to script that. With the Blueprint system anyone can add a quick interaction for that. It makes it really easy to make these rich detailed worlds that have a lot of nuance to them...instead of a setpiece that’s completely non-interactable. I think that’s really the next jump. When you start making open worlds, the burden of being immersed and grounded in that world requires you have a lot of interactivity and personality to the world in addition to it looking nice.”

We may well end up seeing more games platform-locked because of exclusivity deals than because of the difficulty of multiplatform development. But it’s easy to forget with all the excitement around VR that a true consumer release is still a few months away, and that’s when development is at its messiest.

“Hopefully the utopian future of VR development is you hit the button and you’ve built VR support for tracked controllers and head tracking, and it works on a new headset that’s come out in the time you’ve launched a game, and it just works,” said Alex Schwartz. “It would be really cool if that future got there. But by definition we’re working on such early stuff that it’s hacky, early stuff.

“Plus we can’t complain. If we went to someone who built games 20 years ago and told them about our problems, they’d be like ‘Shut up. I had to write in Assembly!’”

Wes has been covering games and hardware for more than 10 years, first at tech sites like The Wirecutter and Tested before joining the PC Gamer team in 2014. Wes plays a little bit of everything, but he'll always jump at the chance to cover emulation and Japanese games.

When he's not obsessively optimizing and re-optimizing a tangle of conveyor belts in Satisfactory (it's really becoming a problem), he's probably playing a 20-year-old Final Fantasy or some opaque ASCII roguelike. With a focus on writing and editing features, he seeks out personal stories and in-depth histories from the corners of PC gaming and its niche communities. 50% pizza by volume (deep dish, to be specific).