Why you're right to hate motion blur in games (but devs aren't wrong to include it)

Motion blur might be the most hated post-processing effect in PC games. Here's why it keeps showing up.

Flipping off the motion blur switch is an automatic first-launch ritual for me and, I assume, a lot of other PC gamers. It's probably the most hated post-processing effect in videogames. So why do so many games include it in the first place, and why is it almost always enabled by default?

Good luck getting a game developer to tell you! One studio I asked declined to comment and the others didn't respond. Maybe they just don't think weighing in on the merits of individual post-processing effects is a valuable use of their time, or maybe they're in the pocket of Big Blur. I wasn't getting anywhere, so I started doing my own research. Here's what I learned:

- There's a valid reason for all the motion blur effects in games

- There's also a valid reason to hate motion blur with a passion

- We'll probably see less of it as frame and refresh rates increase

What motion blur is

Motion blur occurs naturally in photography and film, because cameras don't capture scenes instantaneously. Film is exposed for a period of time—say, 1/48th of a second—and if an object moves across the frame during the exposure, it will appear to blur in its direction of travel. If the camera itself moves, the whole scene will blur.

That's not great if you're trying to show high-speed action clearly, but at standard frame rates, video "needs some degree of blur to look natural," according to a nice summary from Vegas editing suite maker Magix Software.

That's especially true for 24 fps films. A baseball flying through a shot might hypothetically only show up in a handful of frames, and we wouldn't want each of those frames to contain a crisp, perfectly round white ball in a new location—it would strobe unnaturally across the screen. Instead, we see a blurry oval streak across the screen, which represents all the information recorded by the film for the duration of its exposure.
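To put rough numbers on that baseball, here's a minimal Python sketch. The 24 fps rate and 1/48th-second exposure come from the film example above; the 1920-pixel frame width, the quarter-second crossing time, and the `blur_streak` helper are assumptions made up purely for illustration.

```python
def blur_streak(frame_width_px, crossing_time_s, fps, exposure_s):
    """Estimate how a constant-speed object shows up on film.

    Returns (frames_on_screen, step_px, streak_px):
      frames_on_screen: how many frames catch the object at all
      step_px: how far it travels between one frame and the next
      streak_px: how far it travels while a single frame is exposed
    """
    speed_px_per_s = frame_width_px / crossing_time_s
    frames_on_screen = crossing_time_s * fps
    step_px = speed_px_per_s / fps
    streak_px = speed_px_per_s * exposure_s
    return frames_on_screen, step_px, streak_px


# Hypothetical shot: a ball crosses a 1920-pixel-wide frame in 0.25 seconds.
frames, step, streak = blur_streak(1920, 0.25, fps=24, exposure_s=1 / 48)
print(f"frames on screen: {frames:.0f}")
print(f"jump between frames: {step:.0f} px")
print(f"blur streak per frame: {streak:.0f} px")
```

Under those assumptions, the ball shows up in about six frames, jumps roughly 320 pixels from one frame to the next, and smears across about 160 pixels during each exposure, so the streak fills around half of every gap. Shrink the exposure toward zero and you're left with six crisp balls strobing across the screen.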

A scene from 1933's King Kong, embedded above, helps demonstrate. Computer graphics researcher Dr Andrew Glassner pointed out in a 1999 article that Kong's movement looks jerky and odd because, unlike everything else in the movie, he was animated with a series of still photos which contain no motion blur. Today, stop-motion animators add a motion blur effect when they want natural-looking movement.

Why games add motion blur

Techniques like multisampling and temporal anti-aliasing can defeat visual anomalies and help create natural-looking movement in games, but they don't always cut it, especially at lower framerates. With my sympathies to Redfall console players: 30 fps looks like ass. Rotating the camera rapidly turns the world into a King Kong-style slideshow.

Enter motion blur, the cure that's worse than the disease. Developers have insisted it's useful for a long time: In an article from the 2007 book GPU Gems 3, Gilberto Rosado of Rainbow Studios (makers of the MX vs ATV series) wrote that motion blur post-processing effects can create a greater sense of speed and "realism" and help "smooth out a game's appearance, especially for games that render at 30 frames per second or less."

A 2013 study by MIT and Disney researchers also said that motion blur effects are useful "for reducing artifacts" and "achieving a realistic look" in games.

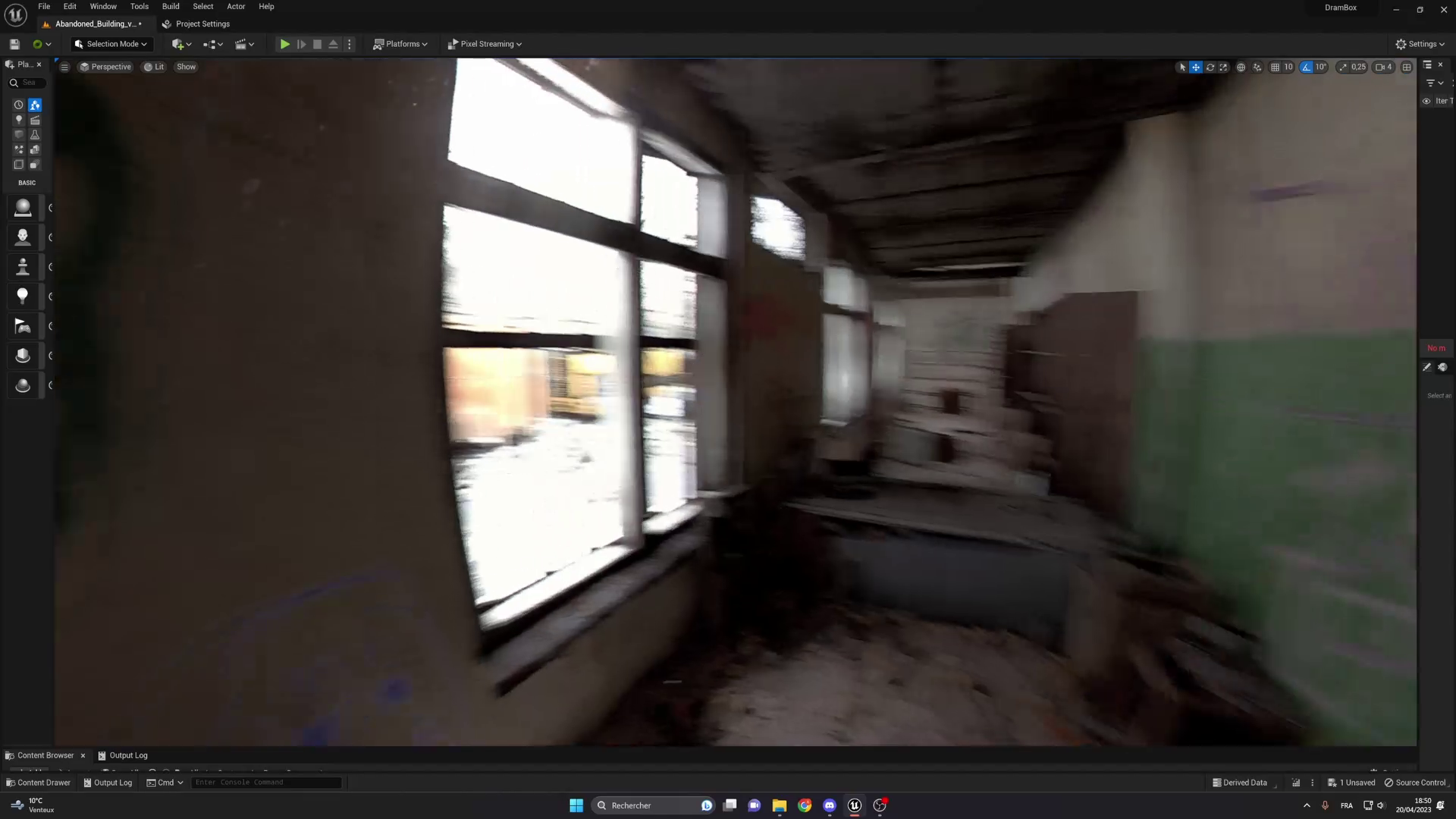

VIDEO: Half-Life 2 with motion blur on and off.

After a little testing in Half-Life 2, I was surprised to find that I basically agree that motion blur can "smooth out" low framerates in an attractive way. Even with Half-Life 2 at 60 fps, flicking the camera back and forth looks pretty bad. With motion blur on, however, it appears smoother in the sense that it looks more like what I'd expect to see if an actual 60 fps camera were being rotated—a smear, rather than jittery leaps between frames.

But I'd still never play Half-Life 2 with motion blur on.

Why gamers hate motion blur

Amateur game dev Adam Sanders, whose studio Red Slate Games is working on an unannounced project, offered me a simple explanation for motion blur's low status among gamers. (Finally, someone willing to talk!)

In movies, motion blur can be used to express speed and isolate the subject of a scene by blurring its surroundings—roughly simulating an eye's focus—but in videogames, where players typically control the camera themselves, Sanders thinks that motion blur tends to do the opposite. Instead of making the subject clearer, it's likely to "obfuscate information players are actively trying to perceive."

"Fundamentally, it comes down to the differing needs of the media," says Sanders. "Films need to implement carefully crafted tricks to draw the audience's eyes and perception to specific parts of the frame. Games allow players to frame the scene themselves, and post-processing effects like motion blur interfere with that."

This is mainly a problem when motion blur is triggered by a player's own camera movements, as in the Half-Life 2 example above. It does make camera rotation appear smoother, but the trade-off is that the very enemies I'm trying to center are consumed by a torrent of blur. In games with egregiously strong motion blur, the screen can turn to mud whenever you try to orient yourself, which naturally makes it kinda hard to orient yourself.

Sanders points out that players don't tend to complain about localized motion blur—the streak of a sword swipe in an attack animation, for instance. "I think this shows that the problem with motion blur in games is that it's being used wrong when applied as a shader to the whole screen," he says.

Also, it ruins all the cool screenshots you try to take. So, yeah, motion blur is bad.

Should you ever turn on motion blur?

If PC gamers had to swear some kind of oath for admittance to the hobby, a promise to hate on motion blur would appear early on. But I get why it pops up so much: If you're trying to convince an audience that your game has nice graphics at 30 fps, you blur up the jittery bits. And to be honest, as much as I dislike very strong motion blur, I don't always notice it when it's subtle (at least until I look at my screenshots folder and discover that they all suck).

I also don't mind that things go all streaky when I hit the nitro in racing games. As long as I can see the track and other cars clearly, I can live with speed lines. Although, that MIT and Disney study I mentioned earlier concluded that motion blur did not "significantly enhance the player experience" in the racing game they showed subjects, so if you're ever in an argument about motion blur for some reason, now you can drop some legit academic research about how people don't care for it.

Individual tastes might also vary on whether intentionally emulating the look of video is a valid use of motion blur. Since the "real" things we see on screens are shot with cameras (news footage, for example), mimicking camera effects can create the impression that we're looking at reality. We've recently seen an example of how effective that can be in Unrecord, an eerily real-looking Unreal Engine 5 shooter that simulates the perspective of a bodycam. Motion blur plays a role in the illusion along with other video effects, such as lens distortion and bloom.

I think motion blur can sometimes contribute to an overall effect that trumps clarity. Where natural-looking movement is the goal, though, higher and higher frame and refresh rates are making motion blur effects obsolete. Spinning around in Half-Life 2 is kind of ugly at 60 fps, but a lot less ugly at 144 fps on my 144 Hz display, and it'd be even less ugly at 200 Hz. The more frames I'm actually shown—the higher the temporal resolution—the less information needs to be generalized through blurring.
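Some back-of-the-envelope arithmetic shows why. This is a small Python sketch; the flick speed of 720 degrees per second (a 180-degree turn in a quarter of a second) and the `degrees_per_frame` helper are assumptions for illustration, not measurements from Half-Life 2.

```python
def degrees_per_frame(turn_speed_deg_per_s, fps):
    """How far the camera rotates between two consecutive frames."""
    return turn_speed_deg_per_s / fps


# Hypothetical flick: a 180-degree turn in 0.25 seconds.
FLICK_SPEED = 180 / 0.25  # 720 degrees per second

for fps in (30, 60, 144, 200):
    jump = degrees_per_frame(FLICK_SPEED, fps)
    print(f"{fps:>3} fps: view jumps {jump:.1f} degrees per frame")
```

With that made-up flick, the view lurches 24 degrees between frames at 30 fps and 12 degrees at 60 fps, but only 5 degrees at 144 fps and 3.6 degrees at 200 fps. The smaller the jump, the less of a gap there is for blur to paper over.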

Ignore anyone who tells you that you "can't perceive" more than 60 Hz. We talked to experts about that, and it's nonsense: one psychologist suggested that 200 Hz could be a good target if we want to experience video motion close to the way we experience real-life motion. Even a decade ago, researchers were pointing out that 100 Hz video looks significantly different, and arguably better, than 60 Hz video, in part because the reduction of motion blur improves the illusion.

Until 30 and 60 fps cease to be acceptable standards in videogames, we'll have to keep popping into the graphics settings to flip that motion blur switch. The good news is that although it's almost always on by default for some reason, it's also one of those post-processing effects that can almost always be switched off—maybe Big Blur hasn't gotten to everyone just yet.

Tyler grew up in Silicon Valley during the '80s and '90s, playing games like Zork and Arkanoid on early PCs. He was later captivated by Myst, SimCity, Civilization, Command & Conquer, all the shooters they call "boomer shooters" now, and PS1 classic Bushido Blade (that's right: he had Bleem!). Tyler joined PC Gamer in 2011, and today he's focused on the site's news coverage. His hobbies include amateur boxing and adding to his 1,200-plus hours in Rocket League.