Here's why Dishonored 2 is running so poorly

A detailed look at the initial performance and an analysis of bottlenecks.

Prior to its launch, Dishonored 2 was hotly anticipated, which makes the serious performance issues at launch somewhat surprising. I realize we're right in the middle of the holiday shopping spree, but putting out a game that runs like crap, even on relatively powerful hardware, is never a good idea. AMD graphics cards in particular are having a tough go of things in the game, but there are many facets of what's going on.

Given Bethesda is already talking about a patch next week to help with framerates, digging too deeply here may be somewhat late, but I'll revisit the topic once we've seen what the patch can do, and I'll update this article accordingly. For now, let me cover some of the basics of what's going on and what you can do about it. This is an expansion to our previous article on performance and system requirements.

All of the testing that follows uses a sequence set around the docks in the first major city, Karnaca. It's where the game opens up, and performance suddenly takes a dive.

First, there are six presets for graphics quality: very low, low, medium, high, very high, and ultra. The above gallery shows the six options (with Nvidia HBAO+ enabled on the 'ultra' setting). The biggest change you'll see is the presence or lack of reflection on the water at ultra compared to very high, and then going from high to medium there's a clear change that reduces the overall brightness/fog as well as some of the finer shadows. Antialiasing is also off at very low, and set to FXAA at low, whereas TXAA is used at all other settings, and TXAA produces a clearly better result.

Many of the individual settings match these six levels of granularity, so you'd probably expect there to be quite a few ways to improve performance if your system is struggling. Turns out, with the current version of the game at least, that's absolutely not the case. Yes, dropping from ultra to very low will help, but not as much as you might think. Let me call out two examples.

Using a GTX 1070, at ultra quality 1440p with Nvidia HBAO+, performance in this particularly complex scene is 47 fps. Now, take this same GTX 1070 and drop the quality from maximum to minimum, using the very low preset and turning off HBAO+ (which in this game is an Nvidia-only option). Performance improves to 69 fps, which is less than a 50 percent increase. Using AMD's top card, the R9 Fury X, the situation is similar. At 1080p ultra in the same scene, performance is 43 fps. Drop to very low and framerates improve to 71 fps. That's a bigger improvement than on the GTX 1070, but it's still only a 65 percent increase.
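For anyone who wants to check the math on those two examples, here's a minimal sketch of the percent-increase calculation. The fps figures are the ones quoted above; the function name is just an illustrative choice.

```python
def percent_increase(before_fps: float, after_fps: float) -> float:
    """Return the relative framerate gain as a percentage."""
    return (after_fps - before_fps) / before_fps * 100.0

# Figures quoted above: ultra -> very low preset on each card.
gtx_1070_gain = percent_increase(47, 69)  # tested at 1440p
fury_x_gain = percent_increase(43, 71)    # tested at 1080p

print(f"GTX 1070:  {gtx_1070_gain:.1f}% faster")
print(f"R9 Fury X: {fury_x_gain:.1f}% faster")
```

Both results land where the text says: a bit under 50 percent for the GTX 1070 and roughly 65 percent for the Fury X.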

This is actually somewhat of a common issue for id Tech games, and the Void engine used for Dishonored 2 is based on id Tech 5 as far as I can tell (and not the newer id Tech 6 that's used in Doom). The engine has some good image quality, but scaling by dropping to lower settings is pretty poor. Other games (GTA5 for example) can triple or even quadruple their framerates by going from 'ultra' to 'low' quality—but the change in image quality for such games also tends to be more noticeable.

There's another common complaint with id Tech games, and that's support—or more specifically, the lack thereof—for multiple GPUs. There are some hacks that you can use to try and get SLI running, but at present the game won't even start without taking such measures. CrossFire meanwhile didn't prevent the game from running, but the second GPU wasn't utilized at all. What seems weird about multi-GPU hacks is that, if they actually work properly, why wouldn't Nvidia incorporate them into their drivers? My guess is that they don't actually work properly, so they're left for enterprising (daring) gamers to use at their own risk.

The astute among you will notice that the 1070 at 1440p is basically equal to the Fury X at 1080p. Yes, it's that bad for AMD right now. The fact that Nvidia's HBAO+ is hardware exclusive is also a bit odd. This was common several years ago, but newer engines usually make it available on AMD hardware. Maybe Arkane is using an older GameWorks library, or maybe they disabled it just so AMD users wouldn't have one more item that would tank performance even more. Maybe the patch will address this as well.

Regardless, these are a couple of high-end GPUs, struggling to run the game at acceptable framerates. There are many areas of the game (even looking in a different direction in the same scene) where performance is substantially better. In fact, variability in framerate depending on where you are and which direction you're facing is more pronounced in Dishonored 2 than any other game that immediately comes to mind—the difference between a low-fps outdoor region and a high-fps region can be way more than double. Indoor areas are a lot better as well, but seeing such variability in outdoor scenes (in the same area of the game) is unusual.

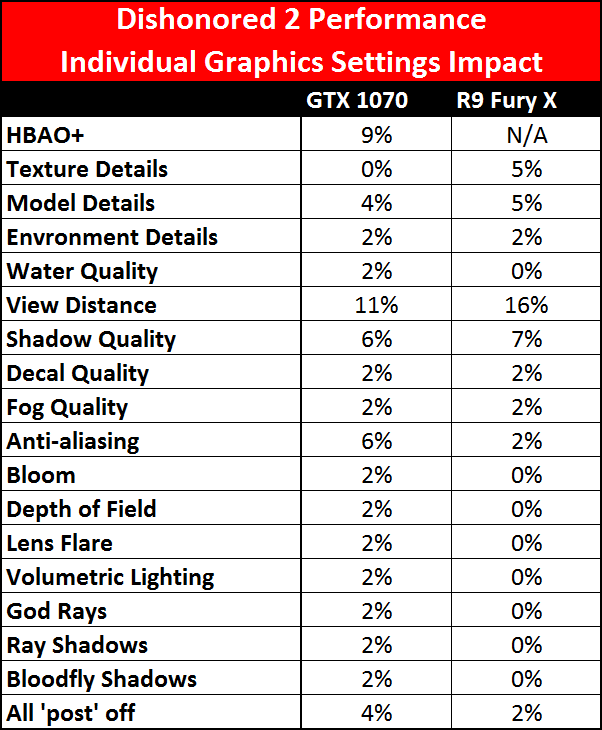

Let me run through some of the other settings quickly to show how much—or little—they impact performance. I started at the ultra preset and then dropped each setting to minimum to check the impact on framerates. I've reported the increase in framerate for each setting in the following table, but keep in mind that not all of the improvements are cumulative, and I didn't perform extensive benchmarking at each setting (due to time constraints, not to mention the impending patch).

The biggest factor in performance among the settings is view distance, which improves framerates by 10-15 percent on most graphics cards when going from max to min, but you get more pop-in on lower settings. For Nvidia cards, turning off HBAO+ is another big item, netting a nearly 10 percent increase. Shadow quality is third, giving 6-7 percent, and then model details, which gives 4-5 percent. Antialiasing had less of an impact on AMD's GPU compared to Nvidia's, but that's also because the Fury X has a ton of memory bandwidth and was running a lower resolution. Everything else has a negligible hit on performance, and combining all the lower settings that can be either on or off (from bloom downward in the above table), turning all of them off only added 1-2 fps on our two test GPUs.
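To get a feel for what those individual gains could add up to, here's a rough sketch that compounds them. It assumes each setting's gain is independent and multiplies with the others, which is the best case; as noted, the real improvements are not all cumulative, so treat the result as a ballpark ceiling for these four settings and not a measurement. The ranges are the ones quoted above, with "nearly 10 percent" for HBAO+ approximated as exactly 10.

```python
from math import prod

# Per-setting fps gains (percent) quoted above, as (low, high) ranges.
# Assumption: "nearly 10 percent" for HBAO+ is treated as exactly 10.
gains = {
    "view distance": (10, 15),
    "HBAO+ off (Nvidia only)": (10, 10),
    "shadow quality": (6, 7),
    "model details": (4, 5),
}

def compounded_gain(percent_gains):
    """Combine percentage gains multiplicatively (best-case assumption)."""
    return (prod(1 + g / 100 for g in percent_gains) - 1) * 100

low_estimate = compounded_gain(lo for lo, _ in gains.values())
high_estimate = compounded_gain(hi for _, hi in gains.values())

print(f"Best-case combined gain: {low_estimate:.0f}-{high_estimate:.0f} percent")
```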

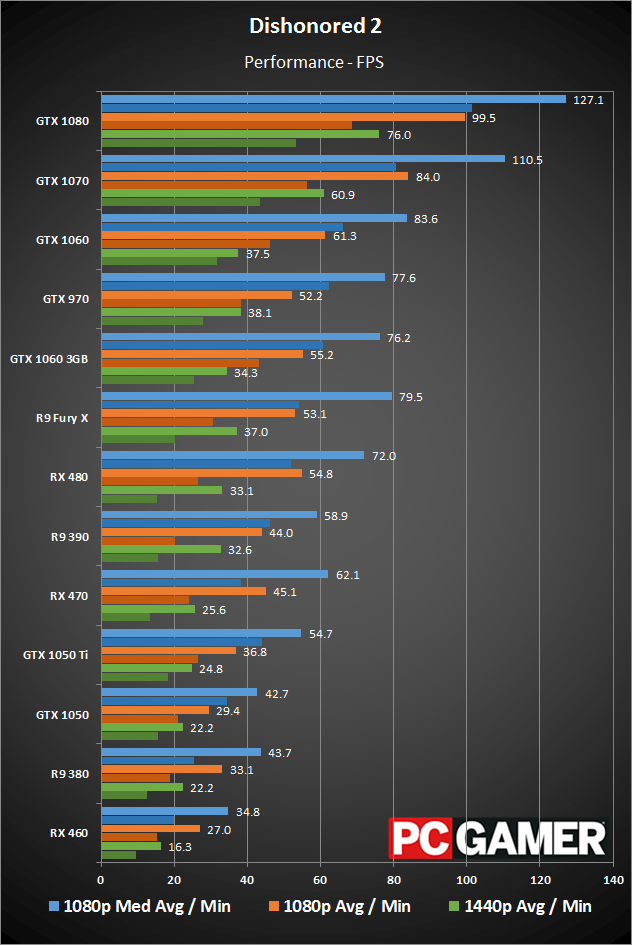

It's easy to throw out the claim that the game engine is poorly optimized, but what can be done to improve the situation (outside of a patch by the developer and driver updates), and why does the game run so poorly? Improving the situation requires modding by the community or, more likely, the incoming patch, but I can at least analyze the various impacts on performance and offer some suggestions as to what's happening. Let me start with a performance chart of the GPUs I tested, at 1080p medium, 1080p ultra, and 1440p ultra using an overclocked i7-6800K at 4.2GHz.

The first thing that you'll note is that Nvidia is just killing AMD on performance in the initial release of Dishonored 2. The GTX 1060 3GB is a pretty close match for the Fury X, taking the overall lead in my chart thanks to more consistent framerates. Clearly, VRAM isn't the only factor either, since the 3GB card has less memory than the 4GB Fury X and 8GB RX 480/R9 390. The GTX 970 also ranks rather high, all things considered. AMD is in dire need of some help, with average minimum fps (the average of the bottom three percent of frames) sitting at 30 fps or lower on even the fastest cards when running 1080p ultra. Whether help comes in the form of an improved driver or actual tweaks to the game doesn't really matter to me.
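Since "average minimum fps" isn't a standard metric, here's a minimal sketch of one way to compute it from a log of per-frame render times. The article only defines it as the average of the bottom three percent of frames; averaging the frame times of the slowest three percent and converting that to fps is an assumption on my part here, as are the function names and the made-up sample data.

```python
def average_fps(frame_times_ms):
    """Overall average fps: frames rendered divided by total time."""
    return 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

def average_minimum_fps(frame_times_ms, percent=3):
    """Average fps over the slowest `percent` of frames.

    Assumption: the slowest frames are averaged by frame time and
    then converted to fps; other tools may average differently.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    slowest_first = sorted(frame_times_ms, reverse=True)
    # Integer math avoids float rounding; always keep at least one frame.
    count = max(1, len(slowest_first) * percent // 100)
    worst = slowest_first[:count]
    return 1000.0 * len(worst) / sum(worst)

# Hypothetical log: 97 smooth frames plus three stutters (not real data).
sample = [16.0] * 97 + [40.0, 50.0, 50.0]
print(f"average fps:         {average_fps(sample):.1f}")
print(f"average minimum fps: {average_minimum_fps(sample):.1f}")
```

The point of the metric is visible even in the toy data: a handful of long frames barely moves the overall average but drags the minimum figure way down, which is what stuttering looks like in the numbers.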

But there's more going on than just AMD vs. Nvidia. VRAM is a factor that can sometimes play a significant role. Look at the two 1060 cards for example, where the 6GB card is normally around 10 percent faster (thanks to having more CUDA cores), but at 1440p despite relatively consistent average framerates, the 3GB card takes a significant hit in minimum fps. Even more telling perhaps is the RX 480 8GB compared to the RX 470 4GB, where average fps favors the 480 by 16 percent at 1080p medium, 22 percent at 1080p ultra, and 29 percent at 1440p ultra. Average minimum fps is still close, but AMD cards are clearly stuttering and encountering some problem.

Finally, I think one of the biggest factors with poor performance right now is somehow related to geometry throughput. AMD added a feature called primitive discard acceleration to the Polaris architecture, and this clearly helps. It discards extra geometry that won't end up in the final output, meaning if a triangle is blocked from view by another triangle, don't draw it. In TessMark, AMD said primitive discard acceleration can improve tessellation performance by 200-350 percent.

This is one area where AMD's Polaris substantially improves on the previous AMD architectures, and the result is that the RX 480 beats the R9 390 handily, and very nearly matches the R9 Fury X. Something similar happens in The Division and Rise of the Tomb Raider, two of a handful of games where the RX 480 generally beats the R9 390. Both are known for having significant amounts of tessellation and geometry, and not coincidentally, both also use some of Nvidia's GameWorks libraries.
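The general idea of throwing away geometry that can't contribute to the final image can be illustrated in a few lines of software. To be clear, this is a simplified sketch and not AMD's hardware logic: it assumes 2D screen-space triangles with counter-clockwise front faces, and it only handles the simplest cases (zero-area and back-facing triangles). It doesn't model occlusion by other triangles at all, and the names are purely illustrative.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Triangle = Tuple[Point, Point, Point]

def signed_area(tri: Triangle) -> float:
    """Signed screen-space area: positive when vertices are counter-clockwise."""
    (ax, ay), (bx, by), (cx, cy) = tri
    return 0.5 * ((bx - ax) * (cy - ay) - (cx - ax) * (by - ay))

def discard_invisible(triangles: List[Triangle]) -> List[Triangle]:
    """Keep only triangles that could produce pixels.

    Zero area means the triangle is degenerate; negative area means it
    faces away from the viewer (given the winding assumption above).
    """
    return [tri for tri in triangles if signed_area(tri) > 0.0]

triangles = [
    ((0.0, 0.0), (4.0, 0.0), (0.0, 4.0)),  # front-facing, kept
    ((0.0, 0.0), (0.0, 4.0), (4.0, 0.0)),  # back-facing, discarded
    ((0.0, 0.0), (1.0, 1.0), (2.0, 2.0)),  # degenerate (collinear), discarded
]
visible = discard_invisible(triangles)
print(f"{len(visible)} of {len(triangles)} triangles survive")
```

Every triangle dropped this early is work the rest of the pipeline never has to do, which is why heavy tessellation (lots of tiny or degenerate triangles) is where this kind of filtering pays off most.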

One final item to discuss is the impact of the CPU on performance. It ends up being a relatively small concern, at least for most users. I kept the CPU clocks the same this time, and tested the i7-6800K at 4.2GHz with 6 cores plus Hyper-Threading, 4 cores without HTT, and 2 cores with HTT. I'm basically looking at a 6-core enthusiast part compared to a typical Core i5 K-series and a hypothetical Core i3 that doesn't actually exist. L3 cache is the same, but what I found is that the 'Core i5' part was within 1 fps of the full Core i7 at all settings I checked.

The 'Core i3' is a bit of a different story, with performance that trails the Core i7 by 10 percent at 1080p ultra/very high/high, and the gap widens to 15-20 percent at the medium/low/very low presets. Unlike the Core i7 and Core i5 parts, the Core i3 is also prone to seemingly random swings in performance. Another sequence of testing at one point had the simulated Core i3 part running 20-30 percent slower than the Core i7, but after a few minutes that 'corrected' itself. My guess is the Core i3 may be slower at loading all of the assets and this can present some issues. Also, a real Core i3 would drop the clock speed by 10-20 percent, which would make the gap bigger.

This is obviously not one of the better game launches in recent history, matching up quite well with Mafia 3 in many ways. It's also worth noting that the default mouse settings (to me at least) are horrible—I had to increase the sensitivity to 50 and turn off mouse smoothing before it felt mostly right. Prior to that, I would actually have to lift my mouse off its pad (sometimes multiple times) while turning around or navigating the city. Nvidia cards also seem to have an issue where the V-sync setting in the game doesn't work right—at least in my experience, I had to force V-sync off in the Nvidia Control Panel for it to work.

It's sad to see a game with so much anticipation stumble so badly out of the gate. From what I've played of Dishonored 2, it's a very cool game and should be a lot of fun. Running it on a high-end 10-series Nvidia card, you'll be fine and likely not think too much about performance (forget about 4K), but until the situation improves, AMD graphics card users will want to wait and see.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.