Why crypto mining wasn't the only culprit for wild GPU prices

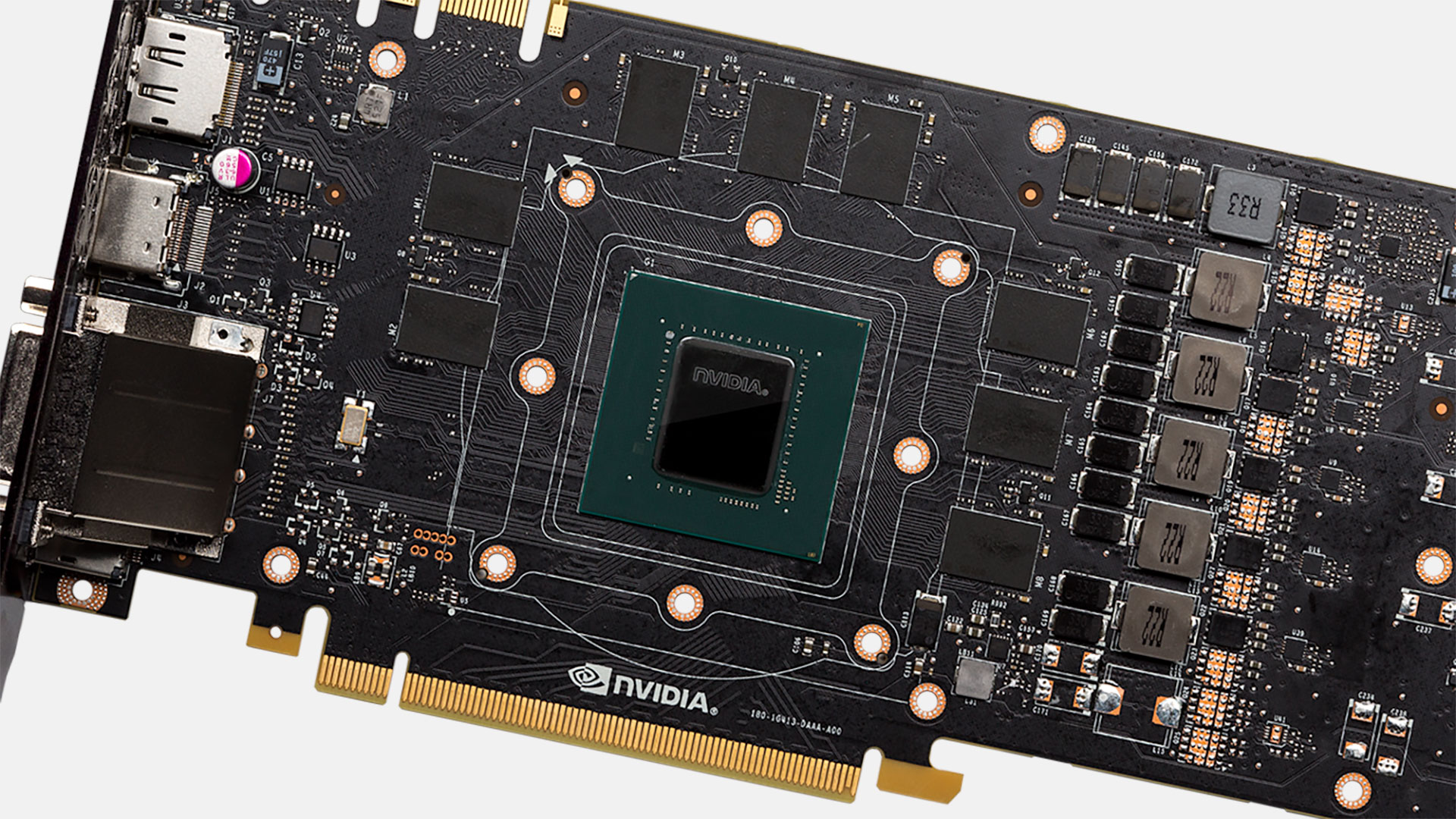

Looking back at the great graphics card shortage.

Graphics card prices have been falling back to 'normal' over the past month, and the end of the GPU shortage is finally in sight. But why did we have a shortage in the first place, and are we really out of the woods? And what's going to happen with the next generation of graphics cards, due in the August/September time frame? I've spoken with several industry insiders to get a better idea of what happened, and what we can expect to see in the remainder of 2018 and perhaps beyond.

The easy scapegoat for the shortages is cryptocurrency miners, and we've been as guilty as any site in pointing the finger of scorn that way. It's true that miners didn't help the situation, but are they the sole cause of the problem? No, of course not.

Another equally easy target is the GPU manufacturers, Nvidia and AMD. "If they would only produce more chips, we wouldn't have to fight for our right to play games!" So goes the refrain, but from what I've gathered it's not really fair to place the blame solely on them either. Sure, AMD and Nvidia could have ordered more wafers (months before the shortages hit, with their perfect ability to predict the future), but that wouldn't have fully satisfied demand or kept prices at or below MSRP.

Similarly, we could try to lay the blame at the feet of the AIB (add-in board) partners: Asus, EVGA, Gigabyte, MSI, etc. Prices did increase at their stores, but all cards were still sold out. AIB manufacturers are another cog in the wheel, but not the only factor. What about retail outlets? If you look back at the online market in January/February, most of the cards were being sold through third parties. Some of these resellers were simply buying as many GPUs as they could, and then marking them up and trying to turn a quick profit. And then someone would buy those cards at inflated prices, and bump the cost even higher!

One of the major culprits for all of this is actually something else, however: the DRAM manufacturers. But again, it's not fair to lay the blame completely at their feet. In 2015 and 2016, DRAM prices were at all-time lows, and investing in additional foundries to make even more DRAM for an already saturated market was obviously not a great-sounding idea. Samsung, for example, only finished half of one of its new DRAM foundries, though it's now working to complete the installation. That takes a lot of time. You don't just flip a switch to turn on a multi-billion-dollar facility, so this is a future goal.

The important takeaway is that DRAM prices were plummeting. Meanwhile, demand for NAND was increasing. NAND isn't DRAM, but it's often manufactured at the same place. Switching back and forth takes time and can cost a lot of money, meaning plans are put in place months or even years in advance. And those plans were to make more NAND and less DRAM (and to transition to 3D NAND, but that's a different topic). All the pieces were in place for DRAM supply to drop in late 2016 and into 2017, and then things went south.

Smartphones started using more DRAM (and NAND), and the upgrade cycle for smartphones is shorter. AMD launched Ryzen and the CPU battles of 2017 spurred more PC upgrades than in recent years. Cars have become an increasingly big market for DRAM ICs as well—most modern cars probably have 4GB to 8GB of DRAM scattered around their circuit boards, and models with advanced features like lane assist and self-driving technology can more than quadruple the amount of DRAM used! There has also been a massive surge in supercomputing investments, which require a lot of DRAM (for the system as well as add-in boards), and on the other side of the equation, millions of tiny IoT devices are being manufactured, each with a modest chunk of DRAM.

It all adds up, and our beloved graphics cards need lots of DRAM as well. With more demand than supply, prices had to go up. Even if nothing else had happened, graphics card prices would have increased in late 2017 and early 2018, but toss in the surge in demand from cryptocurrency miners and you have the makings of a perfect storm.

You don't have to look far to see how DRAM prices have skyrocketed over the past 18-24 months. I remember buying 16GB kits of DDR4-2400 and DDR4-2666 memory for as little as $50-$60 in mid-2016. Those same kits today sell for $170 or more! DDR4 might not be the same as GDDR5, GDDR5X, or HBM2, but it comes from the same facilities and must fight for time on the production line.

What does that do for graphics cards? The contract price for GDDR5 was around $40-$50 for 8GB when Nvidia's GeForce GTX 1080 and 1070 launched, alongside AMD's Radeon RX 480/470. Today, contract prices for the same 8GB of GDDR5 are apparently closer to $100 (give or take, depending on volume). Spot prices have gone from around $60 to $120 or more. And every level of the supply chain wants a piece of the action, so if the base cost of a graphics card increases by $50, that usually adds closer to $100 to the retail price.
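To make that last point concrete, here is a rough Python sketch of how a $50 increase in component cost can grow to roughly $100 at retail once each stage of the supply chain applies its own margin. The three stages and their percentages are purely hypothetical, picked only so the result lands near the 2x figure quoted above. They are not numbers from any manufacturer, distributor, or retailer.

```python
def retail_increase(base_cost_increase, stage_markups):
    """Compound a component cost increase through successive supply-chain stages.

    base_cost_increase: extra cost in dollars at the bottom of the chain.
    stage_markups: fractional margin applied by each stage, in order.
    """
    increase = base_cost_increase
    for markup in stage_markups:
        increase *= 1 + markup
    return increase


# Hypothetical margins, for illustration only:
# board partner 25%, distributor 25%, retailer 28%.
ASSUMED_STAGE_MARKUPS = [0.25, 0.25, 0.28]

extra_memory_cost = 50  # dollars, the base cost increase from the text
extra_retail_price = retail_increase(extra_memory_cost, ASSUMED_STAGE_MARKUPS)

print(f"Extra cost at retail: ${extra_retail_price:.2f}")
print(f"Effective multiplier: {extra_retail_price / extra_memory_cost:.2f}x")
```

The exact split between stages doesn't matter much. Any combination of margins that compounds to about 2x gives the same outcome, which is why a modest rise in memory cost shows up as a much larger jump on the price tag.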

If GDDR5 is in a tough bind, it's even worse for HBM2. I've heard suggestions that 8GB of HBM2 can go for $175 (give or take), and it's already more costly to use due to the need for a silicon interposer. Based on this information, I think it's a safe bet that Vega 56 and Vega 64 aren't ever going back to the initial targets of $399 and $499 for MSRP. That's not good, because while the cards can compete with the GTX 1070/1070 Ti/1080 in the performance realm, they can't do it while costing 25 to 50 percent more.

Nvidia and AMD haven't officially (meaning, to the public) raised prices on their pre-built graphics cards, but with Founders Edition models mostly back in stock (outside of the 1080 Ti), it's worth noting that the FE cards typically cost $50 more than the baseline MSRP. And Founders Edition models help to eliminate at least one layer in the supply chain. Unofficially, it sounds as though contract prices for the graphics card manufacturers have gone up—in part to account for the higher cost of DRAM, in part due to other factors. Even now, 'budget' GPUs that were originally targeting the $110-$140 market are selling at $150-$200.

Looking forward, the DRAM manufacturers (Samsung, SK Hynix, Micron, etc.) are increasing their production of DRAM, and building new facilities as well. Why wouldn't they, considering prices are double or more what they used to be? But even with the increased production it could be some time before DRAM prices get anywhere near 2016 levels. Whether or not there was price fixing and collusion among the DRAM companies is another question, but don't expect the outcome of those investigations to lower prices any time soon. (At least SSD prices have started to drop, thanks to the investments in additional NAND capacity.)

What does all of this mean for new graphics cards in 2018? AMD is basically in radio silence, and other than a 7nm shrink of Vega destined for machine learning applications (where it can sell at prices that make using 8GB of HBM2 a non-factor), I don't expect to see any major graphics card launches from Team Red this year.

Nvidia is a different story, with the widely rumored GTX 2080/2070 or GTX 1180/1170 slated for launch in August or September. (Word is that Nvidia is circulating conflicting materials regarding the naming, so no one really knows for sure what the final name will be.) And whether it's called the Turing or Ampere architecture (or even Volta), it looks like the new GPUs will be similar to the Volta GV100, minus the Tensor Cores and FP64 support. I've seen speculation that pricing will be higher than current models, so $499 for the 2070 and $699 for the 2080, but what I've heard from industry insiders is that we will likely see the 2080 priced in the $799-$999 range instead, with the 2070 going for $499-$599. Whether the new GPUs will be fast enough to warrant such a price is anyone's guess (probably not, at least not initially).

The reason for the price hike relative to the 10-series parts is the same, though: higher DRAM costs, combined with increased demand from multiple sectors (gaming, automotive, AI, and crypto). Nvidia needs to make money on the parts, and if manufacturing and parts cost $50-$100 more than the previous generation, expect the price to be $100-$200 higher. In the meantime, if you have $16 billion available, investing in a new DRAM foundry sounds like a great plan.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.