Whether or not Nvidia really has a chiplet-based RTX 5090 'under investigation', its GPU future has to be multi-chip

Rumours suggest Nvidia hasn't yet approved a GB101 chiplet version, and that the bulk of its next-gen gaming cards will still be monolithic GPUs.

A rumour is doing the rounds that the green team is testing a chiplet version of its top GeForce graphics card for the upcoming Nvidia Blackwell GPU generation. It comes hot on the heels of another rumour stating explicitly that the Blackwell architecture will also be used for data center silicon, and that this successor to Hopper will definitely be a multi-chip module (MCM) GPU.

We're at least a year out from the release of the Nvidia RTX 5090, but that's not going to stop the rumour mill going into overdrive every time there's the faintest whiff of next-gen silicon in the air. That means we have to take every rumour with the requisite pinch of salt, given that at this point it's largely guesswork, conjecture, and hearsay.

The idea of Nvidia finally switching to a chiplet design for its server-level GPUs isn't surprising, and indeed sounds like a smart plan. The compute workloads typical of data centers are often fairly straightforward to split across multiple graphics cards, so running them across multiple compute chiplets within a single GPU package makes sense.

There are also some guessed-at RTX 5090 specs floating about, but they're so thin as to be almost transparent. The really intriguing question is whether Nvidia can make GPU chiplets work in the gaming space. AMD has already nominally made the move to chiplets for its own high-end graphics cards, but the Navi 31 and Navi 32 chips only use a single graphics compute die (GCD) within the package, so games effectively still only have to deal with one GPU.

Reports suggested this would change with the subsequent RDNA 4 chips, though other rumours claim AMD is going to abandon chiplets, and even the high-end market, with its next-gen GPUs.

There's no hint in the tweet from the XpeaGPU account (itself a response to Kopite7Kimi's tweets about Blackwell) that the GB101 chiplet version it references contains multiple compute dies, just that Nvidia has such a GPU "under investigation." The fact that it hasn't been approved yet suggests the design has still to prove itself.

That might be because Nvidia is trying something ambitious, like multiple compute dies for game rendering, or simply because it's still testing. While the tweet doesn't explicitly say it's referring to a GeForce card, such as a potential RTX 5090, the reference to Ada Next suggests the chiplet version would be for a successor to the current gaming GPU architecture.

The Ada Lovelace architecture has also been used in professional cards, which are more focused on compute than rendering, so a pro chiplet card can't be ruled out. Nor can a resurrected Titan Blackwell card, y'know, just for the people with more money than sense. They won't care if it works properly or not...

We may still end up in a situation where all of Nvidia's Blackwell GPUs in the GeForce RTX 50-series are monolithic chips, which I think is the most likely outcome, and the rumours suggest the lower-end client GPUs will be monolithic regardless. But the idea that the green team is at least testing it out is tantalising.

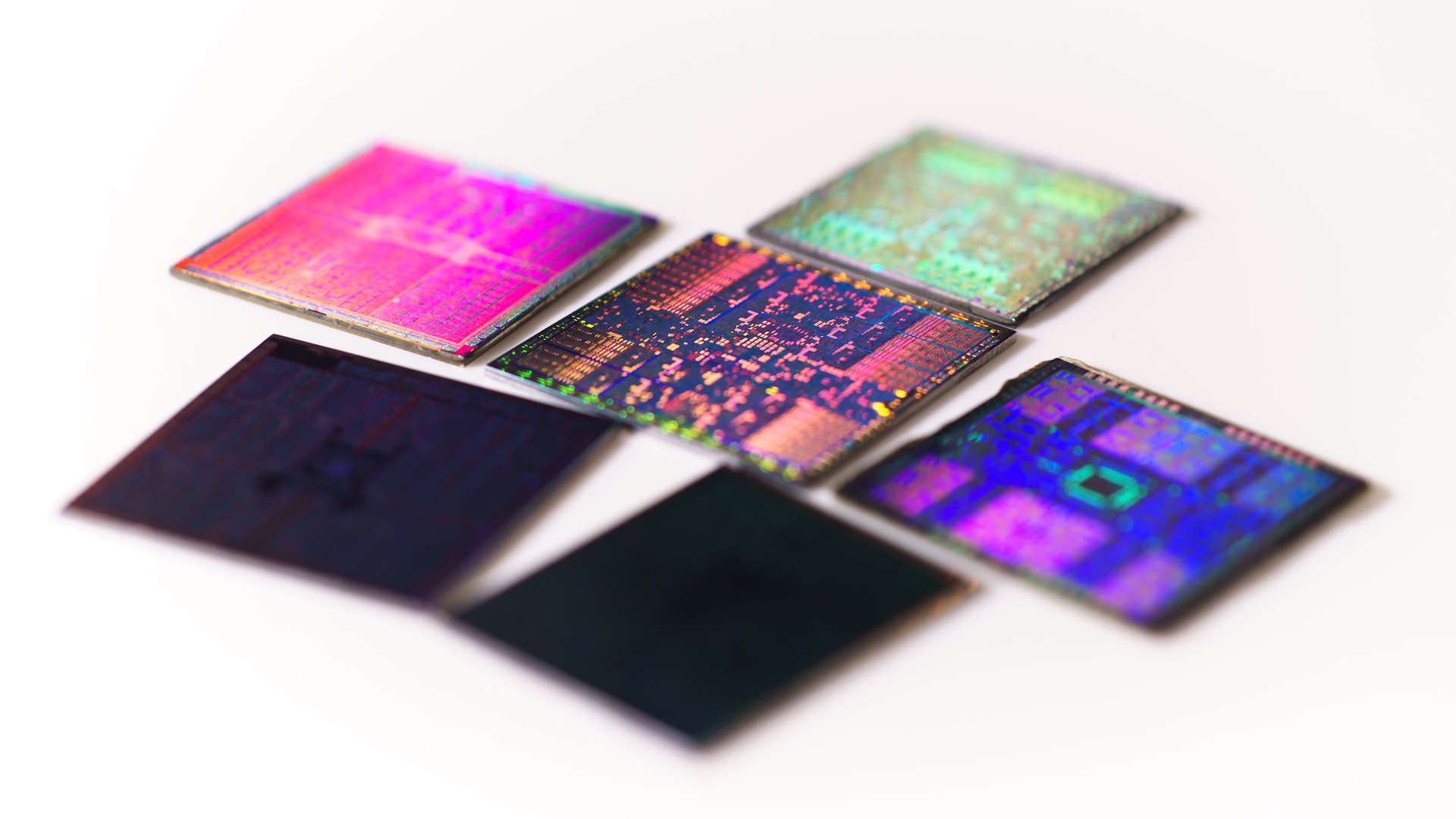

Because the future of GPUs ought to be chiplets, whether that's the next generation or in a few generations' time. Top-end monolithic GPUs are getting so big that they're monstrously expensive to manufacture, and disaggregating GPU components into discrete chiplets cuts those costs hugely. It also means you can develop a single compute die, the really complex GPU logic chiplet, and use multiples of it to build all the different cards in a stack. Lower R&D costs for different GPUs, lower manufacturing costs: win and win.
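To put some rough numbers on that cost argument, here's a back-of-the-envelope sketch using the simple Poisson yield model, where the fraction of defect-free dies is e^(-area × defect density). The 600 mm² die, the four 150 mm² chiplets, and the 0.1 defects/cm² figure are illustrative assumptions, not Nvidia or TSMC numbers, and the sketch ignores packaging, interconnect, and wafer-edge losses entirely.

```python
import math

def poisson_yield(die_area_mm2: float, defects_per_cm2: float) -> float:
    """Fraction of dies expected to be defect-free under a simple Poisson model."""
    area_cm2 = die_area_mm2 / 100.0  # 100 mm² per cm²
    return math.exp(-area_cm2 * defects_per_cm2)

def silicon_per_good_gpu(die_area_mm2: float, dies_per_gpu: int, defects_per_cm2: float) -> float:
    """Wafer area (mm²) consumed per working GPU, assuming every die is tested
    before packaging so only known-good dies are combined (an assumption)."""
    y = poisson_yield(die_area_mm2, defects_per_cm2)
    return dies_per_gpu * die_area_mm2 / y

# Hypothetical figures, chosen purely for illustration
DEFECT_DENSITY = 0.1  # defects per cm², assumed

mono = silicon_per_good_gpu(600, 1, DEFECT_DENSITY)     # one big 600 mm² die
chiplet = silicon_per_good_gpu(150, 4, DEFECT_DENSITY)  # four 150 mm² chiplets

print(f"Monolithic 600 mm² die yield: {poisson_yield(600, DEFECT_DENSITY):.1%}")
print(f"150 mm² chiplet yield:        {poisson_yield(150, DEFECT_DENSITY):.1%}")
print(f"Silicon per good GPU, monolithic: {mono:.0f} mm²")
print(f"Silicon per good GPU, chiplets:   {chiplet:.0f} mm²")
```

With those made-up inputs the big die yields about 55% and each small chiplet about 86%, so the chiplet approach needs roughly a third less wafer area per working GPU, before the extra cost of advanced packaging starts eating into that saving.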

It's just that rendering game frames across multiple chiplets is tough.

You only have to look at the mess SLI and CrossFire got into trying to do that across multiple graphics cards to see how difficult it is. But it's not impossible. The first chip maker to get a gaming GPU running happily with multiple compute dies is almost certainly going to clean up.

Dave has been gaming since the days of Zaxxon and Lady Bug on the ColecoVision, and code books for the Commodore VIC-20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.