What you need to know when you're upgrading your RAM

A fresh look at the state of DDR4 in 2018.

We often describe the PC as having its own ecosystem, as though it’s some form of living entity, and the symbiotic relationship between each component is almost a perfect example of Aristotle’s (oft misparaphrased) saying: “The whole is more than the sum of its parts.” Admittedly, the Greek philosopher was likely referring to metaphysics and ideologies, but we'll pretend he was talking about computers.

After all, you only have to take one core component out of the typical system to render the machine incapable of function. But by themselves, the components hold far less value. So when something dramatic happens—like, say, the price of RAM skyrockets—it's tempting to buy cheaper components. Makes sense! But before you choose what memory to buy, you should make sure you understand how memory works and what your money gets you. So let's bust out the magnifying glass and shine a light on the volatile world of memory.

The obsession with memory speed

Memory is a complex subject. For more reading on how memory works, check out our “What is RAM” primer.

Memory speed is one of the most convoluted specifications around, and is often misrepresented. Way back when, in the early days of SDRAM development, the megahertz measurement was the correct way of advertising the associated speeds of memory. In short, every single solid-state component in your machine operates at a specific frequency, measured in hertz (Hz). Whether it’s your processor, GPU, memory, or even SSD, each one operates on a clock cycle. Like the ticking of a clock, each tick represents a single cycle (the opening and closing of a transistor gate, in this case), and hertz counts how many of those cycles happen per second. A speed of 1Hz, for example, is one cycle per second; 2Hz is two per second; 1MHz is 1,000,000 cycles per second; you get the picture.

The problem is, when DDR (or double data rate) RAM came on the scene, it changed how data transfers were registered. Instead of only transferring data once, on the rising edge of each clock cycle, it could now also perform an additional transfer on the falling edge of that same cycle, effectively doubling the rate at which the DIMM could move data. The accurate unit for measuring data transfers then shifted from MHz to MT/s (megatransfers per second) to adjust for this change, despite the fact that memory still operated at the same frequency. However, marketing apparently didn’t get that memo, because many companies, in a bid to tout it as the next big thing, ignored the MT/s figure, instead referring to it as MHz. Technically speaking, modern-day memory quoted at 2,400MHz, for instance, actually transfers at 2,400MT/s and only operates at half that frequency: 1,200MHz.
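To make the MT/s versus MHz distinction concrete, here is a minimal Python sketch of the conversion. The function name and the list of example ratings are our own illustrative choices, and the only assumption is the two-transfers-per-clock behavior described above.

```python
def clock_mhz_from_transfer_rate(transfer_rate_mts: float) -> float:
    """Return the real clock frequency (MHz) behind a DDR rating given in MT/s."""
    # DDR moves data on both the rising and falling edge of the clock,
    # so there are two transfers per clock cycle.
    return transfer_rate_mts / 2


# Example ratings chosen for illustration only.
for rating in (2400, 2666, 3200):
    clock = clock_mhz_from_transfer_rate(rating)
    print(f"Rated {rating}MT/s -> clock runs at {clock:.0f}MHz")
```

So a kit sold as "2,400MHz" is really a 2,400MT/s kit running on a 1,200MHz clock.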

Why timings and latency matter

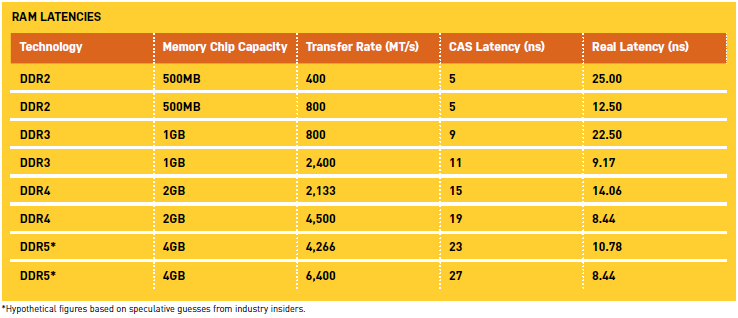

The next part of the holy trinity of memory specifications revolves around timings and latency. There are a ton of them, but the most important one you need to keep in mind is the CAS latency. Referring to the Column Address Strobe, this figure indicates how many clock cycles it’s going to take for the memory module to access a particular memory location, either to store or retrieve a bit of data held there, ready for processing by the CPU.

That said, this figure on its own doesn’t give you all the information you need. It’s only when you combine it with the memory transfer rate we mentioned above that you get a better picture of just how fast your memory modules are.

So, how do we get a figure that makes any sort of logical sense to us consumers? Well, there’s a handy formula that converts CAS latency and MT/s into a real-world latency: Latency = (2,000/Y) x Z, where Y is your RAM’s speed in MT/s, and Z is your CAS latency. So, as an example, if we take a 2,666MT/s memory kit, operating with a CAS latency of 15, we get a real-world result of 11.25ns. This tells us approximately the total time it takes for that memory module to access, store, or request a bit of data from a location on the module.
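If you'd rather not do that sum by hand, the formula drops straight into a few lines of Python. This is only a sketch: the function and parameter names are ours, and it covers CAS latency alone, not the other timings.

```python
def cas_latency_ns(transfer_rate_mts: float, cas_cycles: int) -> float:
    """Convert a kit's MT/s rating and CAS latency (in cycles) to nanoseconds."""
    # Latency = (2,000 / Y) x Z, where Y is the MT/s rating and Z the CAS latency.
    # 2,000 / Y is the length of one clock cycle in nanoseconds, because the
    # clock runs at half the transfer rate.
    return (2000 / transfer_rate_mts) * cas_cycles


# The example from the text: 2,666MT/s at CAS 15.
print(f"{cas_latency_ns(2666, 15):.2f}ns")  # prints 11.25ns
```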

This is where overclocking memory typically comes unstuck. Generally, the higher the frequency, the higher the CAS latency, and as such, real-world performance gains are often slim unless the CAS latency rises more slowly than the frequency does. When it comes to the “best” performing memory, what you’re looking for is a kit that has a high frequency, a low CAS latency, and the necessary capacity (see “How much memory?”) to do what you want to do.
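To see why a higher frequency doesn't automatically win, here is a rough Python comparison using the same latency formula. The three kits are hypothetical examples picked to illustrate the trade-off, not products we tested.

```python
def cas_latency_ns(transfer_rate_mts: int, cas_cycles: int) -> float:
    """Real-world CAS latency in nanoseconds: (2,000 / MT/s) x CAS cycles."""
    return 2000 * cas_cycles / transfer_rate_mts


# Hypothetical kits for illustration: (MT/s rating, CAS latency in cycles).
kits = [(2400, 15), (3200, 20), (3200, 16)]

# List the kits from lowest to highest real-world latency.
for rate, cas in sorted(kits, key=lambda kit: cas_latency_ns(*kit)):
    print(f"{rate}MT/s CL{cas}: {cas_latency_ns(rate, cas):.2f}ns")
```

The 3,200MT/s CL20 kit lands on exactly the same 12.5ns as the 2,400MT/s CL15 kit, so its extra frequency buys bandwidth but no latency improvement. Only the 3,200MT/s CL16 kit, at 10ns, is quicker on both counts.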

Where does DDR4 come from?

To understand how memory capacity is calculated, we have to look at how the chips themselves are designed. This starts with JEDEC, an association of over 300 different companies that focus on solid-state technology. Its task is to ensure that universal standards are used across the registered companies when it comes to solid-state tech, with a particular emphasis on DRAM, solid-state drives, and interfaces (NVMe and AHCI, for instance). What this does is ensure that the consumer won’t have to pick between four different connection standards for DDR4, for example, and everything is consistent across multiple manufacturers—in other words, no proprietary BS. As far as memory is concerned, JEDEC lists the criteria that, say, DDR4 needs to adhere to—for instance, the number of pin outs, the dimensions of the chips, the maximum power draw, and more.

That spec is then handed over to the manufacturers, such as Samsung, SK-Hynix, Toshiba, and Micron, to produce memory chips to their own specifications that fit into JEDEC’s criteria. Typically, the differences lie within the size of the manufacturing process used (which additionally affects power draw). These chips are then bought by aftermarket partners, such as Corsair, HyperX, G.Skill, and so on, to be assembled with their own PCBs, heatsinks, and aftermarket features (RGB LEDs, anyone?), while, of course, still adhering to JEDEC’s original outline.

Currently, due to limitations in transistor size, DDR4 chips are limited to just 1GB each, although a theoretical 2GB per chip is well within the JEDEC specification. In the consumer market, most DIMMs can only support up to a maximum of 16 memory chips per stick, meaning a maximum capacity of 16GB per DIMM is possible. You can find larger sticks in the enterprise market, typically featuring up to 32GB per stick, but these are designed for server use rather than the consumer market, and are priced into the thousands.
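The capacity math here is simple multiplication, sketched below in Python. The function name is ours, and the 2GB-per-chip case is only the theoretical figure mentioned above, not something you can buy on a consumer DIMM today.

```python
def dimm_capacity_gb(chips_per_dimm: int, gb_per_chip: int) -> int:
    """Total DIMM capacity is the number of memory chips times the capacity of each."""
    return chips_per_dimm * gb_per_chip


# Today's consumer ceiling: 16 chips at 1GB each.
print(dimm_capacity_gb(16, 1))  # prints 16

# The same 16-chip layout with theoretical 2GB chips.
print(dimm_capacity_gb(16, 2))  # prints 32
```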

How much memory?

So, in this world of hyperinflated memory prices, just how much DDR4 is enough for what you want to do? It’s tricky. A year or so ago, we would have happily sat here and recommended 64GB of DDR4 for any video editor or content creator out there. But, to be honest, given the ludicrous price increases (more on that later), that’s just not a practical or good use of your money in this day and age.

4GB If all you’re building is a home theater PC or a machine for low-end office work (we’re not talking 4,000-cell Excel files here), then arguably a single 4GB stick of low-spec DDR4 should be perfect for the job. Couple that with a low-end Pentium part, and you’re all set. For those keen miners out there, this is also the ideal spec for a cryptocurrency mining rig, because mining is typically not as memory-intensive as other more mainstream applications (but you should pass on the Pentium if you’re planning to mine CPU-intensive currencies).

8GB If entry-level gaming is your jam, 8GB (2x 4GB) is the absolute minimum we’d recommend at this point in time. Ironically, the lower the amount of VRAM on your GPU, the more likely the system is to cache overly large texture files in system RAM instead (we’re looking at you, CoD: WWII), and dual-channel helps with those massive transfers.

16GB For mid-range to high-end gaming, you absolutely need that 16GB of DDR4. Annoyingly, more and more games are starting to use more and more memory. Yearly releases and poorly optimized titles are putting more strain on system memory, with Star Citizen recently announcing a minimum spec of 16GB of RAM purely to run the Alpha. 16GB is also a nice middleweight RAM capacity for any task you want to throw at your rig. Whether that’s extensive office work, Photoshop, entry-level videography, you name it, it’s the right spec for you.

32GB For anyone who makes a living from 3D modeling and content creation, 32GB is the way to go for the time being. Although this will likely set you back $400 or more, it’s absolutely the right amount for applications such as After Effects, 4K Premiere Pro, and more.

32GB-plus For now, unless you can somehow get it aggressively subsidized, anything beyond 32GB just isn’t worth it, at least not until prices drop below that $500 mark once more.

The DDR4 price hike

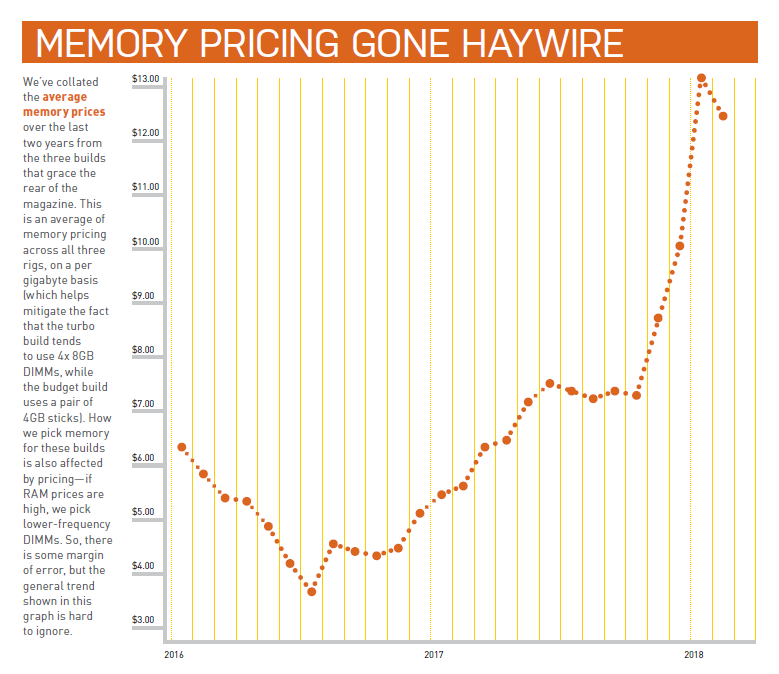

If you haven’t built or upgraded a machine recently, you might not realize that memory pricing has gone crazy over the last 12–18 months. Memory kits have seen their prices at least double during this period, with some almost tripling. That means that while, a year or two ago, the amount of memory you put in a system could be decided on a whim, it now has to be a carefully thought-out plan if you want to keep any machine up to date and capable of the workloads intended. But why have prices increased so much? And what are the chances of them coming back down to more reasonable levels?

The biggest problem for DRAM is that producing flash is far more lucrative. The reason for this is primarily down to one thing: smartphones. The specifications for these tiny powerhouses are constantly on the rise, so the amount of storage and RAM they ship with has risen sharply recently. As they are premium products, the amount of money being charged for them has gone through the roof.

You might be sitting there thinking that high-speed desktop DDR4 RAM has nothing to do with NAND flash, and in a sense you’d be right, but the plants that manufacture NAND are also the ones responsible for our beloved DDR4. So, because it’s financially more advantageous to switch over to NAND, that’s exactly what has happened, and will probably keep happening for a while yet. It might be important to us, but desktop RAM is relatively low on the pecking order as far as manufacturers are concerned.

There is another reason for the current high prices, though, and that’s down to market demand. We had some decent platform releases last year, which meant there has been a slew of people in the market looking to buy more RAM. Yes, it’s Ryzen’s fault. Well, not really, but the new platform has certainly not helped the situation.

If you’re looking to upgrade a system with more RAM, it could be worth hanging tight for a little longer, because the memory market has a history of righting itself once serious money is involved. (Not to mention class-action lawsuits.) If you’re looking to build a new system, your options aren’t as obvious, because the simple fact of the matter is that RAM is going to cost you. Buy wisely. And be ready to pounce when pricing does return to more reasonable levels.

Memory channels and bandwidth

What is channel bandwidth and how does it affect memory? Think of it as the maximum amount of data that can be transferred at any one time between your system and the memory installed. It’s calculated using MT/s, the width of the memory bus, and the number of memory channels your system supports. So, for a typical Ryzen 7 1800X system, featuring 16GB (2x8GB) of 3,200MT/s DDR4, it’s something like this:

3,200,000,000 transfers per second (3,200MT/s) x 64 (64-bit bus) x 2 (dual-channel) = 409.6 billion bits per second, or 51,200MB/s, or 51.2GB/s.

That’s the absolute maximum amount of data the system could transfer between the memory and the processor at any given time, before bottlenecking. If you were to use a 4x4GB kit on your dual-channel board, bandwidth wouldn’t increase, because the processor can still only read and write from two memory channels at a time (thus the dual-channel spec), despite the fact that you have four DIMMs installed. On the flip side, installing just one DIMM cuts that figure in half.

The X299 and X399 platforms go one step further with quad-channel memory, which again doubles the bandwidth. But latency stays the same (or is even worse in some cases).
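The bandwidth sum above generalizes to any channel count, as this short Python sketch shows. The function name is ours, and running the quad-channel case at the same 3,200MT/s is purely an assumption for comparison; real platforms may rate their memory differently.

```python
def peak_bandwidth_gb_s(transfer_rate_mts: int, channels: int, bus_width_bits: int = 64) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes)."""
    # transfers per second x bits per transfer x number of channels
    bits_per_second = transfer_rate_mts * 1_000_000 * bus_width_bits * channels
    # 8 bits per byte, 1,000,000,000 bytes per GB
    return bits_per_second / 8 / 1_000_000_000


for label, channels in (("single", 1), ("dual", 2), ("quad", 4)):
    print(f"{label}-channel at 3,200MT/s: {peak_bandwidth_gb_s(3200, channels):.1f}GB/s")
```

That works out to 25.6GB/s for a lone DIMM, 51.2GB/s for dual-channel, and 102.4GB/s for quad-channel. The number of DIMMs never appears in the formula; only the number of channels does, which is why a 4x4GB kit on a dual-channel board gains nothing.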

For most applications, dual-channel memory kits provide you with more than enough bandwidth for everything you want to do on your desktop. However, for applications that manipulate massive data sets, textures, and more, additional memory channels can eliminate potential bottlenecks, because more powerful processors can chew through larger data sets faster than a dual-channel setup can feed them. 4K, 5K, and 8K video editing in After Effects, for instance, benefits greatly from having access to both a larger memory capacity and increased memory bandwidth, thanks to quad-channel support.

The future

JEDEC is poised to announce the next-gen memory standard (DDR5) later this year, and has confirmed a few details for us: “DDR5 will offer improved performance, with greater power efficiency compared to previous generation DRAM technologies. As planned, DDR5 will provide double the bandwidth and density over DDR4, along with delivering improved channel efficiency.”

That’s exciting, if only for the fact that power consumption should drop—DDR3 sat at 1.5V and DDR4 at 1.2V, so it’s likely we’ll see DDR5 at 1.0V or lower. The doubling of density means we’ll likely see mobos double in max capacity, too, with mainstream platforms maxing out at 128GB, and high-end desktops hitting a 256GB limit. Expect initial MT/s figures to hit around 4,133MT/s, with CAS timings at 27 cycles (if not more), or 13ns latency. However, it’s unlikely to come into production until late 2019.

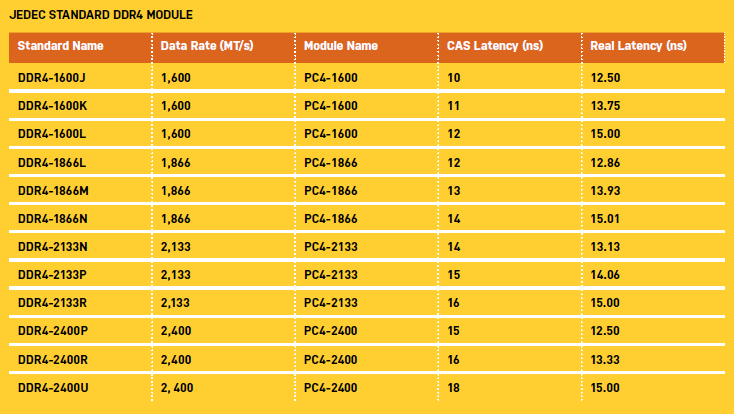

The JEDEC standards

What exactly are JEDEC’s standards? And why are they necessary? The biggest reason they exist is to ensure consumers have a non-convoluted platform. They also ensure that motherboard manufacturers don’t have to design four different types of motherboard, just because Corsair has its own connection standard, HyperX another, and G.Skill a slightly different variant. You get the picture. Think of it like USB, but for memory. The big benefit is that all 300 members can pool their resources to accelerate technological development without any one of them getting a competitive edge, which saves them all time and money in the process.

It’s worth noting that JEDEC’s standards are on the fairly conservative side of things when it comes to memory frequency (after all, they’re designed to work with everything from desktops to servers and supercomputers), and DDR5 has yet to be clarified in its entirety, but you can see from the table below just what standards each manufacturer has to adhere to. Below is a partial list of the DDR4 standards; the full specifications are available from JEDEC for a fee, or you can look at Wikipedia's list of official JEDEC DDR4 standards.

Alan has been writing about PC tech since before 3D graphics cards existed, and still vividly recalls having to fight with MS-DOS just to get games to load. He fondly remembers the killer combo of a Matrox Millenium and 3dfx Voodoo, and seeing Lara Croft in 3D for the first time. He's very glad hardware has advanced as much as it has though, and is particularly happy when putting the latest M.2 NVMe SSDs, AMD processors, and laptops through their paces. He has a long-lasting Magic: The Gathering obsession but limits this to MTG Arena these days.