What is RAM (Random Access Memory)?

A primer on memory, how it works, and how much you need.

Everything you need to know about memory

Our modern PCs consist of seven primary components: CPU, motherboard, GPU, RAM, storage, PSU, and the case to hold it all. While the CPU and graphics card usually get all the glory, each component plays a critical role. Our focus here is on the RAM, or Random Access Memory, and we'll talk about how it works, how much you need, and how the various specifications affect performance.

To understand why we need RAM in the first place, let's step back and look at the big picture of how our PCs work. At the top are the CPU and GPU, which run instructions on various bits of data. (Note: I'm not going to dig into the GPU aspect much, as VRAM in most cases is similar to system RAM, only with a wider bus and higher clockspeeds.) This data can reside in several places, from the processor registers, to one of the cache levels, to RAM, or for long-term storage it could be on your HDD, SSD, or even on the Internet.

The CPUs and GPUs that do all the serious work chew through data at an incredibly fast rate, but the more data you need to store, the slower the rate of access. So our PCs have a tiered data hierarchy, where the fastest levels are also the smallest, and the largest levels are the slowest. If you take the Core i7-8700K as an example, peak throughput allows it to fetch, decode, and execute six 32-bit instructions per clock cycle, with each instruction potentially requiring two 32-bit operands (pieces of data). At 4.3GHz on all six CPU cores, it could consume up to 1.85 TB/s of data, but that much data requires a lot of space.
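The 1.85 TB/s figure can be roughly reproduced with some back-of-the-envelope arithmetic. The sketch below assumes each instruction is fetched as one 32-bit word alongside its two 32-bit operands; the function name and that breakdown are illustrative assumptions, not a published Intel figure.

```python
def peak_demand_bytes_per_second(instructions_per_cycle, operands_per_instruction,
                                 word_bits, clock_hz, cores):
    """Upper bound on bytes consumed per second if every slot is used every cycle."""
    # Assumption: one instruction word plus its operands, all the same width.
    words_per_instruction = 1 + operands_per_instruction
    bytes_per_cycle = instructions_per_cycle * words_per_instruction * word_bits // 8
    return bytes_per_cycle * clock_hz * cores


# Core i7-8700K numbers from the text: 6 instructions per clock, 32-bit words,
# 4.3GHz, six cores.
demand = peak_demand_bytes_per_second(6, 2, 32, 4.3e9, 6)
print(f"{demand / 1e12:.2f} TB/s")
```

That works out to 72 bytes per core per clock, or just under 1.86 TB/s across all six cores, which is in line with the figure quoted above.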

The Kitchen and the Chef

It's analogy time, as hopefully this will help you visualize what's going on inside your PC. Think of your PC as a kitchen at a popular restaurant, where the CPU is the chef fixing various dishes. The chef has immediate access to the various stove burners, oven, blender, microwave, and other utensils—these are the processor registers. Nearby, within easy reach, are all the seasonings, ingredients, and other items that are actively being used—the L1 cache. Close at hand but not quite as convenient are additional pots, pans, plates, etc. in the cupboard—the L2 cache. The chef can get these with a minimal delay if needed. The L3 cache then ends up being somewhat like the pantry—a short few steps away, but still readily accessible.

Where does RAM sit in this analogy? It would be everything else inside the restaurant, including things in the freezer and refrigerator that may need to be thawed out before use. It could also include dirty dishes that need to be cleaned before use. There's plenty of room to store just about anything the chef might need, but a frozen steak can't just be thrown into a frying pan or oven. The chef needs to dispatch an assistant to go grab things and bring them back.

This is a high-tech kitchen, so long-term storage is a massive stasis chamber where everything put there will never, ever go bad. The chef can get at everything he might ever want, but instead of a delay of seconds or maybe a minute, now the chef needs to wait an hour or two while an assistant gets it out of stasis. During this time the chef (aka, the CPU) might end up sitting around twiddling his thumbs if there's nothing else to do.

At the close of business (ie, when you power off your PC), the chef and assistants can't just leave food sitting out on the counters. Everything needs to get packed up and stored for the night in the gigantic stasis chamber. Otherwise it might spoil. Your PC's RAM is like that as well—RAM can't retain data without power. When your PC is turned off, all the data that was in RAM is gone. That's why RAM is sometimes referred to as 'volatile' storage, as opposed to non-volatile storage like flash memory (SSDs) and magnetic platters (HDDs). When an application wants to save data for future use, that data needs to be placed into long-term, non-volatile storage. Short of your SSD or HDD failing (which can occur, so be sure to have some form of backup system in place), things on your drives don't disappear unless you intentionally delete them.

What does this mean for PCs?

Getting back to PCs, our long-term storage devices are incredibly slow, at least when compared with a CPU's speed. SSDs can read and write data at 500MB/s to 4GB/s, which is several orders of magnitude slower than a fast CPU (1,800GB/s). A hard drive meanwhile chugs along at an agonizingly slow 100-200MB/s at best, or even 1-2MB/s in random IO. That's completely insufficient for the needs of any modern processor, and it's why modern PCs need lots of RAM and have several levels of caching.
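To put "orders of magnitude" into numbers, here's a small sketch that compares the storage speeds mentioned above against the 1,800GB/s CPU figure. The device labels and the choice of representative values from each range are assumptions made for illustration.

```python
import math

CPU_PEAK_GB_S = 1800  # peak CPU demand quoted in the text

# Throughput figures from the text, converted to GB/s.
storage_gb_s = {
    "SSD (fast end)": 4.0,
    "SSD (slow end)": 0.5,
    "hard drive (sequential)": 0.2,
    "hard drive (random IO)": 0.002,
}


def orders_of_magnitude_slower(device_gb_s, cpu_gb_s=CPU_PEAK_GB_S):
    """log10 of the ratio between CPU demand and what the device can supply."""
    return math.log10(cpu_gb_s / device_gb_s)


for name, rate in storage_gb_s.items():
    print(f"{name}: {CPU_PEAK_GB_S / rate:,.0f}x slower, "
          f"about {orders_of_magnitude_slower(rate):.1f} orders of magnitude")
```

Even the fastest SSD in that range lands between two and three orders of magnitude short, and a hard drive doing random IO is closer to six.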

A Core i7-8700K CPU can eat up to 1.85TB of data per second but using dual-channel DDR4-3200 RAM will only get you 51.2GB/s—or 42.7GB/s if you stick with DDR4-2666. So best-case, system RAM is only capable of supplying about 2-3 percent of the CPU's data needs. But the cache hierarchy mitigates the slowness of RAM, just like RAM helps to mitigate the slowness of long-term storage.
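The bandwidth numbers come from a simple formula: transfers per second, times bytes per transfer, times the number of channels. The sketch below relies on each DDR4 channel being 64 bits wide, a detail the text doesn't spell out; the function and variable names are just for illustration.

```python
def peak_bandwidth_gb_s(transfers_mt_s, channels, bus_width_bits=64):
    """Theoretical peak bandwidth: transfers/s x bytes per transfer x channels."""
    bytes_per_transfer = bus_width_bits // 8
    return transfers_mt_s * 1e6 * bytes_per_transfer * channels / 1e9


CPU_PEAK_GB_S = 1850  # the 1.85TB/s figure quoted in the text

for speed in (3200, 2666):
    bandwidth = peak_bandwidth_gb_s(speed, channels=2)
    share = 100 * bandwidth / CPU_PEAK_GB_S
    print(f"DDR4-{speed} dual-channel: {bandwidth:.1f} GB/s, "
          f"{share:.1f}% of CPU peak")
```

Changing `channels` to 1 or 4 shows how single-channel and quad-channel setups scale, at least on paper.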

Raw bandwidth figures don't even come close to telling the complete story, however. DDR4-2666 can crank out up to 42.7GB/s in an ideal situation, but things aren't always ideal. Each request to get data out of RAM ends up going through a lengthy process, and how long the steps take is what the various RAM timings tell us.

Understanding memory speeds and timings

Most RAM comes with an advertised clockspeed and four timings, so you might see something like a DDR4-2400 15-15-15-39 kit. The speed is the easy part: it's the number after DDR4, except that number is the speed in mega-transfers per second (MT/s). DDR4-2400 means 2400 MT/s, and because DDR memory transfers data on both the rising and falling edges of the clock, the base clock is half that value, or 1200MHz in this case. The timings are listed as CL-tRCD-tRP-tRAS, and each is the number of memory clock cycles required for that operation.
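As a rough illustration of how to read one of these labels, the sketch below splits a kit description into its transfer rate, base clock, cycle time, and four timings. The `parse_kit` helper and its label format are hypothetical, chosen to match the example in the text.

```python
def parse_kit(label):
    """Split a label such as 'DDR4-2400 15-15-15-39' into its parts."""
    speed, timings = label.split()
    transfers_mt_s = int(speed.split("-")[1])
    # Timings are listed in the order CL-tRCD-tRP-tRAS.
    cl, trcd, trp, tras = (int(value) for value in timings.split("-"))
    clock_mhz = transfers_mt_s / 2  # two transfers per clock cycle
    return {
        "transfers_mt_s": transfers_mt_s,
        "clock_mhz": clock_mhz,
        "cycle_ns": 1000 / clock_mhz,  # duration of one memory clock cycle
        "CL": cl,
        "tRCD": trcd,
        "tRP": trp,
        "tRAS": tras,
    }


kit = parse_kit("DDR4-2400 15-15-15-39")
for key, value in kit.items():
    print(f"{key}: {value}")
```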

Memory is broken up into banks, rows, and columns. To pull a random byte of data from memory requires the memory bank to close the currently open row, open a new row, and then access a specific column. Thankfully, spatial locality means the currently open row is often what's needed, so the only delay is the amount of time it takes to access a column of data. That time is called the CAS (Column Address Strobe) Latency, abbreviated CL.

The other timings, which I won't delve into much, are the RAS to CAS delay (tRCD), Row Precharge (tRP), and Row Active Time (tRAS). tRCD is the number of cycles between when a row is opened and when that row is ready for a column access. tRP is the number of cycles needed to close (precharge) the currently open row before a different row in the same bank can be opened, so it only comes into play when accessing a new row. And finally, tRAS is the minimum number of cycles a row has to stay open before it can be closed again. It's pretty high (usually a bit more than twice the CL), but rows aren't swapped that often, and tRAS only adds a delay when a row hasn't been open long enough, so a high tRAS won't hurt performance too much.

In a worst-case scenario, where a memory row has just been opened and now data is desired from memory in a different row (from the same bank), the number of cycles required for the memory to access that data would be tRP + tRAS + tRCD + CL. With the above DDR4-2400 memory, that would require 84 memory cycles, with each cycle at 1200MHz taking 0.833ns. That would mean a potential delay of 70ns, which on a 4GHz CPU would mean about 280 CPU cycles of waiting. At the other end of the spectrum, if data is needed from a row that's already open, the only delay is CL, which would only be 12.5ns or 50 CPU clock cycles.
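The arithmetic above can be sketched in a few lines. This uses the simplified worst-case model from the text (tRP + tRAS + tRCD + CL) and a 4GHz CPU; the function names are made up for the example, and real memory controllers schedule commands in more complicated ways.

```python
def cycles_to_ns(memory_cycles, transfers_mt_s):
    """Convert memory clock cycles to nanoseconds.

    The memory clock runs at half the MT/s rating, so one cycle lasts
    2000 / transfers_mt_s nanoseconds.
    """
    return memory_cycles * 2000 / transfers_mt_s


def access_latency(transfers_mt_s, cl, trcd, trp, tras, cpu_ghz=4.0):
    """Best case (row already open) and the worst case described in the text."""
    cases = {"best": cl, "worst": trp + tras + trcd + cl}
    result = {}
    for name, cycles in cases.items():
        ns = cycles_to_ns(cycles, transfers_mt_s)
        result[name] = {
            "memory_cycles": cycles,
            "ns": ns,
            "cpu_cycles": ns * cpu_ghz,
        }
    return result


latency = access_latency(2400, cl=15, trcd=15, trp=15, tras=39)
for name, values in latency.items():
    print(f"{name}: {values['memory_cycles']} memory cycles, "
          f"{values['ns']:.1f} ns, {values['cpu_cycles']:.0f} CPU cycles")
```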

Either way, that's a lot of time where the CPU is waiting around for RAM to return the requested data. Thankfully, modern processors have prefetch logic where requests for data can be dispatched well in advance of when that data is needed, and RAM is divided up into multiple banks so that bank 0 could be sending data while bank 4 is still working on opening a new row. Think of prefetch as a chef assistant that constantly looks at what the chef is making and tries to predict what will be needed next, and then goes to get that before it's actually requested. Basically, there's a lot of extra stuff in our PCs to try to avoid relying too much on memory speed.

How much does memory speed impact overall performance?

Given the above discussion of memory speeds and timings, you can hopefully begin to see why memory performance isn't the holy grail of modern PCs. Take bog standard DDR4-2133 CL16 memory, which would have a CL access time of 15ns, or 60 CPU cycles on a 4GHz processor. That's a lot of wasted CPU cycles, especially on a multi-core processor. Now compare that to the same CPU with high-end DDR4-3200 CL14 memory, which has a CL access time of 8.75ns, or only 35 cycles. That's better, but the CPU would still be very inefficient if it had to wait on RAM all the time.

In practice, prefetching logic combined with the cache hierarchy means the delays of system RAM are often hidden, or at least reduced. DDR4-3200 CL14 might offer 50 percent more bandwidth than DDR4-2133 CL16, and it might have 42 percent lower latencies, but your PC won't suddenly become 50 percent faster by upgrading memory. I'll be doing some memory performance tests in the future on the i7-8700K to see just how much of a difference RAM speed and timings make on a modern PC, but generally you're looking at only a 5-10 percent improvement in performance when going from the slowest DDR4 to the fastest DDR4, and there are diminishing returns, so a moderate option like DDR4-2666 might only be 2-3 percent slower than a top-rated kit.
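If you want to run this comparison for your own kit, the CAS latency in nanoseconds is just CL divided by the memory clock. Here's a minimal sketch for the two kits discussed above, assuming a 4GHz CPU; the helper name and dictionary layout are illustrative.

```python
def cas_latency_ns(transfers_mt_s, cl):
    """CAS latency in nanoseconds; the memory clock is half the MT/s rating."""
    return cl * 2000 / transfers_mt_s


CPU_GHZ = 4.0
kits = {"DDR4-2133 CL16": (2133, 16), "DDR4-3200 CL14": (3200, 14)}

latencies = {}
for name, (speed, cl) in kits.items():
    latencies[name] = cas_latency_ns(speed, cl)
    print(f"{name}: {latencies[name]:.2f} ns, "
          f"about {latencies[name] * CPU_GHZ:.0f} CPU cycles")

bandwidth_gain = 3200 / 2133 - 1
latency_drop = 1 - latencies["DDR4-3200 CL14"] / latencies["DDR4-2133 CL16"]
print(f"Bandwidth gain: {bandwidth_gain:.0%}, latency reduction: {latency_drop:.0%}")
```

Swap in other speed and CL pairs to see why a faster kit with looser timings doesn't always come out ahead on latency.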

How much memory do you need?

Besides memory performance, it's also important to consider capacity. With memory prices having increased by over 100 percent during the past 18 months, finding a good balance between RAM speed and capacity is a critical decision. Budget 2x4GB kits of DDR4 now start at $90 instead of $35, and 2x8GB kits go for $150 or more, with 2x8GB DDR4-3200 CL15 at $215, and 2x8GB DDR4-4000 memory starts at $260. Increased demand from the mobile sector, along with some supply problems on the manufacturing side of things, has resulted in a slow but steady increase in RAM prices.

Those are all extremely painful prices to see, and while I'd love to say 16GB is the minimum amount of RAM to put in a PC, that's not practical anymore. The good news is that for gaming purposes, going beyond 8GB isn't strictly necessary—unless you're running a lot of other applications at the same time. However, it's worth stating that 4GB really isn't enough, so don't bother with a 1x4GB build (unless you plan on adding a second 4GB DIMM in a month or two).

In general, 8GB is a good target for entry-level and budget gaming PCs, while 16GB is the sweet spot. We've been at a plateau for RAM requirements the past several years, and that's good in the sense that we're no longer constantly needing to upgrade our PCs. But it's also a bit disappointing, as it signals the end of the rapid scaling of our PC capabilities. We're no longer doubling performance every couple of years, and certainly not doubling RAM requirements.

Of course, if you do a lot of heavy image and video editing, there's reason to go beyond 16GB. Workstation builds with 64GB or even 128GB are available, but at extreme prices. For gaming purposes, 8GB is reasonable and 16GB should be more than sufficient for the next several years.

What about memory channels?

Besides performance and capacity, there's another element that comes into play, the number of memory channels. This is mostly dictated by your platform choice, so you can't run quad-channel memory on a dual-channel CPU, but it's (usually) possible to use fewer channels than the maximum that your platform supports.

All the mainstream platforms (Intel LGA1151 and AMD AM4) support dual-channel DDR4 configurations, the enthusiast platforms (Intel LGA2011-v3 and LGA2066, or AMD TR4) support quad-channel DDR4, and some servers support up to eight-channel memory. In the above analogy, think of each memory channel as an assistant to the chef. Having one assistant may not be enough, two is probably the sweet spot, and four or more assistants will likely result in some of them sitting around waiting for things to do. Speed is also a factor, so one assistant that's twice as fast could equal two slower assistants, but as pointed out above, memory speed isn't a huge factor in overall system performance.

If you only populate a single memory slot on a dual-channel system, you get half the bandwidth, but caching already helps to hide a lot of the delays associated with system RAM. For gaming performance using a dedicated graphics card, running in single-channel usually only drops performance 5-15 percent. It's not the end of the world, but since it's pretty easy to use a dual-channel setup I still recommend that. On quad-channel platforms, the potential change in performance is even smaller—games in particular are often more sensitive to memory timings (latency) than memory bandwidth. So running dual-channel instead of quad-channel may only drop performance a few percent. Professional applications might show a larger change, however, and some motherboards require that you populate all four channels for stability.

In order to properly utilize all the available channels, you need at least one DIMM per channel. You'll get the best results by purchasing a matched set of DIMMs for your platform, so a 4x4GB kit for a quad-channel platform, or a 2x4GB kit for a dual-channel platform. It's theoretically possible to mix and match sticks of RAM, but your system will end up running at the lowest common denominator, assuming it runs at all.

There is one area where dual-channel vs. single-channel can make a much bigger difference, however, and that's integrated graphics. GPUs are designed to use a lot of data, and they're built to deal with the higher latencies. All integrated graphics solutions are slow compared to a decent discrete graphics card (eg, a $100 GTX 1050), but for laptops and family PCs sometimes you have to live with the processor graphics. In that case, dual-channel memory can sometimes improve gaming performance by 25 percent or more compared to single-channel memory. Of course, adding a decent graphics card is still a bigger jump in performance.

Getting the most from your RAM

Now that you have a better understanding of what RAM is, how the various speeds and timings impact performance, and how much RAM you'll need, let me end with a few parting thoughts.

RAM can be one of the more frustrating elements of building a new PC, particularly if you're putting together a budget build. Skimp on the RAM quality and you may end up with a PC that won't even boot! This doesn't happen much now (it was a bigger problem in the aughts), but all motherboard manufacturers provide a qualified vendor list (QVL) of memory that has specifically been tested with the board and that is guaranteed to work. If you're worried about compatibility, start with the QVL for your board.

In practice, I've found it's usually best to just buy memory from a major brand. In alphabetical order, Adata, Corsair, Crucial, GeIL, G.Skill, Kingston, Mushkin, Patriot, Samsung, and Team are all safe choices. Others might work fine as well, but I haven't used anything that wasn't from one of those companies.

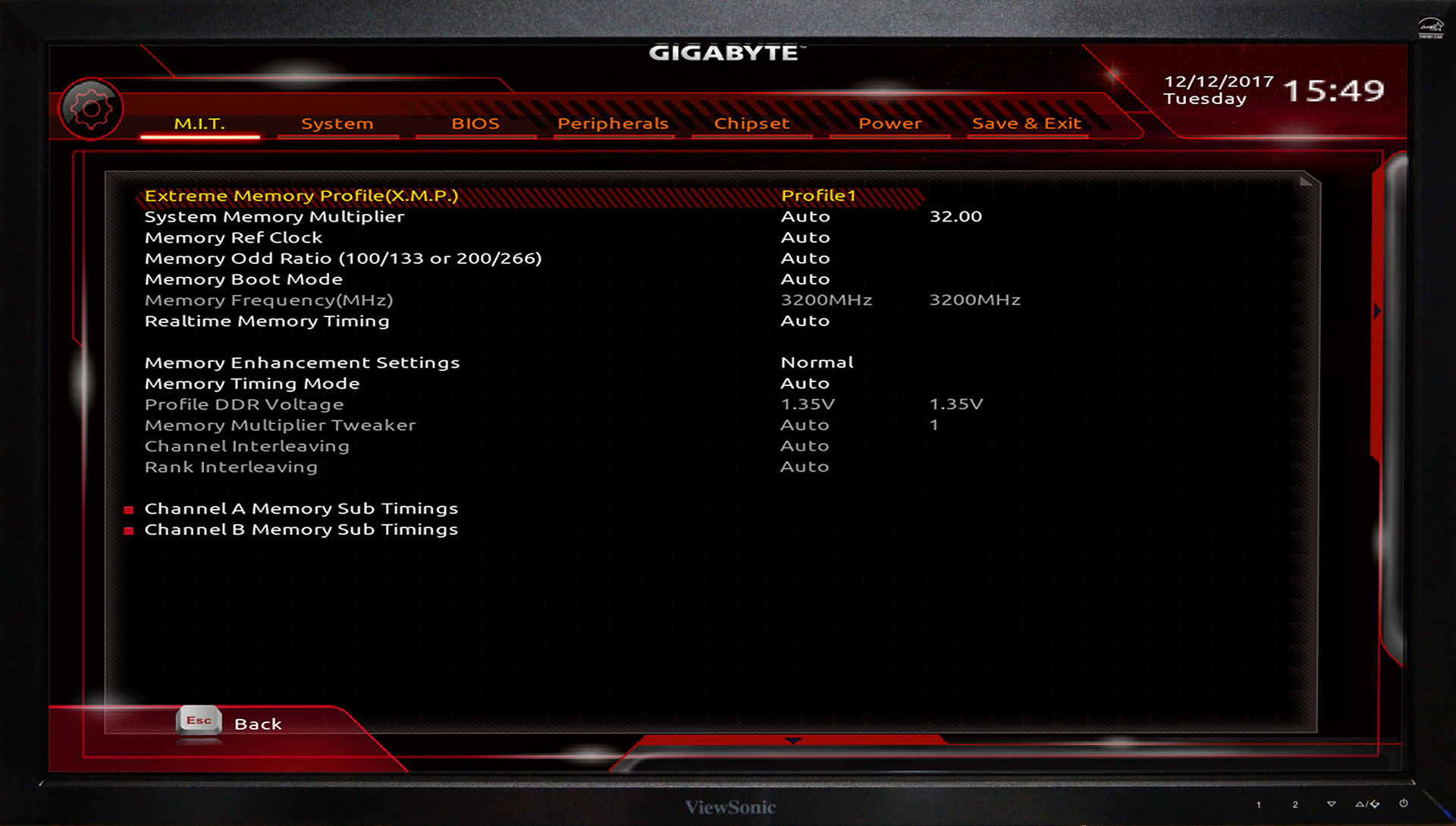

Even with good memory, there are still a few additional steps to take. Most motherboards will boot your system in a default compatibility mode for the memory, which on DDR4 usually means DDR4-2133 with CL16 timings. If you purchase a higher performance kit of RAM, you'll want to go into your BIOS and configure the speed and timings to work properly.

For Intel platforms, nearly all modern motherboards support XMP (Extreme Memory Profile), which will set the speed, timings, and voltages automatically. While the XMP spec comes from Intel, many AMD motherboards support the feature, sometimes with a different name (A-XMP, EZ-XMP, DOCP, or various other options). If you can't find an automatic profile, however, you can input the settings manually under the advanced DRAM timings option. Usually, you only need to input the speed, CL, tRP, tRCD, and tRAS values, and set the voltage as appropriate. If the system fails to boot, however, you might need to settle for slightly slower speed and/or higher timings.

The last thing I want to note is that which memory slots you use on your motherboard can make a difference, in both performance and compatibility. Consult your motherboard manual to find out which slots are best, but it's usually slots two and four (on a dual-channel system), and these will often be color coded so that you just put DIMMs into the two blue (or black, red, etc.) slots.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.