Upscaling and frame generation are the final nails in the coffin for overclocking, and I'm absolutely okay with that

It's not that I'm lazy, or that it's without merit; it's just not worth it anymore.


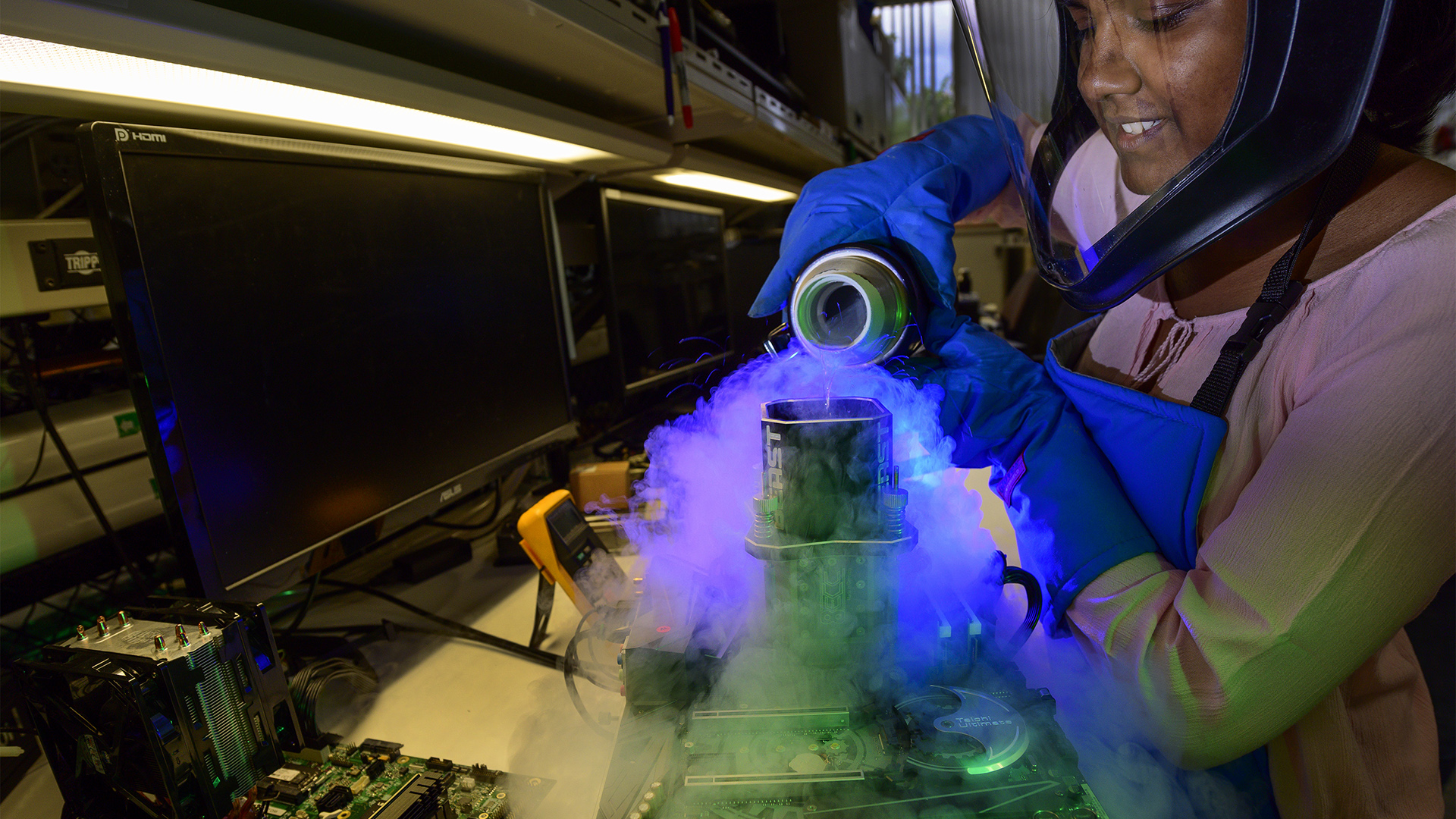

If you could go back in time twenty years or so, you would have seen me spend most evenings with a feverish and furrowed brow, head buried deep in the innards of a nest of metal and plastic, my palms stained with silver hallmarks, knuckles cut, fingertips burned. I wasn't an engineer working on a specialised project for the space industry; I was simply running yet another overclocking experiment, messing about with voltage mods, homemade heatsinks, and BIOS tweaking, all to squeeze the last iota of gaming performance from my PC.

But those days are long gone. Overclocking is a mostly pointless exercise now, and thanks to the likes of upscaling and frame generation, the lid has been well and truly nailed shut.

The last time I did any hardcore overclocking was around 2007, using a freshly purchased Intel Core 2 Quad Q6600 and a six-month-old Nvidia GeForce 8800 GTX. I managed to push the former's stock 2.4GHz clock speed all the way up to a comfortable 3.4GHz (an increase of about 42%) and the latter's core up by something like 20% (I can't recall the specifics), but this all took many hours of messing about and dealing with constant crashes.

Although I can't quite remember how much better all my games ran, I do know that it was quite a decent increase: probably in the order of 20 to 25%, if I had to pin it down. But sheesh, what a mess it all was. That poor graphics card, which had cost me something like £650, looked utterly horrific, with shunts soldered everywhere and crudely constructed heatsinks and fans strapped over it all.

At no point did I ever worry about frying it all to death, which is a tad alarming when I think about it. I suspect I had rather too much faith in my engineering abilities for my own good.

Before that setup, the most overclocking fun I had was doing the 'pencil trick' on early AMD Athlons. Just a few layers of graphite, drawn across the tiny bridges on the CPU package to close an electrical connection, was all it took to unlock the multiplier and set the clock to almost anything you liked.

The last time I overclocked with any real purpose was around six or seven years ago, when I wanted to see if I could get a Titan X Pascal graphics card to boost more than 500MHz above its stock 1,530MHz boost clock. I don't recall whether I was especially successful, but I do remember the sheer racket of running the fans at 100% to stop the darn thing from boiling itself to oblivion.


It was also the point where I realised I was experimenting on something that cost £1,200 at the time (oh, how times have changed!), and it was something of a lightbulb moment for me.

I don't bother with overclocking anymore. My CPU, a Core i7-9700K, runs at stock clocks; heck, I even have all the power management options enabled in the UEFI. It's more than good enough as it is, though I do yearn for something better for rendering and compiling. But I'd never achieve the level of improvement I want just by raising the clock speed by a few percent.

My graphics card overclocks itself, as do all modern GPUs. If the chip isn't constrained by its power and thermal limits in a game, it will run at a clock speed higher than the boost clock listed by its manufacturer. Its rated boost clock is 2,625MHz, but in many games it trundles away quite happily at 2,850MHz.

I have tried to overclock it manually, of course, but with the best always-stable result being no more than a 5% improvement in any game's performance, it's just not worth the effort. I think I've done it no more than five times since I got it, and only ever to provide some clock scaling data for analysis.

Nowadays, if I want a lot more performance in games, I simply enable upscaling. A single click in the graphics options gives me an instant boost, with no need for me to do anything else. Sure, I lose a bit of visual quality in some cases, but that's it. I don't need to adjust anything on my hardware to cope with it; everything just works. And while it's not as visually solid as upscaling, frame generation lifts things even further.

At this point, I suspect somebody on the interwebs is prepping a response to the above containing the phrase 'fake frames', but I literally couldn't care less about how 'real' any frame displayed on my monitor is. It could be put there by the power of magic pixies, for all I care, as long as it doesn't mess up the enjoyment of my games.

The death of consumer-grade overclocking was inevitable, of course, because for all the considerable advances in semiconductor technology and chip design, clock speed just isn't the be-all and end-all for CPUs that it was at the start of this century.

I'm sure many of you will remember the race between AMD and Intel to be the first to release a 1GHz desktop CPU. Yes, we're now bouncing around the 6GHz mark, but there's little difference in games between a 5.5GHz and a 6GHz chip (as long as everything else is the same). Only in the world of competitive FPS battles would it actually matter, and most players of that ilk run at low graphics settings and resolutions anyway to get the best performance.

As I've gotten older, I've found that I can't abide a noisy gaming PC turning my office into a sauna. For many of my games, I switch my graphics card to a settings profile that cuts its 285W power limit by half, to around 143W: there isn't a huge drop in performance, but the GPU runs so much cooler that the fans barely tick over.

And in games that support them, upscaling and frame generation are nearly always enabled (for the latter, it depends on whether the game is already running comfortably at 60fps or so). The end result is a quiet, cool office and a gaming experience that's more enjoyable, too.

I would never want the option to overclock one's hardware to disappear, and I'm certainly not suggesting that nobody should ever do it, but the whole process has arguably become less relevant over time. It used to be easy to achieve significant gains, but now you're just scrabbling over measly pickings, and it often takes really expensive cooling to stand a chance of seeing a decent performance boost.

Today, games barely even want CPUs with more cores and threads, let alone a couple of hundred more MHz. Graphics cards look after themselves for the most part, and even where you can ramp up the clocks, power consumption and heat output just go through the roof.

For me, overclocking is well and truly over. Passed on, no more, ceased to be. And the final nails in its coffin, or perch if you get the reference, are very much upscaling and frame gen.

Wonder when I'll be saying the same about them.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long-defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but he missed writing. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?