Red Dead Redemption 2 settings guide, system requirements, port analysis, performance tweaks, benchmarks, and more

Red Dead Redemption 2 is a massive game, with tons of settings, plenty of bugs, and an appetite for high-end hardware.

Red Dead Redemption 2 is finally here on PC, and it has a ton of graphics settings to play with. It's also had a rough launch on PC for many players, though after a month of patches things are settling down. There's plenty to cover, including the choice between DX12 and Vulkan graphics APIs, multi-GPU support, and performance across popular CPUs and GPUs. Let's get to it.

[Note: Initial testing at launch showed numerous problems, including massive stuttering and stalls on 4-core/4-thread and 6-core/6-thread Intel CPUs. We've retested and updated these charts with benchmarks run in mid-December, after multiple patches plus driver updates. The good news: Things are much better now.]

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Red Dead Redemption 2 on a bunch of different AMD and Nvidia GPUs, multiple CPUs, and several laptops. See below for the full details, along with our Performance Analysis 101 article. Thanks, MSI!

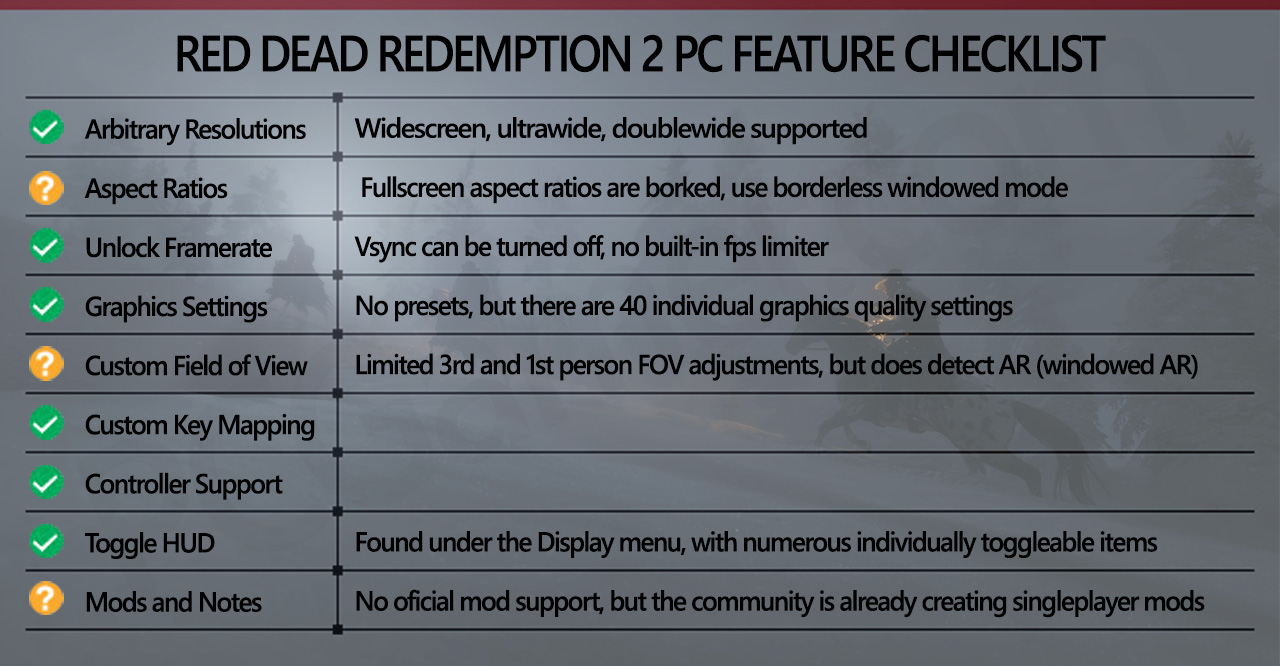

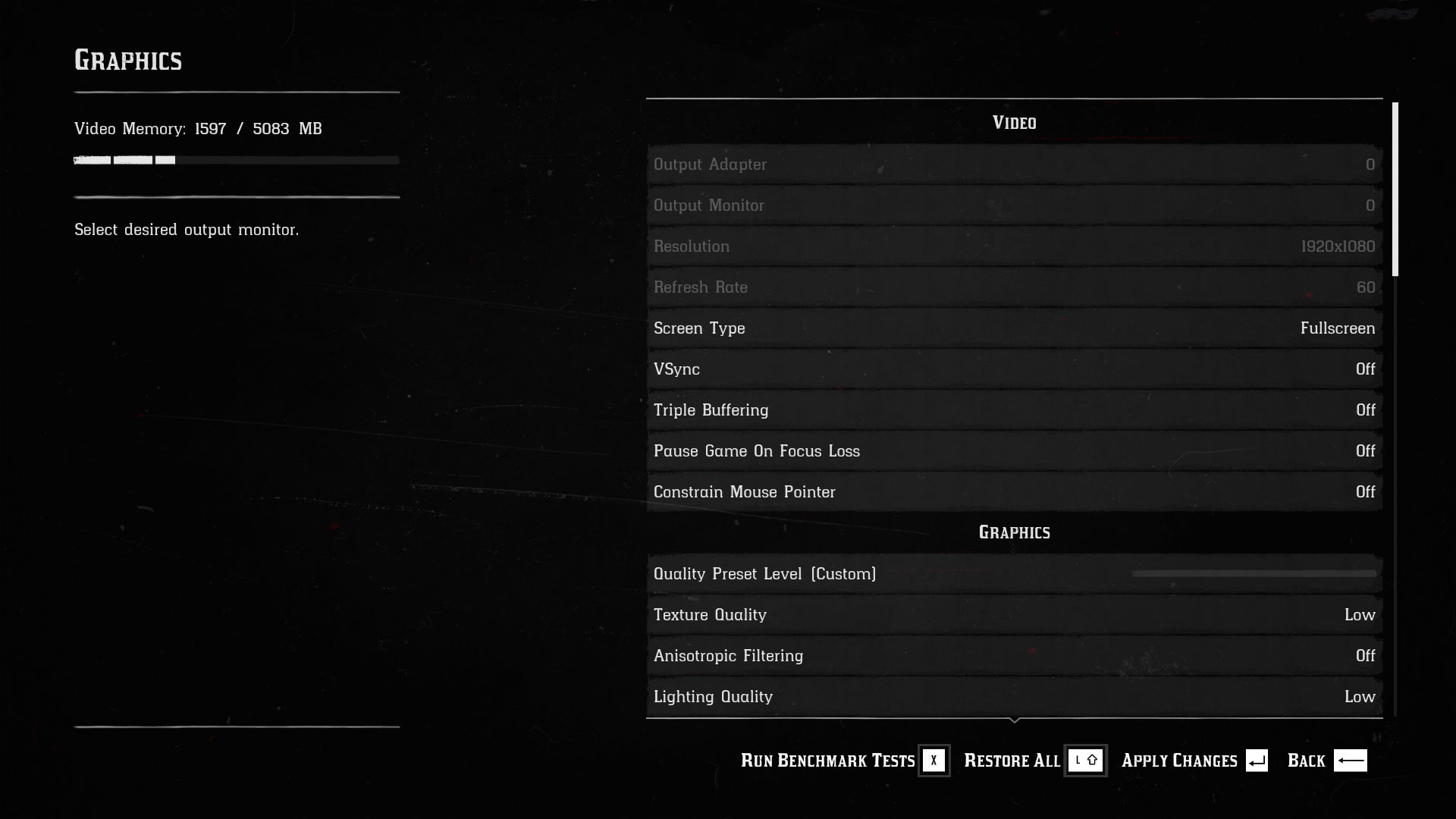

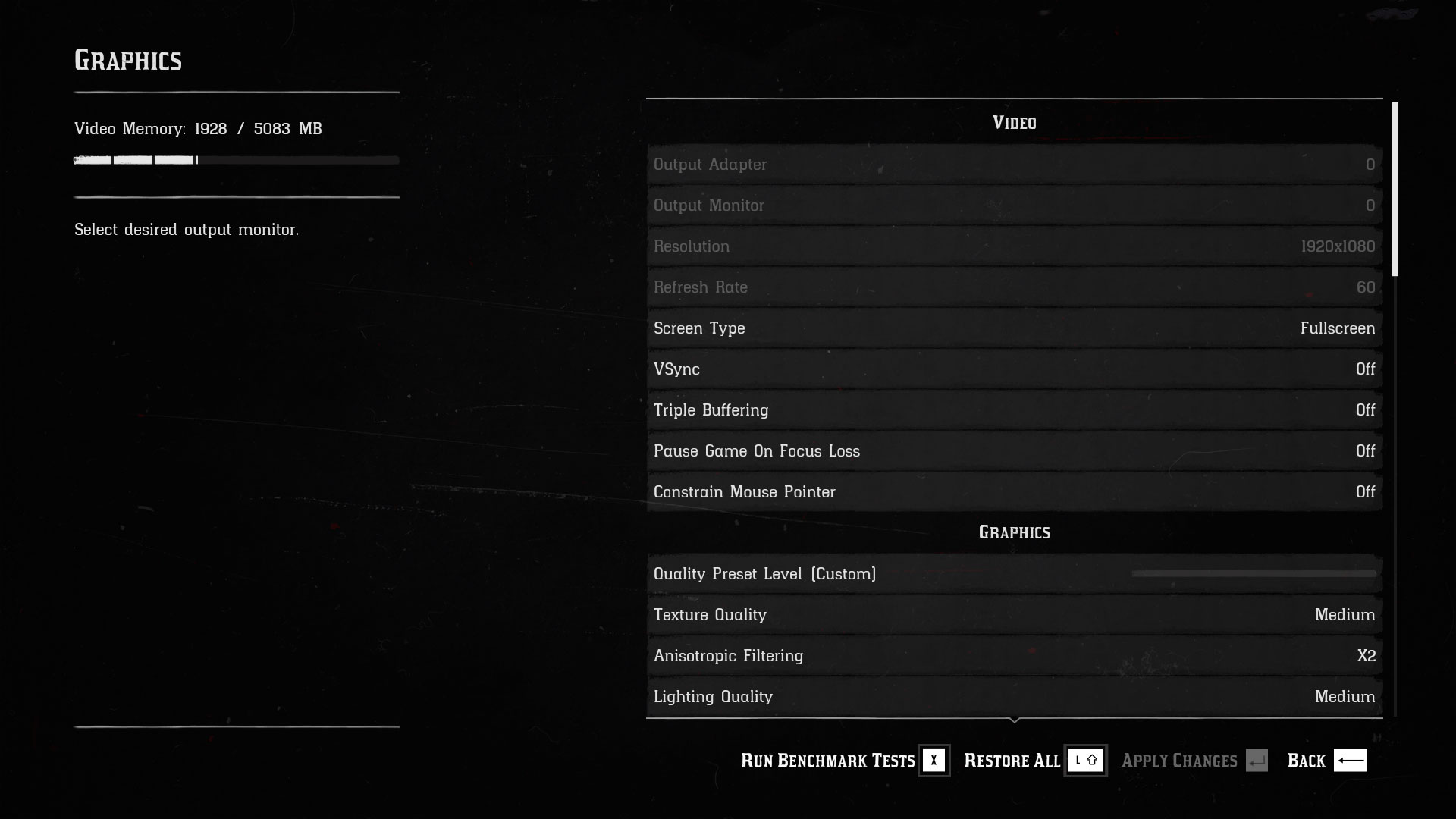

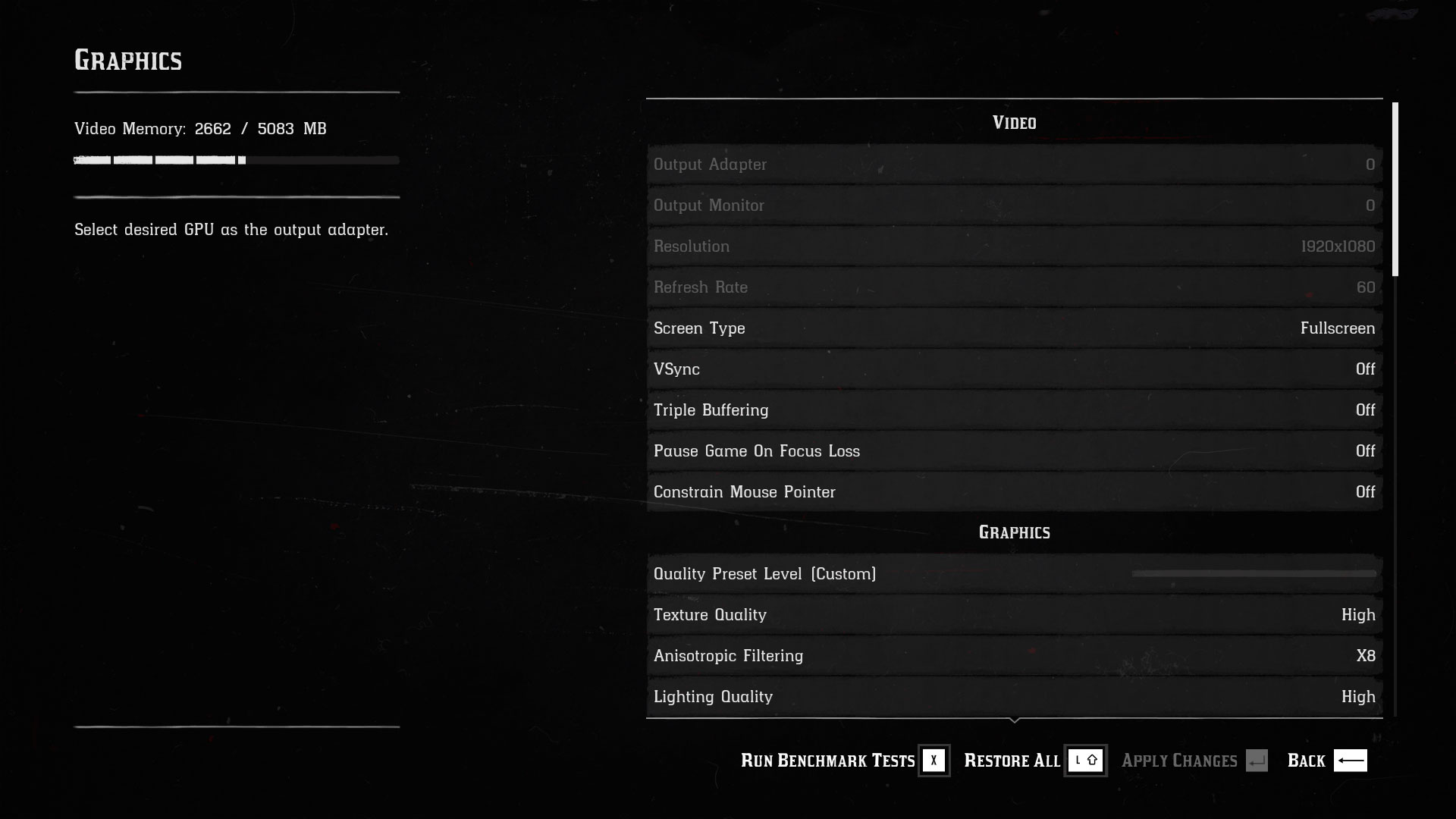

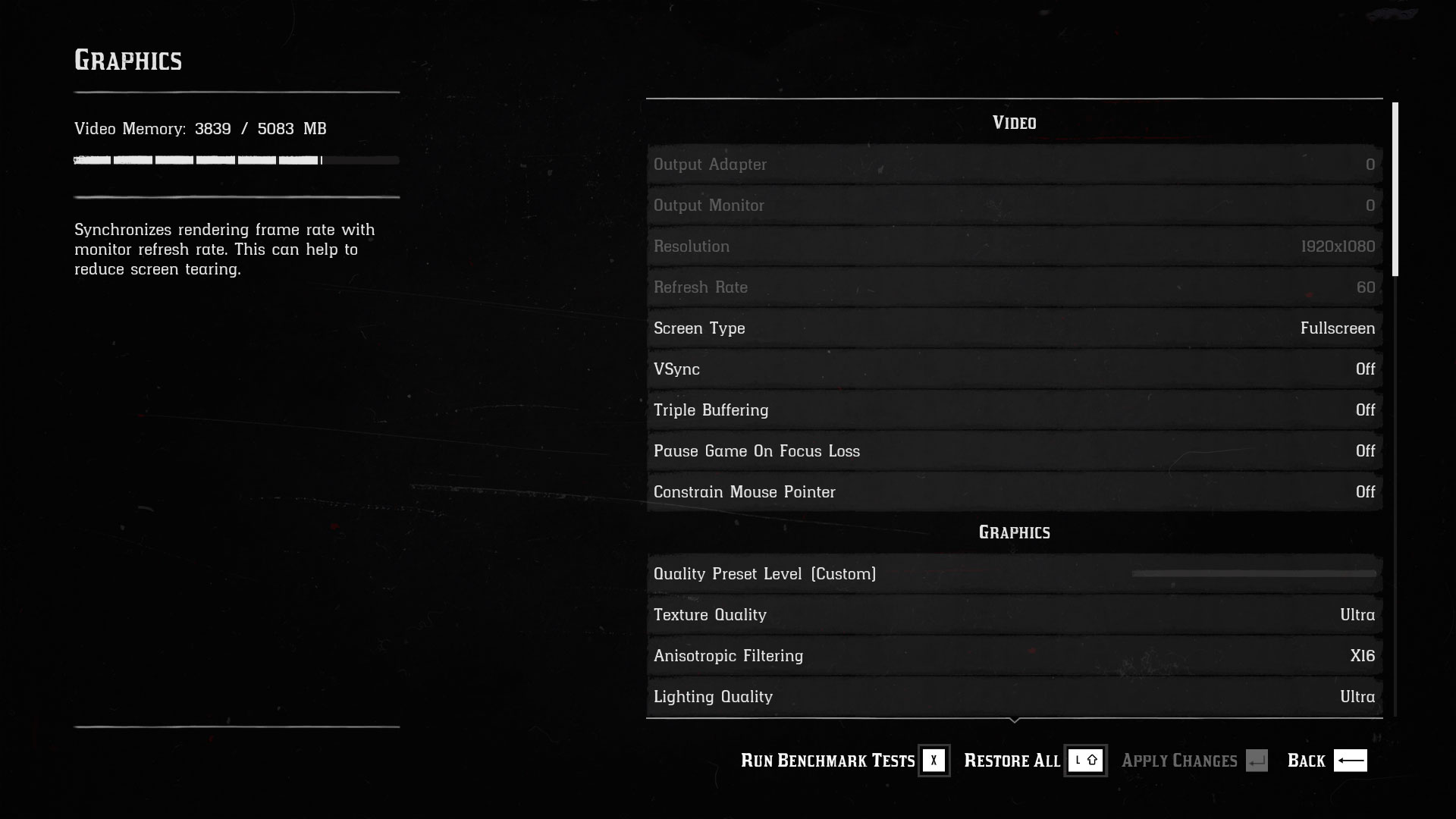

Looking at the PC features, the list of graphics settings is good if perhaps a bit overkill (see below). Resolution support is good—I was able to select widescreen, ultrawide, and doublewide resolutions, as well as old school 4:3 stuff like 1024x768. RDR2 shows some odd behavior in fullscreen mode, but running in borderless windowed mode fixes the problem.

Controller support and remapping the controls get green happy faces. The latter is pretty much required unless you have extra fingers and appendages, or like the brain-bending camera-relative horse controls for some reason.

Official mod support isn't really a thing, but there are already quite a few mods available for the singleplayer campaign, and more are likely to show up. Rockstar is taking the same stance as with GTA5: no mods for multiplayer or you might get banned, but for singleplayer use mods are generally okay. Just be careful if you're toying with mods and then launch Red Dead Online—you'll want to remove any extra files first.

One final piece of good news is that, like Grand Theft Auto 5, Red Dead Redemption 2 is officially component agnostic. Whether your graphics card comes from AMD or Nvidia, or you're running a gaming CPU from AMD or Intel, RDR2 generally doesn't care, since Rockstar doesn't have a stake in the hardware vendor game. That doesn't mean all CPUs and GPUs are guaranteed to run flawlessly, but at least RDR2 wasn't specifically designed to favor one component vendor.

Red Dead Redemption 2 settings overview

Like GTA5, RDR2 has no presets for graphics. The game will attempt to auto-detect settings that it deems appropriate for your hardware, but you'll almost certainly end up wanting to tweak things. There's a slider labeled "Quality Preset Level" that might seem like a good starting point, but it has 21 tick marks, many of which overlap, and its targets are nebulous: 'favor performance,' 'balanced,' and 'favor quality.'

The issue is that one PC's 'balanced' settings won't always be the same as another PC's, and many of the advanced settings (which are locked by default) get set to different values depending on your CPU and GPU.

Figuring out exactly how to reliably benchmark RDR2 took a bit of trial and error, but I've got that sorted out now. The simple solution is to manually configure every setting for each hardware combination, after cranking the variable "Quality Preset Level" to minimum (or maximum)—annoying, but it could be worse. I've included a large selection of current graphics cards and CPUs, and performance at maximum quality is clearly a problem with current hardware.

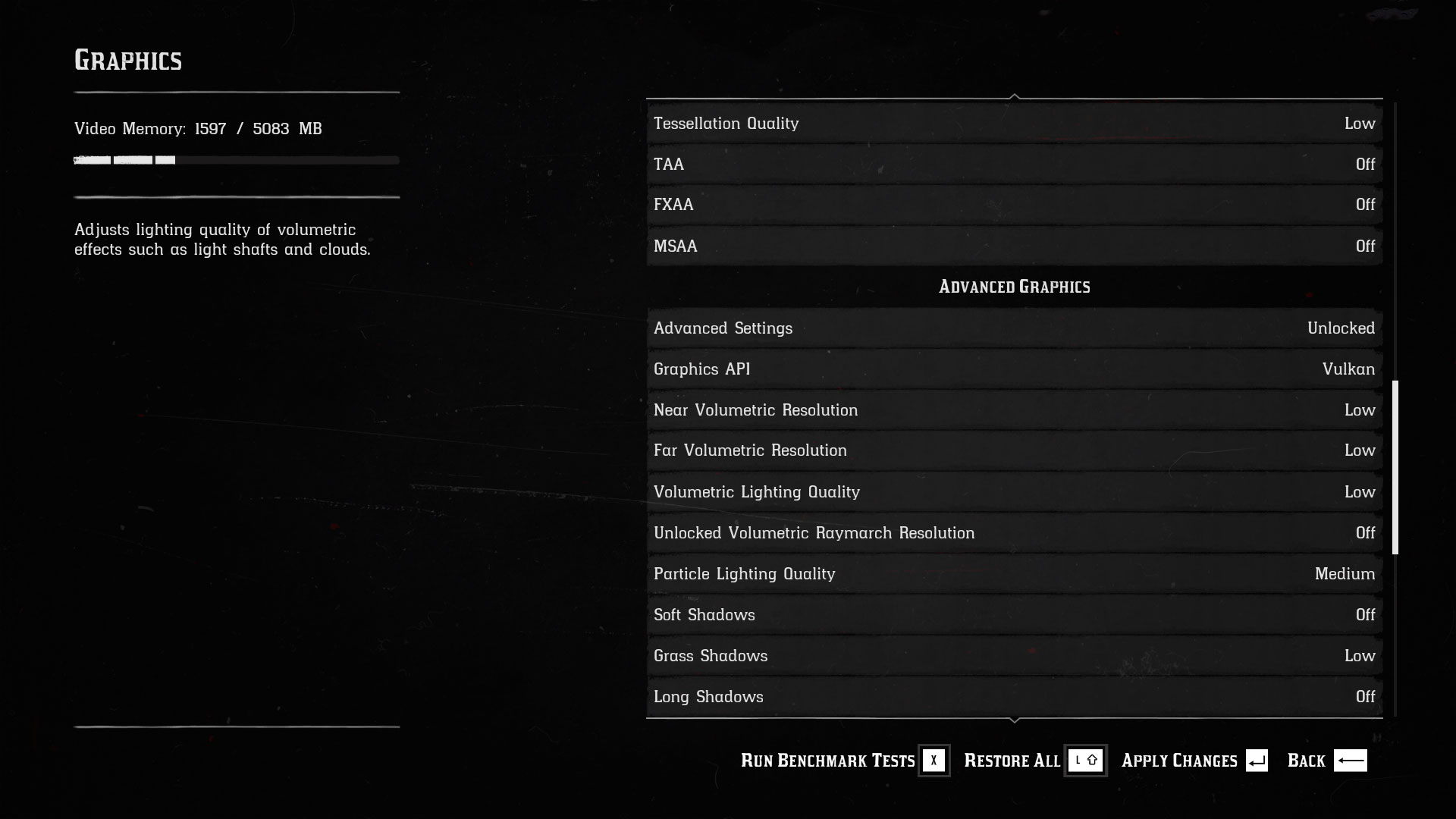

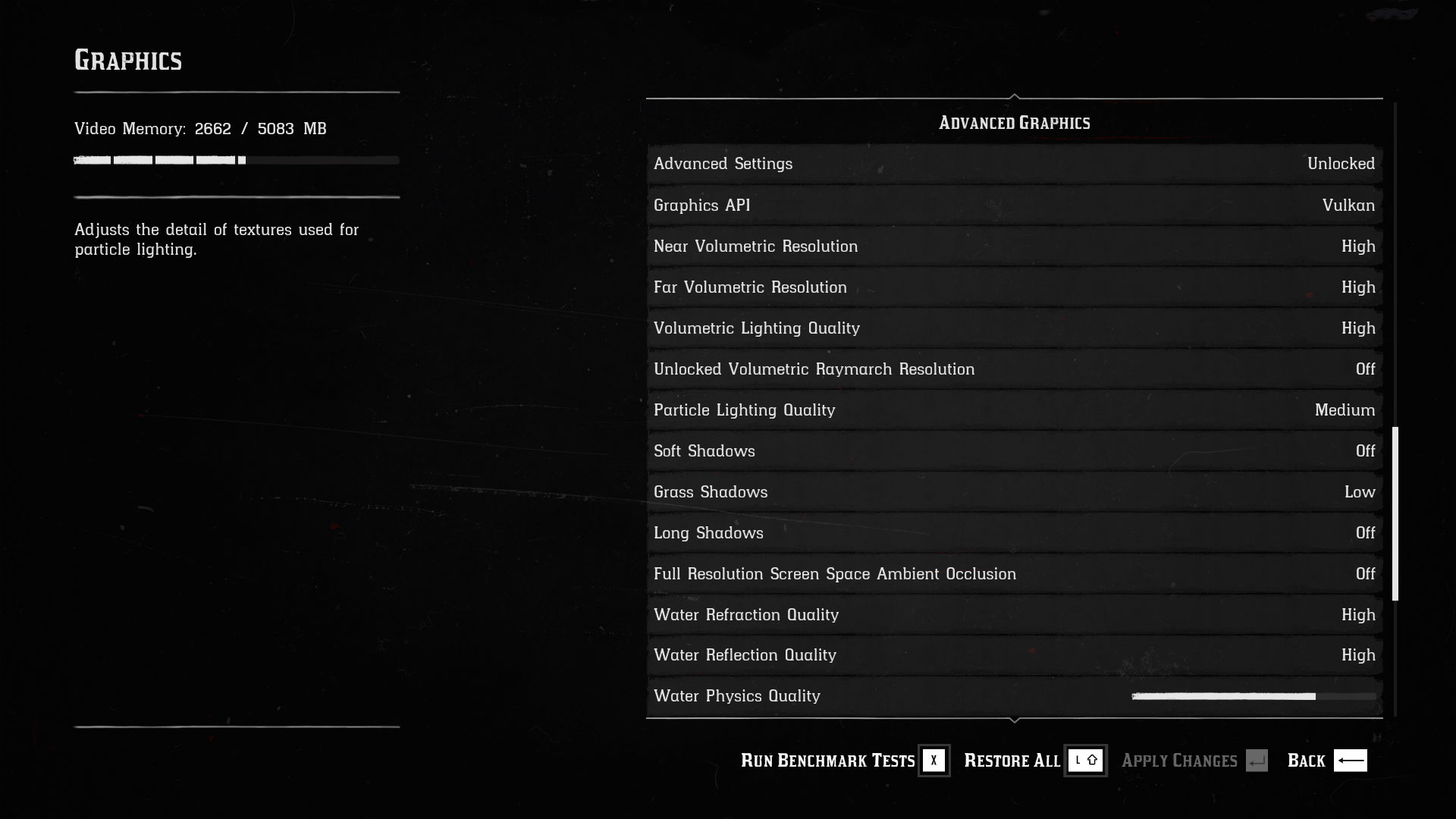

I've standardized on the Vulkan API, which is the default and appears to be the focus of most of the performance improvements since launch. DX12 may in some cases deliver better performance, so if you're using Vulkan and performance seems bad, try DX12—and vice versa.

Also note that 3GB cards can't even attempt to run with all settings at ultra, and 2GB cards are limited to low on many settings. If you have an older GPU with only 1GB or 1.5GB VRAM, you'll be locked into whatever settings the game decides to use. GTA5 has an "ignore memory limits" option, but RDR2 doesn't have an equivalent and won't allow you to exceed your GPU's VRAM.

If you just stick with the default settings on a 1080p display (or drop to 1080p on a higher resolution monitor), you'll probably be okay. And by okay, I mean that if you have at least a GTX 1060 / RX 570 or faster graphics card with 4GB VRAM, you can run RDR2 at 1080p and get around 30-60 fps. If you want to know specifics of how the various GPUs and CPUs stack up, that's what I'm here for.

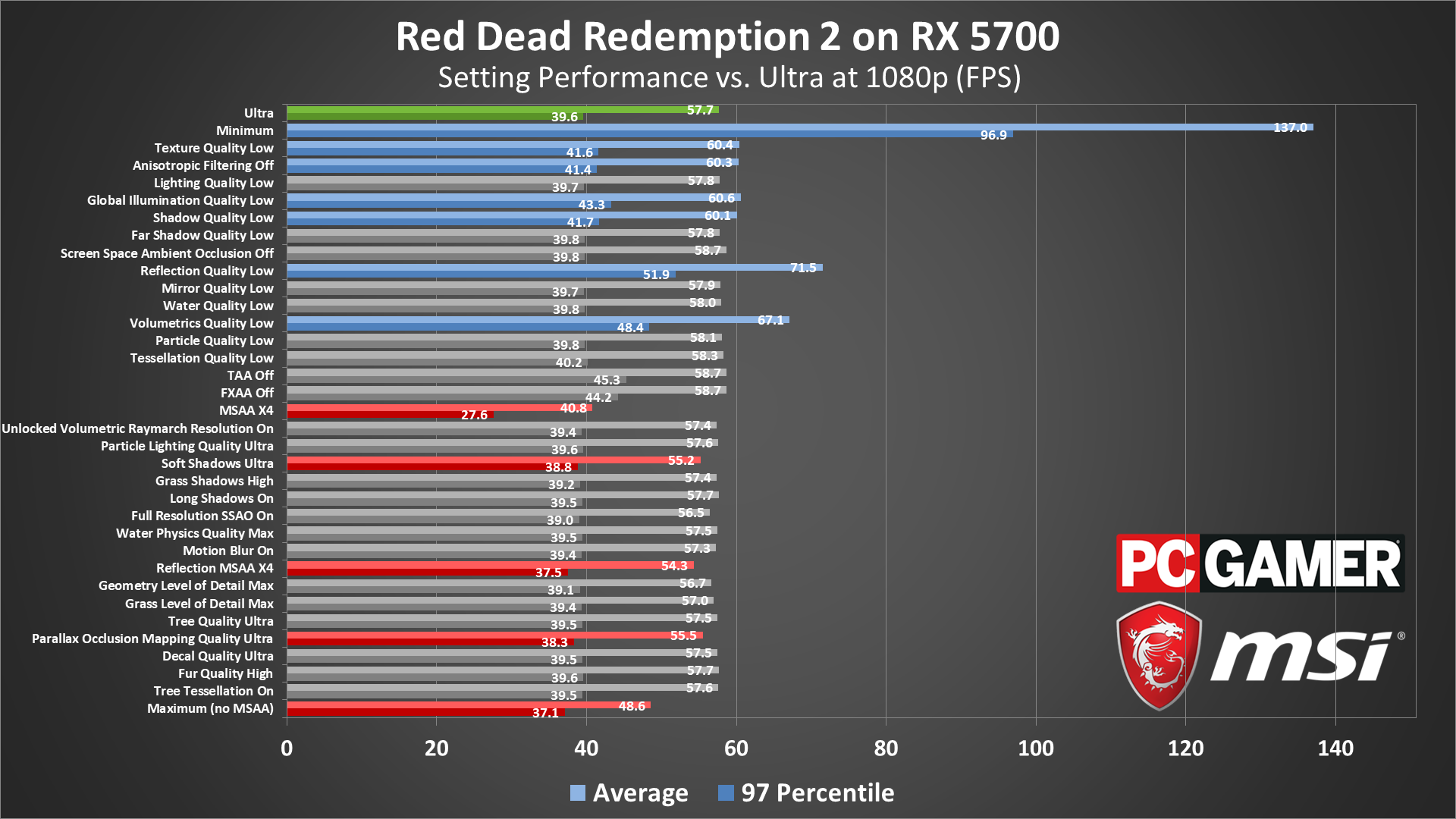

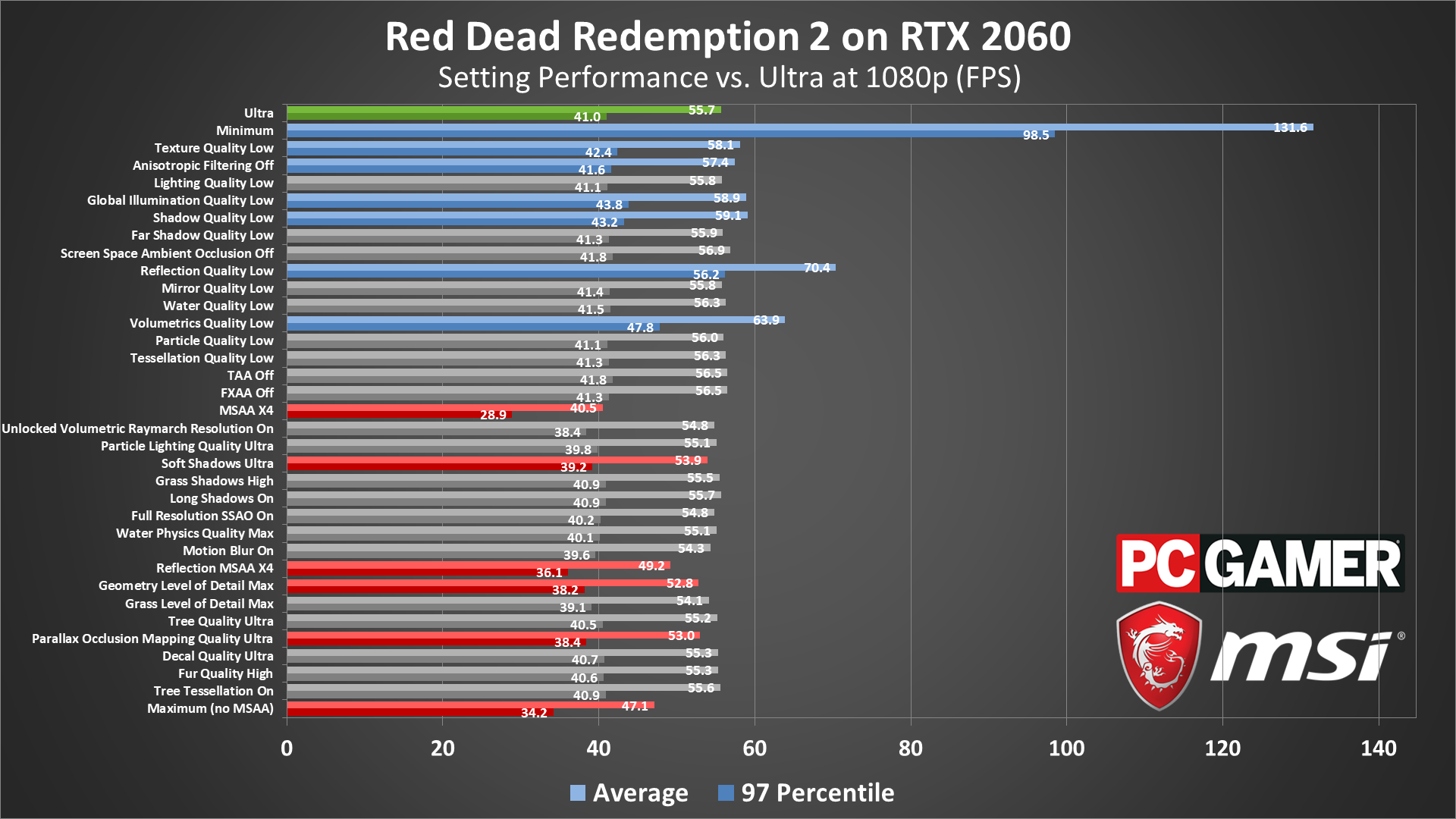

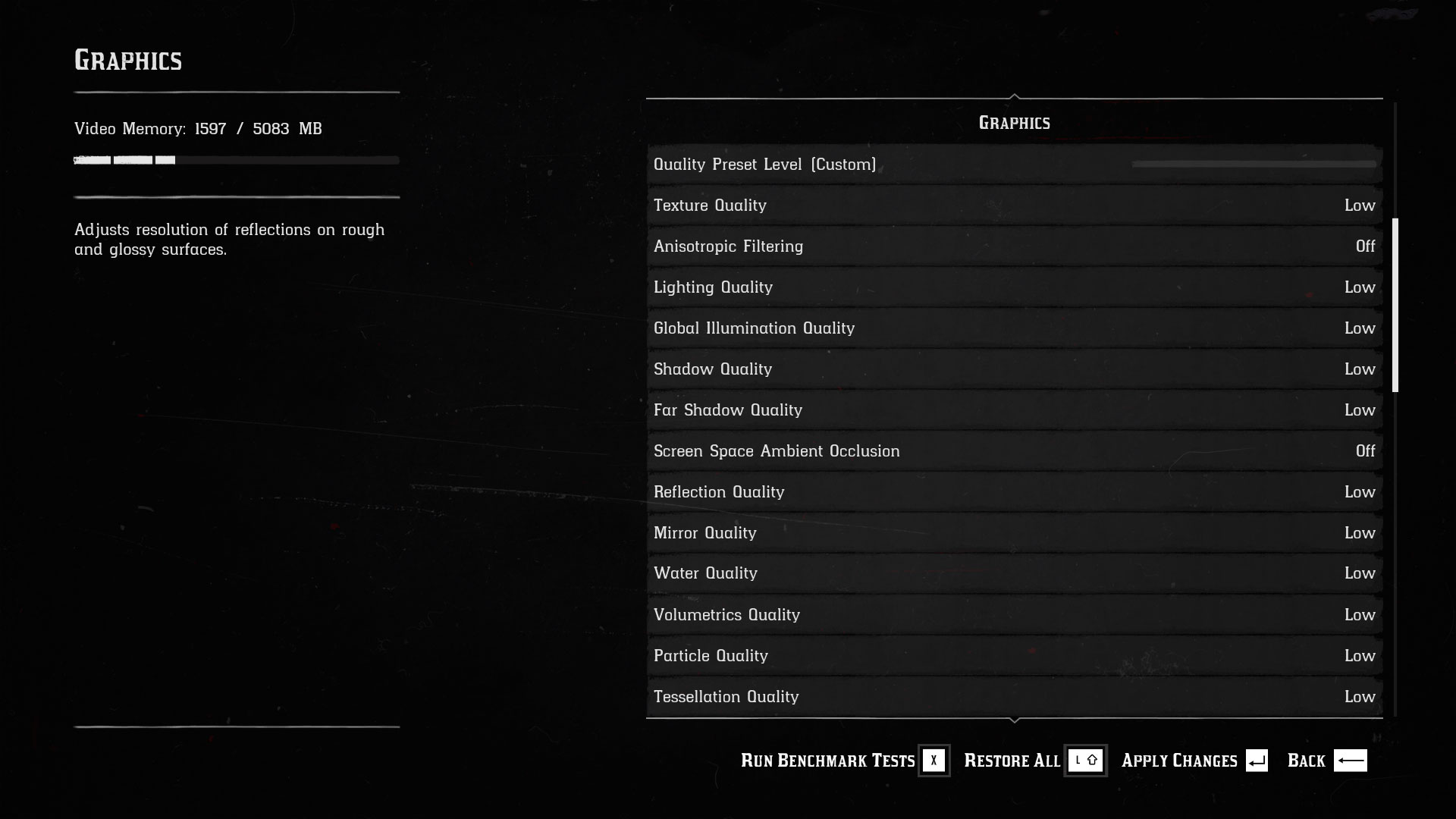

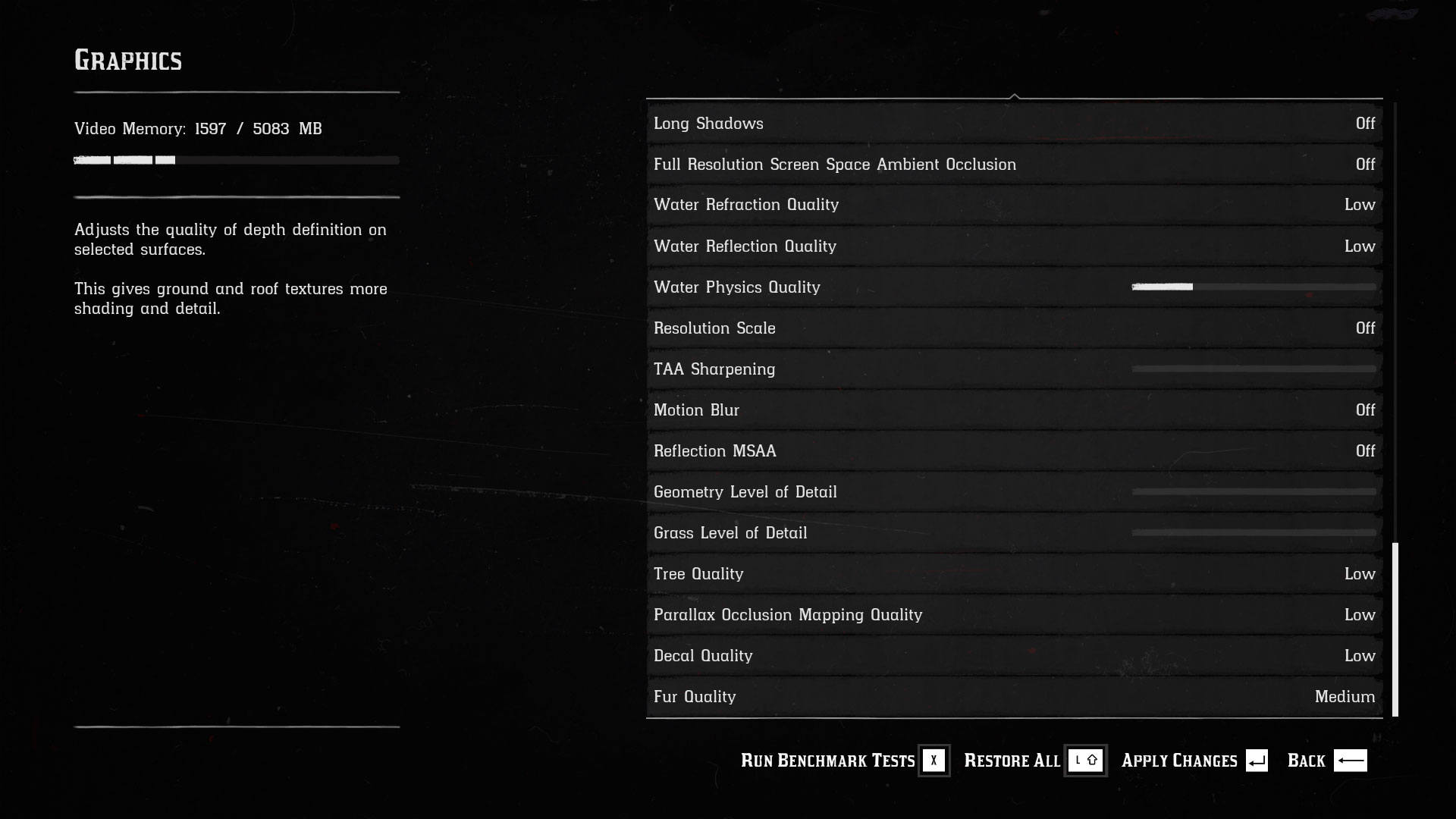

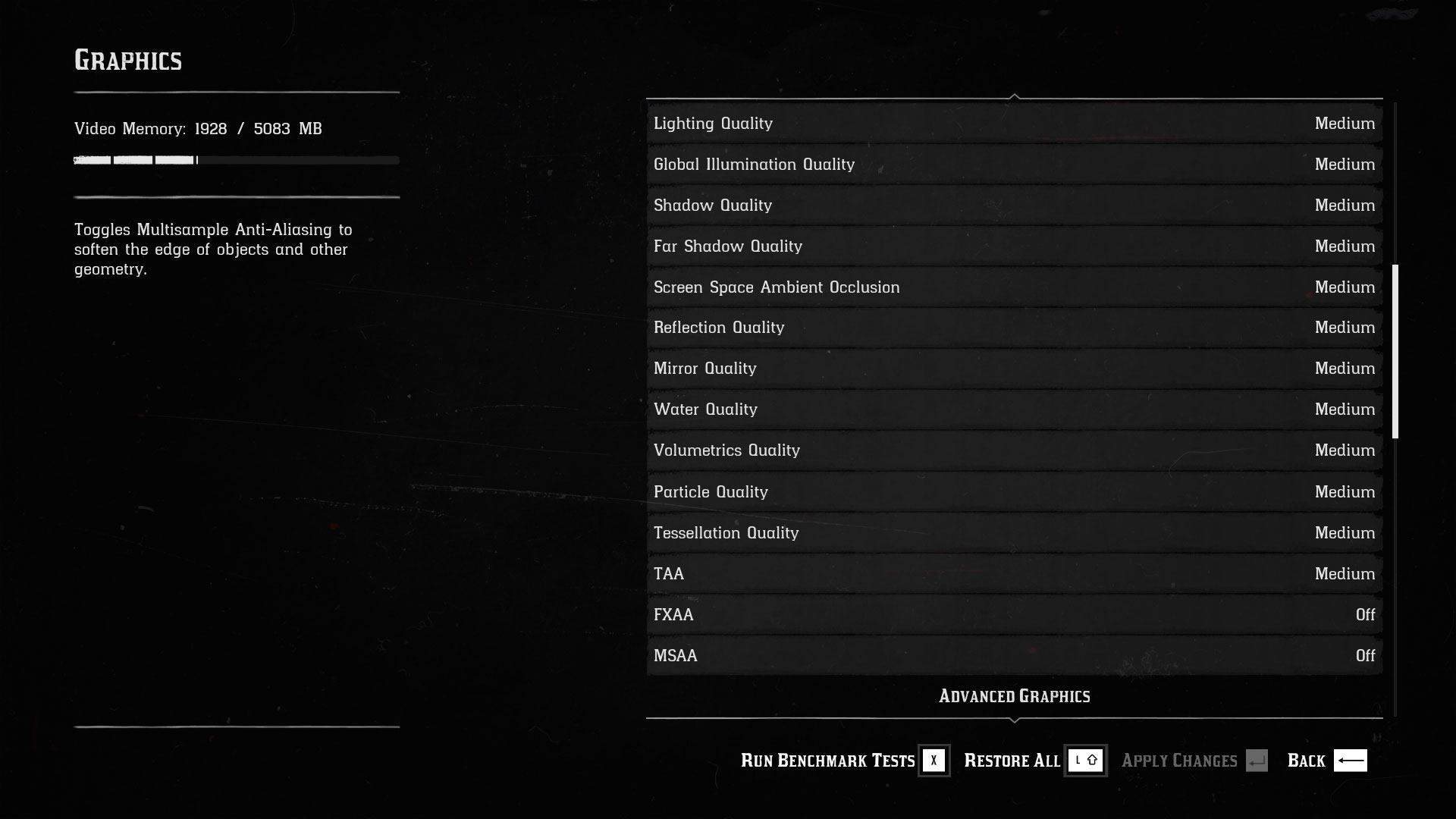

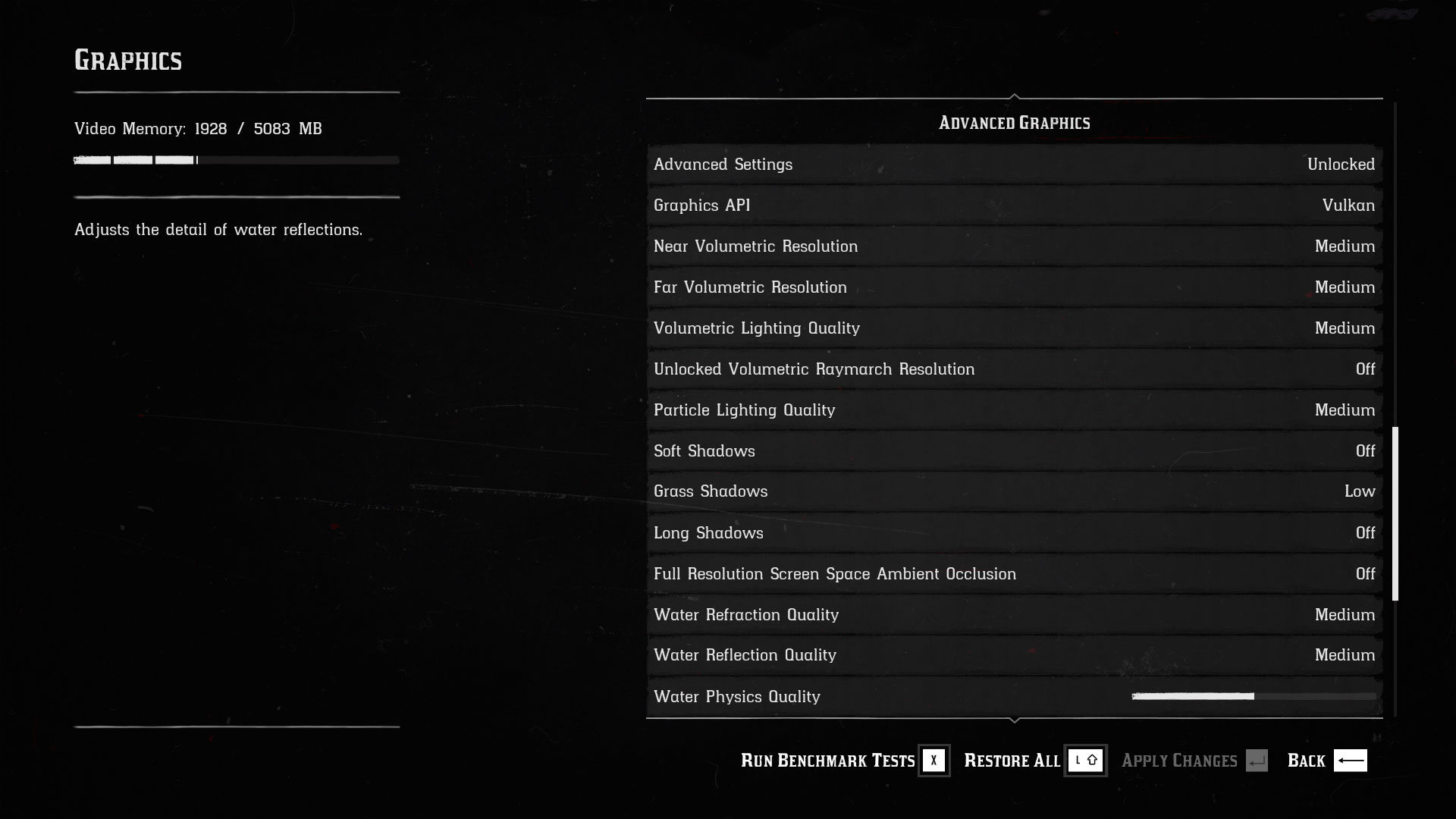

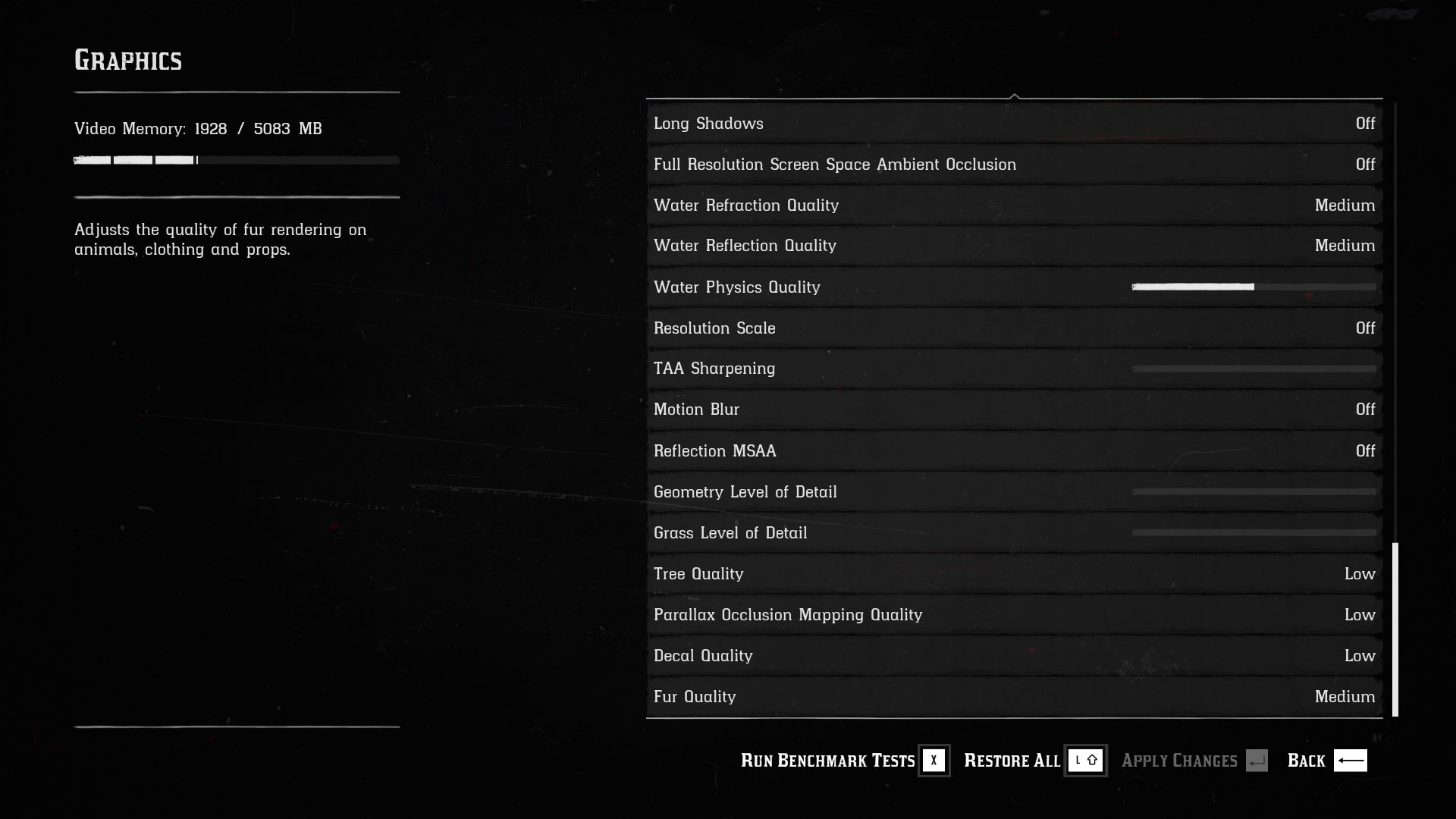

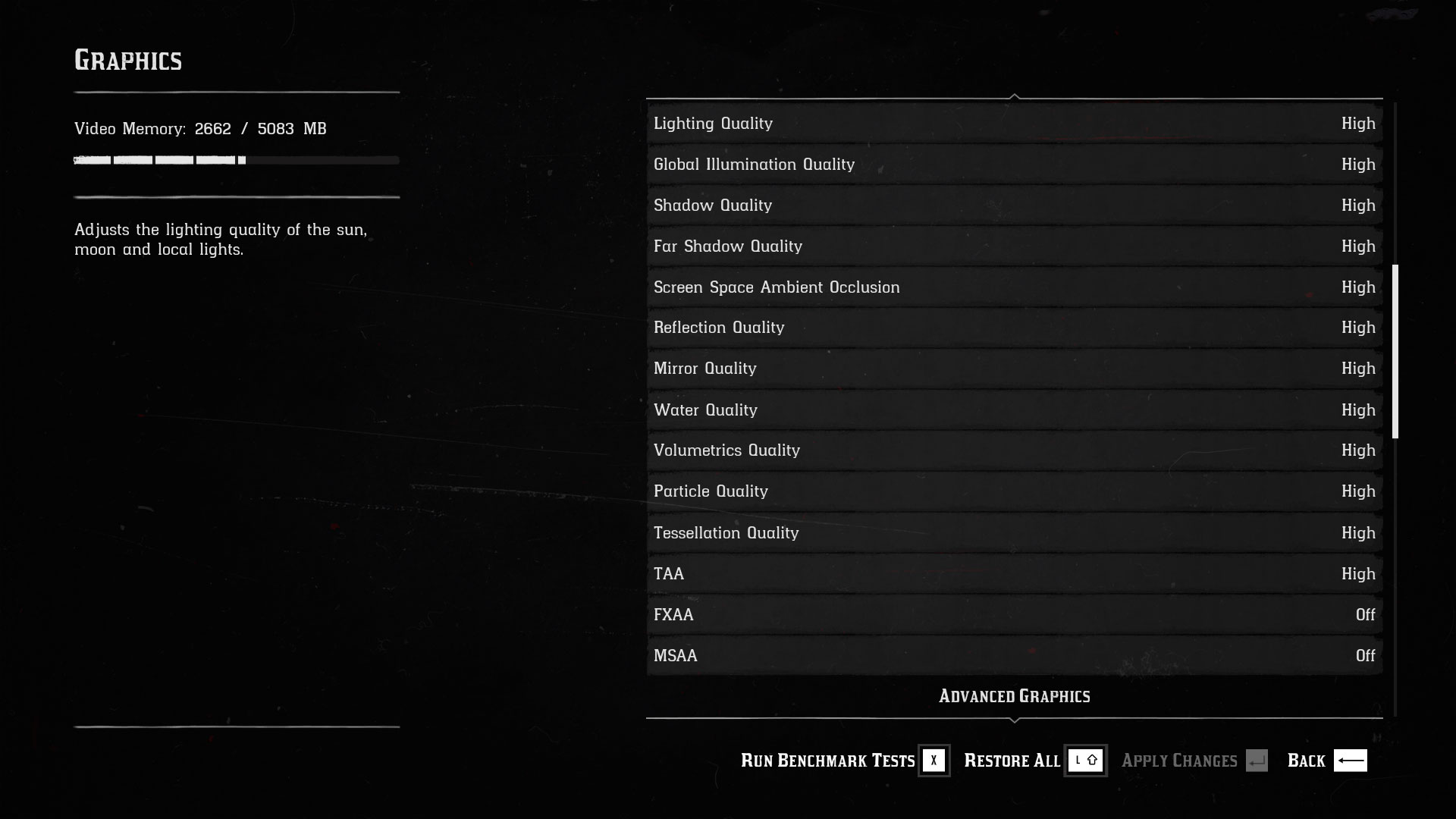

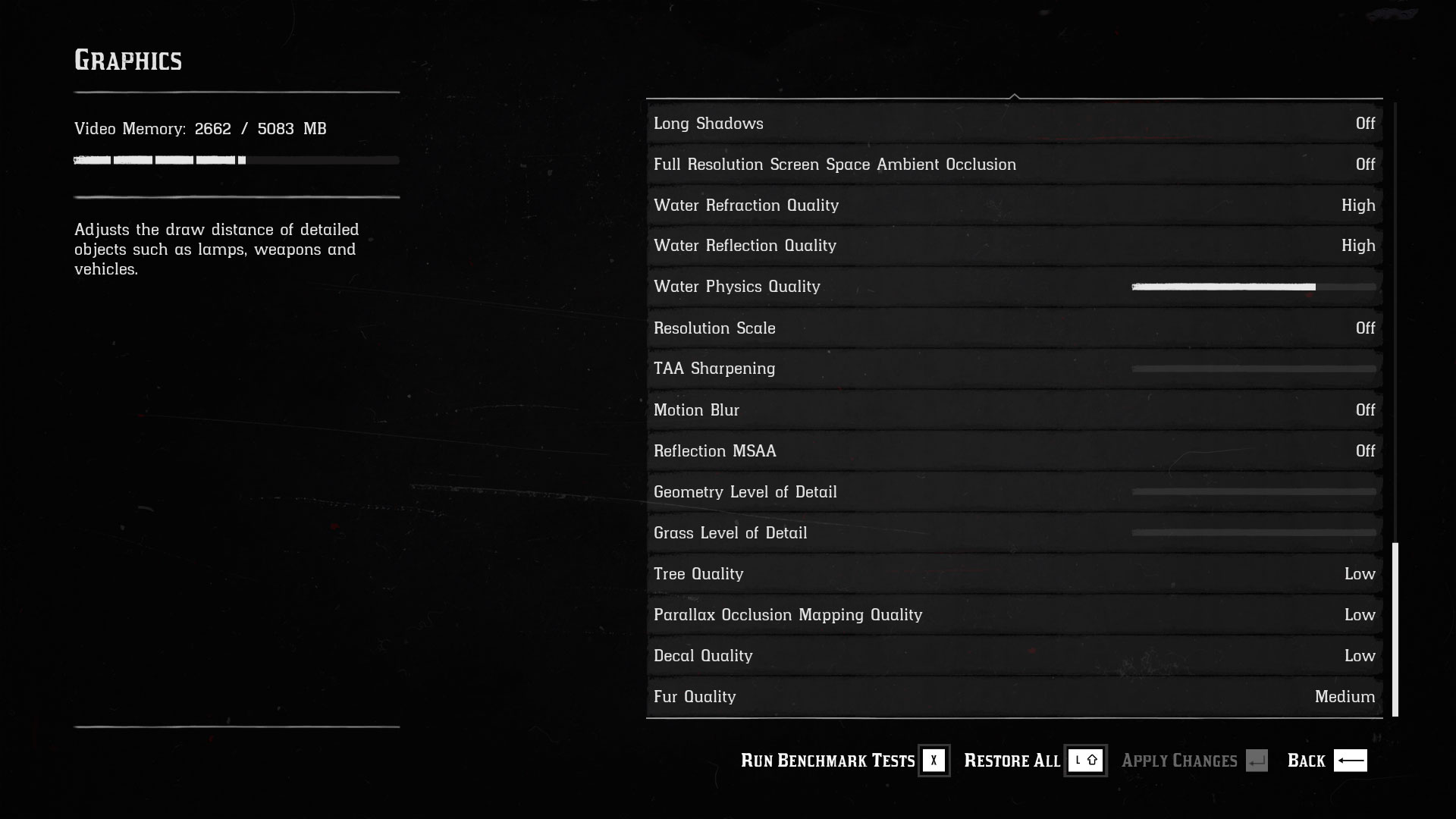

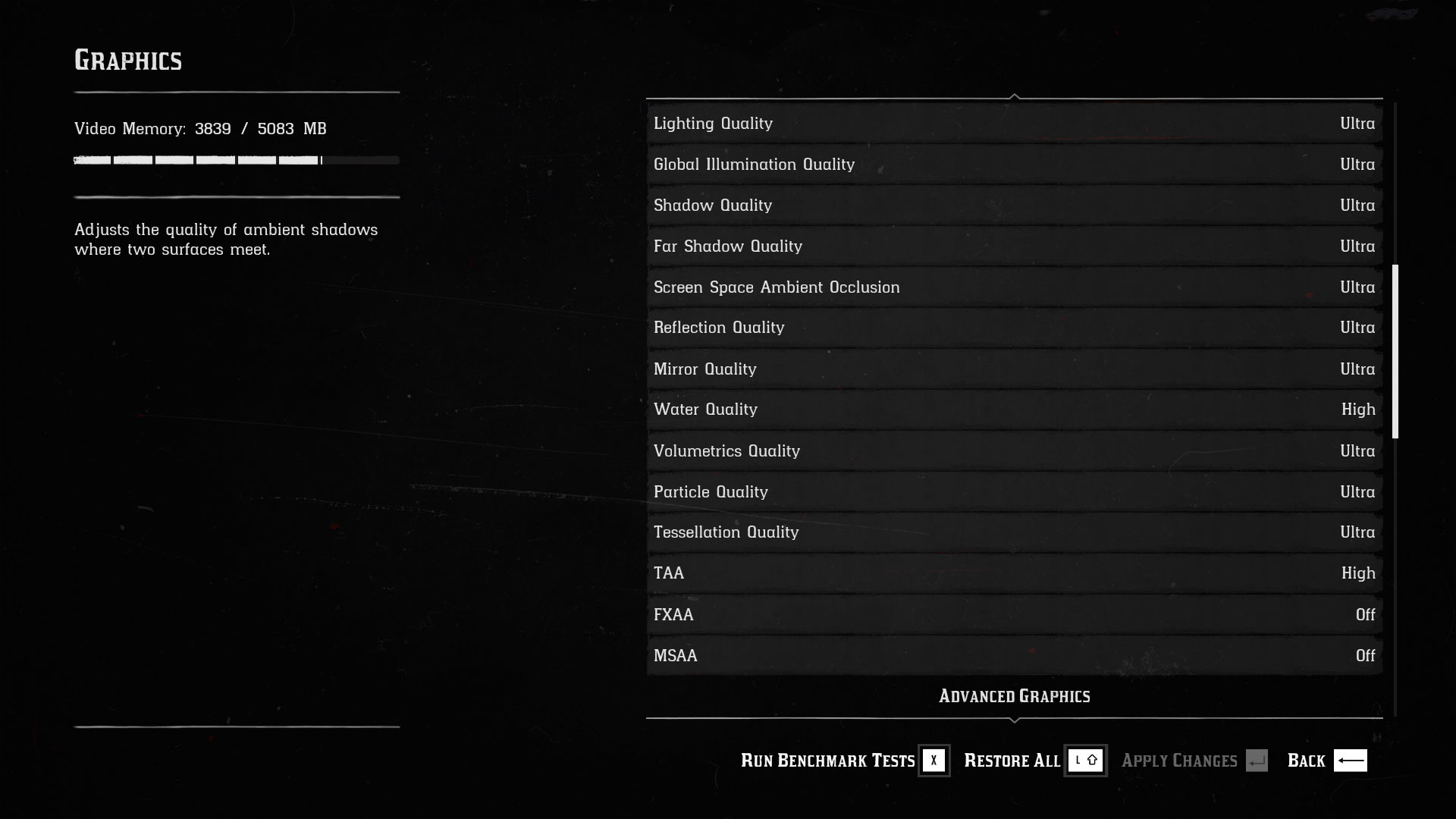

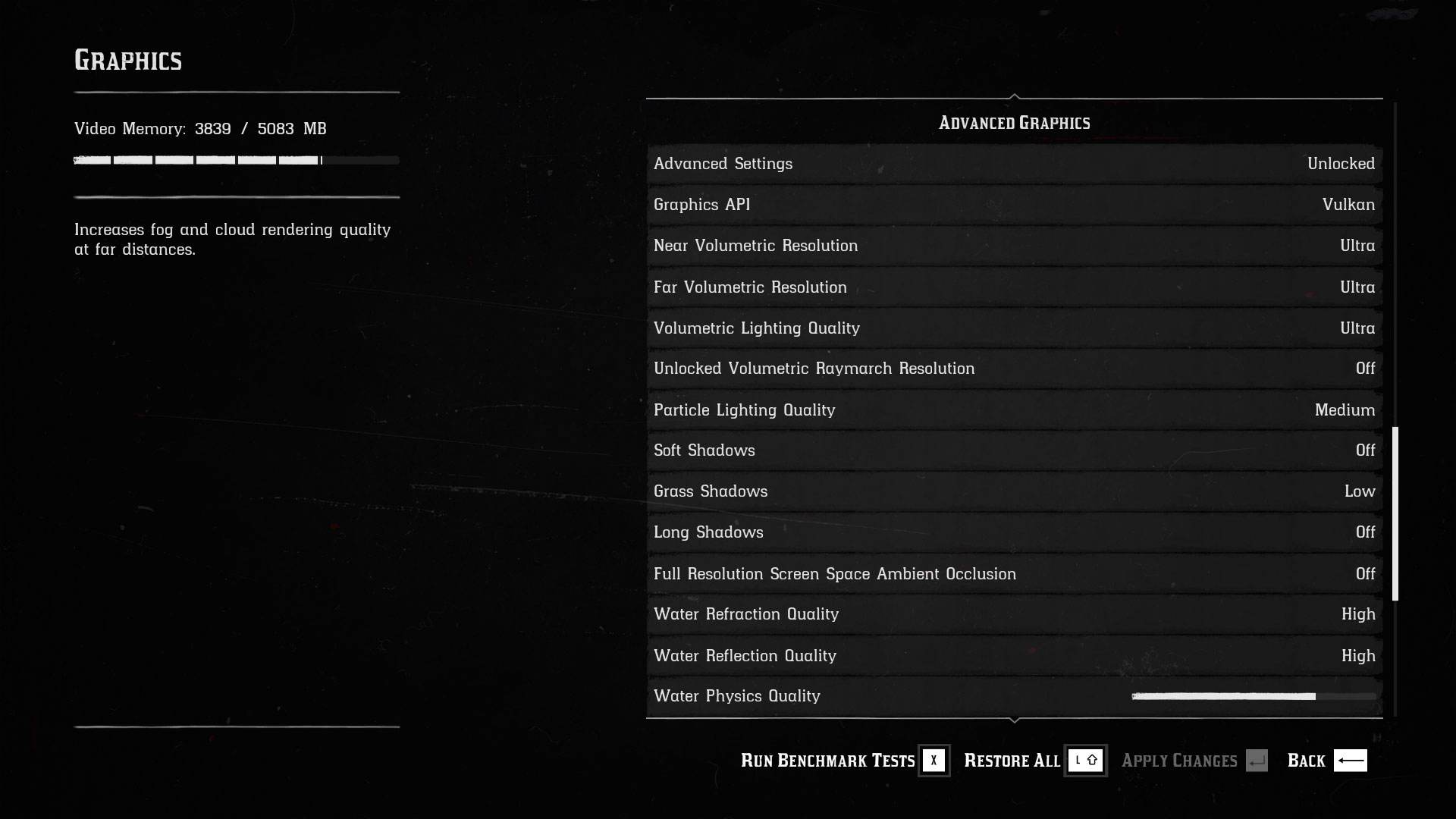

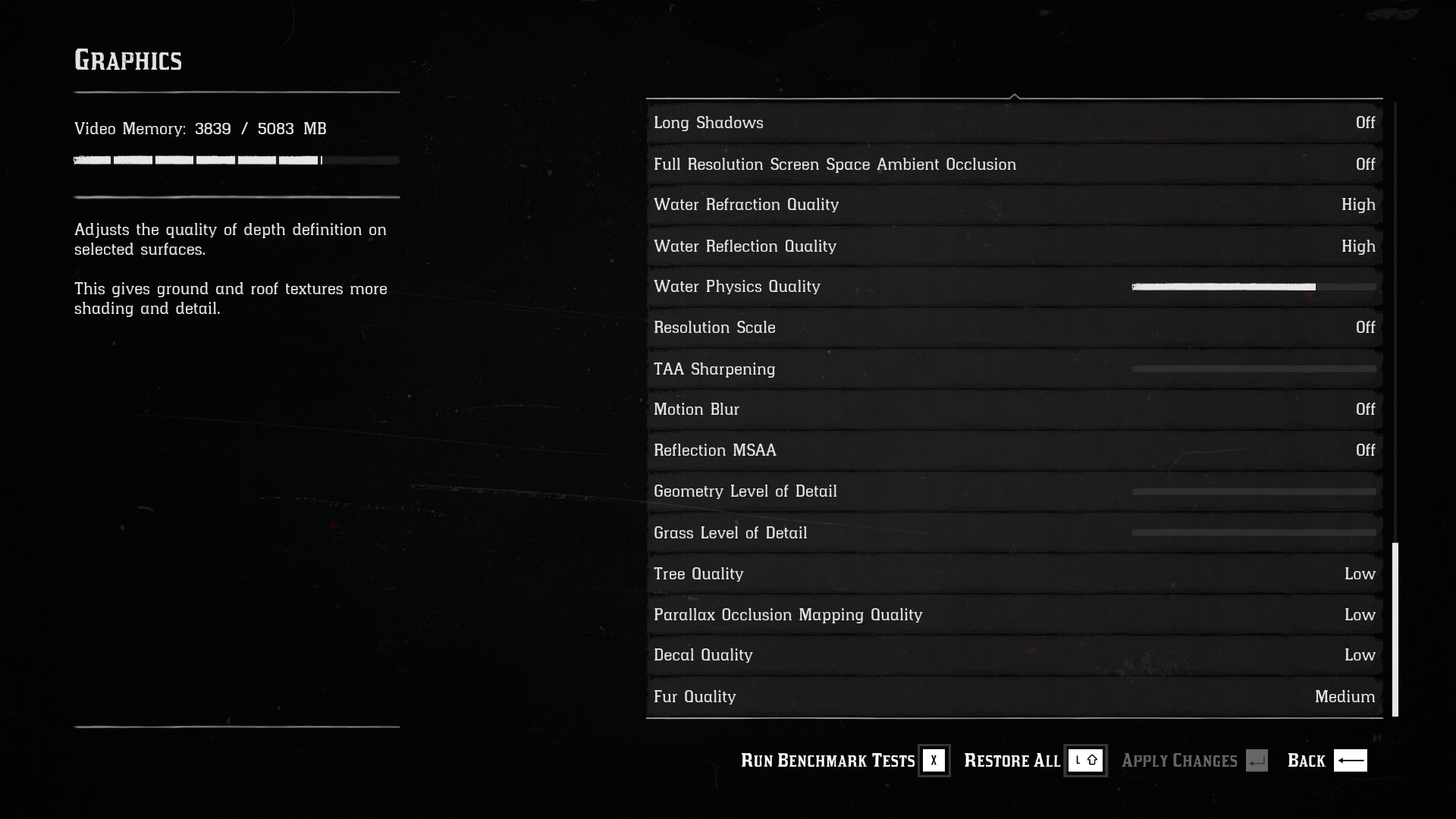

Running through Red Dead Redemption 2's graphics settings, there are about 40 different options to adjust. As a baseline measurement of performance, I unlock the advanced graphics settings and set everything in there to minimum, then drop the Quality Preset Level to minimum, and finally I set the main options to maximum quality but leave MSAA off. That's the starting point for my "ultra quality" and the above settings performance charts on the RTX 2060 and RX 5700. The difference between maximum and minimum on the Quality Preset Level isn't massive when you customize all the other settings, but it's measurable, so it's best to set the slider explicitly.

You can see specific image shots of my test settings in the above gallery, if you're interested. (Note that the latest patch has made a few minor changes in the advanced settings.) Let me also define my low, medium, and high benchmark settings while I'm here. Low is simple: take the settings above, but set everything in the top section to low/off/minimum. Medium and High use the medium and high values for the primary settings, with 2x and 8x anisotropic filtering, respectively (but leave MSAA and FXAA off). Again, images of all of these are in the above gallery.
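For anyone trying to reproduce these numbers, the preset definitions above can be written down as plain data. This is a minimal sketch: the dictionary keys are hypothetical labels, not RDR2's actual settings file format, and `primary_settings` stands in for the whole top section of the menu. Only values the text states are filled in; anything it leaves open is omitted.

```python
"""Hypothetical encoding of the four benchmark 'presets' described above."""

# Shared baseline: advanced settings unlocked and set to minimum,
# Quality Preset Level slider at minimum, MSAA left off.
BASE = {
    "quality_preset_level": "minimum",
    "advanced_settings": "minimum",
    "msaa": "off",
}

PRESETS = {
    # Everything in the top section at low/off/minimum.
    "low": {**BASE, "primary_settings": "minimum"},
    # Medium values for the primary settings, 2x AF, FXAA off.
    "medium": {**BASE, "primary_settings": "medium",
               "anisotropic_filtering": "2x", "fxaa": "off"},
    # High values for the primary settings, 8x AF, FXAA off.
    "high": {**BASE, "primary_settings": "high",
             "anisotropic_filtering": "8x", "fxaa": "off"},
    # Main options at maximum quality (MSAA still off via BASE).
    "ultra": {**BASE, "primary_settings": "maximum"},
}


def describe(name: str) -> str:
    """One-line summary of a preset, with keys sorted for stable output."""
    settings = PRESETS[name]
    return f"{name}: " + ", ".join(f"{k}={v}" for k, v in sorted(settings.items()))


if __name__ == "__main__":
    for preset in PRESETS:
        print(describe(preset))
```

Keeping the shared baseline in one dictionary makes it harder to forget a step, such as dropping the Quality Preset Level slider, when switching between presets.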

There. Now we can finally talk about what settings actually matter, as well as performance.

Most of the settings only cause a minor dip in framerates. Reflection Quality and Volumetrics Quality are the two major settings to adjust if you're looking to improve framerates. Global Illumination Quality, Shadow Quality, Screen Space Ambient Occlusion, and Texture Quality can also provide a modest boost to performance—though lower resolution textures are very noticeable and I'd leave them at ultra or at least high on any GPU with 4GB or more VRAM. I'd also leave SSAO on medium or higher if possible.

In terms of advanced settings that can reduce performance, enabling 4x MSAA causes more than a 25 percent drop in framerates. Enable MSAA at your own peril—your GPU almost certainly can't handle it. Unless you're reading this in 2025, in which case, I hope your RTX 5080 or RX 8700 or whatever is awesome. In contrast, TAA is more than sufficient and causes an imperceptible 1 percent dip. Likewise, enabling 4x Reflection MSAA causes performance to drop around 8 percent, and it's not an effect you're likely to notice while playing.

Elsewhere, setting Parallax Occlusion Mapping Quality to ultra can cause a modest 4-5 percent drop in performance. Depending on your GPU, the Tree and Grass LOD settings can also drop performance a few percent—but setting trees to max makes them look nicer and is probably worth the hit. And finally, setting Soft Shadows to ultra reduces performance a few percent but is worth considering.

The remaining advanced settings mostly cause a very small dip in performance, 1-2 percent at most. That adds up if you crank everything to max, but individually the various options don't matter much. The same holds for all the settings I didn't specifically call out: based on my testing, that's 23 different settings that each change performance by no more than 1-2 percent. Maybe some of those settings matter more on an older or slower GPU, as I only checked the 2060 and 5700, but if you're at that point you should probably just drop the resolution or use resolution scaling first.
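To see how small costs add up, here is a rough back-of-the-envelope sketch. It assumes each setting's cost is independent and compounds multiplicatively, which is a simplification, since real settings can interact. The 23 × 1 percent case uses the text's figures as an upper bound.

```python
import math


def combined_retention(drops):
    """Fraction of baseline fps kept after applying several settings.

    Each drop is a fractional loss (0.01 == 1 percent). Treating the losses
    as independent and multiplicative is an assumption, not a measurement.
    """
    return math.prod(1.0 - d for d in drops)


def combined_loss_percent(drops):
    """Total performance loss, in percent, for a list of fractional drops."""
    return (1.0 - combined_retention(drops)) * 100.0


if __name__ == "__main__":
    # Worst case from the text: 23 settings, each costing a full 1 percent.
    worst_case = [0.01] * 23
    print(f"23 settings at 1% each: {combined_loss_percent(worst_case):.1f}% loss")
```

With 23 settings at 1 percent each, retention is 0.99^23, or about 0.79, so in the worst case even the options that "don't matter much" could together cost roughly a fifth of your framerate.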

Overall, going from my ultra to low 'presets' improves performance substantially—more than double the fps in my testing—while increasing all of the advanced settings from the defaults (the 'maximum' setting at the bottom of the settings charts) causes a modest 15 percent loss of performance. No single GPU is currently able to maintain a steady 60 fps at 4K ultra, and even 1440p ultra is a stretch, so I've only tested those resolutions at high quality settings.

Red Dead Redemption 2 system requirements

The official RDR2 system requirements are pretty tame. Rockstar lists some relatively old hardware for its minimum recommendation, but given the amount of crashing and other problems users have reported, you should probably err on the side of higher-end components. Rockstar also doesn't state what level of performance you should expect, and I'm guessing it's 30 fps at 1080p low with the minimum setup, while the recommended PC hardware is probably aiming for 30 fps or more at 1080p high. Either way, you're going to need a lot of storage space.

Minimum PC specifications:

- OS: Windows 7 SP1

- Processor: Intel Core i5-2500K / AMD FX-6300

- Memory: 8GB

- Graphics Card: Nvidia GeForce GTX 770 2GB / AMD Radeon R9 280 3GB

- Storage Space: 150GB

Recommended PC specifications:

- OS: Windows 10 April 2018 Update (v1803 or later)

- Processor: Intel Core i7-4770K / AMD Ryzen 5 1500X

- Memory: 12GB

- Graphics Card: Nvidia GeForce GTX 1060 6GB / AMD Radeon RX 480 4GB

- Storage Space: 150GB

Those specs don't look too bad. The CPU specs initially seemed suspect, as a workaround was required to eliminate lengthy stalls and stuttering on lower end CPUs, but a patch has since addressed the problem. Still, having a PC that comfortably exceeds the minimum specs is a good idea, especially if you're hoping for a smooth 60 fps at 1080p high. In that case, you're probably looking at an RX 5700 or RTX 2060 Super with a 6-core/12-thread CPU or better.

Red Dead Redemption 2 graphics card benchmarks

That brings us to actual performance. I continue to use my standard testbed for graphics cards (see the hardware boxout), and all of the benchmark data comes from Red Dead Redemption 2's built-in benchmark tool. It runs through five scenes. The first four each last about 20 seconds, are fairly static, and generally don't represent areas of the game where slowdowns are likely to occur or matter. The final sequence is a 130-second robbery followed by a horse ride through town, with some shooting, which makes it a much better test that's more representative of play.

I'm collecting frametimes from that final sequence, using FrameView (an Nvidia variant of PresentMon). Each GPU is tested multiple times to verify the results, and variability between runs is minimal. Needless to say, I've watched the benchmark a few too many times already. At one point, Arthur fires off up to 14 shots from his six-shooter without reloading, because he's overclocked, I guess. Anyway, the benchmark only looks at performance in one area of the game. Some areas will perform better and others worse, but it at least gives a reasonable baseline measurement of the performance you can expect.
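Turning a frametime log into the average and minimum fps figures in the charts can be sketched in a few lines. The article doesn't spell out its exact formula, so the percentile definition here (the nearest-rank 97th percentile frametime, converted to fps) is an assumption; averaging the slowest 3 percent of frames is another common approach. The input is a plain list of per-frame times in milliseconds, such as the MsBetweenPresents column that PresentMon-style logs record.

```python
def average_fps(frametimes_ms):
    """Average fps: frames rendered divided by total elapsed time in seconds."""
    if not frametimes_ms:
        raise ValueError("need at least one frametime")
    total_seconds = sum(frametimes_ms) / 1000.0
    return len(frametimes_ms) / total_seconds


def percentile_97_fps(frametimes_ms):
    """fps equivalent of the 97th percentile frametime (nearest-rank method).

    Longer frametimes are slower frames, so at most about 3 percent of
    frames are slower than the value this returns.
    """
    if not frametimes_ms:
        raise ValueError("need at least one frametime")
    ordered = sorted(frametimes_ms)
    rank = (97 * len(ordered) + 99) // 100  # integer ceil(0.97 * n), 1-based
    return 1000.0 / ordered[rank - 1]


if __name__ == "__main__":
    # Hypothetical log: mostly ~60 fps frames with a handful of stutters.
    sample = [16.7] * 95 + [25.0, 30.0, 33.3, 40.0, 50.0]
    print(f"average: {average_fps(sample):.1f} fps")
    print(f"97th percentile: {percentile_97_fps(sample):.1f} fps")
```

Average fps is computed as total frames over total time rather than the mean of per-frame fps values, because the latter overweights fast frames and flatters stuttery runs.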

All of the discrete GPU testing is done using an overclocked Intel Core i7-8700K with an MSI MEG Z390 Godlike motherboard, using MSI graphics cards. AMD Ryzen CPUs are tested on MSI's MEG X570 Godlike. MSI is our partner for these videos and provides the hardware and sponsorship to make them happen, including three gaming laptops: the GL63 with RTX 2060, GS75 Stealth with RTX 2070 Max-Q, and GE75 Raider with RTX 2080.

I used the presets I defined earlier, along with the latest AMD and Nvidia drivers currently available: AMD 19.12.3 and Nvidia 441.66. Both sets of drivers are game ready for Red Dead Redemption 2.

At low / minimum quality, Red Dead Redemption 2 looks okay, but the texture quality is really poor and the world in general looks very bland and blurry. There's still plenty of geometry and objects to pretty things up, and distant surfaces look okay, but anything close to the camera starts to look like it has textures from the original Deus Ex. There's a massive difference between low, medium, and high texture quality, and a modest difference between high and ultra.

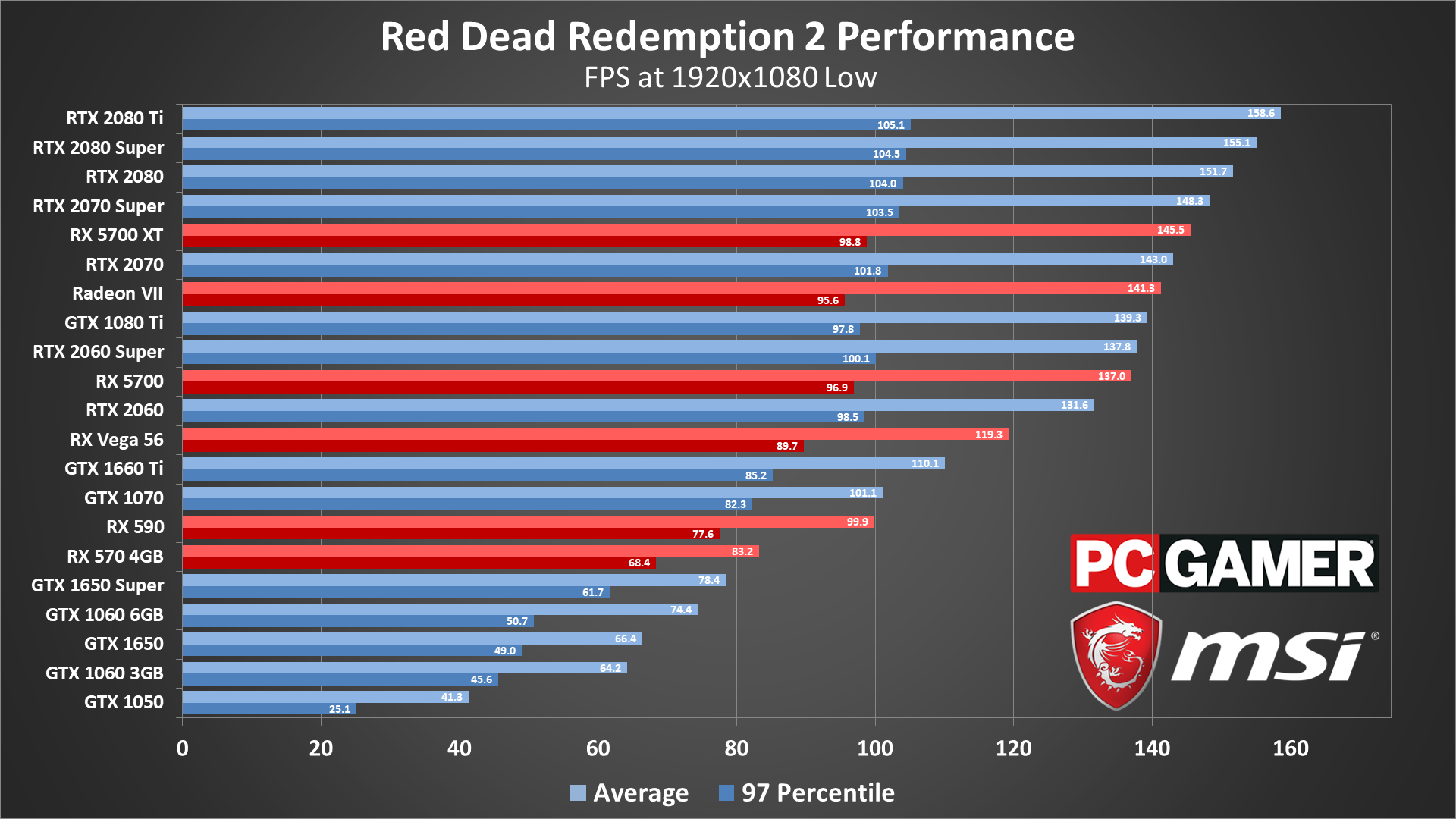

Even at minimum quality settings, performance is nothing special. The GTX 1060, in both its 3GB and 6GB variants, can average 60 fps, and so can the RX 570, but anything slower is going to struggle. Cards like the GTX 1050 only hit 40 fps, and 2GB VRAM means many settings can't even go any higher.

There are concerns with budget and midrange cards, as minimum fps can fall well below 60, and Rockstar's engine definitely isn't built to hit high framerates. The fastest cards can just barely break 144 fps, but dips into the 100 fps range are plenty common.

AMD GPUs do better on both averages and minimums compared to Nvidia's older Pascal GPUs (GTX 10-series). The RX 570, for instance, stays above 60 fps, with a 68 fps minimum in the benchmark. The 1060 6GB, in contrast, has 97th percentile minimums of just 51 fps. Nvidia's RTX and GTX 16-series parts, meanwhile, do better, typically matching AMD's equivalents.

After the sketchy launch performance for AMD GPUs in Ghost Recon Breakpoint and The Outer Worlds, both AMD promoted games, I wouldn't have expected AMD to come back swinging in RDR2. Then again, it's been out on consoles for a year, which utilize AMD hardware, and it does use low-level APIs that traditionally have favored AMD. Either way, it's a nice change of pace for Team Red (Dead).

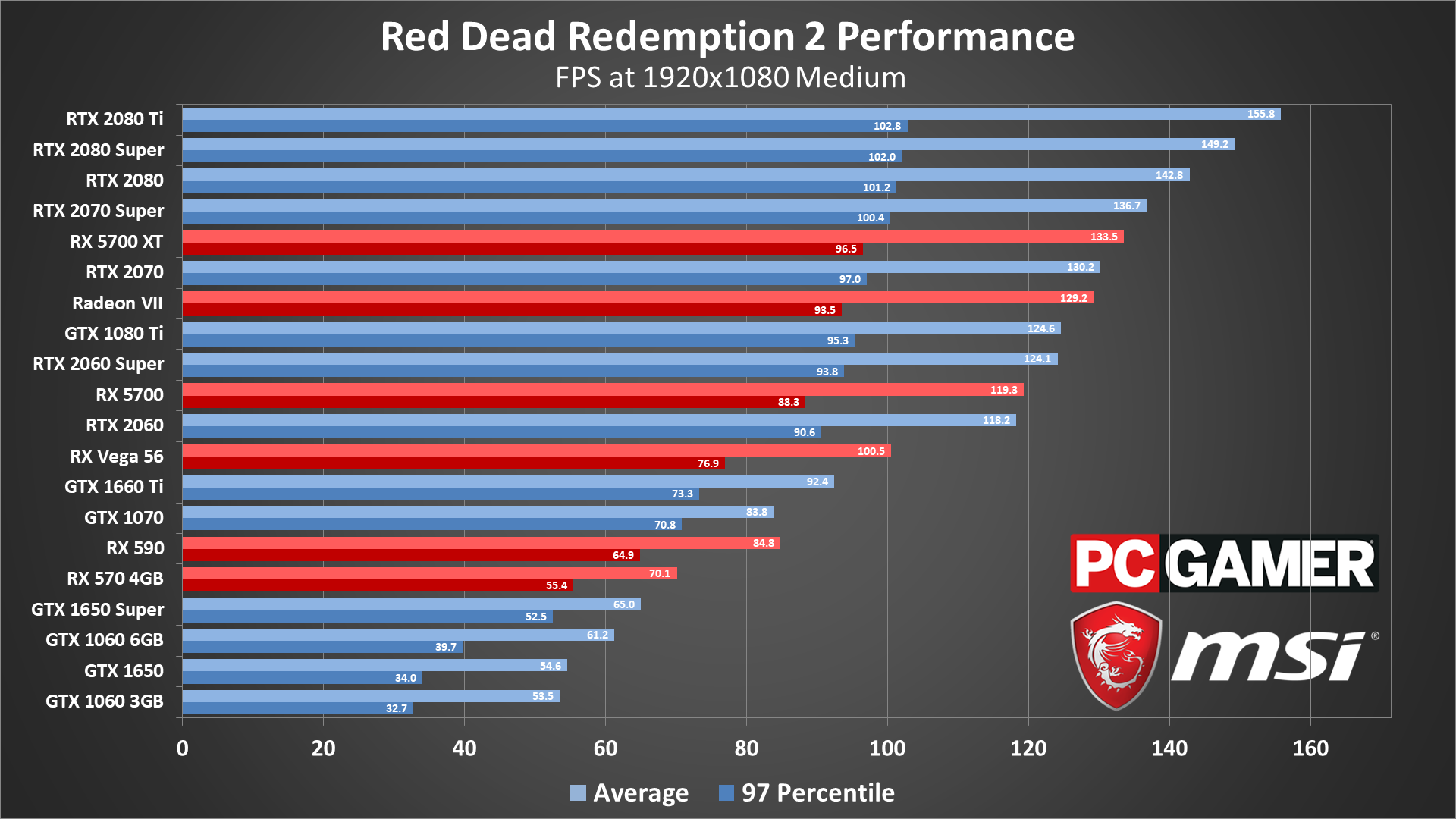

Bumping everything up to medium quality (except the advanced options, as noted earlier), performance drops about 15-20 percent on the slower cards, while the fastest cards are still mostly CPU limited. As far as image quality goes, even the medium quality textures still don't look great up close, but there's a definite improvement vs. minimum quality.

AMD GPUs continue to lead their closest Nvidia counterparts as well—the 570 is 14 percent faster than the 1060 6GB, and 31 percent faster than the 1060 3GB. For reference, there are many games where the 1060 3GB actually comes out ahead of the 570. It's a bit ironic to see AMD GPUs perform this well in a game that's supposedly vendor agnostic, but this is how things stand right now.
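Those two percentages also imply how far apart the two GTX 1060 variants are at medium settings. A quick sketch of the arithmetic, assuming the quoted figures are rounded averages, so the result is only approximate:

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster A is than B, in percent (positive means A is faster)."""
    return (fps_a / fps_b - 1.0) * 100.0


def implied_gap(a_over_b_pct: float, a_over_c_pct: float) -> float:
    """If card A is a_over_b_pct faster than B and a_over_c_pct faster than C,
    return how much faster B is than C, in percent."""
    return ((1.0 + a_over_c_pct / 100.0) / (1.0 + a_over_b_pct / 100.0) - 1.0) * 100.0


if __name__ == "__main__":
    # Figures from the text: RX 570 vs GTX 1060 6GB (+14%) and vs 1060 3GB (+31%).
    gap = implied_gap(14, 31)
    print(f"GTX 1060 6GB vs 3GB at 1080p medium: about {gap:.1f}% faster")
```

The ratio is 1.31 / 1.14, or about 1.15, so the 6GB card comes out roughly 15 percent ahead of the 3GB card in this benchmark. Percentages have to be divided rather than subtracted, which is why the answer isn't simply 31 - 14 = 17.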

Of the cards I've tested, the RX 570 and GTX 1060 6GB still clear 60 fps averages, though minimum fps is far below that. The wide gap between the average and 97th percentile framerates indicates plenty of stuttering/micro-stuttering and framerate dips, which is definitely happening in RDR2. To smooth things out, you'll want at least GTX 1660 Ti / GTX 1070 or RX 590-level hardware.

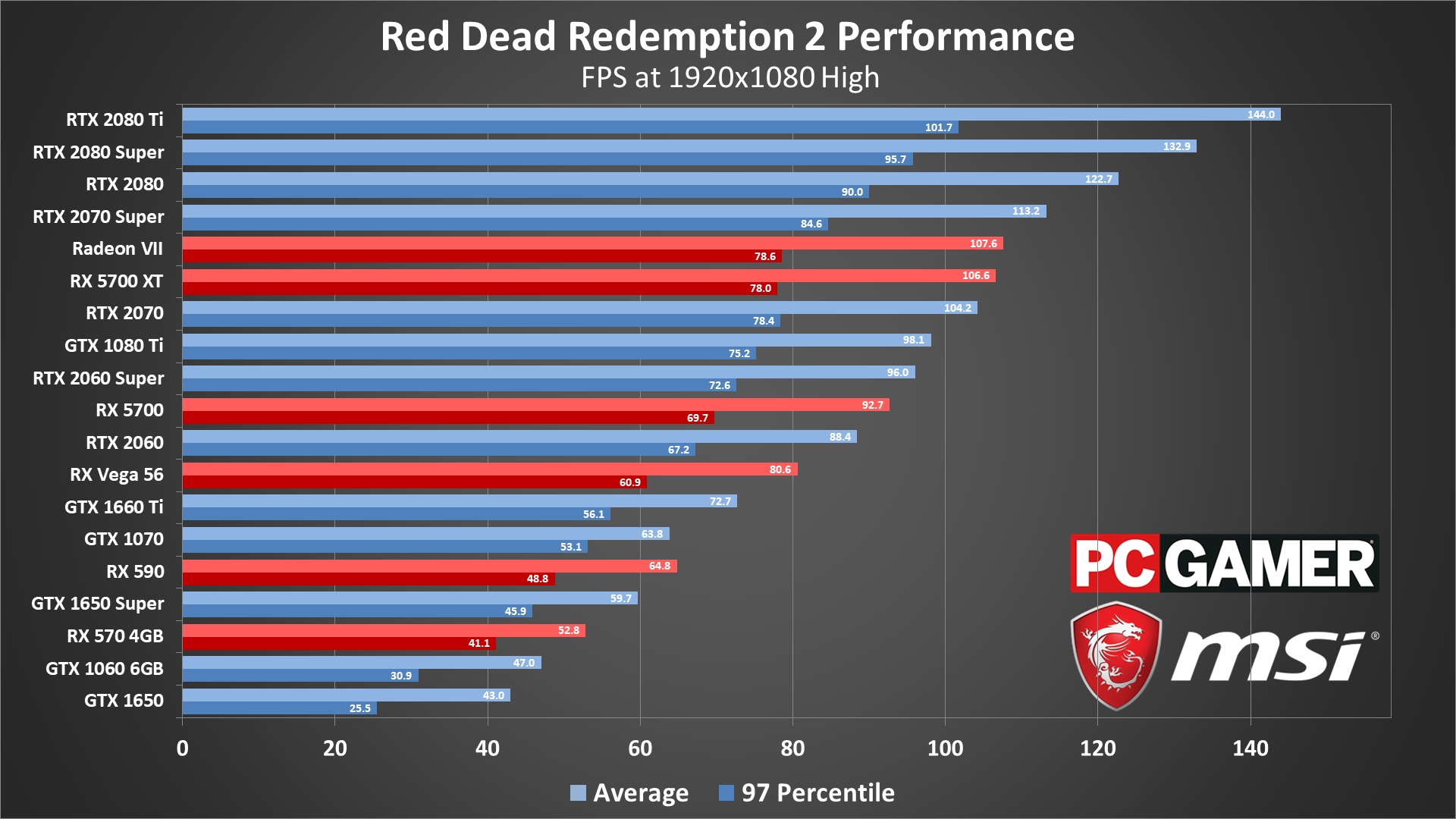

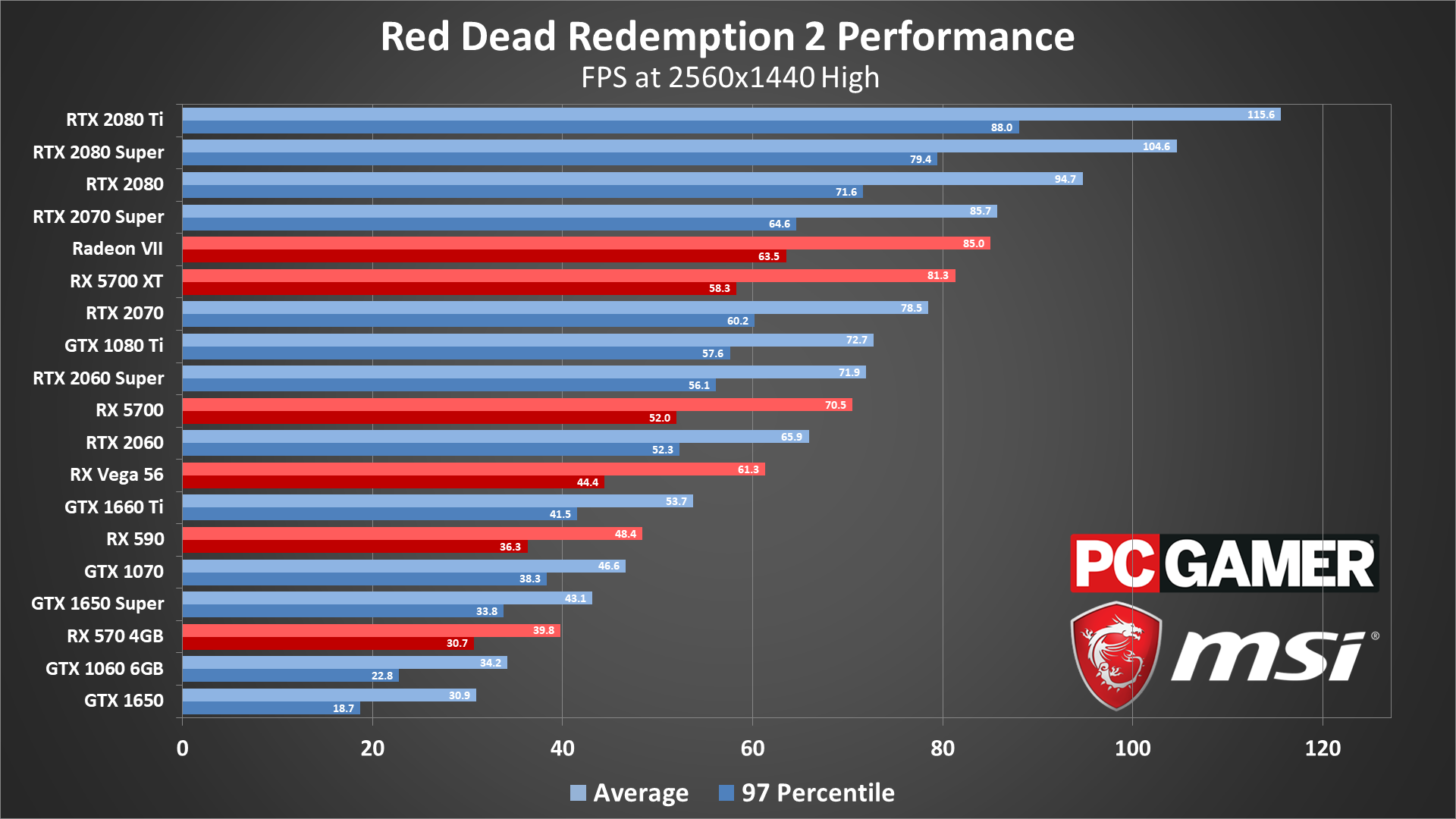

Switching to high quality settings drops performance another 20-25 percent relative to medium, unless you're on an ultra-fast card like a 2080 Ti. This is probably as high as most people should go on current hardware, reserving ultra quality for the future. It's not like the slight change in ultra quality reflections and volumetrics is noticeable.

The newer Nvidia Turing and AMD Navi architectures offer some clear advantages. Notice how the GTX 1660 Ti beats the 1070, and the RTX 2060 Super basically matches the GTX 1080 Ti? The same goes for AMD's RX 5700 XT compared to the Radeon VII.

Hitting 60 fps without high-end hardware gets a bit more difficult, with the RX 590 and GTX 1660 Ti getting there, but with relatively poor minimum fps. To get a steady 60 fps for minimums as well as averages, you're looking at the RX Vega 56 or RTX 2060.

These are also the last settings where I can test the 4GB cards, as ultra quality requires too much VRAM, though I can still do 1440p at high quality on 4GB cards. But first let's look at 1080p ultra.

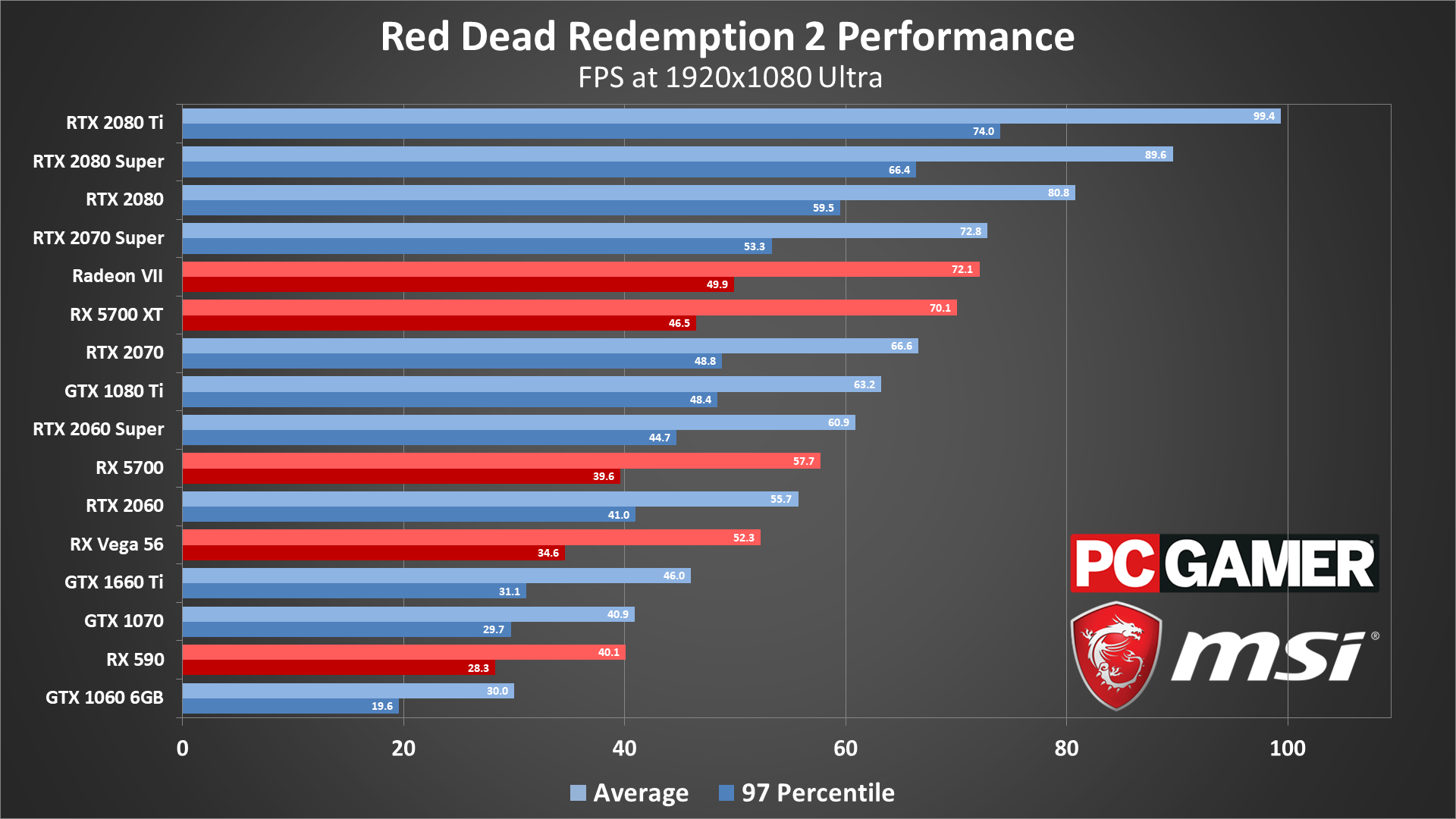

Ultra quality is simply too demanding for most of today's graphics cards. The difference in visual fidelity is also pretty small—slightly better textures, lighting, shadows, etc. And the settings aren't even fully maxed out in my tests, as there are several advanced options that can still be cranked up and drop performance another 10-15 percent.

Sure, the RTX 2080 Ti can still handle 1080p ultra at more than 60 fps, and a handful of other GPUs will average 60 fps as well, but minimums are going to be lower. Otherwise there's not much to say here. If you want to try pushing one or two options to ultra, that's fine. Just leave reflections and volumetrics quality at high or even medium, because you don't really need them. The discernible difference between each level is minimal.

1440p at high settings is less demanding than 1080p at ultra, which makes it viable for high-end cards. The problem is maintaining 60 fps at 1440p, as usual.

AMD's minimum fps are generally worse than the top Nvidia cards now, though the vanilla RTX 2060 looks a bit weak. Of the cards I've tested, only the RTX 2070 and above keep minimums above 60 for Nvidia, while only the Radeon VII from AMD manages a steady 60+ fps.

If you're only looking to stay above 30 fps—basically console level performance—the RX 570 4GB and above will suffice. Only the GTX 1060 and GTX 1650 drop below 30 of the cards tested—maybe 1440p medium would be okay, but high quality isn't.

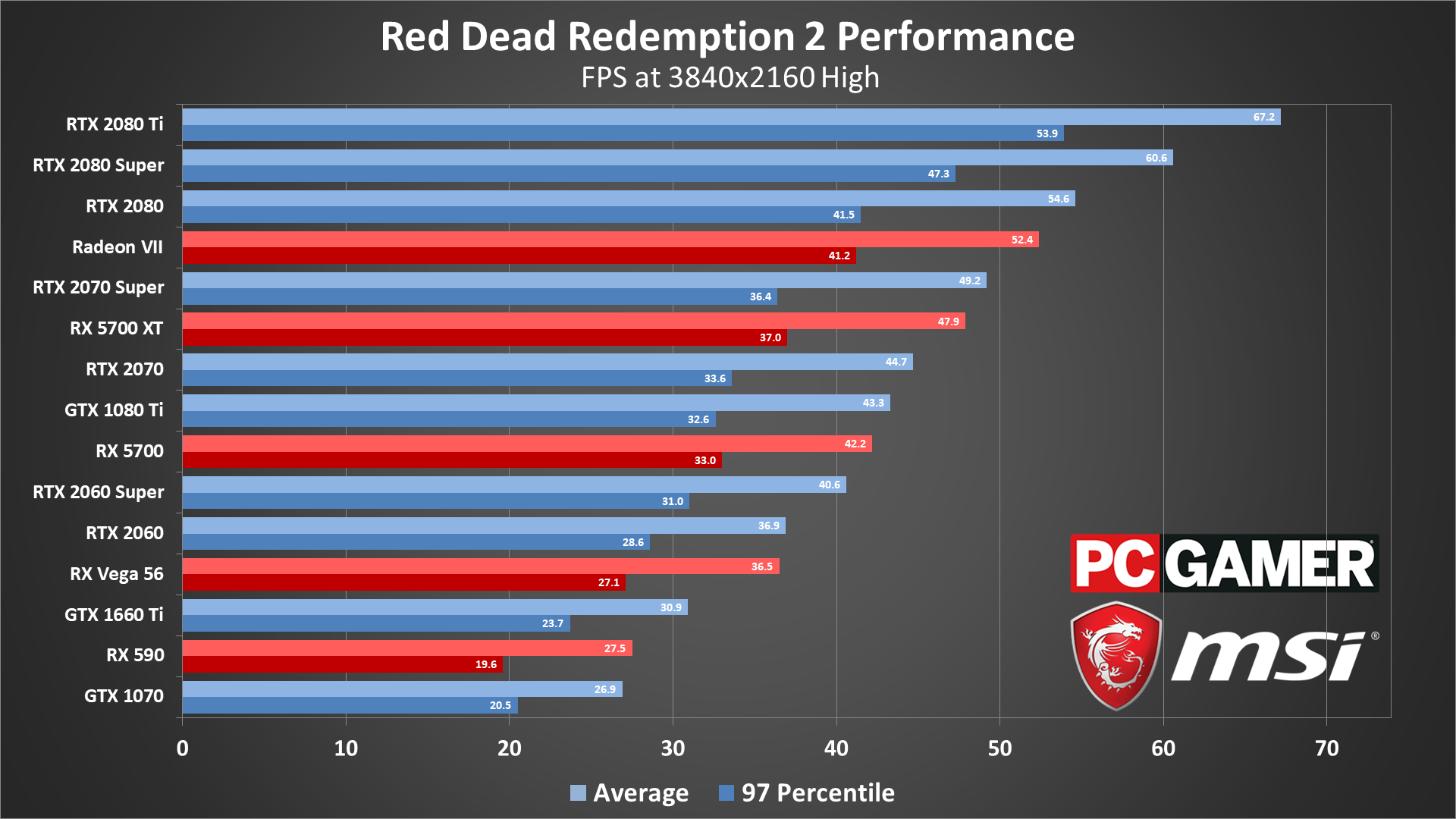

Finally, 4K at high quality is as far as I tried to push things. Ultra quality drops performance about 25-35 percent, depending on your GPU, which means nothing comes close to averaging 60 fps at maximum quality and 4K in RDR2. But 4K high still looks crisp and clean, and at least two GPUs—the RTX 2080 Ti and 2080 Super—can average more than 60 fps. Minimums do still come up short, unfortunately.

I'm reminded of my early GTA5 testing, where I maxed out everything including the advanced settings. Back then, the GTX 980 Ti was the king of graphics cards, but 4K and max quality on a single GTX 980 Ti simply wasn't going to cut it. In fact, GTA5 at maxed settings (including 4xMSAA) plugged along at just 24 fps on the then-fastest GPU.

So if you're looking at RDR2 and wondering how not even the RTX 2080 Ti can handle 4K at maximum quality, this isn't really anything new. Of course, multi-GPU support was still more of a thing back in 2015, whereas SLI and CrossFire support is practically gone these days.

Shockingly (to me, anyway), RDR2 actually does have explicit multi-GPU support under Vulkan. I've tested it with a pair of RTX 2080 Ti cards (which I'm not showing in the charts because I can't in good faith recommend anyone buy $2,000 or more of graphics cards just to play games like RDR2), and average fps improved by 60 percent compared to a single card. So if you have a pair of 2070 Super or faster GPUs, playing RDR2 at 4K, maximum quality, and 60 fps is possible.
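The 60 percent scaling figure gives a simple way to estimate what each card must manage on its own for a dual-GPU setup to reach 60 fps. This sketch assumes the gain measured with a pair of RTX 2080 Ti cards carries over unchanged to other GPUs, resolutions, and settings, which wasn't tested here.

```python
def required_single_card_fps(target_fps: float, scaling_gain_pct: float) -> float:
    """Single-GPU fps needed for a multi-GPU gain of scaling_gain_pct to hit target_fps."""
    return target_fps / (1.0 + scaling_gain_pct / 100.0)


def multi_gpu_fps(single_card_fps: float, scaling_gain_pct: float) -> float:
    """Projected multi-GPU fps, assuming the gain applies uniformly."""
    return single_card_fps * (1.0 + scaling_gain_pct / 100.0)


if __name__ == "__main__":
    needed = required_single_card_fps(60.0, 60.0)  # 60 percent gain from the text
    print(f"Each card needs about {needed:.1f} fps on its own")
```

At a 60 percent gain, a single card needs to manage about 37.5 fps (60 / 1.6) at the target settings for the pair to average 60 fps.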

Red Dead Redemption 2 CPU benchmarks

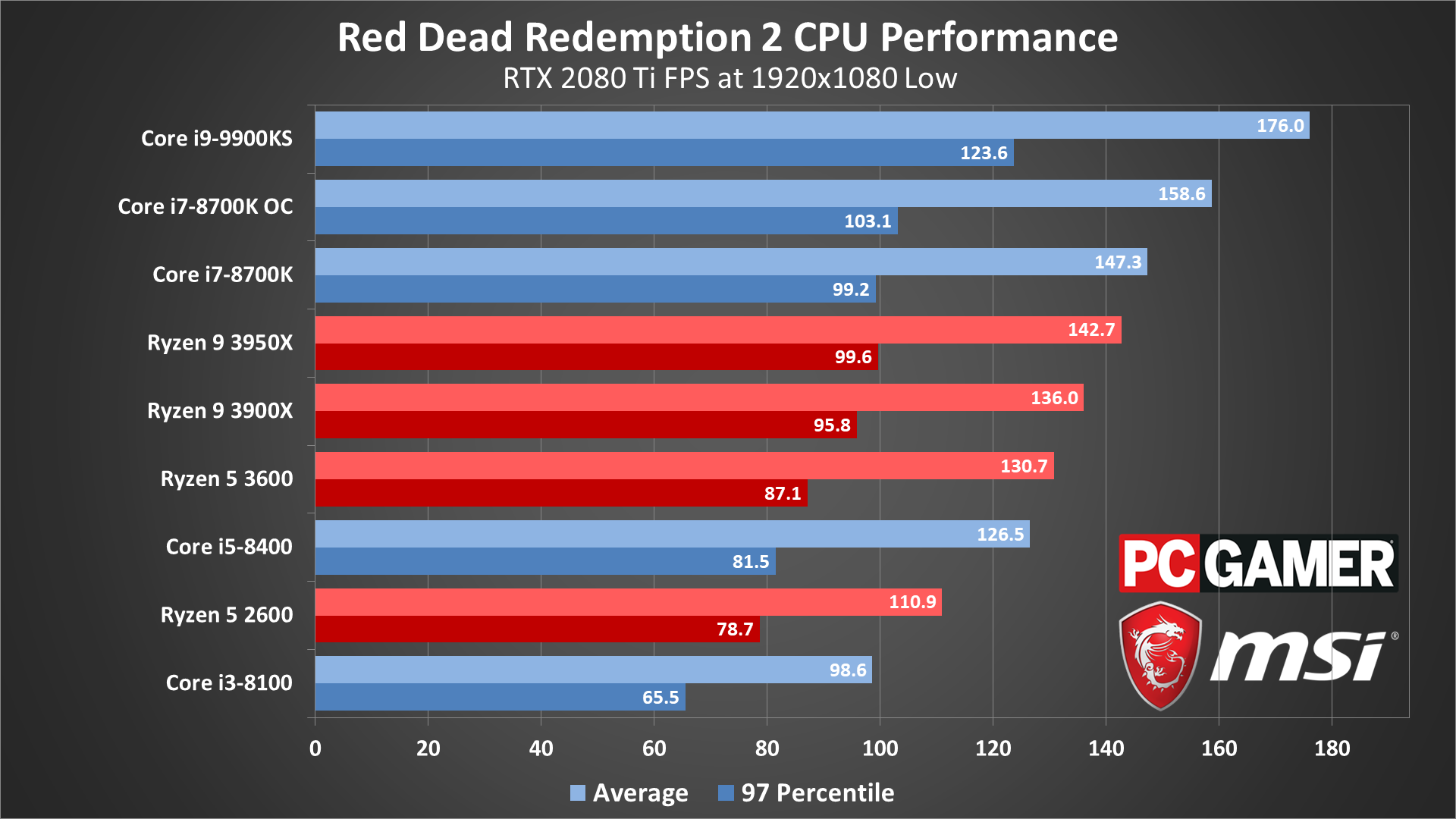

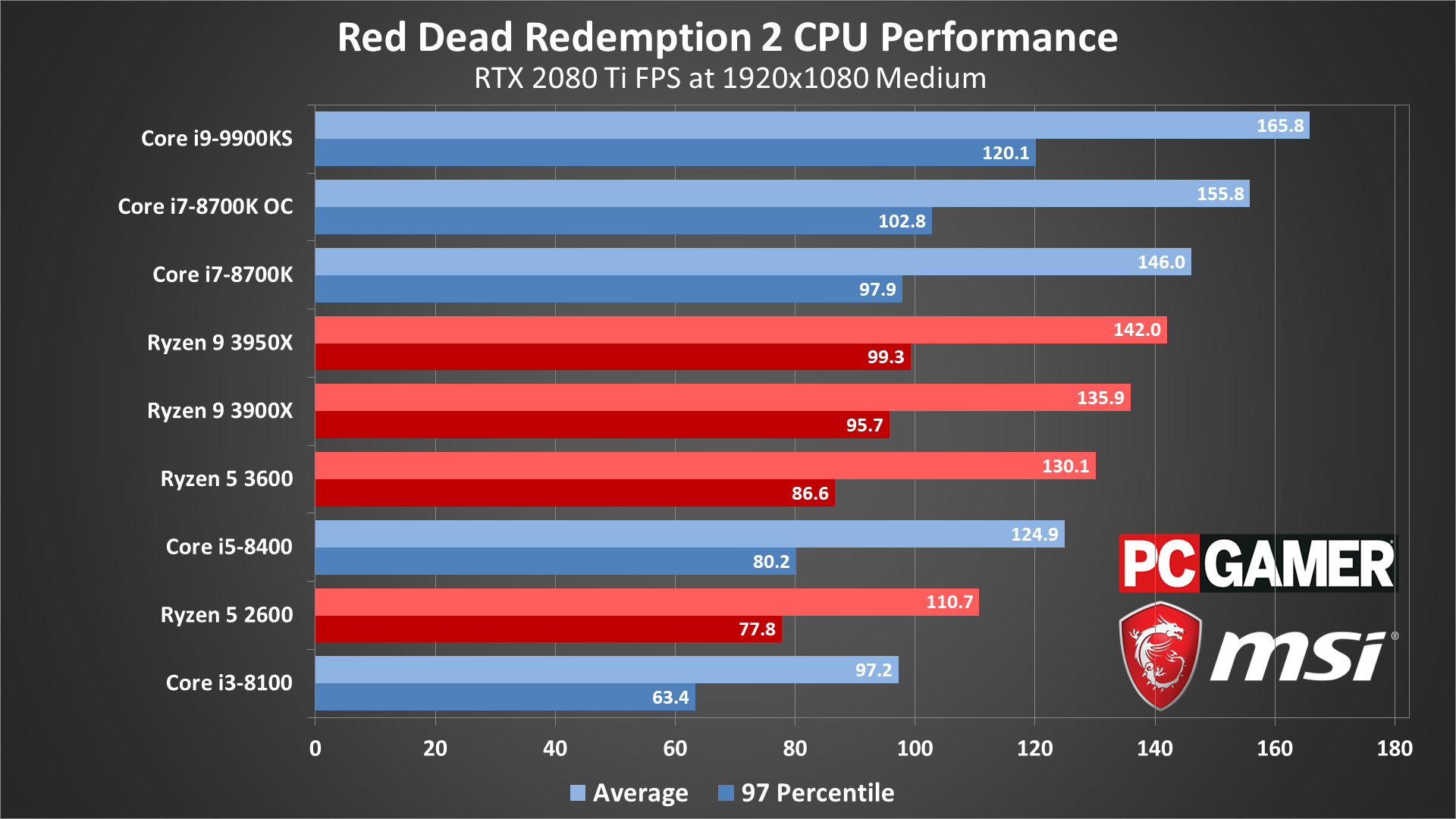

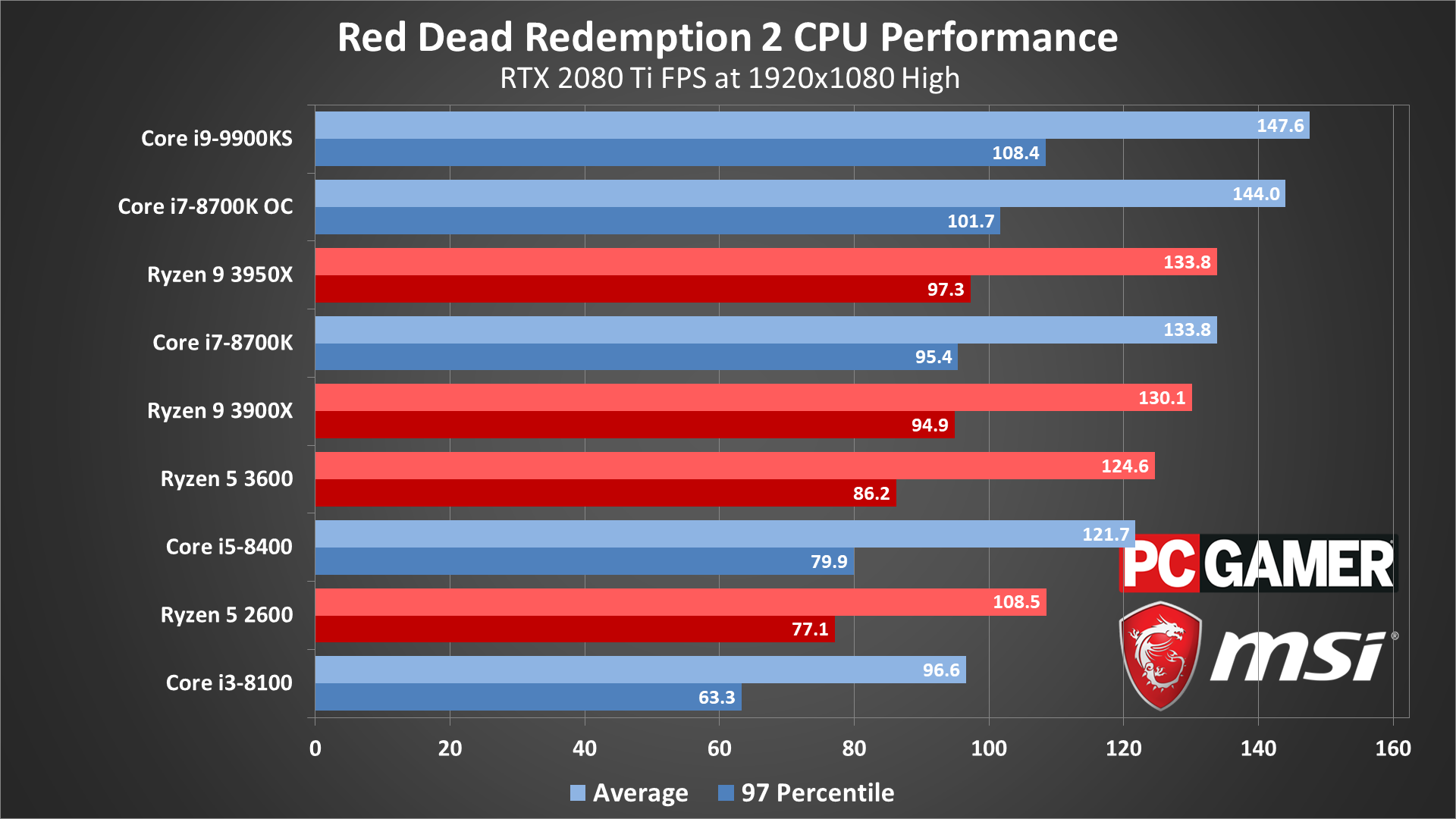

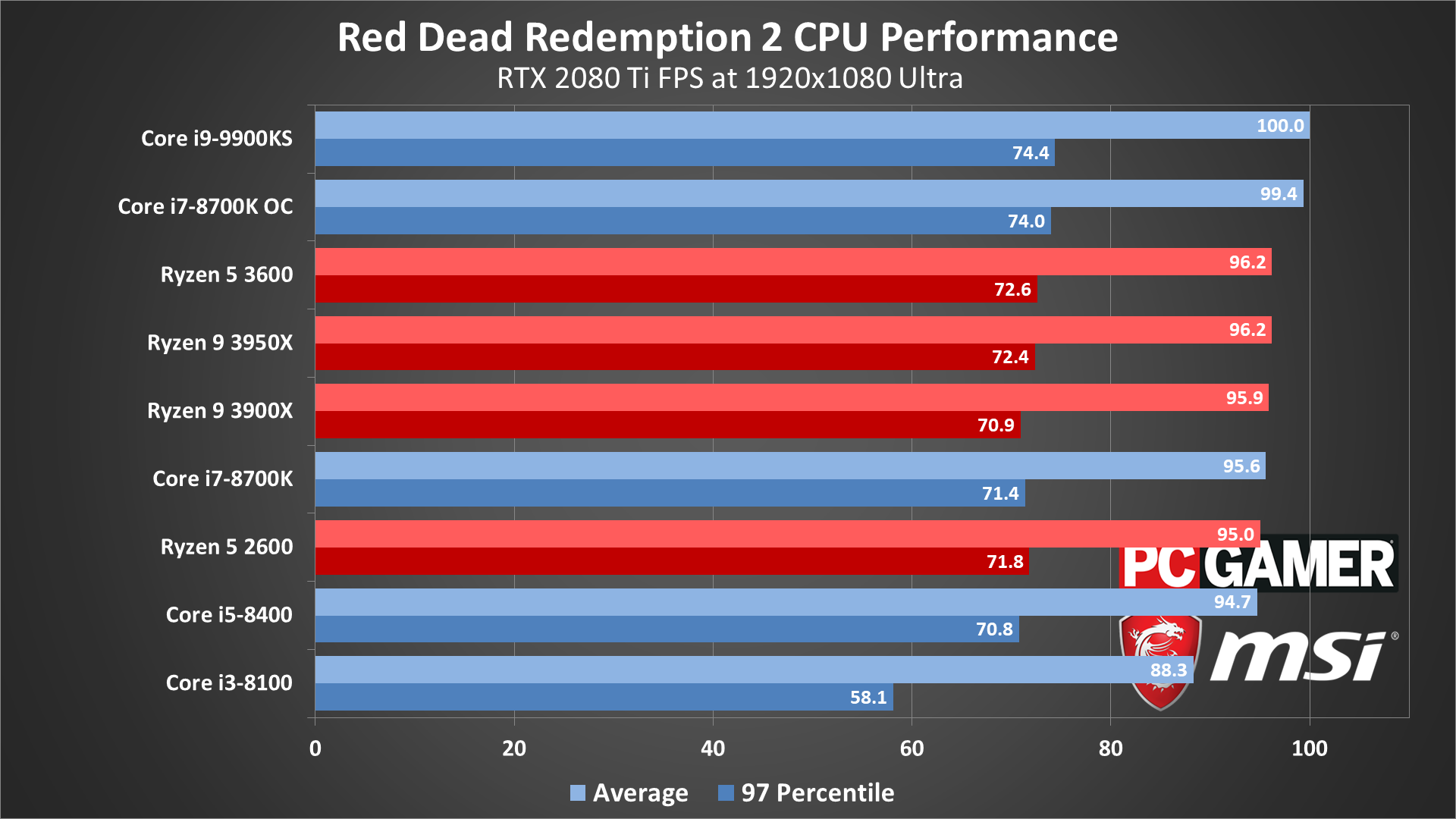

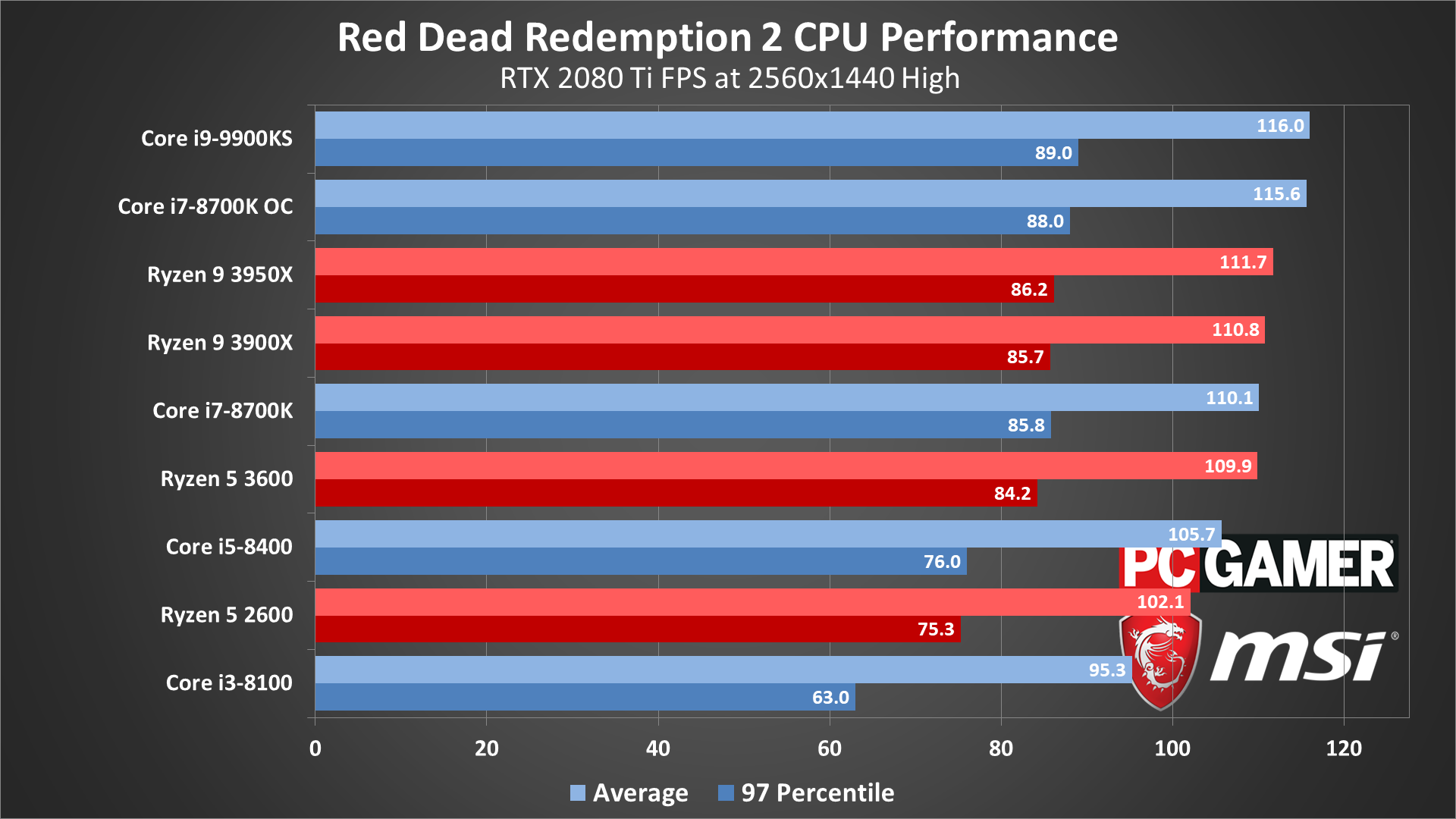

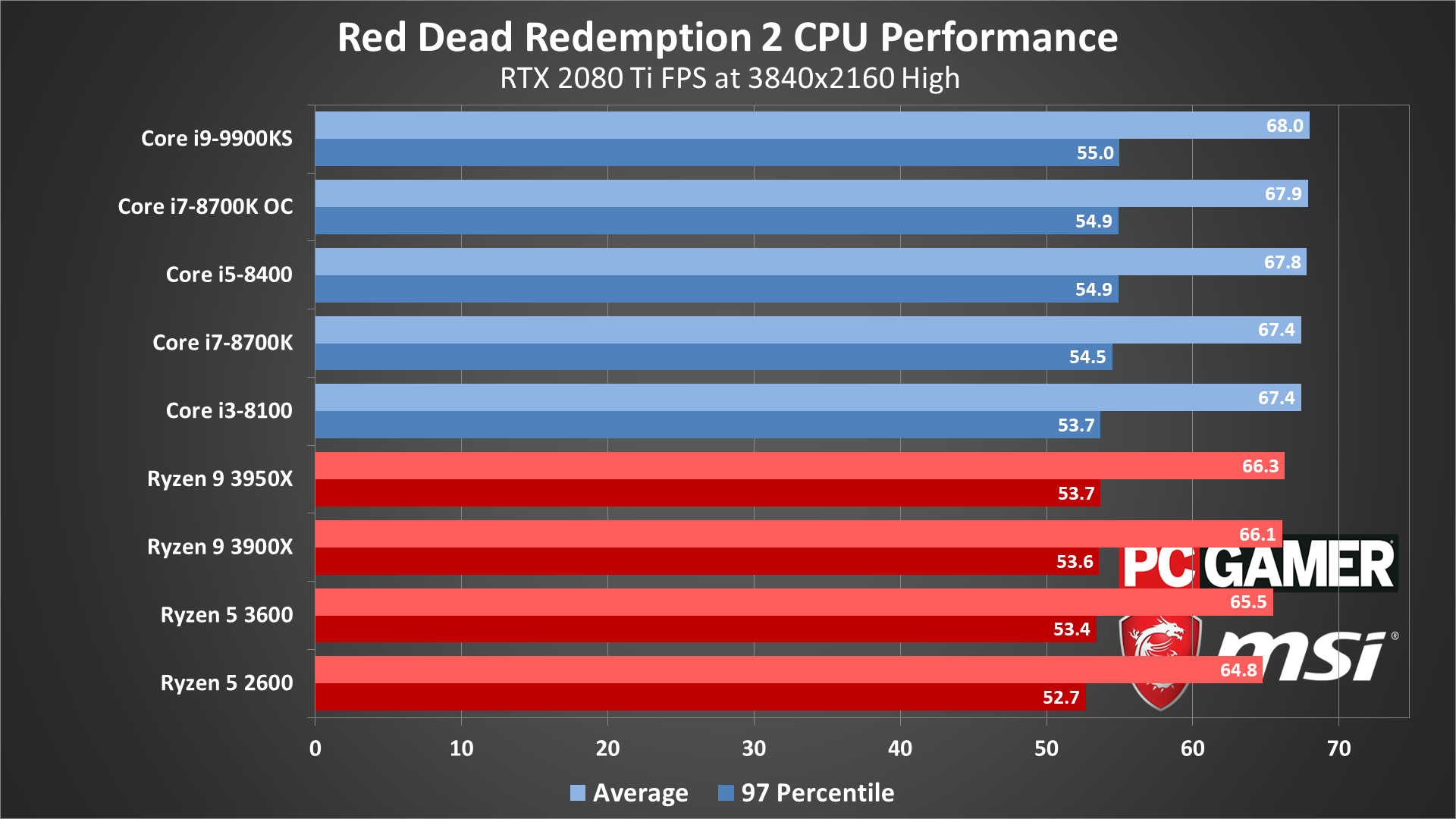

For CPU testing, I'm using the fastest consumer graphics card currently available, the RTX 2080 Ti, in order to show as much of a difference between the CPUs as possible. Stuttering and workarounds were required at launch for some of the processors, but nearly two months later, things have improved substantially. Here are the updated CPU testing results, using the Vulkan API in all cases.

The Core i9-9900KS ends up at the top of the charts, but at least with the latest updates and drivers, even a lowly Core i3-8100 can keep minimums above 60 fps. Core and thread scaling is also good at lower settings, though by 1080p ultra it's mostly a 6-way tie for third place, with only the 9900KS and overclocked 8700K rising a bit above the other chips.

Keep in mind that the Core i3-8100 is going to be very similar to any of Intel's previous generation of Core i5 parts. It has four cores and no Hyper-Threading, so even with high-end graphics cards, a steady 60 fps on something like a Core i5-2500K isn't likely. The previous generation Core i7 parts should do better, at least, thanks to their added threads and higher clockspeeds.

Red Dead Redemption 2 laptop benchmarks

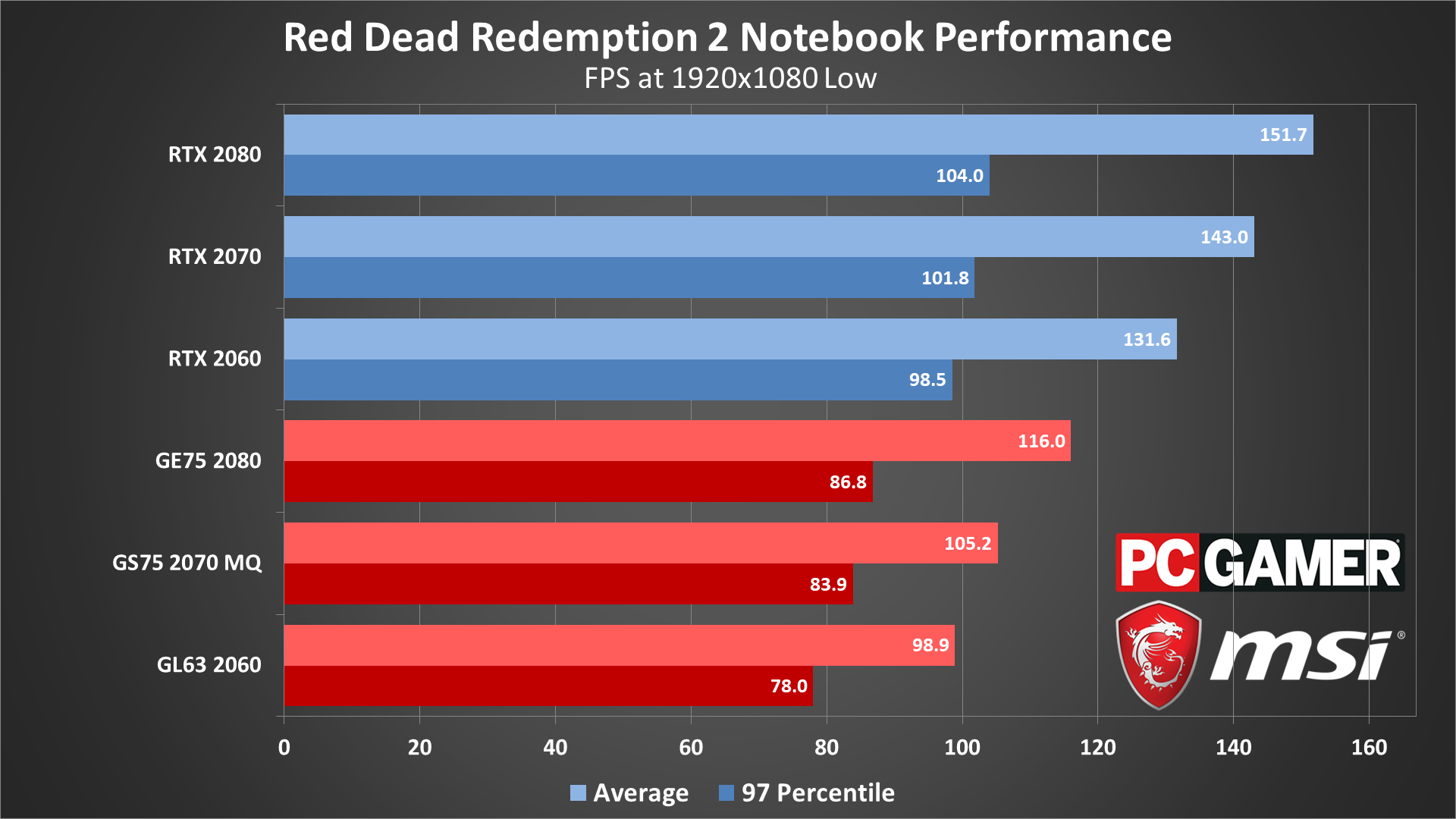

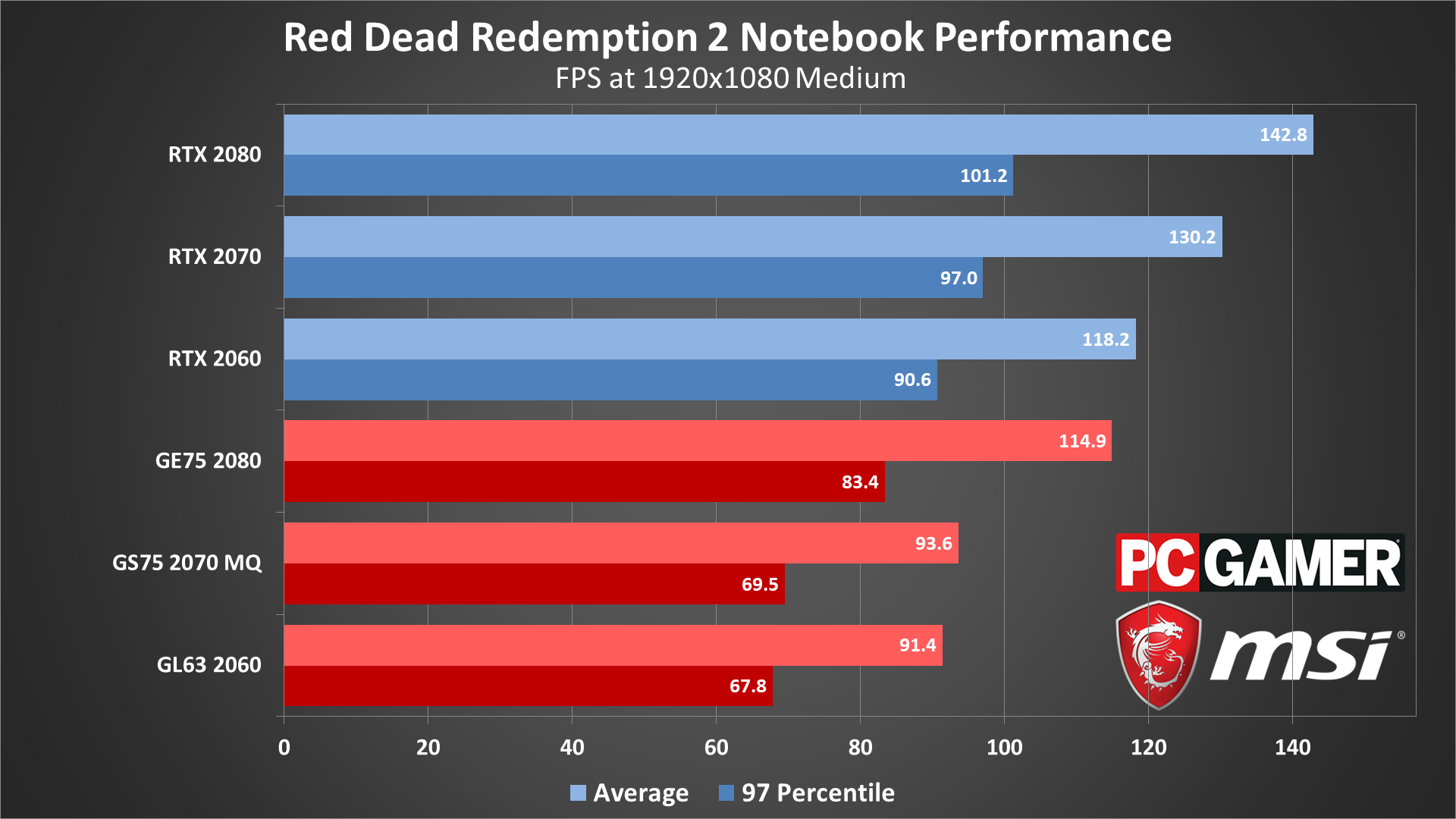

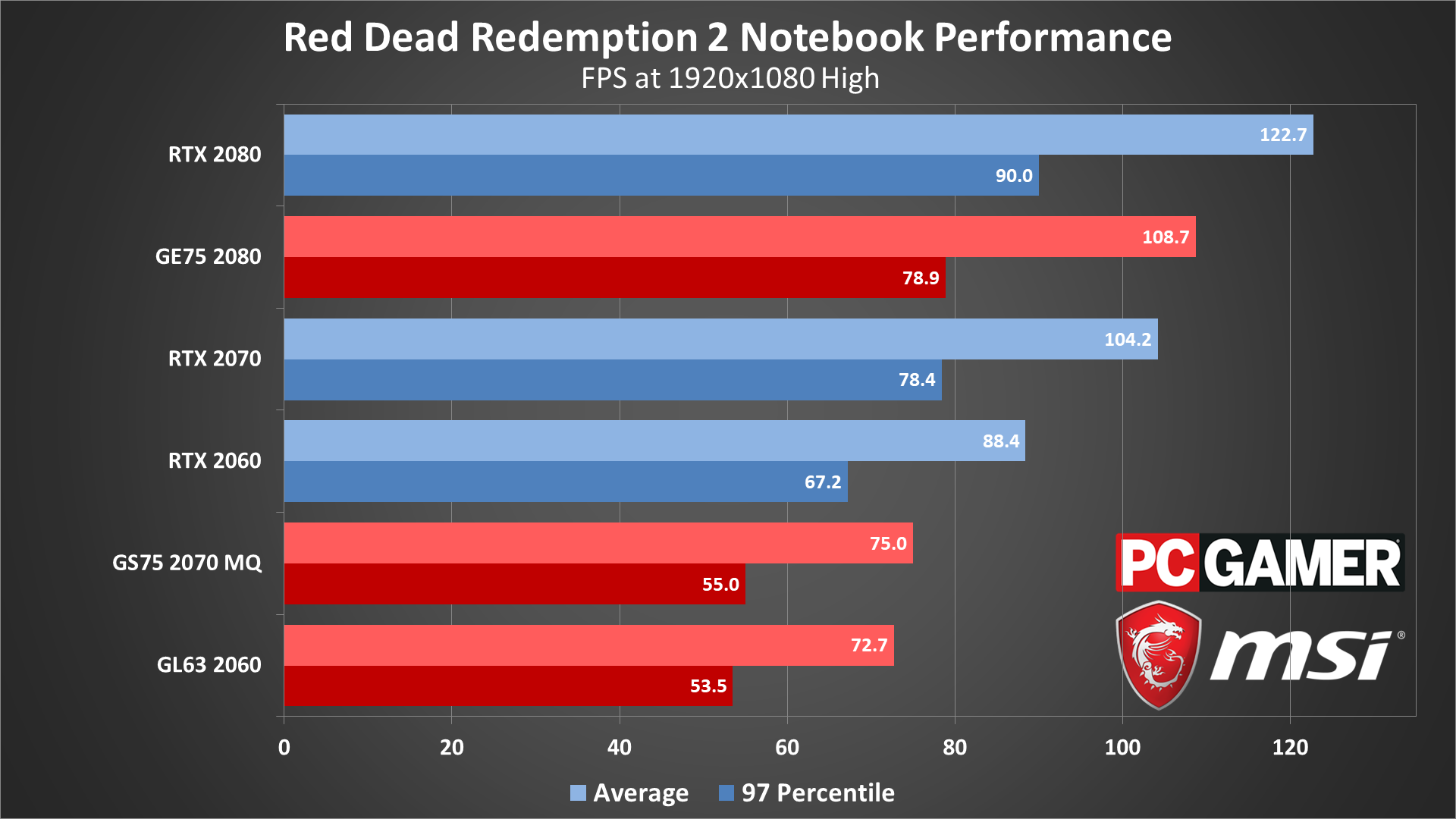

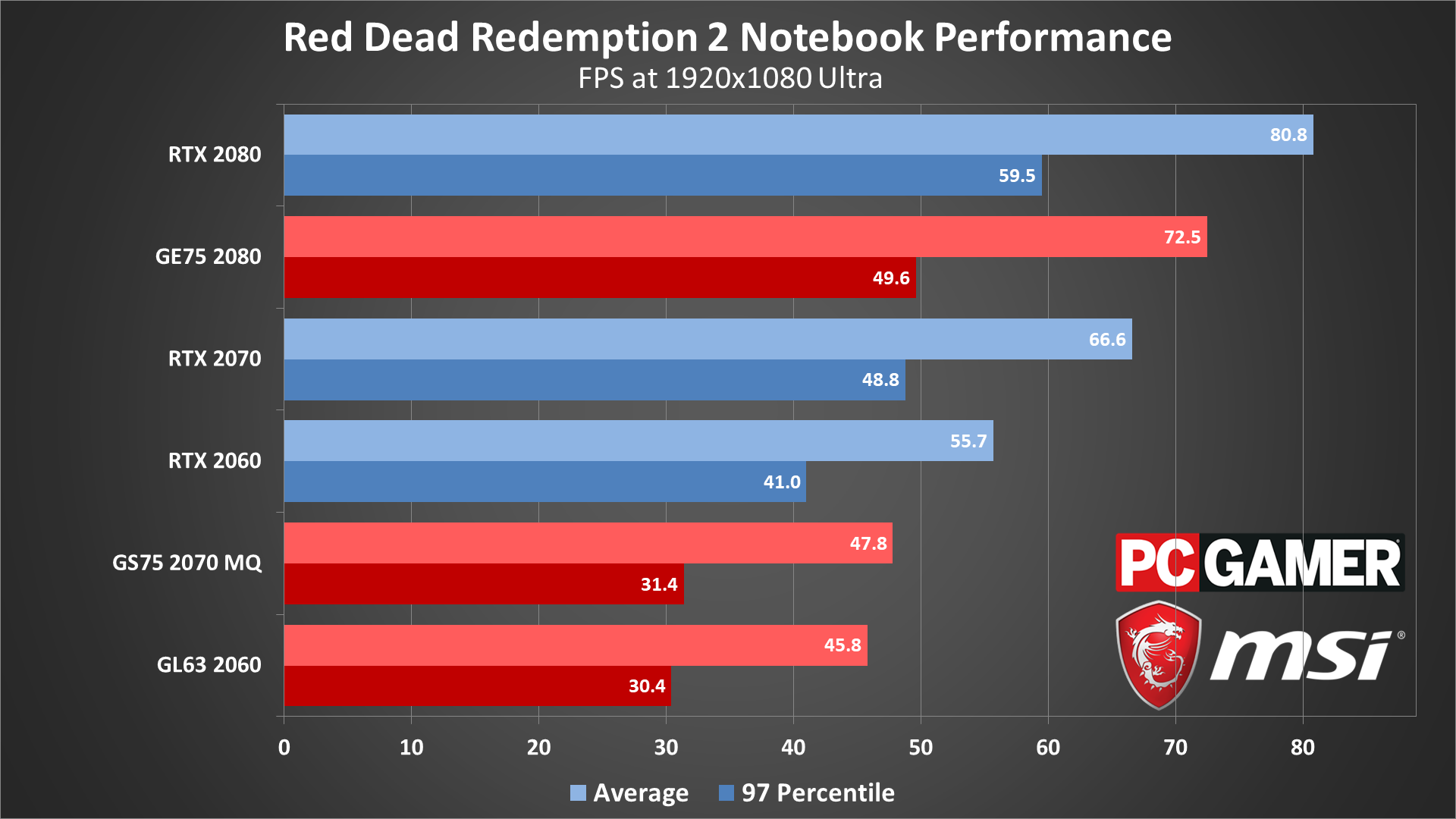

What about laptops? The GL63 has a 4-core/8-thread Core i5-8300H mobile CPU, with lower clocks than you'll typically see from the desktop i5-8400. The other two laptops have 6-core/12-thread Core i7-8750H processors. Thankfully, the different CPUs used in these laptops don't appear to be a problem, though the lower clockspeeds relative to desktops are a factor.

The mobile RTX cards aren't able to keep up with the desktop models at low or medium settings. Part of that is because the mobile GPUs are clocked lower (especially the Max-Q variants), but the slower mobile CPUs are certainly a factor. Keeping minimum fps above 60 isn't a problem at low and medium settings, but both the 2060 and 2070 Max-Q fall short at high and ultra, and even the GE75 can't keep a steady 60 fps at 1080p ultra.

Incidentally, all three test laptops from MSI are also equipped with 32GB of system RAM. That normally wouldn't matter for games, but RDR2 seems to push beyond the level of hardware I consider sufficient, so I wanted to check. As a quick test, I slapped an additional 16GB of RAM into my desktop and retested the 2080 Ti. At least in my testing, the extra system RAM wasn't a factor; 16GB is still sufficient.

Parting thoughts and port analysis

Desktop PC / motherboards / Notebooks:

- MSI MEG Z390 Godlike

- MSI Z370 Gaming Pro Carbon AC

- MSI MEG X570 Godlike

- MSI X470 Gaming M7 AC

- MSI Trident X 9SD-021US

- MSI GE75 Raider 85G

- MSI GS75 Stealth 203

- MSI GL63 8SE-209

Nvidia GPUs:

- MSI RTX 2080 Ti Duke 11G OC

- MSI RTX 2080 Super Gaming X Trio

- MSI RTX 2070 Super Gaming X Trio

- MSI RTX 2060 Super Gaming X

- MSI RTX 2060 Gaming Z 8G

- MSI GTX 1660 Ti Gaming X 6G

- MSI GTX 1660 Gaming X 6G

- MSI GTX 1650 Gaming X 4G

AMD GPUs:

- MSI Radeon VII Gaming 16G

- MSI Radeon RX 5700 XT

- MSI Radeon RX 5700

- MSI RX Vega 64 Air Boost 8G

- MSI RX Vega 56 Air Boost 8G

- MSI RX 590 Armor 8G OC

- MSI RX 580 Gaming X 8G

- MSI RX 570 Gaming X 4G

Thanks again to MSI for providing the hardware for our testing of Red Dead Redemption 2. To put things bluntly, this was a bungled launch on PC. The cynics among us will point at the delayed Steam release as proof that Rockstar knew the PC launch of RDR2 was premature. Even if Rockstar didn't know, it's surprising to see big stability problems in a marquee PC game as good looking as RDR2.

Six weeks later, after the Steam release, the stability and performance woes are mostly a thing of the past. You'll still need a potent rig to crank up the settings and resolution while staying above 60 fps, but at low to medium settings, you can play Red Dead 2 on pretty modest hardware.

Your CPU might be a factor as well, but thankfully the horrible stutters have been fixed in my experience. A 6-core CPU is still recommended, which means a Core i5-9400F or Ryzen 5 2600 or better, and both of those are quite affordable at around $125 these days.

Just be warned that if you only have 2GB VRAM, Red Dead 2 will restrict the settings you can attempt to run. Even at minimum quality, it can still be an enjoyable game, but you really need at least 4GB VRAM to get the full graphics experience, and 6GB or more is preferred.

Let me also talk briefly about the PC port of RDR2, which shows clear signs of having been designed for console hardware. There are many indications that RDR2 has code that makes assumptions about your PC's hardware, and those assumptions can be wildly inappropriate at times. Two shining examples of this are the CPU stuttering problems at launch and the minimum fps results on high-end GPUs.

The horrible initial performance on 4-core/4-thread and 6-core/6-thread CPUs was really weird, considering the official minimum spec CPU is an i5-2500K. Thankfully that was fixed, but why would an i5-8400 do so much worse than an i7-8700K, with seconds-long pauses at times? The PS4 and Xbox One both have 8-core AMD Jaguar processors, and it looks as though the PC code spawned too many threads on CPUs with fewer than eight threads. Or at least it allocated processing time very poorly, which is why the original workaround was to limit RDR2 to 98 percent of CPU time.
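The cause of the stalls can only be inferred, so the following is a hypothetical illustration of the kind of mistake described, not Rockstar's code. It contrasts a worker count hard-coded to the consoles' eight cores with one derived from the host's hardware thread count (`os.cpu_count()` in Python). The `reserved` parameter, which keeps a thread free for the main/render loop, is an arbitrary assumption.

```python
import os

# Hypothetical constant: a console-era assumption of eight CPU cores.
CONSOLE_CORE_COUNT = 8


def naive_worker_count(hardware_threads: int) -> int:
    """Ignores the host entirely (the argument is unused) and always asks for eight workers."""
    return CONSOLE_CORE_COUNT


def adaptive_worker_count(hardware_threads: int, reserved: int = 1) -> int:
    """Sizes the pool to the host, keeping `reserved` threads free for the
    main/render thread, but never fewer than one worker or more than eight."""
    return max(1, min(CONSOLE_CORE_COUNT, hardware_threads - reserved))


if __name__ == "__main__":
    host_threads = os.cpu_count() or 1  # os.cpu_count() can return None
    for threads in (4, 6, 8, 12, host_threads):
        naive = naive_worker_count(threads)
        adaptive = adaptive_worker_count(threads)
        oversubscribed = "yes" if naive > threads else "no"
        print(f"{threads:>2} hardware threads: naive={naive} "
              f"(oversubscribed: {oversubscribed}), adaptive={adaptive}")
```

On a 4-core/4-thread or 6-core/6-thread CPU, the naive version asks for more busy workers than the hardware can run at once, so the OS has to time-slice them against the game's main thread. That is consistent with, though not proof of, the long pauses seen at launch.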

The other clear indication of "console portitus" is the minimum fps results at 1080p low and medium. Pretty much regardless of your graphics card, you can't get minimum fps much above 100. Well, maybe with an RTX 2080 Ti and Core i9-9900KS, but that's a bit much to expect from PC gamers. It's not that you need to hit 100-144 fps to enjoy Red Dead, but it would be nice if it were at least a viable option.

Considering RDR2 launched over a year ago on consoles, the state of the PC launch was shocking, especially since GTA5 continues to sell like hotcakes on Steam. It's not like Rockstar doesn't have the funds to do a proper port, or even the expertise.

But hey, at least Red Dead Redemption 2 made it out on PC right in time for Black Friday, which I'm sure had absolutely nothing at all to do with it releasing in a rough state. Six weeks later, things are much better, and hopefully they'll continue to improve. Certainly, there will be plenty of limited time modes and challenges to keep us coming back to the Old West for years to come.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.