Players with 144 fps have a better K/D ratio than those with 60 fps, says Nvidia

Long considered the standard for a smooth gaming experience, 60fps may be holding you back.

Bring up the topic of framerates in games, and you'll encounter a wide variety of attitudes. There are the extreme gamers who want the best hardware possible and complain any time a game can't run at triple-digit framerates. At the other end of the spectrum are people who insist 30fps is sufficient, and they might even trot out the old argument that human eyes can't see that fast. The question of how many frames per second the human eye can see isn't actually that simple, however, and in a nice change of pace, Nvidia has done some research into how much benefit there is to higher framerates and refresh rates.

First, let's make something clear: while your eyes may not actually see a difference between 30fps and 60fps, or 60fps and 144fps, or even 144fps and 240fps, that's not the same as not being able to feel the difference. If you've ever played a game that's running smoothly at 60fps or more, and then tried the same game at 30fps, you will absolutely feel the difference. In my experience, the same goes for higher framerates, especially if you're using a monitor that supports a higher refresh rate. It's why we recommend 144Hz displays as the best gaming monitors, and why faster graphics cards to drive those refresh rates are beneficial.

Being able to feel the difference, even if you can't always see it, is nice. Anecdotally, I also think (and have heard from others) that higher framerates and refresh rates can make you more competitive in games. Proving that is a lot more difficult, but thankfully it's no longer simply a statement of opinion, as Nvidia has provided some statistics to back up such claims.

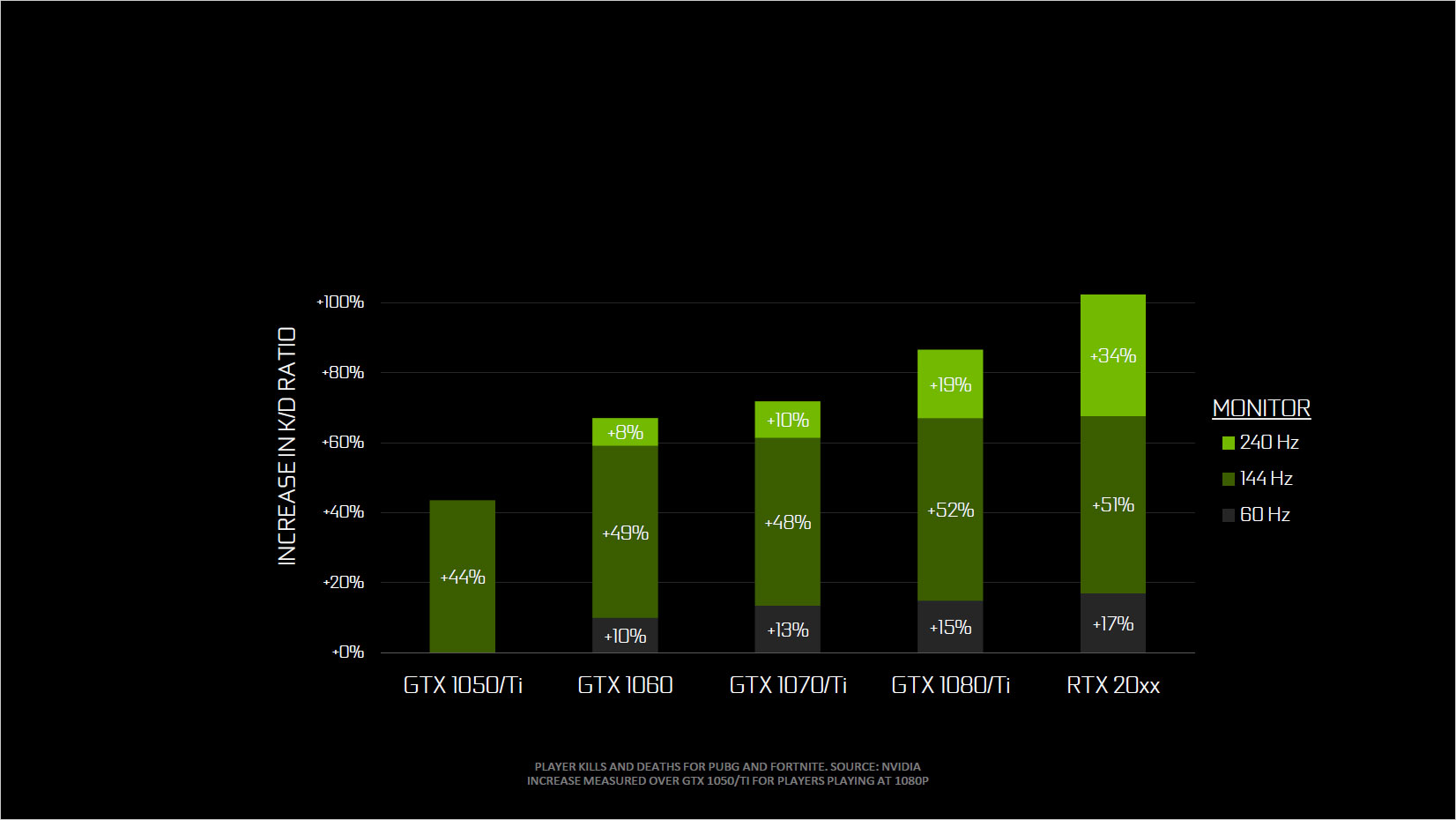

Nvidia analyzed more than a million sample points collected via anonymous GeForce Experience data (which means no AMD cards). Specifically, Nvidia looked at player performance in two popular battle royale games: PUBG and Fortnite. (The results should transfer over to other games like Apex Legends and Call of Duty Black Ops, and to non-BR games as well.) How do you quantify player performance? Nvidia looked at kill/death ratio and matched that up with the number of hours played per week, then broke that down by graphics hardware and monitor refresh rate. Nvidia limited its analysis to 1080p, which allows for the highest refresh rates and also serves to normalize things a bit.
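
To make that breakdown concrete, here's a minimal sketch of how K/D could be grouped by graphics card and weekly playtime. Everything in it is an assumption for illustration: the records are made up, the hour buckets and the `min_samples` cutoff are hypothetical, and averaging each player's K/D per group is just one plausible aggregation. Nvidia hasn't published its actual pipeline.

```python
from collections import defaultdict
from statistics import mean

# Made-up records for illustration only: (gpu, hours_per_week, kd_ratio).
# These are NOT values from Nvidia's data set.
samples = [
    ("GTX 1050 Ti", 6, 0.8),
    ("GTX 1050 Ti", 8, 1.0),
    ("GTX 1080", 7, 1.2),
    ("GTX 1080", 25, 1.6),
    ("GTX 1080", 30, 2.0),
]


def hours_bucket(hours):
    """Map weekly playtime to a label. The bucket edges are hypothetical."""
    if hours < 5:
        return "0-5"
    if hours < 10:
        return "5-10"
    if hours < 20:
        return "10-20"
    return "20+"


def mean_kd_by_group(records, min_samples=1):
    """Average K/D per (gpu, hours bucket), dropping groups with too few samples."""
    groups = defaultdict(list)
    for gpu, hours, kd in records:
        groups[(gpu, hours_bucket(hours))].append(kd)
    return {
        key: mean(values)
        for key, values in groups.items()
        if len(values) >= min_samples
    }


for (gpu, bucket), kd in sorted(mean_kd_by_group(samples, min_samples=2).items()):
    print(f"{gpu:<12} {bucket:>5} h/week: mean K/D {kd:.2f}")
```

Comparing cards within the same playtime bucket is what lets hours played act as a rough control for how serious a player is.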

It's worth noting that this research and data come from Nvidia, which of course has a vested interest in people buying faster graphics cards. There are also confounding factors to consider, like serious gamers spending more money on serious hardware, but breaking the results down by time played per week should help account for that. Either way, this is a subject I've thought about many times over the years, especially after first playing games on a 144Hz monitor. It's hard to go back to 60Hz once you've experienced 144Hz, and I would absolutely say that 144Hz displays are 'better.' I've wanted to put together research on this subject, but getting enough sample points can be extremely difficult. Nvidia gets around this by using GeForce Experience data, and while that omits AMD cards from the analysis, the results should hold regardless of your GPU manufacturer.

Here's what Nvidia found:

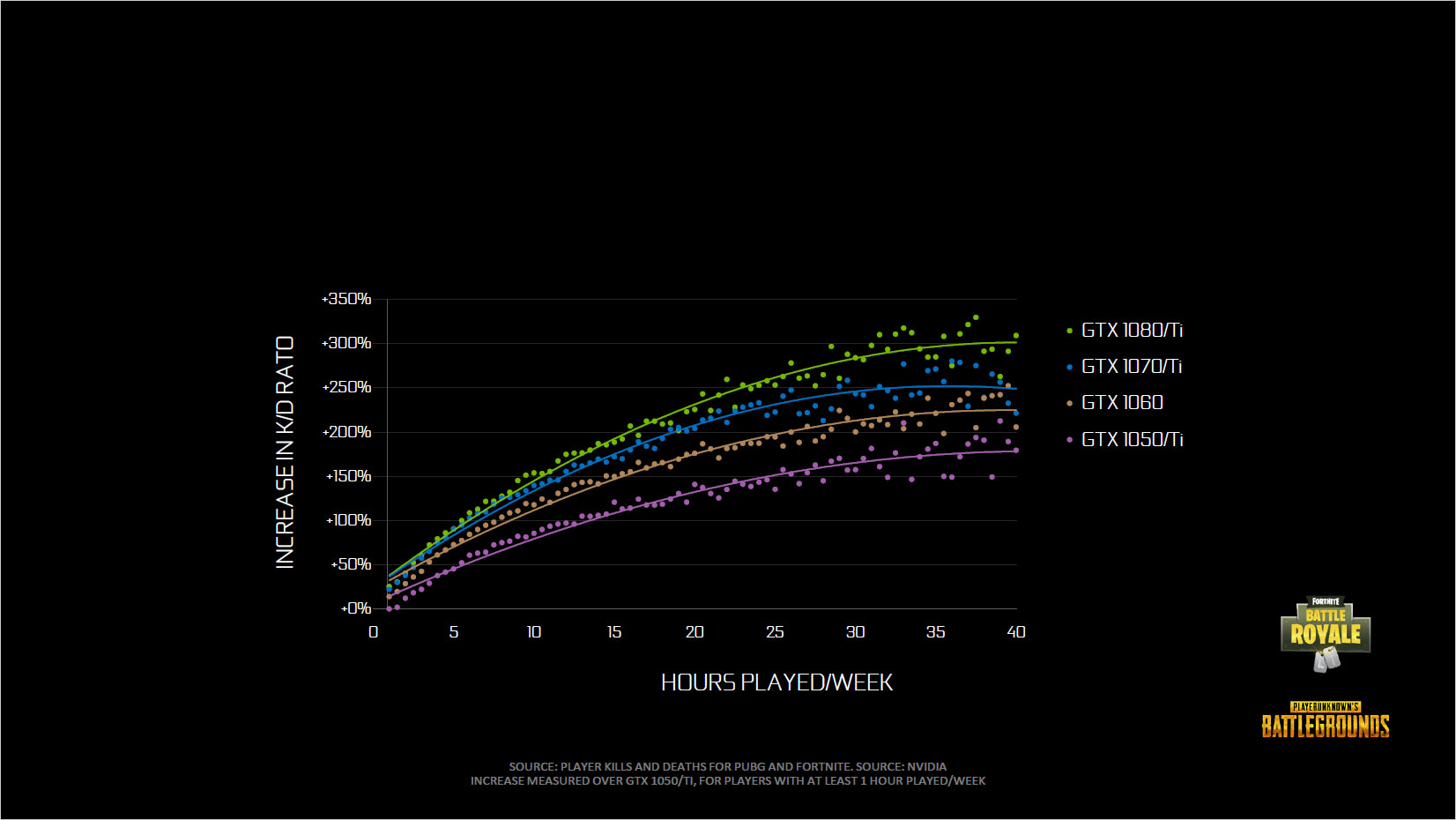

First, we have K/D ratio plotted against graphics hardware and number of hours played per week. You might say it's not really surprising that people with slower GPUs like the GTX 1050 Ti don't do as well as people with high-end cards like a GTX 1080. Serious gamers are more likely to buy more expensive hardware, right? But then I'm not sure you could describe someone who plays 20-40 hours of battle royale games each week as a 'casual' gamer. Regardless, the data shows that faster hardware, which in turn means higher framerates, correlates strongly with a higher K/D ratio.

Note that in order to avoid skewing the results, all the points in the charts needed to have sufficient samples. That means 50+ for the above chart, and 300+ for the others. In terms of statistics, that's large enough to draw some valid observations. It's also why the RTX cards aren't represented, as in many cases they're too new and there weren't enough samples available. But now comes the interesting part. How does K/D ratio match up with refresh rate?

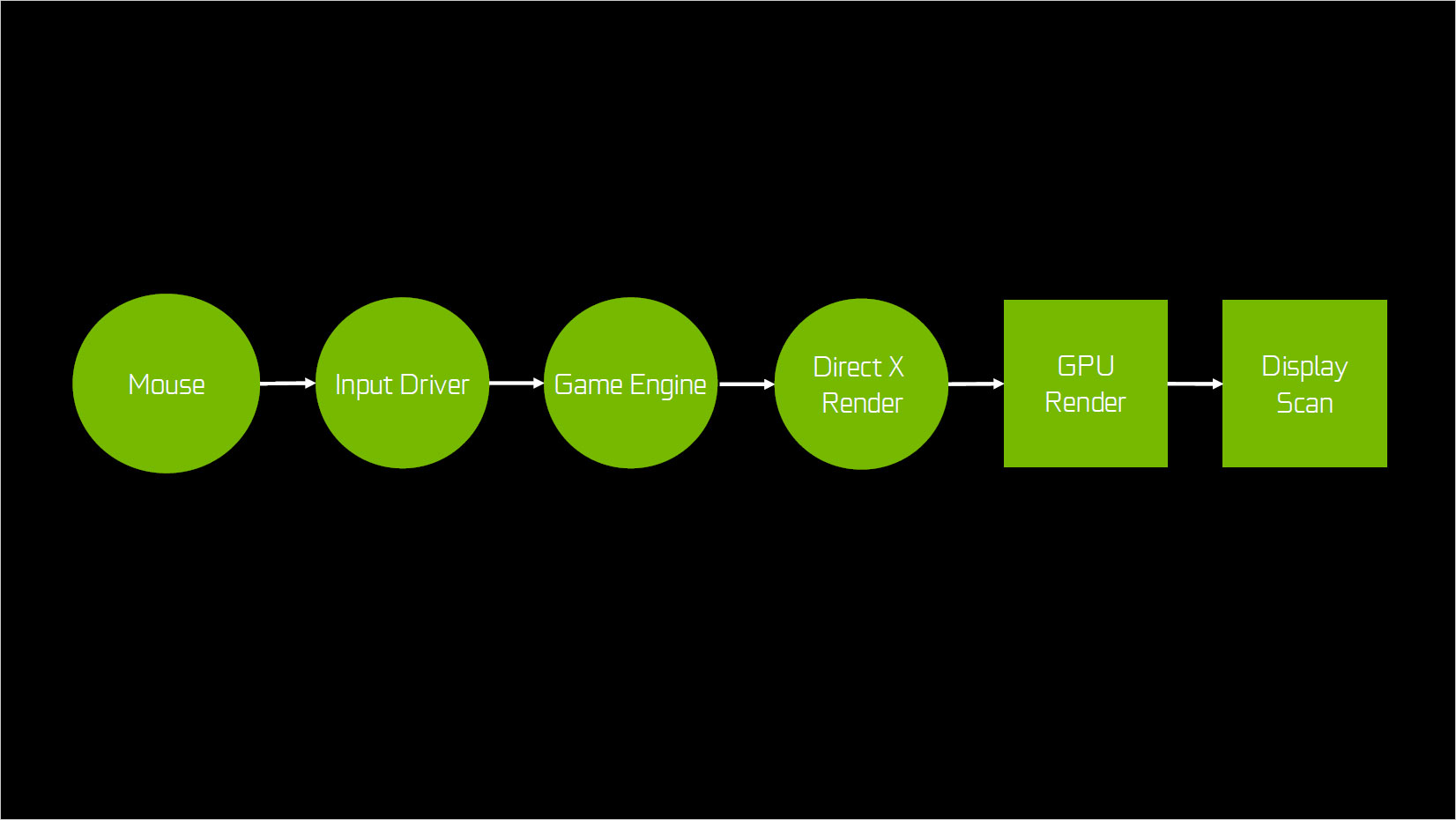

Why is it that refresh rate and graphics card performance help improve how well you play? It's pretty simple, actually. The image below shows what happens between user input (mouse or keyboard) and the resulting frame appearing on your display. The first two items are generally fixed, or at least don't show a lot of variation, since your mouse and keyboard usually have relatively high sampling rates. The final four items rely more on your PC's hardware, however. The game engine depends more on your CPU, DirectX and GPU rendering hit your graphics card, and the display scan time is determined largely by your refresh rate.

A game might have anywhere from 5-15ms of processing time for the input and game engine, and then your graphics card and display join the fray. If you're running at 30fps, your graphics card adds roughly 33ms of latency per frame; 60fps adds 16.7ms, and 144fps cuts that down to about 7ms. But running at more than 60fps without a higher refresh rate display doesn't do much good, as you get either tearing or a dropped frame. To get the full benefit, you need a fast monitor as well.
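
The frame-time figures above are simply 1000ms divided by the framerate, which a short sketch makes easy to check. The 10ms input-and-engine figure is an assumed midpoint of the 5-15ms range, and the function names are hypothetical. This is a simplified model, not a measurement, and it ignores display scan time.

```python
def frame_time_ms(fps):
    """Milliseconds needed to render one frame at a given framerate."""
    return 1000.0 / fps


def estimated_latency_ms(fps, input_and_engine_ms=10.0):
    """Rough input-to-render latency: fixed processing time plus one frame time.

    input_and_engine_ms is an assumed midpoint of the 5-15ms range;
    display scan time is deliberately left out of this simple model.
    """
    return input_and_engine_ms + frame_time_ms(fps)


for fps in (30, 60, 144, 240):
    print(
        f"{fps:>3}fps: {frame_time_ms(fps):5.1f}ms per frame, "
        f"about {estimated_latency_ms(fps):5.1f}ms with input and engine time"
    )
```

Under these assumptions, going from 60fps to 144fps saves nearly 10ms per frame, while going from 144fps to 240fps saves less than 3ms, so the returns diminish as framerates climb.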

Most competitive gamers already know all of this, which is why the top players will run at lower settings to boost framerates. Popular streamers will also use a secondary PC to handle the stream, to avoid compromising the main PC's performance (among other things). There's no substitute for practice, of course: even with the best hardware in the world, if you're only playing a few hours a week you're not going to keep up with the 30+ hour people. Still, making sure your hardware is up to snuff can definitely help.

You don't have to run out and buy the latest and greatest display and graphics card, of course, but next time you're looking at upgrading, I strongly recommend giving extra thought to your monitor. Even if you're not aiming for the top of the leaderboards, having a high-quality display and a fast graphics card definitely improves the overall experience.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.