PC graphics options explained

Understand what graphics options mean, and which to choose.

- Resolution

- Frames Per Second

- Vsync

- Adaptive Sync

- G-sync & Freesync

- Upscale & Downsample

- Nvidia DLSS

- AMD FSR

- Anti-aliasing

- Which AA?

- Bilinear and trilinear filtering

- Anisotropic filtering

- Graphics presets

- Ambient occlusion

- HDR (High Dynamic Range)

- Bloom

- Motion blur

- DOF (Depth of Field)

- Ray tracing

- Path tracing

- Sharpening

- Global illumination

- LoD (Level of Detail)

- FoV (Field of View)

- Gamma

Getting your graphics settings right for optimal performance can be difficult, especially if you've not managed to nab one of the best GPUs. Nowadays you can let your graphics card software do the tuning for you, and it'll usually do a fine job balancing your graphics settings for each individual game. But that's not really the PC gamer way, is it?

We tinker on our own terms, so we have to know what we're getting ourselves into when it comes to graphics settings.

If you're new to graphics tuning, this guide explains the major settings you need to know about and, without getting too technical, what they do. Understanding how it all works can help with troubleshooting when you run into performance issues. I'll also let you know how likely each of the settings below is to tank your framerate.

For the sections on anti-aliasing, anisotropic filtering, and post-processing that follow, I consulted with Nicholas Vining, technical director and lead programmer at Gaslamp Games (the Dungeons of Dredmor devs), as well as Cryptic Sea designer/programmer Alex Austin. I also received input from Nvidia regarding my explanation of texture filtering. Keep in mind that graphics rendering is much more complex than presented here.

What is monitor resolution?

A pixel is the most basic unit of a digital image, a tiny dot of color, and resolution is the number of pixel columns and pixel rows in an image or on your display. The most common display resolutions today are 1280 x 720 (720p), 1920 x 1080 (1080p), 2560 x 1440 (1440p), and 3840 x 2160 (4K). Those are 16:9 resolutions. If you have a display with a 16:10 aspect ratio, they'll be slightly different (1920 x 1200, 2560 x 1600, and so on), while ultrawide displays use resolutions such as 2560 x 1080 and 3440 x 1440.
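To see why resolution matters so much for performance, it helps to count the pixels. The short sketch below multiplies columns by rows for some of the resolutions listed above and reduces each to its aspect ratio; the function names are purely illustrative.

```python
from math import gcd

# Resolutions from the paragraph above: name -> (columns, rows)
RESOLUTIONS = {
    "720p": (1280, 720),
    "1080p": (1920, 1080),
    "1440p": (2560, 1440),
    "4K": (3840, 2160),
    "1920 x 1200": (1920, 1200),
    "3440 x 1440": (3440, 1440),
}

def pixel_count(width: int, height: int) -> int:
    """Pixels the GPU has to fill for every single frame."""
    return width * height

def aspect_ratio(width: int, height: int) -> str:
    """Reduce width:height to lowest terms, e.g. 1920 x 1080 -> '16:9'."""
    d = gcd(width, height)
    return f"{width // d}:{height // d}"

if __name__ == "__main__":
    base = pixel_count(*RESOLUTIONS["1080p"])
    for name, (w, h) in RESOLUTIONS.items():
        px = pixel_count(w, h)
        print(f"{name:>12}: {px:>9,} pixels, {aspect_ratio(w, h)}, {px / base:.2f}x 1080p")
```

4K works out to exactly four times the pixels of 1080p, and 1440p to roughly 1.78 times. Lowest terms can look unfamiliar: 16:10 reduces to 8:5, and a 3440 x 1440 ultrawide, usually marketed as 21:9, reduces to 43:18.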

What is FPS?

If you think of a game as a series of animation cels (still images representing single moments in time), the FPS is the number of images generated each second. It's not the same as the refresh rate, which is the number of times your display updates per second, and is measured in hertz (Hz). 1Hz is one cycle per second, so the two measurements are easy to compare: a 60Hz monitor updates 60 times per second, and a game running at 60 FPS should feed it new frames at the same rate.
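Because both figures are "per second," it's often more useful to flip them into milliseconds per frame, which is the time budget your GPU has to finish each image. A minimal sketch with illustrative function names:

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds available to produce each frame at a steady framerate."""
    return 1000.0 / fps

def refresh_interval_ms(hz: float) -> float:
    """Milliseconds between display refreshes; same formula, since 1Hz = once per second."""
    return 1000.0 / hz

if __name__ == "__main__":
    for rate in (30, 60, 120, 144):
        print(f"{rate:>3} FPS / {rate}Hz -> {frame_time_ms(rate):.2f} ms")
```

At 60 FPS the GPU has about 16.7 ms per frame; at 144 FPS it has under 7 ms.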

The more work you make your graphics card do to render bigger, prettier frames, the lower your FPS will be. If the framerate is too low, frames will be repeated and the game becomes uncomfortable to view: an ugly, stuttering world. Competitive players seek out high framerates to reduce input lag, even at the expense of screen tearing, while high-resolution early adopters may be satisfied with playable framerates at 1440p or 4K. The most common goal today is 1080p at 60 fps, though 1440p, 4K, and framerates above 120 fps are also desirable. A high refresh rate monitor (120–144Hz) with the framerate to match is ideal.

Because most games don't have a built-in benchmarking tool, the most important tool in your tweaking box is software that displays the current framerate. Steam has its own in-game FPS counter, tools such as ShadowPlay or FRAPS work fine in many games, or you can use utilities like RivaTuner for more options on what to show.
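Framerate overlays generally work from how long each frame took. Here is a minimal sketch of how an average FPS figure is derived and why it can hide stutter. It assumes you already have a list of per-frame times in milliseconds; capturing them is the tool's job and isn't shown, and the capture below is hypothetical.

```python
def average_fps(frame_times_ms: list[float]) -> float:
    """Frames shown divided by seconds elapsed over the capture."""
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)

def worst_frame_fps(frame_times_ms: list[float]) -> float:
    """Instantaneous framerate of the single slowest frame."""
    return 1000.0 / max(frame_times_ms)

if __name__ == "__main__":
    # Hypothetical capture: 99 smooth frames and one 50 ms hitch.
    capture = [16.7] * 99 + [50.0]
    print(f"average {average_fps(capture):.1f} FPS, "
          f"worst frame {worst_frame_fps(capture):.0f} FPS")
```

The average stays close to 59 FPS even though one frame took as long as a 20 FPS frame would. That is why a frame time graph, if your overlay offers one, can reveal stutter the FPS number hides.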

What is Vsync and screen tearing?

When a display's refresh cycle is out of sync with the game's rendering cycle, the screen can refresh during a swap between finished frames. The effect is a break in the image called a screen tear, where we're seeing portions of two or more frames at the same time. It is also our number one enemy after low framerate.

One solution to screen tearing is vertical sync (vsync). It’s usually an option in the graphics settings, and it prevents the game from messing with the display until it completes its refresh cycle, so that the frame swap doesn't occur in the middle of the display updating its pixels. Unfortunately, vsync causes its own problems, one being that it contributes to input lag when the game is running at a higher framerate than the display's refresh rate.

What is Adaptive Sync?

The other big problem with Vsync happens when the framerate drops below the refresh rate. If the framerate exceeds the refresh rate, Vsync locks it to the refresh rate: 60 FPS on a 60Hz display. That's fine, but if the framerate drops below the refresh rate, Vsync forces it to jump to another synchronized value: 30 FPS, for instance. If the framerate fluctuates above and below the refresh rate often, it causes stuttering. We'd much rather allow the framerate to sit at 59 than punch it down to 30.
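The jump from 59 to 30 FPS falls out of simple arithmetic. This minimal sketch assumes classic double-buffered Vsync and a perfectly steady render time. Triple buffering and real-world frame time variation behave differently. Under these assumptions, a finished frame can only appear on a refresh, so each frame effectively occupies a whole number of refresh intervals.

```python
import math

def vsync_display_rate(render_fps: float, refresh_hz: float) -> float:
    """Displayed framerate under double-buffered Vsync with a steady render time."""
    # Each frame needs refresh_hz / render_fps refresh intervals to render;
    # Vsync rounds that up to a whole number of intervals (at least one).
    intervals_per_frame = max(1, math.ceil(refresh_hz / render_fps))
    return refresh_hz / intervals_per_frame

if __name__ == "__main__":
    for fps in (120, 60, 59, 45, 25):
        print(f"renders at {fps} FPS -> shows {vsync_display_rate(fps, 60):.0f} FPS on 60Hz")
```

Anything between 30 and 59 FPS collapses to 30 on a 60Hz display. Anything above 60 is capped at 60.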

To solve this, Nvidia's Adaptive Vertical Synchronization disables Vsync anytime your framerate dips below the refresh rate. It can be enabled in the Nvidia control panel and I recommend it if you're using Vsync.
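The rule is simple enough to write down. Plain Vsync would drop a 59 FPS game to 30 on a 60Hz display, and adaptive Vsync avoids that by switching Vsync off below the refresh rate. This is a simplified model of that behavior, not Nvidia's driver code, and the function names are illustrative.

```python
def adaptive_vsync_on(render_fps: float, refresh_hz: float) -> bool:
    """Vsync stays on only while the game keeps up with the display."""
    return render_fps >= refresh_hz

def displayed_fps(render_fps: float, refresh_hz: float) -> float:
    if adaptive_vsync_on(render_fps, refresh_hz):
        return refresh_hz   # capped at the refresh rate, no tearing
    return render_fps       # Vsync off: no drop to 30, but tearing is possible again
```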

What is G-sync and Freesync?

The problem all stems from one thing: displays have a fixed refresh rate. But if the display's refresh rate could change with the framerate, we could eliminate screen tearing and, at the same time, the stuttering and input lag problems of Vsync. Of course, you need a compatible graphics card and display for this to work, and there are two technologies that do that: Nvidia has branded its technology G-sync, while AMD's efforts are called FreeSync.
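Here is a rough model of what variable refresh does: inside the monitor's supported range, the display refreshes whenever a new frame arrives. The 48–144Hz window below is a hypothetical example; real ranges vary by monitor. The frame-repeating branch sketches the idea behind AMD's Low Framerate Compensation rather than any vendor's exact algorithm. The model also assumes the maximum refresh rate is at least double the minimum, which guarantees a repeated frame lands inside the window.

```python
import math

def vrr_refresh_hz(render_fps: float, min_hz: float = 48.0, max_hz: float = 144.0) -> float:
    """Refresh rate a variable-refresh display would run at for a steady framerate.

    Assumes max_hz >= 2 * min_hz so that repeating frames always fits the window.
    """
    if render_fps >= max_hz:
        return max_hz              # can't refresh faster than the panel allows
    if render_fps >= min_hz:
        return render_fps          # refresh exactly when each frame is ready
    # Below the window: show each frame 2, 3, ... times to get back inside it.
    repeats = math.ceil(min_hz / render_fps)
    return render_fps * repeats
```

A game running at 100 FPS gets a 100Hz refresh instead of being snapped to a fixed rate, and 30 FPS is shown as each frame twice at 60Hz.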

It used to be the case that FreeSync monitors would only work in combination with an AMD GPU, and G-Sync monitors only with Nvidia GPUs. Nowadays, though, it is possible to find G-Sync Compatible FreeSync monitors, which work perfectly well with both AMD and Nvidia graphics cards. However, only Nvidia GPUs can use variable refresh rates on G-Sync displays.

Check our best monitors guide for further advice.

What is upscaling and downsampling?

Back in the day, games would offer a 'rendering resolution' setting that let you keep the display resolution the same while adjusting the resolution the game was rendered at. If the rendering resolution was lower than your display resolution, the image would be upscaled to fit your display.

If you rendered the game at a higher resolution than your display resolution, which is an option in Shadow of Mordor, the image would be downsampled (or 'downscaled').

Because it determines the number of pixels your GPU needs to render, resolution has the greatest effect on performance. This is why console games which run at 1080p often upscale from a lower rendering resolution—that way, they can handle fancy graphics effects while maintaining a smooth framerate.
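Because the cost scales with pixel count, a small change in render resolution goes a long way. This short sketch assumes the setting is expressed as a per-axis scale factor; games label it differently, some as a percentage and some as named quality modes. Rendering at two-thirds of 4K on each axis means drawing a 2560 x 1440 image, which is roughly 56% fewer pixels.

```python
def render_resolution(out_w: int, out_h: int, scale: float) -> tuple[int, int]:
    """Resolution actually rendered before upscaling to out_w x out_h."""
    return round(out_w * scale), round(out_h * scale)

def pixels_saved(scale: float) -> float:
    """Fraction of per-frame pixel work saved versus native; scale applies to both axes."""
    return 1.0 - scale ** 2

if __name__ == "__main__":
    for scale in (1.0, 2 / 3, 0.5):
        w, h = render_resolution(3840, 2160, scale)
        print(f"scale {scale:.2f}: render {w} x {h}, {pixels_saved(scale):.0%} fewer pixels")
```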

What is DLSS?

Nvidia's Deep Learning Super Sampling (DLSS) means that anyone using a GeForce RTX card gains the benefit of technology far superior to older anti-aliasing and upscaling techniques. To work, DLSS requires the AI-accelerating Tensor Cores that Nvidia has built into its RTX GPUs since the Turing architecture.

According to Nvidia, "DLSS leverages a deep neural network to extract multidimensional features of the rendered scene and intelligently combine details from multiple frames to construct a high-quality final image. DLSS uses fewer input samples than traditional techniques such as TAA, while avoiding the algorithmic difficulties such techniques face with transparency and other complex scene elements."

There have been several evolutions of Nvidia's DLSS, with the earliest version using a GPU-accelerated algorithm to upscale game graphics. It did a better and more efficient job than temporal anti-aliasing, so Nvidia's work continued.

While DLSS 1 was only slightly better at upscaling than traditional anti-aliasing, DLSS 2 brought new temporal feedback techniques, which delivered sharper image details and improved stability from frame to frame. Its upscaling used deep learning on top of TAA-style techniques, essentially combining pixel samples gathered across multiple frames to improve fidelity.

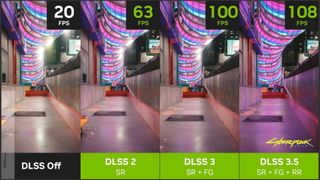

DLSS 3 combines DLSS Super Resolution and Nvidia Reflex with DLSS Frame Generation, and that last part, which is exclusive to Nvidia's 40-series GPUs, is the differentiating factor. The AI-powered frame generation relies on Ada Lovelace's Optical Flow Accelerator (OFA) as well as its fourth-generation Tensor Cores.

DLSS 3.5 adds Ray Reconstruction, which is available across the whole RTX family, not just the 40-series. It replaces the denoisers in the graphics pipeline with an AI-powered model that works alongside the traditional Super Resolution upscaling workflow.

It's this removal of the standard denoisers that delivers the slight performance increase, but the improvement in overall image fidelity is the starkest difference compared with previous methods.

What is FSR?

FidelityFX Super Resolution (FSR) is AMD's solution to upscaling. FSR 3 combines FSR upscaling with Fluid Motion Frames technology, which is AMD's answer to Nvidia's Frame Generation.

Essentially, Fluid Motion Frames (FMF) interpolates new frames based on existing frame data and inserts them between frames rendered using the full 3D pipeline, improving visual fluidity or smoothness. Running it alongside AMD's Anti-Lag+ feature keeps latency much lower than it would be otherwise.
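To see why frame generation needs motion estimation at all, consider the crudest possible in-between frame: a straight per-pixel blend of two rendered frames. This is deliberately not how FMF works. It's a toy that shows the problem motion analysis solves. Frames here are single rows of brightness values, and the function names are hypothetical.

```python
def blend_frames(prev: list[float], curr: list[float], t: float = 0.5) -> list[float]:
    """Naive generated frame: mix the two real frames pixel by pixel."""
    return [(1 - t) * a + t * b for a, b in zip(prev, curr)]

def presented_fps(rendered_fps: float, generated_per_rendered: int = 1) -> float:
    """Frames reaching the display when each rendered frame gets generated companions."""
    return rendered_fps * (1 + generated_per_rendered)

if __name__ == "__main__":
    # A bright object moves from pixel 1 to pixel 3 between two rendered frames.
    prev = [0, 255, 0, 0, 0]
    curr = [0, 0, 0, 255, 0]
    print(blend_frames(prev, curr))  # two half-bright ghosts instead of one object at pixel 2
```

Interpolation that tracks motion would instead place the object at pixel 2. Either way, generated frames raise the presented framerate (60 rendered plus 60 generated gives 120), not the rate at which the game responds to input, which is why a latency feature like Anti-Lag+ matters here.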

While Nvidia's RTX GPUs have dedicated optical flow accelerator blocks, AMD does all the calculations in the GPU's shaders using asynchronous compute. That means its performance depends on how much of the GPU's asynchronous compute capacity the game itself is already using.

Being software-based, FMF is more widely compatible than DLSS 3's Frame Generation, which is exclusive to Nvidia's 40-series. And with FSR 3.1 on the horizon, less fuzziness and flickering is expected from its application.

Some games will even allow the marriage of Nvidia's DLSS and AMD's FidelityFX super resolution tech.

What is anti-aliasing?

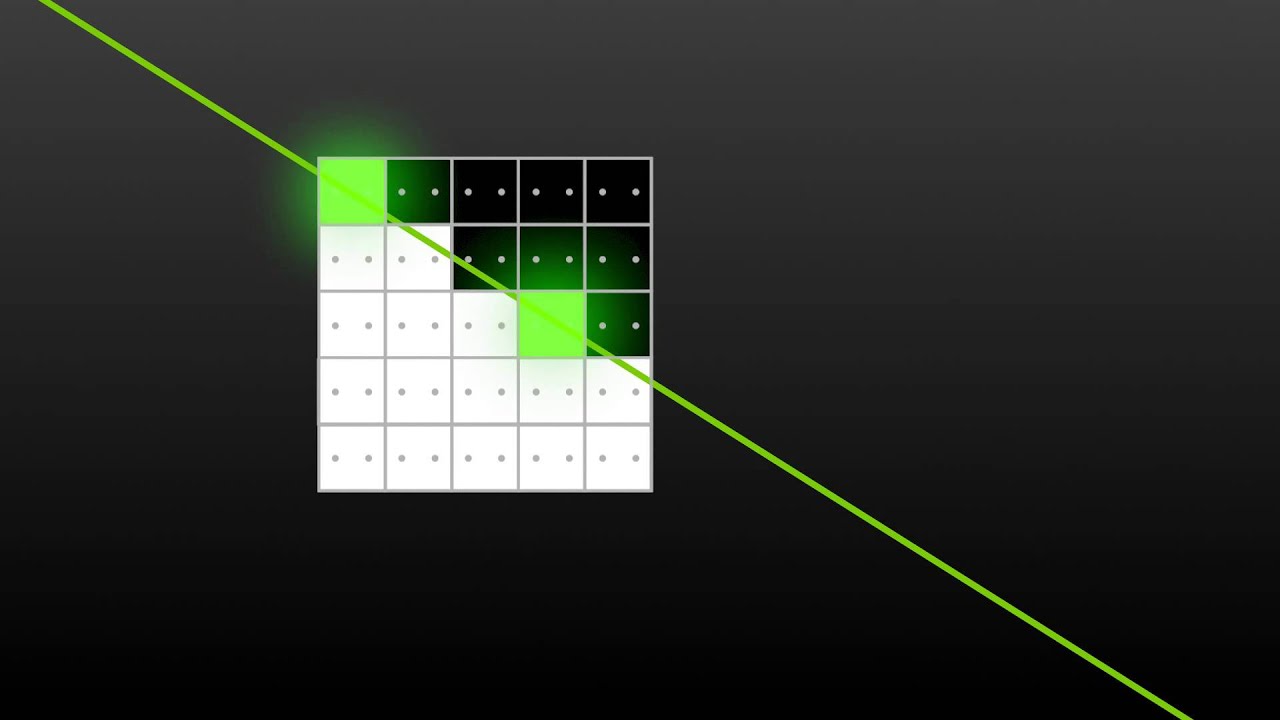

If you draw a diagonal line with square pixels, their hard edges create a jagged ‘staircase’ effect. This ugliness (among other artifacts) is called aliasing. If resolutions were much higher, it wouldn’t be a problem, but until display technology advances, we have to compensate with anti-aliasing.

There are many techniques for anti-aliasing, but supersampling (SSAA) is useful to explain the process. It works by rendering frames at a higher resolution than the display resolution, then squeezing them back down to size. On the previous page, you can see the anti-aliasing effect of downsampling Shadow of Mordor from 5120 x 2880 to 1440p.

Consider a pixel on a tile roof. It’s orange, and next to it is a pixel representing a cloudy sky, which is light and blueish. Next to each other, they create a hard, jagged transition from roof to sky. But if you render the scene at four times the resolution, that one orange roof pixel becomes four pixels. Some of those pixels are sky-colored and some are roof-colored. If we take the average of all four values, we get something in between. Do that to the whole scene and the transitions become softer.
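The roof-and-sky example translates almost directly into code. This minimal sketch of the averaging step assumes a 2x2 block of samples per display pixel (four times the pixels, as described above) and uses made-up RGB values for the roof and sky.

```python
Color = tuple[int, int, int]

ROOF: Color = (200, 90, 30)    # hypothetical orange roof tile
SKY: Color = (170, 200, 230)   # hypothetical pale blue sky

def downsample_2x2(image: list[list[Color]]) -> list[list[Color]]:
    """Average every 2x2 block of a high-resolution image into one display pixel."""
    out = []
    for y in range(0, len(image), 2):
        row = []
        for x in range(0, len(image[0]), 2):
            block = [image[y][x], image[y][x + 1], image[y + 1][x], image[y + 1][x + 1]]
            row.append(tuple(sum(px[c] for px in block) // 4 for c in range(3)))
        out.append(row)
    return out

# The roof line rendered at double width and height: 4 x 4 samples for 2 x 2 display pixels.
high_res = [
    [SKY, SKY, SKY, SKY],
    [SKY, SKY, ROOF, ROOF],
    [ROOF, ROOF, ROOF, ROOF],
    [ROOF, ROOF, ROOF, ROOF],
]
```

Running `downsample_2x2(high_res)` gives a top-right display pixel, the one straddling the roof line, of (185, 145, 130). That is an in-between color rather than pure roof or pure sky.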

That's the gist, at least, and while it looks very good, supersampling is extremely computationally expensive. You’re rendering each frame at a resolution two or more times higher than the one you’re playing at—even with our multiple high-end graphics cards, trying to run supersampling with a display resolution of 2560x1440 isn't practical. That’s why there are so many more efficient alternatives:

Multisampling (MSAA): More efficient than supersampling, but still demanding. This is typically the standard, baseline option in older games, and it's explained very simply in the video below.

Coverage Sampling (CSAA): Nvidia’s more efficient version of MSAA. You won't see this much anymore.

Custom-filter (CFAA): AMD’s more efficient version of MSAA. You also won't see this much anymore.

Fast Approximate (FXAA): Rather than analyzing the 3D models (as MSAA does, by looking at pixels on the edges of polygons), FXAA is a post-processing filter, meaning it applies to the whole scene after it has been rendered, and it's very efficient. It also catches edges inside textures, which MSAA misses. This is the default in many modern games because it has very little overhead, though it tends to miss a lot of jaggies.

Morphological (MLAA): Available with AMD cards, MLAA also skips the rendering stage and processes the frame, seeking out aliasing and smoothing it. As Nicholas Vining explains: "Morphological anti-aliasing looks at the morphology (read: the patterns) of the jaggies on the edges; for each set of jaggies, it computes a way of removing the aliasing which is pleasing to the eye. It does this by breaking down edges and jaggies into little sets of morphological operators, like Tetris blocks, and then uses a special type of blending for each Tetris block." MLAA can be enabled in the Catalyst control panel.

Enhanced Subpixel Morphological (SMAA): Another post-processing method, described as combining MLAA with MSAA and SSAA strategies. You can apply it with SweetFX, and many modern games natively support this.

Temporal (TAA or TXAA): TXAA was initially supported on Nvidia's Kepler and later GPUs, but more general forms of temporal anti-aliasing are now available and are typically just labeled TAA. TAA compares the previous frame with the current frame to look for edges and help remove jaggies. This is done through a variety of filters and can help reduce the 'crawling' motion on edges, which looks a bit like marching ants. It cannot, however, remove actual ants from inside your display. You should probably just throw that display out.

As Vining again explains: "The notion here is that we expect frames to look a lot like each other from frame to frame; the user doesn't move that much. Therefore, where things haven't moved that much, we can get extra data from the previous frame and use this to augment the information we have available to anti-alias with."
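Here is the "extra data from the previous frame" idea in miniature. Each frame samples a slightly different (jittered) spot inside a pixel that straddles an edge, and the result is blended into a running history. This is a sketch under stated assumptions. The 4-frame jitter pattern and the blend weight are hypothetical. Real TAA also reprojects the history using motion vectors and clamps it to reject stale data, and both steps are omitted here.

```python
# Hypothetical 4-frame jitter pattern: where inside the pixel each frame samples (0..1).
JITTER_OFFSETS = (0.125, 0.375, 0.625, 0.875)

def taa_resolve(history: float, current: float, alpha: float = 0.1) -> float:
    """Blend this frame's sample into the accumulated result (exponential moving average)."""
    return (1 - alpha) * history + alpha * current

def edge_pixel(coverage: float, frames: int = 200, alpha: float = 0.1) -> float:
    """Brightness of a pixel whose left `coverage` fraction is a bright (1.0) object.

    Any single frame samples one point, so it sees either 1.0 or 0.0; accumulating
    jittered samples over time converges toward the true partial coverage.
    """
    history = 0.0
    for frame in range(frames):
        offset = JITTER_OFFSETS[frame % len(JITTER_OFFSETS)]
        sample = 1.0 if offset < coverage else 0.0
        history = taa_resolve(history, sample, alpha)
    return history
```

`edge_pixel(0.25)` settles between roughly 0.21 and 0.29, depending on where in the jitter cycle you stop. That is close to the true 25% coverage, whereas a single frame would show a hard 1.0 or 0.0. The leftover wobble hints at the flicker real TAA has to manage.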

Multi-Frame (MFAA): Introduced with Nvidia's Maxwell GPUs. Whereas MSAA samples in set patterns, MFAA allows for programmable sample patterns. Nvidia does a good job of explaining MSAA and MFAA simply in the video below.

Anti-aliasing settings almost always include a series of values: 2x, 4x, 8x, and so on. The numbers refer to the number of color samples being taken, and in general, the higher the number, the more accurate (and computationally expensive) the anti-aliasing will be.

Then there's the special case of the 'Q.' CSAA attempts to achieve a quality better than or equal to MSAA with fewer color samples, so 8xCSAA actually only takes four color samples. The other four are coverage samples, explained here. 8QxCSAA, however, bumps the number of color samples back up to eight for increased accuracy. You'll rarely encounter CSAA these days, so let's call that a fun fact.

Which anti-aliasing should I choose?

It depends on your GPU, your preference, and what kind of performance you're after. If framerate is an issue, however, the choice is usually obvious: FXAA is very efficient. If you've got an RTX card, and the game you're playing supports it, give DLSS a try—you paid for it, and it's top of the line. In older games, you'll probably have to do a bit of testing to get the combination of look and performance you want. If you have the hardware to do it, you can also try supersampling instead of using the built-in options, which usually works. Overriding settings in other ways, however, isn't a sure thing.

What are bilinear and trilinear filtering?

Texture filtering deals with how a texture—a 2D image (and other data)—is displayed on a 3D model. A pixel on a 3D model won’t necessarily correspond directly to one pixel on its texture (called a ‘texel’ for clarity), because you can view the model at any distance and angle. So, when we want to know the color of a pixel, we find the point on the texture it corresponds to, take a few samples from nearby texels, and average them. The simplest method of texture filtering is bilinear filtering, and that’s all it does: when a pixel falls between texels, it samples the four nearest texels to find its color.
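Bilinear filtering takes only a few lines: find the four texels around the sample point and weight each by how close the point is to it. This sketch assumes a grayscale texture stored as a list of rows, with texel centers at whole-number coordinates. Real GPUs use normalized coordinates with centers offset by half a texel.

```python
import math

def bilinear_sample(texture: list[list[float]], u: float, v: float) -> float:
    """Sample a grayscale texture at continuous texel coordinates (u = column, v = row)."""
    h, w = len(texture), len(texture[0])
    # Clamp so the 2x2 neighbourhood stays inside the texture.
    u = min(max(u, 0.0), w - 1.0)
    v = min(max(v, 0.0), h - 1.0)
    x0, y0 = int(math.floor(u)), int(math.floor(v))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = u - x0, v - y0  # how far the point sits toward the right / lower texels
    top = texture[y0][x0] * (1 - fx) + texture[y0][x1] * fx
    bottom = texture[y1][x0] * (1 - fx) + texture[y1][x1] * fx
    return top * (1 - fy) + bottom * fy
```

Sampling a 2x2 texture of `[[0, 100], [100, 200]]` exactly between all four texels, at (0.5, 0.5), returns their average, 100.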

Introduce mipmapping, and you have a new problem. Say the ground you’re standing on is made of cracked concrete. If you look straight down, you’re seeing a big, detailed concrete texture. But when you look way off into the distance, where this road recedes toward the horizon, it wouldn’t make sense to sample from a high-resolution texture when we’re only seeing a few pixels of road. To improve performance (and prevent aliasing, Austin notes) without losing much or any quality, the game uses a lower-resolution texture—called a mipmap—for distant objects.

When looking down this concrete road, we don’t want to see where one mipmap ends and another begins, because there would be a clear jump in quality. Bilinear filtering doesn’t interpolate between mipmaps, so the jump is visible. This is solved with trilinear filtering, which smooths the transition between mipmaps by taking samples from both.
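Here is a sketch of mipmaps plus trilinear blending. To keep it short, it uses a one-dimensional texture, so "bilinear" becomes plain linear interpolation within a level; the blend between two levels is the part that makes it trilinear. The mip level (LOD) is passed in directly as an assumption. A GPU would work it out from how many texels fall under one screen pixel.

```python
import math

def build_mips(texels: list[float]) -> list[list[float]]:
    """Mip chain for a power-of-two texture: each level averages pairs from the one above."""
    levels = [list(texels)]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        levels.append([(prev[i] + prev[i + 1]) / 2 for i in range(0, len(prev) - 1, 2)])
    return levels

def linear_sample(level: list[float], u: float) -> float:
    """Linear filtering within one mip level; u runs 0..1 across the texture."""
    n = len(level)
    x = min(max(u * n - 0.5, 0.0), n - 1.0)  # texel centres at (i + 0.5) / n
    x0 = int(math.floor(x))
    x1 = min(x0 + 1, n - 1)
    f = x - x0
    return level[x0] * (1 - f) + level[x1] * f

def trilinear_sample(levels: list[list[float]], u: float, lod: float) -> float:
    """Sample the two nearest mip levels and blend by the fractional LOD."""
    lod = min(max(lod, 0.0), len(levels) - 1.0)
    lo = int(math.floor(lod))
    hi = min(lo + 1, len(levels) - 1)
    f = lod - lo
    return linear_sample(levels[lo], u) * (1 - f) + linear_sample(levels[hi], u) * f
```

With a striped texture (`[0, 255] * 4`), a point that reads 0 at mip level 0 and 127.5 at level 1 reads 63.75 at LOD 0.5. Trilinear filtering fades between the two levels instead of jumping, while bilinear filtering would snap to one level or the other.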

What is anisotropic filtering?

Trilinear filtering helps, but the ground still looks all blurry. This is why we use anisotropic filtering, which significantly improves texture quality at oblique angles.

To understand why, visualize a square window—a pixel of a 3D model—with a brick wall directly behind it as our texture. Light is shining through the window, creating a square shape on the wall. That's our sample area for this pixel, and it's equal in all directions. With bilinear and trilinear filtering, this is how textures are always sampled.

If the model is also directly in front of us, perpendicular to our view, that's fine, but what if it's tilted away from us? If we're still sampling a square, we're doing it wrong, and everything looks blurry. Imagine now that the brick wall texture has tilted away from the window. The beam of light transforms into a long, skinny trapezoid covering much more vertical space on the texture than horizontal. That's the area we should be sampling for this pixel, and in the form of a rough analogy, this is what anisotropic filtering takes into account. It scales the mipmaps in one direction (like how we tilted our wall) according to the angle we're viewing the 3D object.

This is a difficult concept to grasp, and I have to admit that my analogy does little to explain the actual implementation. If you're interested in more detail, here's Nvidia's explanation.

What do the numbers mean?

Anisotropic filtering isn't common in modern settings menus anymore, but where it does appear, it generally comes in 2x, 4x, 8x, and 16x flavors. Nvidia describes these sample rates as referring to the steepness of the angle the filtering will be applied to:

"AF can function with anisotropy levels between 1 (no scaling) and 16, defining the maximum degree which a mipmap can be scaled by, but AF is commonly offered to the user in powers of two: 2x, 4x, 8x, and 16x. The difference between these settings is the maximum angle that AF will filter the texture by. For example: 4x will filter textures at angles twice as steep as 2x, but will still apply standard 2x filtering to textures within the 2x range to optimize performance. There are subjective diminishing returns with the use of higher AF settings because the angles at which they are applied become exponentially rarer."
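To put numbers on the analogy: a pixel's footprint on a tilted texture is long and thin. This minimal sketch is loosely modeled on the approach suggested in the OpenGL EXT_texture_filter_anisotropic specification; actual GPUs use their own approximations. It takes several probes along the footprint's long axis, capped by the AF level, and picks the mip level from each probe's share of the footprint rather than from the whole length.

```python
import math

def anisotropic_params(major_axis: float, minor_axis: float, max_aniso: int = 16) -> tuple[int, float]:
    """Probe count and mip LOD for one pixel's footprint on a texture.

    major_axis / minor_axis: footprint length in texels along its long and short sides.
    """
    ratio = min(major_axis / minor_axis, max_aniso)  # the AF level caps the stretch
    probes = math.ceil(ratio)
    # Each probe only covers major_axis / ratio texels, so a sharper mip level suffices.
    lod = max(0.0, math.log2(major_axis / ratio))
    return probes, lod
```

Consider a steep floor where one pixel covers 16 texels lengthwise and 1 across. Plain trilinear filtering has to pick mip level log2(16) = 4 to avoid aliasing, which is the blur you see on distant ground. 4x AF brings that down to level 2, and 16x to level 0 (the full-resolution texture), at the cost of 16 probes.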

Anisotropic filtering isn't nearly as much of a hit as anti-aliasing, which is why it rarely appears in menus these days: it's just on, no matter what. Using the BioShock Infinite benchmarking tool, I only saw average FPS drop by 6 fps between bilinear filtering and 16x anisotropic filtering. That's not much of a difference considering the huge quality increase. High texture quality is pointless with poor filtering.

Which graphic quality preset should I choose?

What quality settings actually do will vary between games. In general, they raise and lower the complexity of game assets and effects, but going from 'low' to 'high' can change a bunch of variables. Increasing the shadow quality, for instance, might increase the shadow resolution, enable soft shadows as well as hard shadows, increase the distance at which shadows are visible, and so on. It can have a significant effect on performance.

There's no quick method for determining the best quality settings for your system. They just need to be tested, and I recommend starting with Nvidia's or AMD's suggestions, then raising the quality of textures, lighting, and shadows while checking your framerate as you go.

What is ambient occlusion?

Ambient lighting exposes every object in a scene to a uniform light—think of a sunny day, where even in the shadows, a certain amount of light is scattered about. It's paired with directional light to create depth, but on its own it looks flat.

Ambient occlusion attempts to improve the effect by determining which parts of the scene shouldn't be exposed to as much ambient lighting as others. It doesn't cast hard shadows like a directional light source; rather, it darkens interiors and crevices, adding soft shading.

Screen space ambient occlusion (SSAO) is an approximation of ambient occlusion used in real-time rendering, and has become commonplace in games in the past few years—it was first used in Crysis. Sometimes, it looks really dumb, like everything has a dark 'anti-glow' around it. Other times, it's effective in adding depth to a scene. All major engines support it, and its success will vary depending on the game and implementation.
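This heavily simplified sketch shows the screen-space idea. It looks only at the depth buffer and darkens a pixel according to how many of its neighbors sit noticeably closer to the camera. Real SSAO samples a 3D hemisphere around each pixel and adds range checks and blurring. The radius and bias values here are arbitrary assumptions.

```python
def ssao(depth: list[list[float]], radius: int = 1, bias: float = 0.05) -> list[list[float]]:
    """Crude screen-space AO from a depth buffer (bigger depth = further from the camera).

    Returns an ambient factor per pixel in [0, 1]; 1.0 means nothing nearby occludes it.
    """
    h, w = len(depth), len(depth[0])
    result = []
    for y in range(h):
        row = []
        for x in range(w):
            occluders = samples = 0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    if dx == 0 and dy == 0:
                        continue
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w:
                        samples += 1
                        if depth[ny][nx] < depth[y][x] - bias:  # neighbour is in front
                            occluders += 1
            row.append(1.0 - occluders / samples)
        result.append(row)
    return result
```

With a nearer wall (depth 5) in the left column and floor (depth 10) elsewhere, the floor pixel beside the wall drops to 0.625 while open floor stays at 1.0. Because this toy has no range check, it would also darken a distant background behind a nearby object, which is one source of the dark halo described above.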

Improvements on SSAO include HBAO+ and HDAO.

What is HDR?

High dynamic range was all the rage in photography a few years ago. The range it refers to is the range of luminosity in an image—that is, how dark and bright it can be. The goal is for the darkest areas to be as detailed as the brightest areas. A low-dynamic-range image might show lots of detail in the light part of a room, but lose everything in the shadows, or vice versa.

In the past, the range of dark to light in games was limited to 8 bits per color channel (only 256 values), but as of DirectX 10, 128-bit high dynamic range rendering (HDRR), with 32 bits per channel, is possible. HDR is still limited by the contrast ratio of displays, though. There's no standard method for measuring this, but LED displays generally advertise a contrast ratio of 1000:1.
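On a standard 8-bit display, an HDR renderer has to squeeze its wide range back down at the end, a step called tone mapping. The sketch below uses the classic Reinhard operator, L / (1 + L), as a stand-in; games use a variety of tone-mapping curves. The 2.2 gamma encode is an assumed, common approximation of display encoding, and the luminance values are hypothetical.

```python
def reinhard(luminance: float) -> float:
    """Compress an unbounded HDR luminance into the 0-1 range."""
    return luminance / (1.0 + luminance)

def to_8bit(value: float, gamma: float = 2.2) -> int:
    """Encode a linear 0-1 value as one of 256 display levels (values outside clip)."""
    value = min(max(value, 0.0), 1.0)
    return round(255 * value ** (1.0 / gamma))

if __name__ == "__main__":
    # Hypothetical scene luminances, from deep shadow up to a bright lamp.
    for lum in (0.01, 0.5, 1.0, 4.0, 100.0):
        clipped = to_8bit(lum)            # no tone mapping: everything above 1.0 clips
        mapped = to_8bit(reinhard(lum))
        print(f"{lum:>6}: clipped {clipped:>3}, tone mapped {mapped:>3}")
```

Without tone mapping, a sunlit wall (4.0) and a lamp (100.0) both clip to 255 and lose all detail. With it, they stay distinguishable.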

What is bloom?

The famously overused bloom effect attempts to simulate the way bright light can appear to spill over edges, a visual cue that makes light sources seem brighter than they are (your display can only get so bright). It can work, but too often it's applied with a thick brush, making distant oil lamps look like nuclear detonations. Thankfully, most games offer the option to turn it off.

The screenshot above, which has now blinded you, is from Syndicate, which probably includes the most hilarious overuse of the effect.
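Bloom is usually built from three steps: isolate the brightest parts of the frame, blur them, and add the blur back on top. Here is a one-dimensional toy version. The threshold, radius, and strength values are made-up parameters, and real implementations work in 2D, often on downscaled copies of the frame.

```python
def bloom(pixels: list[float], threshold: float = 1.0, radius: int = 2,
          strength: float = 0.5) -> list[float]:
    """Toy 1D bloom on linear brightness values; pixels beyond the edges count as black."""
    # 1. Bright pass: keep only the part of each pixel above the threshold.
    bright = [max(p - threshold, 0.0) for p in pixels]
    # 2. Box blur the bright pass so it spreads into neighbouring pixels.
    n = len(pixels)
    blurred = []
    for i in range(n):
        window = bright[max(0, i - radius): min(n, i + radius + 1)]
        blurred.append(sum(window) / (2 * radius + 1))
    # 3. Add the glow back on top of the original image.
    return [p + strength * b for p, b in zip(pixels, blurred)]
```

For `[0.2, 0.2, 0.2, 5.0, 0.2, 0.2, 0.2]`, the single 5.0 "lamp" spills 0.4 of extra brightness onto the two pixels on each side. Cranking up strength and radius is the thick brush that turns oil lamps into detonations.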

What is motion blur?

Motion blur is pretty self-explanatory: it's a post-processing filter which simulates the film effect caused when motion occurs while a frame is being captured, causing streaking. Many gamers I've seen in forums or spoken to prefer to turn it off: not only because it affects performance, but because it just isn't desirable. I've seen motion blur used effectively in some racing games, but I'm also in the camp that usually turns it off. It doesn't add enough for me to bother with any performance decrease.
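As a post-process, motion blur smears each pixel along the direction things moved. This one-dimensional sketch assumes a single piece of content moving right by a whole number of pixels during the frame. Real implementations read a per-pixel velocity buffer and take a handful of samples along each pixel's own motion, and those extra samples are where the performance cost comes from.

```python
def motion_blur_1d(row: list[float], velocity: int) -> list[float]:
    """Simulate a camera exposure for content moving right by `velocity` pixels.

    `row` is the frame at the end of the exposure. Earlier in the exposure the
    content sat further left, so pixel x also saw what now sits at x+1 ... x+velocity.
    """
    n = len(row)
    out = []
    for x in range(n):
        taps = [row[min(x + k, n - 1)] for k in range(velocity + 1)]
        out.append(sum(taps) / len(taps))
    return out
```

A single bright pixel at position 4 that moved 2 pixels becomes a one-third-brightness streak across pixels 2 to 4: exactly the positions it passed through.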

What is depth of field?

In photography, depth of field refers to the distance between the closest and furthest points which appear in focus. If I'm taking a portrait with a shallow DOF, for instance, my subject's face might be sharp while the back of her hair begins to blur, and everything behind her is very blurred. In a deep DOF photo, on the other hand, her nose might be as sharp as the buildings behind her.

In games, DOF generally just refers to the effect of blurring things in the background. Like motion blur, it pretends our 'eyes' in the game are cameras, and creates a film-like quality. It can also affect performance significantly depending on how it's implemented. On the LPC (PC Gamer's Large Pixel Collider test rig), the difference was negligible, but on the more modest PC at my desk (Core i7 @ 3.47 GHz, 12GB RAM, Radeon HD 5970) my average framerate in BioShock Infinite dropped by 21 fps when going from regular depth of field to Infinite's DX11 'Diffusion Depth of Field.'
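One common way to drive the blur is to estimate, per pixel, how large a blur circle a real lens would produce (its circle of confusion) and blur by that much. The sketch below applies the thin-lens formula from photography. The 50mm lens and distances in the example are illustrative, and how a given game converts this into a blur radius in pixels is engine-specific.

```python
def circle_of_confusion_mm(focal_mm: float, f_number: float,
                           focus_dist_mm: float, subject_dist_mm: float) -> float:
    """Blur-circle diameter on the sensor for a point at subject_dist_mm (thin-lens model).

    c = A * |S2 - S1| / S2 * f / (S1 - f), with aperture diameter A = f / N,
    S1 = focus distance, S2 = subject distance.
    """
    aperture = focal_mm / f_number
    return (aperture * abs(subject_dist_mm - focus_dist_mm) / subject_dist_mm
            * focal_mm / (focus_dist_mm - focal_mm))
```

Focused at 2m with a 50mm lens at f/2, a background 10m away gets a blur circle of about 0.51mm. Stopping down to f/16 shrinks it eightfold, which is the difference between the shallow and deep depth of field described above.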

What is ray tracing?

Ray tracing is a method for simulating light in a game. It means that rather than baking lighting onto game models, the light rays are dynamic and react to moving objects. It's a fantastic way to make a scene look more realistic, with better reflections and refractions, as well as shadows and lighting in general, but it can come at a high cost to your fps.
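The core operation in ray tracing is asking where a ray first hits something; shadows, reflections, and refractions are all built from that question. Here is a minimal sketch for a single sphere, including a shadow ray that checks whether anything blocks the path to a light. Real renderers test huge numbers of triangles using acceleration structures and dedicated RT hardware.

```python
import math

Vec = tuple[float, float, float]

def sub(a: Vec, b: Vec) -> Vec:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def ray_sphere(origin: Vec, direction: Vec, center: Vec, radius: float):
    """Distance along a ray to the nearest hit on a sphere, or None for a miss.

    direction must be unit length. Solves |origin + t*direction - center|^2 = radius^2.
    """
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:          # ignore hits behind (or at) the ray origin
            return t
    return None

def in_shadow(point: Vec, light_pos: Vec, center: Vec, radius: float) -> bool:
    """Shadow ray: does the sphere sit between this point and the light?"""
    to_light = sub(light_pos, point)
    dist = math.sqrt(dot(to_light, to_light))
    direction = (to_light[0] / dist, to_light[1] / dist, to_light[2] / dist)
    t = ray_sphere(point, direction, center, radius)
    return t is not None and t < dist
```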

Graphics card manufacturers have been working on ways to improve ray tracing for some time, and to great effect. Combining it with Nvidia's DLSS and Frame Generation tech, it's much easier to get games running at higher fps today with ray tracing on.

What is path tracing?

Path tracing is a technique for amping up the lighting in a game scene. It utilises ray tracing along with other methods to achieve dynamic lighting and reflections. The more advanced the path tracing, the closer a game can get to photorealism. As graphics cards become more powerful, path tracing becomes much more common and viable. Of course, that level of mathematical complexity means your GPU will be under a lot of strain, so use it with caution.
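Under the hood, path tracing is Monte Carlo integration: fire random rays, average what they find, and repeat at each bounce. This one-bounce sketch uses a hypothetical scene: a point on the floor at the foot of an infinitely tall wall, under a uniformly bright sky. The exact answer there is known (pi / 2), so you can watch the noise shrink as samples per pixel go up.

```python
import math
import random

def estimate_sky_light(samples: int, seed: int = 0) -> float:
    """Monte Carlo estimate of sky light reaching a floor point next to a tall wall.

    The sky has radiance 1 everywhere; the wall hides every direction with x < 0.
    Each sample picks a uniformly random direction on the hemisphere above the floor.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(samples):
        z = rng.random()                     # cos(theta) is uniform for uniform hemisphere sampling
        phi = 2.0 * math.pi * rng.random()
        x = math.sqrt(1.0 - z * z) * math.cos(phi)
        if x >= 0.0:                         # the ray escapes to the sky
            total += z * 2.0 * math.pi       # radiance * cos(theta) / pdf, pdf = 1 / (2 * pi)
    return total / samples

if __name__ == "__main__":
    for spp in (4, 64, 4096):
        print(f"{spp:>5} samples: error {abs(estimate_sky_light(spp) - math.pi / 2):.3f}")
```

Low sample counts give noisy answers that differ from pixel to pixel. That noise is why real-time path tracing leans so heavily on denoisers and upscalers.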

What is image sharpening?

Image sharpening does exactly what it says on the tin. It makes the game look sharper. It's easier to spot around the edges of models and wherever textures are applied. It's considered a post-processing effect and doesn't tend to get in the way where performance is concerned.
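The classic recipe is an unsharp mask: compare each pixel with a blurred version of its surroundings and exaggerate the difference. Here is a 1D sketch with an arbitrary strength parameter. Filters shipped in games, such as AMD's Contrast Adaptive Sharpening, add safeguards like adjusting strength to local contrast.

```python
def sharpen(pixels: list[float], amount: float = 0.5) -> list[float]:
    """Unsharp mask on 0-1 brightness values: push each pixel away from its local average."""
    n = len(pixels)
    out = []
    for i in range(n):
        left = pixels[max(i - 1, 0)]
        right = pixels[min(i + 1, n - 1)]
        blurred = (left + pixels[i] + right) / 3
        value = pixels[i] + amount * (pixels[i] - blurred)
        out.append(min(max(value, 0.0), 1.0))  # keep the result displayable
    return out
```

On an edge from 0.2 to 0.8, the dark side dips to 0.1 and the bright side rises to 0.9, so the edge looks crisper. Push `amount` too far and those overshoots become visible halos.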

What is global illumination?

If you look around, you'll notice that some surfaces are lit with light that isn't necessarily from direct sources. Global illumination controls all the bounced and otherwise indirect light that permeates game worlds. It's nowhere near as graphically intensive when baked in with lightmapping, though dynamic global illumination will likely make your GPU suffer.

What is level of detail?

Games use LoD mapping to control how detailed the models in a scene are. The further away something is from the camera, the lower its LoD model, and the fewer polygons it uses. The level of detail setting dictates how close the camera has to be to an object before a higher-detail model is used. With a lower LoD setting, low-polygon models will be used for closer objects too, rather than just those in the distance.

It has the potential to free up some frames. GPUs nowadays don't tend to have too much trouble with polygons, but it often depends on how well the devs have optimised the game models. In Cities Skylines 2, for example, setting the LoD to very low gave me a boost of almost 10 fps.

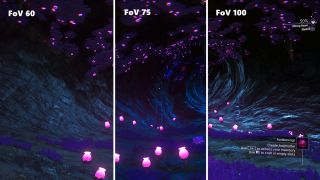
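Under the hood, LoD usually comes down to a table of switch distances. This sketch uses made-up distances and model names, and it treats the Level of Detail setting as a multiplier on those distances; games expose and implement the setting differently.

```python
# Hypothetical LoD table: (maximum distance in metres, model to draw)
LOD_LEVELS = [(20.0, "LOD0 (full detail)"), (60.0, "LOD1"), (150.0, "LOD2")]
FALLBACK = "LOD3 (lowest detail)"

def pick_lod(distance: float, detail_scale: float = 1.0) -> str:
    """Choose which version of a model to draw at this distance from the camera.

    detail_scale stands in for the in-game setting: lower values shrink every
    switch distance, so simpler models kick in closer to the camera.
    """
    for max_distance, model in LOD_LEVELS:
        if distance <= max_distance * detail_scale:
            return model
    return FALLBACK
```

At the default scale, a building 10m away is drawn at full detail. Drop the setting to a quarter, and that same building is already using the simpler LOD1 model.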

What is field of view?

Essentially, a higher field of view means you're able to see more of the scene in front of you. It's locked in some competitive fps games since increasing it can give players a distinct advantage: bringing what would be their peripheral vision into view. That said, the higher the FoV the more likely you'll have to deal with the 'fisheye' effect. A wider gaming monitor might call for an increased field of view, to account for the stretch.
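Why a wider monitor changes things is easiest to see with the standard perspective relationship between vertical and horizontal FoV. This sketch assumes "Hor+" scaling, where the vertical FoV stays fixed and the horizontal view widens with the aspect ratio. Games differ in whether their slider sets horizontal or vertical FoV and in how they handle ultrawides.

```python
import math

def horizontal_fov(vertical_fov_deg: float, width: int, height: int) -> float:
    """Horizontal FoV in degrees for a fixed vertical FoV and a given screen shape."""
    v = math.radians(vertical_fov_deg)
    h = 2 * math.atan(math.tan(v / 2) * width / height)
    return math.degrees(h)

if __name__ == "__main__":
    vertical = 59.0  # hypothetical vertical FoV, roughly 90 degrees horizontal at 16:9
    for w, h in ((1920, 1080), (3440, 1440)):
        print(f"{w} x {h}: {horizontal_fov(vertical, w, h):.1f} degrees horizontal")
```

The same vertical view that spans about 90 degrees across a 16:9 screen spans roughly 107 degrees on a 3440 x 1440 ultrawide. Because the wider image has to squeeze more of the scene onto a flat screen, stretching toward the edges becomes more noticeable.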

What is gamma?

All you need to know about gamma in your game settings is that increasing it raises the overall brightness of the scene: it makes shadowy scenes lighter, while lowering it makes scenes darker. As a post-processing effect, it won't tank your framerate if you want to turn it up, so don't feel bad about increasing it in horror games if you're feeling delicate. Just know that a super high gamma can make a game look washed out, while a low gamma will leave you in the dark.
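Many brightness or gamma sliders apply a power curve to each pixel's 0–1 value. Here is a sketch assuming that form; exact curves vary by game.

```python
def apply_gamma(value: float, gamma: float) -> float:
    """Power-curve brightness adjustment for a 0-1 pixel value.

    gamma > 1 lifts shadows and mid-tones; gamma < 1 darkens them.
    Pure black (0.0) and pure white (1.0) are unchanged either way.
    """
    return value ** (1.0 / gamma)
```

With gamma at 2.0, a near-black 0.1 rises to about 0.32 and mid-gray 0.5 to about 0.71, while white stays at 1.0. That bunching of the darker tones toward the top is the washed-out look.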

Screw sports, Katie would rather watch Intel, AMD and Nvidia go at it. Having been obsessed with computers and graphics for three long decades, she took Game Art and Design up to Masters level at uni, and has been rambling about games, tech and science—rather sarcastically—for four years since. She can be found admiring technological advancements, scrambling for scintillating Raspberry Pi projects, preaching cybersecurity awareness, sighing over semiconductors, and gawping at the latest GPU upgrades. Right now she's waiting patiently for her chance to upload her consciousness into the cloud.

- Tyler Wilde, Editor-in-Chief, US
