Intel details how it could make Xe GPUs not suck for DX12 games

Existing DirectX 12 techniques mean Intel's new Xe GPU could deliver higher performance when working with CPU graphics

An online GDC talk from Intel developer Allen Hux has detailed how game devs could use existing DirectX 12 techniques to get Intel's Xe discrete and integrated GPUs working together for the greater good. The greater good is higher frame rates in your games, by the way.

In actual fact the techniques Hux introduces would work for any discrete graphics card when paired up with an Intel processor, maybe even an AMD chip with some of that Vega silicon inside it too.

So, an AMD Radeon or Nvidia GeForce GPU could happily marry up with the otherwise redundant graphics chip inside your Core i7 processor. That would offload some of the computational grunt work from your CPU and give your games a boost.

Since Intel announced its intentions to create a discrete graphics card, ostensibly to rival AMD and Nvidia in the gaming GPU space, there has been speculation about whether it might use the ubiquity of its CPU-based graphics silicon to augment the power of its Xe GPUs. That seemed to be confirmed when Intel's Linux driver team started work on patches to enable discrete and integrated Intel GPUs to work together last year.

Given that the Intel Xe DG1 graphics card that got a showing at CES in January is a pretty low-power sorta thing, and the Tiger Lake laptops it's likely to drop into will have similarly low-spec gaming capabilities, any sort of performance boost Intel can get will help. Literally anything.

Though that is, of course, dependent on any game developer actually putting in the effort to make their game code function between a pair of different GPUs. And that's not exactly a given however simple a code tweak it might be to enable Intel's wee multi-adapter extension…

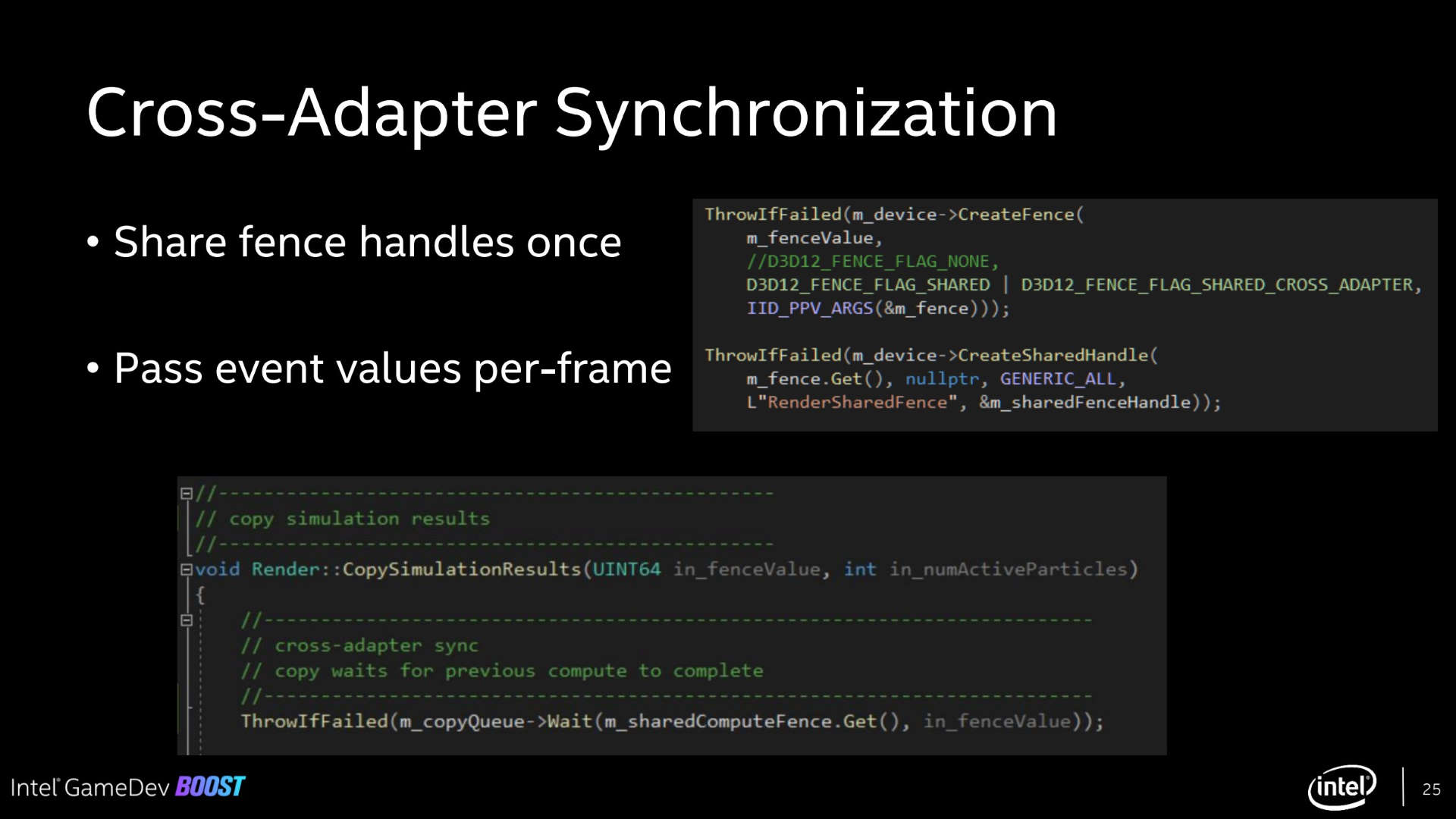

Essentially the multi-GPU tweak being introduced here uses the existing bits of DirectX 12 that allow for different graphics silicon to work together, where the traditional SLI and CrossFire tech mostly required identical graphics cards to deliver any performance boost.


It looks like super-complicated stuff, and way beyond the ken of a simple fellow such as myself, but the idea is to use the Explicit Multi-Adapter support already in DX12 to allow a developer to carry out compute and render workloads on different parts of the system.
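
To make the idea a little less abstract, here is a minimal sketch of the first decision such a game would have to make: which adapter renders and which one picks up the spare compute work. This is a toy model in Python, not Hux's code and not the DirectX 12 API. The `Adapter` class, its fields, and `assign_roles` are all hypothetical names invented for illustration. In a real D3D12 application the adapter list would come from DXGI enumeration (for example `IDXGIFactory1::EnumAdapters1`), with one device created per adapter.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Adapter:
    """Hypothetical stand-in for what adapter enumeration would report."""
    name: str
    dedicated_memory_mb: int
    is_integrated: bool


def assign_roles(adapters: List[Adapter]) -> Tuple[Adapter, Optional[Adapter]]:
    """Return (render_adapter, compute_adapter).

    Assumption: the discrete adapter with the most dedicated memory renders,
    and an integrated adapter, if present, takes the offloaded compute work.
    With no discrete adapter there is nothing to offload to, so the compute
    role is left empty and the game runs single-adapter as usual.
    """
    if not adapters:
        raise ValueError("no adapters found")

    discrete = [a for a in adapters if not a.is_integrated]
    integrated = [a for a in adapters if a.is_integrated]

    if discrete:
        render = max(discrete, key=lambda a: a.dedicated_memory_mb)
        compute = integrated[0] if integrated else None
    else:
        render = integrated[0]
        compute = None
    return render, compute


if __name__ == "__main__":
    # Made-up system: one integrated and one discrete adapter.
    system = [
        Adapter("Integrated GPU", 128, True),
        Adapter("Discrete GPU", 4096, False),
    ]
    render, compute = assign_roles(system)
    print("render on:", render.name)
    print("compute on:", compute.name if compute else "(none, single adapter)")
```

Picking by dedicated memory is only one plausible heuristic. The point is that the two adapters do not need to match, which is exactly what separates this approach from the old SLI and CrossFire setups.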

Generally the discrete GPU will be the higher performance part, and would take care of the rendering grunt work, but there is still a lot of compute power left in the integrated graphics chips of a CPU that would otherwise be wasted. The example Hux gives covers particle effects, but he says the recipe could equally work for physics, deformation, AI, and shadows. Offloading that work from the main graphics card could help to deliver some decent performance uplift.
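
A rough back-of-the-envelope model shows why the offload could help, and when it would not. The sketch below is an assumption-laden toy, not a measurement: every number in it is made up, the function names are hypothetical, and it assumes the worst case where the compute work would otherwise run serially on the discrete GPU. It also assumes the integrated GPU works a frame ahead, so its cost overlaps with rendering, and that the results must be copied across to the discrete card.

```python
def single_gpu_frame_ms(render_ms: float, compute_ms: float) -> float:
    """Frame time when one GPU does rendering and compute back to back."""
    return render_ms + compute_ms


def multi_adapter_frame_ms(
    render_ms: float,
    compute_ms: float,
    igpu_slowdown: float,
    copy_ms: float,
) -> float:
    """Frame time when the integrated GPU takes the compute work.

    igpu_slowdown: how many times slower the integrated GPU is at the
                   compute job than the discrete GPU (hypothetical figure).
    copy_ms:       cost of moving the results to the discrete GPU.

    The two adapters run in parallel, so the slower of the two paths
    sets the frame time.
    """
    offloaded_ms = compute_ms * igpu_slowdown + copy_ms
    return max(render_ms, offloaded_ms)


def fps(frame_ms: float) -> float:
    return 1000.0 / frame_ms


if __name__ == "__main__":
    # Hypothetical workload: 14 ms of rendering, 3 ms of particle compute,
    # an integrated GPU three times slower at compute, 1 ms to copy results.
    before = single_gpu_frame_ms(14.0, 3.0)
    after = multi_adapter_frame_ms(14.0, 3.0, igpu_slowdown=3.0, copy_ms=1.0)
    print(f"single GPU:    {before:.1f} ms ({fps(before):.1f} fps)")
    print(f"multi-adapter: {after:.1f} ms ({fps(after):.1f} fps)")

    # Double the compute load and the integrated GPU becomes the bottleneck,
    # which wipes out most of the gain.
    heavy_before = single_gpu_frame_ms(14.0, 6.0)
    heavy_after = multi_adapter_frame_ms(14.0, 6.0, igpu_slowdown=3.0, copy_ms=1.0)
    print(f"heavy compute: {heavy_before:.1f} ms -> {heavy_after:.1f} ms")
```

The takeaway from the toy numbers is that the trick only pays off while the offloaded job, plus the copy, fits inside the time the discrete GPU spends rendering. Hand the integrated chip too much and it ends up holding the frame back.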

Could, however, is the operative word. Hux calls it "essentially an enhancement of async compute" but there aren't a huge number of games that utilise that today. Sometimes games get a post-launch feature update to enable Async Compute, as Ghost Recon Breakpoint did recently, but that's still not a regular occurrence. And as a subsection of an already niche technique, this multi-GPU feature would likely be rarely seen, however effective it could be.

But it adds more fuel to the rumour bonfire regarding the potential for Intel to marry its Xe discrete and integrated GPUs in the next-gen Tiger Lake laptops. We know from its CES unveiling that the DG1 graphics card is relatively weak, offering maybe 30fps in Warframe on low 1080p settings, and some frames per second in Destiny 2. But maybe, with a little help from its integrated GPU friend, Intel's Xe architecture could lead to some genuinely impressive thin-and-light gaming ultrabooks.

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.