Total War: Warhammer DX12 boost for AMD still can't match Nvidia's DX11 performance

A tale of two APIs with wildly differing results. DirectX 12 is far from a performance panacea.

I just revisited Doom performance, and since the DX12 patch for Total War: Warhammer is now publicly available, I've retested that game as well. We got a preview of DX12 performance prior to launch. The Doom article covers a bunch of background information that's worth reading if you're not familiar with the idea of APIs and what low-level access means, but I'll skip that this time and cut straight to the main event.

Unlike Doom, where the Vulkan support can largely be characterized as vendor-agnostic (meaning both AMD and Nvidia have helped the developer), Warhammer is an AMD Gaming Evolved release. That doesn't mean Nvidia can't work with developer Creative Assembly, but in practice it's not likely to do nearly as much. The same thing happens on Nvidia-partnered games, so I'm not too worried about it.

The initial preview of Warhammer's DX12 performance left me with some unanswered questions, as it was only a benchmark, and the public release of the game lacked the same benchmark functionality. At the time, I couldn't create an apples-to-apples comparison between the DX11 and DX12 performance. With a public launch of DX12 support—still classified as 'beta'—and the addition of a benchmark mode to the retail release, I can now give some hard numbers on the performance gains and losses that come with various GPUs.

Here's where things get a bit crazy. This is an AMD-branded title, so naturally you'd expect AMD to come out on top. Prior to the DX12 patch, that wasn't even remotely the case: under DX11, AMD basically gets curb-stomped. So it would seem heavy optimizations for AMD hardware under DX12 are the order of the day, but will they be enough?

CPU: Intel Core i7-5930K @ 4.2GHz

Mobo: Gigabyte GA-X99-UD4

RAM: G.Skill Ripjaws 16GB DDR4-2666

Storage: Samsung 850 EVO 2TB

PSU: EVGA SuperNOVA 1300 G2

CPU cooler: Cooler Master Nepton 280L

Case: Cooler Master CM Storm Trooper

OS: Windows 10 Pro 64-bit

Drivers: AMD Crimson 16.7.2, Nvidia 368.69/.64

I'm using my standard testbed, listed above. I did a quick spot-check of CPU requirements, and as noted in the earlier look at Warhammer, this game needs a certain level of CPU performance; more on this below. All of the cards were retested with DX11 and DX12, using AMD's Crimson 16.7.2 drivers and Nvidia's 368.69 drivers. The exception is the recently launched GTX 1060, for which I used the 368.64 drivers.

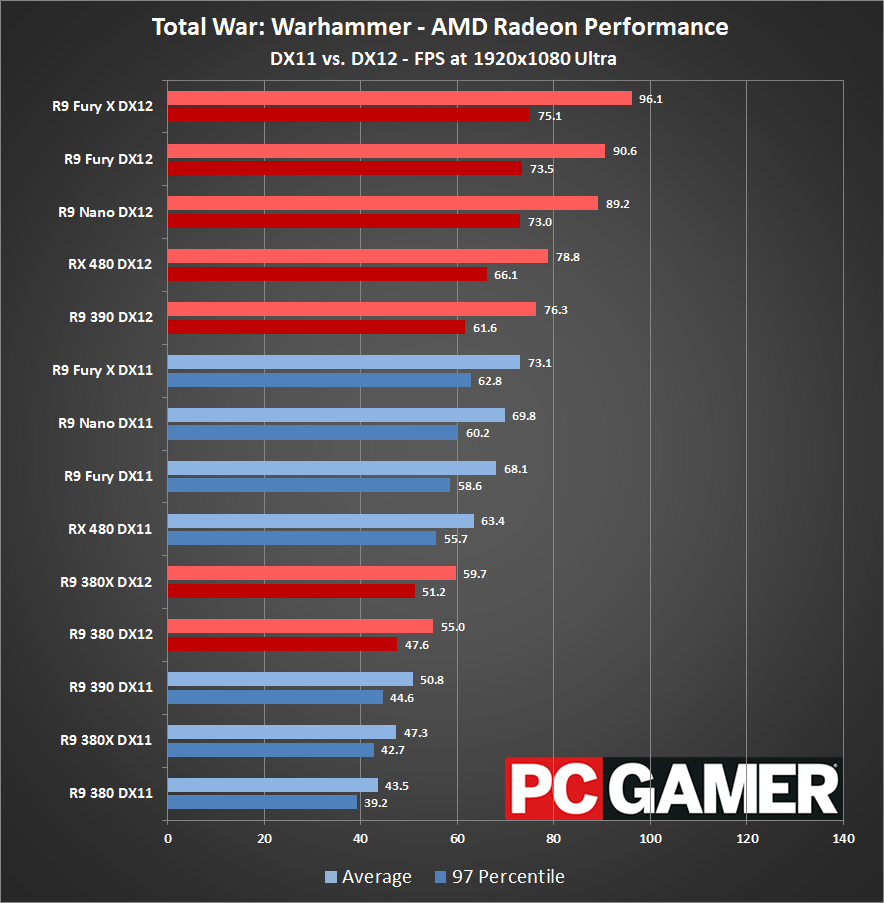

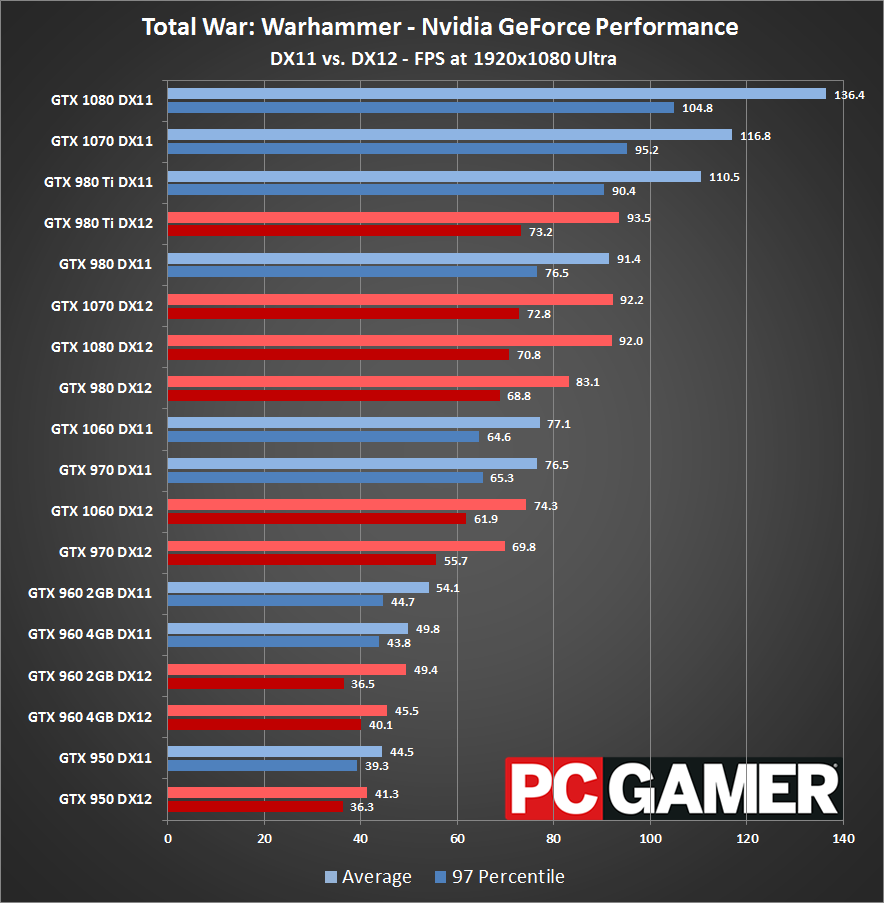

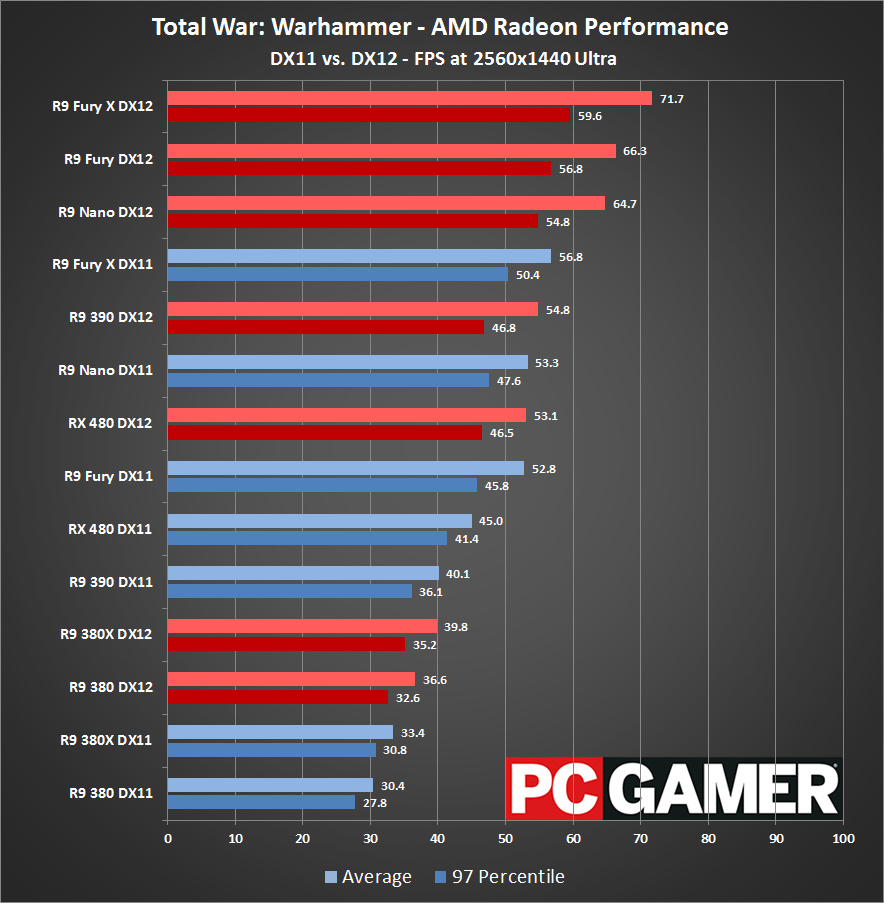

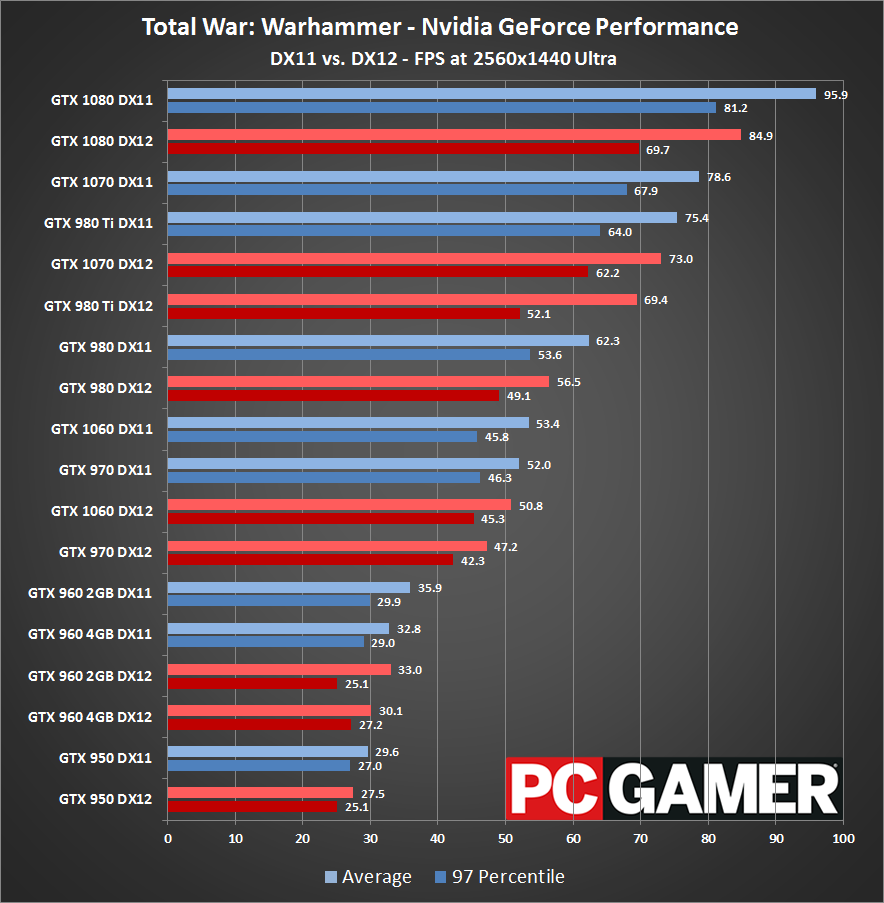

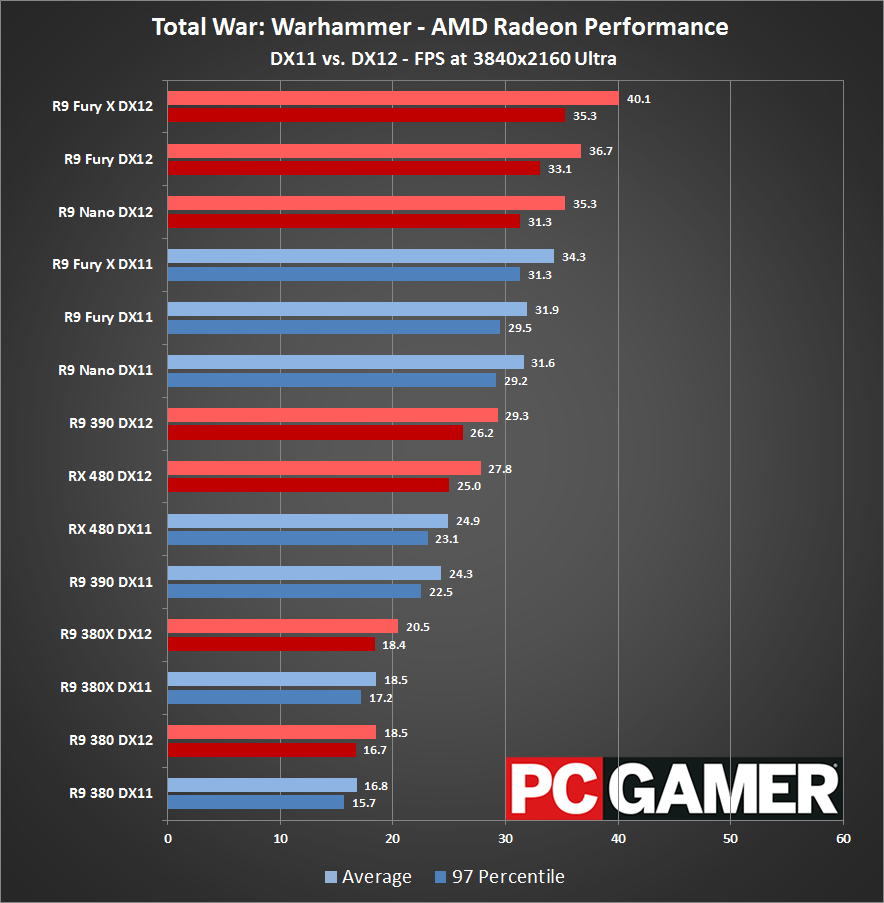

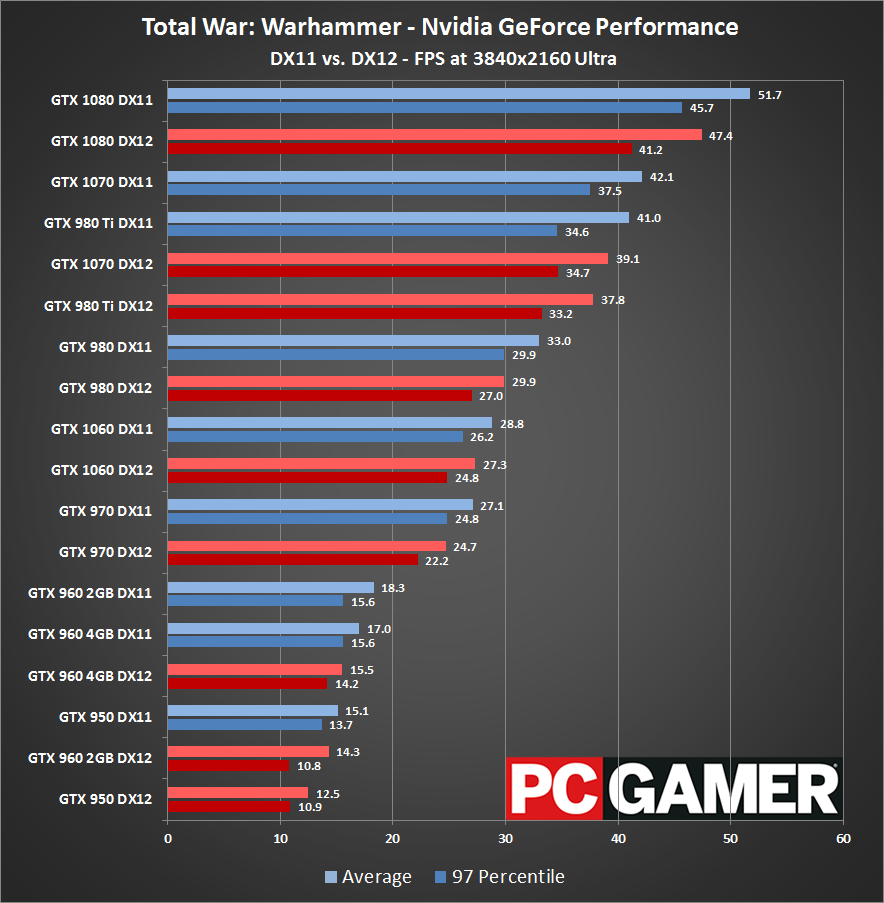

Due to the sheer number of cards, I've separated each resolution into AMD and Nvidia hardware, and colored DX12 results red and DX11 results blue. (If you're interested in the full charts, here are the 1080p, 1440p, and 4K results.) Unlike Doom, Warhammer includes a built-in benchmark, and the variance between benchmark runs is generally less than one percent. I log frame times using FRAPS for DX11 and PresentMon for DX12, and calculate the average and 97th percentile average (the average of the highest three percent of frame times) so that one or two bad frames don't skew the results.
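To make the two metrics concrete, here is a minimal Python sketch that computes both from a list of per-frame times in milliseconds. It assumes the average is total frames divided by total time, and that the 97th percentile figure is the mean of the slowest three percent of frames converted back into fps. The function names are hypothetical, and parsing actual FRAPS or PresentMon logs is deliberately left out.

```python
from statistics import mean


def average_fps(frame_times_ms):
    """Average fps over the whole run: total frames divided by total seconds."""
    total_seconds = sum(frame_times_ms) / 1000.0
    return len(frame_times_ms) / total_seconds


def percentile97_fps(frame_times_ms):
    """fps equivalent of the mean of the slowest 3% of frames (longest frame times)."""
    n = len(frame_times_ms)
    # ceil(3% of n) using integer math, but always keep at least one frame
    count = max(1, (3 * n + 99) // 100)
    slowest = sorted(frame_times_ms, reverse=True)[:count]
    return 1000.0 / mean(slowest)


if __name__ == "__main__":
    # Hypothetical run: 97 smooth frames at 10 ms plus 3 stutters at 25 ms
    frame_times = [10.0] * 97 + [25.0] * 3
    avg = average_fps(frame_times)
    p97 = percentile97_fps(frame_times)
    print(f"average {avg:.1f} fps, 97th percentile {p97:.1f} fps")
    # prints: average 95.7 fps, 97th percentile 40.0 fps
```

In this toy run the three stutters barely move the average but dominate the 97th percentile figure, which is the point of reporting both. Because the slowest three percent is averaged rather than taking the single worst frame, one freak frame in a long run is diluted instead of defining the result.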

In an ideal world, switching to a low-level API like DX12 or Vulkan shouldn't reduce performance—it's there for developers who want to improve performance, so if that's not happening, they're not trying hard enough. That's my view on things at any rate. You don't spend a bunch of time tweaking and overclocking a PC with the expectation of getting lower performance, and low-level APIs are basically the 'overclocking' of software development. As we'll see shortly, the 'don't run slower with a low-level API' mantra isn't really panning out for all vendors this round.


Just as we saw in Doom, AMD graphics cards see some major performance gains with DX12 compared to DX11. Minimum fps is up 20-25 percent, and the average is up 25-35 percent; in the case of the R9 390, performance is 50 percent better. I'd like to say this is thanks to the marvels of DX12, but honestly a big part of the reason is just how poorly AMD does under DX11. The R9 Fury X, for example, is slower than a GTX 970 under DX11, which is absurd. Even with DX12, the GTX 980 Ti is still about 15 percent faster than the Fury X, though 1080p has never been Fiji's strong suit.

Nvidia GPUs, meanwhile, show a universal drop in performance with DirectX 12. The mainstream GPUs (1060/980 and below) see a 5-10 percent drop in average fps and a slightly larger 10-20 percent drop in minimum fps, but the real killer is the high-end cards. The 980 Ti loses 15 percent, the 1070 loses 20 percent, and the 1080 loses a whopping 33 percent (with 20-30 percent drops in minimum fps as well). This basically indicates the developers ported their code to DX12 and optimized for AMD GPUs, while Nvidia's DX11 results were good enough that no effort was spent trying to improve on them. If you have an Nvidia GPU and you're playing Warhammer, you're not really getting shafted; just don't bother with DX12.

Given this inequality in how the two GPU vendors fare under each API, the best thing to do here is to compare AMD DX12 performance against Nvidia DX11 performance. The 980 Ti, 1070, and 1080 are out of reach of AMD's GPUs at this point in time; maybe a non-beta DX12 patch will change things further. The 980 comes out ahead of the Fury and Nano, with the 970 and 1060 falling just below the RX 480. The 390's performance is still lower than where it normally sits, though Warhammer doesn't appear to use more than 2GB of VRAM (based on the GTX 960 testing). And at the bottom of the charts, the 380X and 380 with DX12 manage to assume their usual slots just ahead of the 960.
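The comparison described above boils down to taking each card's better API and ranking on that score, with the percent-change arithmetic used throughout these results alongside it. Below is a minimal Python sketch of that idea; the `Result` record, the helper names, and the numbers for "Card A" and "Card B" are hypothetical placeholders, not the measured data behind these charts.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Result:
    """Average fps for one GPU under each API (hypothetical record layout)."""
    gpu: str
    dx11_fps: float
    dx12_fps: float


def percent_change(new: float, old: float) -> float:
    """Percent difference of new relative to old, e.g. +25.0 or -20.0."""
    return (new / old - 1.0) * 100.0


def best_api(result: Result) -> tuple[str, float]:
    """Pick whichever API gives the higher average fps; ties go to DX11."""
    if result.dx12_fps > result.dx11_fps:
        return "DX12", result.dx12_fps
    return "DX11", result.dx11_fps


def rank_by_best_api(results: list[Result]) -> list[tuple[str, str, float]]:
    """Rank GPUs by their best-API score, fastest first."""
    rows = [(r.gpu, *best_api(r)) for r in results]
    return sorted(rows, key=lambda row: row[2], reverse=True)


if __name__ == "__main__":
    # Placeholder numbers for two made-up cards, not benchmark results
    results = [Result("Card A", 60.0, 75.0), Result("Card B", 80.0, 64.0)]
    for r in results:
        change = percent_change(r.dx12_fps, r.dx11_fps)
        print(f"{r.gpu}: DX12 vs DX11 {change:+.1f}%")
    for gpu, api, fps in rank_by_best_api(results):
        print(gpu, api, fps)
```

Ranking on the best-API score is what makes a card that gains under DX12 comparable with one that loses under it. Ranking on either API alone would reshuffle the standings, which matches what these results show.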

AMD's gains under DX12 are slightly more modest at 1440p, improving by 20-25 percent on most cards and over 35 percent on the 390. Minimum fps is generally up 15-20 percent, and the 390 sees a 30 percent increase. But as before, it's partly because AMD's DX11 results are so dismal—the GTX 980 still holds a lead over the Fury X for example, and the 970 and 1060 are tied with the Fury and Nano. DX12 moves things in the right direction, but not really into the lead.

Nvidia sees 5-10 percent drops in performance again at 1440p, and there's no reason to run any of the Nvidia GPUs we tested in DX12 mode right now. But if we use Nvidia DX11 scores against AMD DX12 results, the overall positioning of the cards is pretty close to what we see in other games.

Given the number of pixels being pushed around at 4K, I'm surprised the choice of API still has this much of an impact on performance. Realistically, the GPU should be a major bottleneck, and it shouldn't have too much trouble using all of its resources. Yet AMD cards, from the R9 380 through the R9 Fury X, continue to show 10-20 percent improvements in frame rates. Nvidia likewise continues to show 5-10 percent decreases in frame rates on most of the cards, while the 2GB 960 and 950 drop by roughly 20 percent.

The big picture view still has 980 Ti DX11 beating Fury X DX12, barely, with the other cards assuming their usual places. Curiously, even though the 2GB Nvidia cards seem to struggle at 4K, the higher clock speed on the 960 2GB keeps it ahead of the 960 4GB. (Both 960 cards are EVGA models, but the 4GB card runs at 1127MHz base clock while the 2GB has a 1279MHz base factory overclock.)

You'll still want a decent CPU

One thing that hasn't changed with Warhammer is the need for a good CPU, depending on your graphics card and settings, of course. At 1080p DX11 with a GTX 1080, the i7-5930K at 4.2GHz ends up being 66 percent faster than a simulated i3-4350 (3.6GHz). But the gap narrows quite a bit at 1440p DX11 (a 20 percent improvement), and at 4K DX11 there's basically no difference. With DX12, 1080p improves by 57 percent, 1440p by 46 percent, and 4K doesn't change, though you'll get better performance under DX11 in all cases. Drop down to a lower Nvidia GPU like the 970, though, and there's not much of a difference: only the DX12 1080p result improves (by 20 percent), while the other scores are a tie.

What about CPU requirements for AMD hardware? I checked with the R9 Fury X and R9 390 to find out. The R9 Fury X's performance doesn't change under DX11, but under DX12 the additional cores and clock speed are good for 50 percent more performance at 1080p, though only 15 percent more at 1440p and no change at 4K. Meanwhile, the R9 390 shows results similar to our GTX 970 testing: there's no real benefit from a faster CPU under DX11 or at higher resolutions, but 1080p DX12 does see a 20 percent increase in performance.

Tastes like a beta

If Doom's Vulkan update is an example of a vendor-agnostic approach to low-level API support, the Warhammer DX12 public beta is at the opposite end of the spectrum. This is a patch that's really only useful to AMD users right now, and unless the developer goes back to do additional tuning for non-AMD GPUs, that's unlikely to change. Given the AMD Gaming Evolved branding, I wouldn't really expect them to spend a lot more effort on improving Nvidia performance. More perplexing, however, is the gulf between AMD and Nvidia performance under DX11, which ideally shouldn't have existed in the first place.

It's a strange new world we live in when a game can be promoted by a hardware vendor and yet fail to deliver good performance on that vendor's hardware for more than a month after launch. I'd guess the publisher wanted to get the main product released and saw no need to delay it just so the developer could 'finish' working on the DX12 version (which is basically the same thing we've seen with Doom's Vulkan update, Hitman's DX12 patch, and to a lesser extent Rise of the Tomb Raider's DX12 support). The concern is that if this sort of behavior continues, low-level API support may end up being a second-class citizen.

Even now, nearly two months after launch, Warhammer's DX12 support is still classified as 'beta,' and I have to wonder when—or if—that classification will ever be removed. Rise of the Tomb Raider could call their DX12 support 'beta' as well, and it has a disclaimer whenever you enable the feature: "Using the DirectX 12 API can offer significantly better performance on some systems; however, it will not be beneficial on all." Most of the games with DX12 or Vulkan support so far should have a similar disclaimer, and that's part of the concern with low-level APIs.

Right now, developers are tasked with doing more work to properly support hardware using these low-level APIs. AMD benefits from DX12 in Warhammer while Nvidia doesn't, but there's more to it than pure single-GPU performance. One feature still missing from the vast majority of these games, for example, is multi-GPU support. It's easy to understand why, as users with SLI or CrossFire setups are a tiny minority in the world of PC gaming hardware, but previously multi-GPU support was typically in the hands of the driver teams at AMD and Nvidia. With low-level APIs, developers need to be more actively involved, and so far only one game, Ashes of the Singularity, has managed to include support for multiple GPUs, and even then only two GPUs are supported. Somewhat ironically, Warhammer supports both SLI and CrossFire under DX11, though the biggest gains come at 4K, where the GPU is the major bottleneck.

We're still in the early stages of DX12 and Vulkan deployment, so there's hope things will get better once developers get a handle on the situation. In a sense, we've reset how things are done in the graphics industry, and both the hardware and software people are dealing with the ramifications. Once the major engines (Unreal Engine 4, Unity, etc.) have included DX12 and/or Vulkan support for a while, games that license those engines should benefit with little additional work on the part of their developers. In theory, at least. And now that the DX12 platform has been defined, similar to what we've seen in the past with DX11, DX10, DX9, etc., the hardware should evolve to better utilize the new features offered.

Total War: Warhammer is one game, so people shouldn't base their hardware purchases solely on how it performs. It's another example of a game with low-level API support where AMD sees gains while Nvidia performance drops, but it's also in the same category as Ashes of the Singularity and Hitman—namely, it's promoted by AMD and sports their logo. If you focus on only DX12 or DX11 performance, the GPU standings change quite a bit, but once you select the best API for each GPU, things are basically back to 'normal.'

Welcome to the new status quo.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.