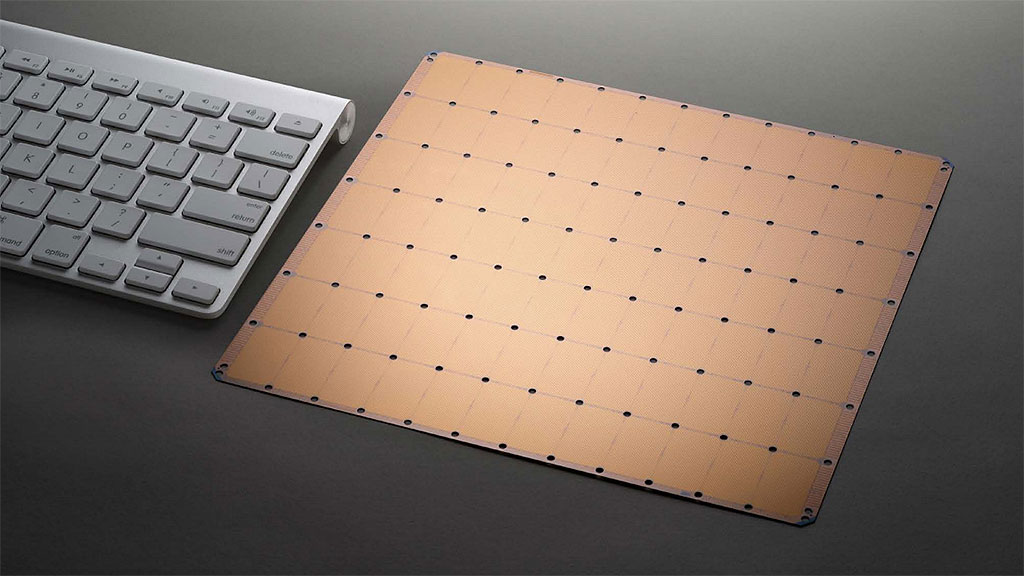

The world’s first trillion-transistor chip is bigger than some mouse pads

That's not fitting inside my PC.

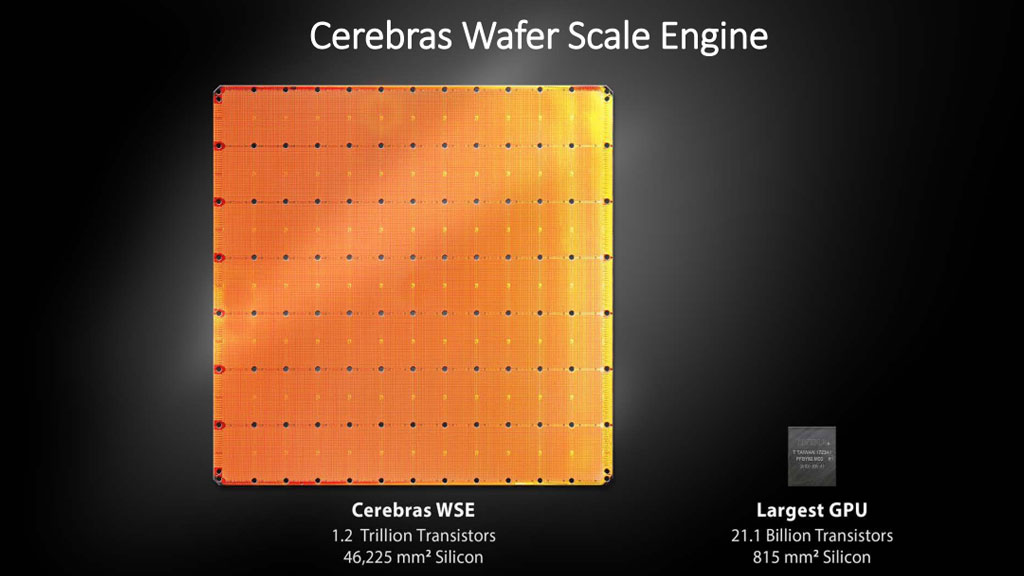

Semiconductors have tended to shrink in size over the years, but Cerebras, a company specializing in artificial intelligence and deep learning, has unveiled the world's largest chip. Called the Wafer Scale Engine (WSE, pronounced "wise"), it is the first chip to pack more than 1 trillion (yes, TRILLION!) transistors (1.2 trillion, actually).

To put that into perspective, Nvidia's latest Turing GPU packs 18.6 billion transistors, while AMD's Zen 2 in 8-core form pairs a 7nm chiplet with 3.9 billion transistors to a 12nm I/O die with 2.09 billion transistors. How quaint!

According to Cerebras, at 46,225 square millimeters, the WSE is 56 times larger than the biggest GPU ever made. It also boasts a staggering 400,000 cores and 18GB of on-chip memory (SRAM), with an interconnect capable of shuttling data at 100 petabits per second (Pb/s). Cerebras says that amounts to 33,000 times more bandwidth than any other interconnect solution.

What's all that computing power used for? Cerebras designed the WSE to run Crysis. No, seriously. Okay, that's a lie. The truth is, this is not a slice of silicon that will ever find its way into a consumer desktop. At 215mm x 215mm, it's wider than some mouse pads.
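For a rough sense of scale, here is a back-of-the-envelope sketch that uses only the figures quoted in this article. The variable and function names are made up for illustration, and the "implied" GPU die area is simply what falls out of Cerebras's 56x claim, not a number the company published here.

```python
# Back-of-the-envelope arithmetic using only figures quoted in the article.
# All names are illustrative; nothing here comes from Cerebras tooling.

WSE_SIDE_MM = 215                    # the chip measures 215mm x 215mm
WSE_TRANSISTORS = 1.2e12             # 1.2 trillion transistors
WSE_VS_LARGEST_GPU = 56              # Cerebras's "56 times larger" claim
ZEN2_TRANSISTORS = 3.9e9 + 2.09e9    # 7nm chiplet + 12nm I/O die (8-core Zen 2)


def wse_area_mm2() -> int:
    """Die area in square millimeters from the quoted side length."""
    return WSE_SIDE_MM * WSE_SIDE_MM


def implied_gpu_area_mm2() -> float:
    """Area of the 'biggest GPU' implied by the 56x claim (an inference)."""
    return wse_area_mm2() / WSE_VS_LARGEST_GPU


def transistors_per_mm2() -> float:
    """Average transistor density across the whole wafer-scale die."""
    return WSE_TRANSISTORS / wse_area_mm2()


def zen2_ratio() -> float:
    """How many 8-core Zen 2 packages' worth of transistors the WSE holds."""
    return WSE_TRANSISTORS / ZEN2_TRANSISTORS


if __name__ == "__main__":
    print(f"WSE area: {wse_area_mm2():,} mm^2")
    print(f"Implied largest GPU area: {implied_gpu_area_mm2():.0f} mm^2")
    print(f"Density: {transistors_per_mm2() / 1e6:.1f} million transistors per mm^2")
    print(f"Transistor count vs. 8-core Zen 2: {zen2_ratio():.0f}x")
```

The side length squares to exactly the 46,225 figure Cerebras quotes, and the transistor count works out to roughly 200 times that of the 8-core Zen 2 package mentioned above.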

Staying true to its mission, Cerebras designed the WSE from the ground up for deep learning, specifically to cut tasks that would normally take months to complete down to minutes.

"We believe that deep learning is the most important computational workload of our time. Its requirements are unique and demand is growing at an unprecedented rate. Large training tasks often require peta- or even exa-scale compute: it commonly takes days or even months to train large models with today’s processors," Cerebras explains.

Deep learning is at the core of almost everything these days, or so it feels. From Google Photos to the digital assistant on your smartphone, deep learning plays a big role in how these services learn, grow, and adapt. Or for an example that hits closer to home for gamers, there's Nvidia's Deep Learning Super Sampling (DLSS) tech.

The WSE is built on a 16-nanometer manufacturing process at TSMC and requires 15 kilowatts of power to run, which is another reason you won't ever see this in a desktop.

Paul has been playing PC games and raking his knuckles on computer hardware since the Commodore 64. He does not have any tattoos, but thinks it would be cool to get one that reads LOAD"*",8,1. In his off time, he rides motorcycles and wrestles alligators (only one of those is true).