The ongoing testing of Intel's X299 and i9-7900X

After several weeks of testing, the firmware remains a work in progress.

Life on the bleeding edge

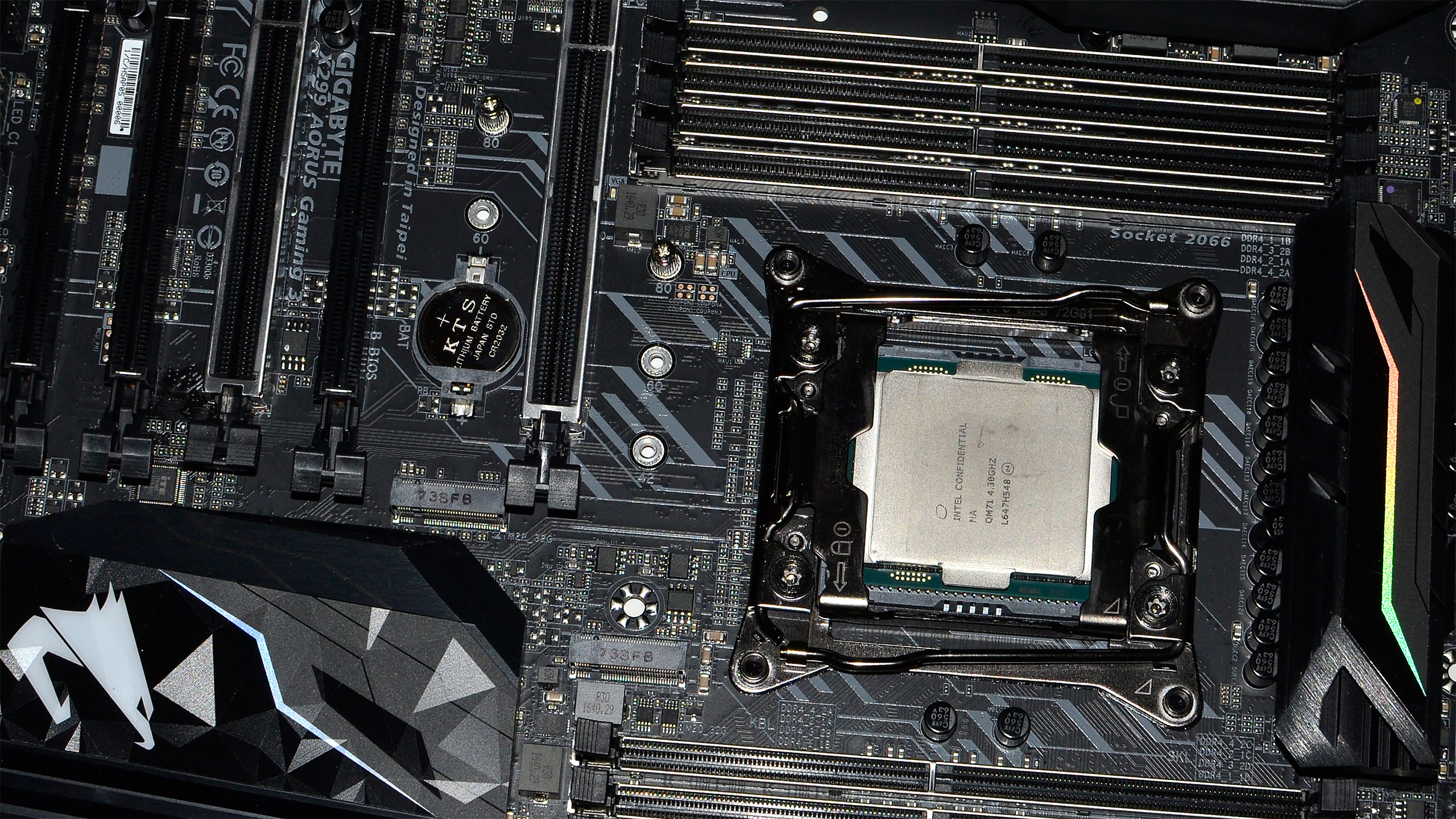

Earlier this week, Intel launched its new Skylake-X and Kaby Lake-X series of processors, all using the new X299 chipset and LGA2066 socket, aka the Basin Falls platform. I updated my CPU test suite with current versions of the benchmark software so that it provides meaningful results for all the CPUs, including the Skylake-X i9-7900X. Unfortunately, Skylake-X has presented its own set of problems. I mentioned this earlier today when talking about Kaby Lake-X, but it bears repeating: this is a brand-new platform with new features, and there are still a few kinks to work out. Par for the course.

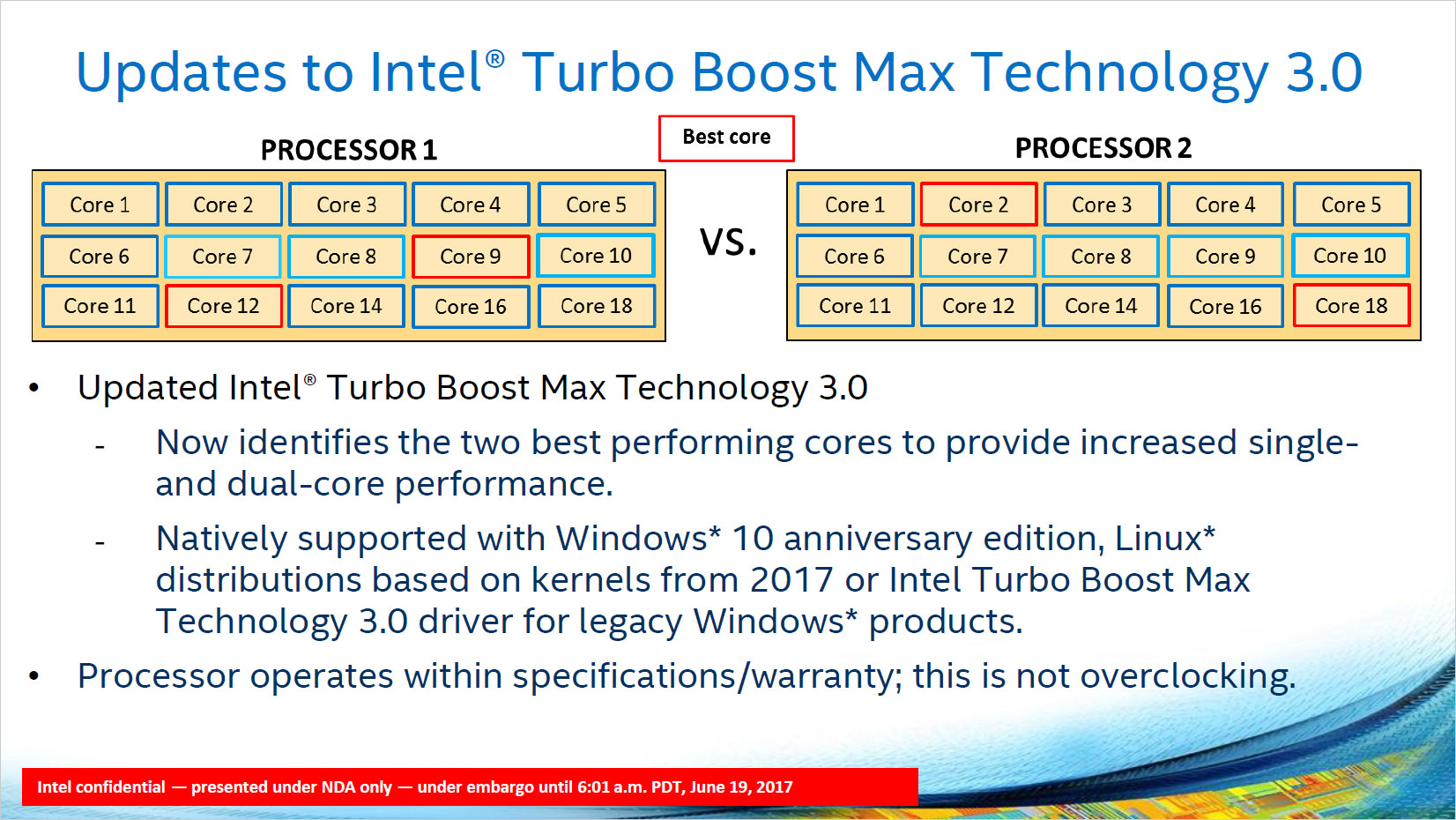

After five BIOS revisions, I still don't have firmware that works as expected, at least with Intel's Turbo Boost Max 3.0 (TBM3) technology. The way the i9-7900X is supposed to work is that it can run all 10 cores at up to 4.0GHz, the Turbo Boost 2.0 all-core clockspeed. The base clockspeed is only 3.3GHz—meaning, no matter what you do, the CPU should be able to run at 3.3GHz without any problems—but in practice the chip should run much faster than that. In lighter workloads, Turbo Boost 2.0 says the i9-7900X can run at up to 4.3GHz, and TBM3 takes that a step further and allows the chip to hit 4.5GHz in workloads that only use one or two cores.

What I'm actually seeing on Gigabyte's X299 AORUS Gaming 9 motherboard is unfortunately quite different. The Gigabyte BIOS allows you to specify clockspeeds for various core counts, so at stock it has a 45X multiplier for the 1/2-core options, 41X for 3/4 cores, and 40X for all the options from 5 to 10 cores. As best I can tell, only the 10-core setting is currently in effect, so if I set that to 40 (stock), I get 4.0GHz whether I'm running a single-threaded workload or a 20-threaded workload. On an earlier BIOS, the CPU tended to run at the highest value set for any of the core counts, so 4.5GHz, since that's what the 1/2-core setting specified.
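
To make the expected and observed behavior concrete, here's a minimal Python model of a per-core-count turbo ratio table. It uses the stock multipliers from the Gigabyte BIOS described above and assumes the standard 100MHz base clock (BCLK). The function names and the two "observed" policies are hypothetical labels for what I'm seeing, not anything taken from Gigabyte's firmware.

```python
# Hypothetical model of a per-active-core-count turbo ratio table.
# Multipliers are the stock Gigabyte BIOS values; 100MHz BCLK is assumed.
BCLK_MHZ = 100

# Active cores -> multiplier: 45 for 1-2 cores, 41 for 3-4, 40 for 5-10.
TURBO_TABLE = {n: 45 if n <= 2 else 41 if n <= 4 else 40 for n in range(1, 11)}


def expected_mhz(active_cores: int) -> int:
    """Spec behavior: the ratio depends on how many cores are busy."""
    return TURBO_TABLE[active_cores] * BCLK_MHZ


def observed_latest_bios_mhz(active_cores: int) -> int:
    """Current behavior: only the 10-core entry applies (argument ignored)."""
    return TURBO_TABLE[10] * BCLK_MHZ


def observed_earlier_bios_mhz(active_cores: int) -> int:
    """Earlier BIOS: the highest ratio in the table applies to every load."""
    return max(TURBO_TABLE.values()) * BCLK_MHZ


for cores in (1, 4, 10):
    print(cores, expected_mhz(cores),
          observed_latest_bios_mhz(cores), observed_earlier_bios_mhz(cores))
```

With one active core, the spec calls for 4500MHz, the latest BIOS delivers 4000MHz, and the earlier BIOS delivered 4500MHz regardless of load.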

The latest F5k BIOS that I received today came with a message that states, "the X299 AORUS Gaming 9 will follow Intel Turbo Boost policy by default under 'Enhanced Multi-Core Performance' which activates more cores for higher clocks." In my testing, that's still not happening. I've also noticed that the thread parking feature of Turbo Boost Max 3.0, which is supposed to determine which CPU cores are 'best' and then park single-threaded workloads on those cores, isn't working. The slide above explains how TBM3 is supposed to work, but either Windows 10 Creators Update isn't working right or the firmware isn't, or perhaps both.
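
Conceptually, the thread parking half of TBM3 boils down to ranking cores and steering light workloads onto the top-ranked ones. The sketch below assumes each core has a hypothetical per-core "max ratio" (the numbers are invented for illustration, not read from my chip) and simply picks the best one or two. In reality this work is split between the firmware and the OS scheduler, and this is not their actual algorithm.

```python
from typing import Dict, List

# Hypothetical per-core maximum multipliers; cores 2 and 6 are "favored".
CORE_MAX_RATIO: Dict[int, int] = {0: 43, 1: 43, 2: 45, 3: 43, 4: 43,
                                  5: 43, 6: 45, 7: 43, 8: 43, 9: 43}


def favored_cores(ratios: Dict[int, int], count: int = 2) -> List[int]:
    """Return the `count` best cores, highest ratio first (ties by core id)."""
    return sorted(ratios, key=lambda core: (-ratios[core], core))[:count]


def place_threads(num_threads: int, ratios: Dict[int, int]) -> List[int]:
    """Give each thread its own core, best cores first.

    Simplified model: at most one thread per core, so extra threads are dropped.
    """
    ranked = favored_cores(ratios, count=len(ratios))
    return ranked[:num_threads]


print(favored_cores(CORE_MAX_RATIO))     # [2, 6]
print(place_threads(1, CORE_MAX_RATIO))  # [2]
```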

What about overclocking? That's not working as expected either. I set the CPU to a 4.7GHz clockspeed on all cores and ended up with variable clockspeeds; setting every multiplier to 45 actually came closer to proper 'stock' performance than anything else I've tried. Anyway, I'll save overclocking for next week.

That's why this is a preview of Skylake-X performance, rather than a full review. While I wait for a couple more X299 motherboards to arrive, I'm left with benchmark results that aren't quite matching Intel's specifications. I have an upper bound of 4.5GHz performance, a lower bound of 4.0GHz, and somewhere in between is where moderate workloads should sit.

Why does any of this matter?

So what's the big deal? 4.0GHz vs. 4.5GHz shouldn't matter much, right? And enthusiasts will just overclock to 4.6-4.7GHz on all 10 cores anyway. I could just run at 4.5GHz and call it a day… but that's not technically stock performance. The bigger issue, however, isn't with the i9-7900X; it's with the upcoming 12-core to 18-core parts, the i9-7920X through the i9-7980XE.

Imagine if this were an 18-core processor. It's not going to run all 18 cores fully stable at 4.5GHz, at least not without some form of extreme cooling. Intel hasn't even revealed the official clockspeeds yet, but I suspect the base clock will be around 3.0GHz, with a maximum all-core turbo in the 3.0-3.5GHz range. Lighter workloads, on the other hand, should still reach up to 4.5GHz, give or take, but if the BIOS locks the CPU into just one clockspeed, you'd have a CPU that sits at a constant 3.5GHz. Speed Shift, Turbo Boost, and all that other fancy stuff would be useless.
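
A quick bit of arithmetic shows why a locked all-core clock matters more as core counts rise. This sketch optimistically assumes lightly threaded performance scales linearly with clockspeed, and the 18-core clocks are my guesses from above, not Intel specifications.

```python
def shortfall_pct(locked_ghz: float, expected_ghz: float) -> float:
    """Percent of the expected clockspeed lost when stuck at locked_ghz."""
    return (expected_ghz - locked_ghz) / expected_ghz * 100


# i9-7900X stuck at its 4.0GHz all-core turbo instead of the 4.5GHz TBM3 clock.
print(round(shortfall_pct(4.0, 4.5), 1))  # 11.1
# Hypothetical 18-core part stuck at ~3.5GHz instead of ~4.5GHz.
print(round(shortfall_pct(3.5, 4.5), 1))  # 22.2
```

In other words, the penalty for a light workload roughly doubles on the hypothetical 18-core chip.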

In other words, good firmware is critical on a motherboard, especially anything that aims to be an 'enthusiast' motherboard. Gigabyte was one of the better options for Ryzen's X370 chipset, but the only experience I've had with X299 so far has been underwhelming. Or maybe I just have an early revision of the motherboard that isn't behaving properly—I'll find out soon enough.

New features for Skylake-X

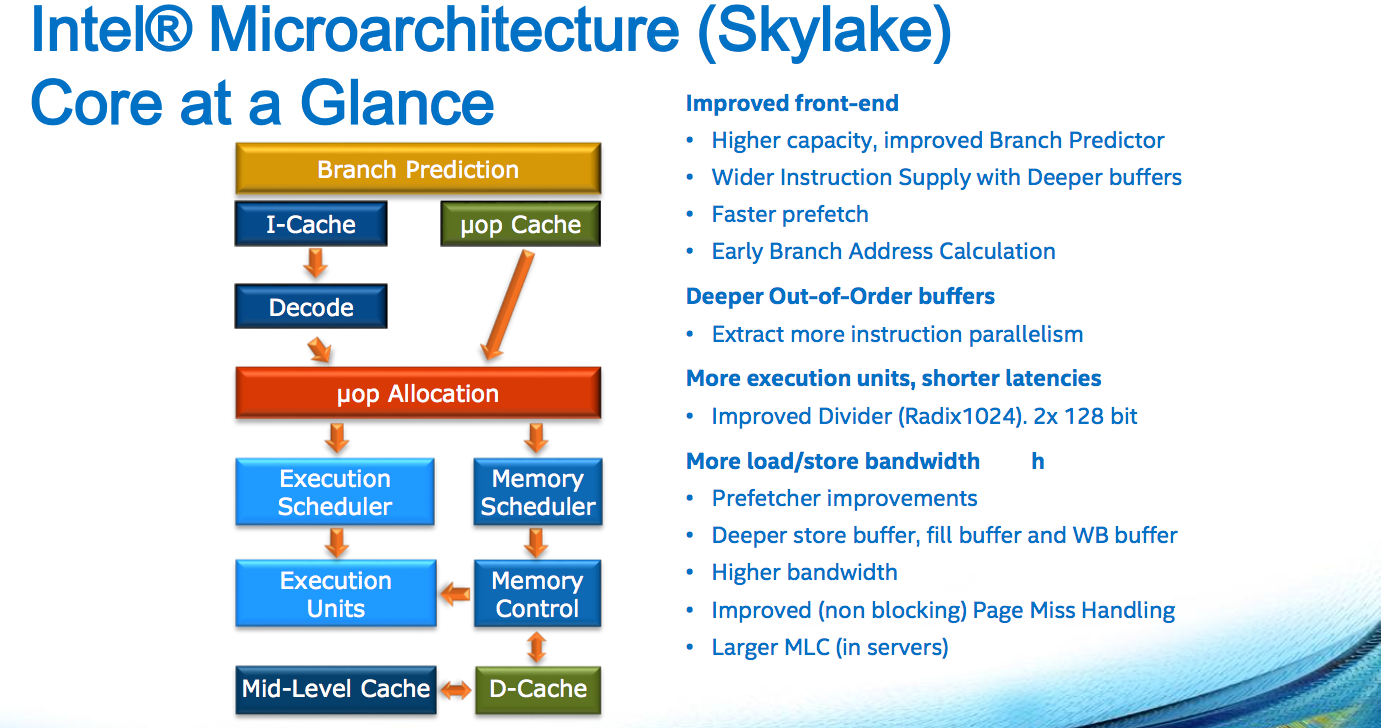

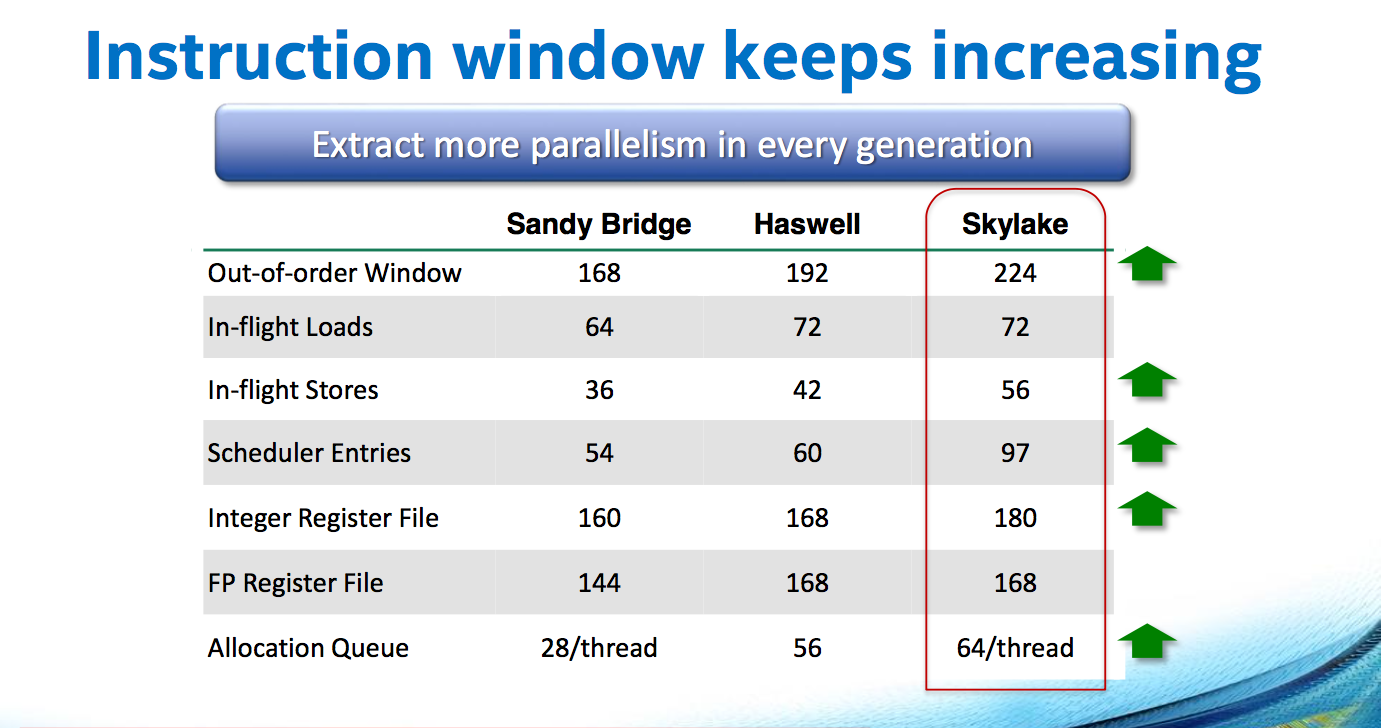

Beyond the firmware issue, which has unfortunately overshadowed much of my testing so far, Skylake-X brings some interesting changes relative to the previous-generation Broadwell-E (and Haswell-E) processors. Most of the architectural changes mirror those Skylake made relative to Haswell: buffers and other structures are larger, in an attempt to improve instruction-level parallelism. The slides above give an overview of the changes Intel made with Skylake-X.

If you're not familiar with the inner workings of modern processors, these slides are probably meaningless. The main point is that these changes are what help improve IPC (instructions per clock) each generation. Intel also increased the L2 cache from 256KB to 1MB (1024KB) per core, with a smaller L3 cache relative to previous designs, in order to deliver a more balanced architecture. The result is that each Skylake-X core is larger than a Broadwell core, despite both using a 14nm process. And even with all of these enhancements, per-core performance is only about 10 percent higher than the Haswell/Broadwell generation.
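
The cache shift is easier to see as totals for the two 10-core chips. The per-core L2 sizes come from the text above; the L3 totals (13.75MB for the i9-7900X, 25MB for the i7-6950X) are Intel's published specifications, added here for the comparison. The `cache_totals` helper is just an illustrative name.

```python
# Cache totals for the two 10-core parts, in KB (1MB = 1024KB).
CORES = 10


def cache_totals(l2_per_core_kb: int, l3_total_kb: int) -> dict:
    """Sum per-core L2 across all cores and add the shared L3."""
    l2_total = l2_per_core_kb * CORES
    return {"L2": l2_total, "L3": l3_total_kb, "L2+L3": l2_total + l3_total_kb}


broadwell_e = cache_totals(256, 25 * 1024)         # i7-6950X
skylake_x = cache_totals(1024, int(13.75 * 1024))  # i9-7900X

print(broadwell_e)  # {'L2': 2560, 'L3': 25600, 'L2+L3': 28160}
print(skylake_x)    # {'L2': 10240, 'L3': 14080, 'L2+L3': 24320}
```

So per core, L2 quadruples, while the combined L2+L3 capacity of the 10-core part actually ends up a bit smaller.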

Intel has also upgraded the AVX hardware in Skylake-X with AVX-512, which provides 32 512-bit vector registers. The AVX portion of each CPU core is massive: it's about the size of an Atom processor core, according to Bob Valentine, one of the main people behind the AVX instruction set. There are new AVX instructions as well, mostly designed to improve the flexibility and performance of the instruction set: Boolean and logical operations are faster and use less power, masking is now supported, and quad-word (64-bit integer) arithmetic is now a 'first class data type.'
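
Masking is the least intuitive of those additions. As a rough model (plain Python, not real intrinsics), a 512-bit register holds eight 64-bit integers, and an 8-bit mask decides which lanes an operation updates. With merge masking, lanes whose mask bit is 0 keep their previous value. The function name `mask_add_epi64` is hypothetical here, chosen to mirror the C intrinsic `_mm512_mask_add_epi64`.

```python
from typing import List

LANES = 512 // 64  # eight 64-bit integer lanes per 512-bit register
MASK64 = (1 << 64) - 1


def mask_add_epi64(src: List[int], k: int, a: List[int], b: List[int]) -> List[int]:
    """Merge-masked 64-bit add: lane i gets a[i] + b[i] if bit i of k is set,
    otherwise it keeps src[i]. Sums wrap around at 64 bits."""
    assert len(src) == len(a) == len(b) == LANES
    return [((a[i] + b[i]) & MASK64) if (k >> i) & 1 else src[i]
            for i in range(LANES)]


src = [0] * LANES
a = list(range(8))
b = [10] * LANES
print(mask_add_epi64(src, 0b00001111, a, b))  # [10, 11, 12, 13, 0, 0, 0, 0]
```

Only the four low lanes are updated, which is what lets vectorized code handle loop tails or conditional work without separate branches.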

But many of the AVX improvements will be largely meaningless as far as gaming goes, since games generally don't use AVX. Intel notes that floating point performance has improved by 8X over the past four processor generations, thanks to AVX. GPUs are still substantially faster, at least for algorithms that map well to them, but there are use cases for AVX.
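
Much of that floating point gain comes from wider vectors. A back-of-the-envelope peak calculation multiplies the number of vector lanes by two (a fused multiply-add counts as two operations) and by the number of FMA units per core. The two-FMA-unit figure below is an assumption for illustration, and real code rarely gets close to these theoretical peaks.

```python
def peak_flops_per_cycle(vector_bits: int, element_bits: int, fma_units: int) -> int:
    """Theoretical peak FLOPs per core per cycle for FMA-heavy code."""
    lanes = vector_bits // element_bits
    return lanes * 2 * fma_units  # each FMA = one multiply + one add


# Double precision (64-bit elements) with an assumed two FMA units per core.
avx2 = peak_flops_per_cycle(256, 64, 2)
avx512 = peak_flops_per_cycle(512, 64, 2)
print(avx2, avx512)  # 16 32
```

Doubling the vector width doubles the per-cycle peak, provided the software is actually written to use AVX-512.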

Intel also revealed late last week that Skylake-X uses a mesh architecture for communication among the cores, which differs from the ring bus used in the previous several high-end designs. The primary advantage is that a mesh should scale more readily to higher core counts, but it also appears to increase latency for some data accesses. It's not entirely clear how big an effect this has on overall performance, but combined with the larger L2 caches and smaller L3 caches (with the L3 cache now functioning as a victim cache), Skylake-X has definitely received a lot of improvements and changes compared to the previous generation.
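
The scalability argument is easiest to see with average hop counts. This toy model assumes uniform traffic between every pair of stops, one stop per core, a bidirectional ring, and a square grid for the mesh. Intel's real layouts also include memory controllers and other agents, and hop count isn't latency (per-hop costs differ, which may be part of why some accesses got slower), so treat the numbers as illustrative only.

```python
from itertools import product


def ring_avg_hops(n: int) -> float:
    """Average shortest-path hops between distinct stops on a bidirectional ring."""
    hops = [min(abs(i - j), n - abs(i - j))
            for i in range(n) for j in range(n) if i != j]
    return sum(hops) / len(hops)


def mesh_avg_hops(side: int) -> float:
    """Average Manhattan-distance hops between distinct stops on a side x side mesh."""
    nodes = list(product(range(side), repeat=2))
    hops = [abs(x1 - x2) + abs(y1 - y2)
            for (x1, y1) in nodes for (x2, y2) in nodes
            if (x1, y1) != (x2, y2)]
    return sum(hops) / len(hops)


for side in (2, 4, 6):
    n = side * side
    print(n, round(ring_avg_hops(n), 2), round(mesh_avg_hops(side), 2))
```

With 4 stops the two are tied at about 1.33 hops, but at 16 stops the ring averages about 4.27 hops versus about 2.67 for the mesh, and at 36 stops it's roughly 9.26 versus 4.0. The ring's average distance grows linearly with stop count, while the mesh grows with its square root.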

The last architectural change I want to touch on is Speed Shift, a technology designed to improve the speed at which a CPU can change performance states. Comparing the 10-core i9-7900X with the 10-core i7-6950X, Intel says the 7900X can fully transition to maximum clockspeed in 8ms, while the 6950X requires 33ms to partially transition and up to 280ms to fully transition. This mostly applies when using the 'balanced' power profile, however; the 'high performance' power profile keeps the CPU in the maximum performance state, so transitions between clockspeeds don't generally need to occur.
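
To put those transition times in gaming terms, here's how many frame intervals elapse during each ramp, assuming (purely for illustration) a steady 60fps, or about 16.7ms per frame.

```python
FRAME_MS = 1000 / 60  # assumed 60fps frame time, ~16.7ms


def frames_during(transition_ms: float) -> float:
    """Number of 60fps frame intervals that elapse during a clockspeed ramp."""
    return transition_ms / FRAME_MS


for label, ms in (("i9-7900X full", 8), ("i7-6950X partial", 33), ("i7-6950X full", 280)):
    print(f"{label}: {frames_during(ms):.1f} frames")
```

Using Intel's figures, the i9-7900X reaches full clocks in under one frame interval, while a full ramp on the i7-6950X spans nearly 17.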

A preview of Skylake-X and Kaby Lake-X performance

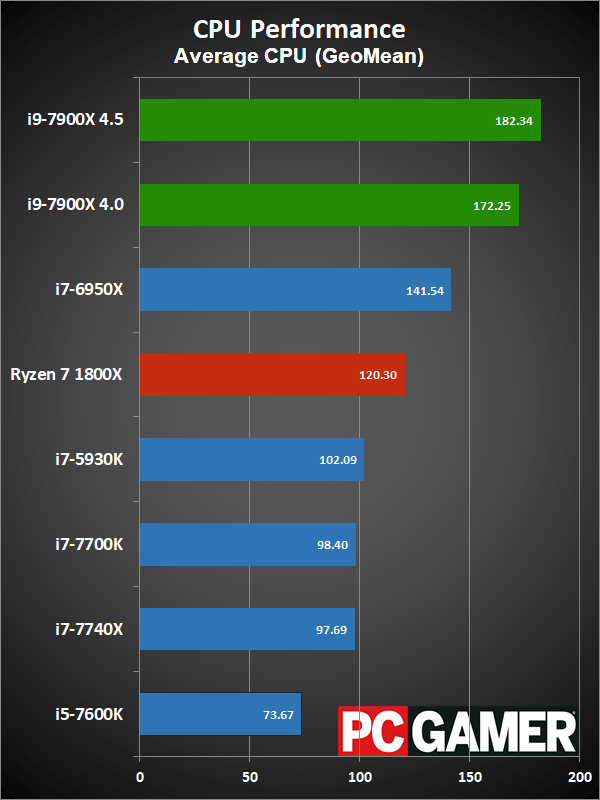

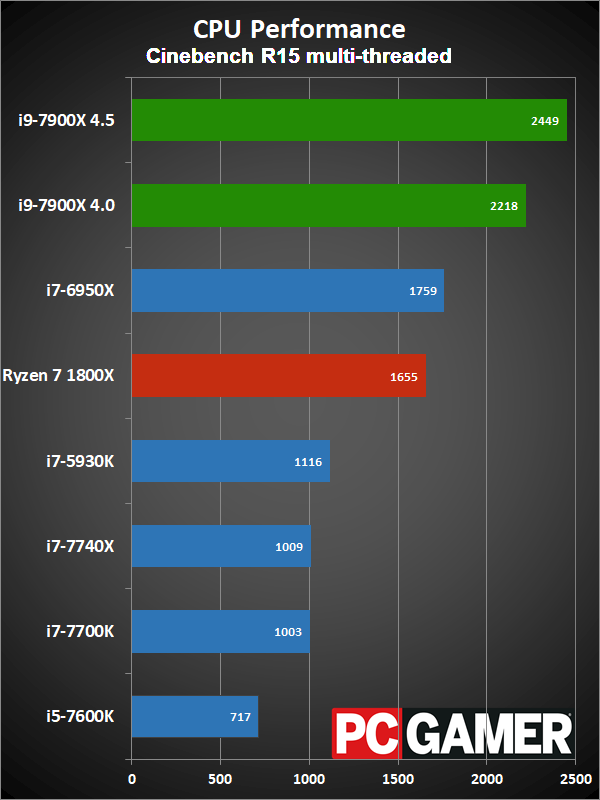

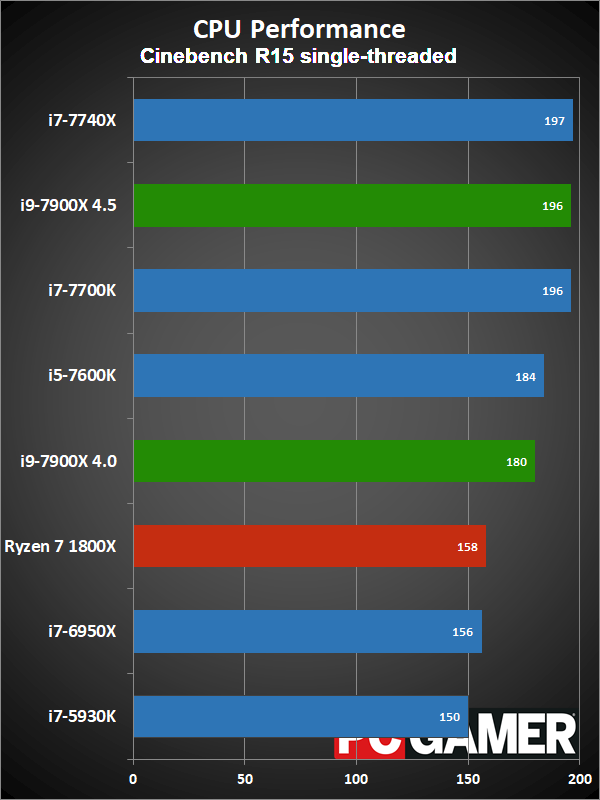

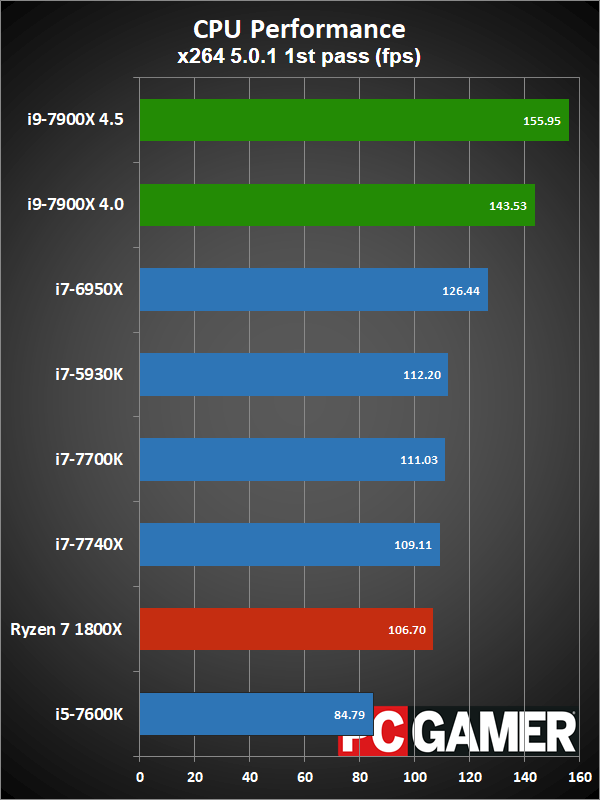

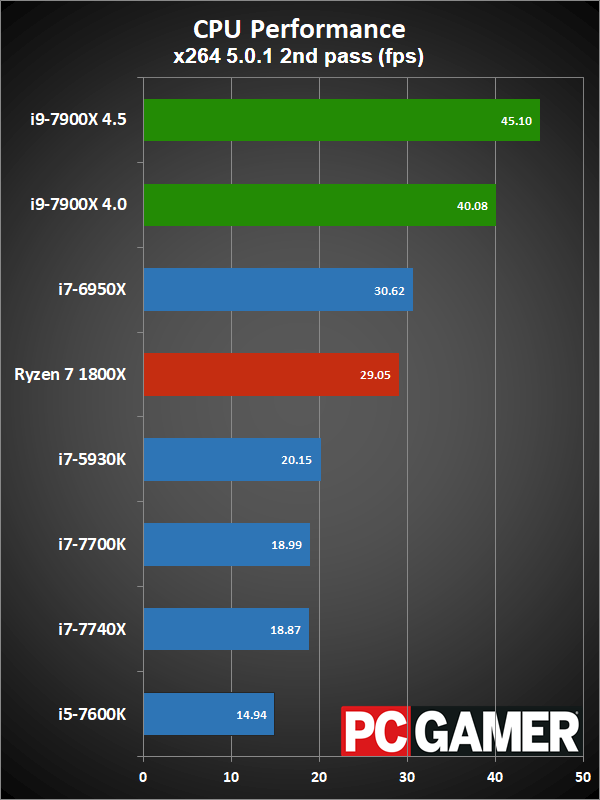

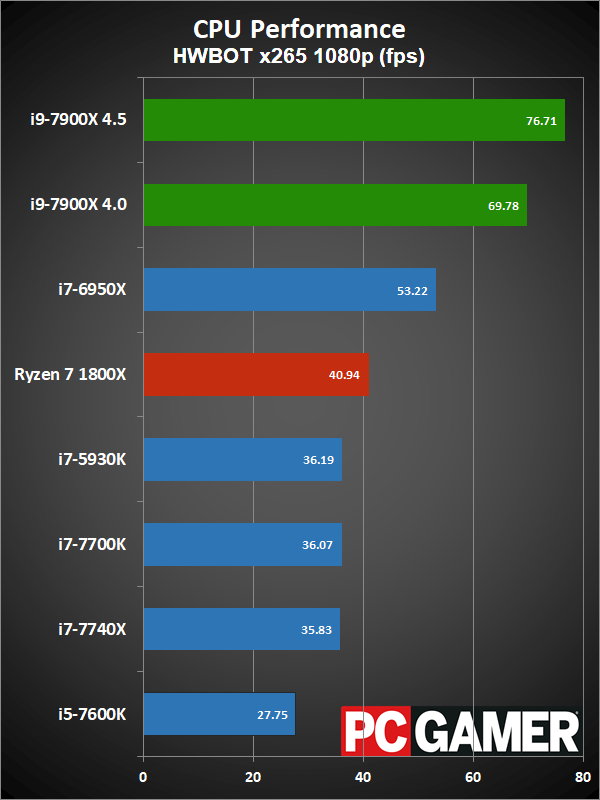

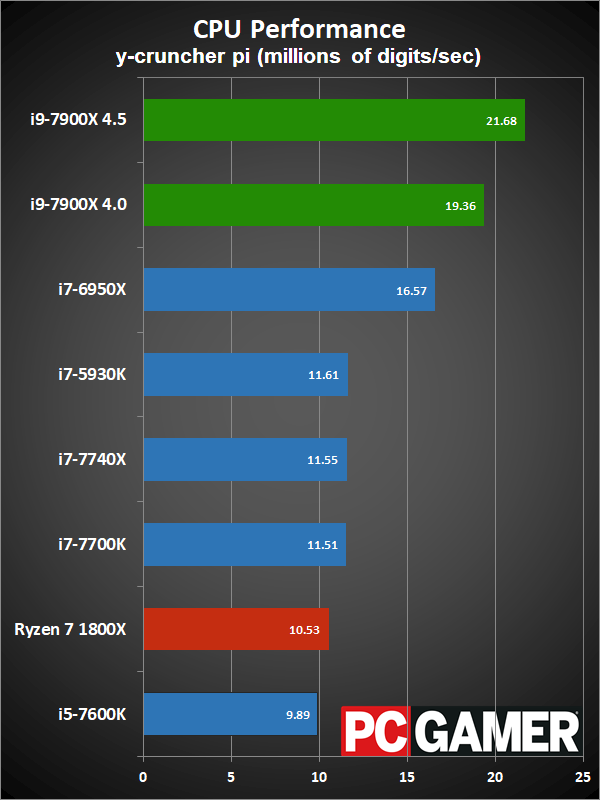

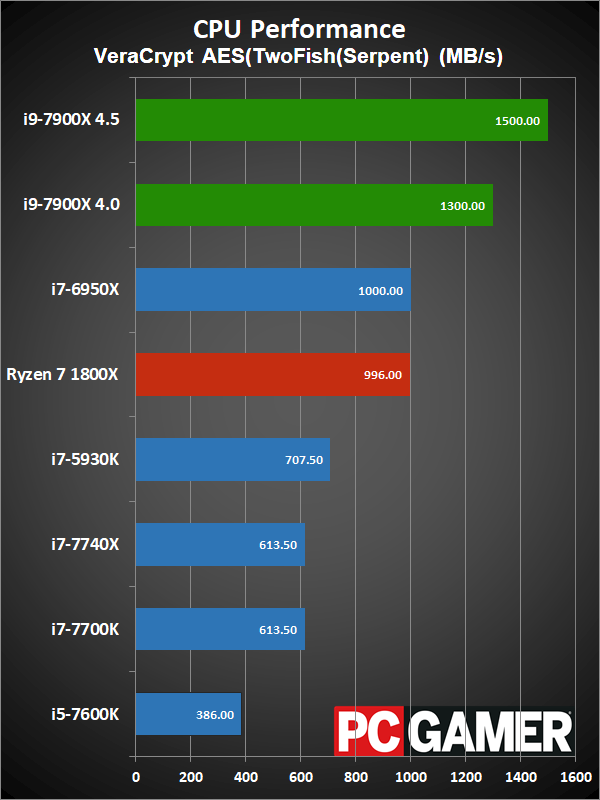

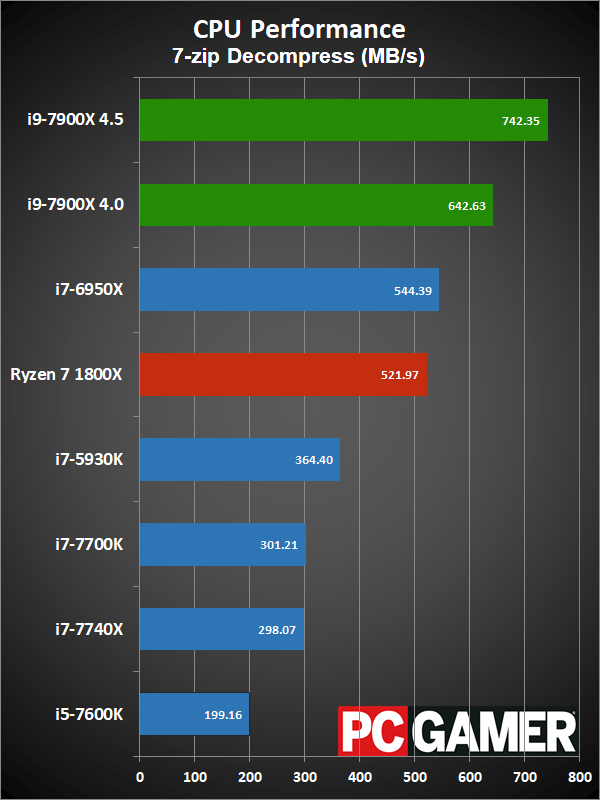

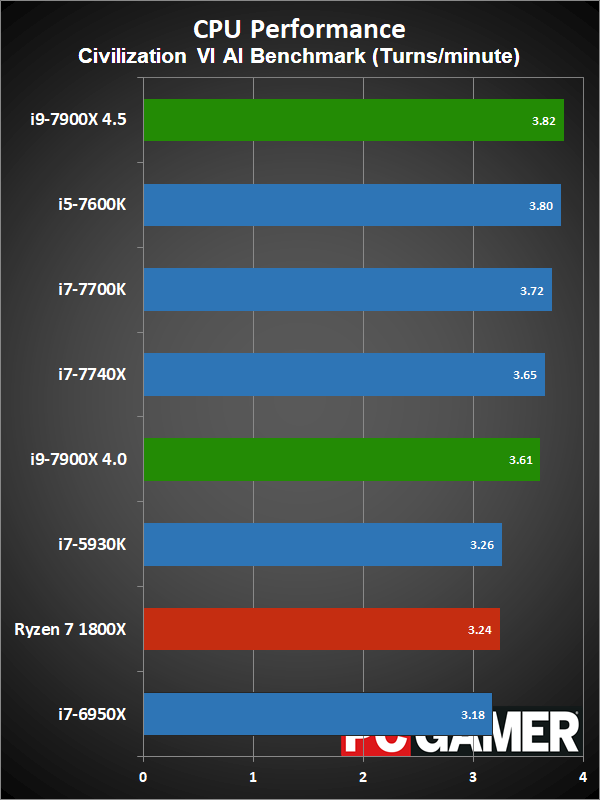

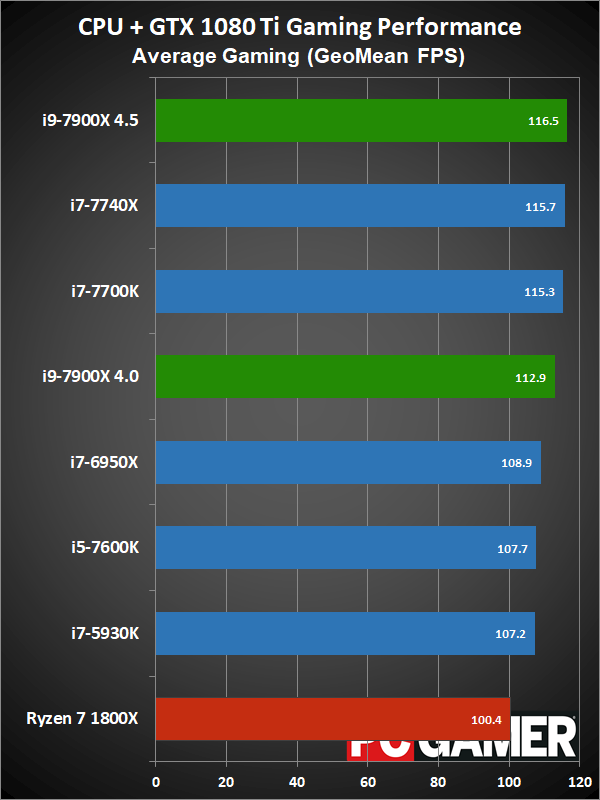

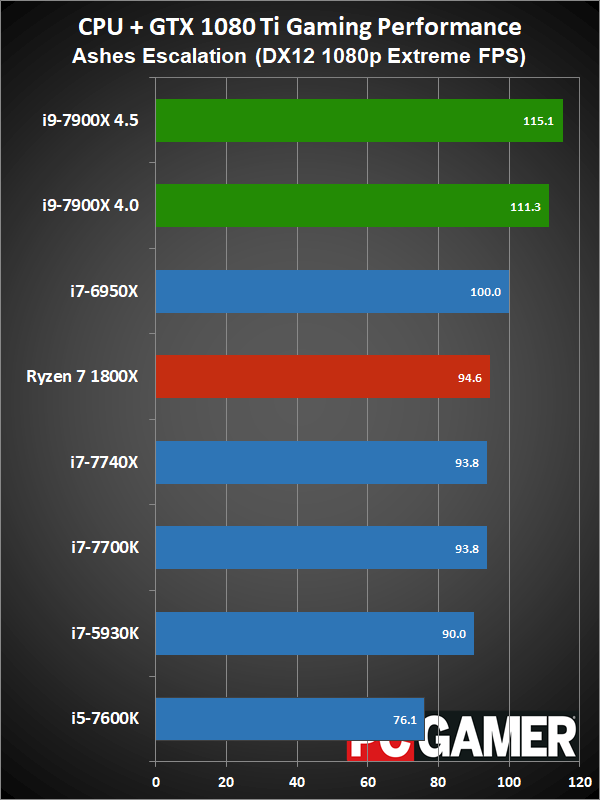

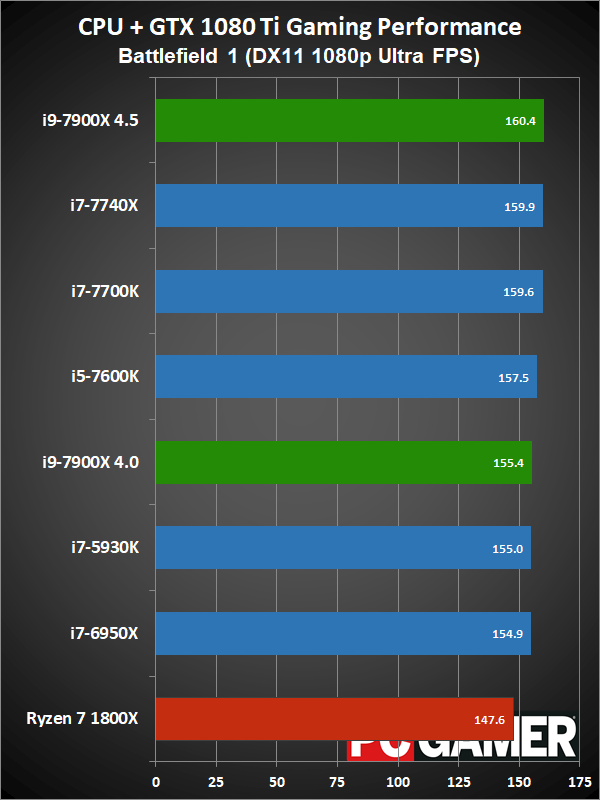

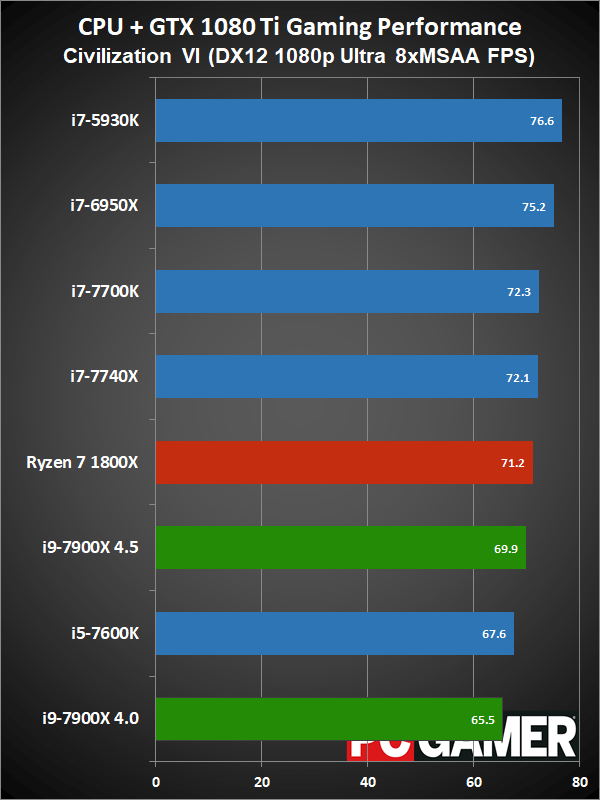

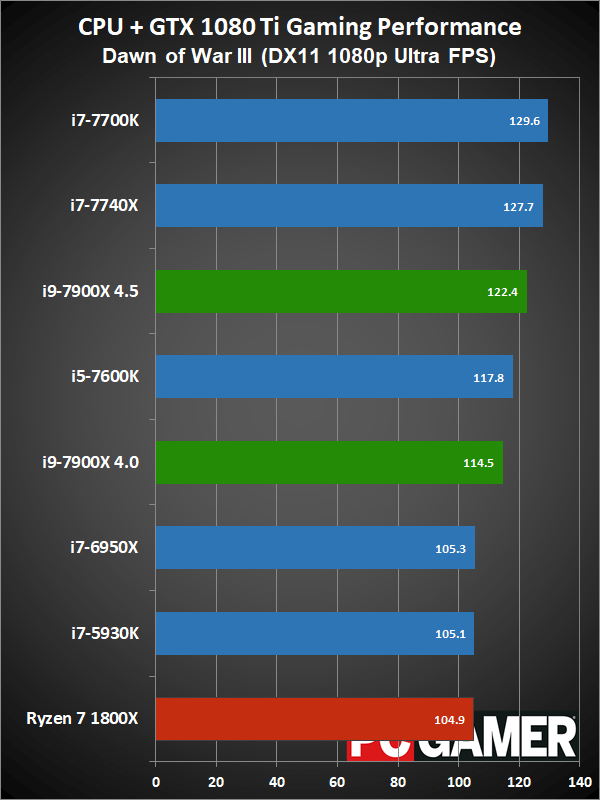

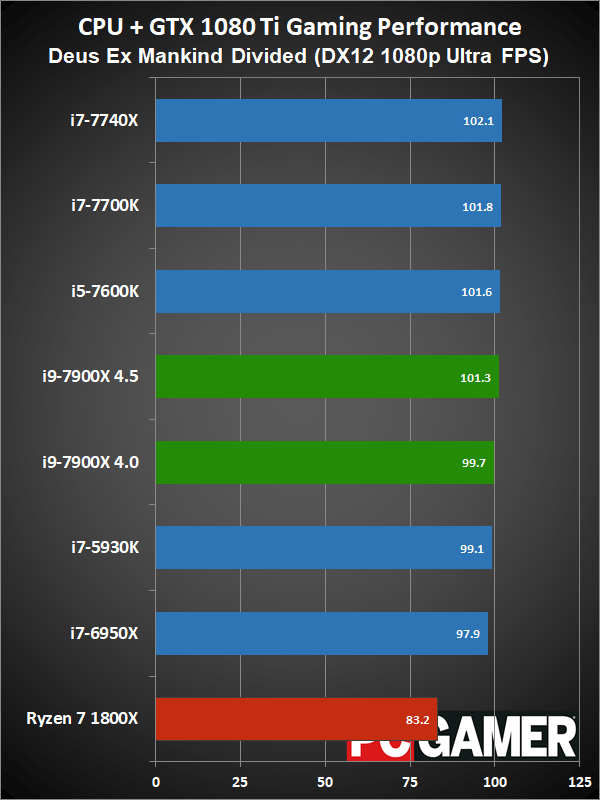

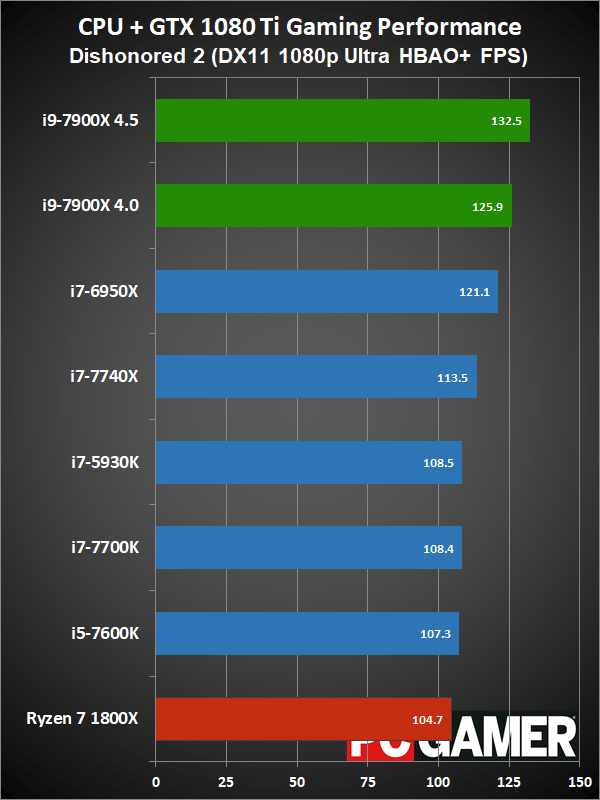

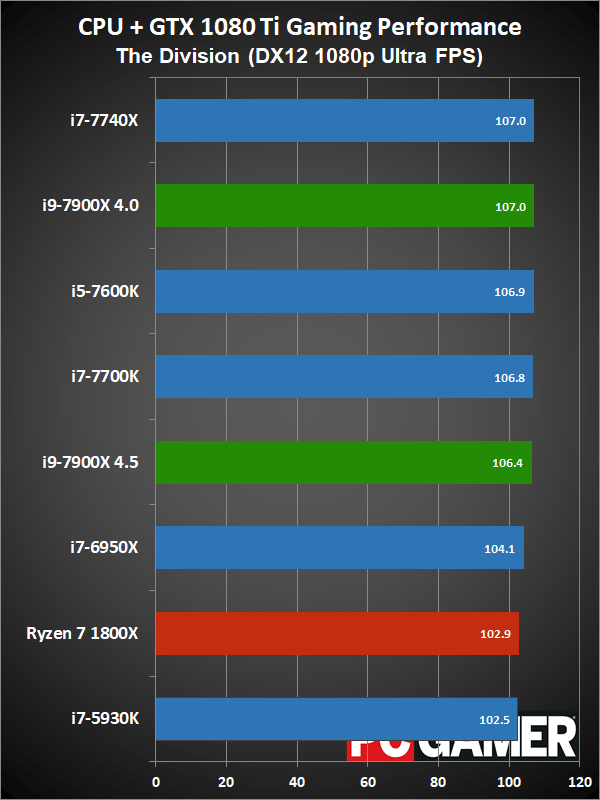

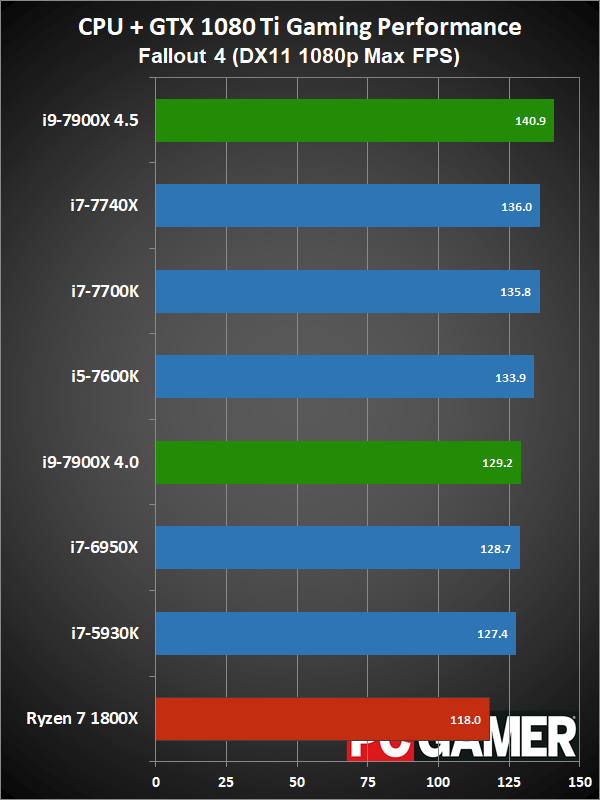

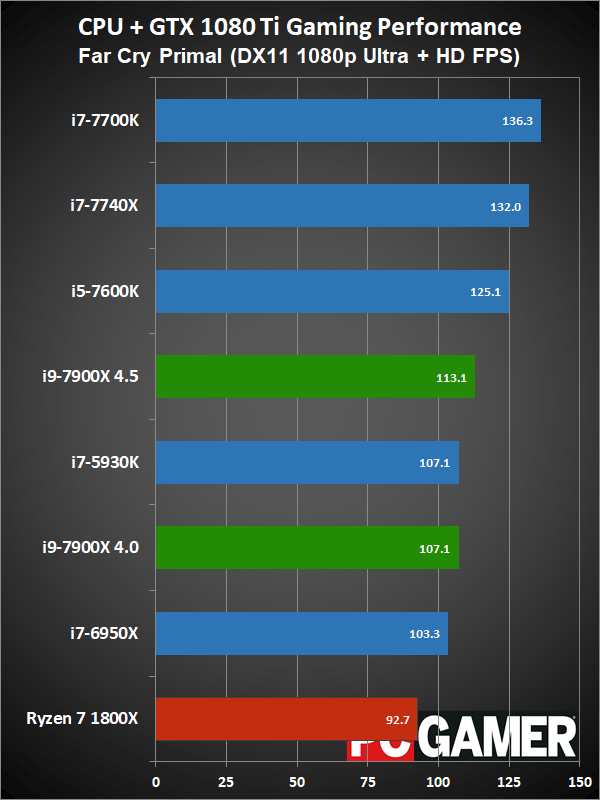

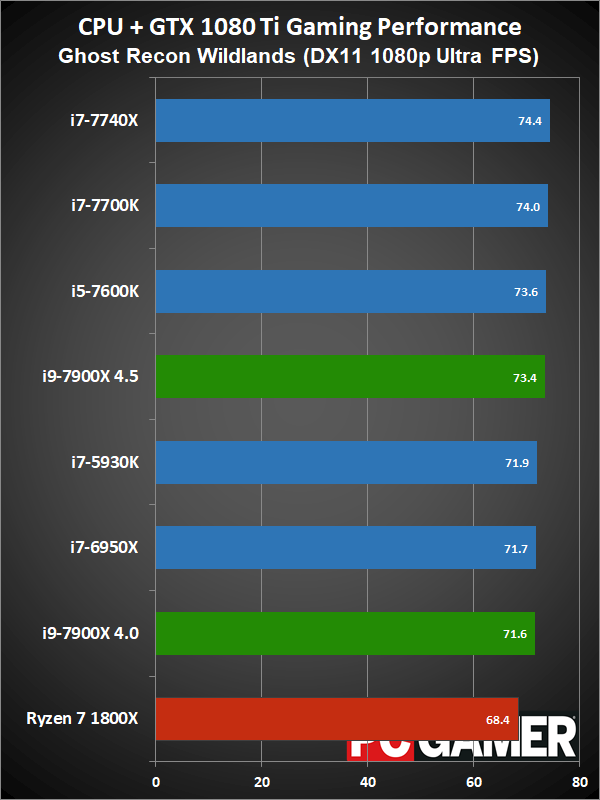

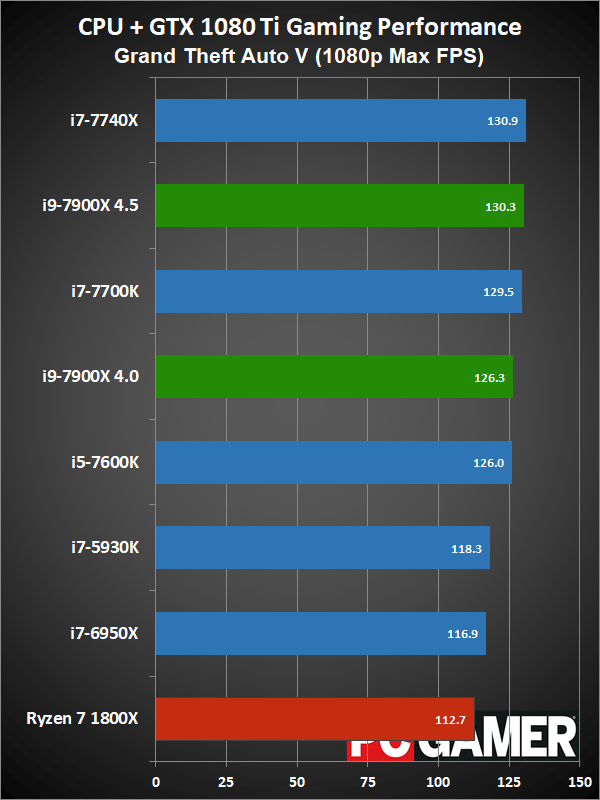

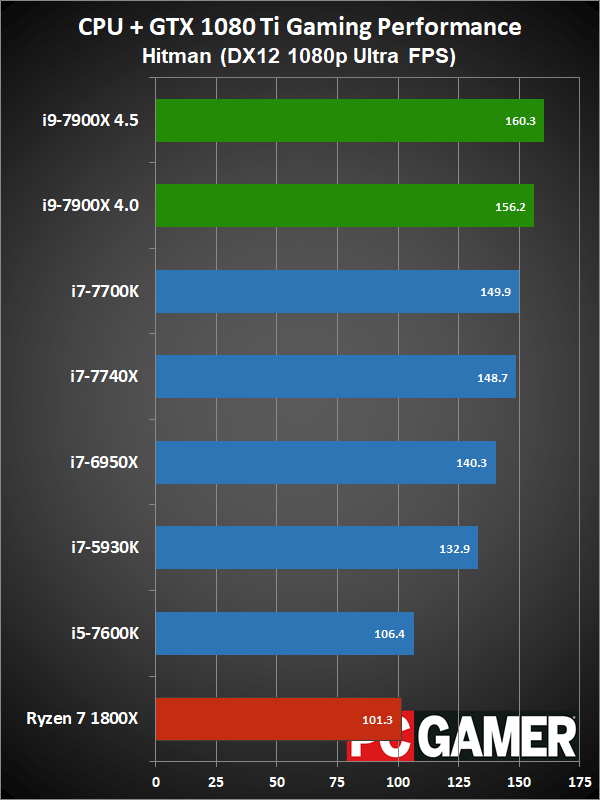

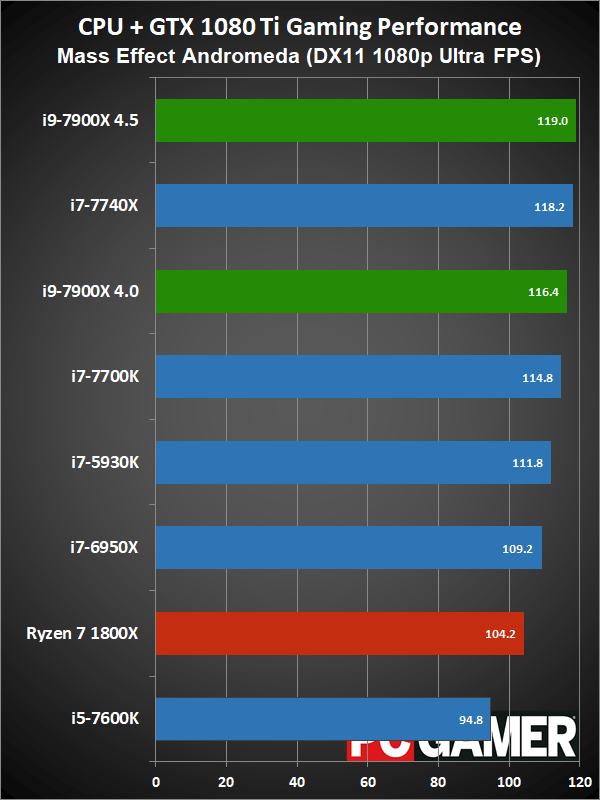

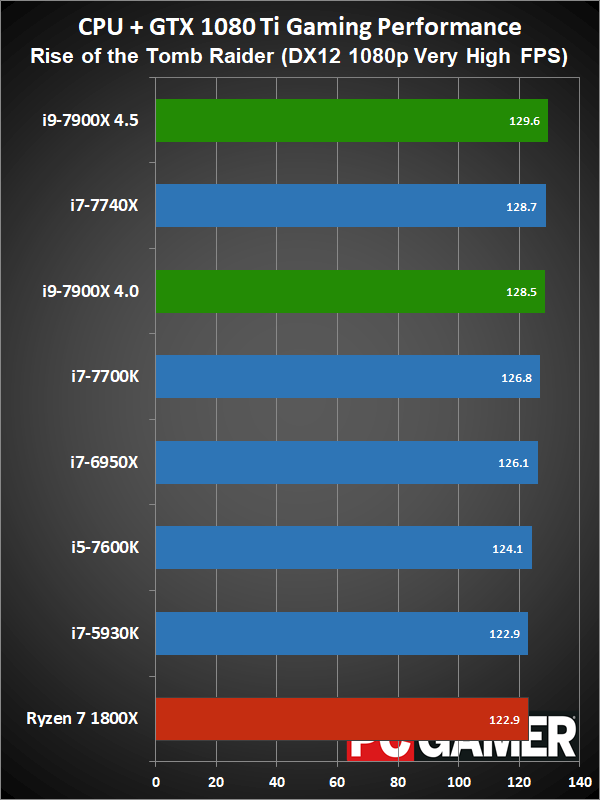

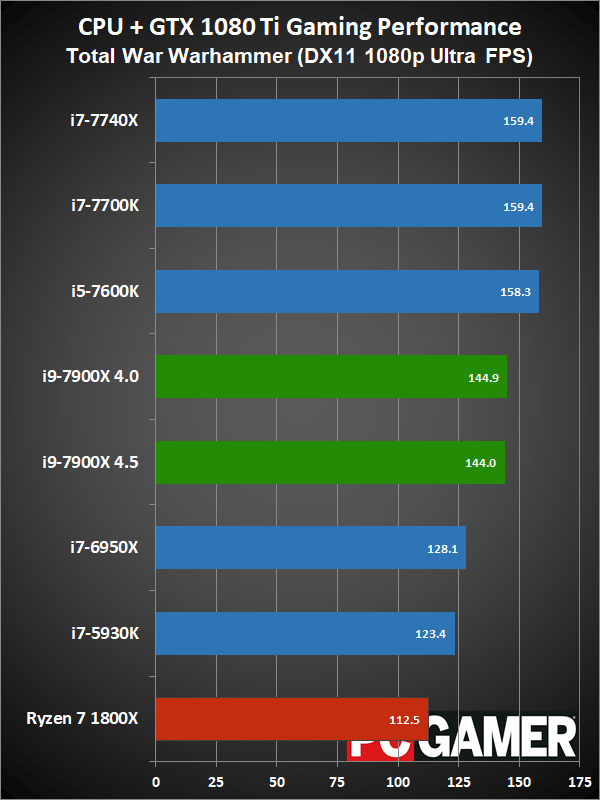

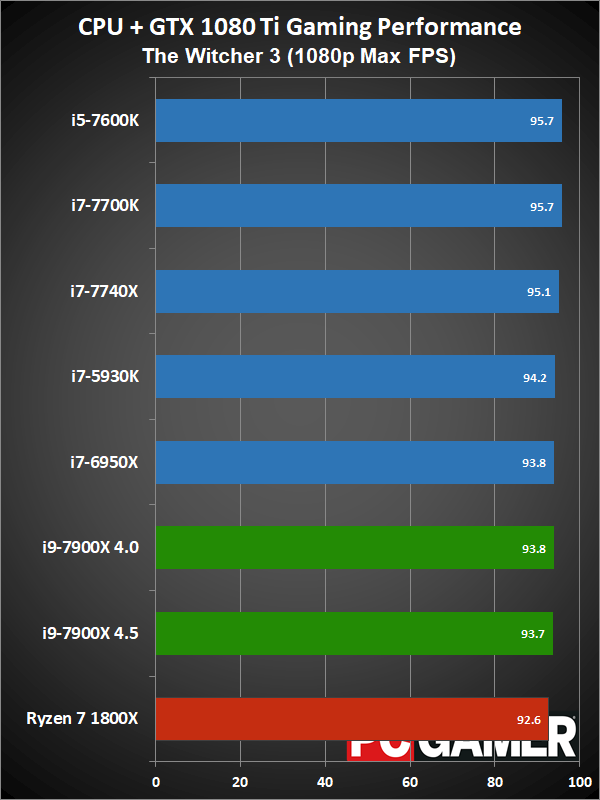

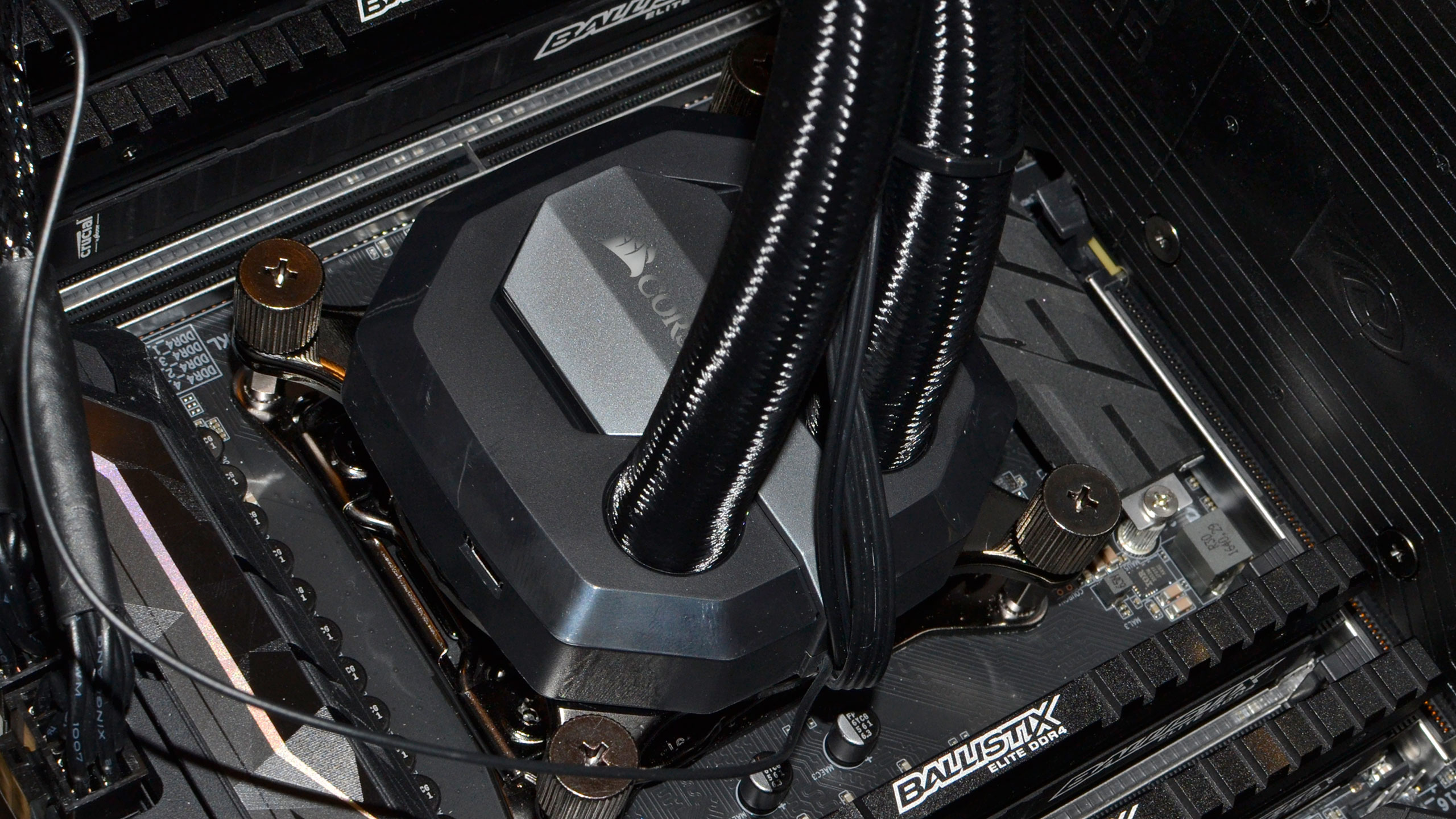

Okay, disclaimers about clockspeeds and Turbo Boost aside, here are the current results. The test hardware has been updated to use a GTX 1080 Ti FE, and all systems were tested with 16GB of DDR4 memory (DDR4-2667 on the X99/X299 platforms, and DDR4-3200 on AM4 and LGA1151). I have two results for the i9-7900X: one with it running at a conservative 4.0GHz, and one at an aggressive 4.5GHz. The 4.0GHz clockspeed is what you should see at 'stock' in heavily threaded workloads, while the 4.5GHz results better represent lighter workloads.

As for the Kaby Lake-X i7-7740X, performance should be 'final' with the current BIOS. In testing, it ran at a constant 4.5GHz, but the chip is rated to do just that so it's not a problem. However, firmware can affect more than just clockspeeds, so I'm still waiting to test a couple more boards before providing the full review of the i7-7740X. And with the caveats out of the way, here are the current performance results, starting with CPU-centric workloads:

There are no surprises here. With a 10-core clockspeed of 4.0GHz as standard, plus architectural enhancements, the i9-7900X easily beats any and all current contenders. I'll be adding a few more CPUs to the charts over the next week (my plan is the i7-6900K and i7-6850K for Intel, and the Ryzen 7 1700, Ryzen 5 1600X, and Ryzen 5 1500X for AMD), but in CPU-centric workloads Skylake-X proves compelling. If you do work that requires large amounts of multi-threaded processing, the upgrade in performance over the i7-6950X is substantial; the gap isn't quite as large if you overclock both CPUs, but it's still there. Single-threaded performance in Cinebench is also good, at least if we take the 4.5GHz result as the expected outcome. The Civilization VI AI benchmark, meanwhile, shows that the game doesn't scale quite as well with multi-threaded CPUs as we might have hoped.

The i9-7900X also has a clear advantage over the i7-6950X in that it 'only' costs $999, compared to $1,723 (on 'sale' for just $1,560 right now). But one thing you might not expect is that the i9-7900X (at least on the test platforms) uses more power than the i7-6950X, sometimes by a rather large margin. Idle system power is similar, around 140-145W, but a pure CPU workload like Cinebench pulled well over 75W more than the previous generation. That might go back to the BIOS and firmware issues again, but it's a surprising difference considering both processors have a 140W TDP rating.

Moving on to gaming performance, at 4.5GHz the i9-7900X comes in just ahead of the i7-7740X—which is basically tied with the i7-7700K. At 4.0GHz, however, it falls a few percent below the 7700K, but still beats the 6950X and 7600K. The i9-7900X is definitely fast enough for gaming, but the extra cores aren't always very useful.

Looking at individual results, three of the 16 games I tested show a clear benefit from CPUs with more than 4 cores/8 threads: Ashes of the Singularity: Escalation (DX12), Dishonored 2 (DX11), and Hitman (DX12), and possibly Mass Effect: Andromeda to a small degree. Other games clearly prefer the higher clockspeeds of the 4-core parts, with Total War: Warhammer and Far Cry Primal showing a particularly large gap between LGA1151 and LGA2011/2066 parts. It may be that those games just happen to be heavily tuned for Intel's 4-core design, or that the new mesh architecture and changes in cache latency are coming into play, but overall there's not a huge benefit for most games in going from 4-core/8-thread to 10-core/20-thread (or even 6-core/12-thread).

But that's if you're running a relatively clean Windows 10 installation. If you're doing other things in the background, particularly livestreaming, more cores can help smooth things out. CPU-based video encoding of a livestream in OBS, for example, will definitely benefit from all those cores.

I also want to point out that this is gaming at 1080p, mostly maximum quality, with the fastest single GPU gaming solution outside of the Titan Xp. I've selected the resolution and GPU as a balance between testing at 1080p medium or high quality, where the CPU might play a larger role in limiting framerates, and 1440p ultra, where the GPU would be the major bottleneck. Here there's still a chance for CPUs to improve performance, at least in about half of the games. If we swap out the GTX 1080 Ti for a GTX 1070, even with reduced quality settings, the gap between the CPUs will narrow quite a bit.
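
The reasoning about GPU choice can be sketched with a crude bottleneck model: the framerate is capped by whichever of the CPU or GPU is slower. The CPU and GPU limits below are made-up numbers for illustration, not results from my testing, and real games rarely behave this cleanly.

```python
def frame_rate(cpu_limit_fps: float, gpu_limit_fps: float) -> float:
    """Crude bottleneck model: the slower component sets the framerate."""
    return min(cpu_limit_fps, gpu_limit_fps)


# Made-up framerate limits for two hypothetical CPUs and two hypothetical GPUs.
cpus = {"fast CPU": 140, "slower CPU": 120}
gpus = {"faster GPU": 150, "slower GPU": 100}

for gpu, gpu_fps in gpus.items():
    results = {cpu: frame_rate(cpu_fps, gpu_fps) for cpu, cpu_fps in cpus.items()}
    print(gpu, results)
```

With the faster GPU the two CPUs land 20fps apart, but with the slower GPU both are capped at 100fps and the gap disappears.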

Initial thoughts on Skylake-X and X299

I've been looking forward to Skylake-X for a while, mostly because my main graphics card testbed is nearing three years old and I wanted a good reason to replace it. More cores, higher clockspeeds, and improved performance per clock are all good things. Lower prices relative to Broadwell-E are also welcome news. But there's a lot going on here, and as with most new platform launches, we're still in the early days.

Compared to AMD's Ryzen launch, Skylake-X hasn't been quite as rough. There are problems for sure, but outside of overclocking I haven't had any system crashes, and memory compatibility has been good with the few modules I've tried. But doing a scored review on day one of a new platform is premature—and yes, I'm aware I haven't provided fully scored reviews of the Ryzen CPUs either. I aim to correct that in the coming weeks, once things have settled down a bit.

Besides the platform, however, I'm more than a bit irritated by Intel's decision to limit the 6-core and 8-core Skylake-X parts to 28 PCIe lanes. The i7-5820K and i7-6800K always felt like silly market segmentation, where you had to spend $150 extra to get a minor bump in clockspeed and an additional 12 PCIe lanes. Now, though, it's obvious Intel intends to reserve high PCIe lane counts for its high-end parts: if you want 44 PCIe lanes, the only Intel parts that provide them are the Core i9 models, which start at $999. AMD will be offering 60 PCIe lanes on all Threadripper parts, but we still don't know exactly how well they'll perform or how much they'll cost.

But do you need more than 28 PCIe lanes? For most gamers, the answer is easy: nope. Only dual GPUs really benefit, and even then the benefit is pretty small. And there are plenty of games that don't even properly support multiple GPUs, making that an increasingly niche market. If you want the best and are willing to pay for it, Skylake-X and the i9-7900X make a strong case… but you really will pay for it, at twice the price of the Ryzen 7 1800X, and over three times the cost of the Ryzen 7 1700.
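
A quick lane budget shows why dual GPUs are the main case where 28 lanes get tight. The device list is a hypothetical build, and the sketch ignores lanes provided by the X299 chipset itself, so it's a simplification rather than how any particular motherboard wires its slots.

```python
from typing import Dict


def lane_budget(cpu_lanes: int, devices: Dict[str, int]) -> int:
    """CPU PCIe lanes left over; negative means the devices don't all fit at full width."""
    return cpu_lanes - sum(devices.values())


single_gpu = {"GPU": 16, "NVMe SSD": 4}
dual_gpu = {"GPU 1": 16, "GPU 2": 16, "NVMe SSD": 4}

for lanes in (28, 44):
    print(lanes, lane_budget(lanes, single_gpu), lane_budget(lanes, dual_gpu))
```

A single GPU plus an NVMe drive fits easily in 28 lanes with 8 to spare, while two x16 cards come up 8 lanes short, which in practice means running the second card (or both) at x8.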

For gaming, you'd be better off putting more money into your GPU rather than upgrading to a 10-core processor. For professionals, though, this is the fastest prosumer chip this side of the i9-7980XE and Threadripper. But maybe think about waiting until October, when the i9-7980XE is actually available and we're hopefully past the teething stage for X299 motherboards.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.