The Large Hadron Collider is sucking in graphics cards at a rapidly increasing rate

Thanks to the processing efficiency of the modern graphics chip, more and more LHC experiments are turning to GPU processing.

Don't panic: the folk at CERN aren't hurling graphics cards into one another beneath Switzerland to see what happens when GPU particles collide. They're actually using Nvidia's graphics silicon to cut down the amount of energy needed to compute what happens when the Large Hadron Collider (LHC) collides other stuff.

Particles and things. Beauty quarks. Y'know, science stuff.

It's no secret that, while the humble GPU was originally conceived for the express purpose of chucking polygons around a screen as efficiently as possible, the parallel processing prowess of modern graphics chips also makes it an incredibly powerful tool for the scientific community. And an incredibly efficient one, too. Indeed, ALICE (A Large Ion Collider Experiment) has been using GPUs in its calculations since 2010, and its work has now encouraged their increased use across other LHC experiments.

The potential bad news is that it does mean there's yet another group desperate for the limited amount of GPU silicon coming out of the fabs of TSMC and Samsung. Though at least this lot will be using it for a loftier purpose than mining fake money coins.

Right, guys? You wouldn't just be mining ethereum on the side now, would you?

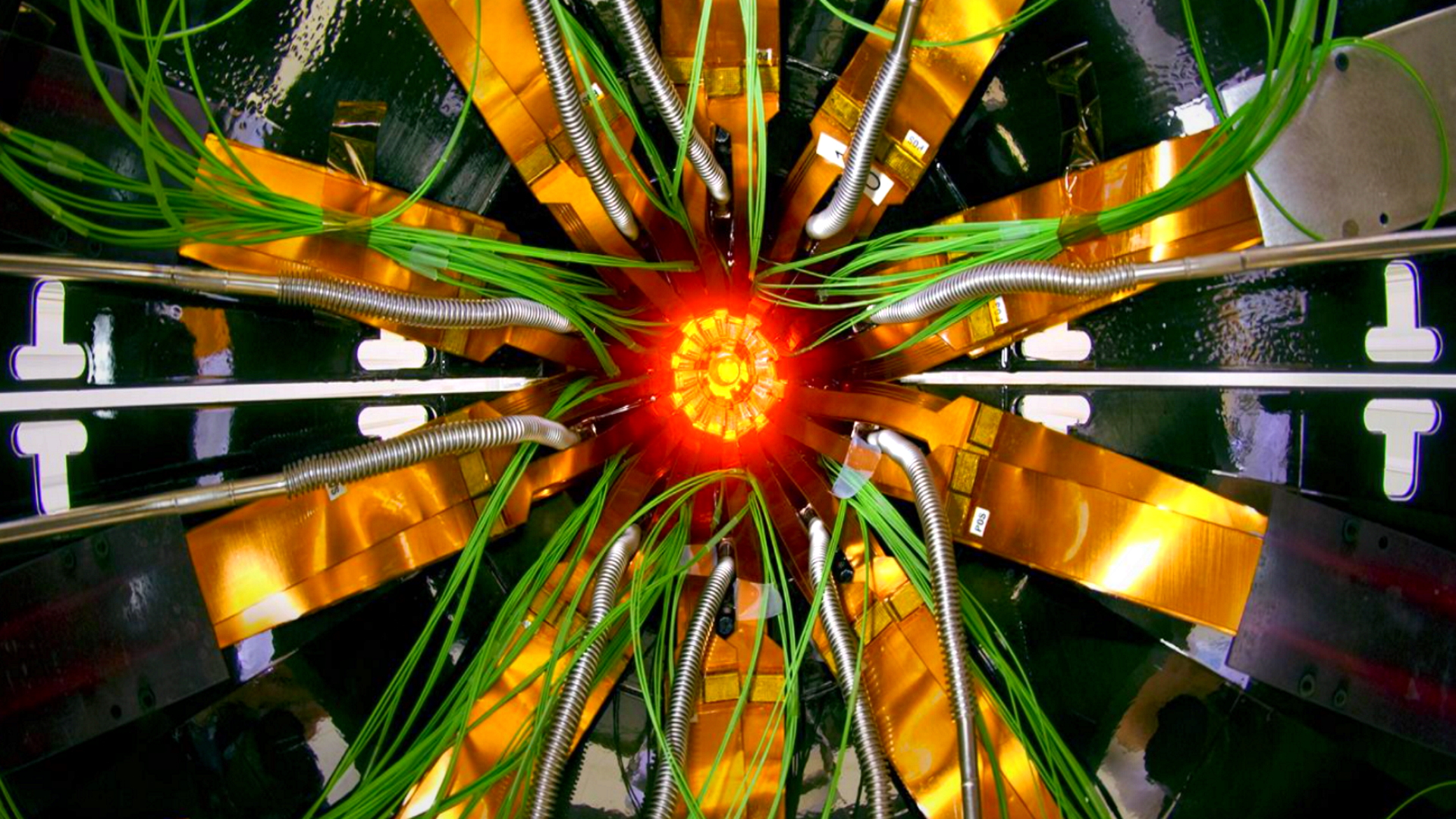

On the plus side, the CERN candidate nodes are currently using last-gen tech. For the upcoming LHC Run 3, in which the machine is recommissioned for a "three-year physics production period" after a three-year hiatus, the pictured nodes use a pair of AMD's 64-core Milan CPUs alongside two Turing-based Nvidia Tesla T4 GPUs.

Okay, no-one tell them how much more effective the Ampere architecture is in terms of straight compute power, and I think we'll be good. Anyway, by CERN's calculations, if it were using purely CPU-based nodes to process the data, it would need about eight times as many servers to run its online reconstruction and compression algorithms at the current rate. Which means it's already feeling pretty good about itself.

Given that such efficiency increases do genuinely add up for a facility that's set to run for three years straight, shifting more and more over to GPU processing seems like a damned good plan. Especially because, from this year, the Large Hadron Collider beauty (LHCb) experiment will be processing a phenomenal 4 terabytes of data per second in real time. Quite apart from the name of that experiment—so named because it's checking out a particle called the "beauty quark"🥰—that's a frightening amount of data to be processing.

"All these developments are occurring against a backdrop of unprecedented evolution and diversification of computing hardware," says LHCb's Vladimir Gligorov, who leads the Real Time Analysis project. "The skills and techniques developed by CERN researchers while learning how to best utilise GPUs are the perfect platform from which to master the architectures of tomorrow and use them to maximise the physics potential of current and future experiments."

Damn, that sounds like he has got at least one eye on more recent generations of Nvidia workstation GPUs. So I guess we will end up fighting the scientists for graphics silicon after all.

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.