Straight Outta Comp-Sci: now AI voice actors can rap

"We like to keep it classy like Helvetica."

On his track Rap God, the effervescently witty Eminem takes aim at one common criticism of his delivery style: "They said I rap like a robot so call me Rap-bot—But for me to rap like a computer must be in my genes, I got a laptop in my back pocket..." and so on.

It's a familiar charge in hip-hop, applied to many less famous than Eminem. Calling a delivery style 'robotic' is a big diss: your delivery is monotonous, the rhymes are obvious, you don't please the ear.

Welp, looks like we may need to re-think things, thanks to an AI voice company called Replica and the semi-cursed result of its employees messing around in what was apparently a 'hackathon' (thanks, Verge).

Remarkably enough, the rap itself comes from one individual, split across the various AI actors. Replica employee Shreyas told PCG that "One Replica team member performed all the rap bits. After passing his source recording through our system, we then can apply the tone/cadence/performance onto any of our 50+ (and growing) AI voices."

It's got some funny lines, too.

"So maybe you can see that I'm a virtual actor,

And I'm breaking new ground like a literal tractor."

Not bad, though what would make it perfect is an AI with the voice of Alan Partridge, Norfolk's greatest DJ.

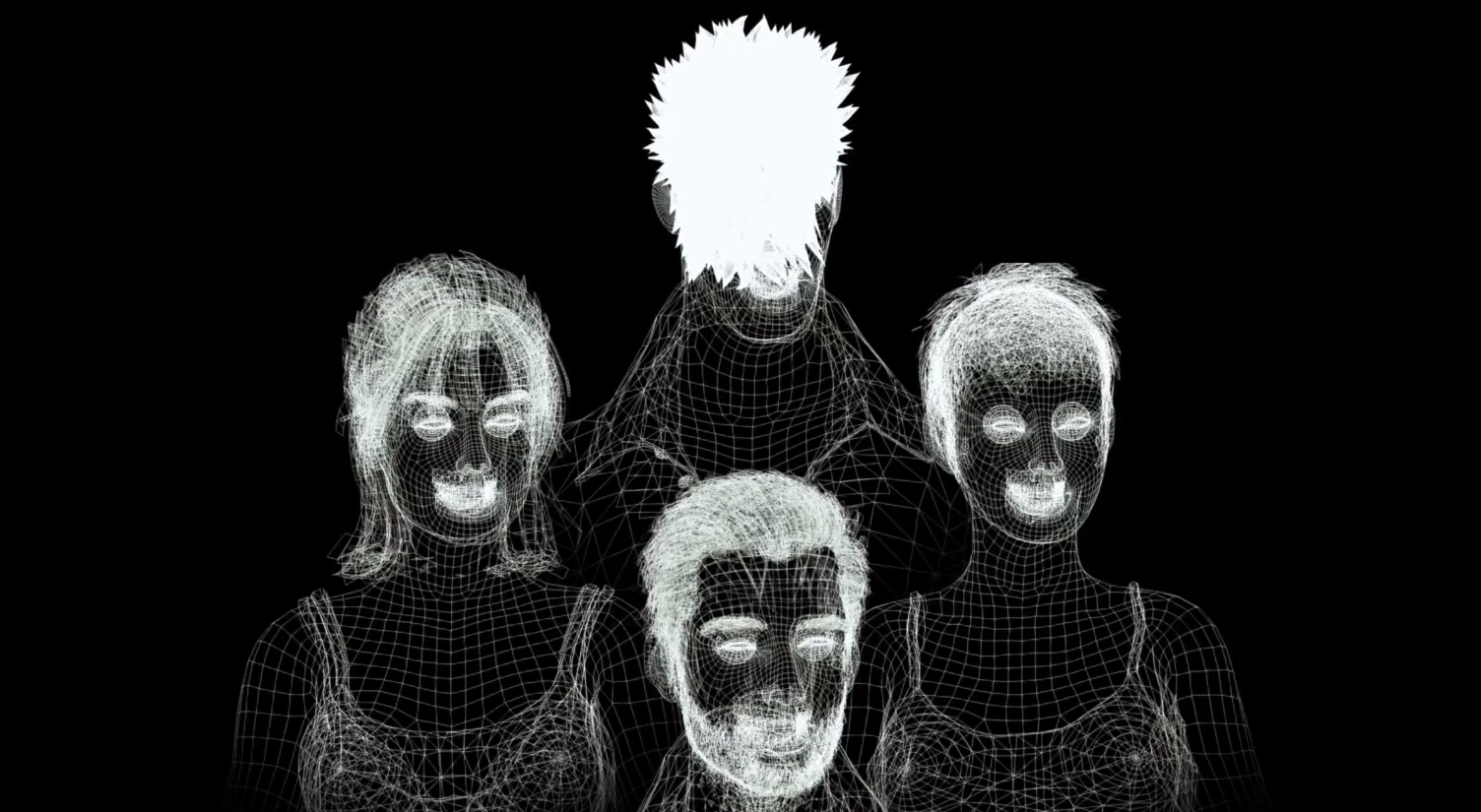

"The video itself is the result of something produced internally during a hackathon, using a feature that's currently under development," explains Shreyas. "The feature itself is purely about applying the same cadence/timing/delivery from a source recording onto any of our AI voices. The fact that it can actually be pushed so far as to pull off a rap is very cool—and an unintentional consequence of the feature that we were originally only intending for adding perfectly timed lip-sync to a video or 3D animation. For example, here's the same feature applied to Unreal Engine's Metahumans."

The feature is due to launch later this year, after debuting at GDC in July. The idea is to let developers have a large cast of voice-acted characters, with accurate lip-syncing and intonation, without having to individually animate and record every line. Depending on the results, it could be a huge advance for smaller developers: we're all laughing at the virtual rappers now, but in a few years this tech could be in half the games we play.

Rich is a games journalist with 15 years' experience, beginning his career on Edge magazine before working for a wide range of outlets, including Ars Technica, Eurogamer, GamesRadar+, Gamespot, the Guardian, IGN, the New Statesman, Polygon, and Vice. He was the editor of Kotaku UK, the UK arm of Kotaku, for three years before joining PC Gamer. He is the author of A Brief History of Video Games, a full history of the medium, which the Midwest Book Review described as "[a] must-read for serious minded game historians and curious video game connoisseurs alike."