'Sputtering' atom-thick copper could give us cheaper PC chips

It's no surprise that ditching gold from chip manufacturing makes it cheaper, but copper could also cut power use and make chips last longer.

The gold standard of semiconductors looks like it might just be copper. Really, really thin bits of copper. A team of scientists from multiple universities, across South Korea and the US, have collaborated to find a way to create thin films of copper that resist corrosion and come in at just a single atom thick. But why should we give a pair of silicon ingots about some flimsy copper sheets?

Because there's the very real possibility that, with this new method, dropping skinny copper films into the semiconductor industry could drive costs down, reduce the amount of power devices demand, and potentially increase the lifespan of such devices, too. And it's all about ridding chips of that pesky gold stuff.

That's like a triple-whammy of good things at a time when shrinking down transistors is getting harder and harder, and is not necessarily the magic bullet for keeping Moore's Law ticking along.

"Oxidation-resistant Cu could potentially replace gold in semiconductor devices," says Professor Se-Young Jeong, project lead at Pusan National University, "which would help bring down their costs. Oxidation-resistant Cu could also reduce electrical consumption, as well as increase the lifespan of devices with nanocircuitry."

You can find gold used inside processors and other components on your motherboard, even in PCBs themselves, and it's used because of its electrical conduction properties. It's also resistant to the effects of oxidisation, but damn is it expensive.

The collaboration between Pusan National University and Sungkyunkwan University, in South Korea, and Mississippi State University, in the USA, has created a method for manufacturing this atom-thick sheet of copper, and hence a way to stop it from oxidising and therefore corroding.

Copper is also one hell of a good conductor, which is why we use it in PC cooling, but it's not just good at conducting heat; it's good at conducting electricity, too. You'll find copper used in all sorts of electronics, but the issue is that, because of corrosion, its lifespan isn't necessarily as long as that of other metals with similar characteristics.

The biggest gaming news, reviews and hardware deals

Keep up to date with the most important stories and the best deals, as picked by the PC Gamer team.

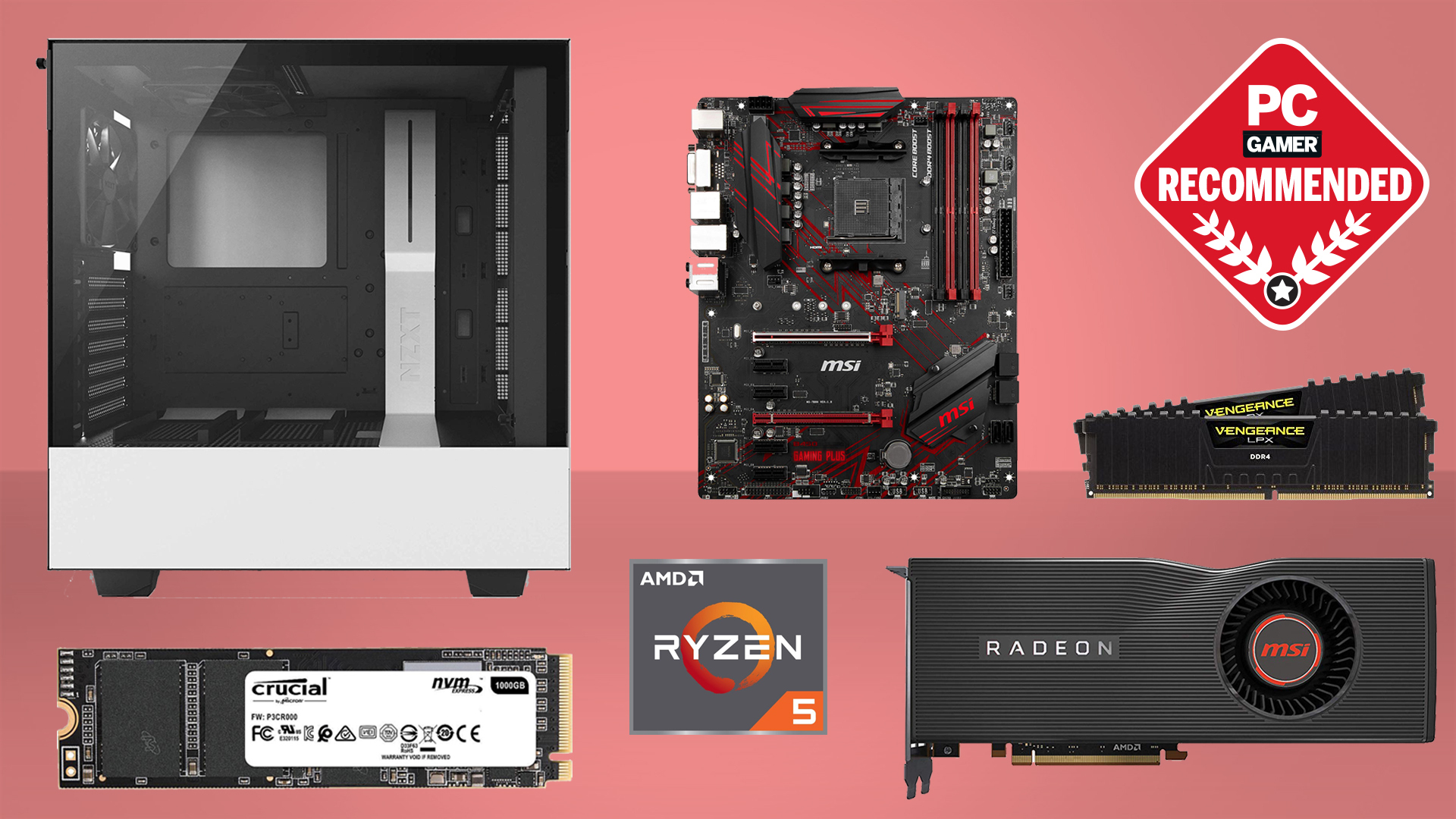

Best CPU for gaming: The top chips from Intel and AMD

Best gaming motherboard: The right boards

Best graphics card: Your perfect pixel-pusher awaits

Best SSD for gaming: Get into the game ahead of the rest

By creating what it calls "nearly defect-free", one-atom-thick, single-crystal copper sheets, the team can get around the oxidisation issue entirely. The child in me is also stupidly pleased about the phrasing of "atomic sputtering epitaxy" as the method of actually achieving the copper film, because to my ignorant brain it reads like some scientist just sneezed.

The full study can be found in the journal Nature, and it's worth noting that the findings aren't just going to benefit us PC gamers through future copper-tinged chips: your favourite bronze sculptures could see some protection because of this method, too. I'm into The Thinker, because who doesn't love a big pensive naked lad on a plinth?

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.