OpenAI plans to build its own AI chips on TSMC's forthcoming 1.6 nm A16 process node

The report suggests that Broadcom or Marvell will design the chip, but Apple might be a partner, too.

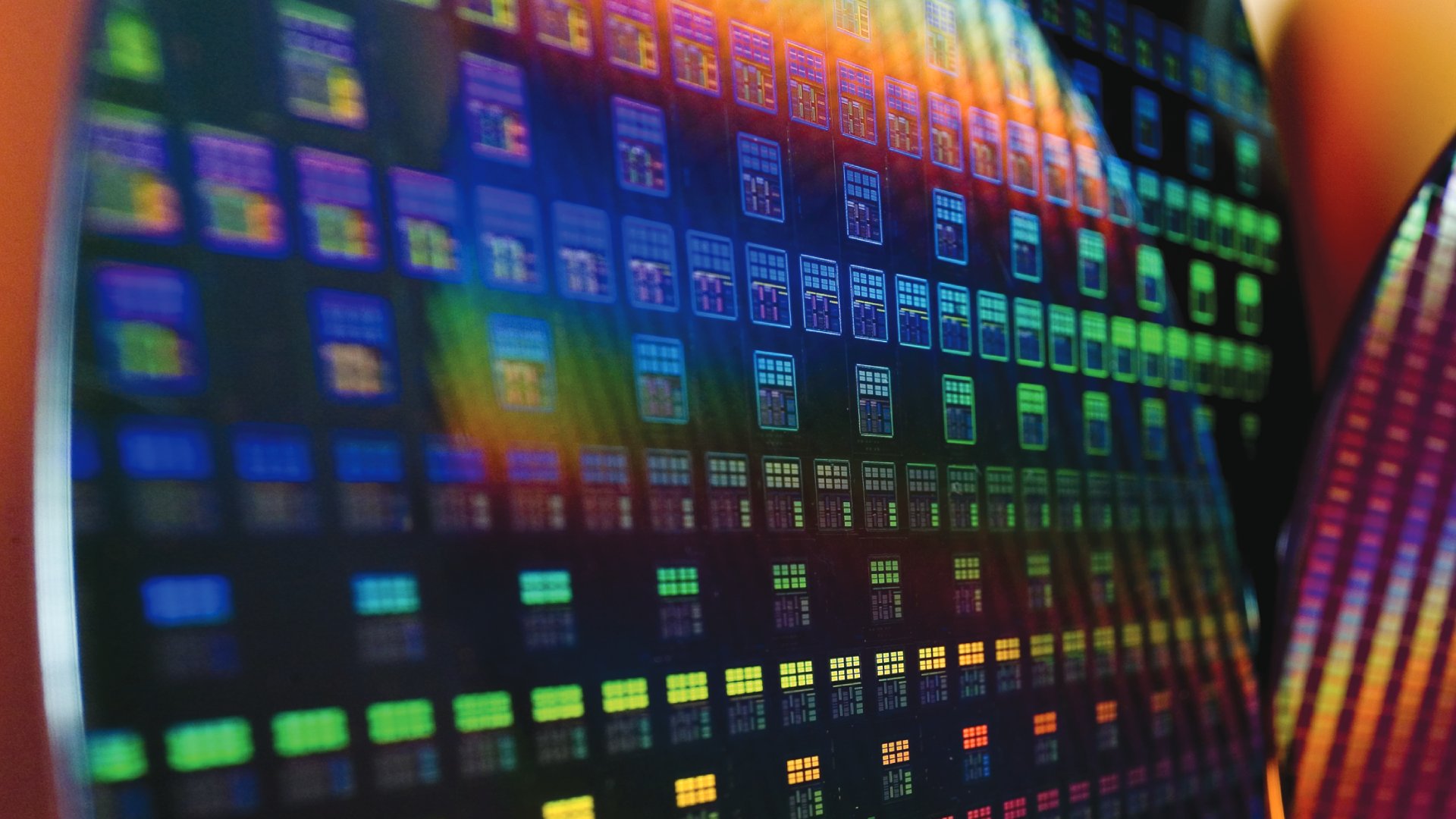

ChatGPT developer OpenAI has been musing over building its own AI chips for some time now but it looks like the project is definitely going ahead, as United Daily News reports the company is paying TSMC to make the new chips. But rather than using its current N4 or N3 process nodes, OpenAI has booked production slots for the 1.6 nm, so-called A16, process node.

The report from UDN (via Wccftech) doesn't provide any concrete evidence for this claim but the Taiwanese news agency is usually pretty accurate when it comes to tech forecasts like this. At the moment, OpenAI spends vast amounts of money to run ChatGPT, in part due to the very high cost of Nvidia's AI servers.

Nvidia's hardware dominates the industry, with Alphabet, Amazon, Meta, Microsoft, and Tesla spending hundreds of millions of dollars on its Hopper H100 and Blackwell superchips. Designing and developing a competitive AI chip is just as expensive, but once you have a working product, the ongoing costs are much lower.

UDN suggests that OpenAI had originally planned to use TSMC's relatively low-cost N5 process node to manufacture its AI chip but that's apparently been dropped in favour of a node that's still in development. A16 will be the successor to N2, which itself isn't being used to mass produce chips yet.

TSMC states that A16 is a 1.6 nm node but the number itself is fairly meaningless now. It will use the same gate-all-around (GAAFET) nanosheet transistors as N2 but will be the first TSMC node to employ backside power delivery, called Super Power Rail.

But why would OpenAI want to use something that's still a few years away from being ready for bulk orders? UDN's report suggests that OpenAI has approached Broadcom and Marvell to handle the development of the AI chips but neither company has much experience with TSMC's cutting-edge nodes, as far as I know.

One possibility is that the whole project is being done in collaboration with Apple, which uses ChatGPT in its own AI system. That's currently powered via Google's AI servers but given how much Apple prefers using its own technology these days, I wouldn't be surprised if it was also looking to develop new AI chips.

With mounting losses and an AI market packed with competitors, OpenAI's future looks somewhat uncertain, though rumours of investment from Apple and Nvidia may help turn things around. The regular influx of millions of dollars of funding is certainly helping it maintain its valuation, for example.

But if OpenAI is ultimately bought by Microsoft, Meta, or even Nvidia (or perhaps part-owned by all three), then it's unlikely that the OpenAI chip project would ever be finished, as Nvidia certainly wouldn't want to lose any valuable sales.

Even if it does come to fruition, don't expect it to make any performance headlines outside of handling GPT, simply because that's the nature of all ASICs (application-specific integrated circuits): they're designed to do one job very well but that's it. OpenAI's chip might be great for OpenAI but few other companies would be interested.

Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?