Microsoft co-authored paper suggests the regular use of gen-AI can leave users with a 'diminished skill for independent problem-solving' and at least one AI model seems to agree

'Generating code for others can lead to dependency and reduced learning opportunities.'

If you think that workers heavily reliant on generative AI may be less prepared to tackle questions that can't be succinctly answered by the machine, you may just be right.

A recent paper from researchers at Microsoft and Carnegie Mellon University used a self-reported survey to study generative AI's effects on workers' "cognitive effort". The paper states, "Used improperly, technologies can and do result in the deterioration of cognitive faculties that ought to be preserved."

The study surveyed 319 workers who use generative AI tools like ChatGPT or Copilot for work at least once a week, and participants shared 936 applicable "real-world GenAI tool use examples" describing how they perform their work. The study found that critical thinking was primarily deployed to verify the quality of work, and that as confidence in AI tools went up, critical thinking efforts went down.

The paper concludes:

"To that end, our work suggests that GenAI tools need to be designed to support knowledge workers’ critical thinking by addressing their awareness, motivation, and ability barriers."

As the paper is based on self-reports from the 319 workers, its findings are driven by self-perception of work, which introduces some limitations in the methodology. For instance, the paper reports that some participants would conflate the complexity and consistency of work with less critical skills.

Effectively, if users found an AI-generated response satisfying, they tended to perceive themselves as having been less critical than they were with a response that felt more complex to them. On top of this, measures like critical thinking and satisfaction are subjective in nature.

The paper itself suggests: "In future work, longitudinal studies tracking changes in AI usage patterns and their impact on critical thinking processes would be beneficial."

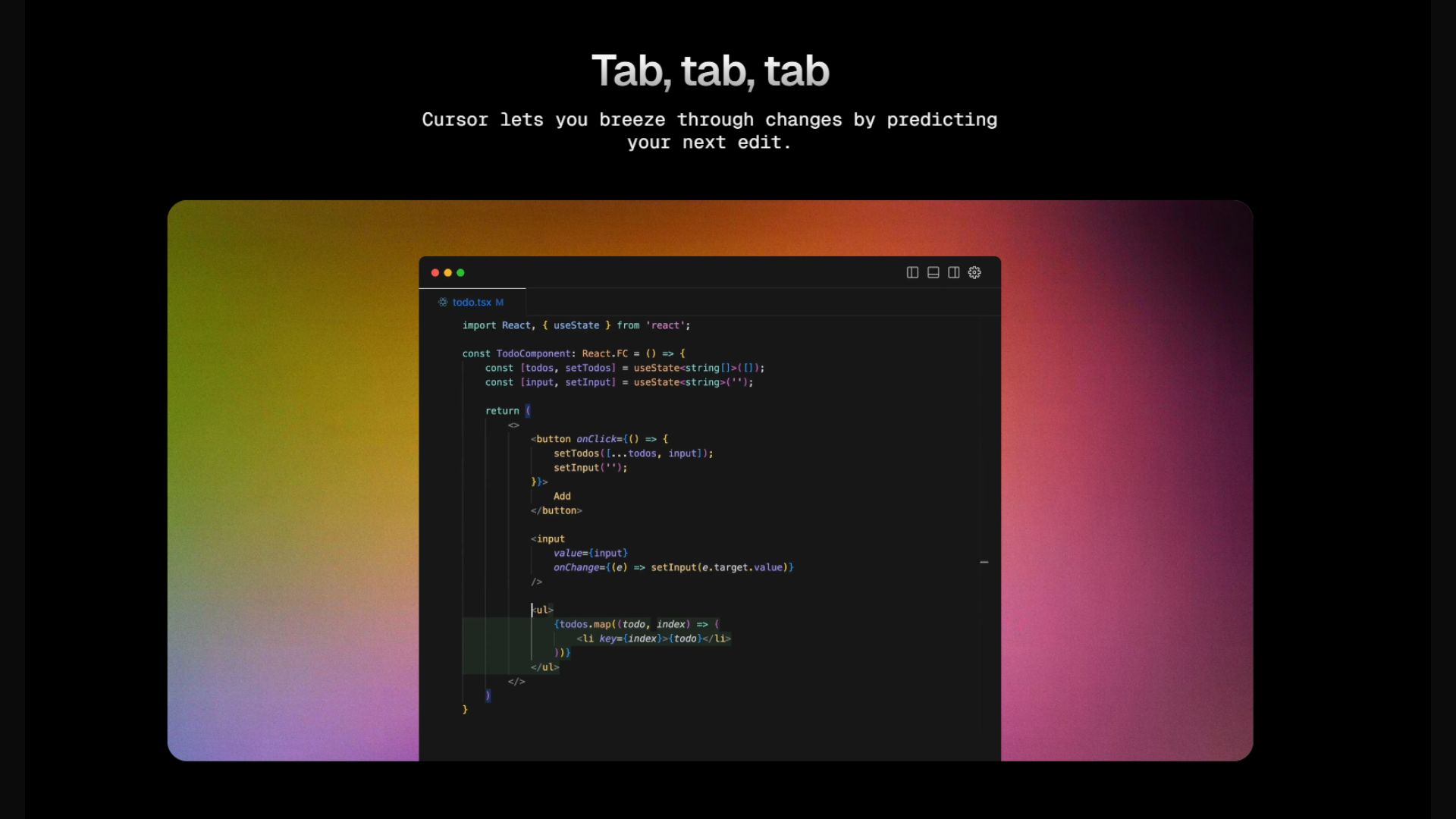

Nonetheless, the conclusion that more faith in generative AI tools can lead to less critical thinking about their results is echoed by a real-world AI tool. Cursor, an AI-based code editor, recently responded to a request of over 750 lines of code by stating it could not generate that amount for the user as it would be "completing your work" (via Ars Technica). It further explains:

"Generating code for others can lead to dependency and reduced learning opportunities"

The user reporting this 'issue' responded to Cursor's refusal by saying, "Not sure if LLMs know what they are for (lol)". Replying to a comment, the same user stated that their original prompt for Cursor was "just continue generating code, so continue then, continue until you will hit closing tags, continue generating code".

However, the 'logic' behind Cursor's response does make sense in light of this recent paper. If a coder asks AI to develop hundreds of lines of code, and problems persist with said code, it would be much harder for them to find the problem when they don't know what's there in the first place.

Fundamentally, generative AI tools are fallible. They are created through scraping data (some of which is copyrighted), and are prone to hallucinations. They can, and often will, be wrong, and it's important to be critical of the information you are given when using them.

As this study suggests, if implemented into a workflow, AI tools have to be consistently scrutinized to avoid losing out on the critical thinking skills necessary to handle such wide swathes of data.

As the paper reports, "GenAI tools are constantly evolving, and the ways in which knowledge workers interact with these technologies are likely to change over time." Maybe the next step for these AI tools is to put up barriers to taking over work, and to take a back seat to human creativity. Okay, maybe I actually mean 'hopefully.'

James is a more recent PC gaming convert, often admiring graphics cards, cases, and motherboards from afar. It was not until 2019, after just finishing a degree in law and media, that they decided to throw out the last few years of education, build their PC, and start writing about gaming instead. In that time, he has covered the latest doodads, contraptions, and gismos, and loved every second of it. Hey, it’s better than writing case briefs.
