The Tony Blair Institute 'did not simply ask ChatGPT for the results' of its AI impact report, it trained its own version of ChatGPT instead

If there's one thing I trust, it's AI predictions. Said nobody ever.

Updated July 12, 2024: The Tony Blair Institute for Global Change has been in touch to say we have oversimplified the nature of its research into the value of AI in the public sector workforce. A TBI spokesperson has told us that "we did not simply ask ChatGPT for the results" and that it built its approach "on previous academic papers and empirical research."

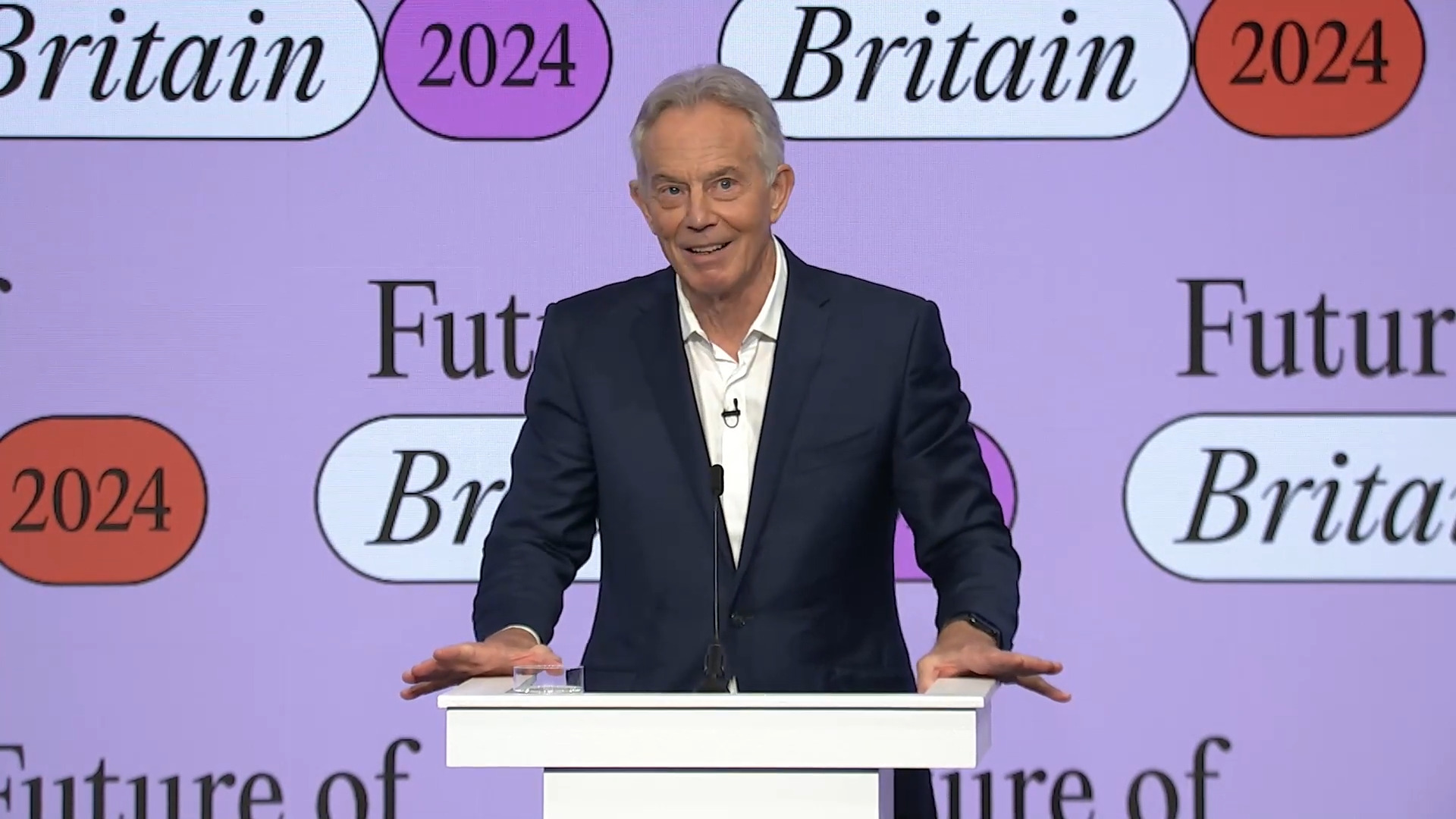

The TBI also wanted to note that Tony Blair himself was fully aware of the methods used to create the data, and that he said at the Future of Britain conference that "there is no definitive precision in these types of predictive figures." His statement echoes the TBI report, which acknowledges that LLMs "may or may not give reliable results." Then again, any attempt to gaze into the future can never be 100% accurate, whether it comes from generative AI or fallible humans.

Here's exactly how TBI says it used the GPT-4 model behind ChatGPT to find out whether AI would be a time-saver in the workplace, described in more detail than we presented it previously:

"We trained a version of ChatGPT by prompting it with a rubric of rules to help classify tasks that could (and could not) be done by AI. We refined this rubric on a test dataset of around 200 tasks where we cross-checked the output against expert assessments of AI’s current capabilities—including comparing our results with those from recent academic and empirical studies on its impact on particular tasks (e.g. Noy and Zhang, 2023)—to ensure the model was performing robustly and producing credible results that were anchored in real-world data.

"Once trained, we then used the LLM to scale up the results by applying the rubric to the 20,000 tasks in the O*NET dataset to automate the approach. We then ran several robustness checks on the outputs of that model to confirm that the time savings figures being generated again corresponded with real world applications."

The essential result, however, is the same; we still have the optics of an institute creating a research paper into the benefits of AI which heavily uses AI to tell us whether AI is going to be a good thing.

Now, AI may well have a host of genuine time-saving benefits in the public sector if properly trained models are used correctly, but given the lack of public trust in the output of today's LLMs, I think you can see why there is a degree of scepticism around this latest report. Scepticism that might not have been there had it been created using more traditional methods.


Original story, July 11, 2024: The Tony Blair Institute for Global Change, a non-profit organisation set up by the former UK prime minister, has released a paper (PDF) predicting that AI automation in public sector jobs could save a fifth of workers' time, along with a huge reduction in workforce and government costs. The findings of the paper were presented by Tony Blair himself at the opening of the 2024 Future of Britain Conference.

Just one small issue: The prediction was made by a version of ChatGPT. And as experts 404 Media interviewed about this weird ouroboros of a report have noted, AI is maybe not the most reliable source for information about how reliable, useful, or beneficial AI might be.

The Tony Blair Institute researchers gathered data from O*NET, using occupation-specific descriptors for nearly 1,000 US occupations, with the aim of assessing which of these tasks could be performed by AI. However, talking to human experts to decide which roles could be suitable for AI automation was deemed too difficult a problem to solve, so they funnelled the data into ChatGPT to make a prediction instead.

Trouble is, as the researchers noted themselves, LLMs "may or may not give reliable results." The solution? Ask it again, but differently.

"We first use GPT-4 to categorise each of the 19,281 tasks in the O*NET database in several different respects that we consider to be important determinants of whether the task can be performed by AI or not. These were chosen following an initial analysis of GPT-4's unguided assessment of the automatability of some sample tasks, in which it struggled with some assessments."

"This categorisation enables us to generate a prompt to GPT-4 that contains an initial assessment as to whether it is likely that the task can or cannot be performed by AI."

So that'd be AI deciding which jobs can be improved by AI, then concluding that AI would be beneficial. Followed by an international figure extolling the virtues of that conclusion to the rest of the world.

Unsurprisingly, those looking into the details of the report are unconvinced by the results. As Emily Bender, a University of Washington professor in the Computational Linguistics Laboratory who was interviewed by 404 Media, puts it:

"This is absurd—they might as well be shaking a Magic 8 Ball and writing down the answers it displays."

"They suggest that prompting GPT-4 in two different ways will somehow make the results reliable. It doesn't matter how you mix and remix synthetic text extruded from one of these machines—no amount of remixing will turn it into a sound empirical basis."


The findings were reported by several news outlets without any mention of ChatGPT's involvement in the paper's predictions. It's unknown whether Big Tony knew that the information he was presenting was based on less-than-reliable methods, or indeed whether he read the paper in detail himself.

While the researchers here at least documented their flawed methodology, it does make you wonder how much seemingly accurate information is being created from AI predictions, then presented as verifiable fact.

Nor, for that matter, content created by AI with just enough believability to pass without serious investigation. To prove that this article isn't an example of such content, here's a spelling mistike. You're welcome.

Andy built his first gaming PC at the tender age of 12, when IDE cables were a thing and high resolution wasn't—and he hasn't stopped since. Now working as a hardware writer for PC Gamer, Andy spends his time jumping around the world attending product launches and trade shows, all the while reviewing every bit of PC gaming hardware he can get his hands on. You name it, if it's interesting hardware he'll write words about it, with opinions and everything.

- Dave James, Editor-in-Chief, Hardware