Battlefield 5 performance analysis: ray tracing is demanding, and DX12 runs worse than DX11

Real-time ray tracing is here, and it's no surprise it requires a beefy GPU and CPU to run well.

Nvidia announced its Turing architecture and its halo feature of real-time ray tracing hardware acceleration in August and it sounded promising, but there was one major concern: When would we actually see games that could utilize the new features? We were told they would be coming soon, and there are 25 announced games already slated to use DLSS and 11 games that will use ray tracing in some fashion. But we've gone through the RTX 2080 Ti, RTX 2080, and RTX 2070 launches with not a single actual game we could use for testing. That all changed with the final arrival of the DXR patch for Battlefield 5 (and the Windows 10 October 2018 update, which was perhaps part of the delay).

Over the past month and a half, I've spent a good chunk of time testing and retesting Battlefield 5, with and without ray tracing enabled. Frankly, it's a bit of a mess. The initial performance with ray tracing was terrible, at times as low as one third the performance of non-DXR mode. Even after multiple patches and driver updates, there's still a lot of room for improvement.

DX11 performance is better than DX12 on literally every GPU I've tested, so not even AMD cards should enable DX12 mode. (Caveat: you need to enable Future Frame Rendering in DX11, but that's the default and the minor increase in input lag is worth the boost in performance.) But DXR and ray tracing require DX12, so we're getting ray tracing on top of a non-optimal rendering solution. It's pouring salt on the wound.

But I'm getting ahead of things. Let's start by talking features and performance for the PC version of Battlefield 5.

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Battlefield 5 on a bunch of different AMD and Nvidia GPUs, along with AMD and Intel platforms—see below for the full details. Thanks, MSI!

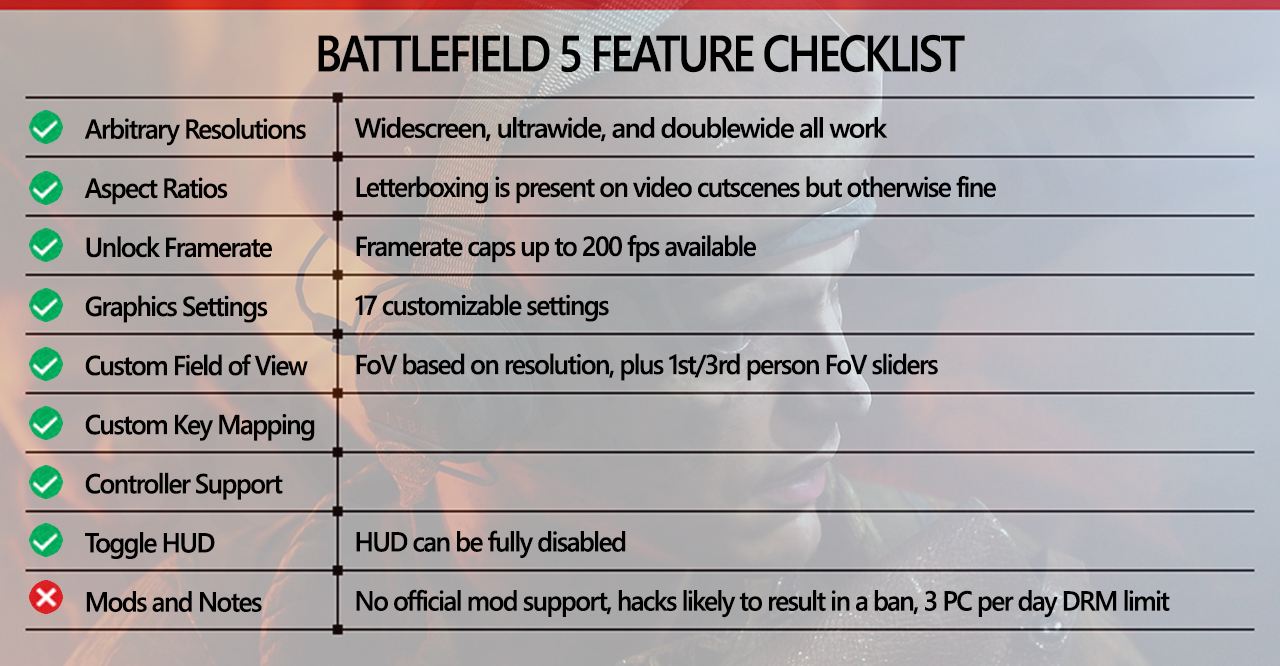

Nearly everything checks out. Yes, there's a 200fps maximum, but that's sufficient in my book. Aspect ratios and FoV options are present, as is controller support. There are loads of graphics settings to tweak, including DXR support for RTX cards, and the HUD can be fully disabled if you like. The only issues are with mods and the DRM. Mods aren't supported, and while hacks have been created, using any is likely to result in a ban, at least in multiplayer mode.

As for the DRM, most people might not notice, but I found it extremely unforgiving and annoying. You have to run the game through EA's Origin service, which is limited to actively running on one PC at a time. If you log in on a different PC, the other gets booted. That seems like a pretty effective way to limit people from playing the game on multiple PCs at the same time, and it ought to be sufficient. But no, EA takes things a step further.

I routinely get locked out of Battlefield 5 because of running it on "too many PCs." I'm not absolutely certain on this, but after extensive testing over the past month or two, I believe any change in CPU or GPU triggers EA's DRM to count something as a new PC, and you're limited to three PCs per 24 hours. Counting just the current and previous generation AMD and Nvidia GPUs, plus laptops and CPUs, I have at least 30 or so "PCs" by this metric, so 10 days minimum to get through my full test suite. And if I ever go back and retest a card, it gets counted again.
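
As a quick sketch of that math, assuming every CPU or GPU swap counts as one new "PC" and the cap really is three per 24 hours (my best guess from testing, not anything EA documents), the function name here is purely illustrative:

```python
import math


def days_needed(hardware_configs: int, pcs_per_day: int) -> int:
    """Minimum number of 24-hour windows needed to test every configuration.

    Assumes each hardware change is counted as a new "PC" and that the
    limit resets every 24 hours (both are assumptions, not documented rules).
    """
    if pcs_per_day <= 0:
        raise ValueError("pcs_per_day must be positive")
    return math.ceil(hardware_configs / pcs_per_day)


print(days_needed(30, 3))  # 10
```

Any retest of a card adds one more configuration to the count, so the real number only goes up from there.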

This is worse than Denuvo, as Denuvo at least only detects changes in CPU as a "new PC." So if this testing feels a bit delayed, at least part of the blame lies with EA's DRM. But let's talk settings.

Battlefield 5 settings and performance

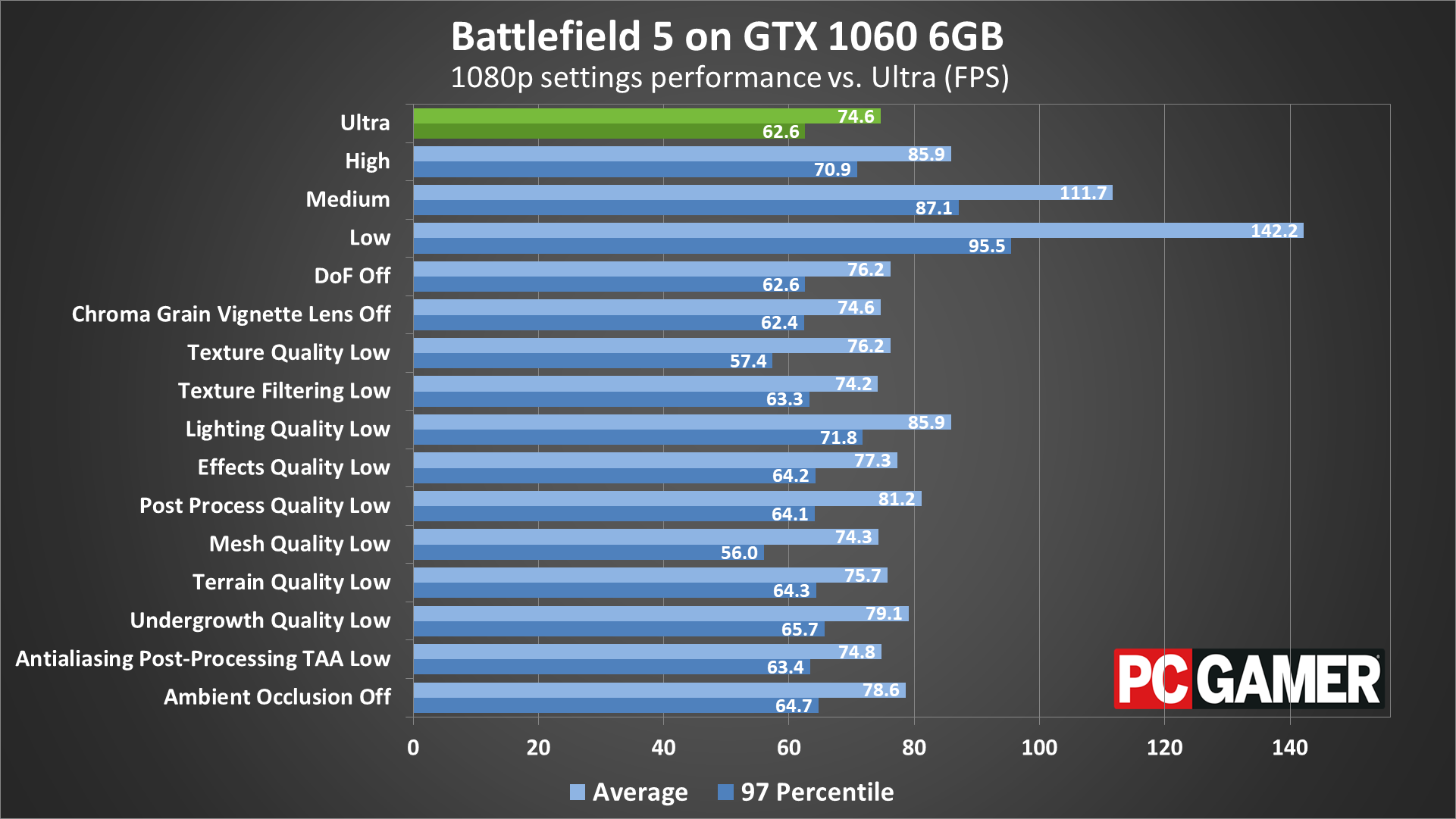

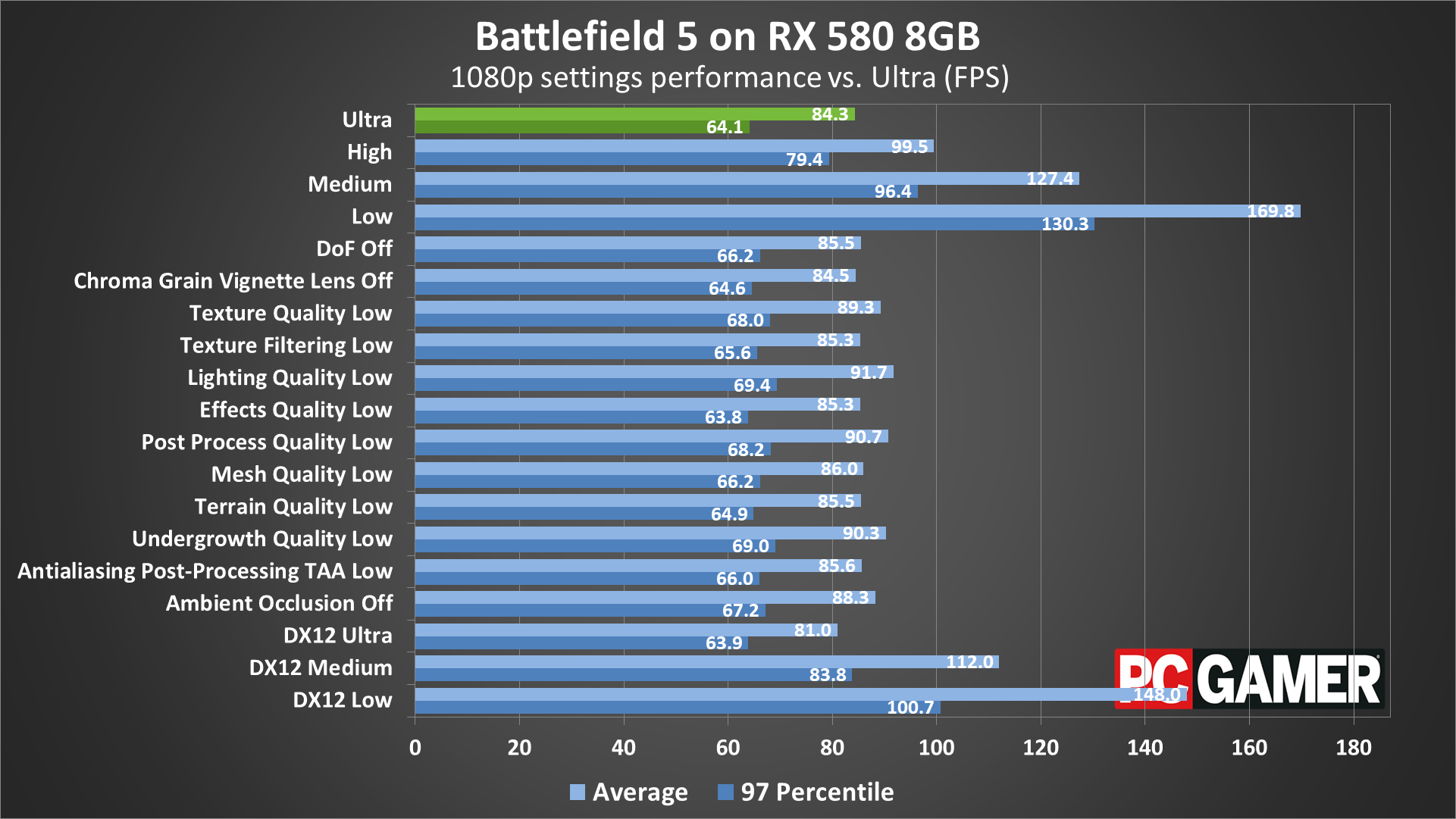

Depending on how you want to count individual graphics 'settings,' there are anywhere from about 15 to more than 20 options to adjust. Under the basic menu are the normal things like fullscreen/windowed/borderless, resolution, brightness, field of view, depth of field, and more. For testing, I lumped the two ADS settings under one test, and the chromatic aberration, film grain, vignette, and lens distortion filters under a second test. None of these make much of a difference in performance. Flip to the advanced options and there are a bunch more settings to tweak.

I've tested performance at 1080p ultra, with all of the various settings enabled, on two popular midrange GPUs, the GTX 1060 6GB and the RX 580 8GB. Then I've tested performance again by turning down each setting to its minimum option, and I'm reporting the average improvement in performance that this brings. Here are the options.

Graphics Quality: The global preset is the easiest place to start. Dropping to high boosts performance 15-20 percent, medium runs about 50 percent faster than the ultra preset, and low roughly doubles your framerates.

ADS DOF Effects: Turning off depth of field (which mostly occurs when you aim down the sights of a rifle) can improve performance by 1-2 percent.

Chromatic Aberration, Film Grain, Vignette, and Lens Distortion: Combined, disabling all four of these post-processing filters causes almost no change in performance.

Texture Quality: I measured a modest 2-5 percent improvement in performance by dropping the texture quality to low, even on cards with plenty of VRAM. Cards with less than 4GB will want to stick to the medium or low setting.

Texture Filtering Quality: Adjusts anisotropic filtering quality. This has almost no effect on performance with modern GPUs.

Lighting Quality: This is one of the most demanding settings, yielding a 10-15 percent improvement in performance.

Effects Quality: This is another setting that doesn't appear to effect performance much (sorry, bad pun).

Post Process Quality: Covers multiple post-processing effects and can boost performance by up to 8-10 percent by turning this to low.

Mesh Quality: Reduces number of polygons on objects, with a minimal impact on performance.

Terrain Quality: Adjusts the terrain detail, with little effect on performance.

Undergrowth Quality: Could also be called foliage, as lowering this reduces the amount of extra trees, shrubs, etc. Has a relatively large 6-7 percent impact on performance.

Antialiasing Post-Processing: Adjusts the amount of temporal AA that gets applied. There's no 'off' setting, and performance on TAA Low is nearly identical to TAA High.

Ambient Occlusion: Adjusts the quality of shadows at polygon intersections. Turning this off can boost performance by about 5 percent, at the cost of everything looking a bit flat.

DX12: Depending on the GPU and other settings, using DirectX 12 reduced performance by anywhere from 4 to 25 percent. Outside of RTX cards using DXR, I strongly recommend running in DX11 mode. All testing other than DXR will use the DX11 API.
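
For anyone who wants to repeat this kind of per-setting test, here is a minimal sketch of how the percentages above can be calculated: measure a baseline at ultra, measure again with one setting at its minimum, and average the gain across the test GPUs. The function names and the fps numbers are placeholders for illustration, not my measured results.

```python
def percent_gain(baseline_fps: float, tuned_fps: float) -> float:
    """Percent improvement of tuned_fps over baseline_fps."""
    if baseline_fps <= 0:
        raise ValueError("baseline_fps must be positive")
    return 100.0 * (tuned_fps - baseline_fps) / baseline_fps


def average_gain(results: list[tuple[float, float]]) -> float:
    """Average the per-GPU gains; each entry is (baseline_fps, tuned_fps)."""
    if not results:
        raise ValueError("need at least one result")
    gains = [percent_gain(baseline, tuned) for baseline, tuned in results]
    return sum(gains) / len(gains)


# Placeholder numbers only (hypothetical, one pair per test GPU).
example = [(60.0, 66.0), (50.0, 56.0)]
print(round(average_gain(example), 1))  # 11.0
```

A negative result means the change made things slower, which is how the DX12 penalty would show up if you treat DX11 as the baseline.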

Battlefield 5 GPU performance

Desktop PC / motherboards

MSI Z390 MEG Godlike

MSI Z370 Gaming Pro Carbon AC

MSI X299 Gaming Pro Carbon AC

MSI Z270 Gaming Pro Carbon

MSI X470 Gaming M7 AC

MSI X370 Gaming Pro Carbon

MSI B350 Tomahawk

MSI Aegis Ti3 VR7RE SLI-014US

The GPUs

MSI RTX 2080 Ti Duke 11G OC

MSI RTX 2080 Duke 8G OC

MSI RTX 2070 Gaming Z 8G

MSI GTX 1080 Ti Gaming X 11G

MSI GTX 1080 Gaming X 8G

MSI GTX 1070 Ti Gaming 8G

MSI GTX 1070 Gaming X 8G

MSI GTX 1060 Gaming X 6G

MSI GTX 1060 Gaming X 3G

MSI GTX 1050 Ti Gaming X 4G

MSI GTX 1050 Gaming X 2G

MSI RX Vega 64 8G

MSI RX Vega 56 8G

MSI RX 580 Gaming X 8G

MSI RX 570 Gaming X 4G

MSI RX 560 4G Aero ITX

Gaming Notebooks

MSI GT73VR Titan Pro (GTX 1080)

MSI GE63VR Raider (GTX 1070)

MSI GS63VR Stealth Pro (GTX 1060)

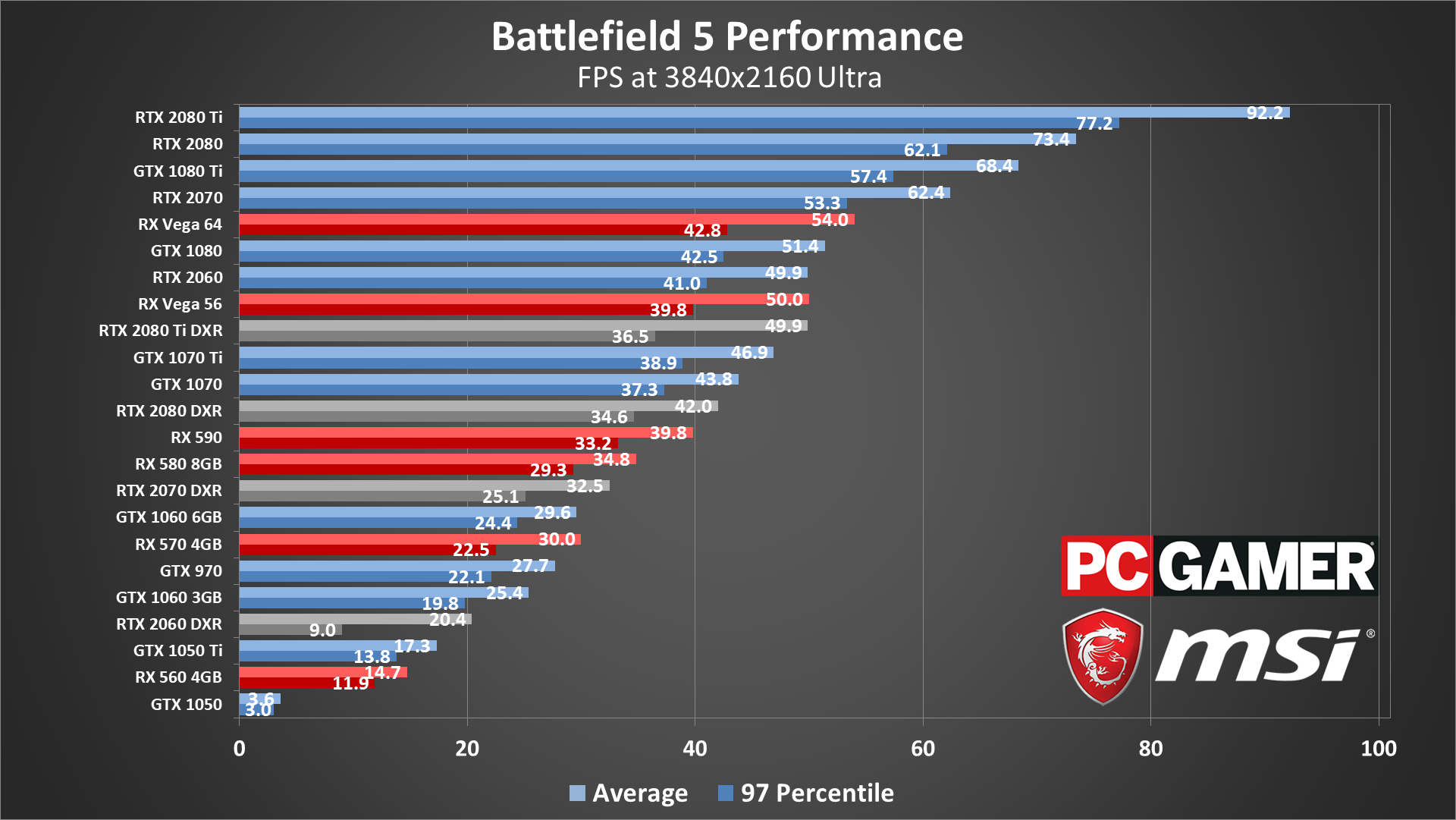

Okay, enough rambling. How does Battlefield 5 perform, with and without ray tracing? And how does it look? I ran ray tracing benchmarks on the three RTX GPUs, using the low/medium/ultra presets at 1080p, with ultra at 1440p and 4k. I used the same settings (but without DXR) on all non-RTX cards. I'm still running additional tests, as I have been repeatedly locked out of the game with a message about using too many different PCs (damn DRM), and will update the charts with the remaining results in the coming days.

All the testing was done on a Core i9-9900K running at stock, which means 4.7GHz on all eight cores. I ran the DirectX 11 version, except when testing the DXR effects, which require DX12. For ease of testing (i.e., no server issues to worry about, no multiplayer messiness where I keep dying during testing, repeatability, etc.), all testing was done in a singleplayer mission, during the Tirailleurs War Story. I did limited testing in multiplayer as well, and generally found it to be more demanding and more prone to stutters, especially with DXR enabled.

The singleplayer setting is where I see ray tracing being far more useful in the near term anyway. In multiplayer, especially a fast-paced shooter like Battlefield 5, I don't think many competitive gamers will sacrifice a lot of performance for improved visuals. I might, but then I'm not a competitive multiplayer gamer. Singleplayer modes are a different matter, because the pace tends to be slower and a steady 60 fps or more is sufficient. I play most games at maximum quality, even 4k, simply because I have the hardware to do so and I want the games to look their best. Battlefield 5's War Stories mode has some impressive graphics and often looks beautiful, and adding ray tracing for reflections improves the overall look.

Here's how it performs.

[Note: All testing was performed December 22-30. I used the Nvidia 417.35 and AMD 18.12.3 drivers. I added RTX 2060 using 417.54 drivers after testing the card in early January.]

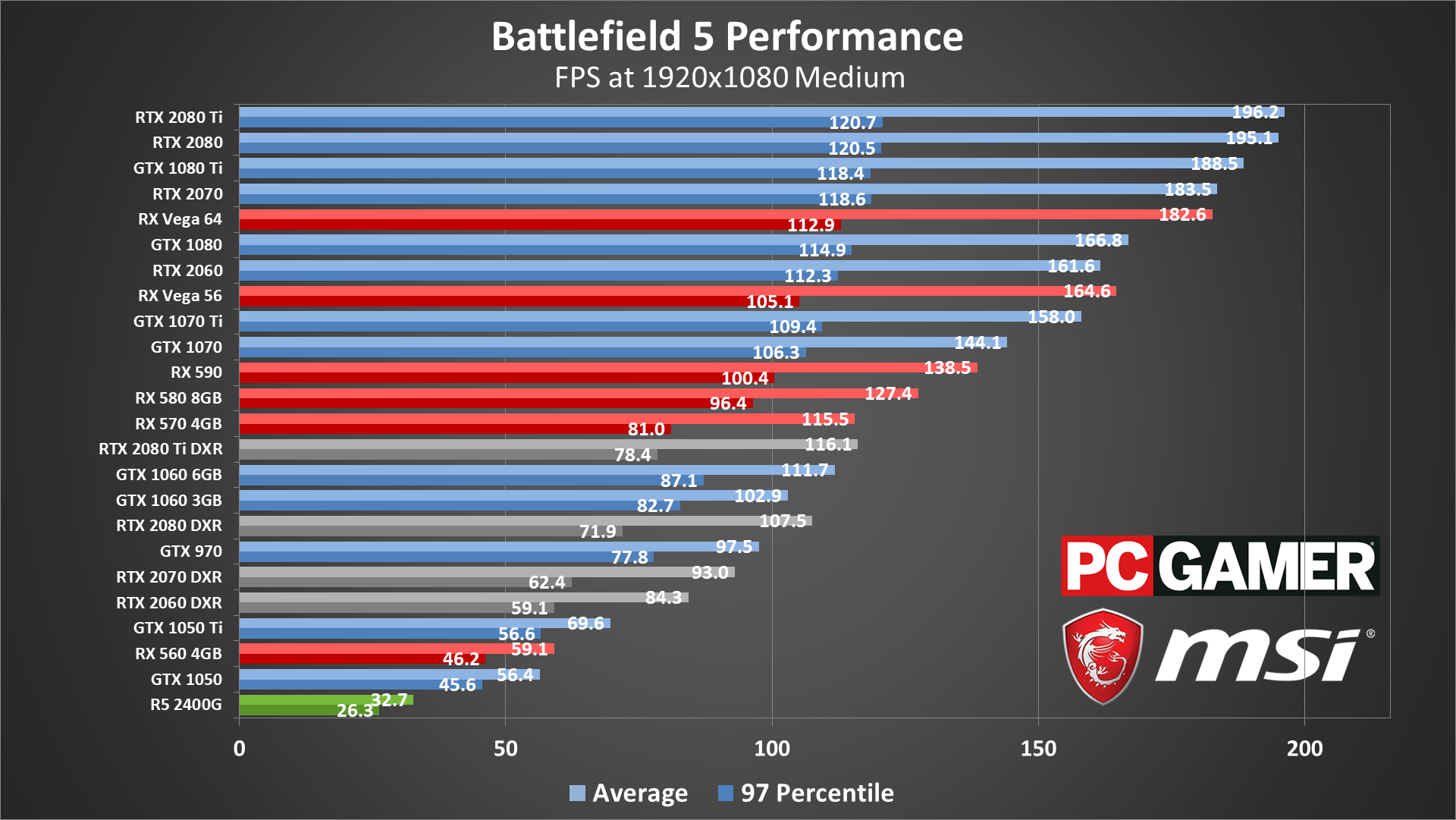

The low and medium presets can bump into the 200 fps cap on the fastest cards, but enabling DXR drops performance quite a bit. At the low preset, the RTX 2070 is about half as fast with DXR, while the 2080 and 2080 Ti don't drop as much, mostly because their non-DXR results were already held back by the fps cap.

The medium preset starts to remove the 200 fps bottleneck, but it's still a factor on the Vega 64 and above. The RTX 2080 Ti with DXR ends up looking about the same as a GTX 1060 6GB without DXR. At only six times the price. Ouch. AMD GPUs so far look to be slightly faster than their Nvidia counterparts, though I have additional testing to complete.

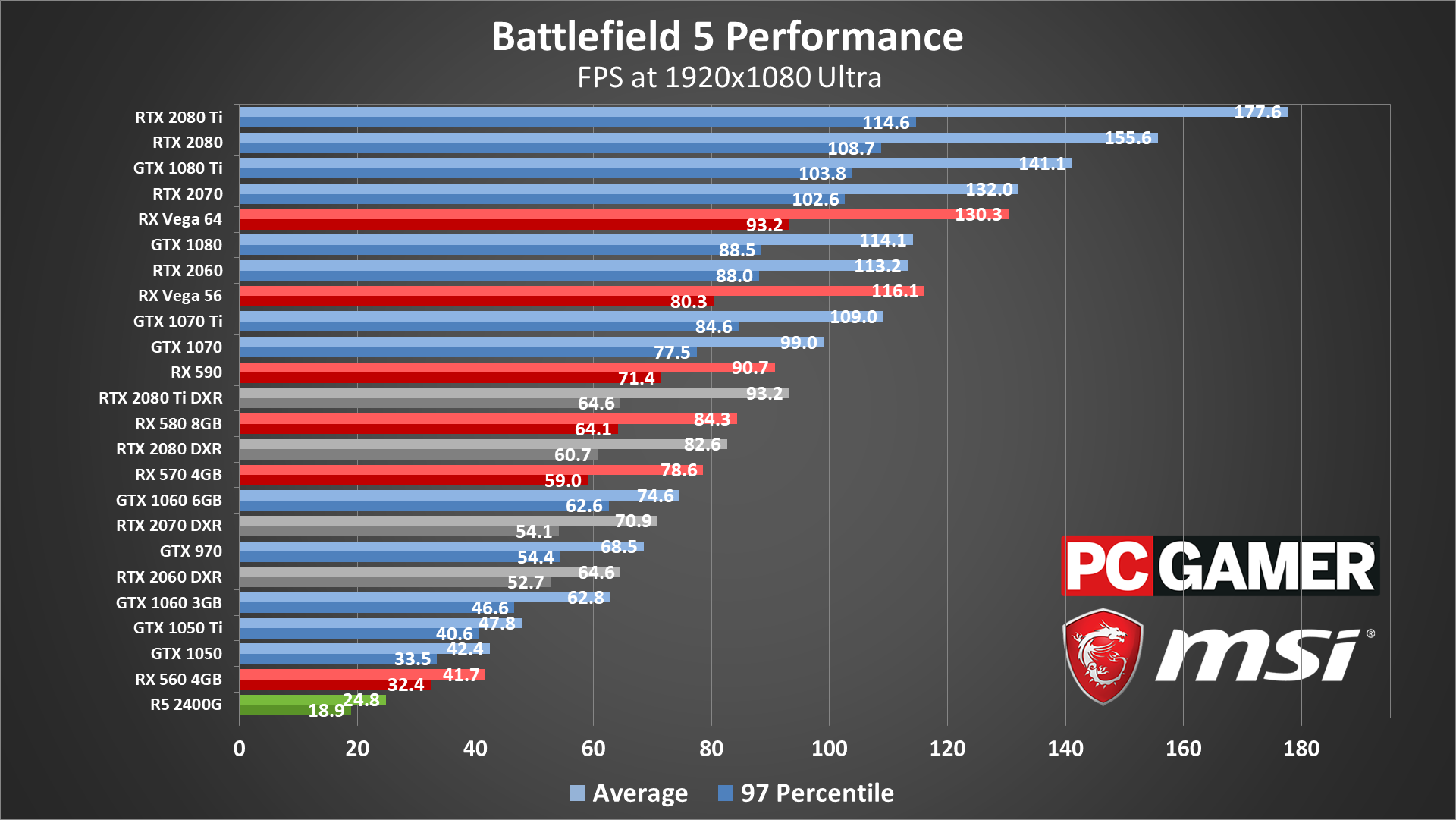

By 1080p ultra, all of the GPUs are finally being pushed to their limits, which allows the 2080 Ti and 2080 to rise to the top of the chart. The 1080 Ti falls right between the 2080 and 2070, with the latter matching the performance of the Vega 64. All three RTX GPUs also manage to break 60fps with DXR enabled, though there are occasional dips below that mark.

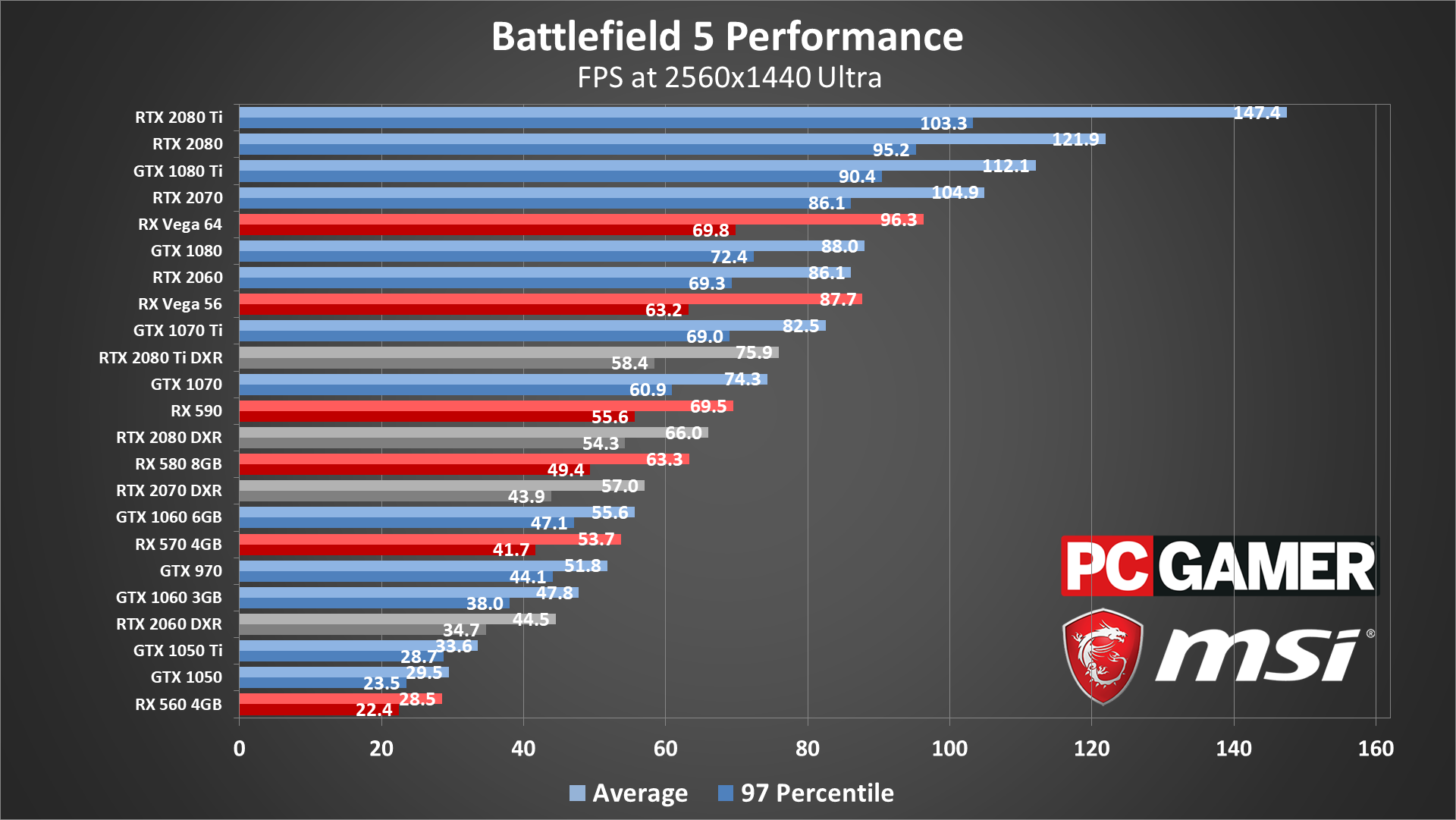

1440p ultra remains very playable on most GPUs, provided you're not running DX12 or DXR. The GTX 1060 6GB falls just below 60fps, while the RX 580 8GB is just slightly above 60 fps. The RTX 2080 also still manages 60fps with DXR enabled, again with a few dips below that threshold.

Finally, we have 4k ultra, where we get some good news and bad news. The good news is that the RTX 2070 and above all manage 60fps, as does the GTX 1080 Ti. The bad news is that ray tracing cuts performance roughly in half, but I'd suggest 4k with ray tracing is probably asking a bit much right now.
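
It can help to think about that hit in frame time rather than framerate. This is a small sketch (the helper names are mine, and the 60-to-30 case is just the round-number version of "roughly in half") that converts fps to milliseconds per frame and shows how much time the extra work adds:

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on a single frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def added_cost_ms(fps_before: float, fps_after: float) -> float:
    """Extra milliseconds per frame after a change that lowers the framerate."""
    return frame_time_ms(fps_after) - frame_time_ms(fps_before)


print(round(frame_time_ms(60), 1))      # 16.7
print(round(added_cost_ms(60, 30), 1))  # 16.7
```

In other words, halving a 60fps result means each frame takes about as long again as the whole non-DXR frame did.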

I did limited testing with some of the other settings for DXR reflection quality, leaving everything else at ultra. DXR medium can get the 2080 Ti above 60fps, but then at the medium preset many of the ray tracing effects become far less pronounced. Not that any of the RTX effects are really a massive improvement right now. I wish DXR was being used for shadows rather than reflections, but we'll have to wait for other games to see how that pans out.

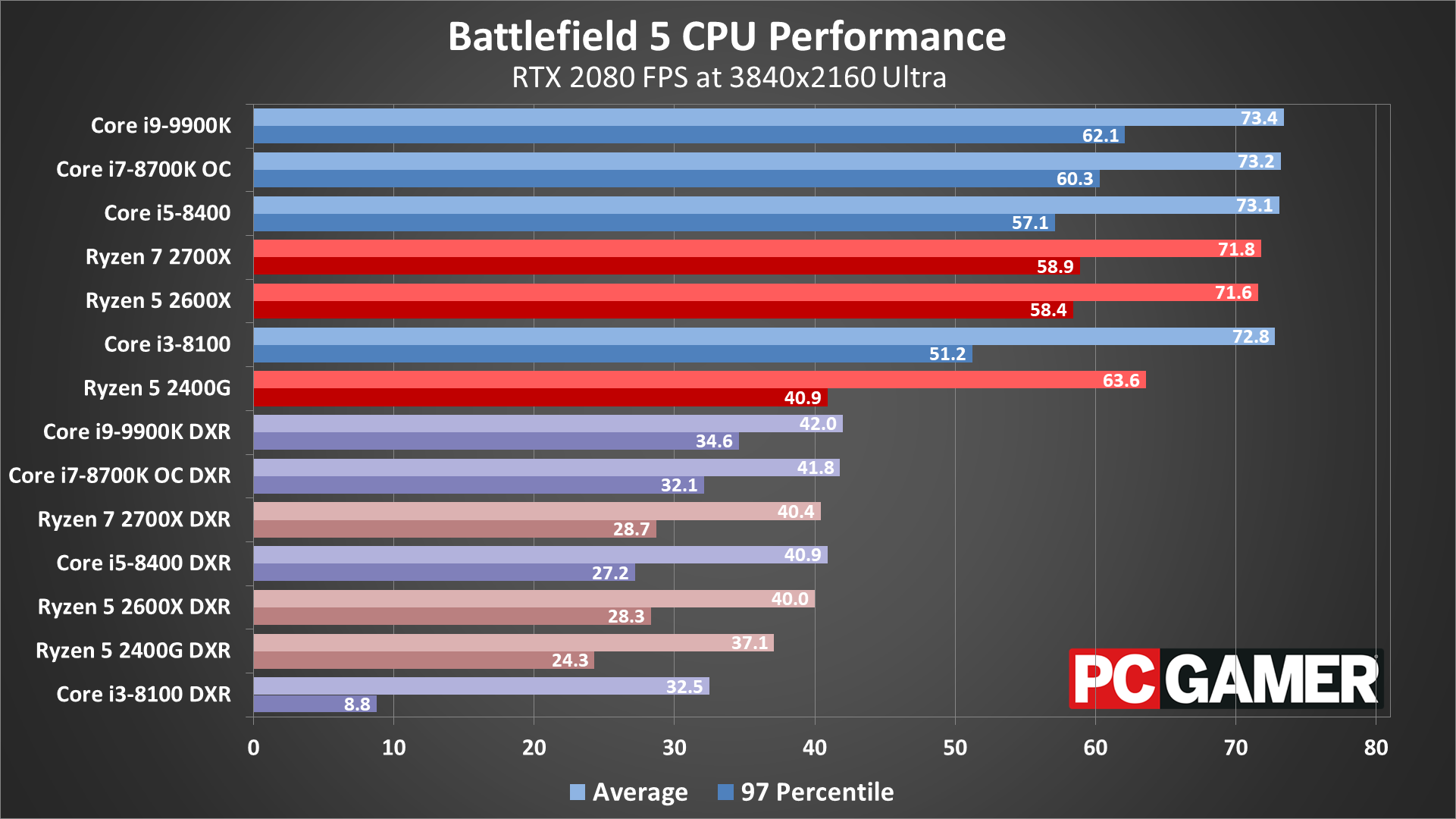

Battlefield 5 CPU performance

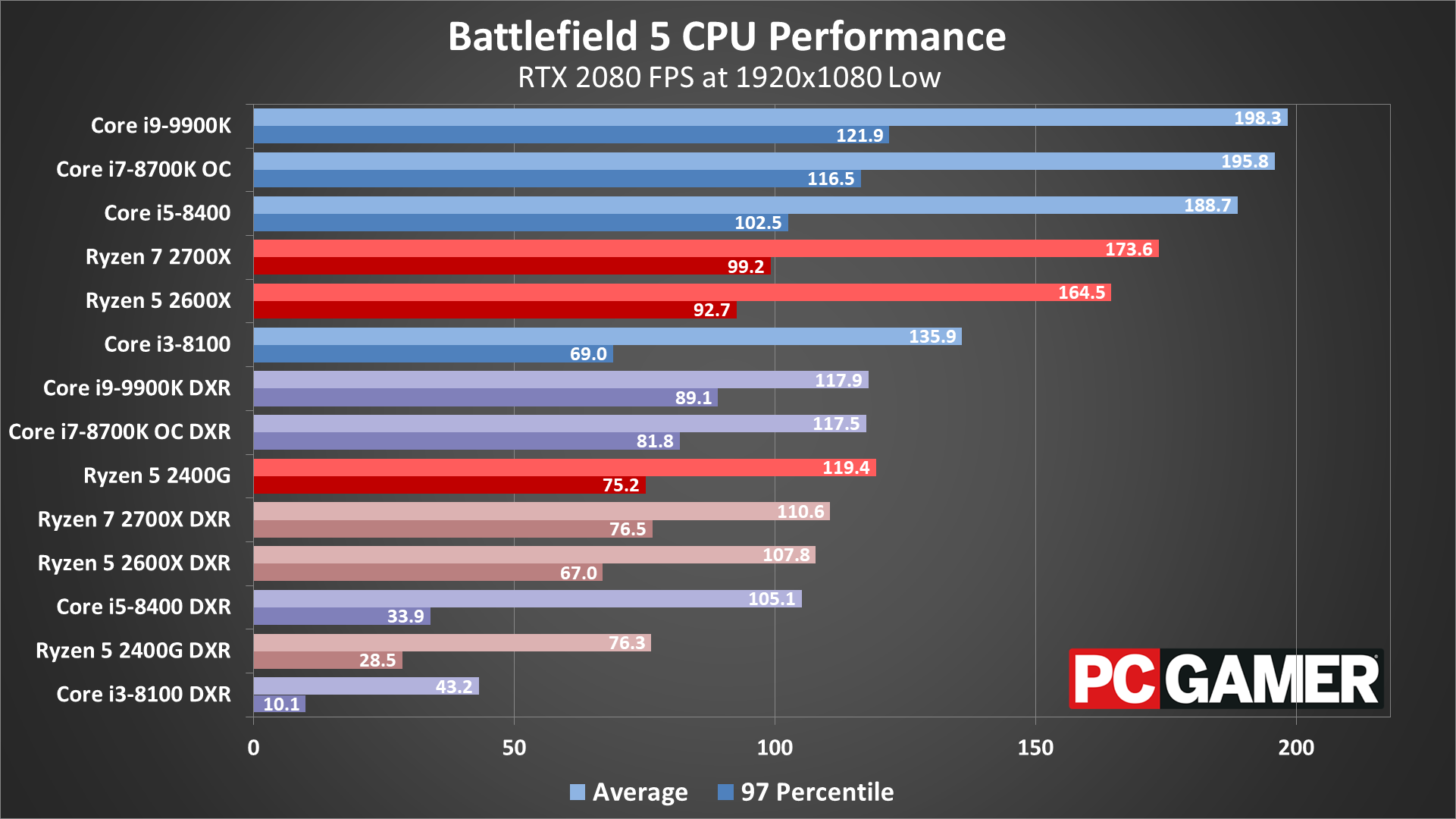

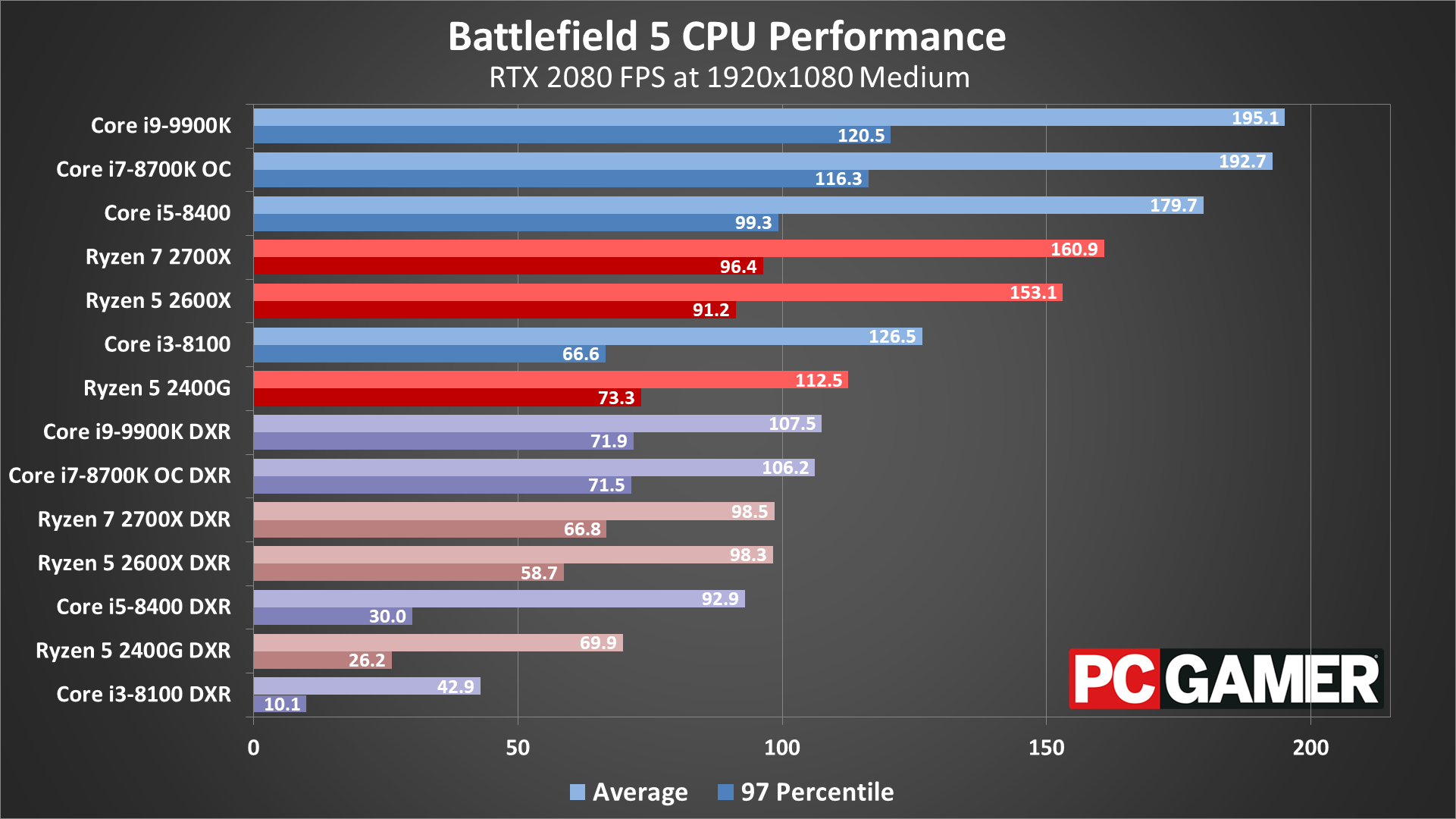

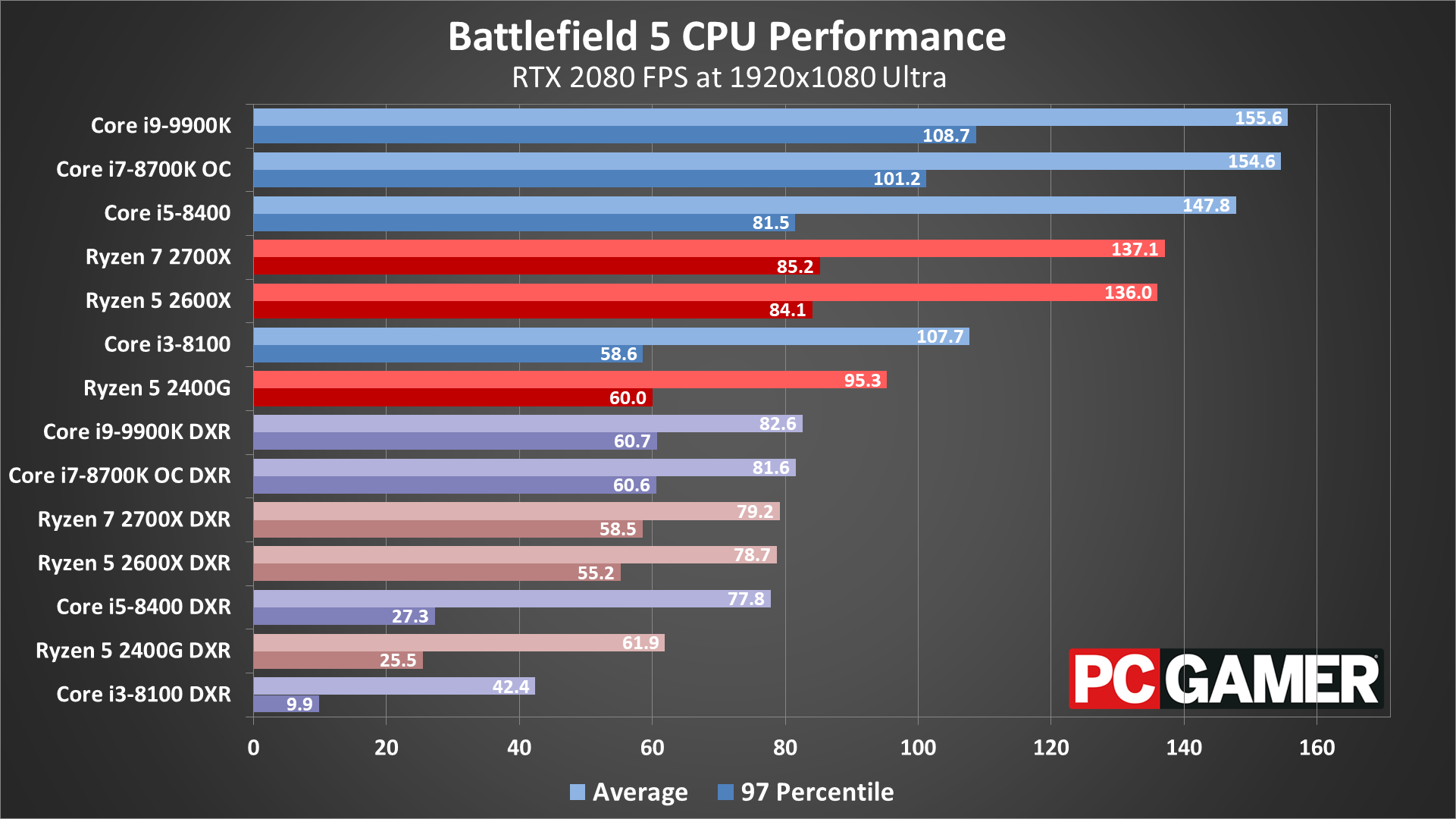

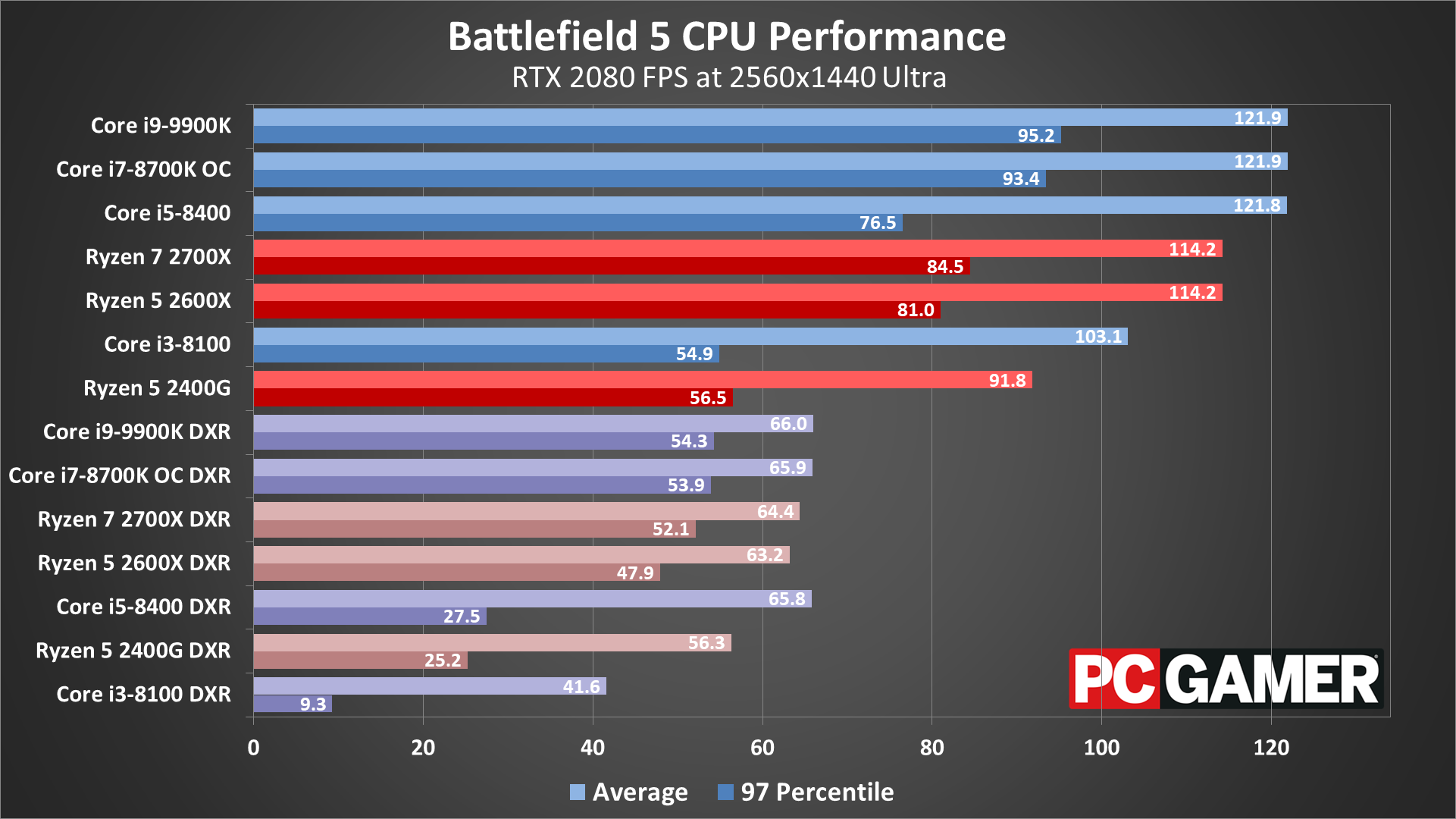

For CPU testing, I used the RTX 2080 this round. I also tested each CPU in both DX11 and DX12+DXR modes, as above. Somewhat surprisingly, DXR basically requires an i7-8700K or better to run well right now. That includes the Ryzen 5 2600X and above, incidentally. What it doesn't include is the Core i5-8400, which appears to lack the requisite number of threads to enable good and consistent DXR performance. DX11 performance is still fine, however.

Starting at 1080p low, all of the CPUs do fine in DX11 mode, but with DXR enabled the i3-8100 basically chokes. Hard. Clearly there are some bugs or other factors in play, and the i5-8400 also suffers a lot of stutters in DXR mode. The 2600X does better, but you'd really want a Ryzen 7 2700X or above to get smooth DXR gaming, even at 1080p low. 1080p medium is basically the same story, with a minor drop in performance.

By 1080p ultra, in DX11 mode most of the CPUs start to feel similar, with only the i3-8100 really falling behind. (I'll test a Ryzen 5 2400G soon enough, if you're wondering how that performs.) DXR mode continues to favor CPUs with at least 12 threads on the Intel side, and 16 threads for AMD. The i5-8400 average fps looks okay now, but the minimums tell the real story.
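
To see why an average can look fine while the minimums don't, here is a rough sketch that summarizes a frametime log. It assumes the log is a list of per-frame times in milliseconds and that "minimum fps" means the average of the slowest 3 percent of frames. That is one common convention and an assumption on my part for this example, and the sample data is made up.

```python
def fps_summary(frametimes_ms: list[float], worst_percent: int = 3) -> tuple[float, float]:
    """Return (average fps, minimum fps) for a list of frame times in ms.

    Minimum fps is taken as the average framerate over the slowest
    `worst_percent` percent of frames (an assumed convention).
    """
    if not frametimes_ms:
        raise ValueError("need at least one frame time")
    avg_fps = 1000.0 * len(frametimes_ms) / sum(frametimes_ms)

    slowest_first = sorted(frametimes_ms, reverse=True)
    count = max(1, len(slowest_first) * worst_percent // 100)
    worst = slowest_first[:count]
    min_fps = 1000.0 * len(worst) / sum(worst)
    return avg_fps, min_fps


# Made-up log: 97 smooth frames at 10 ms plus 3 stutters at 50 ms.
sample = [10.0] * 97 + [50.0] * 3
average, minimum = fps_summary(sample)
print(round(average, 1), round(minimum, 1))  # 89.3 20.0
```

Three bad frames out of a hundred barely dent the average, but they are exactly the stutters you feel while playing.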

1440p continues to close the gap between the various CPUs, but the top three Intel chips still hang onto the overall lead in DX11. Meanwhile, at 4k DX11, we mostly end up with a 6-way tie. Mostly, except for the lower minimum fps on the i3-8100.

The real takeaway here is that the combination of DX12 and DXR means the CPU can be a much bigger factor than I initially assumed. I figured DXR mode would be almost completely GPU limited, even on faster RTX cards, but that's clearly not the case. Or at least, it's clearly not the case in Battlefield 5. But if you have the money for an RTX 2080 or 2080 Ti, you can likely spring for an i9-9900K as well.

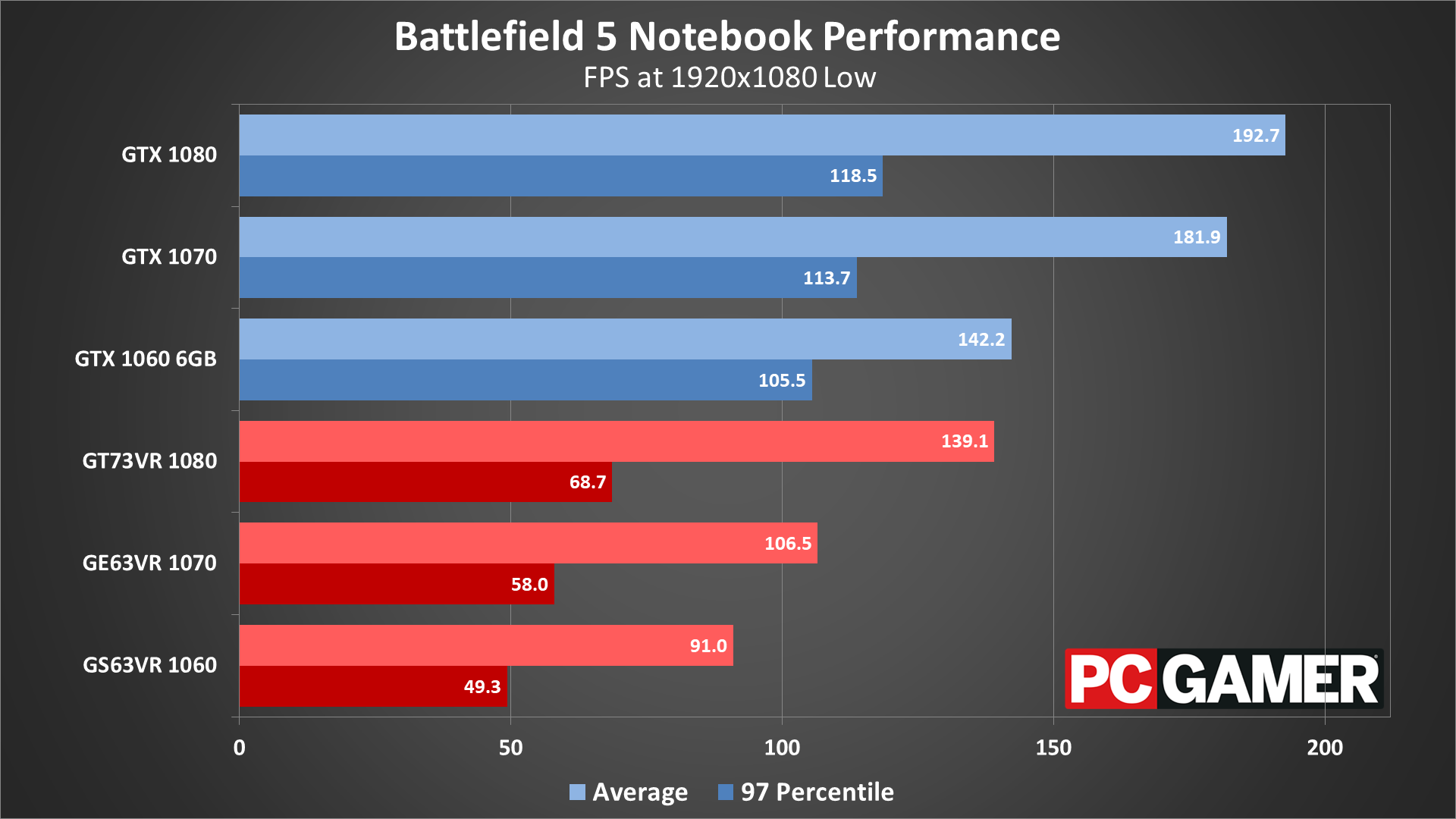

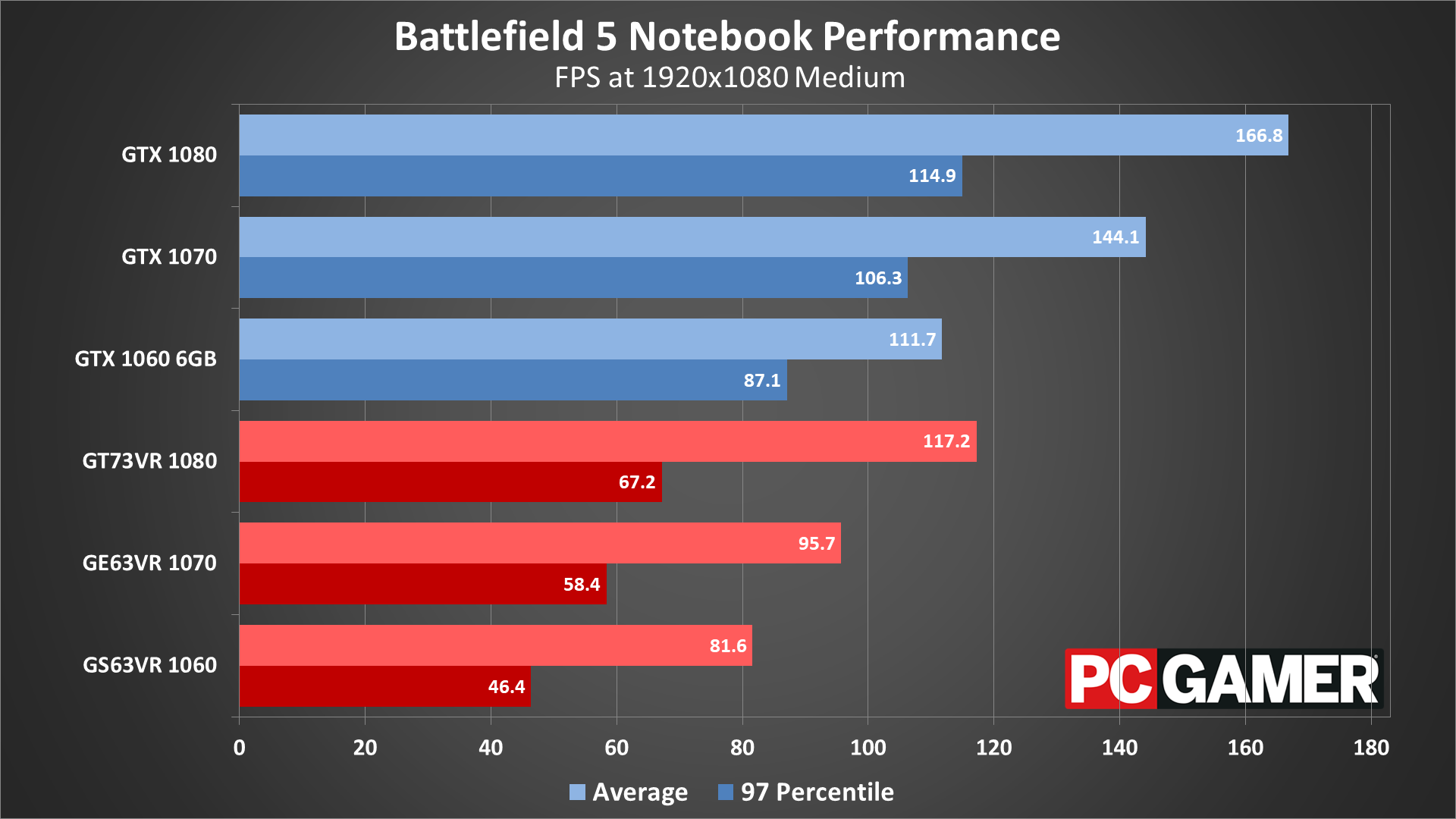

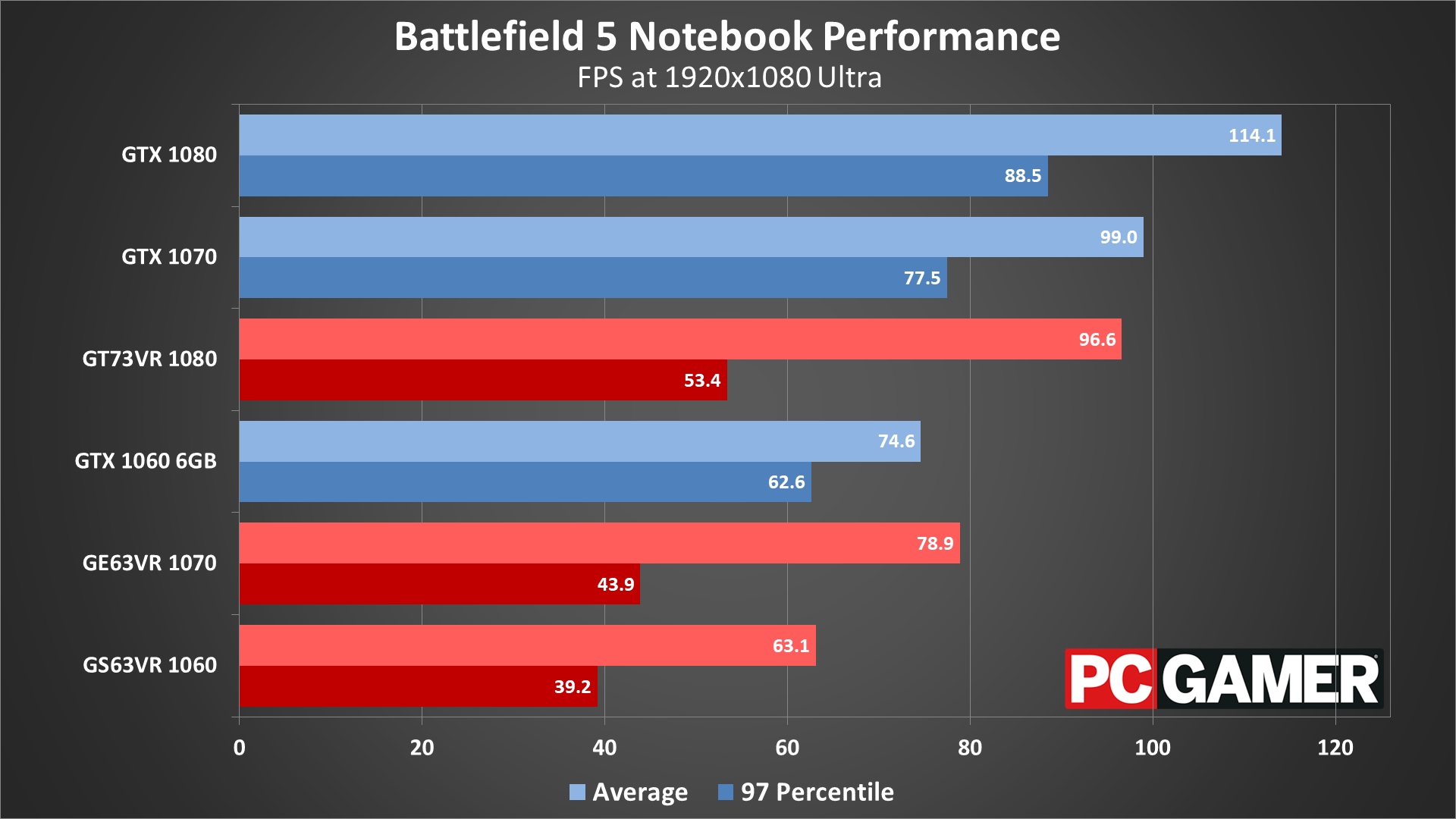

Battlefield 5 notebook performance

I haven't had a chance to test the desktop equivalents yet, but here are the preliminary results. CPU bottlenecks are clearly a factor on mobile systems, especially when you're limited to 1080p for all practical purposes.

There's not much to say other than the faster CPU definitely helps the desktop card. Even a GTX 1060 6GB beats the fastest notebook at medium and low quality, though at 1080p ultra things look more sensible. Notice the wide discrepancy in minimum vs. average framerates, however. The 4-core/8-thread notebook CPUs are clearly a limiting factor, especially with their lower clockspeeds.

Closing thoughts

I've spent a lot of time trying to get a handle on what ray tracing does, and more importantly doesn't do, in Battlefield 5. As the first publicly available test case, there's a lot riding on the game, and simply stated this is not the end-all, be-all of graphics and gaming excellence right now. In fact, the only thing ray tracing is used for in Battlefield 5 is improved reflections. They look good, better than the non-DXR mode certainly, and it's pretty cool to see mirrors, windows, and vehicle surfaces more accurately reflecting their surroundings. But this particular implementation isn't going to revolutionize the gaming industry.

And that's okay. I've been playing games since the '80s, and I've seen a lot of cool technologies come and go over the years. To put real-time ray tracing in proper perspective, I think we need to look at commonplace techniques like anti-aliasing, shadow mapping, hardware transform and lighting, pixel shaders, and ambient occlusion. When those first became available in games, the tradeoff in performance vs. image quality was often massive. But those were all important stepping stones that paved the way for future improvements.

Anti-aliasing started out by sampling a scene twice (or four times), and in the early days that meant half the performance. Shadow mapping required a lot of memory and didn't always look right—and don't even get me started on the extremely taxing soft shadow techniques used in games like F.E.A.R. Hardware T&L arrived in DirectX 7 but didn't see much use for years—I remember drooling over the trees and lighting in the Dagoth Moor Zoological Gardens demo, but games that had trees of that quality wouldn't come until Far Cry and Crysis many years later. The same goes for pixel shaders, first introduced in DirectX 8, but it was several years before their use in games became commonplace. Crysis was the first game to use SSAO, but the implementation on hardware at the time caused major performance difficulties, leading to the infamous "will it run Crysis?" meme.

I point these out to give perspective, because no matter the performance impact right now (in one game, basically in beta form), real-time ray tracing is a big deal. We take for granted things like anti-aliasing—just flip on FXAA, SMAA, or even TAA and the performance impact is generally minimal. Most games now default to enabling AA, but if you turn it off it's amazing how distracting the jaggies can become. And games without decent shadows look… well, they look like they were made in 2005, before shadow mapping and ambient occlusion gained widespread use. The point is that we had to start somewhere, and all the advances taken together can lead to some impressive improvements in the way we experience games.

Battlefield 5 performance with DXR is not where I'd like it to be, especially in multiplayer. But high quality graphics at the cost of framerates doesn't really make sense for multiplayer in the first place. How many Fortnite or PUBG players and streamers are running at 4k ultra compared to 1080p medium/high? Improving lighting, shadows, and reflections often makes it more difficult to spot and kill the other players first, which is counterproductive. Until the minimum quality in games improves to the point where ray tracing becomes standard, most competitive gamers will likely give it a pass. But that doesn't mean we don't need or want it.

Ray tracing isn't some new technology that Nvidia just pulled out of its hat. It's been the foundation of high quality computer graphics for decades. Most movies make extensive use of the technique, and companies like Pixar have massive rendering farms composed of hundreds (probably thousands) of servers to help generate CGI movies. Attempting to figure out ways to accelerate ray tracing via hardware isn't new either—Caustic for example (now owned by Imagination Technologies) released its R2100 and R2500 accelerators in 2013 for that purpose. The R2500 was only moderately faster than a dual-Xeon CPU solution at the time, but it 'only' cost $1,000 and could calculate around 50 million incoherent rays per second.

Five years later, Nvidia's RTX 2070 is able to process around six billion rays per second. I don't know if the calculations are even comparable or equivalent, but we're talking a couple of orders of magnitude faster, for half the price—and you get a powerful graphics chip as part of the bargain. As exciting as consumer ray tracing hardware might be for some of us, this is only the beginning, and the next 5-10 years are where the real adoption is likely to occur. Once the hardware goes mainstream, prices will drop, performance will improve, and game developers will have a great incentive to make use of the feature.
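
For the curious, the back-of-the-envelope comparison looks like this. It takes the two quoted throughput figures at face value and assumes they measure comparable work, which, as noted, they may not.

```python
import math

CAUSTIC_R2500_RAYS_PER_SEC = 50e6  # "around 50 million incoherent rays per second"
RTX_2070_RAYS_PER_SEC = 6e9        # "around six billion rays per second"

speedup = RTX_2070_RAYS_PER_SEC / CAUSTIC_R2500_RAYS_PER_SEC
orders_of_magnitude = math.log10(speedup)

print(speedup)                        # 120.0
print(round(orders_of_magnitude, 2))  # 2.08
```

So "a couple of orders of magnitude" works out to roughly 120 times the ray throughput on paper.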

For now, Nvidia's RTX ray tracing hardware is in its infancy. We have exactly one publicly available and playable game that uses the feature, and it's only using it for reflections. Other games are planning to use it for lighting and shadows, but we're definitely nowhere near being able to ray trace 'everything' yet. Which is why DirectX Raytracing (DXR) is built around a hybrid rendering approach where the areas that look good with rasterization can still go that route, and ray tracing can be focused on trickier stuff like lighting, shadows, reflections, and refractions.

It's good to at least have one DXR game available now, even if it arguably isn't the best way to demonstrate the technology. We're still waiting on the ray tracing patch for Shadow of the Tomb Raider, and DLSS patches for a bunch of other games. Compared to a less ambitious launch, like AMD's RX 590 for example, GeForce RTX and DXR have stumbled a lot. Of course performance without ray tracing is still at least equal to the previous generation, so it's not a total loss. And compared to adoption rates for AA, hardware T&L, pixel shaders, and shadow mapping, having a major game use a brand new technology just months after it became available is quite impressive. Let's hope other games end up being more compelling in how ray tracing looks and affects the game world.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.