We're on the brink of the biggest changes to computing's DNA and it's not just quantum that's coming

We're not at the tipping point yet, however. The hardware is still nascent and "the thing that we haven't cracked yet is the software."

Recent updates

This article was originally published on 30th June this year and we are republishing it today as part of a series celebrating some of our favourite pieces from the past 12 months.

Computers are built around logic: performing mathematical operations using circuits. Logic is built around things such as adders (not the snake, but the basic circuit that adds together two numbers). This is as true of today's microprocessors as of all those going back to the very beginning of computing history. You could go back to an abacus and find that, at some fundamental level, it does the same thing as your shiny gaming PC. It's just much, much less capable.
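To make the adder idea concrete, here is a minimal sketch in Python of how a chain of one-bit adders sums two numbers. It is an illustration only: the function names and the 8-bit default width are assumptions made for this example, and real hardware does this with wired logic gates rather than a loop.

```python
from typing import Tuple


def half_adder(a: int, b: int) -> Tuple[int, int]:
    """Add two bits; return (sum_bit, carry_bit)."""
    return a ^ b, a & b  # XOR gives the sum, AND gives the carry


def full_adder(a: int, b: int, carry_in: int) -> Tuple[int, int]:
    """Add two bits plus an incoming carry; return (sum_bit, carry_out)."""
    partial_sum, carry_1 = half_adder(a, b)
    sum_bit, carry_2 = half_adder(partial_sum, carry_in)
    return sum_bit, carry_1 | carry_2


def ripple_carry_add(x: int, y: int, width: int = 8) -> int:
    """Add two non-negative integers one bit at a time.

    Each step feeds its carry into the next, like a chain of full adders.
    The result wraps around at 2**width, as a fixed-width register would.
    """
    result = 0
    carry = 0
    for i in range(width):
        bit_x = (x >> i) & 1
        bit_y = (y >> i) & 1
        sum_bit, carry = full_adder(bit_x, bit_y, carry)
        result |= sum_bit << i
    return result


if __name__ == "__main__":
    print(ripple_carry_add(23, 42))
```

Everything here boils down to XOR, AND and OR on single bits, which is the sense in which an adder is a "basic circuit".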

Nowadays, processors can perform a lot of mathematical calculations in a single clock cycle using any number of complex circuits, and they do a lot more than just add two numbers together. But getting to your shiny new gaming CPU has been a process of iterating on the classical computers that came before, going back centuries.

As you might imagine, building something entirely different to that is a little, uh, tricky, but that's what some are striving to do, with technologies like quantum and neuromorphic computing—two distinct concepts that could change computing for good.

"Quantum computing is a technology that, at least by name, we have become very accustomed to hearing about and is always mentioned as 'the future of computing'," says Carlos Andrés Trasviña Moreno, software engineering coordinator at CETYS Ensenada.

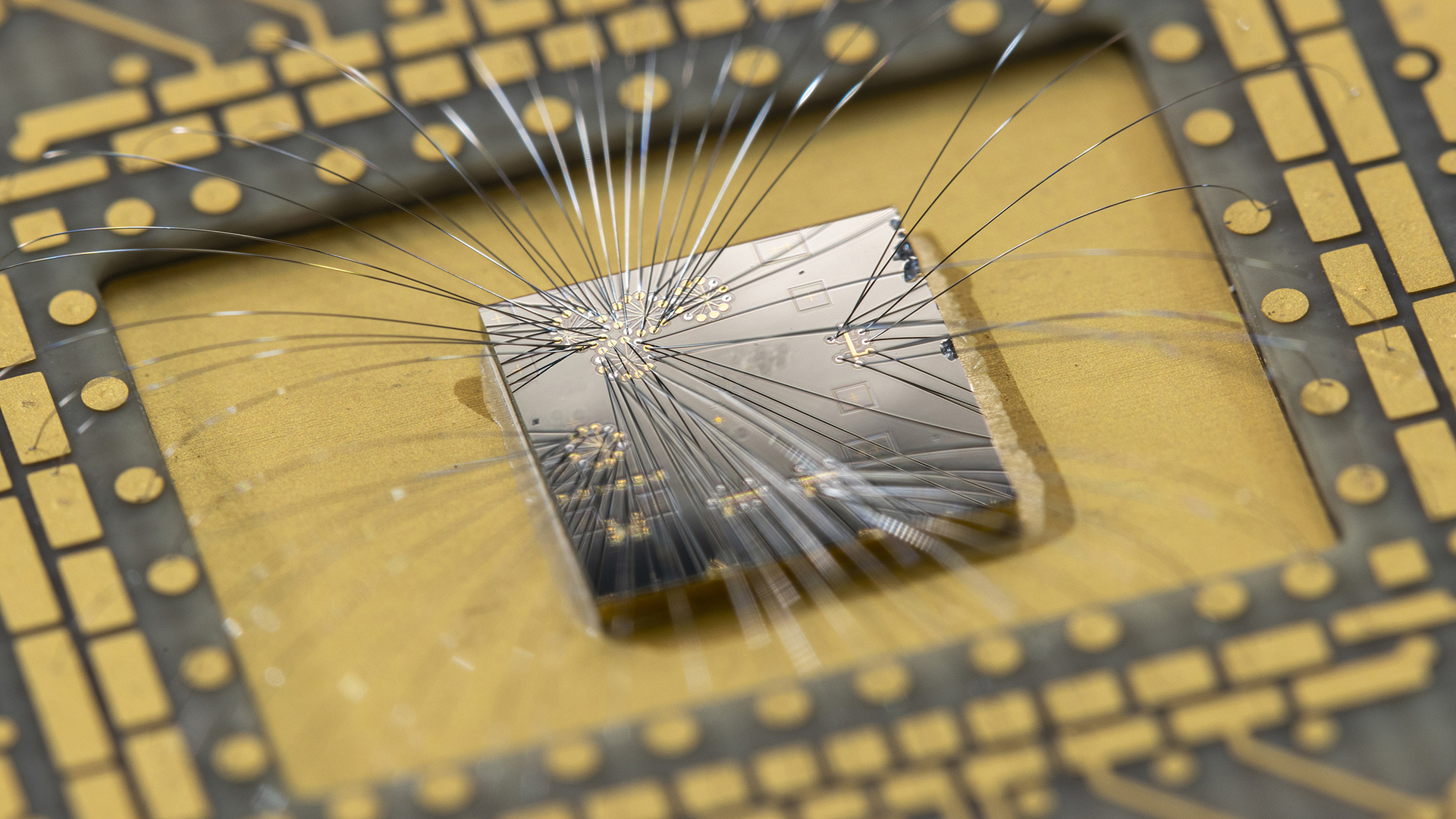

Quantum computers utilise qubits, or quantum bits. Unlike a classical bit, which can only exist in one of two states, a qubit can exist in either state or in a superposition of the two. It's zero, one, or a blend of both zero and one at the same time, at least until you measure it. And if that sounds awfully confusing, that's because it is, but it also has immense potential.
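A toy simulation can make superposition a little less mysterious. The sketch below, in plain Python, models a single qubit as a pair of complex amplitudes and applies a Hadamard gate, a standard operation that turns a definite zero into an equal superposition. The function names are made up for this example, and this is ordinary classical code imitating one qubit, not how a real quantum computer is programmed.

```python
import math
import random

# A single-qubit state is a pair of complex amplitudes (alpha, beta)
# for the |0> and |1> outcomes. This one is a definite zero.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply a Hadamard gate, which mixes the two amplitudes equally."""
    alpha, beta = state
    s = 1 / math.sqrt(2)
    return (s * (alpha + beta), s * (alpha - beta))


def probabilities(state):
    """Return the chances of reading 0 and of reading 1 when measured."""
    alpha, beta = state
    return abs(alpha) ** 2, abs(beta) ** 2


def measure(state, rng):
    """Simulate a measurement: the answer is always a plain 0 or 1."""
    p_zero, _ = probabilities(state)
    return 0 if rng.random() < p_zero else 1


if __name__ == "__main__":
    superposed = hadamard(ZERO)
    print(probabilities(superposed))  # both chances are close to one half
    rng = random.Random(0)
    samples = [measure(superposed, rng) for _ in range(1000)]
    print(sum(samples) / len(samples))  # fraction of 1s across 1000 reads
```

The catch is that measuring only ever returns a zero or a one. The superposition shows up in the odds, which is part of why useful quantum algorithms are so hard to design.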

Quantum computers are expected to be powerful enough to break modern-day 'unbreakable' encryption, accelerate medicine discovery, re-shape how the global economy transports goods, explore the stars, and pretty much revolutionise anything involving massive number crunching.

The problem is, quantum computers are immensely difficult to make, and maybe even more difficult to run.

"One of the main drawbacks of quantum computing is its high-power consumption, since it works with algorithms of far greater complexity than that of any current CPU," Moreno continues. "Also, it requires an environment of near absolute zero temperatures, which worsens the power requirements of the system. Lastly, they are extremely sensitive to environmental disturbances such as heat, light and vibrations.

"Any of these can alter the current quantum states and produce unexpected outcomes."

And while you can sort of copy the function of classical logic with qubits—we're not starting entirely at zero in developing these machines—to exploit a quantum computer's power requires new and complex quantum algorithms that we're only just getting to grips with.

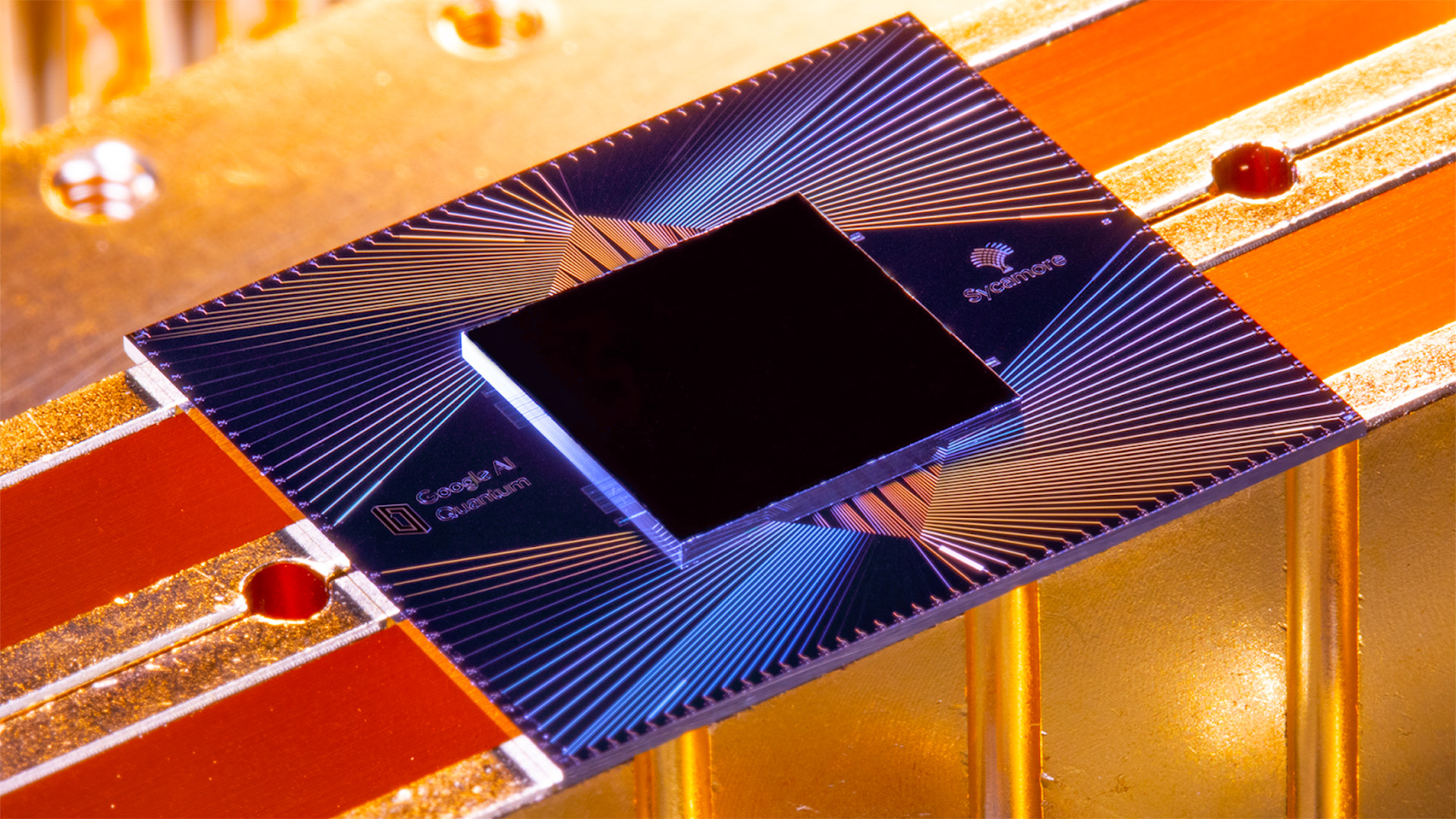

IBM is one company investing heavily in quantum computing, aiming to create a quantum computer with 4,158 or more qubits by 2025. Google also has its fingers in quantum.

Admittedly, we're still a long way off ubiquitous 'quantum supremacy', which is the moment when a quantum computer outperforms today's top classical supercomputers. Google claimed to have done just that back in 2019, though that may have turned out to be something of a niche achievement, if still an impressive one. Either way, in practical terms, we're just not there yet.

They're a real pain to figure out, to put it scientifically. But that's never stopped a good engineer yet.

"I do think that we're scratching the surface there with quantum computing. And again, just like we broke the laws of physics with silicon over and over and over again, I think we break the laws of physics here, too," Marcus Kennedy, general manager of gaming at Intel, tells me.

There's more immediate potential for the future of computing in artificial intelligence, your favourite 2023 buzzword. But it really is a massive and life-changing development for many, and I'm not just talking about that clever-sounding, slightly-too-argumentative chatbot in your browser. We're only scratching the surface of AI's uses today, and to unlock those deeper, more impactful uses there's a whole new type of chip in the works.

"Neuromorphic computing is, in my mind, the most viable alternative [to classical computing]," Moreno says.

"In a sense, we could say that neuromorphic computers are biological neural networks implemented on hardware. One would think it's simply translating a perceptron to voltages and gates, but it's actually a closer imitation on how brains work, on how actual neurons communicate amongst each other through synapsis."

What is neuromorphic computing? The answer's in the name: neuro, meaning related to the nervous system. A neuromorphic computer aims to imitate the greatest computer, and most complex creation, ever known to man: the brain.

"I think we'll get to a place where the processing capability of those neuromorphic chips far outstrips the processing capability of a monolithic die based on an x86 architecture, a traditional kind of architecture. Because the way the brain operates, we know it has the capacity and the capability that far outstrips anything else," Kennedy says.

"The most effective kind of systems tend to look very much like things that you see in nature."

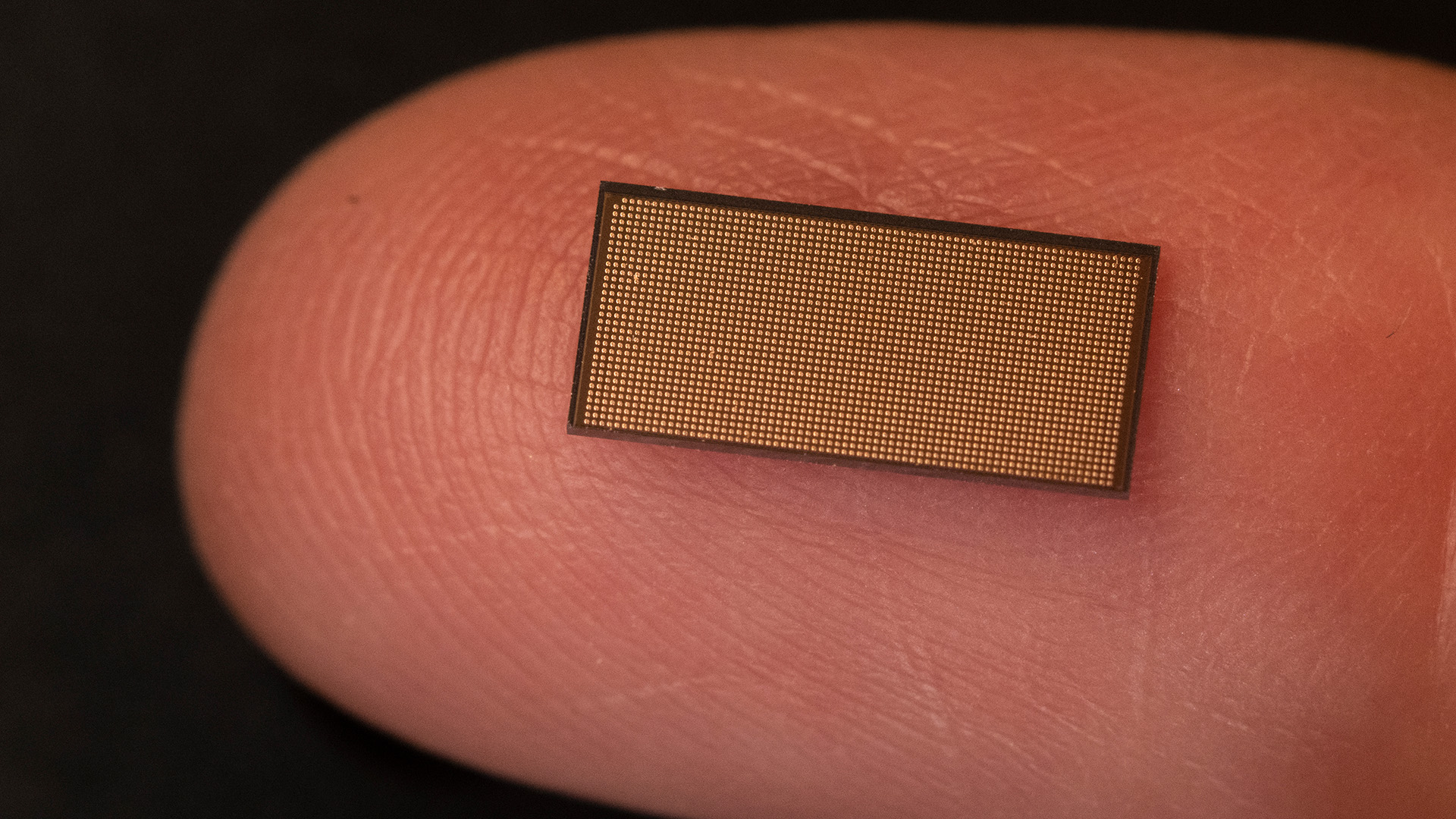

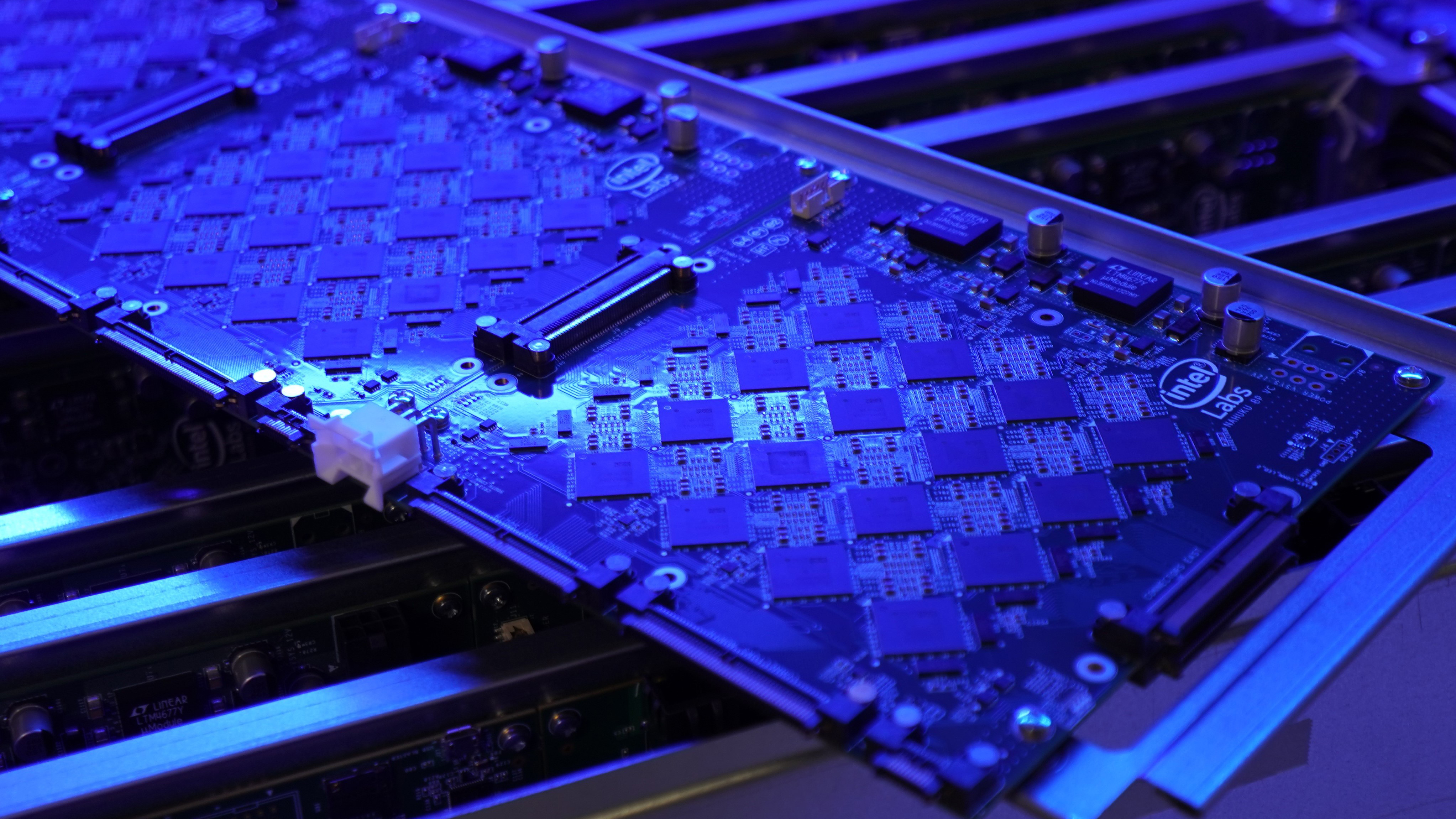

Neuromorphic chips are yet to reach their breakthrough moment, but they're coming. Intel has a couple of neuromorphic chips in development today, Loihi and Loihi 2.

And what is a neuromorphic chip, really? Well, it's a brain, with neurons and synapses. But since they're still crafted from silicon, think of them as a sort of hybrid of a classical computer chip and the biology of the brain.
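For a feel of what a silicon "neuron" does differently from a standard processor instruction, here is a minimal Python sketch of a leaky integrate-and-fire neuron, a common textbook model of spiking behaviour. It is an assumption chosen for illustration, not a description of how Loihi's neurons are actually implemented, and the function name, parameter names and default values are all hypothetical.

```python
from typing import List


def simulate_lif(
    input_current: List[float],
    threshold: float = 1.0,
    leak: float = 0.9,
    reset: float = 0.0,
) -> List[int]:
    """Return a spike train (1 = spike, 0 = silent) for one neuron.

    At every time step the stored potential decays by the leak factor,
    the incoming current is added, and the neuron fires and resets
    once the potential reaches the threshold.
    """
    potential = reset
    spikes = []
    for current in input_current:
        potential = leak * potential + current
        if potential >= threshold:
            spikes.append(1)
            potential = reset
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    # A weak but steady input has to build up before the neuron fires.
    print(simulate_lif([0.3] * 10))
```

The neuron stays silent until enough input has accumulated, so information is carried by when spikes happen rather than by a number computed on every clock tick. That event-driven behaviour is one reason such designs are expected to save power.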

And not necessarily a big brain—Loihi 2 has 1 million neurons and 120 million synapses, which is many orders of magnitude smaller than a human brain with roughly 86 billion neurons and trillions of synapses. It's hard to count them all, as you might imagine, so we don't really know precisely, but we have big ol' brains. You can brag about that all you want to your smaller-brained animal companions.

A cockroach is estimated to have as many synapses as Loihi 2, for a better understanding of the grey matter scale we're talking about here.

"We claim you don't need to be that complex that the brain has its function, but if you're going to do computing, you just need some of the basic functions of a neuron and synapse to actually make it work," Dr. Mark Dean told me in 2021.

Neuromorphic computing has a lot of room to grow, and with a rapidly growing interest in AI, this nascent technology may prove to be the key to powering those ever-more-impressive AI models you keep reading about.

You might think that AI models are running just fine today, which is primarily thanks to Nvidia's graphics cards running the show. But the reason neuromorphic computing is so tantalising to some is "that it can heavily reduce the power consumption of a processor, whilst still managing the same computational capabilities of modern chips," Moreno says.

"In comparison, the human brain is capable of hundreds of teraflops of processing power with only 20 watts of energy consumption, whilst a modest graphics card can output 40-50 teraflops of power with an energy consumption of 450 watts."

Basically, "If a neuromorphic processor were to be developed and implemented in a GPU, the amount of processing power would surpass any of the existing products with just a fraction of the energy."
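The figures Moreno quotes can be turned into a rough efficiency comparison. The Python sketch below takes 100 teraflops as a stand-in for "hundreds of teraflops" and 45 teraflops as the midpoint of the 40-50 range; both picks are assumptions made for this back-of-the-envelope sum, and the quoted figures themselves are estimates rather than measurements.

```python
def teraflops_per_watt(teraflops: float, watts: float) -> float:
    """Return computing throughput per watt of power drawn."""
    return teraflops / watts


# Assumption: low end of the quoted "hundreds of teraflops" for the brain.
BRAIN_TFLOPS = 100.0
BRAIN_WATTS = 20.0

# Assumption: midpoint of the quoted 40-50 teraflops for a graphics card.
GPU_TFLOPS = 45.0
GPU_WATTS = 450.0

brain_efficiency = teraflops_per_watt(BRAIN_TFLOPS, BRAIN_WATTS)
gpu_efficiency = teraflops_per_watt(GPU_TFLOPS, GPU_WATTS)

if __name__ == "__main__":
    print(f"Brain: {brain_efficiency:.1f} teraflops per watt")
    print(f"GPU:   {gpu_efficiency:.1f} teraflops per watt")
    print(f"Ratio: {brain_efficiency / gpu_efficiency:.0f}x")
```

Even with the conservative pick for the brain, the gap works out to roughly 50 times more work per watt, which is the kind of headroom that makes neuromorphic designs so tempting.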

Sound appealing? Yeah, of course it does. Lower energy consumption isn't only massive for the potential computing power it could bring about. Using less energy also means generating less heat, which has knock-on effects for cooling, too.

"Changing the architecture of computing would also require a different programming paradigm to be implemented, which in its own will also be an impressive feat," Moreno continues.

Building a neuromorphic chip is one thing; programming for it is something else. That's one reason why Intel's neuromorphic computing framework is open-source: you need a lot of hands on deck to get this sort of project off the ground.

"The thing that we haven't cracked yet is the software behind how to leverage the structure," Kennedy says. "And so you can create a chip that looks very much like a brain, the software is really what makes it function like a brain. And to date, we haven't cracked that nut."

It'll take some time before we entirely replace AI accelerators with something that resembles a brain, or replace adders and binary functions, which are as old as computing itself, with quantum computers. Yet experimental attempts to replace classical computing as we know it have already begun.

A recent breakthrough claimed by Microsoft has the company very bullish on quantum's future, and IBM has recently predicted that quantum computers will outperform classical ones in important tasks within two years.

In the words of Intel's Kennedy, "I think we're getting there."

Jacob earned his first byline writing for his own tech blog. From there, he graduated to professionally breaking things as hardware writer at PCGamesN, and would go on to run the team as hardware editor. He joined PC Gamer's top staff as senior hardware editor before becoming managing editor of the hardware team, and you'll now find him reporting on the latest developments in the technology and gaming industries and testing the newest PC components.