The tweaks and settings to get the best performance out of Overwatch

Maxing out high refresh displays for a competitive advantage.

Overwatch hardly needs an introduction. More than a year after launch, Overwatch continues to receive regular updates, graphics card drivers have been tuned for the game, and with more than 30 million registered players, it's not going away anytime soon.

The good news for people looking to get into Overwatch is that it's not too demanding when it comes to hardware. If you have a PC built in the past five years with a decent graphics card, you can probably get at least 30 fps. For 60 fps, you'll either need a faster graphics card or lower quality settings.

But what if you're the highly competitive type, and you're running a 144Hz or even 240Hz display? That will require a pretty powerful system, and that's why we chose Overwatch for a performance analysis.

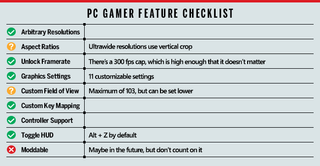

Looking at the features checklist, Overwatch does a lot of things right, but there are a few areas where it really comes up short. Resolution support is good, but the FOV setting is locked to a maximum of 103, which means ultrawide displays and multimonitor setups end up with vertical cropping rather than a properly scaled experience. Blizzard chose to go this route in order to prevent owners of ultrawide displays from having a competitive advantage, but personally I find that argument specious. Owners of fast PCs and people with fast Internet connections also have a competitive advantage, for example. Regardless, don't expect an ideal ultrawide experience.

The major missing item, however, is modding support. Blizzard has said it knows modding is a big deal, but that it hasn't found an effective way to support modding in Overwatch without compromising other aspects of the game (e.g., by enabling cheating). Considering the whole MOBA genre spawned from a mod to Warcraft 3, it's an unfortunate loss. There's a slim chance Blizzard could change this in the future, but don't count on it.

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Overwatch on a bunch of different AMD and Nvidia GPUs, multiple CPUs, and several laptops (see below for the full list). Our test equipment and methodology are detailed in our Performance Analysis 101 article. Thanks, MSI!

Overwatch has a fairly comprehensive collection of graphics settings that can be tweaked, with 11 individual settings. There are five presets for ease of use: low, medium, high, ultra, and epic. However, many of the settings have very little impact on performance or visual fidelity. Dynamic reflections is the most demanding setting: turning it off can boost frame rates by up to 50 percent, and it's not something most people will miss. Local fog detail can also have a 10-15 percent impact on performance, followed by shadow quality and ambient occlusion.

The remaining settings only cause a small drop in performance, even at maximum quality, though texture quality does need sufficient VRAM: with 4GB or more, you can typically set it to max. I'll discuss the various settings in detail below if you want additional information.

The hardware

MSI provided all of the hardware for this testing, mostly consisting of its Gaming/Gaming X graphics cards. Unfortunately, many of the cards are currently showing wildly inflated pricing due to cryptocurrency fever, and quite a few are out of stock right now. The above price tables show the current best prices we're tracking, however, so they'll populate when the cards are back in stock.

MSI's Gaming X cards are designed to be fast but quiet, and the fans will shut off completely when the graphics card isn't being used. All of the GPUs are factory overclocked, by 5-10 percent. Our main test system is MSI's Aegis Ti3, a custom case and motherboard with an overclocked 4.8GHz i7-7700K, 64GB DDR4-2400 RAM, and a pair of 512GB Plextor M8Pe M.2 NVMe solid-state drives in RAID0. There's a 2TB hard drive as well.

MSI also provided three of its gaming notebooks for testing: the GS63VR, GT62VR, and GT73VR. The GS63VR has a GTX 1060 6GB with a 4Kp60 display, the GT62VR has a GTX 1070 and a 1080p60 G-Sync display, and the GT73VR has a GTX 1080 with a 1080p120 G-Sync display. For testing higher resolutions on the GT-series notebooks, I used Nvidia's DSR technology.

For CPU testing, MSI has provided several different motherboards. In addition to the Aegis Ti3, I have the X99A Gaming Pro Carbon for Haswell-E/Broadwell-E testing, Z270 Gaming Pro Carbon for additional Core i3/i5 Kaby Lake CPU testing, X370 Gaming Pro Carbon for high-end Ryzen 7 builds, B350 Tomahawk for budget-friendly Ryzen 5 builds, and the new X299 Gaming Pro Carbon AC for Intel's Skylake-X platform.

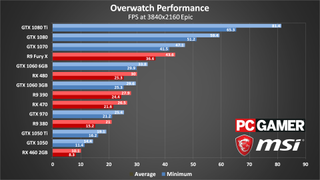

Overwatch benchmarks

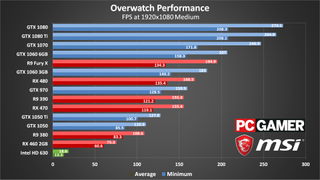

For the benchmarks, I've used my standard choice of 1080p medium as the baseline, and then supplemented that with 1080p, 1440p, and 4K epic performance (though it's worth noting that even epic doesn't max out every setting). As with other competitive multiplayer games, some people will want to disable certain graphics settings to try to gain a competitive advantage. Lower quality shadows and lighting can make it easier to spot opponents, for example. The performance difference between the low and medium presets is pretty small, however, so I've stuck with medium as the minimum.

Testing performance of a game like Overwatch is a bit tricky, since the critical moment is going to be in the heat of battle with explosions and particles flying everywhere. Online multiplayer isn't exactly repeatable, so I ran a custom battle with 11 bots on the King's Row map. I grabbed a 60 second benchmark of framerates multiple times and took the median result. The variation between runs is less than five percent, so if two results are close to each other, it's effectively a tie. Remember that in less hectic moments framerates can be up to twice as fast, but the numbers here are what you'll get during intense firefights.
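As a rough illustration of that approach, here's a minimal Python sketch that takes the median of several runs and treats two results within five percent of each other as a tie. The function names and the sample numbers are made up for this example and are not measured results.

```python
from statistics import median

# Run-to-run variation quoted in the text: under five percent.
TIE_THRESHOLD = 0.05


def summarize_runs(fps_runs):
    """Return the median average-fps across repeated benchmark runs."""
    if not fps_runs:
        raise ValueError("need at least one benchmark run")
    return median(fps_runs)


def is_effective_tie(fps_a, fps_b, threshold=TIE_THRESHOLD):
    """True when two results differ by less than the run-to-run variation."""
    return abs(fps_a - fps_b) / max(fps_a, fps_b) < threshold


# Hypothetical sample data for two cards, three 60-second runs each.
card_a = summarize_runs([141.0, 144.5, 139.8])
card_b = summarize_runs([137.2, 140.1, 138.9])
print(card_a, card_b, is_effective_tie(card_a, card_b))
```

Using the median rather than the mean keeps one unusually good or bad run from skewing the reported number.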

For the maximum framerate junkies running on 240Hz displays, only the fastest graphics cards will suffice. The 1070, 1080, and 1080 Ti all break 240 fps averages, though periodic dips keep minimums closer to 200 fps. Since the only 240Hz displays currently available also support G-Sync or FreeSync, however, those dips shouldn't be a problem. If you want to maintain 240 fps, you'll also want to stick with Nvidia GPUs, at least for now, as none of AMD's GPUs could break the 240 fps barrier.
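To put those refresh rates in perspective, the time available per frame is simply 1000 ms divided by the refresh rate. The short Python sketch below works that out for common displays; the function names are my own, chosen for illustration.

```python
def frame_budget_ms(refresh_hz):
    """Time available to render one frame at a given refresh rate."""
    return 1000.0 / refresh_hz


def frame_time_ms(fps):
    """Time spent on each frame at a given framerate."""
    return 1000.0 / fps


for hz in (60, 144, 240):
    print(f"{hz} Hz display: {frame_budget_ms(hz):.2f} ms per refresh")

# Minimums near 200 fps mean some frames take longer than one
# 240 Hz refresh interval.
print(frame_time_ms(200) > frame_budget_ms(240))
```

A 240Hz display leaves only about 4.2 ms per frame, which is why dips to 200 fps (5 ms per frame) miss the refresh window unless the display supports variable refresh.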

In general, at most price tiers the Nvidia GPUs hold a clear performance advantage (and that's not even taking into account the current cryptocurrency-inflated pricing). I haven't retested with AMD's latest drivers, so these results are potentially two weeks out of date, but the GTX 1060 6GB beats the R9 Fury X, and the GTX 1060 3GB beats the RX 480 8GB (overclocked).

All of the dedicated GPUs are clearly hitting playable framerates. The 1050 and RX 460 usually perform pretty similarly, but here the 1050 holds onto a nearly 50 percent performance advantage, indicating better optimized drivers. AMD will need more than a faster GPU to close the gap, so hopefully new drivers will improve the situation when RX Vega launches next month.

What about those running integrated graphics? I have bad news for you: at 1080p medium, Intel's latest HD Graphics 630 (found in most 7th Generation Core processors, and with similar performance to the HD Graphics 530) comes up well short of 30 fps. At less than 20 fps, it's not playable. You can improve things by dropping the resolution, however: at 1366x768, the HD 630 manages around 45 fps. If you're on an older 4th Gen Core processor, graphics performance will be about 20-30 percent slower, but that should still get you at least 30 fps. AMD's more recent APUs should also be able to hit 30-40 fps at 1366x768.

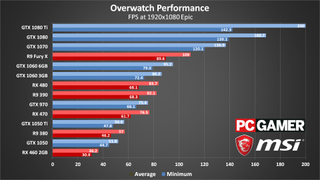

Cranking the quality to 1080p epic drops performance on the fastest card, the GTX 1080 Ti, by about 25 percent, as it was bumping into the 300 fps framerate cap before. Slower GPUs like the RX 470 can lose up to half of their performance compared to medium quality. All of the tested graphics cards are still playable, but if you're looking for 60+ fps, you'll need an RX 470 or GTX 970 or equivalent to get there. And if you were hoping for 240 fps on a 1080p 240Hz display, sorry, it's not happening. All of the extra graphical effects prove too much for even the 1080 Ti.

Looking at the budget cards, the Radeon RX 460 2GB sits on the 30 fps fence, which for competitive gamers is going to be too slow. Nvidia again performs better than AMD in Overwatch, with the mainstream GTX 1060 models easily beating the RX 470 and 480—and by extension, the RX 570 and 580. VRAM isn't a major factor if you look at the 1050 and 1050 Ti, though that will change at higher resolutions. Not that I'd recommend going with a 2GB card these days, as many other games have started to benefit from 4GB VRAM.

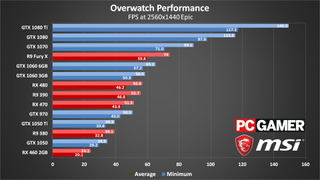

1440p Epic quality obviously demands a powerful graphics card. Those with a 144Hz display will need a 1080 Ti to max out the refresh rate, and for AMD the only hope right now is that RX Vega will be in that same ballpark. But if you have a G-Sync or FreeSync display, the RX 470 and above and GTX 970 and above all run quite well. I'll get into more detail in a moment, but for 1440p gaming, even with a GTX 1080 Ti, nearly any modern CPU will be fast enough to max out the graphics card.

If all you want is 60 fps, the 1060 6GB and R9 Fury X will get you there, but Nvidia's hardware is clearly delivering better overall performance. At least the Fury X is able to beat the 1060 this round, no doubt helped by the massive 512GB/s of bandwidth that HBM provides, but I'd normally expect the Fury X to be closer to the 1070 in performance.

I've said that Overwatch isn't as demanding as some other games, but that doesn't mean 4K epic is a walk in the park. A GTX 1080 will nearly average 60 fps during the most demanding sequences, but if you want a steady 60+ fps you'll need a GTX 1080 Ti. This is actually one of the few recent games where a single fast GPU can run well above 60 fps at maximum quality, but if you're willing to disable dynamic reflections then the Fury X and 1070 should also break into 60 fps territory.

I also have some good news for those running multi-GPU solutions. GTX 1080 SLI showed excellent performance scaling. A bit too excellent, actually, as GTX 1080 SLI nearly tripled the performance of a single 1080. It's hard to tell what's going on, but I'd guess certain graphics effects are disabled with SLI, probably dynamic reflections. If your main concern is framerates, that's not a problem, and it would allow for 144+ fps on 1440p and 4K displays. Now if only the Asus PG27UQ and Acer Predator X27, which will be the first 144Hz 4K displays, were available for purchase.

CPU performance scaling

What about the CPU side of things: does Overwatch benefit from additional CPU cores and SMT/Hyper-Threading? I've decided to use the GTX 1080 Ti for CPU scaling tests this time, as that will move the bottleneck to the CPU side of things as much as possible. Using the 1080 Ti, I tested five Intel CPUs and three AMD Ryzen CPUs. The above gallery shows results at all four test settings.

The biggest difference is at lower resolutions and quality settings. At 1080p, the i9-7900X and i7-7800X are basically tied, with both CPUs bumping into the 300 fps cap. That's interesting, considering the lower clockspeeds and additional cores, so Overwatch is clearly able to benefit from more than four CPU cores. On the other hand, the 4-core/8-thread i7-7700K still easily beats the 8-core/16-thread Ryzen 7 1800X, so don't neglect clockspeeds and IPC.

At 1080p epic, the i9-7900X and i7-7800X still take top honors, but the Ryzen 7 1800X moves ahead of the i7-7700K. All the extra graphics effects appear to tax the CPU cores differently, with minimum fps on the 7700K clearly taking a hit. But at 1440p, most CPUs start to look the same, and only the i3-7100 is moderately slower (not that I expect anyone to pair a $700 GPU with a $120 CPU). Also keep in mind that multiple GPUs like GTX 1080 SLI will need more CPU than a single GPU, which is why I recommend Core i7 or above for SLI and CrossFire builds.

Of course, most of these CPU limitations are only visible with an ultra-fast graphics card. Using a slower mainstream card like a GTX 1060 3GB or RX 470 4GB (or RX 570 4GB), Core i5 and Ryzen 5 are more than sufficient. There's almost no difference in performance between the i5-7500 and i7-7700K using a 1060 3GB or RX 470.

Overwatch on the go

Shifting over to the mobile side of things, there are a few oddities. First, the GS63VR is the only notebook I tested that uses Nvidia's Optimus Technology, which basically renders the game on the dedicated GPU and then copies the resulting frames over to the integrated graphics' framebuffer. At moderate framerates, this isn't a problem, but there's a clear bottleneck in Overwatch that limits framerates to around 72 fps. Basically, don't get too hung up on the GS63VR numbers at 1080p.

Given the mobile CPUs are slower than the i7-7700K in the desktop system, it's no surprise that the GTX 1080 notebook falls behind the GTX 1080 desktop at 1080p medium. Things are a lot closer at the epic preset, though the desktop is still a bit faster. The mobile 1070 and 1060 aren't clocked as aggressively, and in the case of the 1060, in addition to Optimus it has to cope with significantly reduced cooling performance as the GS63VR is a thin and light gaming notebook. The desktop 1070 ends up being about 10 percent faster than the GT62VR, while the desktop 1060 is about 20 percent faster than the GS63VR.

Fine tuning performance

Test System: MSI Aegis Ti3 VR7RE SLI-014US, MSI X299 Gaming Pro Carbon AC, MSI X99A Gaming Pro Carbon, MSI Z270 Gaming Pro Carbon, MSI X370 Gaming Pro Carbon, MSI B350 Tomahawk

Graphics Cards: MSI GTX 1080 Ti Gaming X 11G, MSI GTX 1080 Gaming X 8G, MSI GTX 1070 Gaming X 8G, MSI GTX 1060 Gaming X 6G, MSI GTX 1060 Gaming X 3G, MSI GTX 1050 Ti Gaming X 4G, MSI GTX 1050 Gaming X 2G, MSI RX 480 Gaming X 8G, MSI RX 470 Gaming X 4G, MSI RX 460 4G OC Gaming, MSI RX 460 2G OC Gaming

Gaming Notebooks: MSI GT73VR Titan Pro (GTX 1080), MSI GT62VR Dominator Pro (GTX 1070), MSI GS63VR Stealth Pro (GTX 1060)

I mentioned some of the biggest factors in improving performance earlier, but here's the full rundown of the various settings, along with rough estimates of performance changes. The numbers given here aren't from the complete benchmark using a bunch of different cards, but were gathered using a single RX 480 running at 1080p epic, and comparing the minimum setting on each item to the maximum (epic) setting using the average framerate. I specifically selected a location where performance was lower, then started checking each setting.

The Graphics Quality global preset is the easiest place to start tuning performance, though it requires a restart to take full effect. Epic quality gives a baseline score of 91 fps. Dropping to ultra improves performance to 116 fps, high is 165 fps, medium is 176 fps, and low gives 218 fps. If you check out the above screenshots, you'll notice that there are only a few slight differences in shadows between epic and ultra, then high reduces the lighting and shadow quality more—neither of these are major changes in overall appearance. From high to medium there's a clear drop in lighting reflections on some walls—still not a huge difference—and then finally at low (minimum) quality, everything starts to look really flat.
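For a quick sense of scale, this small Python sketch converts the preset results quoted above into percentage gains over epic. The fps figures come from the RX 480 test scene described in the text; the dictionary and function names are just for illustration.

```python
# Average fps per preset, RX 480 at 1080p in the test scene.
PRESET_FPS = {
    "epic": 91,
    "ultra": 116,
    "high": 165,
    "medium": 176,
    "low": 218,
}


def gain_over_epic(preset):
    """Percentage framerate improvement relative to the epic preset."""
    return (PRESET_FPS[preset] / PRESET_FPS["epic"] - 1.0) * 100.0


for preset, fps in PRESET_FPS.items():
    print(f"{preset:>6}: {fps} fps ({gain_over_epic(preset):+.0f}% vs. epic)")
```

The biggest single step is ultra to high, where the framerate goes from roughly 27 percent to roughly 81 percent above epic.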

If you're hoping to tweak the individual settings to better tune performance, below is the approximate improvement in performance for each setting, along with a note on whether the setting can be changed without restarting the game. There will be some variation depending on your GPU, but not too much.

Render Scale: The range is 50-200, in relatively coarse steps. 50 with a resolution of 1920x1080 represents a rendered resolution of 960x540, while 200 is equivalent to 3840x2160. At 50, performance improves by around 80 percent, while 200 drops performance to about 1/3 of normal (for four times as many pixels rendered). I recommend setting this to 100 rather than using 'Automatic.'

Texture Quality (restart required): With sufficient VRAM, this causes almost no change in performance: I measured a two percent gain going from high to low on the RX 480 8GB, and a similar change on a GTX 1050 Ti 4GB. Only cards with less than 2GB VRAM will really need to drop this below the high setting.

Texture Filtering Quality: Allows anisotropic filtering settings of 1x to 16x, with almost no impact on performance. You can safely set this to 16x (Epic) on nearly any GPU.

Local Fog Detail (restart required): This is the second most demanding setting in the game, and the name is a bit misleading, as it's actually used for volumetric lighting. In practice, there's very little change in image quality, regardless of setting, and dropping this from ultra to low improves performance by about 15 percent.

Dynamic Reflections: This is the most demanding individual setting. Depending on the area in the game, it can impact performance by 20-50 percent. It's used for reflections of moving (dynamic) objects, like other players, bullets, etc. In the test scene, disabling this increases performance by 25 percent.

Shadow Detail (restart required): Affects the shadow mapping quality, with only a minor impact on performance. Turning this off only improves performance by around 5 percent.

Model Detail (restart required): Controls the number of polygons used for the character and level models. In testing, there was no discernible difference in performance between the minimum low setting and the maximum ultra setting, but the low setting strips out a lot of extra objects, so I'd set this to at least medium quality.

Effects Detail (restart required): This mostly appears to affect weapon effects and other dynamic elements, so potentially this will have a larger impact on performance during battles. During the test scene, however, this only caused a 4 percent change in performance.

Lighting Detail (restart required): Controls the quality and number of light sources, including god rays. The performance impact is minimal, with low improving framerates by only 3 percent compared to ultra.

Antialias Quality: Another setting that doesn't really impact performance much, mostly because all of the anti-aliasing methods used are post-processing algorithms. Low uses FXAA and the other enabled modes use varying levels of SMAA. Going from ultra to off only improves framerates by about 3 percent, so I recommend leaving this on ultra for most GPUs.

Refraction Quality: Affects the way light bends as it passes through certain transparent/translucent objects. It's hard to notice this while playing, and the performance impact is a moderate 5 percent, so if you're looking for every last bit of performance, setting this to low can help a bit.

Screenshot Quality: This is in the graphics settings so I'm listing it, but it only affects the resolution of screenshots. It's sort of like Nvidia's Ansel technology, except without all the extra UI functionality. You can capture 1X to 9X resolution, and the only time it will impact performance is when you grab a screenshot.

Local Reflections: Unlike the dynamic reflections, local reflections are pre-calculated and thus not nearly as demanding, and having these on can improve the overall appearance of the game quite a bit. Turning this off yields about a 3 percent gain in fps.

Ambient Occlusion: Unlike many other games, Overwatch only uses SSAO, the least costly form of ambient occlusion—a way of improving shadow quality in the corners and areas where polygons intersect. The performance cost is relatively small at 5 percent, so you can usually leave this on.
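To make the list above easier to compare at a glance, here's a small Python sketch that works out the rendered resolution for a given Render Scale and ranks the other settings by their measured gain. The percentages are the approximate figures quoted above for the RX 480 test scene, the helper names are my own, and results on other GPUs and in other scenes will differ.

```python
def rendered_resolution(width, height, render_scale):
    """Render Scale is a percentage applied to each axis of the output."""
    factor = render_scale / 100.0
    return round(width * factor), round(height * factor)


# Approximate fps gain (percent) from lowering or disabling each setting,
# as quoted in the list above for the RX 480 test scene.
MEASURED_GAIN_PCT = {
    "Dynamic Reflections": 25,
    "Local Fog Detail": 15,
    "Shadow Detail": 5,
    "Refraction Quality": 5,
    "Ambient Occlusion": 5,
    "Effects Detail": 4,
    "Lighting Detail": 3,
    "Antialias Quality": 3,
    "Local Reflections": 3,
    "Texture Quality": 2,
}


def settings_by_impact(gains=MEASURED_GAIN_PCT):
    """Return (setting, gain) pairs, largest measured gain first."""
    return sorted(gains.items(), key=lambda item: item[1], reverse=True)


print(rendered_resolution(1920, 1080, 50))
print(rendered_resolution(1920, 1080, 200))
for name, gain in settings_by_impact():
    print(f"{name:<20} ~{gain}%")
```

Dynamic reflections and local fog detail account for most of the available gain, so those are the first two settings to try before touching anything else.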

All testing was done with the latest Nvidia and AMD drivers available at the time of testing, Nvidia 384.76 and AMD 17.6.2. Ignoring the insanity caused by cryptocurrency miners for a moment, Nvidia's GPUs are clearly the superior solution right now. If you already own an AMD GPU, however, performance is usually more than sufficient, unless you're after 144+ fps. If that's the case, you'll want at least a GTX 1070, or SLI Nvidia cards. AMD GPU owners can also tweak settings in order to improve performance, and with a few smart changes (like disabling dynamic reflections and fog), most mainstream AMD cards can get 60 fps or more.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
