Nvidia's DLSS upscaling really does need those AI-accelerating Tensor cores after all

Investigation into Nvidia GPU workloads reveals that Tensor cores are being hammered, just incredibly briefly.

Nvidia has form when it comes to locking out older GPUs from new technologies and features. So when it wheeled out DLSS upscaling technology and limited it to the latest GPU architecture at the time, there was always the question of whether that was strictly necessary. Could DLSS perhaps have run on older GPUs, with Nvidia simply preferring to help the generational upsell by restricting the technology to newer hardware?

That suspicion is only heightened by the fact that both AMD's FSR upscaling and Intel's XeSS can run on a much wider range of GPUs, including those of competitors (for absolute clarity, Intel's XeSS comes in two flavours, one widely compatible, the other requiring Intel Arc GPUs). What this all comes down to, then, is the question of whether DLSS upscaling does indeed lean heavily on those AI-accelerating Tensor cores, as Nvidia claims.

Well, now we seemingly have an answer, of sorts. And it turns out DLSS really does need those Tensor cores.

An intrepid Reddit poster, going under the handle Bluedot55, leveraged Nvidia's Nsight Systems GPU metric tools to drill down into the workloads running on various parts of an Nvidia RTX 4090 GPU.

Bluedot55 ran both DLSS and third-party upscalers on an Nvidia RTX 4090 and measured Tensor core utilisation. Looking at average Tensor core usage, the figures under DLSS were extremely low, at less than 1%.

Initial investigations suggested even the peak utilisation registered in the 4-9% range, implying that while the Tensor cores were being used, they probably weren't actually essential. However, increasing the polling rate revealed that peak utilisation is in fact in excess of 90%, but only for brief periods measured in microseconds.
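To see why the polling rate matters so much, here is a minimal Python sketch of the effect. The numbers are made up purely for illustration and are not Bluedot55's measurements: it assumes a hypothetical 80-microsecond burst of full Tensor core activity once per 10-millisecond frame, and a profiler that reports the average utilisation over each sampling window. The function names are likewise illustrative and not part of any Nvidia tool.

```python
def busy_trace(total_us, frame_us, burst_us):
    """Per-microsecond trace: 1 while the (hypothetical) Tensor cores are busy, else 0."""
    return [1 if (t % frame_us) < burst_us else 0 for t in range(total_us)]


def windowed_utilisation(trace, window_us):
    """Average utilisation (%) that a sampler would report for each window."""
    return [
        100.0 * sum(trace[i:i + window_us]) / window_us
        for i in range(0, len(trace) - window_us + 1, window_us)
    ]


# Illustrative assumptions only: 100 ms of activity, one 80 us burst per 10 ms frame.
trace = busy_trace(total_us=100_000, frame_us=10_000, burst_us=80)

average = 100.0 * sum(trace) / len(trace)               # long-run average
coarse_peak = max(windowed_utilisation(trace, 1_000))   # slow polling: 1 ms windows
fine_peak = max(windowed_utilisation(trace, 40))        # fast polling: 40 us windows

print(f"average {average:.1f}%, coarse peak {coarse_peak:.1f}%, fine peak {fine_peak:.1f}%")
```

With these assumed figures, the same burst shows up as a sub-1% average, a single-digit peak at the slow polling rate, and a fully saturated peak once the sampling window is shorter than the burst itself. The hardware is doing the same thing in all three cases; only the measurement resolution changes.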

When you think about it, that makes sense. The upscaling process has to be ultra quick if it's not to slow down the overall frame rate. It has to take a rendered frame, process it, do whatever calculations are required for the upscaling, and output the full upscaled frame before the 3D pipeline has had time to generate a new frame.

The biggest gaming news, reviews and hardware deals

Keep up to date with the most important stories and the best deals, as picked by the PC Gamer team.

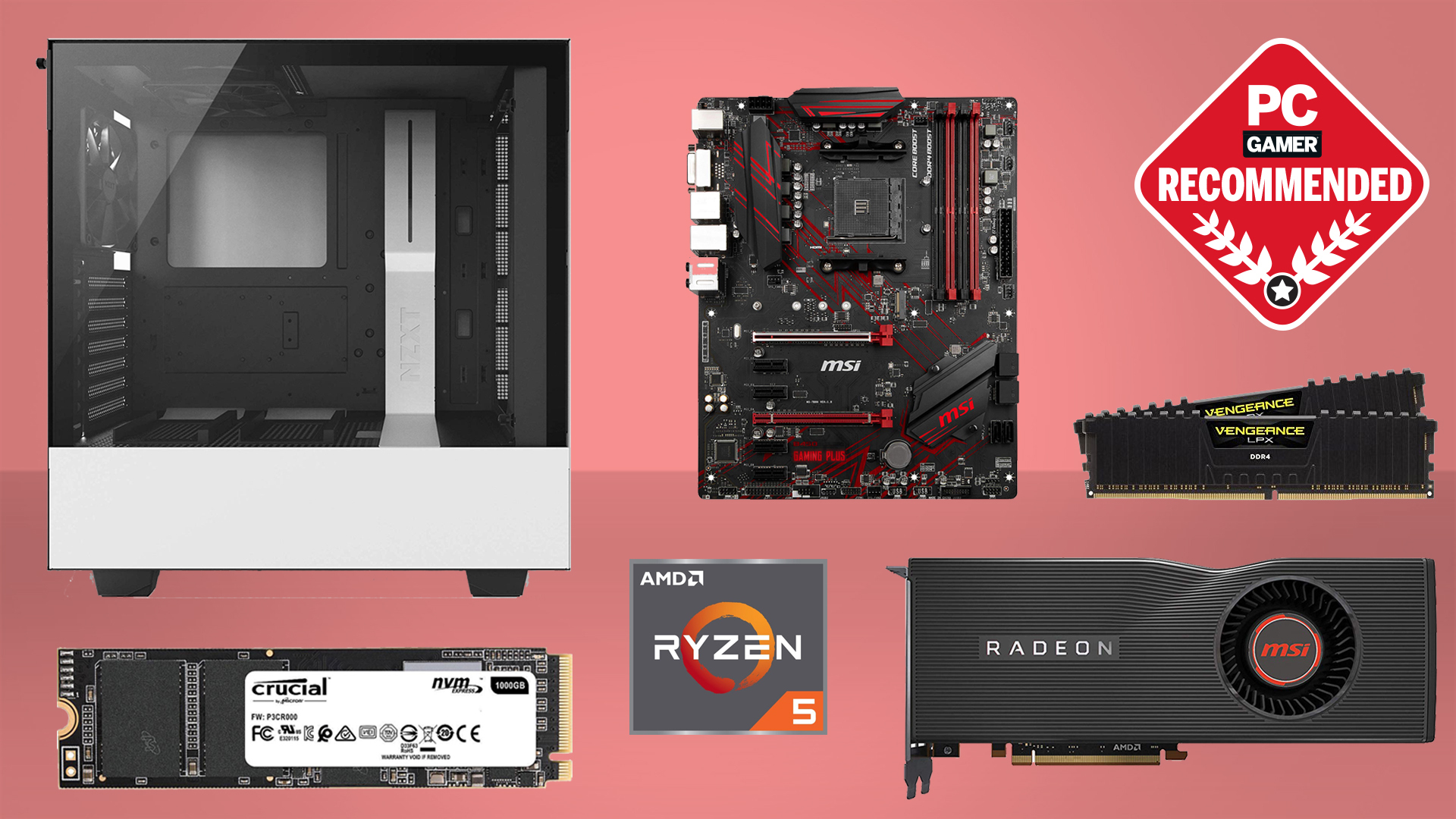

Best CPU for gaming: The top chips from Intel and AMD

Best gaming motherboard: The right boards

Best graphics card: Your perfect pixel-pusher awaits

Best SSD for gaming: Get into the game ahead of the rest

So, what you would expect to find is exactly what Bluedot55 observed: an incredibly brief but intense burst of activity inside the Tensor cores when DLSS upscaling is enabled.

Of course, Nvidia's GPUs have offered Tensor cores for three generations and you have to go back to the GTX 10 series to find an Nvidia GPU that doesn't support DLSS at all. However, as Nvidia adds new features to the broader DLSS feature set, such as Frame Generation, older hardware is being left behind.

What this investigation shows is that while it's tempting to doubt Nvidia's motives whenever it seemingly locks out older GPUs from a new feature, the reality may simply be that the new GPUs can do things old ones can't. That's progress for you.

Jeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.