Our Verdict

At $999 the RTX 4080 would have been a great high-end gaming GPU, but the artificial RTX 3080 Ti price point and the 'unlaunched' 12GB version have backed the latest Ada card into a corner. At the moment it's got the 4K gaming performance against the previous gen, but if the RX 7900 XTX pushes it harder than expected, Nvidia may have to respond on pricing. But I wouldn't bank on it.

For

- Bests both the RTX 3090 and 3080 Ti

- Has the glitzy Frame Generation magic

- Svelte, efficient GPU

Against

- Over-priced

- Over-sized


The new Nvidia RTX 4080 is another speedy graphics card—it bloody should be for $1,200—and when you take DLSS 3 into account you are getting on for twice the performance of the similarly priced RTX 3080 Ti from the last generation. Seriously, Frame Generation is black magic.

But reviewing the RTX 4080 is tougher than being Jen-Hsun's spatula wrangler. Though it's a lot more straightforward now there's only a 16GB version and it doesn't come with some additional 12GB half breed trailing it around. But while I feel that I've got all the data necessary to make a decision on how good a GPU the new Ada Lovelace card is right in this moment, I'm ultra-aware that moment isn't going to last long.

You could always argue the temporary nature of a GPU's initial success (or failure) is a regular issue with graphics card reviews; they can only ever exist as a snapshot in time in light of the inevitable changes that availability, pricing, and competition will wreak following any release.

But this generation feels different to any other, and the RTX 4080 release more so. The struggle I'm having, you see, is that AMD (likely deliberately) announced its Radeon RX 7900 XTX just ahead of this GeForce release. And that is AMD's top GPU from this new generation, which is specifically targeting the RTX 4080, and not the Nvidia RTX 4090.

Knowing that, it almost feels like I'm reviewing this card with just half the data I really need to be able to make a fully informed decision. As it is, I can probably extrapolate just enough from what little AMD has let slip officially to make a call. But it's still a bit of a stretch.

What's also a stretch is any form of justification for the pricing of modern graphics cards.

During a time of extreme economic difficulty and uncertainty across the globe, it's not a great look for both the main GPU makers to reveal their next generation graphics cards with the starting price being $900 at best. There will be arguments the $1,200 RTX 4080's performance over and above the RTX 3080 Ti renders it an unqualified success. But I have thoughts on that, too.

Nvidia RTX 4080 specs

What's inside the RTX 4080?

I guess the highlight here is the fact this is the RTX 4080 16GB card, not the 'unlaunched' RTX 4080 12GB. But the key to why Nvidia ended up having to kill (however temporarily) the 12GB card lies not just in the amount of memory it sported, but the actual GPU makeup of the chip itself.

This RTX 4080 16GB card is using the second-tier Ada Lovelace GPU, the AD103 chip. The 12GB card was set to use the AD104, which is a third-tier GPU, and realistically only suitable to go into an RTX 4070-series card, not some bastardised RTX 4080. Which I guess is where we're going to see it next pop up, either with or without the 'Ti' suffix.

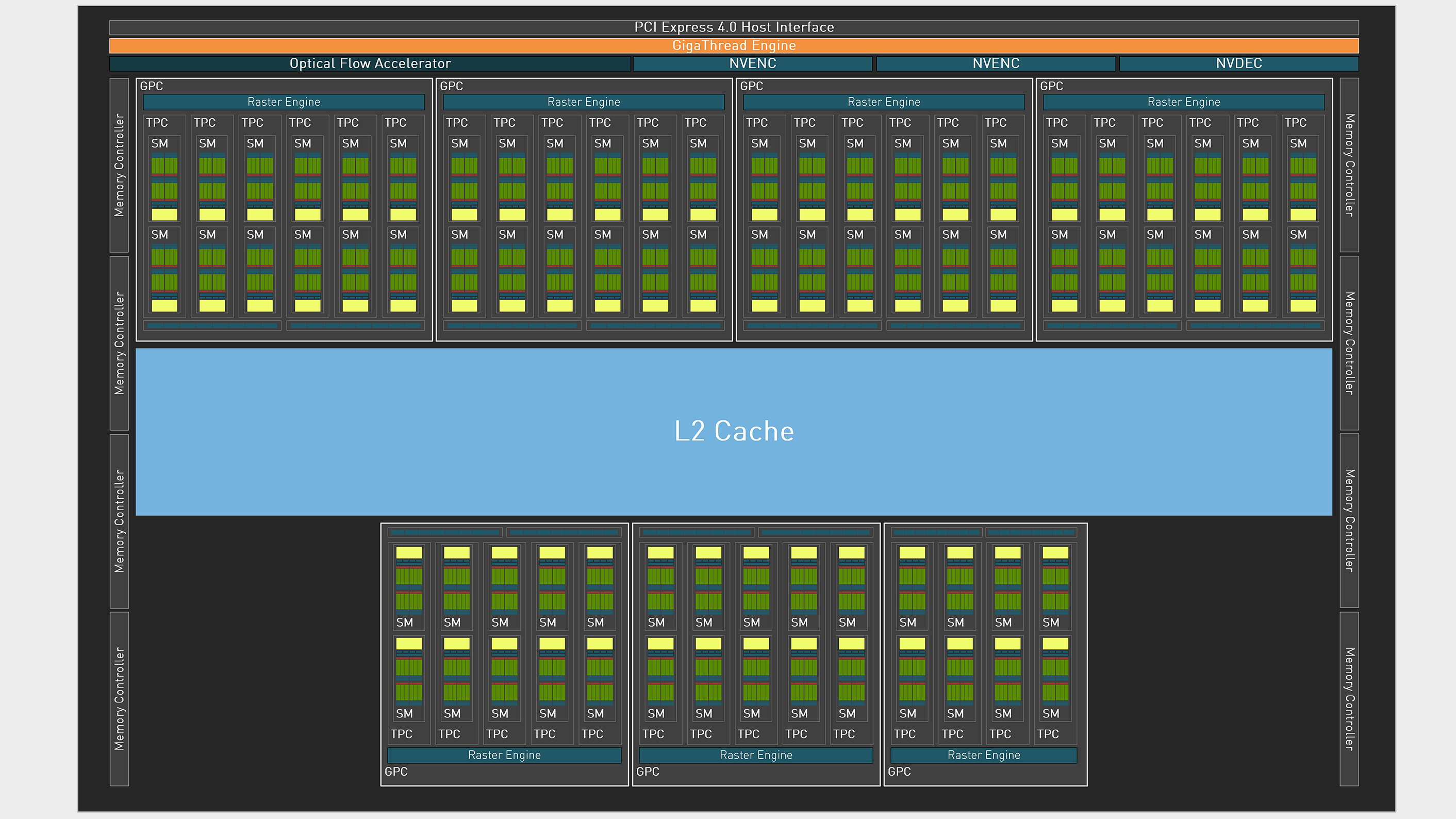

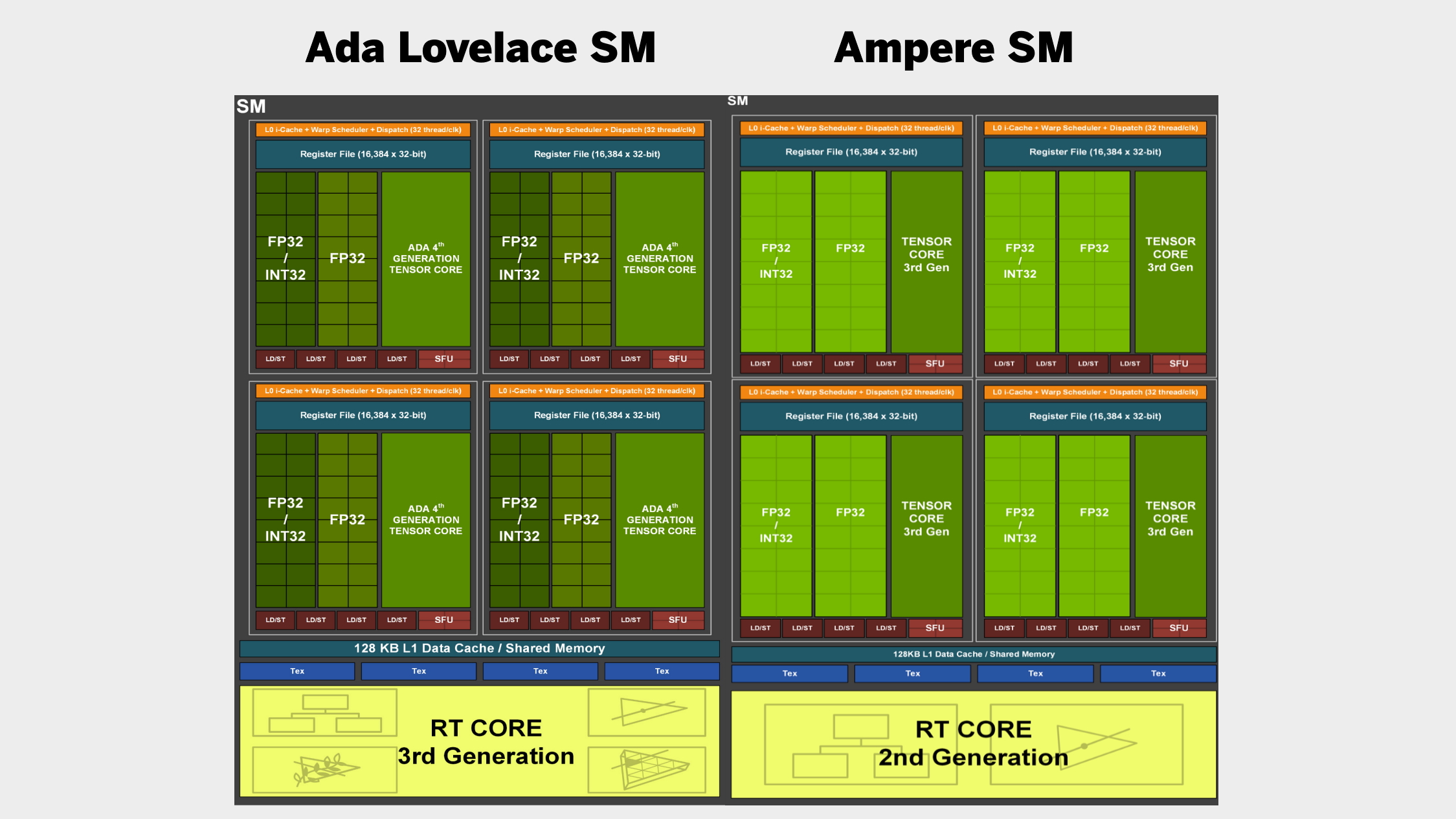

However the AD104 stuff shakes out in the final reckoning, the RTX 4080's AD103 GPU contains all the architectural goodness that made the RTX 4090 such a powerful new chip. That means you get the fourth-gen Tensor Cores and third-gen RT Cores, as well as the frame generating magic of DLSS 3. Inevitably, though, this far smaller slice of silicon cannot hope to contain the same amount of individual componentry as the top AD102.

The RTX 4080's GPU is around 62% the size of the RTX 4090's, contains around 60% of the number of transistors, and is home to around 59% of the CUDA cores the top consumer Ada Lovelace GPU houses. Which might have you questioning why it costs just 25% less than the big boi card.

The chip used in the RTX 4080 isn't the full AD103 config, however. That has 80 SMs and 10,240 CUDA cores, as opposed to the 76 SMs and 9,728 CUDA cores the RTX 4080 design contains.

But it's still mighty impressive when you compare the new TSMC 4N AD103 against the top Samsung 8nm GA102 of the previous generation. That was a 628.4mm² GPU with 28.3 billion transistors inside it. This is a 378.6mm² chip with 45.9 billion transistors inside. If you wanted a quick numerical snapshot of the new nominally 4nm GPU lithography then it's right there in the disparity between the reduction in die size and the massive boost in transistor count.
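To put a number on that disparity, here's a quick sketch that turns the die sizes and transistor counts quoted above into a rough transistor density. The function name and the MTr/mm² unit are my own choices for illustration, and this is a simple average across the whole die rather than anything TSMC or Samsung would quote for their nodes.

```python
# Die area (mm²) and transistor count (billions) as quoted in the review.
chips = {
    "AD103 (RTX 4080)": {"area_mm2": 378.6, "transistors_bn": 45.9},
    "GA102 (RTX 3080 Ti)": {"area_mm2": 628.4, "transistors_bn": 28.3},
}


def density_mtr_per_mm2(area_mm2: float, transistors_bn: float) -> float:
    """Average density in millions of transistors per square millimetre."""
    return transistors_bn * 1000 / area_mm2


densities = {name: density_mtr_per_mm2(**spec) for name, spec in chips.items()}
for name, density in densities.items():
    print(f"{name}: {density:.1f} MTr/mm²")

# How many times denser the newer chip is, on this crude whole-die average.
density_ratio = densities["AD103 (RTX 4080)"] / densities["GA102 (RTX 3080 Ti)"]
print(f"Density ratio: {density_ratio:.2f}x")
```

On those figures the AD103 works out at roughly 121 million transistors per mm² against around 45 million for the GA102, or about 2.7 times the density.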

| | GeForce RTX 4080 | GeForce RTX 3080 Ti |
|---|---|---|
| GPU | AD103 | GA102 |
| Lithography | TSMC 4N | Samsung 8N |
| CUDA cores | 9,728 | 10,240 |
| SMs | 76 | 80 |
| RT Cores | 76 | 80 |
| Tensor Cores | 304 | 320 |
| ROPs | 112 | 112 |
| Boost clock | 2,505MHz | 1,665MHz |
| Memory | 16GB GDDR6X | 12GB GDDR6X |
| Memory speed | 22.4Gbps | 19Gbps |
| Memory bandwidth | 717GB/s | 912GB/s |
| L1 / L2 cache | 9,728KB / 65,536KB | 10,240KB / 6,144KB |
| Transistors | 45.9 billion | 28.3 billion |
| Die Size | 378.6mm² | 628.4mm² |
| TGP | 320W | 350W |
| Price | $1,200 / £1,269 | $1,200 / £1,200 |

Despite having a GPU that's smaller than the Ampere chip at the heart of the RTX 3060 Ti, however, Nvidia has still opted to use the exact same MASSIVE cooler that it picked for the RTX 4090 Founders Edition. The add-in board partners (AIBs) have followed suit, too; I've got a PNY RTX 4080 that's the size of a small moon.

It's got a mighty familiar PCB as well, using a modified PG136 like the RTX 4090 Founders Edition. That means the same cutout and heavily power phased board to cope with the 320W Total Graphics Power (TGP) the RTX 4080 draws.

It's got a familiar 16-pin power connector, too, with a triple-headed adapter as opposed to the quad-head one supplied with the RTX 4090. We still don't know quite why the 16-pin adapters out in the wild have been melting, which is not a good look for a super expensive, high-powered graphics card, but Nvidia is persevering with it. As are the AIBs.

It might seem strange to say, but I bet there will be a host of people trying to get an RTX 4080 adapter to melt as soon as they're launched.

Then we come to the memory. As has been widely reported, this RTX 4080 comes with 16GB of GDDR6X memory, though it's maybe not as well reported that it comes with a 256-bit aggregated memory interface. Compared with the 384-bit bus the identically priced RTX 3080 Ti shipped with, that feels a bit limited. Again, we're back to referencing the RTX 3060 Ti when it comes to that particular spec in the Ampere generation.
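If you want to see what that narrower bus means in practice, peak memory bandwidth is just the per-pin data rate multiplied by the bus width, divided by eight to get from bits to bytes. This is a minimal sketch of that standard calculation using the memory speeds from the spec table (22.4Gbps and 19Gbps) and the bus widths above; the function name is mine, and these are theoretical peaks, not measured throughput.

```python
def bandwidth_gb_s(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s: per-pin rate x bus width / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


# RTX 4080: 22.4Gbps GDDR6X on a 256-bit bus.
rtx_4080_bw = bandwidth_gb_s(22.4, 256)
# RTX 3080 Ti: 19Gbps GDDR6X on a 384-bit bus.
rtx_3080_ti_bw = bandwidth_gb_s(19.0, 384)

print(f"RTX 4080:    {rtx_4080_bw:.1f} GB/s")
print(f"RTX 3080 Ti: {rtx_3080_ti_bw:.1f} GB/s")
print(f"RTX 4080 as a share of RTX 3080 Ti: {rtx_4080_bw / rtx_3080_ti_bw:.0%}")
```

That lands on the 717GB/s and 912GB/s figures in the spec table, so even with the faster memory the new card has a little under 80% of the older card's raw bandwidth.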


What likely mitigates that and helps the RTX 4080—with its fewer CUDA cores, fewer texture units, and weaker memory bus compared with the RTX 3080 Ti—is the fact that the Ada architecture follows current chip advancement procedure and jams a ton more cache into the die. We're talking ten times the amount of L2 cache in the RTX 4080 as with the RTX 3080 Ti.

It's also using faster 22.4 Gbps memory, courtesy of a higher clock speed. And when we're talking about clocks, the actual GPU clock itself is absolutely worth referencing here—Nvidia rates the RTX 4080 with a 2,505MHz boost clock, while the RTX 3080 Ti comes with a 1,665MHz boost.

Conservative isn't the word here, though, because in my testing I was getting an average clock speed of over 2,700MHz compared with 1,700MHz on my RTX 3080 Ti Founders Edition. Again, Ada is offering a 1GHz clock speed bump over the previous generation.

And when it comes to raw rasterised gaming performance, I'd say it's leaning heavily on both that clock speed bump and the extra L2 cache to deliver its lead over the RTX 3080 Ti.

Nvidia RTX 4080 benchmarks and performance

How does the RTX 4080 perform?

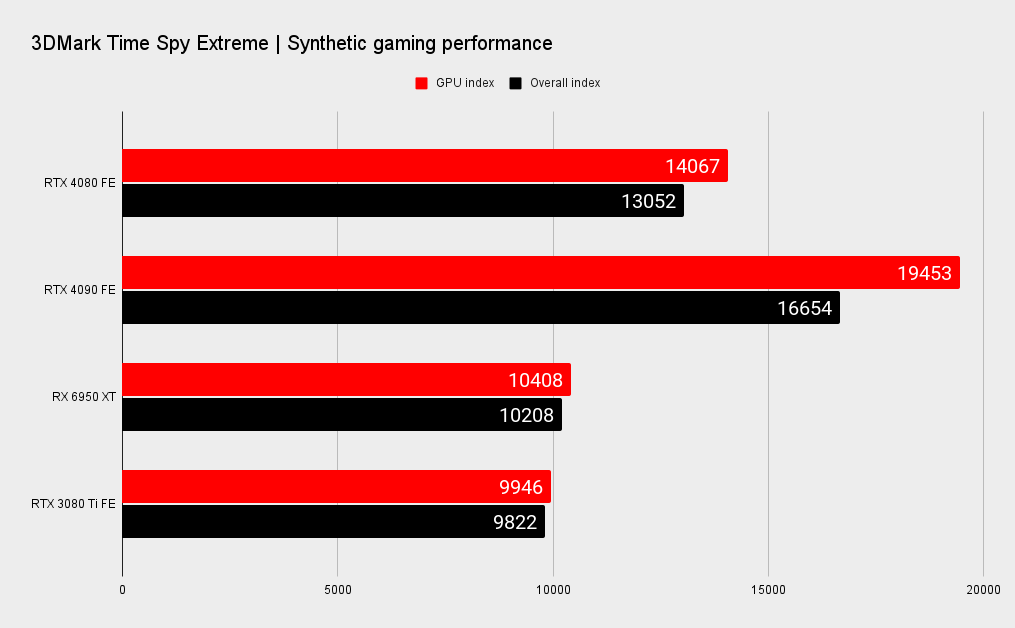

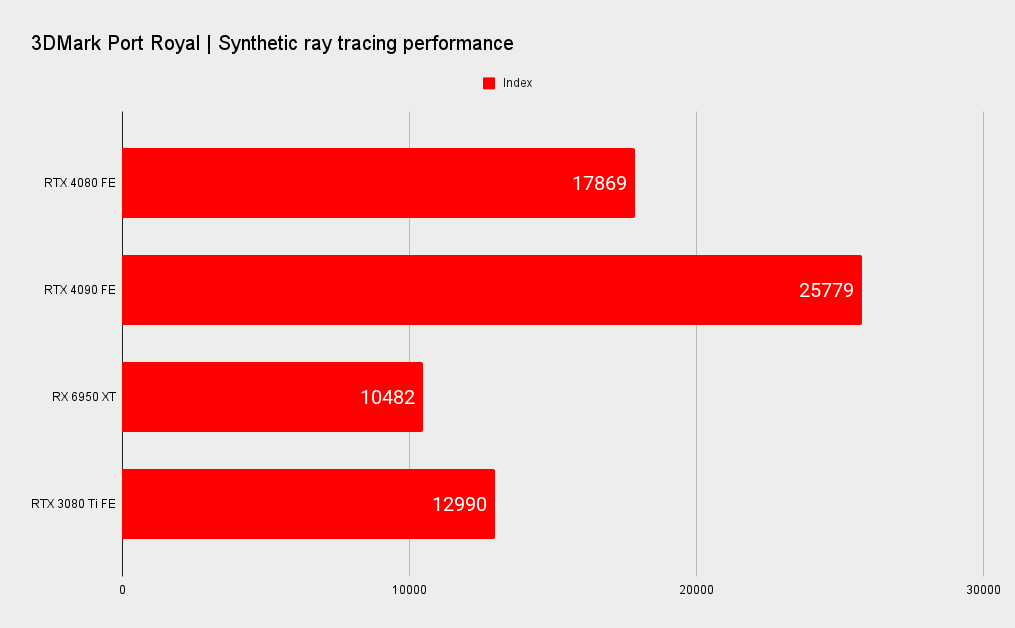

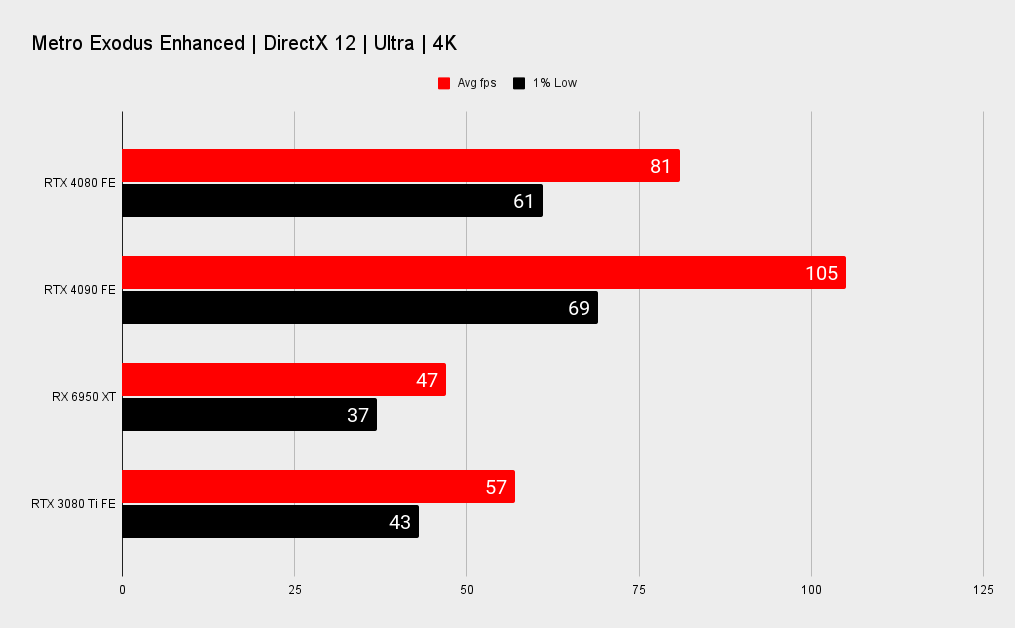

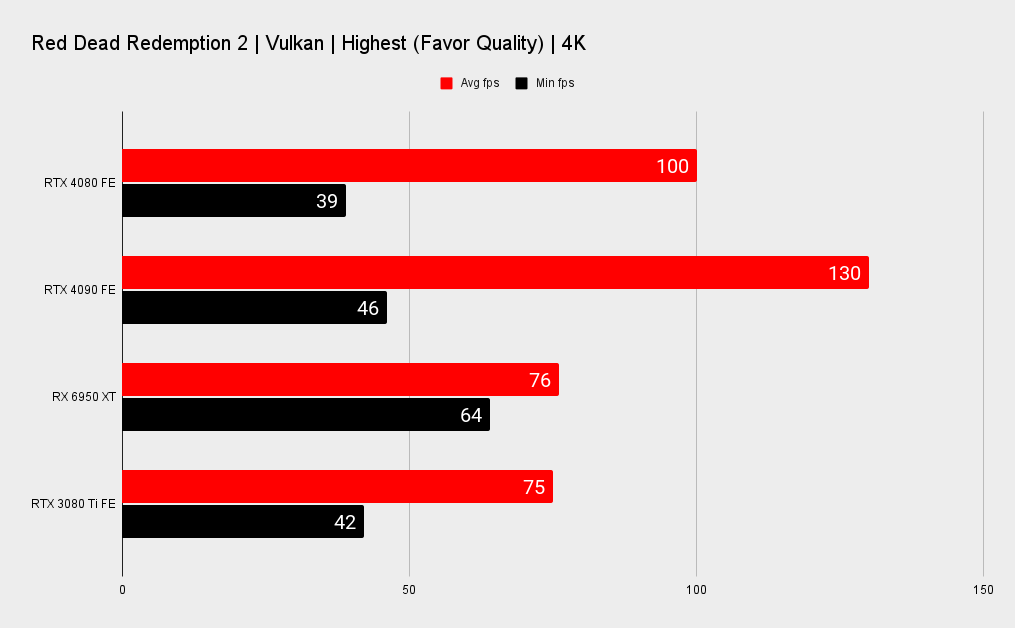

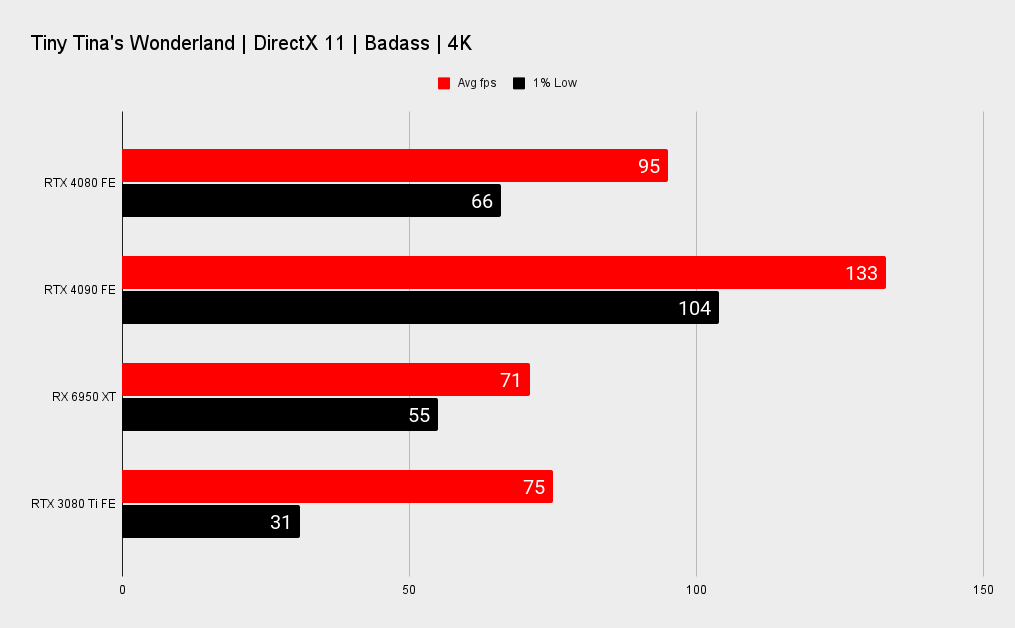

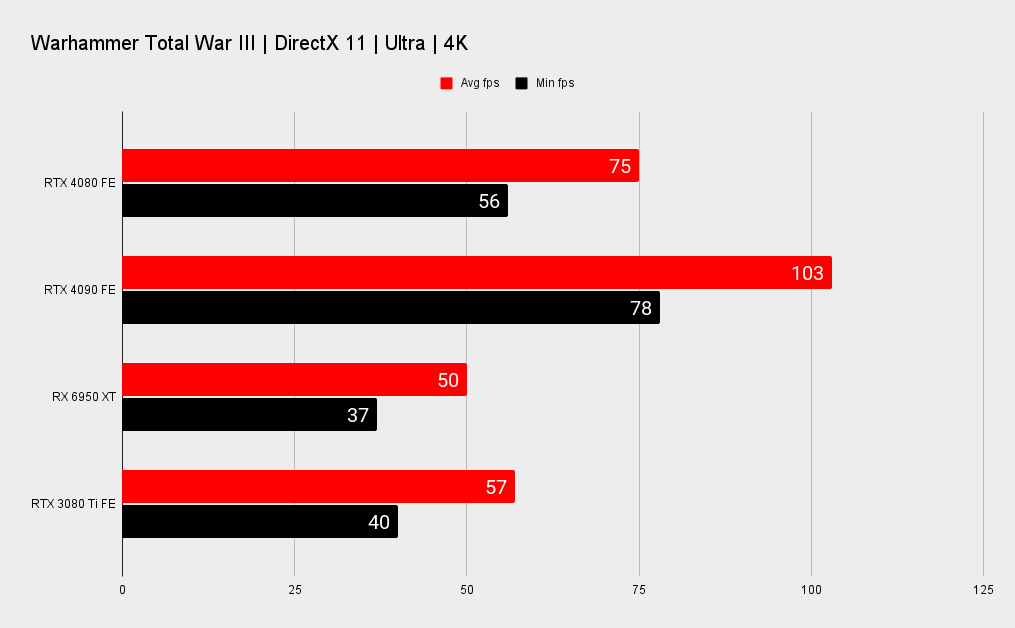

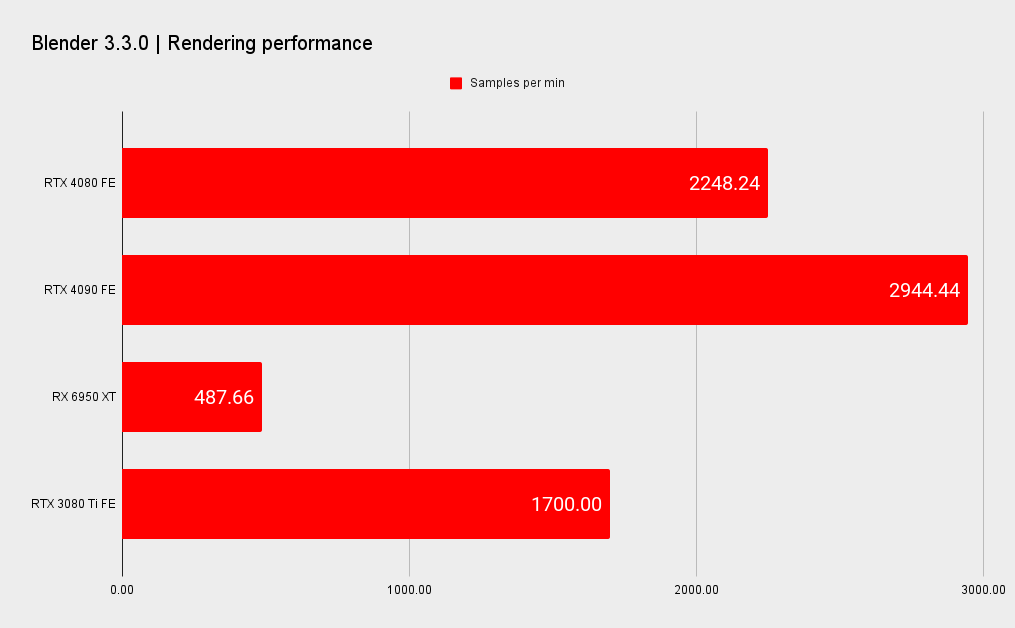

For all the technical nuances of a GPU that's around 60% the size of the AD102 chip of Nvidia's RTX 4090 flagship in most respects that count, the RTX 4080 is only on average 33% slower than its slightly older sibling across our benchmarking suite. And that's in the somewhat rarefied air of 4K ray traced gaming at ultra presets.

Flip it around and that just highlights how much extra power and silicon is required to squeeze much more performance out of a GPU architecture. It's certainly not a linear graph when it comes to what is required to nail high frame rates in modern games.

4K gaming performance

CPU: Intel Core i9 12900K

Motherboard: Asus ROG Z690 Maximus Hero

Cooler: Corsair H100i RGB

RAM: 32GB G.Skill Trident Z5 RGB DDR5-5600

Storage: 1TB WD Black SN850, 4TB Sabrent Rocket 4Q

PSU: Seasonic Prime TX 1600W

OS: Windows 11 22H2

Chassis: DimasTech Mini V2

Monitor: Dough Spectrum ES07D03

Does that make the RTX 4080 more of a sweet spot for non-4K gaming than the RTX 4090? Maybe, but if you're buying either RTX 40-series card to play games at 1080p then I'd maybe suggest something's not quite right when it comes to your perception of propriety or value. Still, the RTX 4080 is practically as good as the flagship Ada card when it comes to sub-4K resolutions where you're arguably still bound by the limits of your processor.

It's interesting then that Nvidia's latest drivers have actually improved the GPU performance of its cards compared with the RTX 4090 release software. If you're doing PC gaming right, and running the RTX 4090 at 4K, you will not notice the difference, but the new drivers used in testing the RTX 4080 have shown a definite increase at the lower resolutions, where the powerful GPUs would have been CPU bound before.

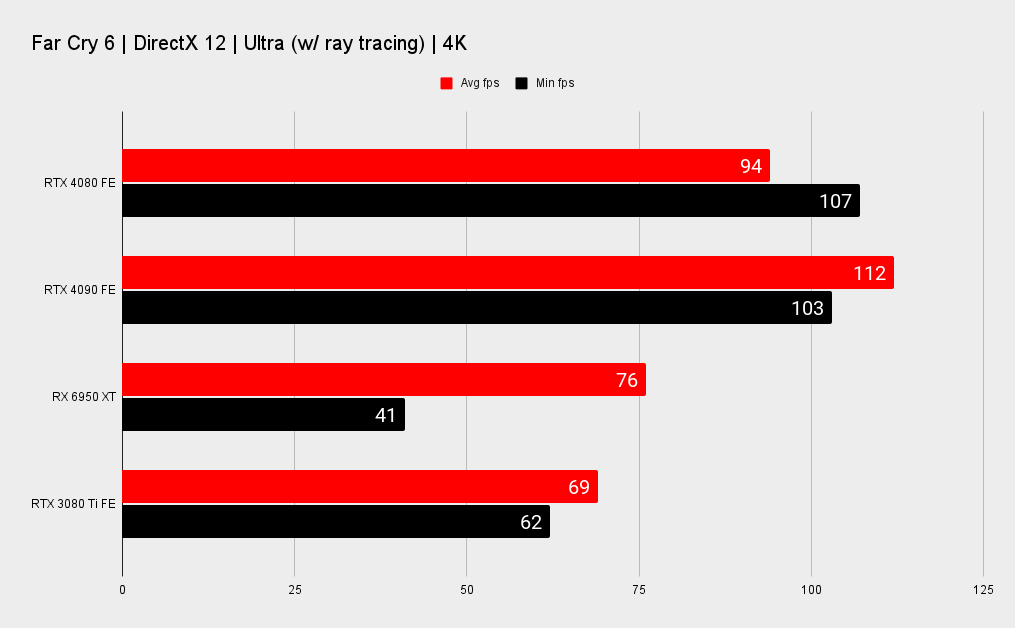

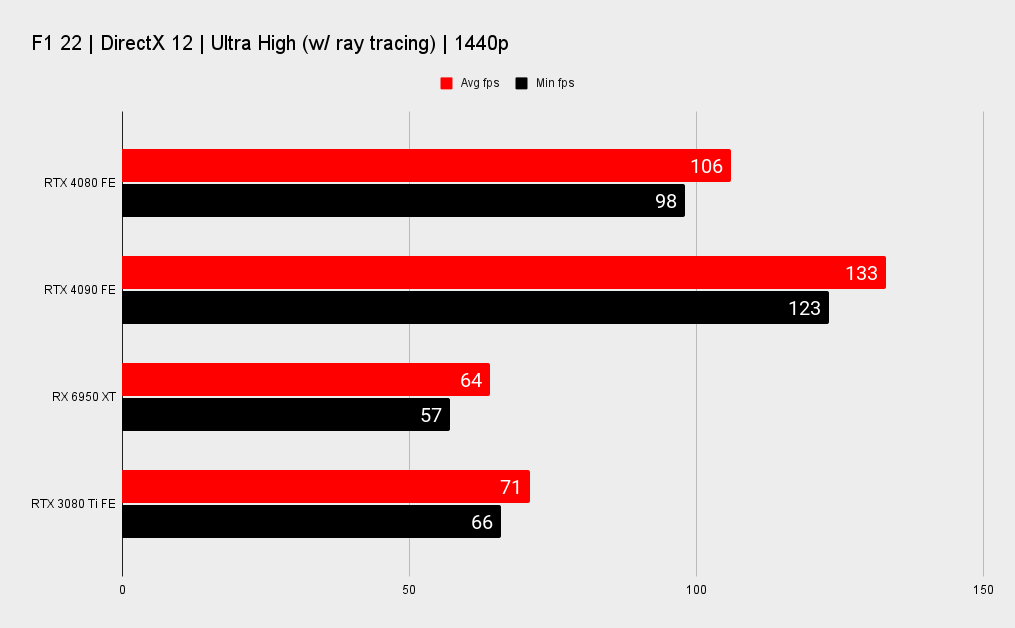

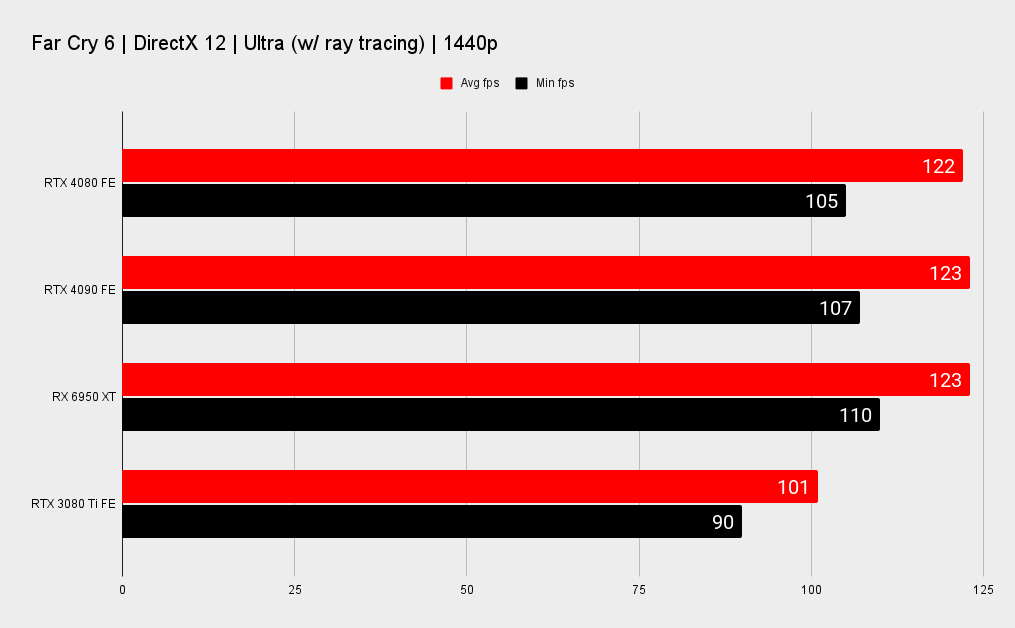

In games where previously the 1080p and 1440p scores were more or less identical the new drivers have given it that little bit extra, to the point where I was initially surprised to see the RTX 4080 actually taking the lead over the RTX 4090 in a host of CPU bound games. I've since retested the Ada monster on those titles with this latest software, and the rising tide has definitely lifted all the silicon boats in the water. In Far Cry 6 and F1 22 I've seen a 12% and 16% increase in 1080p performance.

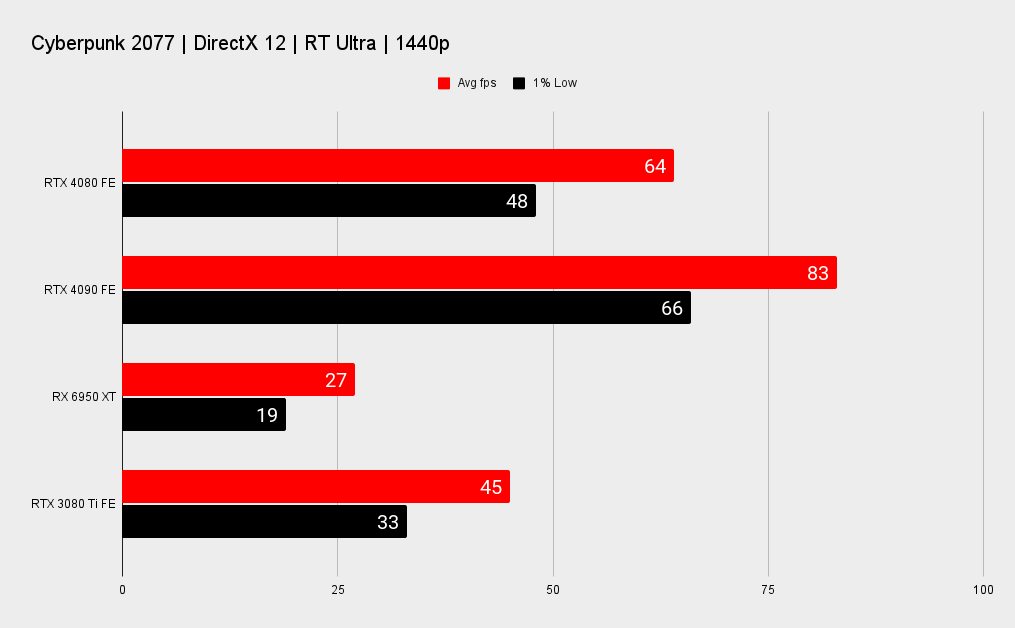

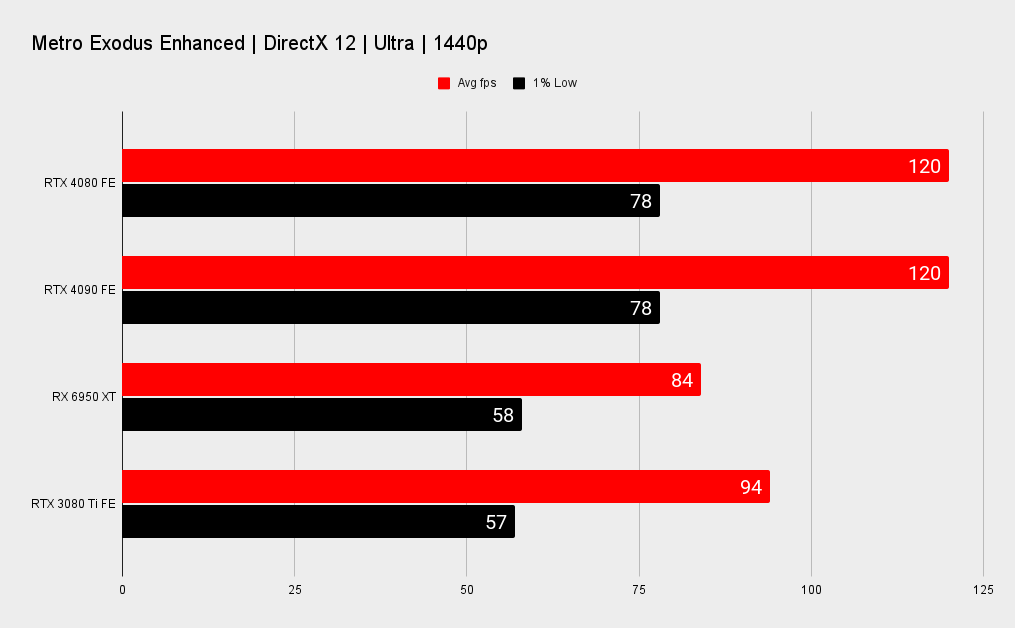

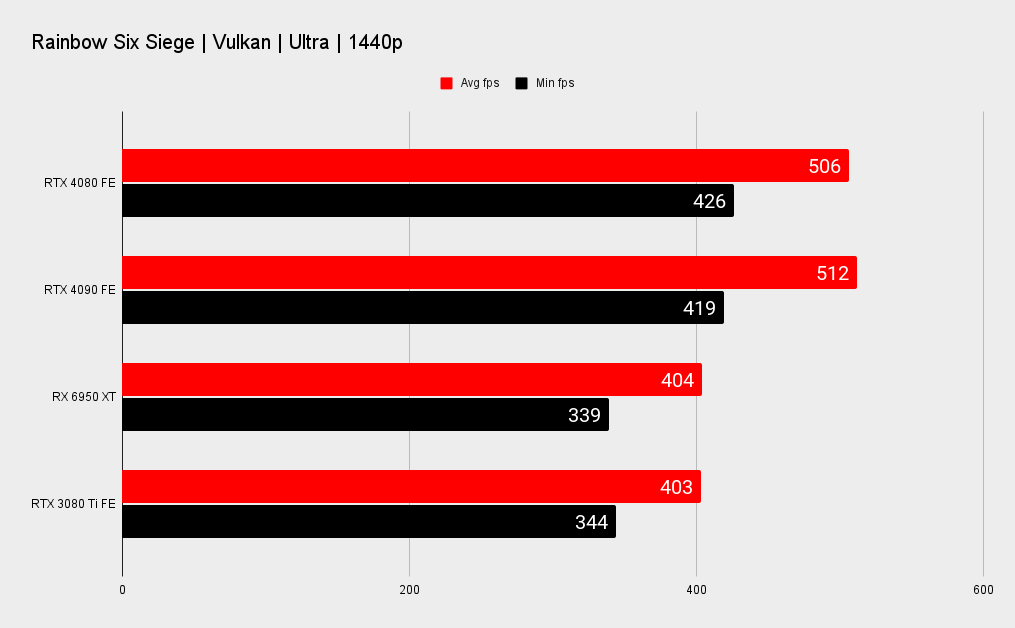

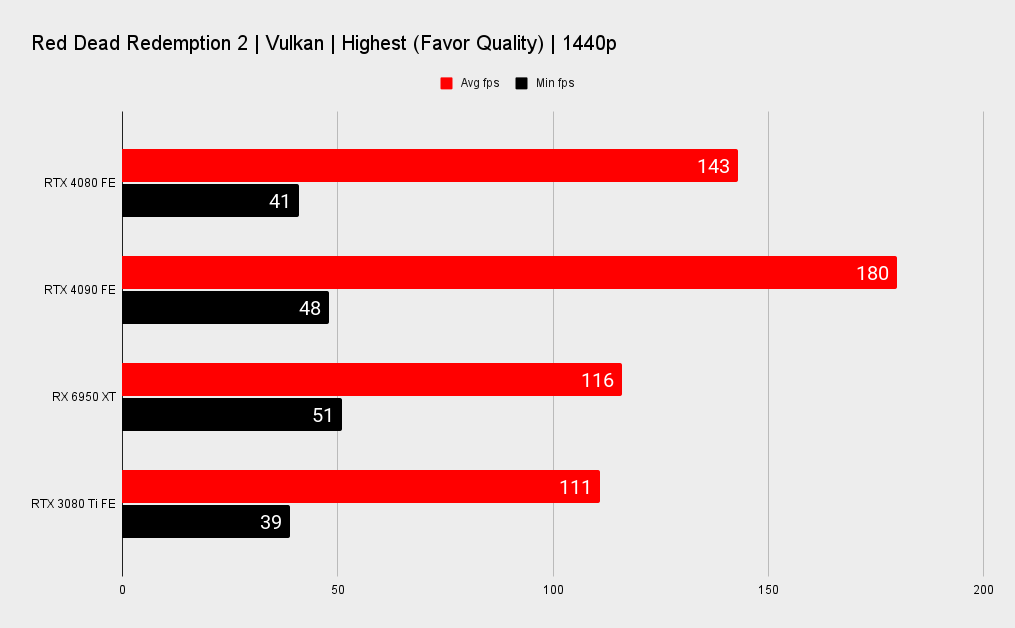

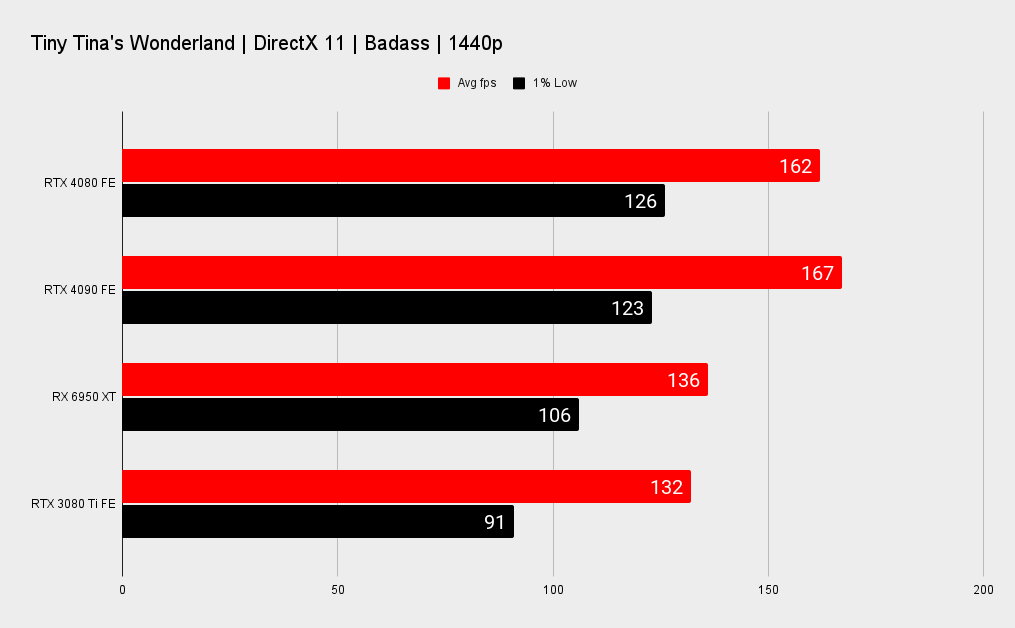

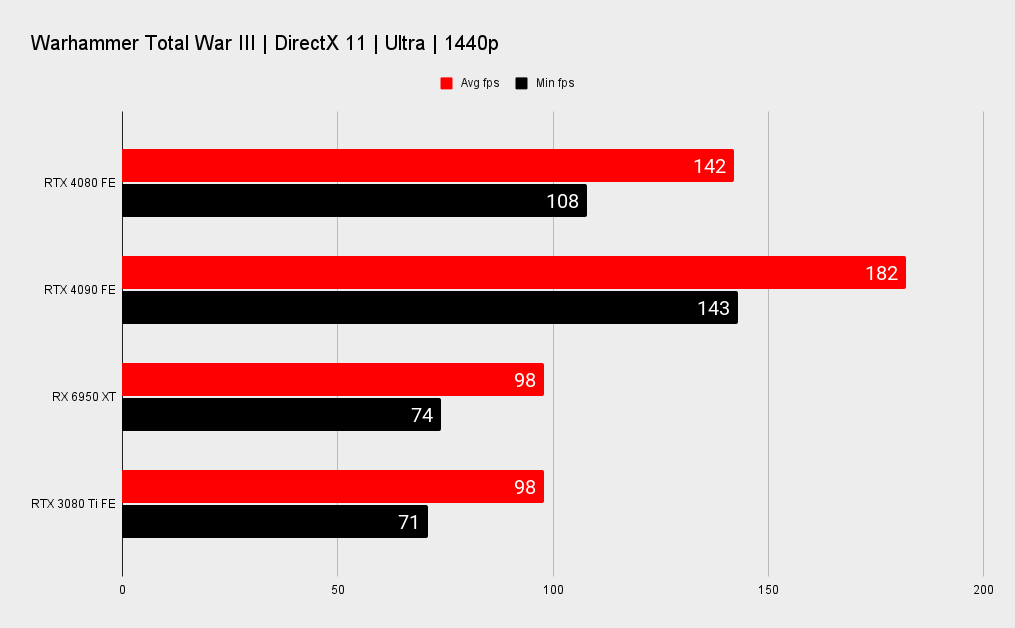

1440p gaming performance

That's all against the flagship RTX 40-series card, but how about the $1,200 GPU it's ostensibly replacing, the RTX 3080 Ti? Averaged across our benchmarking suite, the RTX 4080 is around 38% quicker than the Ampere card. That's a hefty performance hike for the same price.

Throw the $100 cheaper RX 6950 XT to the Ada wolves, however, and AMD's most powerful graphics card gets savaged. The ray tracing titles in our suite are the ones with blood on their hands here, but you're looking at the RTX 4080 being 68% faster on average. In the games where rasterised performance is all that's required, however, the gap is far narrower, with the Radeon landing closer to the mark set by the RTX 3080 Ti.

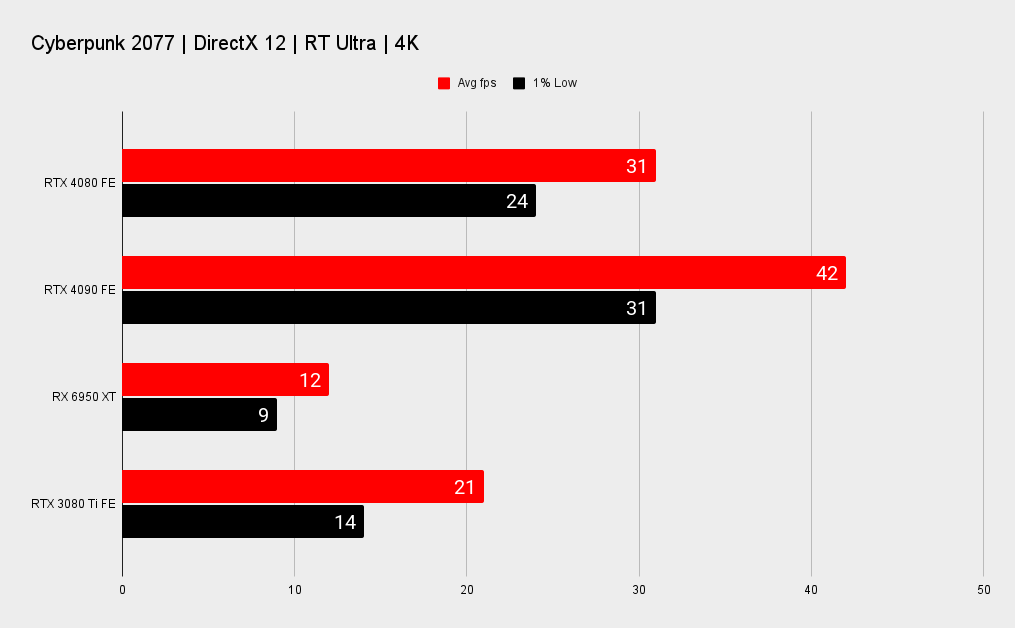

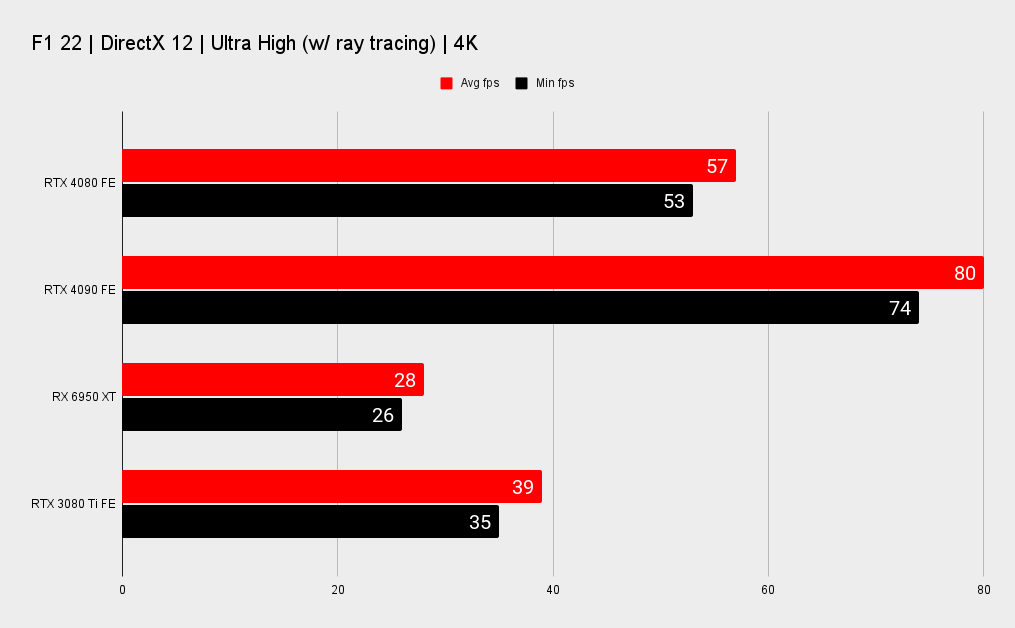

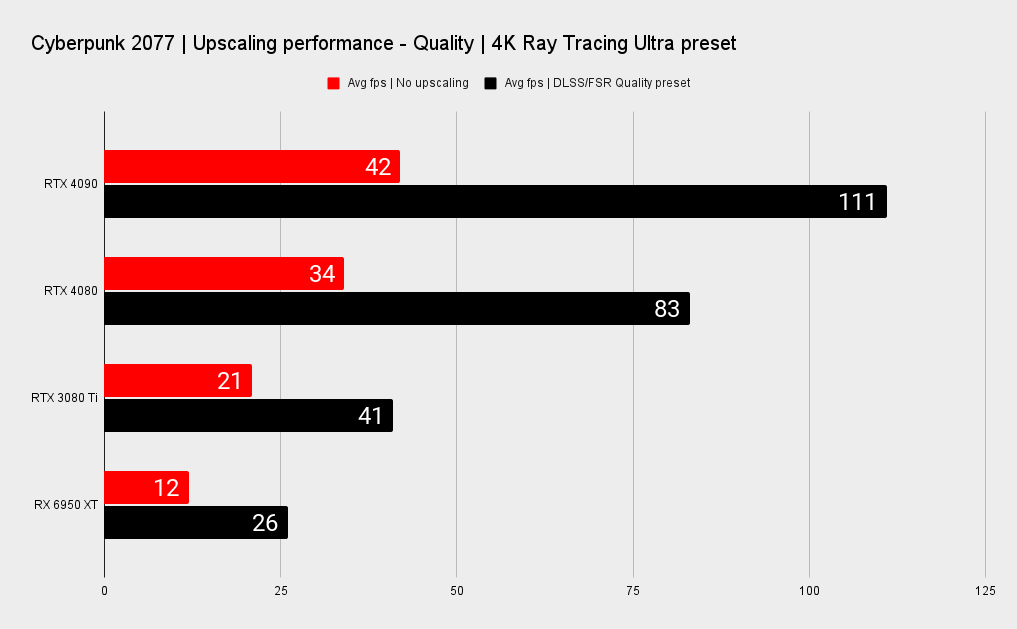

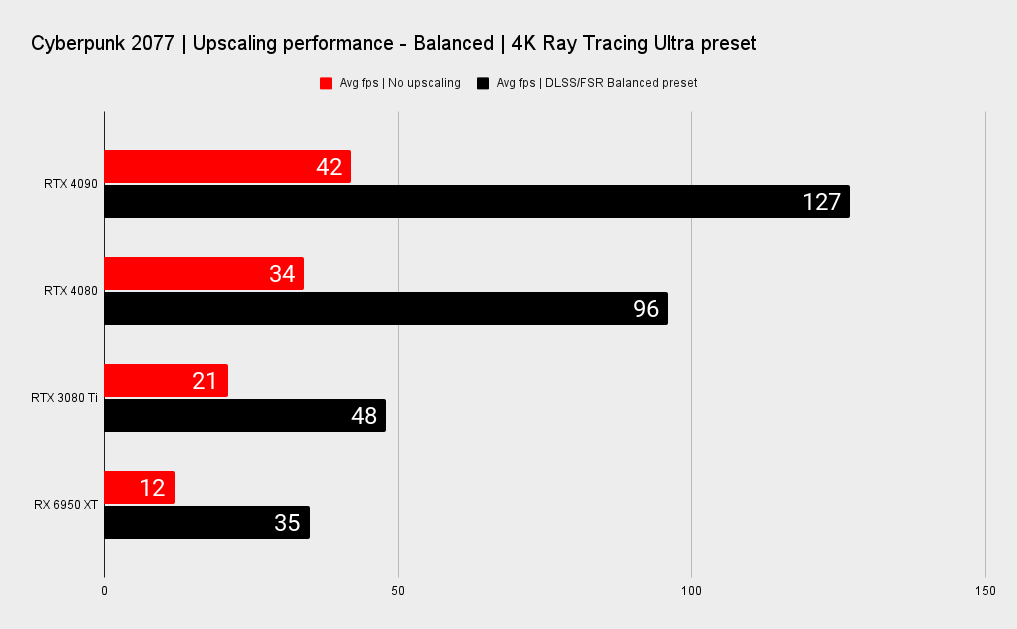

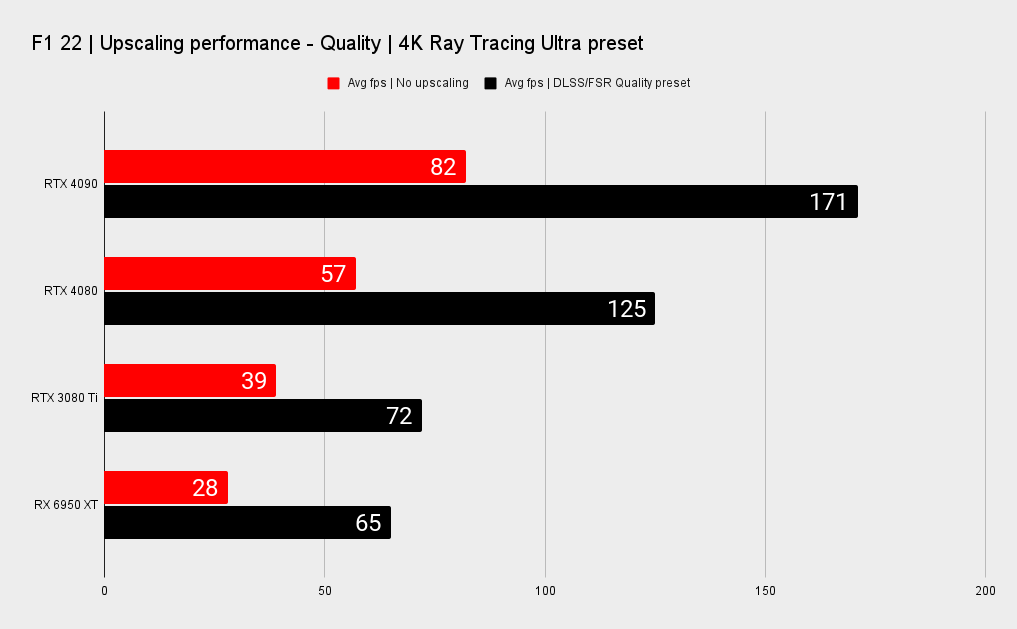

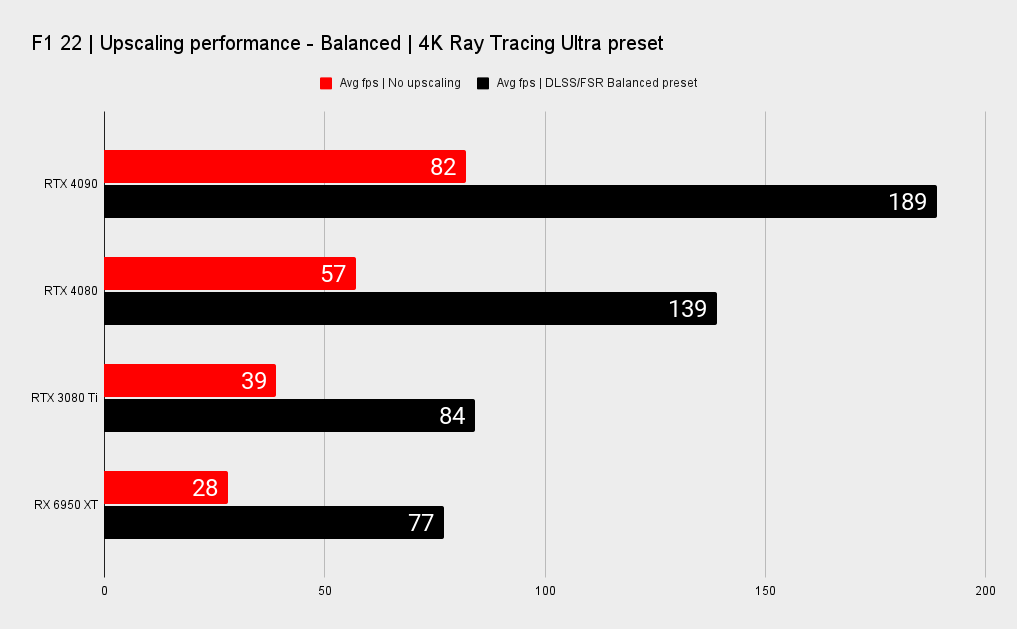

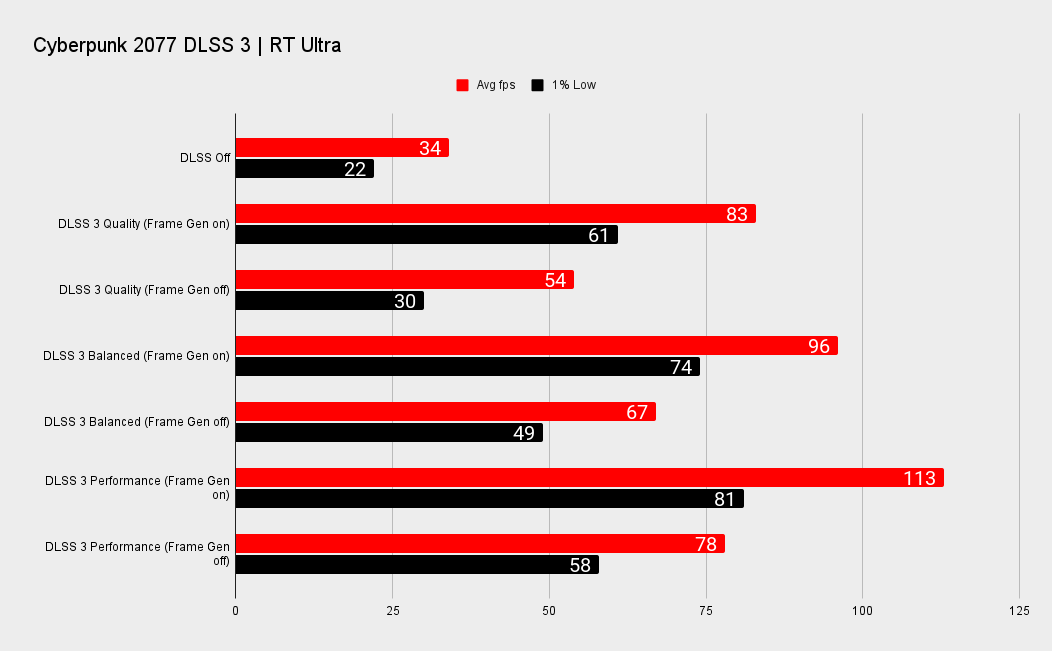

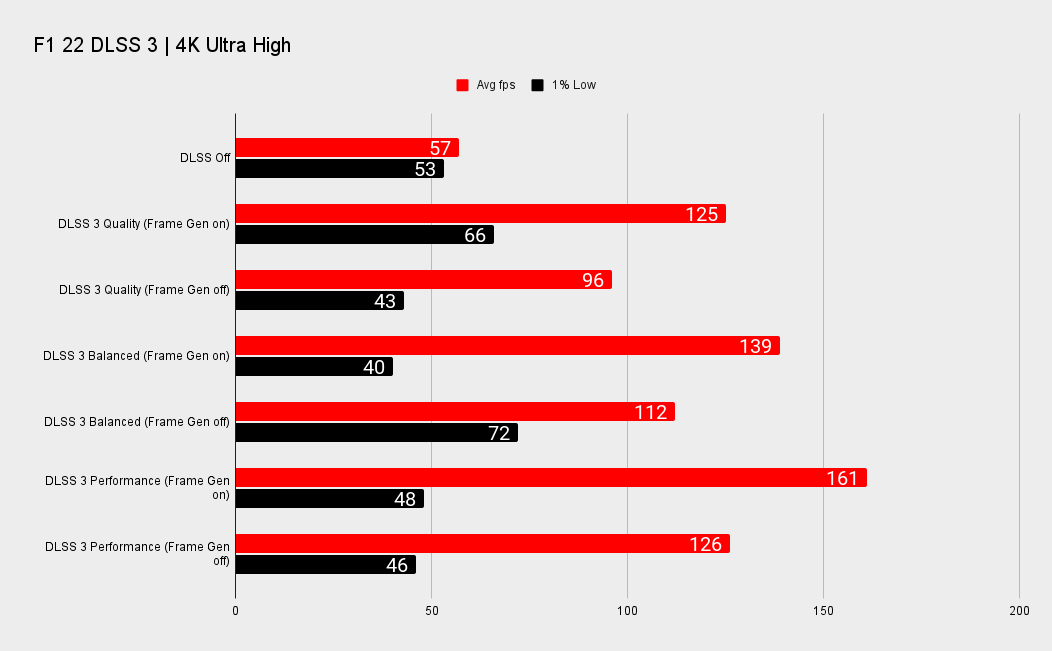

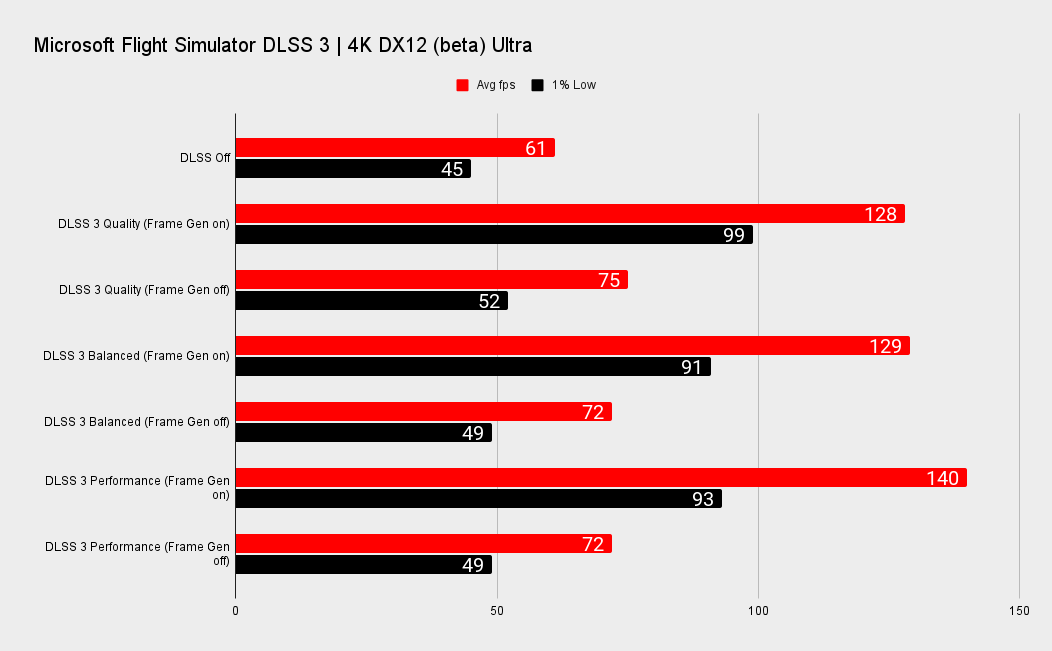

The benefits of Nvidia's frankly incredible (so far) DLSS 3 with Frame Generation are plain to see when you look at what it can do in the likes of F1 22 and Cyberpunk 2077. That's where Nvidia's claims of a far greater performance lead over the RTX 3080 Ti begin to make sense, with the AI upscaling tech delivering an effective doubling of performance at the Quality upscaling level at 4K.

Upscaling performance


But honestly, it's not the comparisons with other cards that I find the most startling with DLSS 3, it's the uplift over native performance and for only a really hard-to-see difference in fidelity. In Cyberpunk 2077, using the 4K ultra ray tracing preset, and the Quality DLSS setting, you're getting a 144% increase in frame rates. And in F1 22, with the same level of settings, it's 131% quicker. In Flight Sim though it's just 110% faster with DLSS 3 with Frame Generation.

I know AMD has claimed to have its own frame generation tech coming to FSR 3, but the green team's frame interpolating feature is already starting to filter into games and is clearly making a tangible difference today.

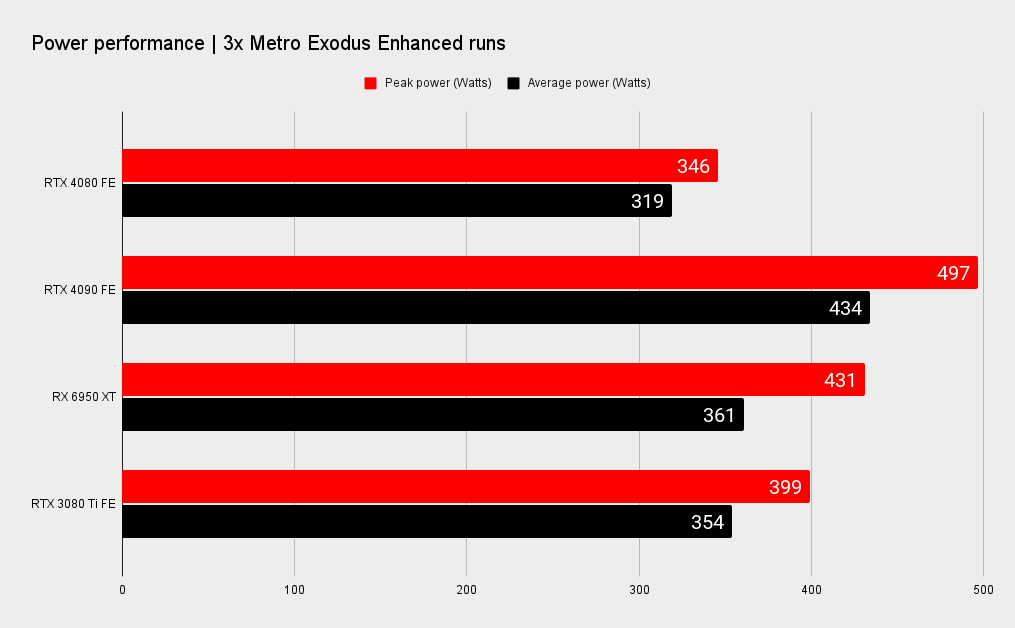

There is, however, still a large part of me really struggling with the hugely cut down AD103 GPU being presented as this $1,200 chip. But the performance numbers are there, and when you mix them with the power figures you're looking at a far more efficient gaming architecture compared with Ampere.

System performance

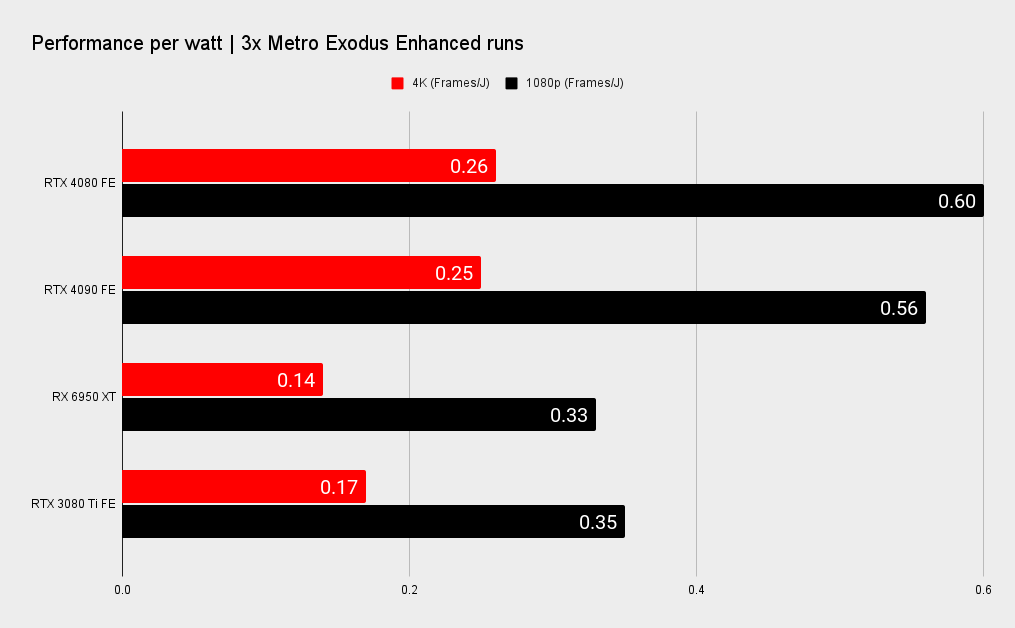

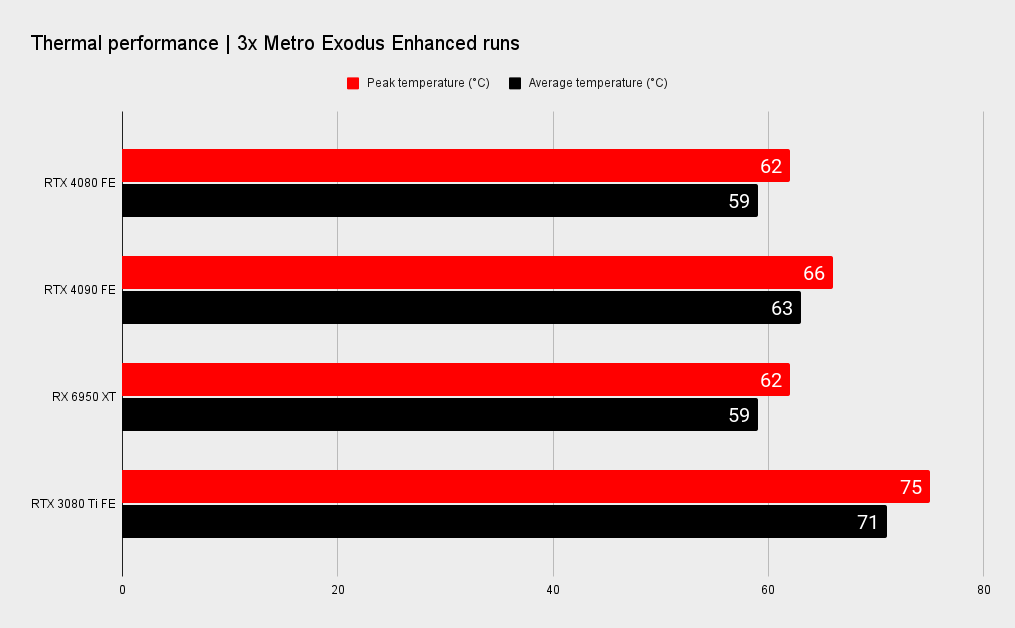

Again, it's that vs. RTX 3080 Ti and RX 6950 XT comparison that really hits home here. It uses around 10% less power than the previous generation Nvidia card and is over 50% more efficient in terms of performance per watt.

But against the Radeon competition, which usually wins out in the efficiency stakes, the difference is even more stark. On average the RTX 4080 is using around 12% less energy compared with AMD's most powerful GPU, and has 20% lower peaks. And when it comes to the performance per watt metrics the latest Ada card is almost twice as efficient.
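As a rough sanity check on those efficiency claims, you can combine the average performance leads quoted earlier (38% over the RTX 3080 Ti, 68% over the RX 6950 XT) with the power savings above. This is only a back-of-the-envelope sketch: it assumes the suite-wide averages can be divided into one another, which isn't how the per-game performance per watt numbers in the charts are actually measured, and the function name is purely illustrative.

```python
def relative_perf_per_watt(perf_gain_pct: float, power_saving_pct: float) -> float:
    """Performance per watt relative to the older card (1.0 means identical)."""
    relative_perf = 1 + perf_gain_pct / 100      # e.g. 38% quicker -> 1.38x
    relative_power = 1 - power_saving_pct / 100  # e.g. 10% less power -> 0.90x
    return relative_perf / relative_power


# RTX 4080 vs RTX 3080 Ti: around 38% quicker on around 10% less power.
vs_3080_ti = relative_perf_per_watt(38, 10)
# RTX 4080 vs RX 6950 XT: around 68% quicker on around 12% less power.
vs_6950_xt = relative_perf_per_watt(68, 12)

print(f"vs RTX 3080 Ti: {vs_3080_ti:.2f}x performance per watt")
print(f"vs RX 6950 XT:  {vs_6950_xt:.2f}x performance per watt")
```

That comes out at roughly 1.5x against the RTX 3080 Ti and about 1.9x against the RX 6950 XT, which squares with "over 50% more efficient" and "almost twice as efficient".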

Nvidia RTX 4080 analysis

How does the RTX 4080 stack up?

What is the RTX 4080 fighting against? In the future it will be the RX 7900 XTX, but right now you're looking at either the RTX 3080 Ti or the RX 6950 XT. And, given the performance of this latest Ada GPU, you wouldn't spend $1,100 on the Radeon card or $1,200 on the GeForce card after looking at those benchmarks above.

But that's not really where things stand these days. Granted, the RTX 3080 Ti is still expensive, but you can find it for around the $1,000 mark if you dig around and ignore the $1,400 options. On the other side, the RX 6950 XT has, like pretty much every single AMD GPU of the last generation, seen a hefty price cut. You can find that card for $800 today. The RX 6900 XT for even less, around $650, nearly half the price of the RTX 4080.

But even at prices that much lower than at launch, they still represent a huge outlay, and I would struggle to recommend either card in the face of the new RTX 40-series today. The AMD card has more of a case, because its rasterised performance is close enough to the RTX 4080's to make you question the extra money you'd have to drop on the new Ada card. But ray tracing, however you feel about it, is well on its way to being a ubiquitous lighting feature of modern games, and the older RDNA 2 architecture really suffers under the reflected glare.

That should all make this a pretty easy recommendation, then. The RTX 4080 comfortably outperforms both the similarly priced cards from the previous generation, most notably the $1,200 RTX 3080 Ti, and therefore is really hitting that gen-on-gen performance uplift we crave.

But neither GeForce card should ever have been a $1,200 GPU.


I feel like the RTX 3080 Ti's pricing was a reaction to Nvidia missing out on cashing in on the unprecedented price hikes the RTX 3080 was seeing over and above the $699 MSRP the company launched it with. It seems to me that Nvidia wanted its piece of the silicon shortage/crypto boom action, and so the RTX 3080 Ti came out with a $1,200 price tag.

And that has made it all too easy for Nvidia to match this almost artificial price point with its latest Ada card thanks to the fact it outperforms the last-gen GPU on all counts. Though at least the RTX 3080 Ti was still using a massive GA102 chip at its heart. As I've said earlier, Nvidia has pared the silicon back a whole lot to create the AD103 GPU in comparison to the AD102 chip of the RTX 4090.

Generalising, it's 60% the size, has 60% of the transistors, and 60% of the CUDA cores, and yet is 75% of the price of the RTX 4090. If you wanted to do some simple maths the RTX 4080 really ought to be around $960.
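For anyone who wants to see that simple maths written down, here's a small sketch. It uses only the figures in this review: the $1,200 price, the 75% price share, and the rounded 60% silicon share. The RTX 4090 price is back-calculated from those numbers rather than taken from a price list, and the constant names are mine.

```python
RTX_4080_PRICE = 1200   # USD, launch price quoted in the review
PRICE_SHARE = 0.75      # RTX 4080 costs 75% of the RTX 4090's price
SILICON_SHARE = 0.60    # roughly 60% of the die size, transistors, and CUDA cores

# Price of the RTX 4090 implied by the 75% figure.
implied_4090_price = RTX_4080_PRICE / PRICE_SHARE

# What the RTX 4080 would cost if price tracked silicon in a straight line.
silicon_scaled_price = implied_4090_price * SILICON_SHARE

print(f"Implied RTX 4090 price:        ${implied_4090_price:,.0f}")
print(f"Silicon-scaled RTX 4080 price: ${silicon_scaled_price:,.0f}")
print(f"Premium over that figure:      ${RTX_4080_PRICE - silicon_scaled_price:,.0f}")
```

Pricing obviously doesn't scale linearly with die size in the real world, so treat the $960 as a talking point rather than a costing.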

But thanks to the abortive decision to launch with a pair of RTX 4080 cards at $1,200 and $899 respectively—with different levels of memory and wholly different GPUs—Nvidia was locked into this price even once it unlaunched the cheaper 12GB card.

I get the TSMC 4N process node is going to be a pricey one at the beginning of a new generation, but in terms of GPU yield alone, I'd be surprised if the manufacturing margins on the AD103 chip didn't start outstripping the GA102 relatively quickly. That's great for Nvidia, and excellent for its shareholders, but has little benefit for us PC gamers if it's going to maintain an almost artificially high pricing strategy.


And then we come full circle to the point made at the beginning of this review; how will it stack up against the upcoming Radeon RX 7900 XTX? I can't help but feel we might be looking at a very close-run thing. I'm expecting the new AMD card to perform at a similar level to the RTX 3090, and for a $999 card that would make it tempting compared to a slightly quicker $1,200 RTX 4080, especially with its improved ray tracing capabilities.

I think AMD will lose on performance for the most part but will sit comfortably lower in terms of pricing.

But therein lies the rub. Though the $999 MSRP will come with the reference edition cards from AMD, and likely a few Sapphire and ASRock cards at launch, too, there will be myriad $1,000+ overclocked versions doing the rounds. Traditionally those pricier cards become the norm as initial launch stock runs dry. And when the prices of the RTX 4080 and RX 7900 XTX get close the decision between them will likely become anything but.

But let's lob in the inevitable premium priced products Nvidia's board partners will unleash post-launch, too. As always, I would recommend the Founders Edition, or any MSRP version, over any overclocked card. Factory overclocked GPUs simply aren't worth the ridiculous price premium attached to them. Even ahead of launch you can see AIB cards pushing towards the price of the RTX 4090. In fairness that's more prevalent in the UK than in the US, where there's more reasonable pricing.

Nvidia RTX 4080 verdict

Should you buy an RTX 4080?

Taking everything else out of the equation, the fact that Nvidia can lop so much of the GPU good stuff from its AD103 silicon and still perform at a level that's just a third lower than the far bigger AD102 core means it delivers what you'd want from the RTX 4080. It's fast, the GPU is impressively small (though the shroud is unfeasibly enormous), and I'm deeply in love with DLSS with Frame Generation.

It's just that $1,200 price tag that stings.

Nvidia has a history of pricing cards purely according to performance rather than the actual underlying silicon. Looking back as far as the Kepler architecture and the GTX 680, the company has made performance and efficiency leaps and priced up nominally lower spec GPUs accordingly.

As a business it's the right thing to do in a capitalist market. But when you're looking at the comparative specs of the RTX 4080, RTX 3080 Ti, and RTX 4090, it's tough not to think this is a GPU that shouldn't be priced at this prohibitive level. If the RTX 4080 came out at $999 that would be a far more reasonable price given the cut-down silicon it's using and the relative 40-series performance. Throw in the spectre of a brutal macroeconomic climate and that feeling's only going to be compounded.

At $999 the RTX 4080 would have been a great high-end gaming GPU, but the artificial RTX 3080 Ti price point and the 'unlaunched' 12GB version have backed the latest Ada card into a corner. At the moment it's got the 4K gaming performance against the previous gen, but if the RX 7900 XTX pushes it harder than expected, Nvidia may have to respond on pricing. But I wouldn't bank on it.

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.