Our Verdict

The new Nvidia card houses a monster of a GPU, tearing up the Turing generation and making ray traced gaming worthwhile. And this Founders Edition is the ultimate expression of the GeForce RTX 3080.

For

- Incredible gen-on-gen fps boost

- Makes 2080 Ti look mid-range

- Ray tracing isn't a sacrifice

Against

- Needs a beefy PSU


It feels almost redundant to call the new Nvidia GeForce RTX 3080 the fastest graphics card you can buy. I mean, today, it absolutely is, and after the first reveal that was pretty much inevitable. The initial performance figures from the green team's grand unveiling were no joke: this thing takes the RTX 2080 Ti outside and gives it a good, sound kicking. It's not even close.

The ol' Turing GPU's now whimpering in the corner, its vapour chambers leaking out over my floor as its fans fitfully spin their last.

Which is a pretty spectacular result when you consider the top-end Turing chip was the previous holder of the 'fastest graphics card' title, with its bold specs list and $1,200 sticker price. The fact this $699 card can make such a performance step up, at the same price as the RTX 2080 Super it's replacing, is incredible.

It's at once testament to the new Nvidia Ampere architecture, the work its engineers have put in, and maybe to the more competitive landscape of 2020.

The GeForce RTX 3080 is the flagship graphics card of this new Ampere generation. At least that's what Jen-Hsun and Co. is calling it, despite the GeForce RTX 3090, with a superior specs list, launching very, very soon. But that's a $1,500 GPU, and however much you might want the borderline offensive power the RTX 3090 is promising, the RTX 3080 is the far more realistic option for those of us without a Hollywood bank balance.

So, how has Nvidia managed to not just deliver RTX 2080 Ti levels of gaming performance, but fly past it at an unprecedented rate of knots? It's a mixture of smart design choices, a whole bunch more transistors, and some serious engineering.

Specifications

Nvidia GeForce RTX 3080 specs

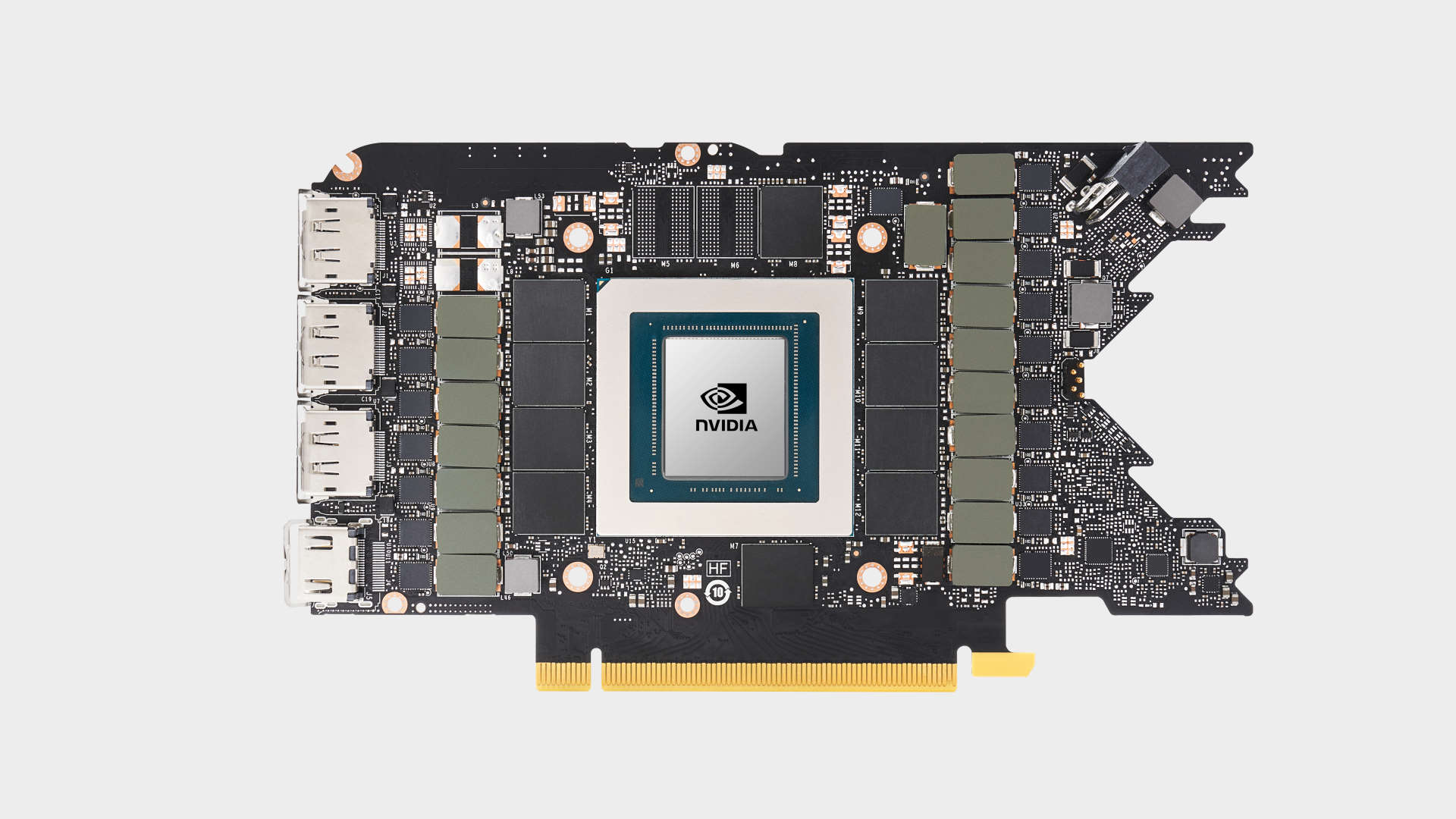

The key specs, most especially when it comes to gaming, are those heavily beefed up CUDA core numbers. We're seeing effectively twice the number of cores compared to what we were expecting, and far more than the equivalent GPU of the previous Turing generation. That's down to the redesigned data paths Nvidia has fitted into the GA102 silicon at the heart of the RTX 3080.

The full GA102 GPU, the same essential chip which powers both the RTX 3080 and the upcoming RTX 3090, houses 10,752 CUDA cores. The cut-down version dropped into the RTX 3080, however, sports 8,704. That is still a huge increase over the paltry 3,072 CUDA cores Nvidia released the RTX 2080 Super with just last year. Although that kinda depends on your definition of a 'core'.

These cores are arrayed across 68 streaming multiprocessors (SMs), and with one 2nd gen RT Core per SM that means you get more of those too: 68, against the RTX 2080 Super's 48. Because of the way the new architecture is designed, however, you do get fewer AI-driving Tensor Cores: 272, compared with 384.
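Those headline numbers fall straight out of the per-SM layout. Here is a minimal sketch. It assumes Nvidia's publicly documented per-SM figures: 128 FP32 lanes, four Tensor Cores and one RT Core per Ampere GA10x SM, and 64 FP32 lanes, eight Tensor Cores and one RT Core per Turing SM. It also assumes the RTX 2080 Super's 48 SMs. `SmLayout` and `chip_totals` are illustrative names only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SmLayout:
    """Per-SM unit counts (assumed from Nvidia's public architecture details)."""
    fp32_lanes: int
    tensor_cores: int
    rt_cores: int


AMPERE_SM = SmLayout(fp32_lanes=128, tensor_cores=4, rt_cores=1)  # GA10x SM
TURING_SM = SmLayout(fp32_lanes=64, tensor_cores=8, rt_cores=1)   # TU10x SM


def chip_totals(sm_count: int, sm: SmLayout) -> dict:
    """Scale a per-SM layout up to a whole GPU."""
    return {
        "cuda_cores": sm_count * sm.fp32_lanes,
        "tensor_cores": sm_count * sm.tensor_cores,
        "rt_cores": sm_count * sm.rt_cores,
    }


print("RTX 3080:", chip_totals(68, AMPERE_SM))
print("RTX 2080 Super:", chip_totals(48, TURING_SM))
```

The same helper gives the full GA102's 10,752 CUDA cores from its 84 SMs.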

GPU - GA102

Lithography - Samsung 8nm

Die size - 628.4mm²

Transistors - 28.3 bn

CUDA cores - 8,704

SMs - 68

RT Cores - 68

Tensor Cores - 272

GPU Boost clock - 1,710MHz

Memory bus - 320-bit

Memory capacity - 10GB GDDR6X

Memory speed - 19Gbps

Memory bandwidth - 760GB/s

TGP - 320W

The GeForce RTX 3080 GPU is built on Samsung's 8N process, which is nominally an 8nm production node, and has a die size of 628.4mm². That's smaller than the top Turing chip, which was 754mm², but it packs in around 10 billion more transistors, for a total of 28.3 billion. It is, though, a larger slice of silicon than the TU104 which powered the equivalent card of the last generation, the RTX 2080.
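Transistor density is a quick way to see what the new node has bought. This minimal sketch takes the GA102 figures from the spec list. For the 754mm² TU102 it assumes the commonly cited figure of 18.6 billion transistors. `density_mtr_per_mm2` is a hypothetical helper.

```python
def density_mtr_per_mm2(transistors_bn: float, die_mm2: float) -> float:
    """Transistor density in millions of transistors per square millimetre."""
    return transistors_bn * 1_000 / die_mm2


ga102 = density_mtr_per_mm2(28.3, 628.4)  # RTX 3080 GPU, from the spec list
tu102 = density_mtr_per_mm2(18.6, 754.0)  # RTX 2080 Ti GPU, assumed 18.6bn transistors
print(f"GA102 {ga102:.1f}, TU102 {tu102:.1f} MTr/mm2, {ga102 / tu102:.2f}x denser")
```

That works out to roughly 45 million transistors per mm² for GA102, against roughly 25 million for TU102.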

A side-by-side look at the Boost clock speed of the RTX 3080 compared with the RTX 2080 Super might have you worrying about the performance of the GPU, as it's rated slower (1,710MHz vs 1,815MHz). In our testing, though, we've seen clock speeds well north of 1,800MHz as standard, at a similar level to the last-gen Turing card.

You do get faster memory too, with 10GB of GDDR6X memory, running across an aggregated 320-bit memory interface. That delivers a pretty spectacular 760GB/s of bandwidth compared with the sub-500GB/s of the standard GDDR6 of the Turing card.

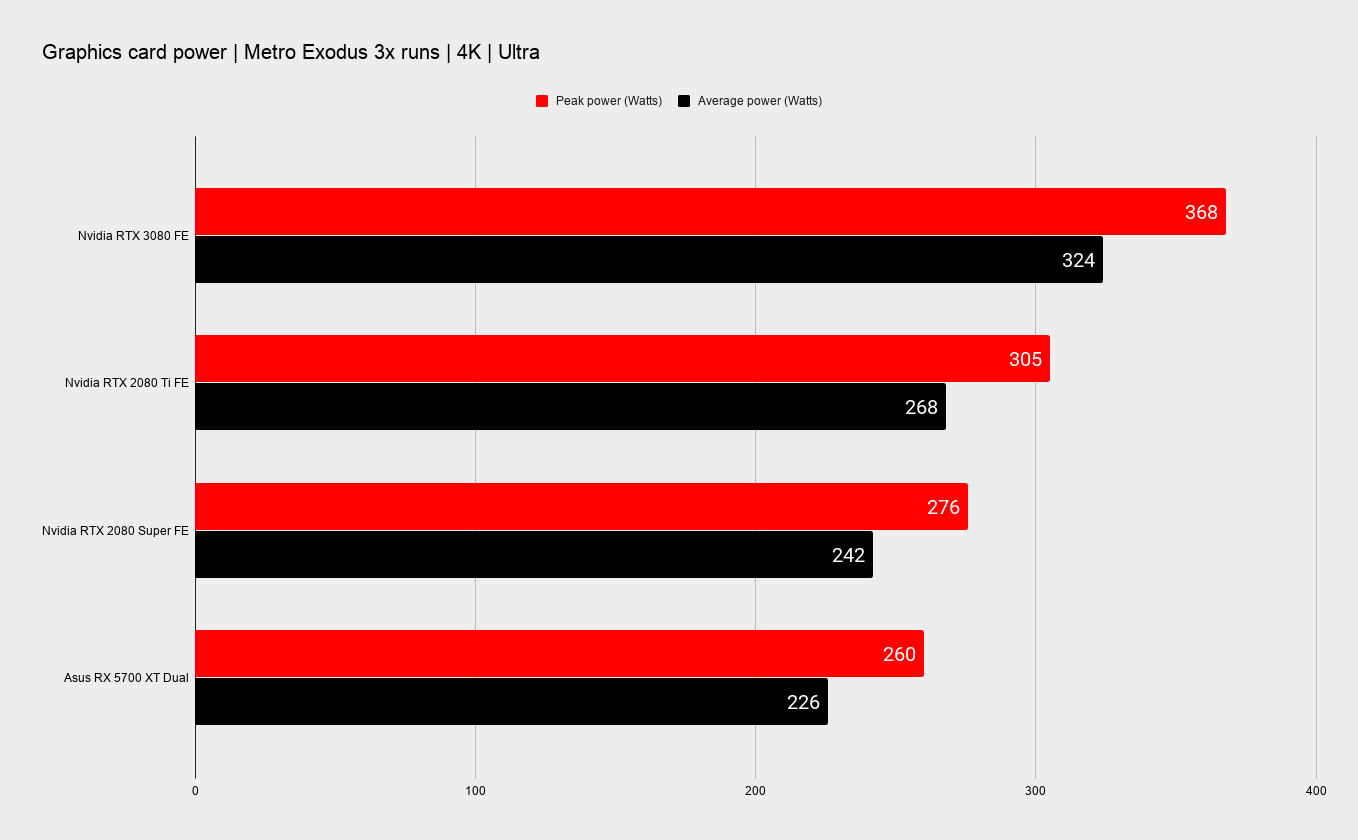

But you are definitely going to need some serious PSU performance for your new card. This Founders Edition has a board power rating of 320W, and we've seen this version of the RTX 3080 running slightly above that on average, with peaks of up to 368W in demanding games.

Nominally Nvidia is suggesting a 750W PSU as a minimum, but that's not really taking into account the higher power draws of some modern CPUs. If you're rocking a Core i9 10900K, for example, you'll likely need 850W at least.
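As a rough illustration of that PSU maths, here is a sizing sketch that sums peak component draw and then adds headroom. The 368W GPU peak is the figure measured in this review. Everything else is an assumption: the 250W CPU figure (roughly the Core i9 10900K's short-term PL2 power limit), the 75W for the rest of the system, the 20 percent headroom, and the list of PSU sizes. `recommend_psu` is a hypothetical helper.

```python
import math
from typing import Sequence

# Assumed list of common retail PSU capacities, in Watts.
PSU_SIZES_W: Sequence[int] = (550, 650, 750, 850, 1000, 1200)


def recommend_psu(gpu_peak_w: float, cpu_peak_w: float, rest_w: float,
                  headroom: float = 1.2) -> int:
    """Smallest common PSU covering summed peak draw plus headroom.

    headroom=1.2 (20 percent spare) is a rule-of-thumb assumption.
    """
    required = (gpu_peak_w + cpu_peak_w + rest_w) * headroom
    for size in PSU_SIZES_W:
        if size >= required:
            return size
    raise ValueError(f"needs more than {PSU_SIZES_W[-1]}W (about {math.ceil(required)}W)")


# RTX 3080 FE peak (measured) + assumed Core i9 10900K peak + assumed rest of system
print(recommend_psu(368, 250, 75))
```

Swap in a less power-hungry CPU, say an assumed 125W, and the same sum lands on 750W, in line with Nvidia's suggested minimum.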

Thankfully the innovative cooling solution on the RTX 3080 Founders Edition is definitely up to the task. It's a big, dense mountain of vapour chambers and direct contact heatpipes, which helps the card maintain clock speeds way above the rated Boost numbers.

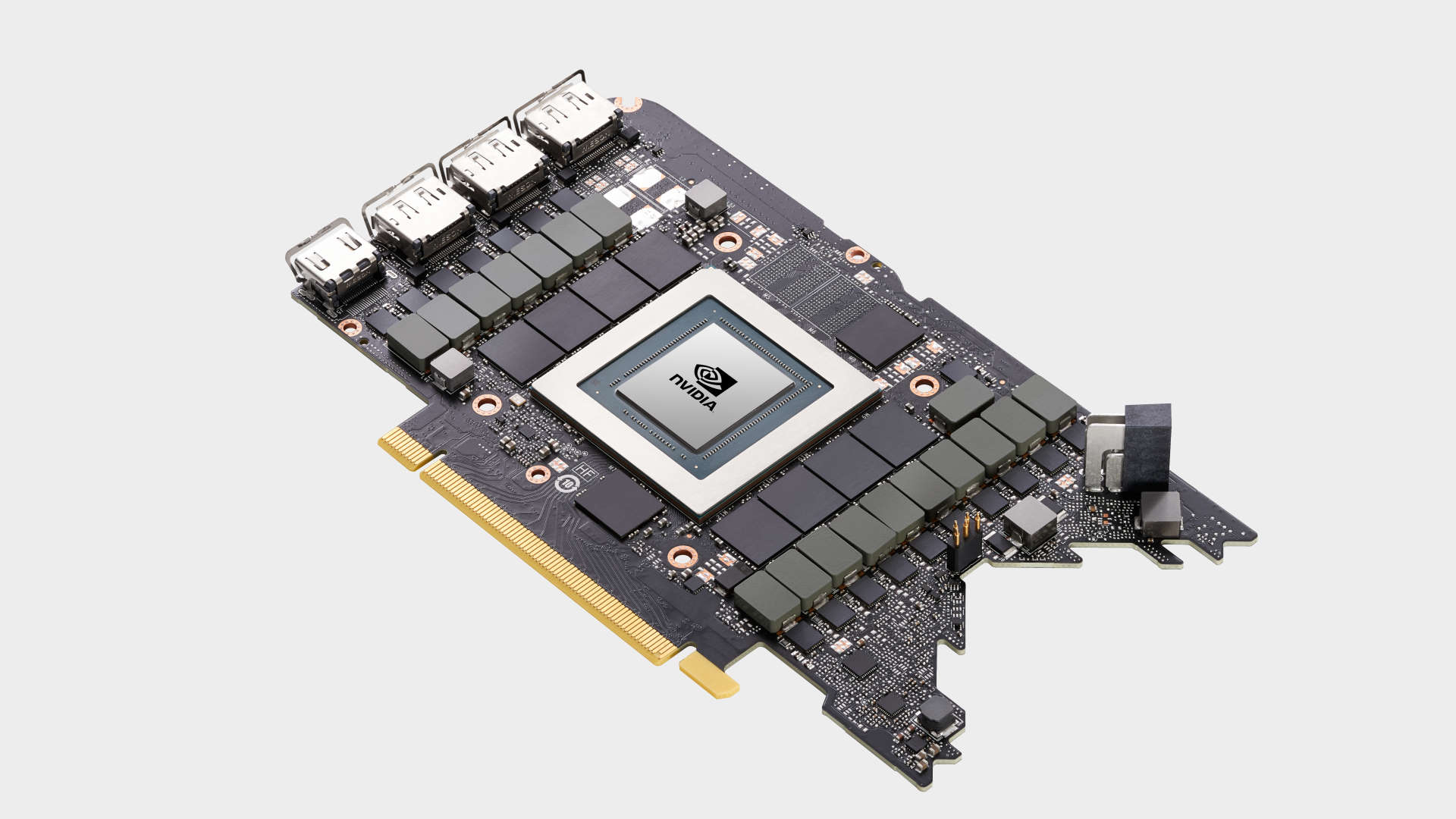

The PCB itself is a custom design, one we're not seeing on third-party cards, at least not yet anyways. It's a surprisingly compact board, with all the necessary componentry squeezed into a small space to allow for a cutout to enable the second chill spinner to do its job.

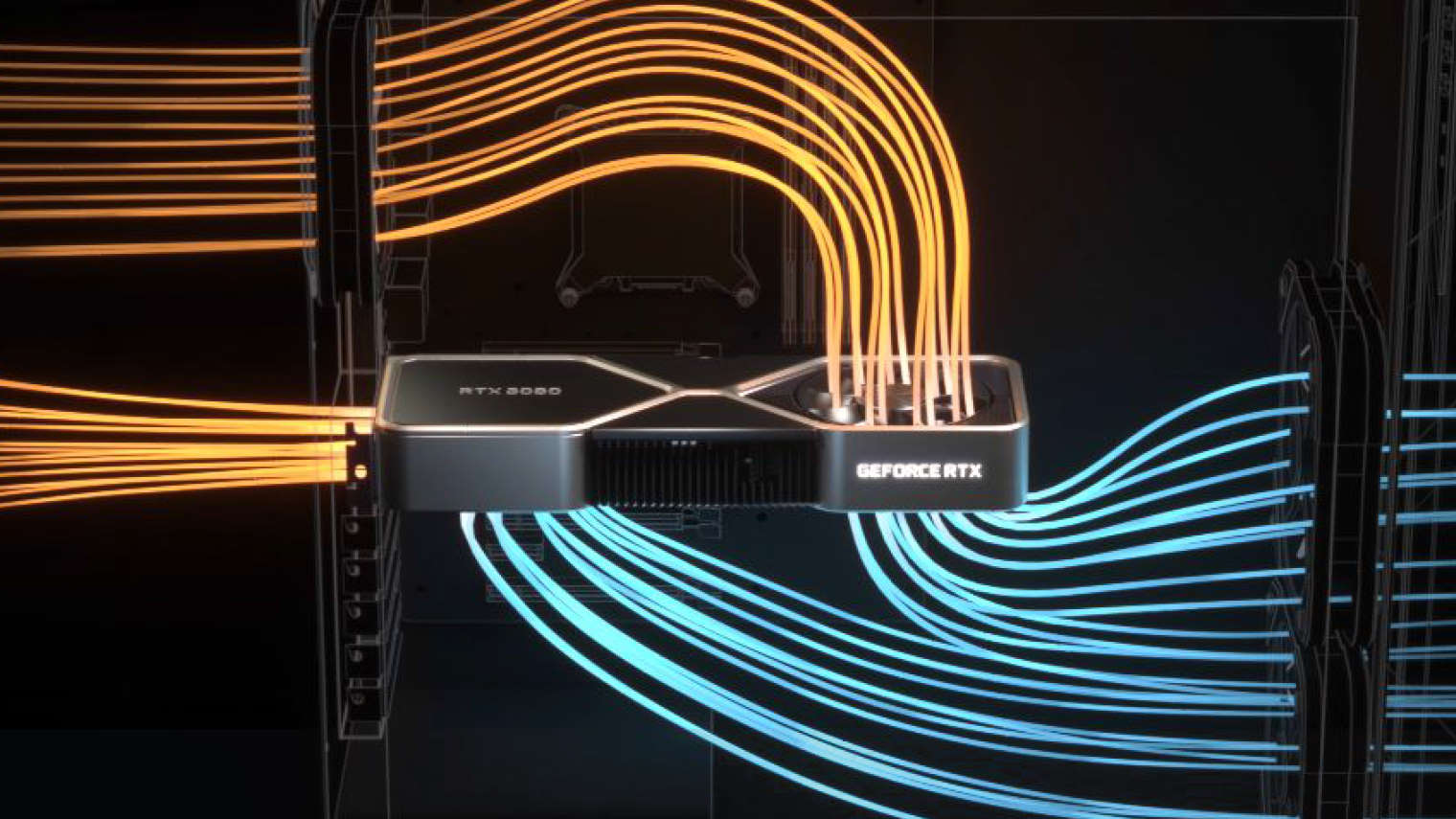

The throughflow design of this secondary fan pulls air across the cooling block, giving additional chip-chilling capabilities alongside the direct fan sat atop the GPU itself. The idea is that one fan exhausts hot air through the card's I/O bracket at the back of the PC. The other pushes air up through the card into the case, for your chassis fans to expel.

My only concern with this design is that you might end up getting toasted air circulating around your CPU's air-cooler in a traditional mounting, which could affect processor temperatures. Nvidia maintains that it would have no impact at all, and if you're rocking an all-in-one liquid cooler it's definitely not going to matter.

Architecture

Nvidia GeForce RTX 3080 architecture

The new SM design, and therefore the core-count, is one of the key differentiators between the Turing and Ampere GPU architectures. When Nvidia talks about its GPU cores, it's referencing 32-bit floating point (FP32) units. Most graphics workloads are based on floating point operations (at the launch of Turing Nvidia claimed that for every 100 FP instructions there were 36 integer pipe instructions), and so doubling the number of available units massively accelerates most gaming tasks.

In Turing Nvidia made FP32 and INT workloads concurrent, meaning parts of the GPU weren't twiddling their silicon thumbs waiting for one or the other type of task to be completed before getting on with its own thing. "Before Turing [the GPU] was only able to really issue one math instruction per clock," explains Jonah Alben, senior VP of GPU engineering at Nvidia, "and we found that was holding back performance in a lot of cases."

Like Turing, there are still two data paths in the Ampere design, but while previously they were split down the line between integer and floating point operations—concurrent though they were—Ampere now allows FP32 workloads on either data path.

"Now it's an FP32-plus-integer unit that has enabled us to double up our performance on those floating point heavy workloads," Alben tells us. "So now we have amazing performance for that kind of workload, like denoising and other image processing workloads you'll see in a game engine."

Nvidia's streaming multiprocessors (SMs) are split into four partitions, each with a dedicated FP32 data path, but in Ampere the second path offers both integer and FP32 operations. That means there is potentially twice the number of FP32 units available, though given there will still be times where that second data path has to be running integer operations you're not always getting twice the floating point performance.
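The "not always twice" caveat can be made concrete with an idealised issue model. This sketch makes several assumptions. Each partition has two equal-width datapaths. Latency, memory stalls and scheduler limits are ignored. The instruction mix is Nvidia's Turing-era figure of 36 integer instructions per 100 FP instructions. `partition_cycles` is a hypothetical name.

```python
def partition_cycles(fp_ops: float, int_ops: float, ampere: bool) -> float:
    """Idealised time to issue a mix of FP32 and INT32 instructions on one SM
    partition, in units of one datapath's throughput.

    Assumption: two equal-width datapaths; latency, memory stalls and
    scheduler limits are ignored.
    """
    if not ampere:
        # Turing: path A is FP32-only, path B is INT32-only, running concurrently.
        return max(fp_ops, int_ops)
    # Ampere: path A is FP32-only, path B handles FP32 *or* INT32.
    # INT32 work must use path B; FP32 work fills whichever path is free,
    # so the best case splits the total evenly unless INT32 alone dominates.
    return max((fp_ops + int_ops) / 2, int_ops)


# Nvidia's Turing-era instruction mix: 36 INT per 100 FP instructions.
turing = partition_cycles(100, 36, ampere=False)
ampere = partition_cycles(100, 36, ampere=True)
speedup = turing / ampere
print(f"Turing {turing}, Ampere {ampere}, speedup {speedup:.2f}x")
```

Under those assumptions the mix finishes in 68 units instead of 100, roughly a 1.47x gain. Only pure FP32 work, with no integer instructions at all, reaches the full 2x.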

Nvidia has also beefed up the cache around the enhanced data paths because, as Alben explains, "feeding that, of course is always a challenge. The faster these processors go, the more it actually matters that they have a lot of bandwidth to be fed. And this was more of a significant architectural change where we basically replumbed the pipeline to our L1 cache system so that now has double the bandwidth."

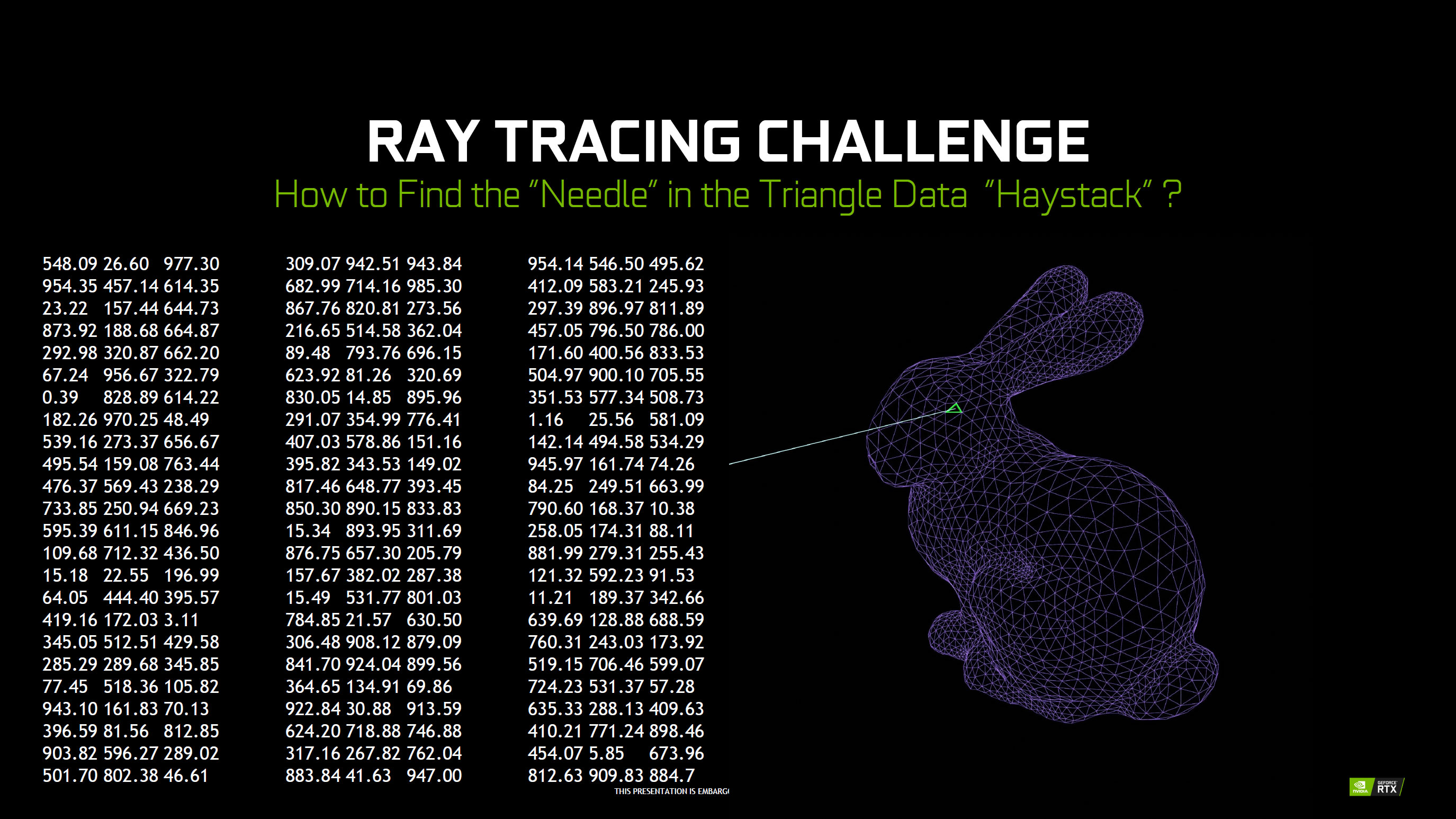

With modern game engines, however, especially moving into this next generation of gaming, it's not just about floating point or integer operations. Real-time ray tracing is set to become ever more prevalent in games, thanks to the new Sony and Microsoft mini PCs launching this year, so Nvidia has taken the opportunity to update the RT Cores first introduced in the Turing RTX GPUs.

At its most basic, the RT Core is a fixed-function unit dedicated to accelerating bounding volume hierarchy (BVH) traversal, the search through nested bounding boxes at the heart of ray tracing workloads. It's a workload unlike anything else you do in a shader core, so it's very inefficient to try and run it on standard GPU shaders. Just look at how well the GTX 1080 deals with ray tracing for a hint…

There are two parts to this dedicated ray tracing core: one that tests rays against the bounding boxes, and another which then tests for intersections between rays and triangles. But the first-gen RT Core was by no means the finished product.
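To give a feel for the work those two units do, here are the same two tests written out in software. The first is a slab test for ray-versus-bounding-box. The second is the Möller–Trumbore ray/triangle intersection. This is a plain Python sketch of the general techniques, not Nvidia's hardware design. In a full ray tracer, the BVH simply decides which boxes and triangles each ray needs testing against. To keep the sketch short, the box test assumes the ray direction has no zero components.

```python
from typing import Optional, Sequence

Vec3 = Sequence[float]


def ray_hits_box(origin: Vec3, direction: Vec3,
                 box_min: Vec3, box_max: Vec3) -> bool:
    """Slab test: does a ray (t >= 0) pass through an axis-aligned box?

    Assumes every direction component is non-zero, to keep the sketch short.
    """
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        inv = 1.0 / d
        t1, t2 = (lo - o) * inv, (hi - o) * inv
        # Narrow the interval in which the ray is inside every slab.
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
    return t_near <= t_far


def _sub(a: Vec3, b: Vec3) -> list:
    return [a[0] - b[0], a[1] - b[1], a[2] - b[2]]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> list:
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def ray_hits_triangle(origin: Vec3, direction: Vec3, v0: Vec3, v1: Vec3,
                      v2: Vec3, eps: float = 1e-9) -> Optional[float]:
    """Möller–Trumbore test: distance t along the ray to the hit, or None."""
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:                # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = _sub(origin, v0)
    u = _dot(s, p) * inv_det          # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(s, e1)
    v = _dot(direction, q) * inv_det  # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv_det
    return t if t > eps else None


# A ray fired along +z at the unit right triangle in the z = 0 plane.
tri = ((0, 0, 0), (1, 0, 0), (0, 1, 0))
print(ray_hits_triangle((0.25, 0.25, -1), (0, 0, 1), *tri))
print(ray_hits_box((0, 0, 0), (1, 1, 1), (2, 2, 2), (3, 3, 3)))
```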

"We found that we had really good bounding box intersection rates," says Alben, "but the triangle intersection rates at the end of the tracing could be a bottleneck."

So now the RT Core is able to do more in parallel: for some rays it will be testing the bounding boxes while it calculates the triangle intersections for others.

"So we have separate resources for these two units, they can run in parallel, and the triangle side has double the performance of the last time."

This added parallelism, as well as a new way to trace triangles through time and space to speed up the calculation of motion blur effects in ray traced scenes, means there is genuine acceleration of ray tracing performance on the whole.


The third piece of the Ampere GPU puzzle is the 3rd gen Tensor Core. This is purely a slice of silicon dedicated to deep learning and AI, but before you scan down to the score and snappy summation at the end, it really is relevant to gaming. It's the part of the GPU which is responsible for DLSS, or deep learning super sampling, or 'that bit of magic that makes ray traced games actually playable'.

In a bit of a switcheroo there are actually fewer Tensor Cores per SM than with Turing. "We found that it was actually better to have a smaller number of cores per SM," Alben explains, "and then make each core much more powerful than the previous generation."

The basic performance of the Tensor Core has been doubled over Turing, but Nvidia is also using 'Sparse Deep Learning' to make the whole process more efficient. It's basically a way of pruning away a bunch of maths that isn't really necessary for the final outcome, and that seriously accelerates the performance of DLSS 2.0. And DLSS 2.1, when it arrives.
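Ampere's flavour of this is fine-grained structured sparsity. In every group of four weights, two are zero, which lets the Tensor Core skip half of the multiplications. Below is a minimal sketch of producing that 2:4 pattern. It assumes simple magnitude-based pruning, which is one common approach rather than a description of how Nvidia trains DLSS. `prune_2_of_4` is a hypothetical name.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Zero the two smallest-magnitude weights in every group of four.

    Magnitude pruning is an assumed, common way to reach the 2:4 pattern;
    in practice the network is usually fine-tuned again after pruning.
    """
    if len(weights) % 4:
        raise ValueError("weight count must be a multiple of 4")
    pruned = list(weights)
    for start in range(0, len(weights), 4):
        group = range(start, start + 4)
        # Indices of the two weights with the smallest absolute value.
        for i in sorted(group, key=lambda idx: abs(weights[idx]))[:2]:
            pruned[i] = 0.0
    return pruned


print(prune_2_of_4([0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.4, 0.1]))
```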

Nvidia is also introducing DLSS 8K, which leverages a new 9x super resolution scaling factor with the aim of bringing 60fps gaming within reach at 8K resolution, even in ray tracing enabled games. Damn. But that's not exactly within reach of the RTX 3080 itself, and is aimed more at the upcoming RTX 3090, the card Nvidia is calling the "world's first 8K HDR gaming GPU."
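The 9x figure counts pixels rather than axes. It's 3x along each axis, so an 8K (7680x4320) output frame would be reconstructed from a 1440p (2560x1440) internal render. Here is a short arithmetic sketch. `render_resolution` is a hypothetical helper, not part of any DLSS SDK.

```python
import math


def render_resolution(out_w: int, out_h: int, pixel_factor: int) -> tuple:
    """Internal render resolution for an upscaler that multiplies total pixel
    count by `pixel_factor` (a hypothetical helper; square factors only)."""
    axis = math.isqrt(pixel_factor)
    if axis * axis != pixel_factor:
        raise ValueError("this sketch only handles square factors like 4 or 9")
    return out_w // axis, out_h // axis


w, h = render_resolution(7680, 4320, 9)
print(w, h, (7680 * 4320) / (w * h))
```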

But then there's the new memory sub-system, which is relevant to the RTX 3080. Working with Micron, and the DRAM industry at large, Nvidia has helped create a new higher speed version of GDDR6, catchily titled GDDR6X. Using some snazzy new signalling (PAM4), GDDR6X is able to shift more data around and do it at faster rates. Today's data-hungry game engines, especially those keyed into ray tracing workloads, ought to thrive on the newer memory tech.
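That signalling is PAM4. GDDR6 uses NRZ, with two voltage levels carrying one bit per symbol, while GDDR6X uses four levels to carry two bits per symbol. That doubles the data moved per symbol. Here is a toy sketch of the encoding step. The Gray-coded level mapping and the function names are assumptions for illustration, not Micron's specification.

```python
from typing import Dict, List, Tuple

# Assumed Gray-coded mapping of bit pairs to four amplitude levels (0-3), so
# neighbouring levels differ by one bit. Real GDDR6X level encoding may differ.
PAM4_LEVELS: Dict[Tuple[int, int], int] = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}


def nrz_encode(bits: List[int]) -> List[int]:
    """NRZ: one bit per symbol, two signal levels (0 or 1)."""
    return list(bits)


def pam4_encode(bits: List[int]) -> List[int]:
    """PAM4: two bits per symbol, four signal levels (0-3)."""
    if len(bits) % 2:
        raise ValueError("PAM4 encoding needs an even number of bits")
    return [PAM4_LEVELS[(bits[i], bits[i + 1])] for i in range(0, len(bits), 2)]


byte = [1, 0, 0, 1, 1, 1, 0, 0]
print(len(nrz_encode(byte)), "NRZ symbols vs", len(pam4_encode(byte)), "PAM4 symbols")
```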

It's complicated stuff, but all you really need to know is that it's fast, like 19Gbps fast on the GeForce RTX 3080. That all means there's a huge amount of memory bandwidth available to the Ampere card, with 760GB/s on the RTX 3080 vs. 496GB/s on the RTX 2080 Super.
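Those bandwidth figures are simply bus width multiplied by per-pin data rate. The RTX 3080 figures in this quick sketch come from the spec list. For the RTX 2080 Super, it assumes a 256-bit bus and 15.5Gbps GDDR6.

```python
def bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth in GB/s: bytes per transfer across the bus x per-pin rate."""
    return bus_width_bits / 8 * data_rate_gbps


rtx_3080 = bandwidth_gb_s(320, 19.0)        # 320-bit GDDR6X at 19Gbps
rtx_2080_super = bandwidth_gb_s(256, 15.5)  # assumed 256-bit GDDR6 at 15.5Gbps
print(rtx_3080, rtx_2080_super, f"{rtx_3080 / rtx_2080_super:.2f}x")
```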

What else is rather complex is the RTX IO feature wrapped up in the new RTX 30-series design. These new Nvidia Ampere GPUs support Microsoft's DirectStorage API, one of the most exciting features coming to next-gen consoles. And this means that Nvidia is almost taking the CPU out of the equation when it comes to decompressing the huge high-fidelity, high-resolution scenes that will fill out next-gen games.

But we're not expecting RTX IO enabled games to arrive until well into next year, so right now this is a bit of a dormant feature of the RTX 3080. Though, as the competition is wont to say, that ought to mean the Ampere architecture ages like fine wine as the next generation of gaming rolls along.

What can make a difference today, however, is Nvidia Reflex. This is a feature being baked into some of the top esports games of today, such as Fortnite, Apex Legends, and Valorant, and is aimed at providing an SDK which allows devs to drop settings into their games to reduce system latency when you hit GPU-intensive scenes.

In a similar way to how G-Sync synchronises the GPU with the monitor, Reflex synchronises the CPU and GPU, so that frames are never left waiting in the render queue. It also allows games to sample your mouse input at the very last moment to get input lag down as much as possible.
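A simplified model shows why an empty render queue matters. When a game is GPU-bound, each frame already waiting in the queue delays a new frame by roughly one frame time. The sketch below is an assumed first-order model, not Nvidia's latency formula, and `queue_latency_ms` is a hypothetical name.

```python
def queue_latency_ms(fps: float, queued_frames: int) -> float:
    """Approximate extra delay from frames already waiting in the render queue.

    Assumed model: the GPU finishes one frame per frame time, so a new frame
    waits one frame time for each frame queued ahead of it.
    """
    if fps <= 0 or queued_frames < 0:
        raise ValueError("fps must be positive and queued_frames non-negative")
    frame_time_ms = 1000.0 / fps
    return queued_frames * frame_time_ms


for queued in (2, 1, 0):
    print(f"{queued} queued frame(s) at 100fps: +{queue_latency_ms(100, queued):.0f}ms")
```

In this model, driving the queue to zero removes that term entirely. At 100fps, two queued frames would otherwise add around 20ms.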

Reflex needs to be enabled at the game level, but it also partners up with G-Sync. A new G-Sync module is going into the latest 360Hz gaming displays from Asus and its ilk. It includes the Reflex Latency Analyser, which allows you to measure mouse, display, and full system latency without the need for specialist equipment. Well, apart from an expensive new 360Hz panel anyway.

That won't cut latency itself, but might help you figure out what the bottleneck is on your system. Or at least come to the conclusion that the bottleneck is, well, you.

Benchmarks and performance

Nvidia GeForce RTX 3080 benchmarks and performance

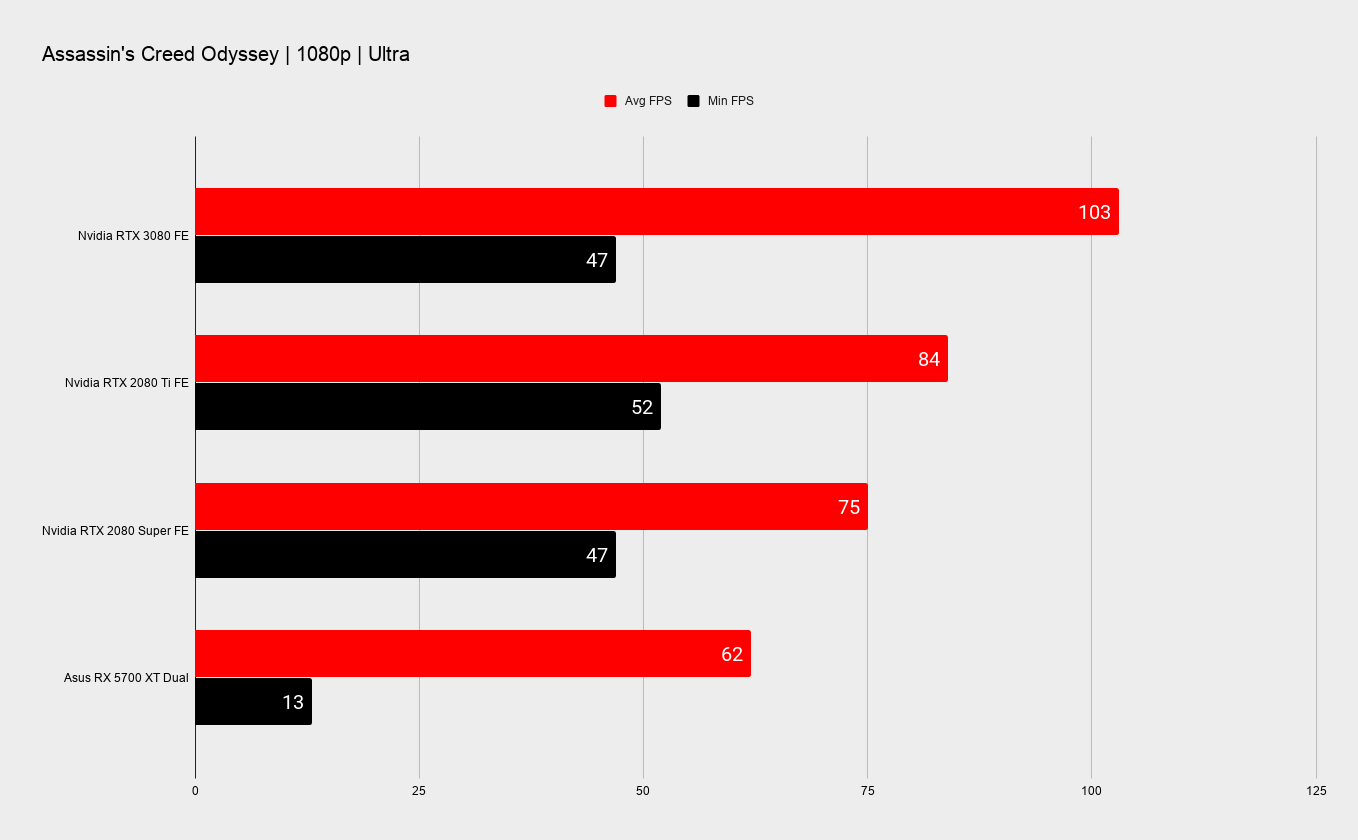

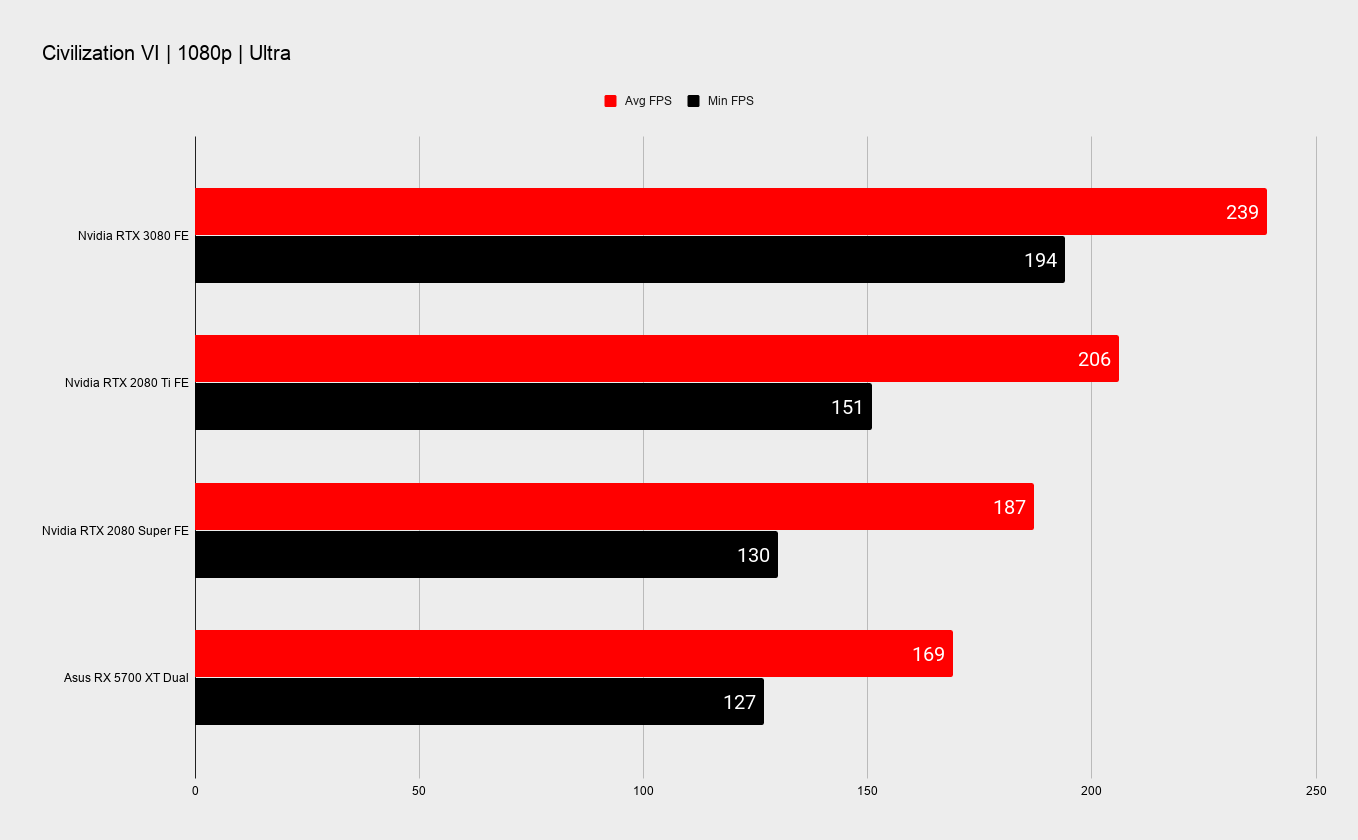

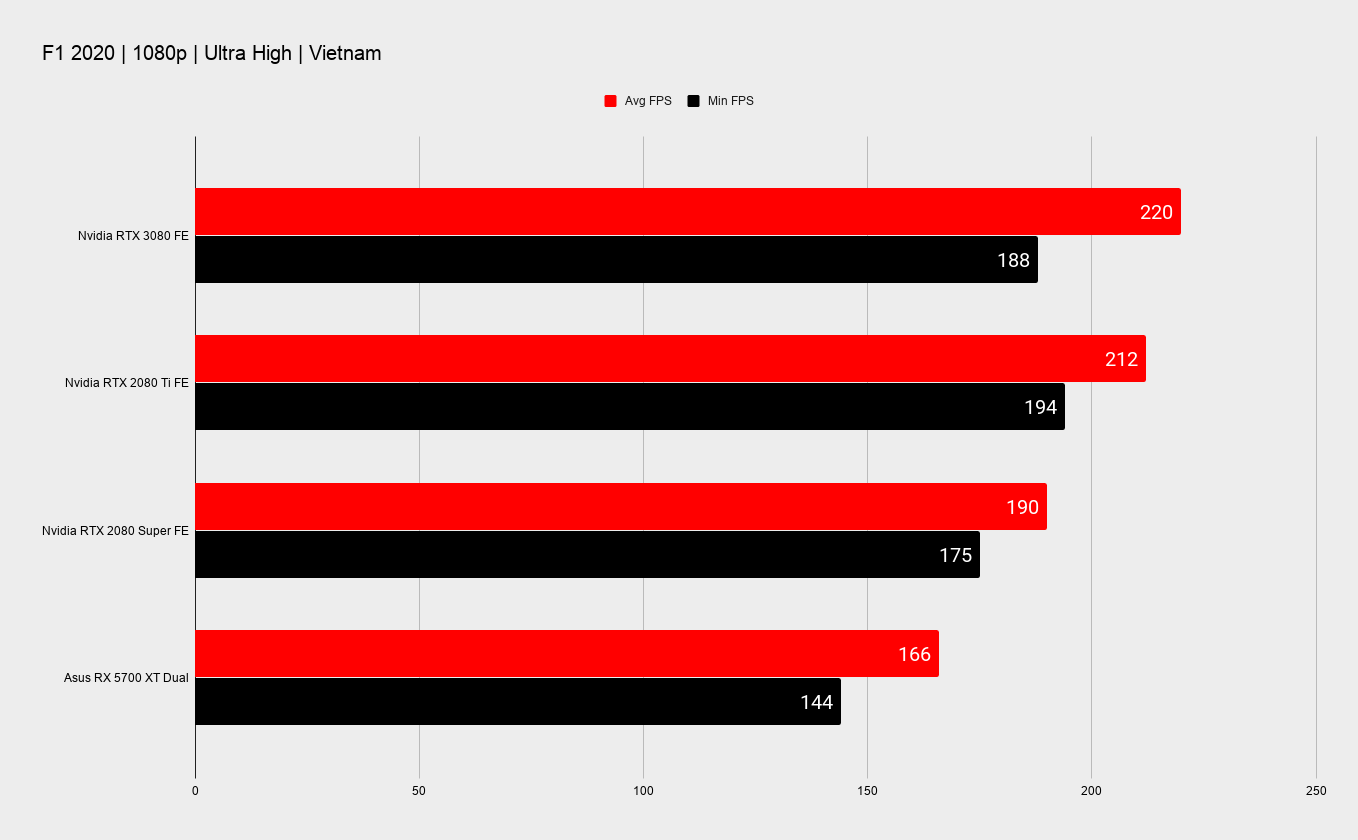

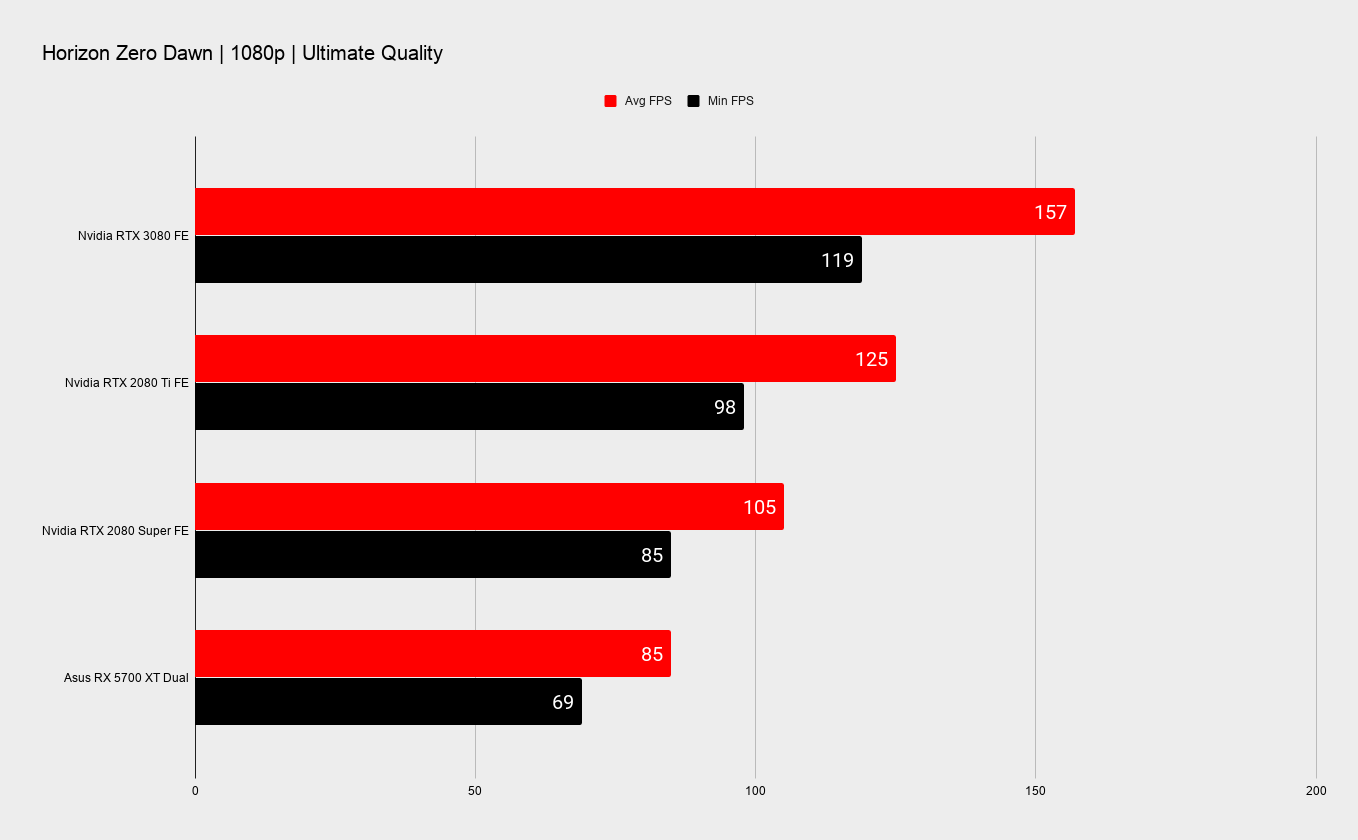

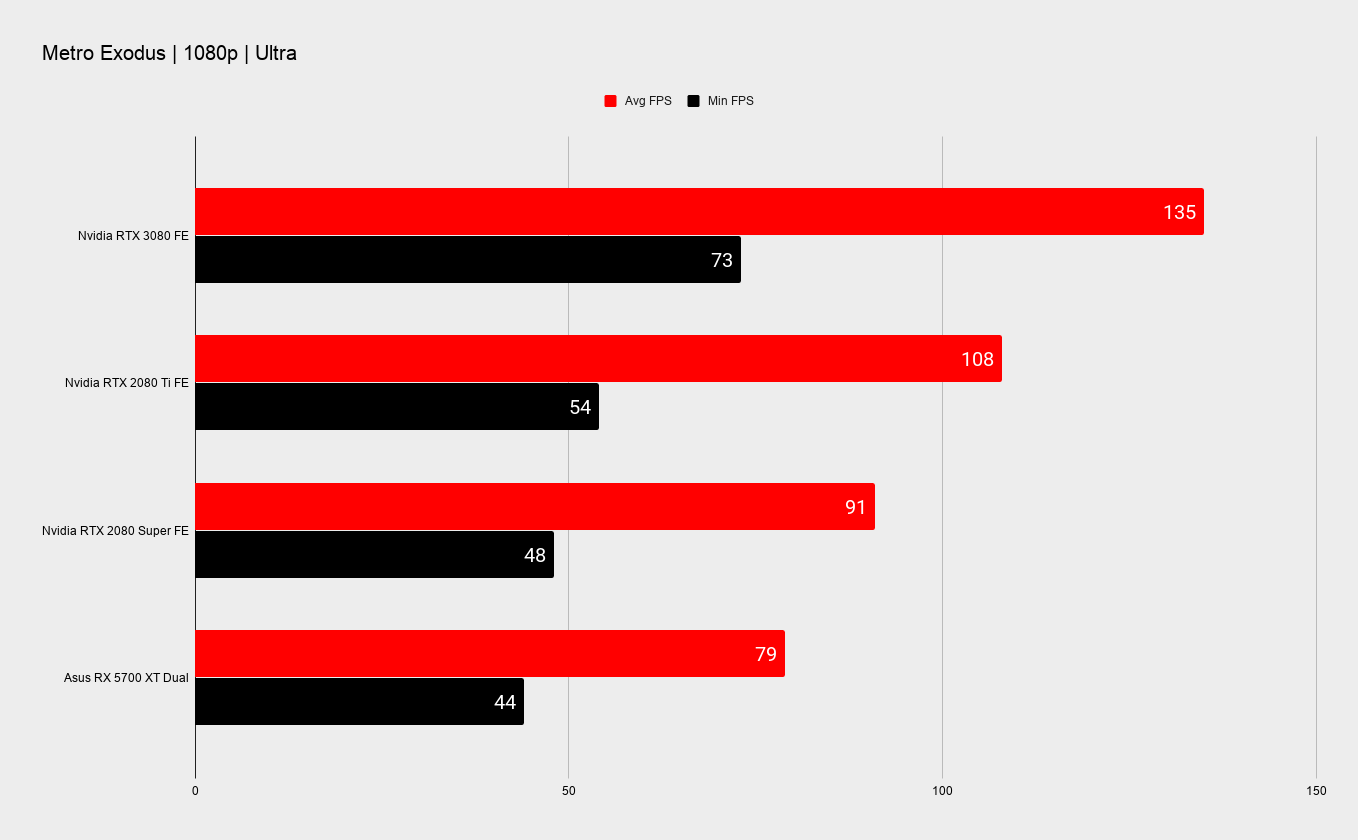

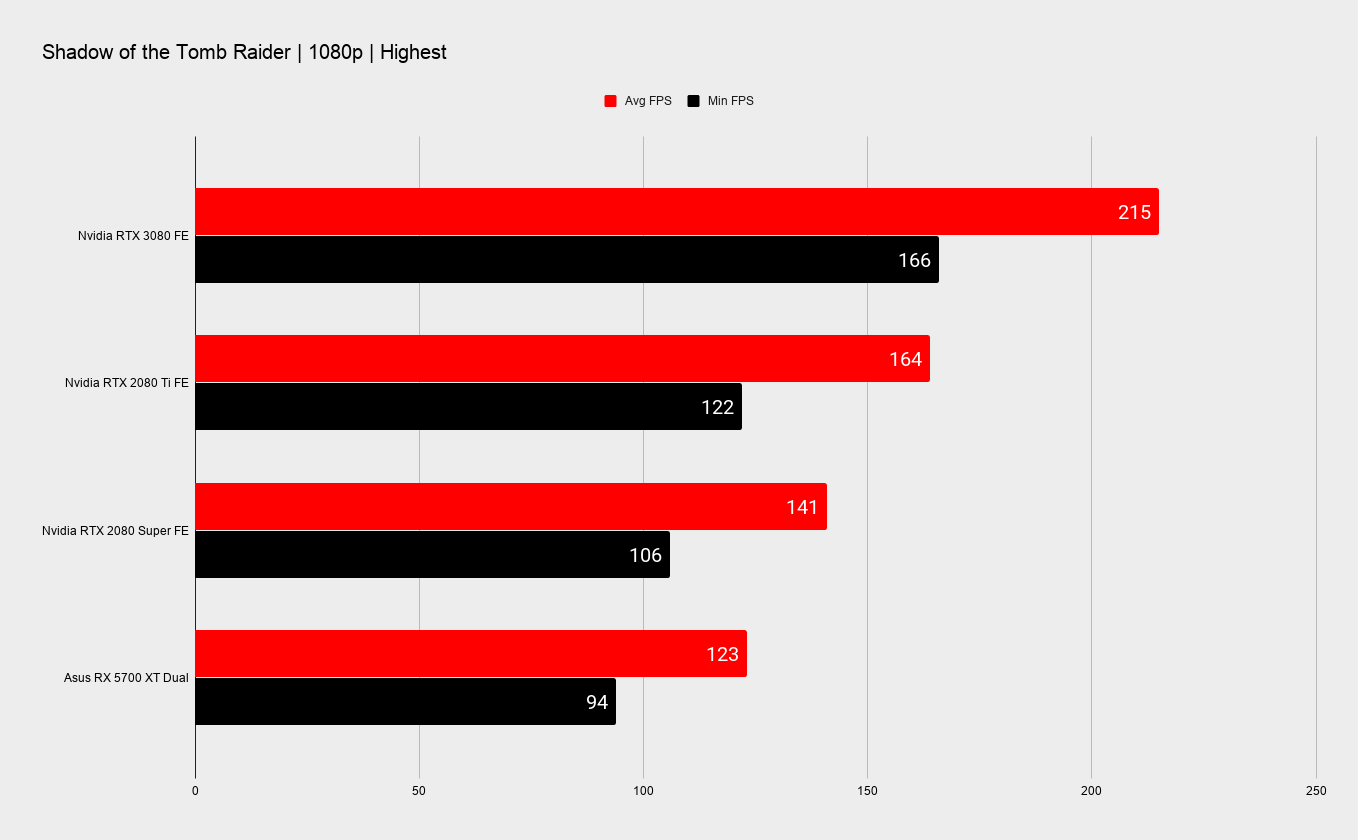

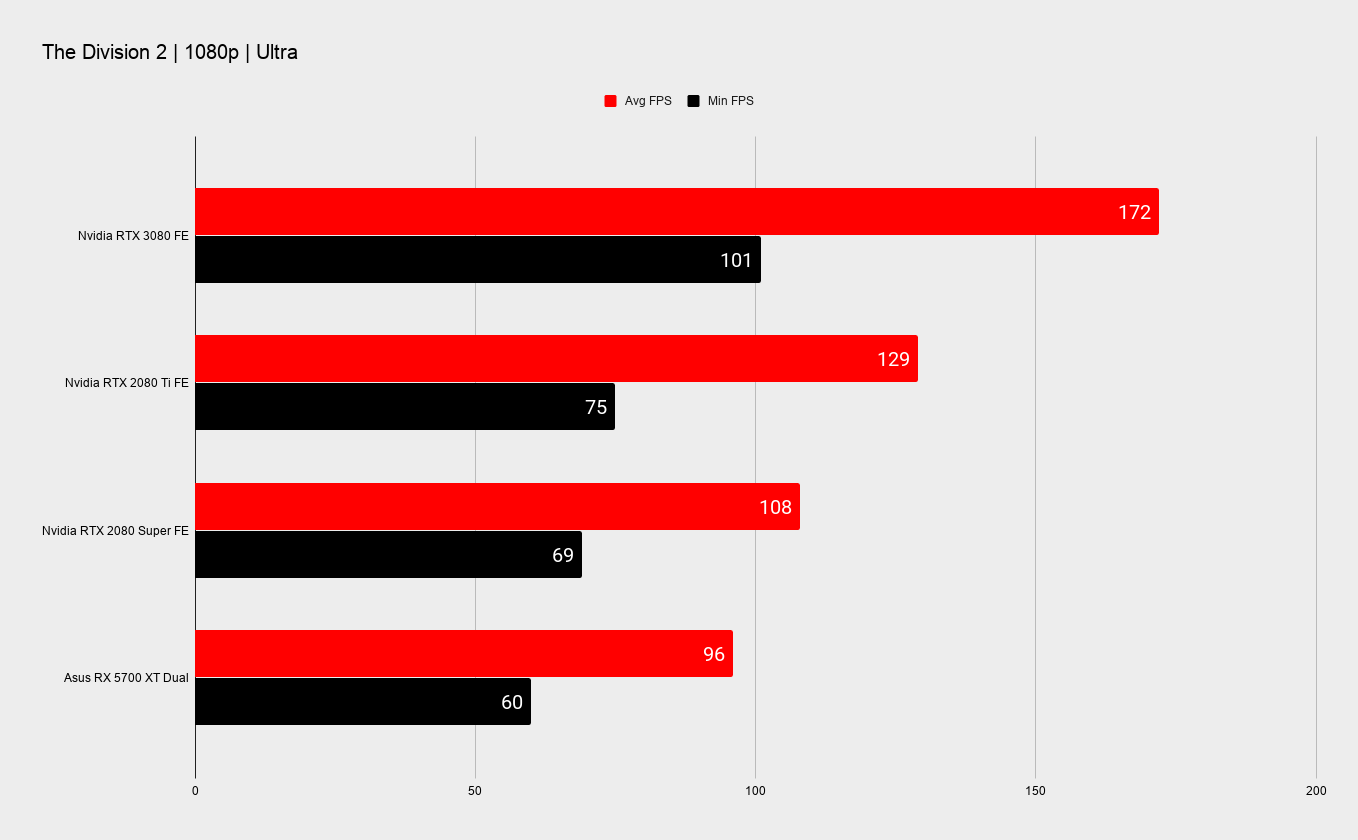

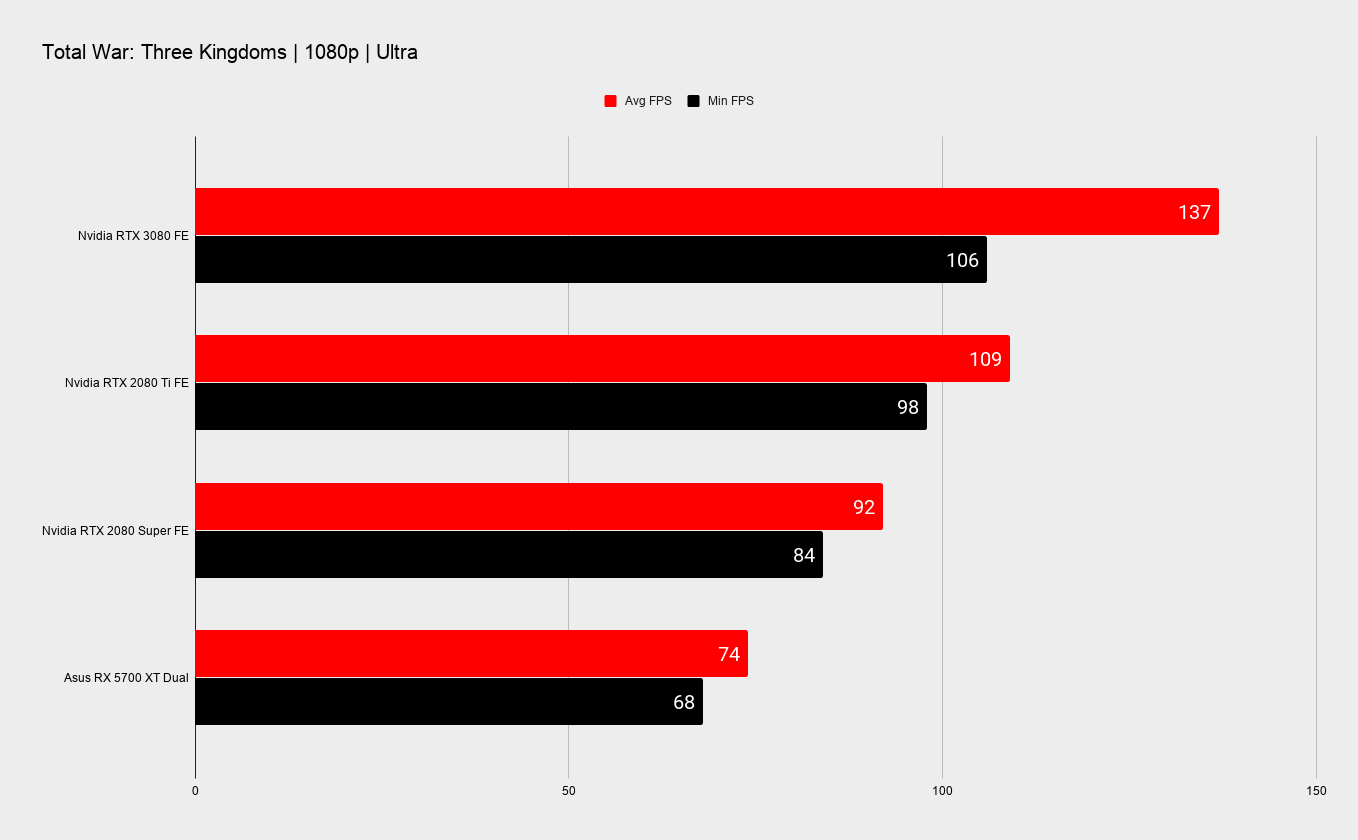

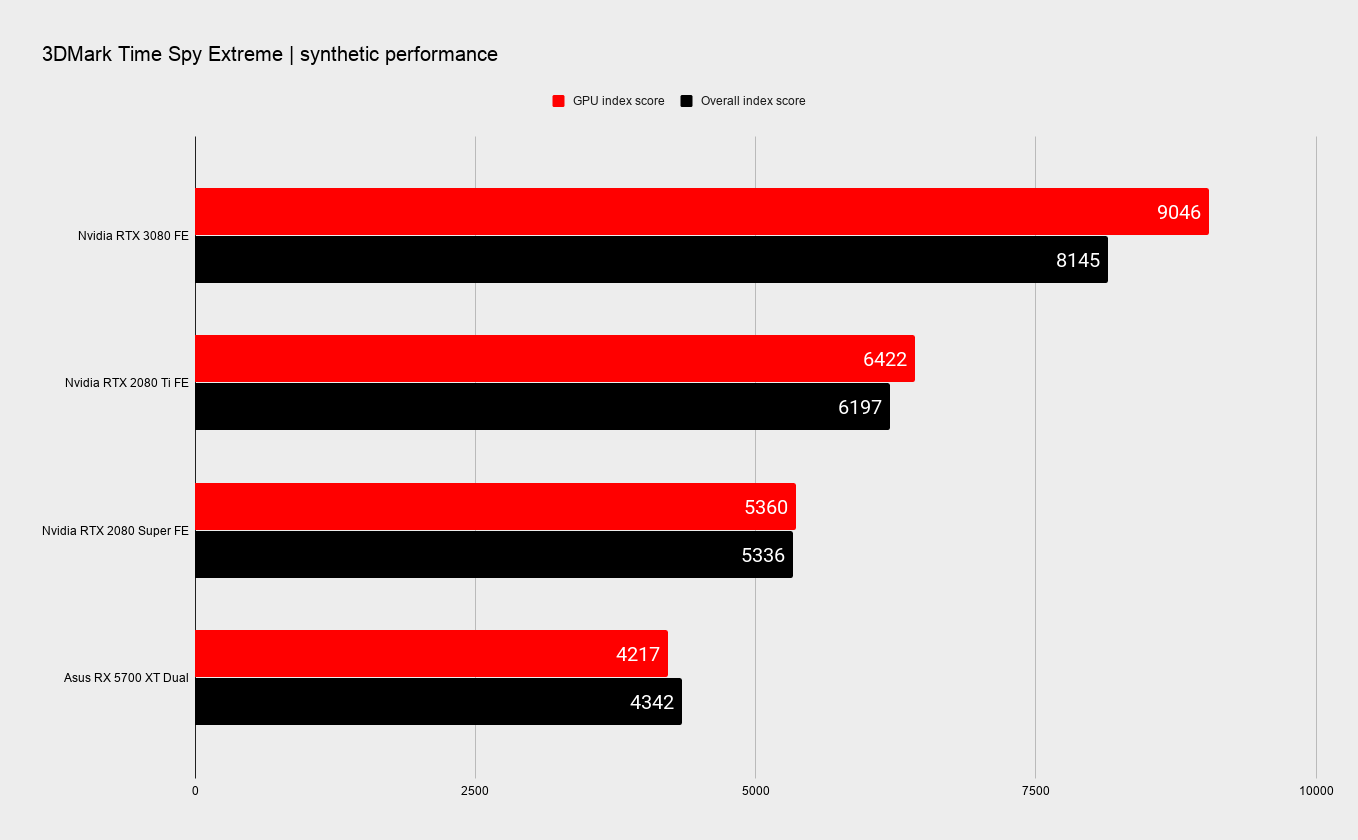

You should know how this is going to go by now. The RTX 3080 is an absolute monster of a gaming GPU, and is able to deliver frame rates which make the $1,200 RTX 2080 Ti look like a thoroughly mid-range graphics card.

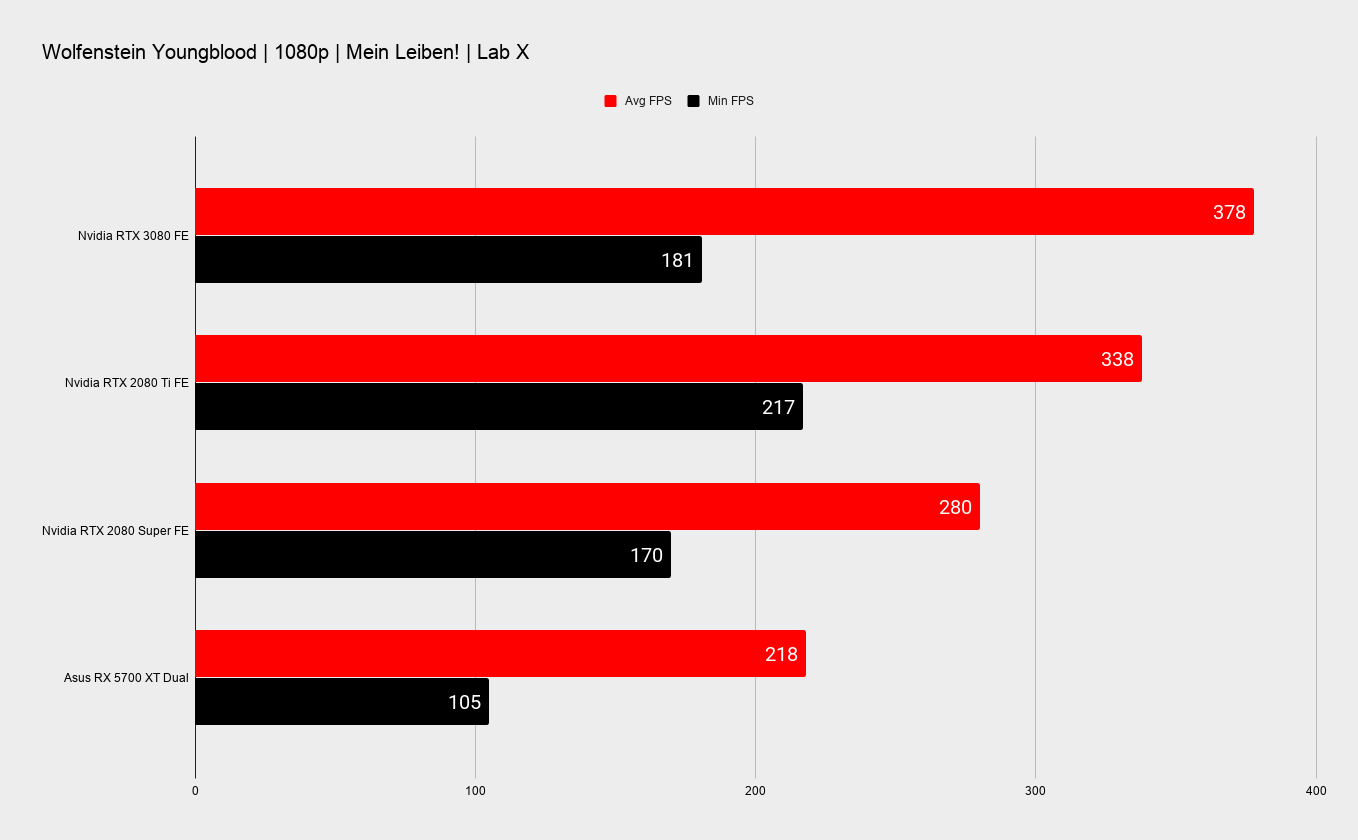

At the lowest 1080p resolution levels you are only getting a modest performance bump over the Ti, but that's when you're talking about frame rates getting into the 200+ level. At that point it's less about graphics power and more about the rest of the system. Dropping in a chunk more FP32 cores ain't going to do a lot there.

1080p benchmarks


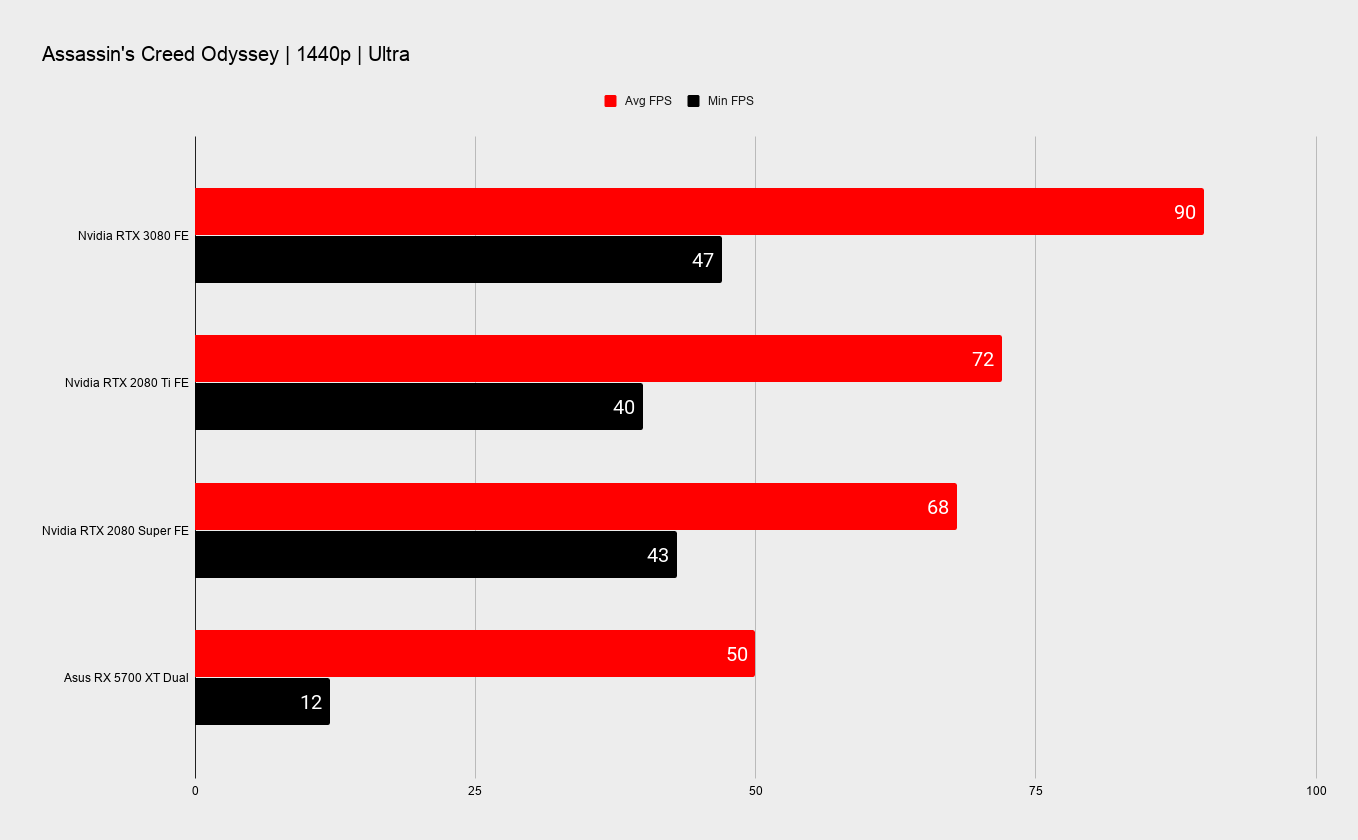

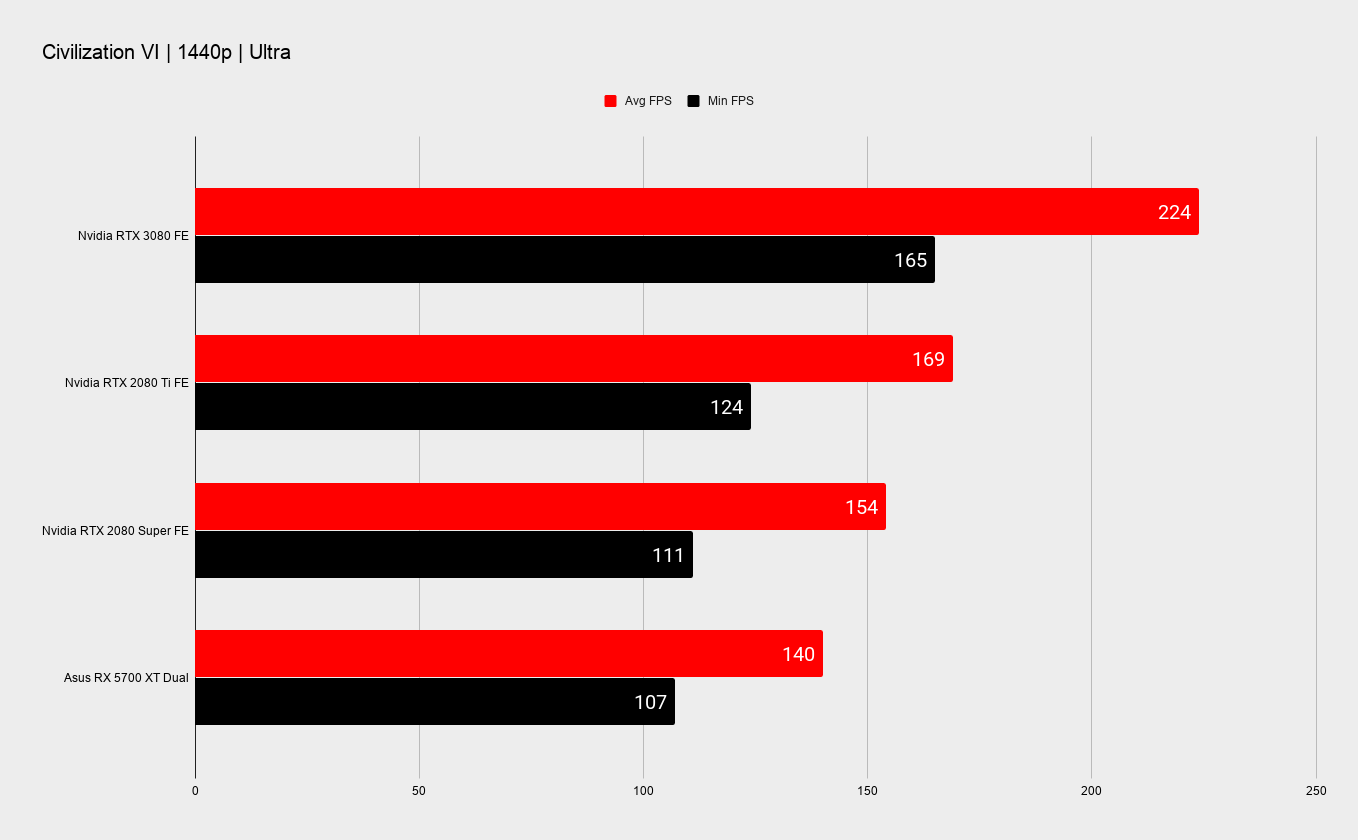

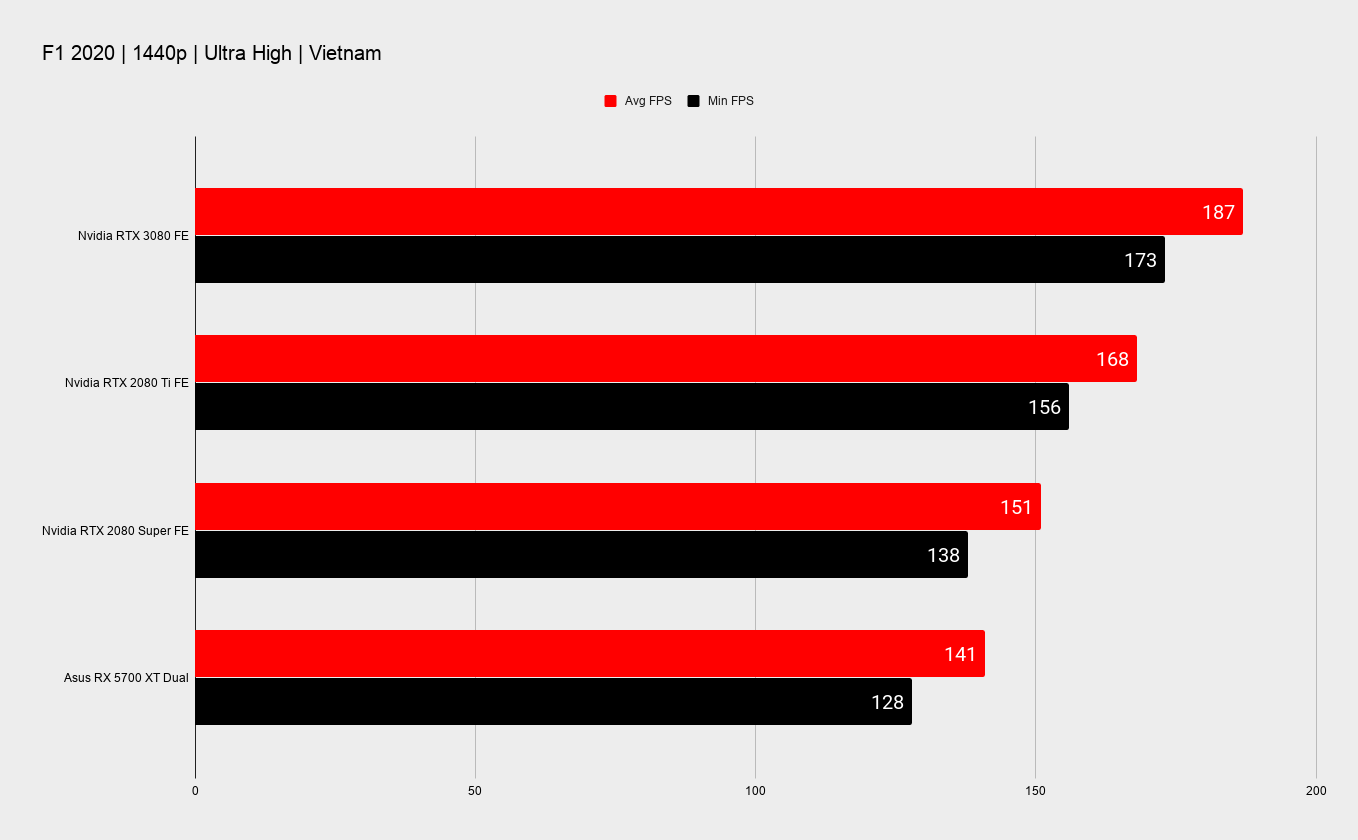

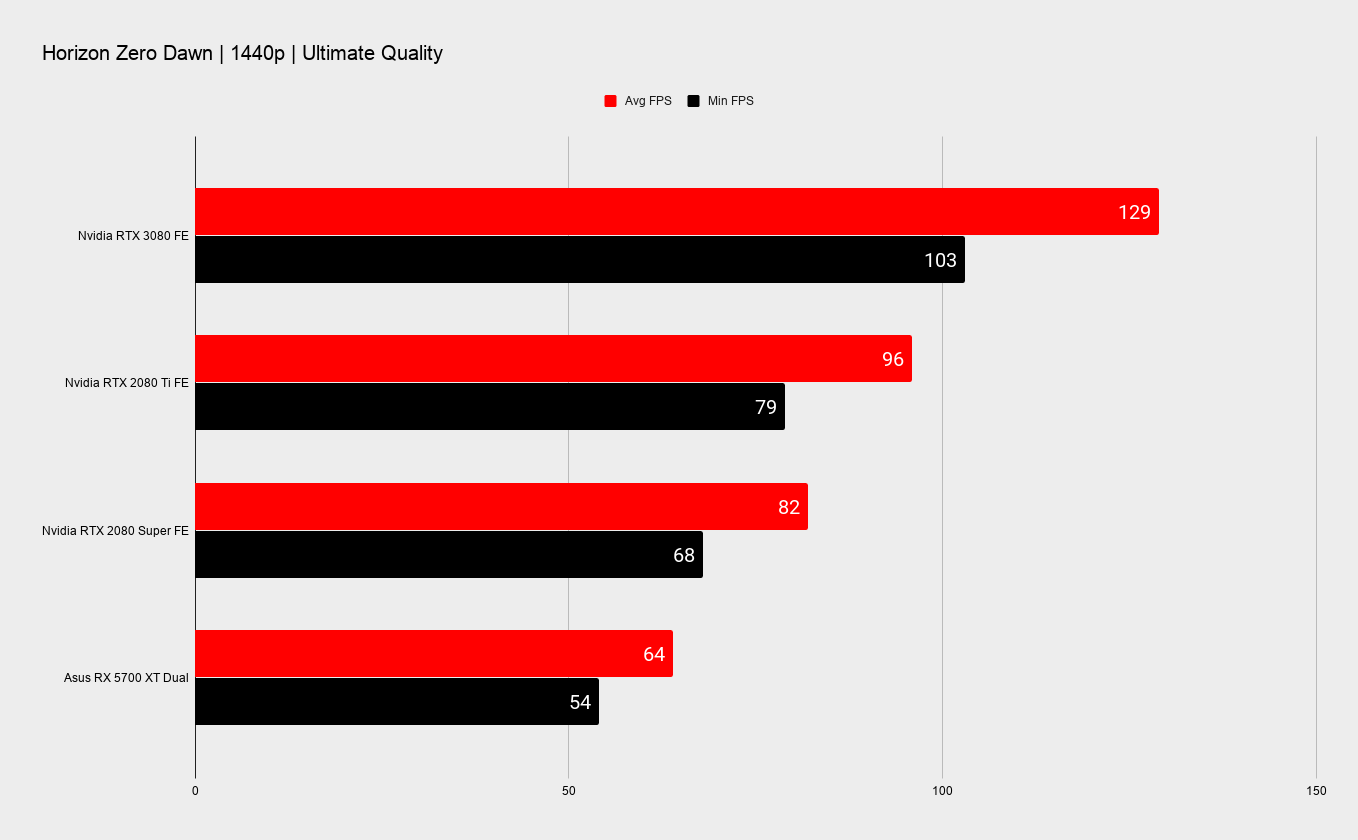

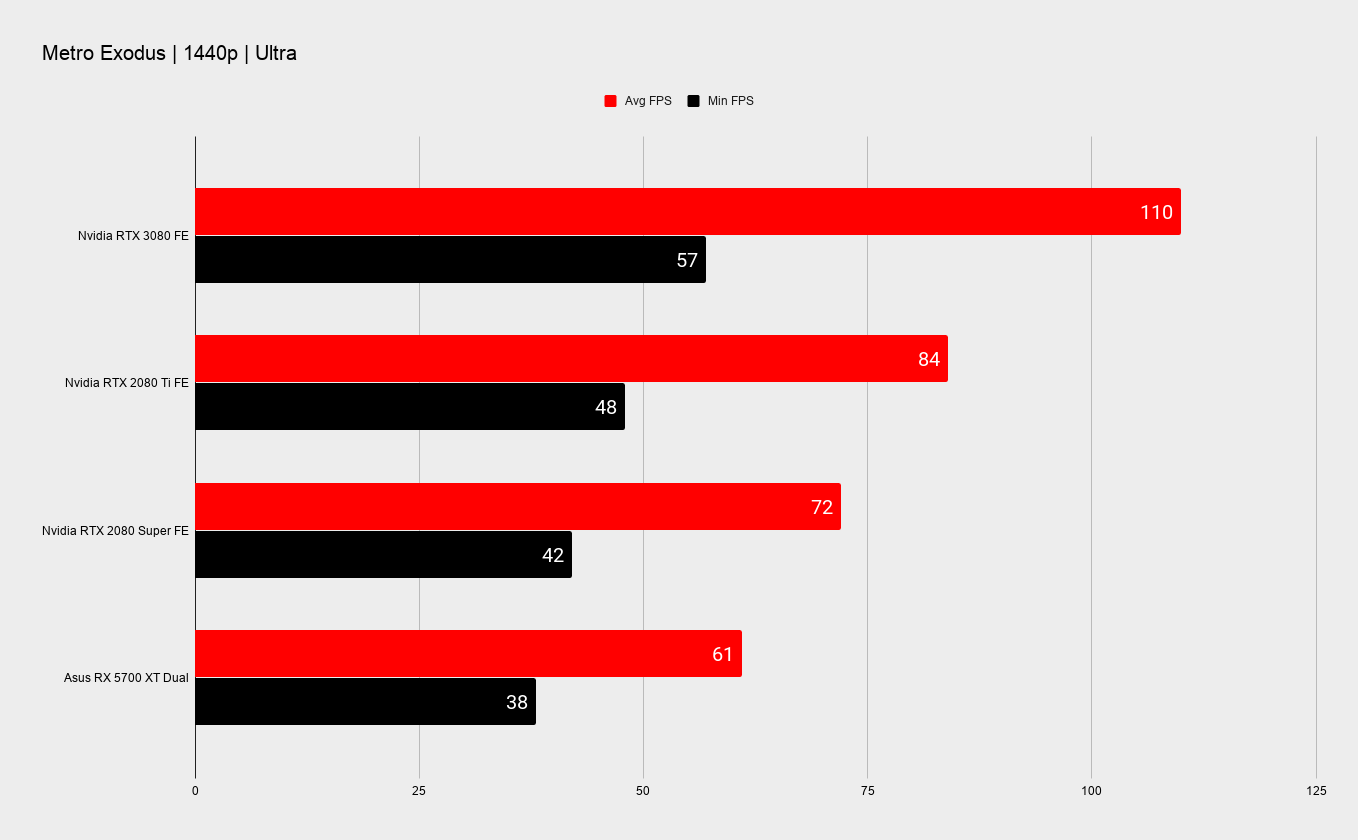

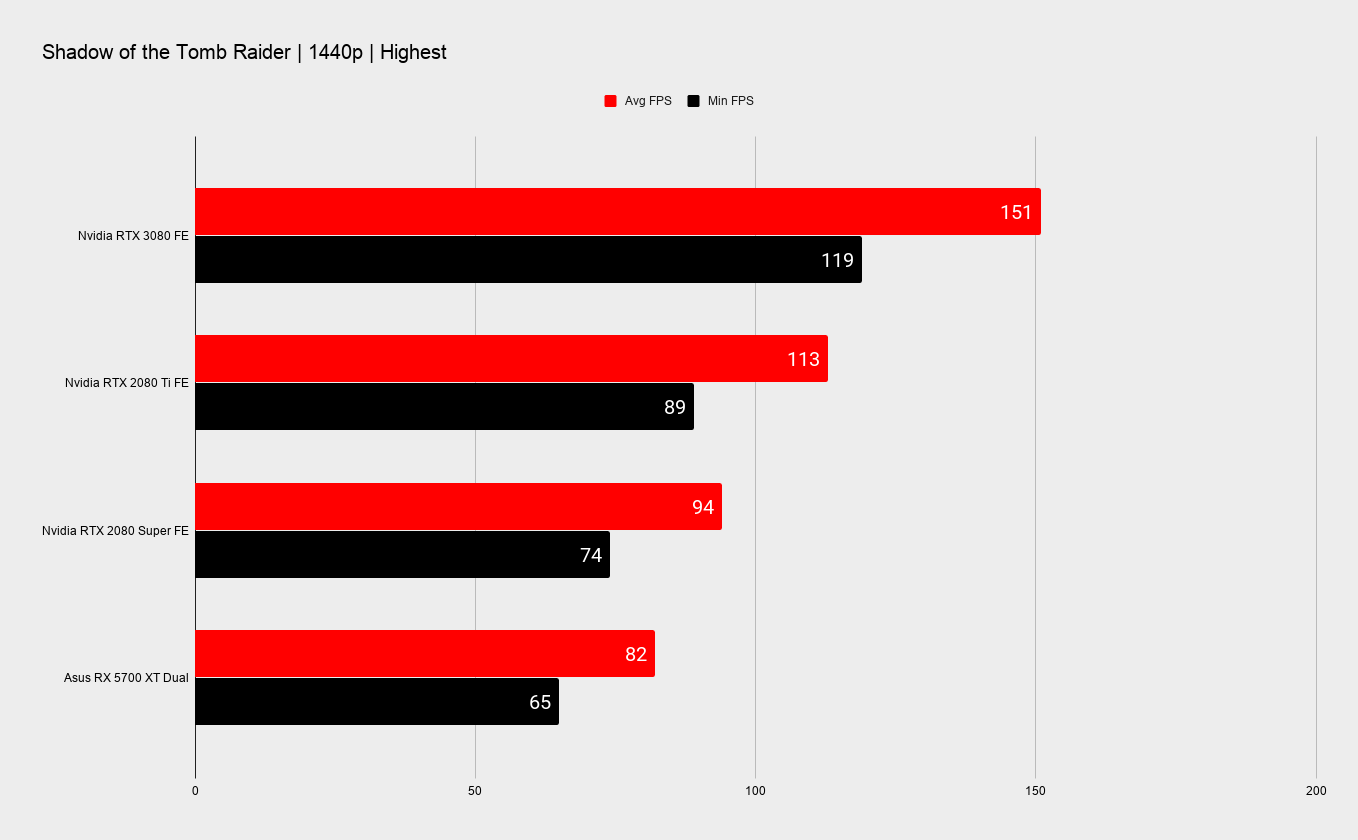

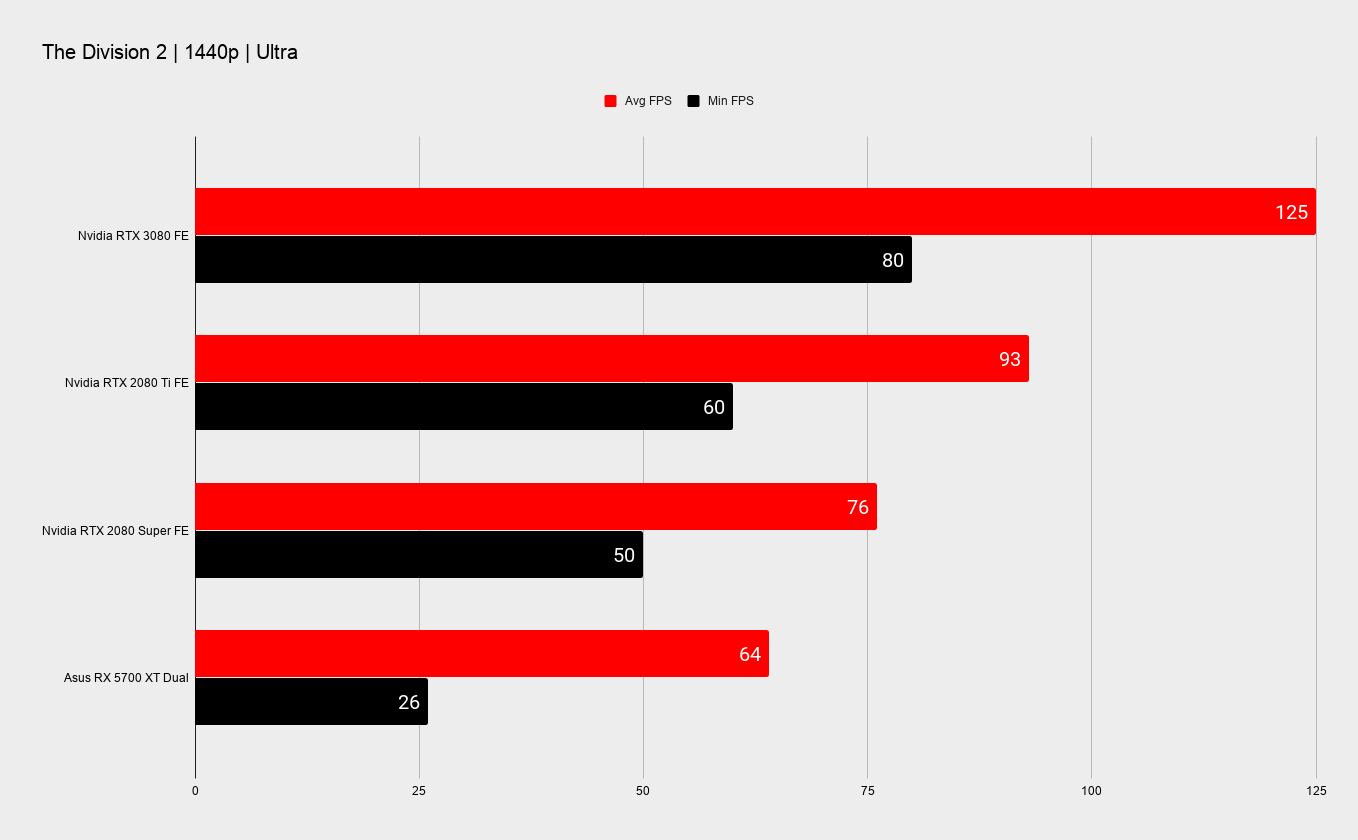

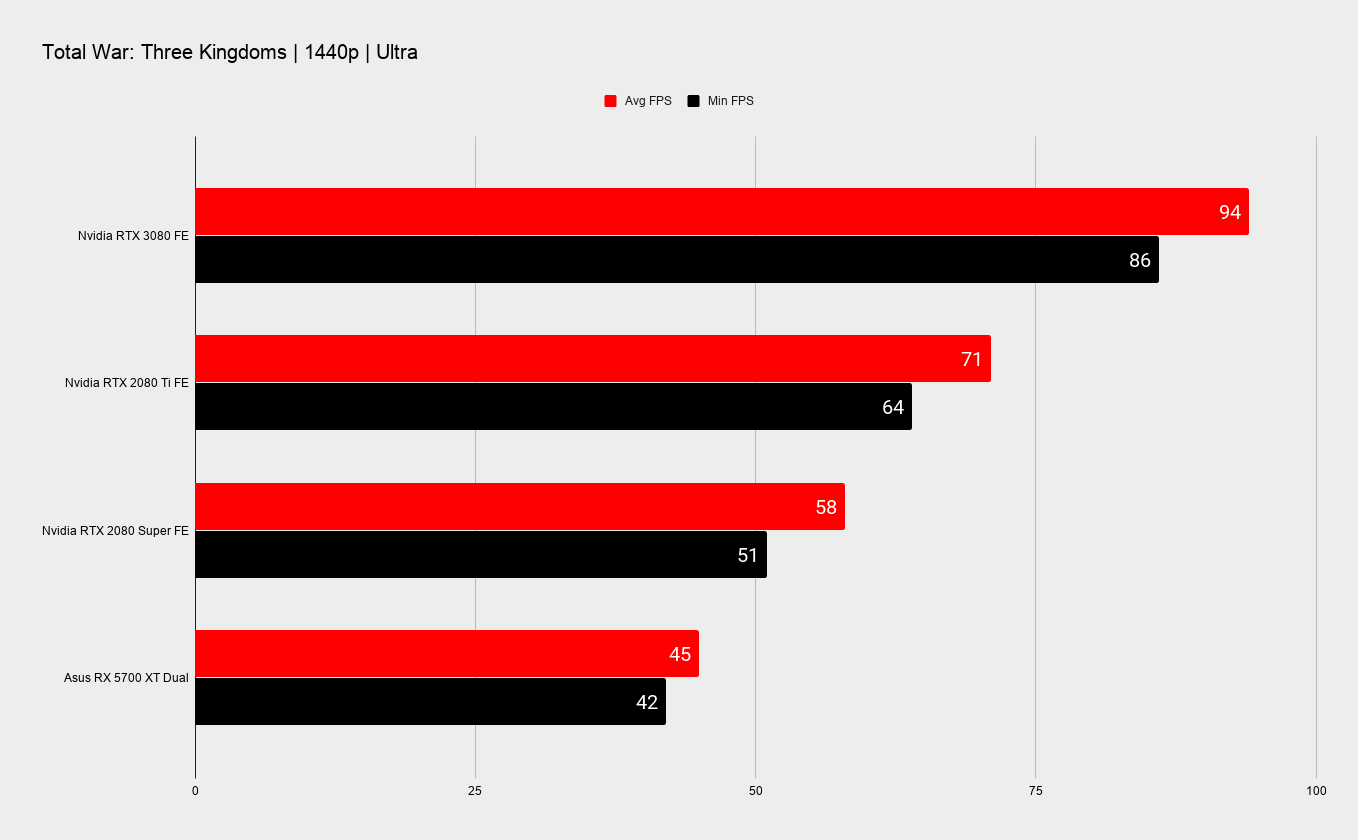

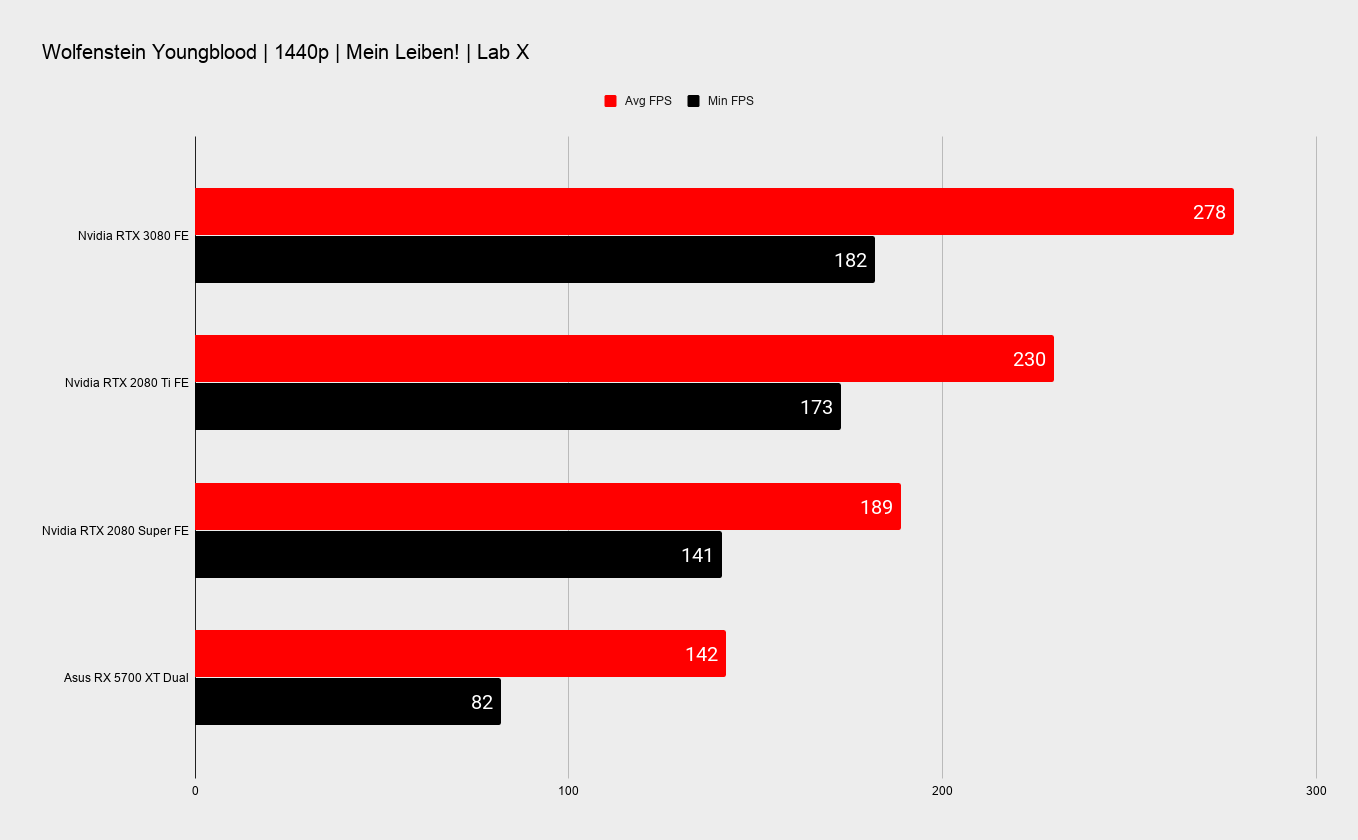

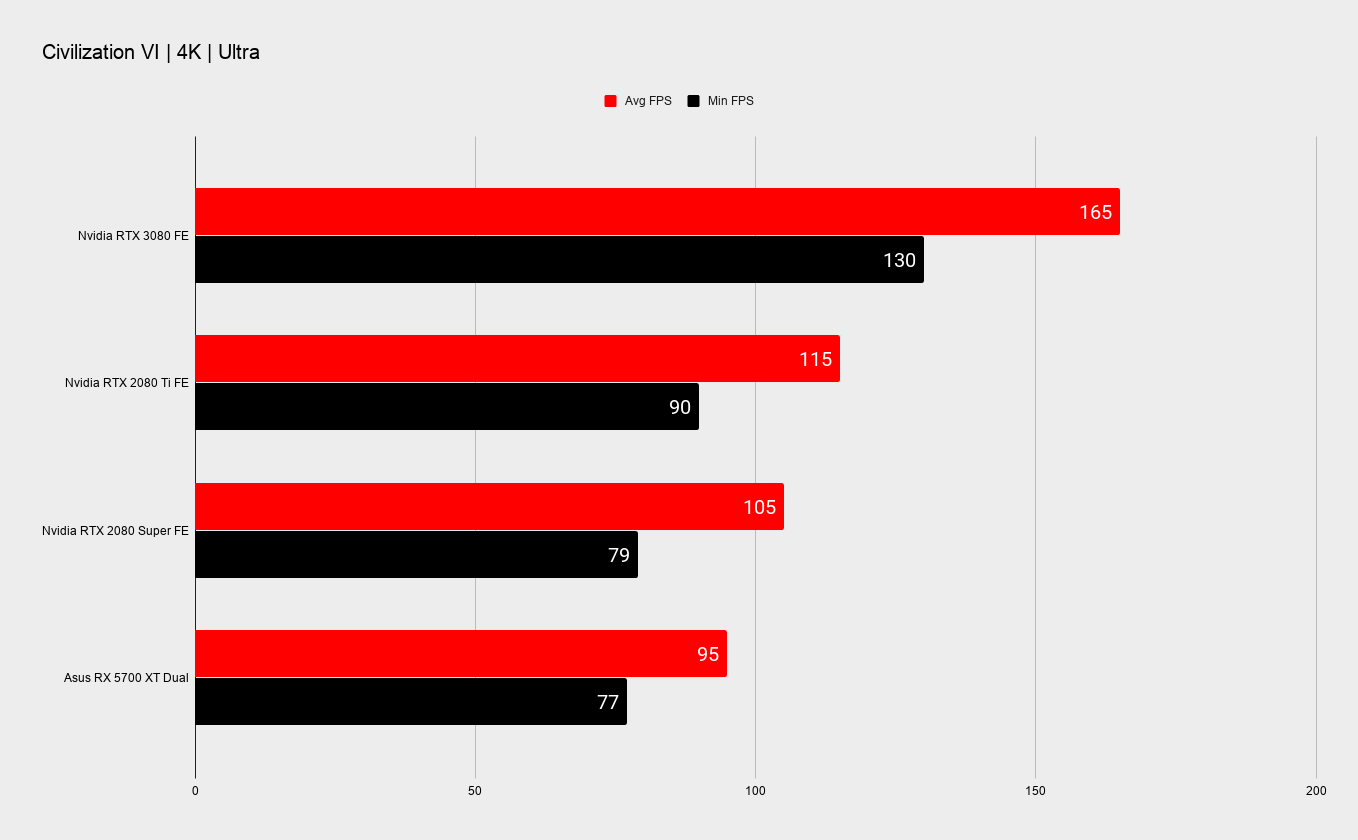

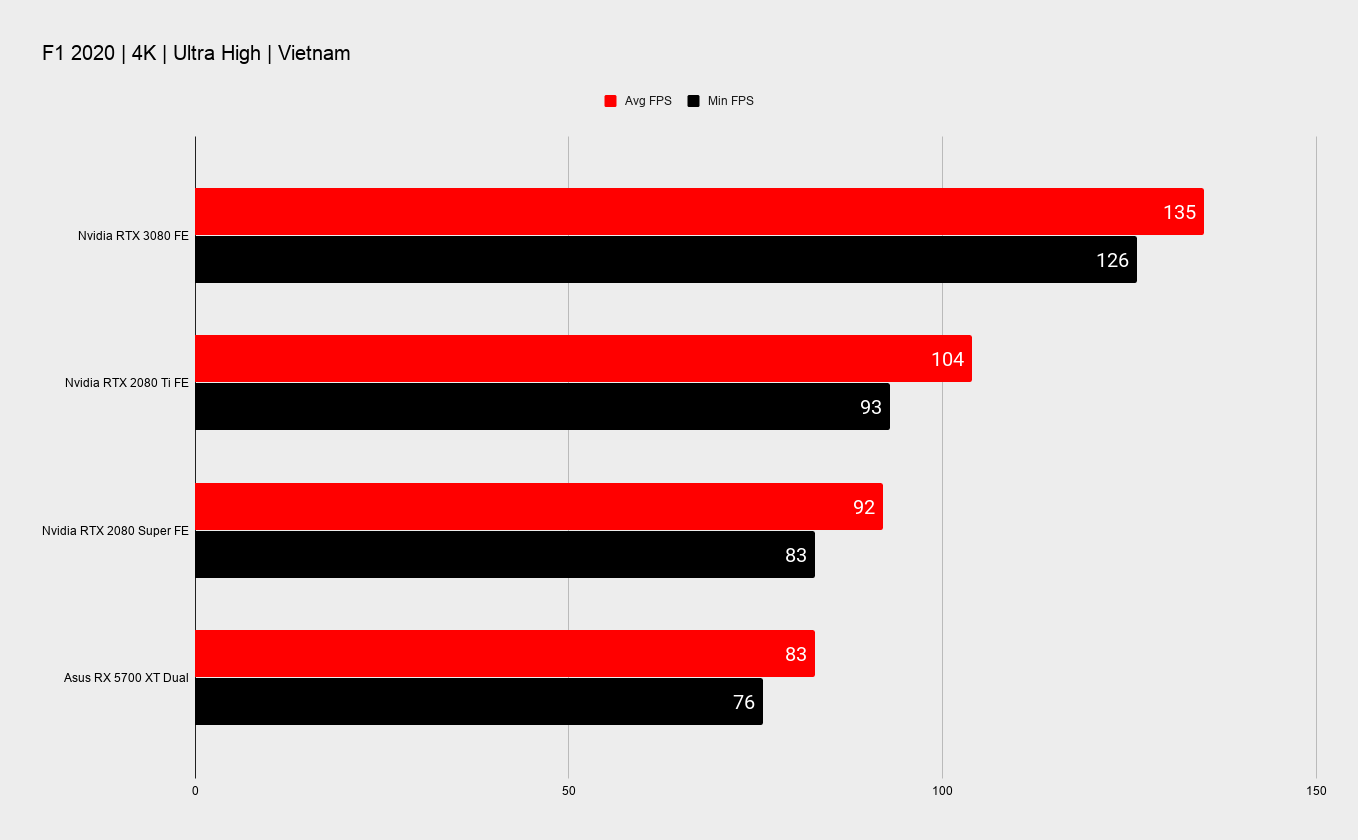

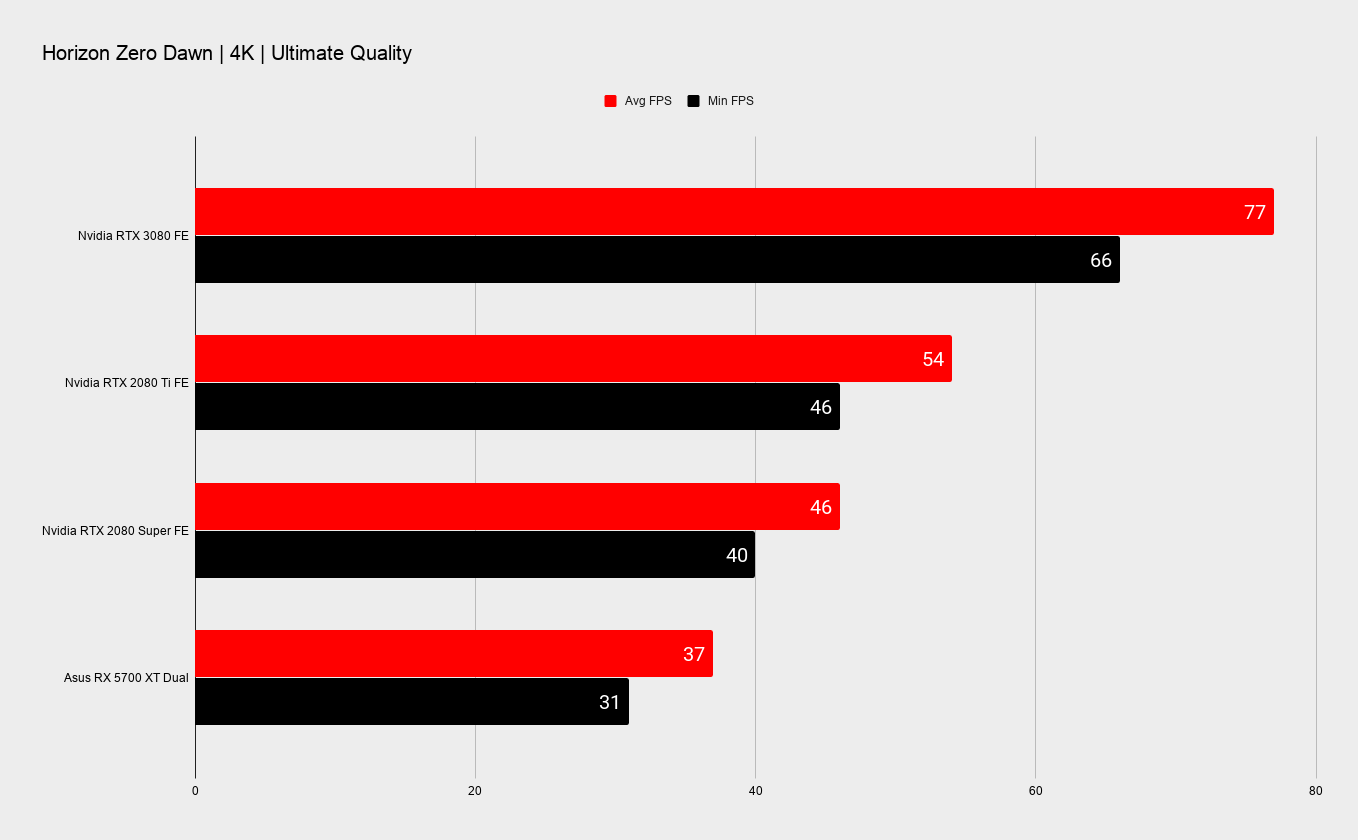

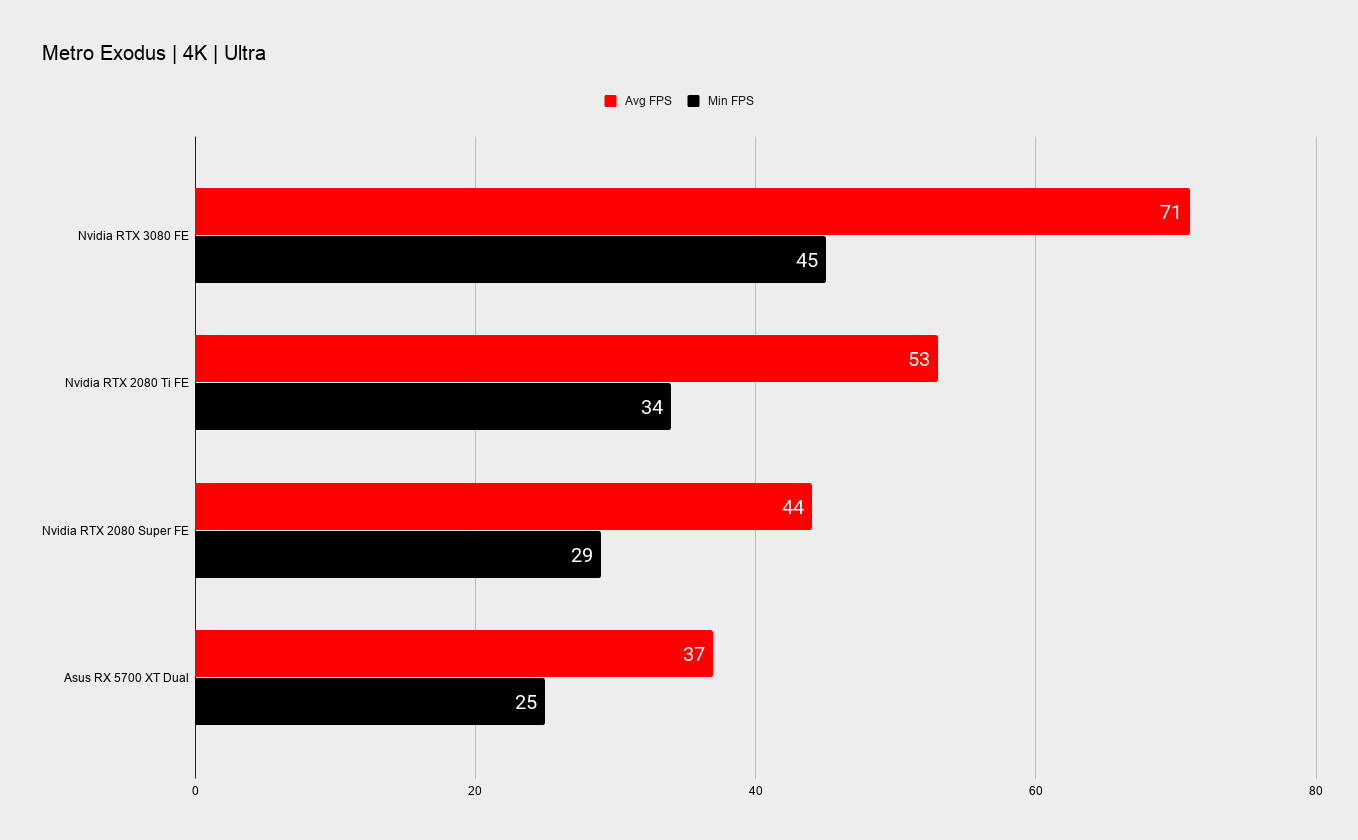

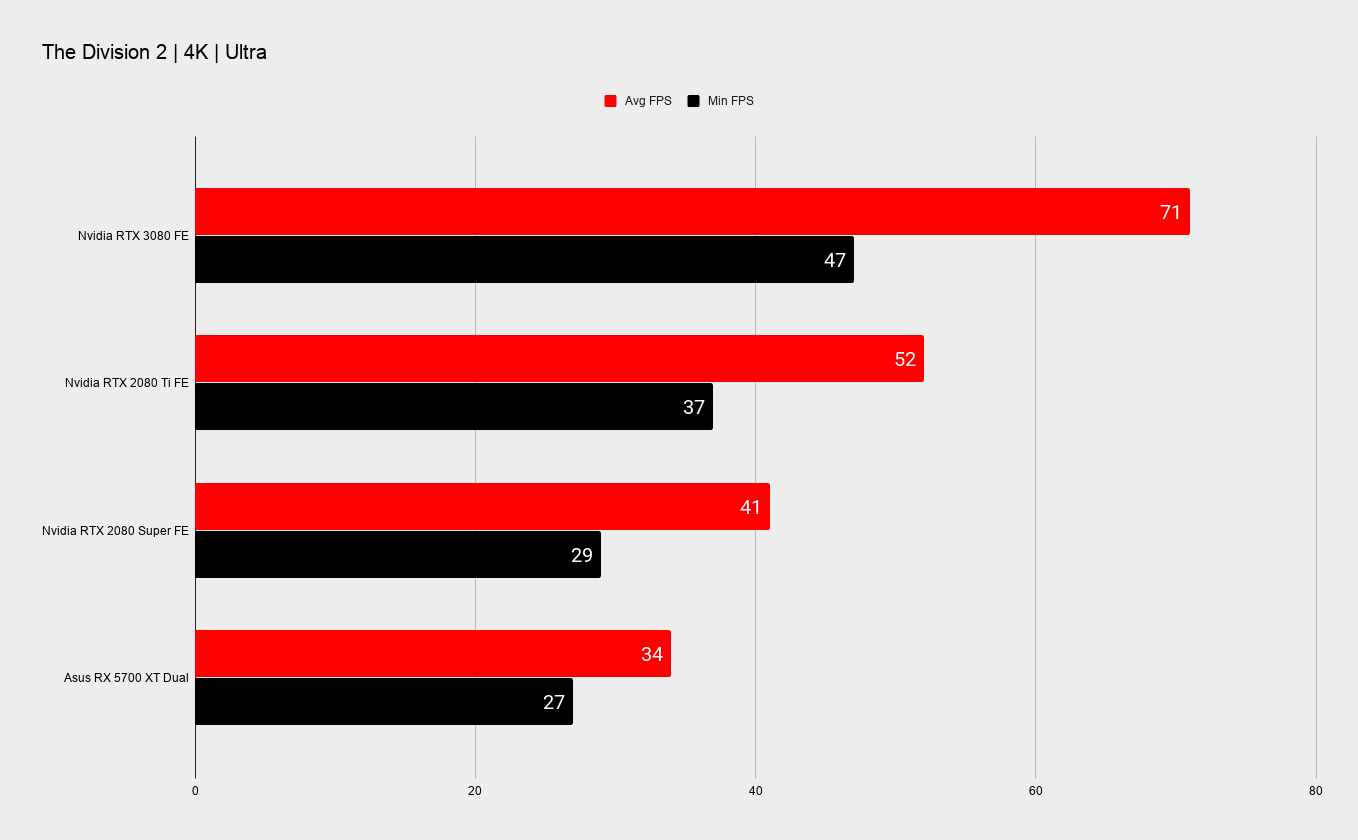

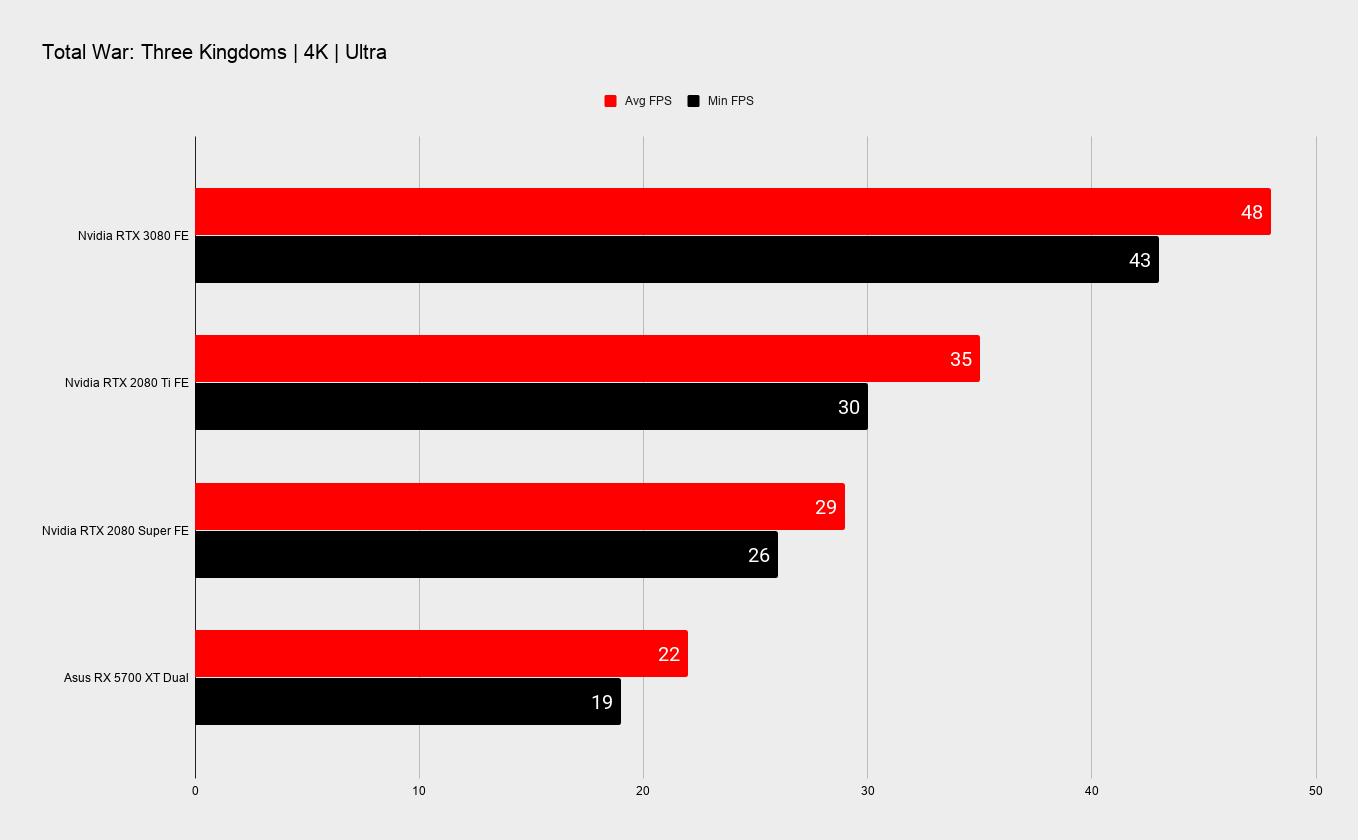

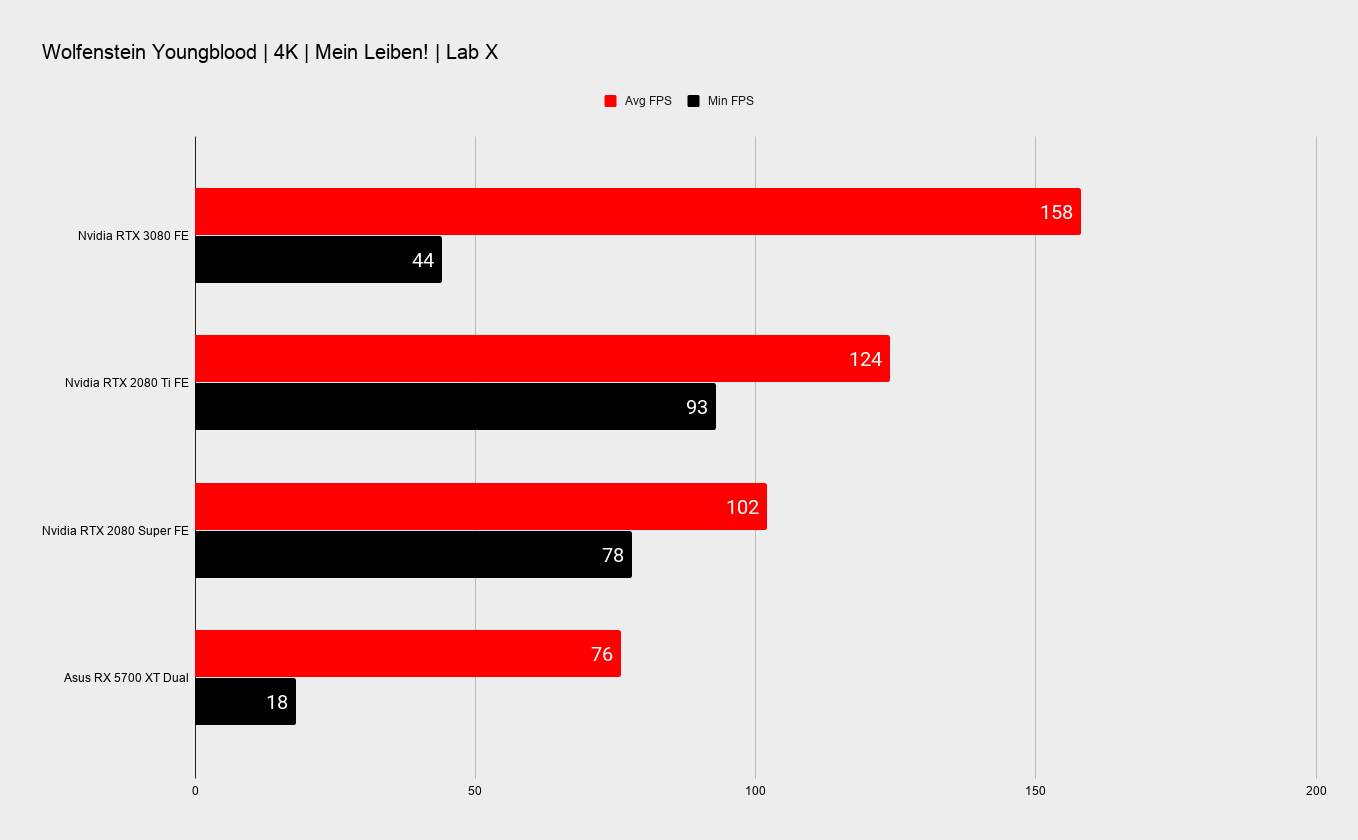

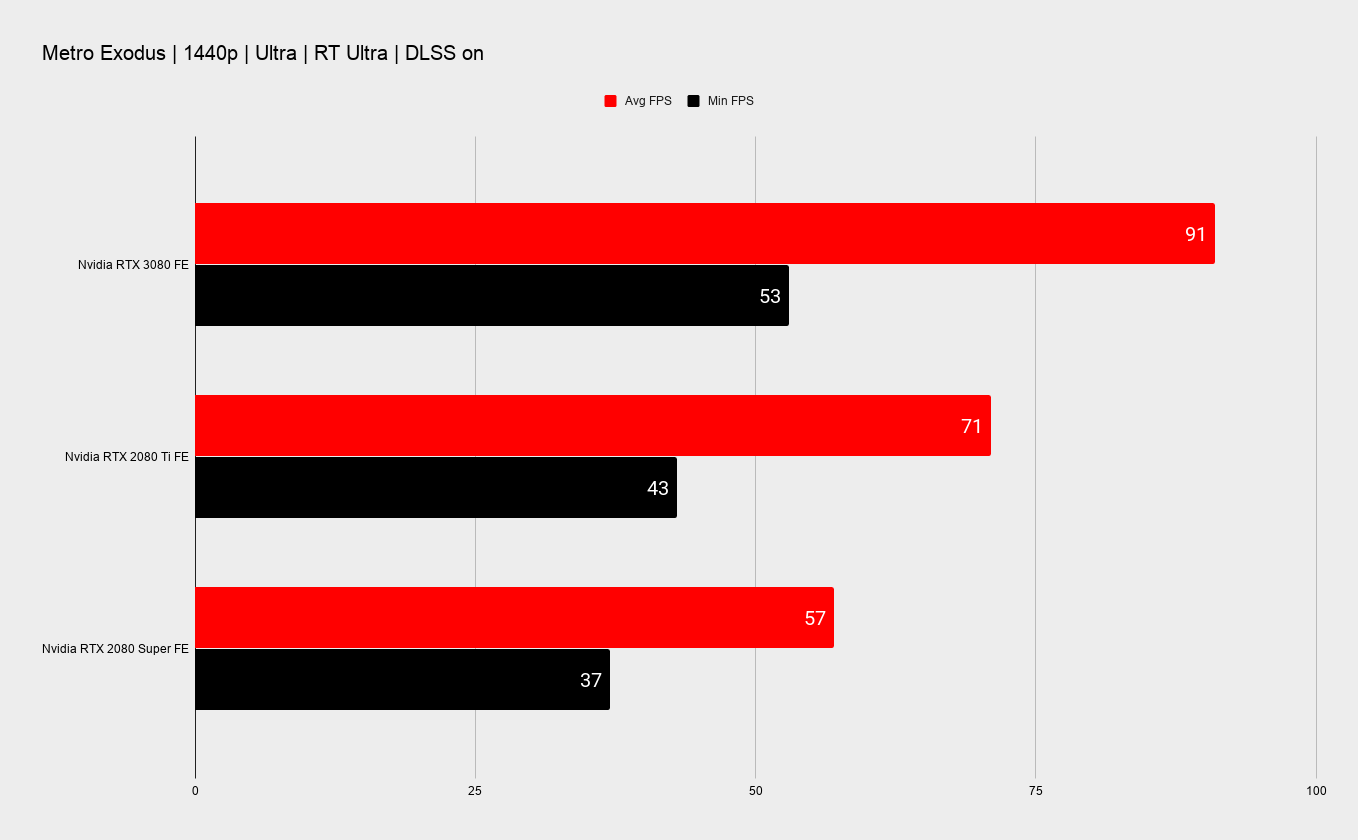

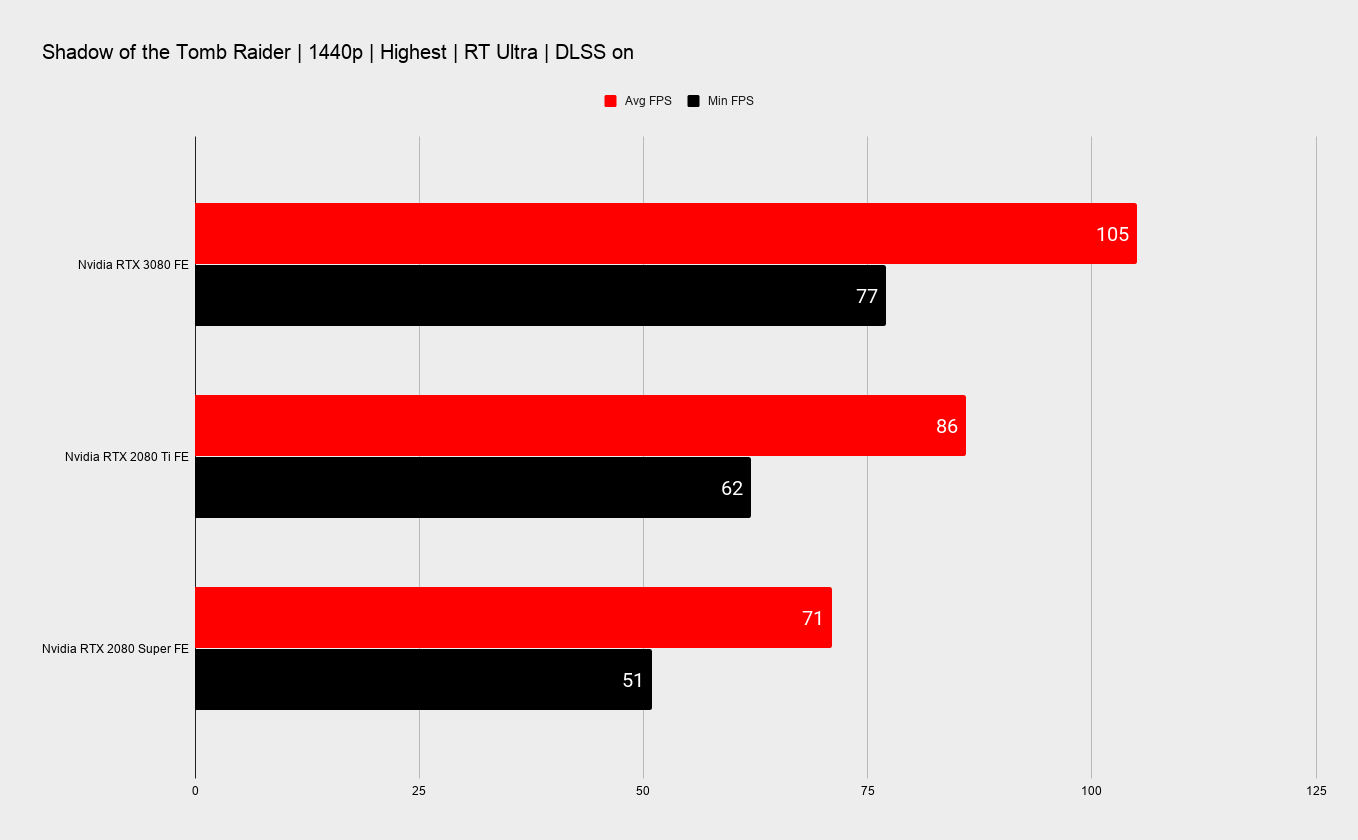

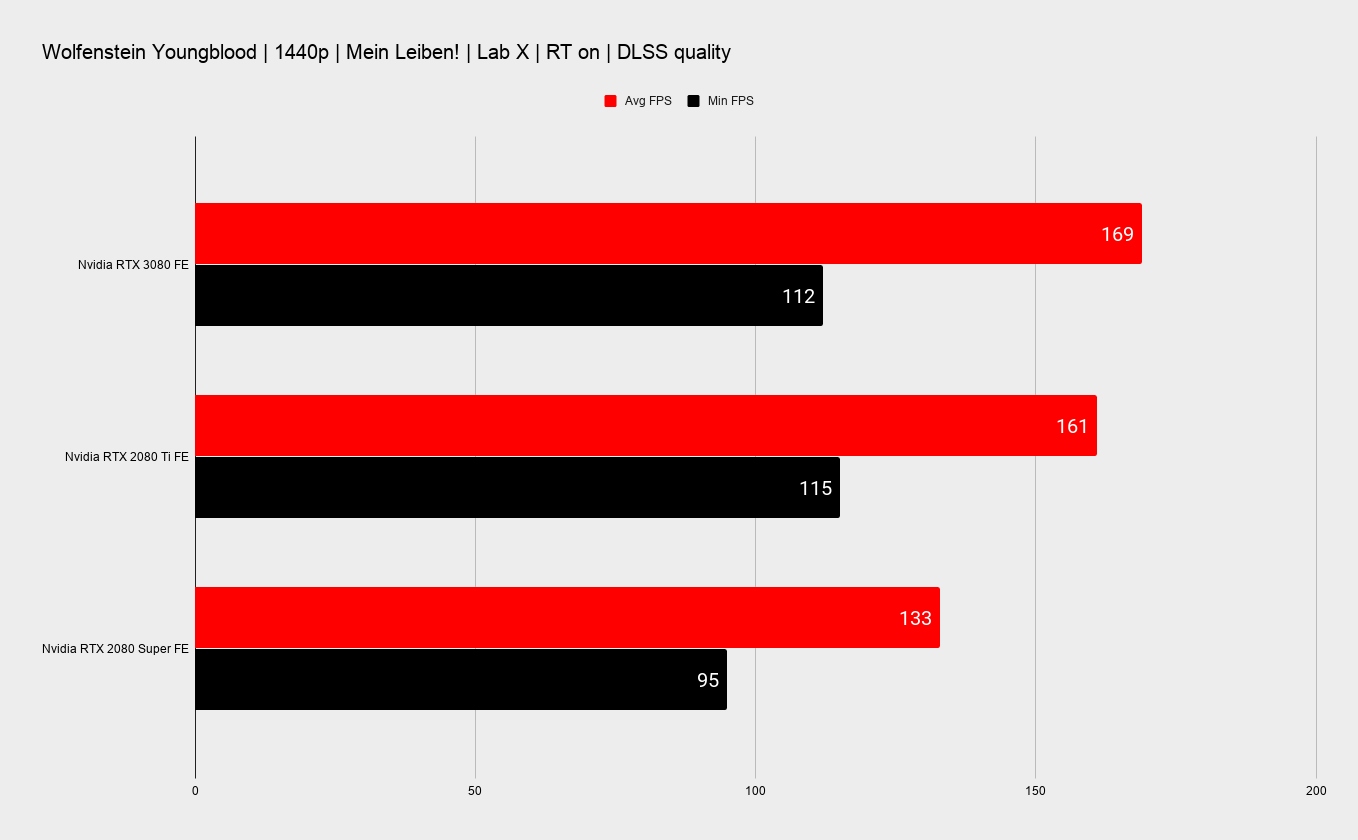

But when you shift gears into 1440p and 4K resolutions, especially in the more demanding game engines, that's when the RTX 3080's performance chops really come to the fore. When the going gets tough I've been seeing frame rate jumps of between 25 percent and 43 percent over the RTX 2080 Ti.


That's a huge performance uplift over the absolute top-end of not just the previous generation of Nvidia graphics cards, but what was the absolute fastest consumer GPU ever made. And when you consider the RTX 3080 isn't even the most performant of the new Ampere generation you can see what the architectural changes have done for the gaming prowess of the new GeForce cards.

According to Nvidia we shouldn't really be talking about its comparative performance with the Ti because that's not really the card the RTX 3080 is replacing. That's the RTX 2080 Super, and here it's even worse news for people who dropped the best part of a grand on a new GPU over the last 12 months. Coming in at the same $699 sticker price as the RTX 2080 Super was launched at, the RTX 3080 destroys it.

1440p benchmarks


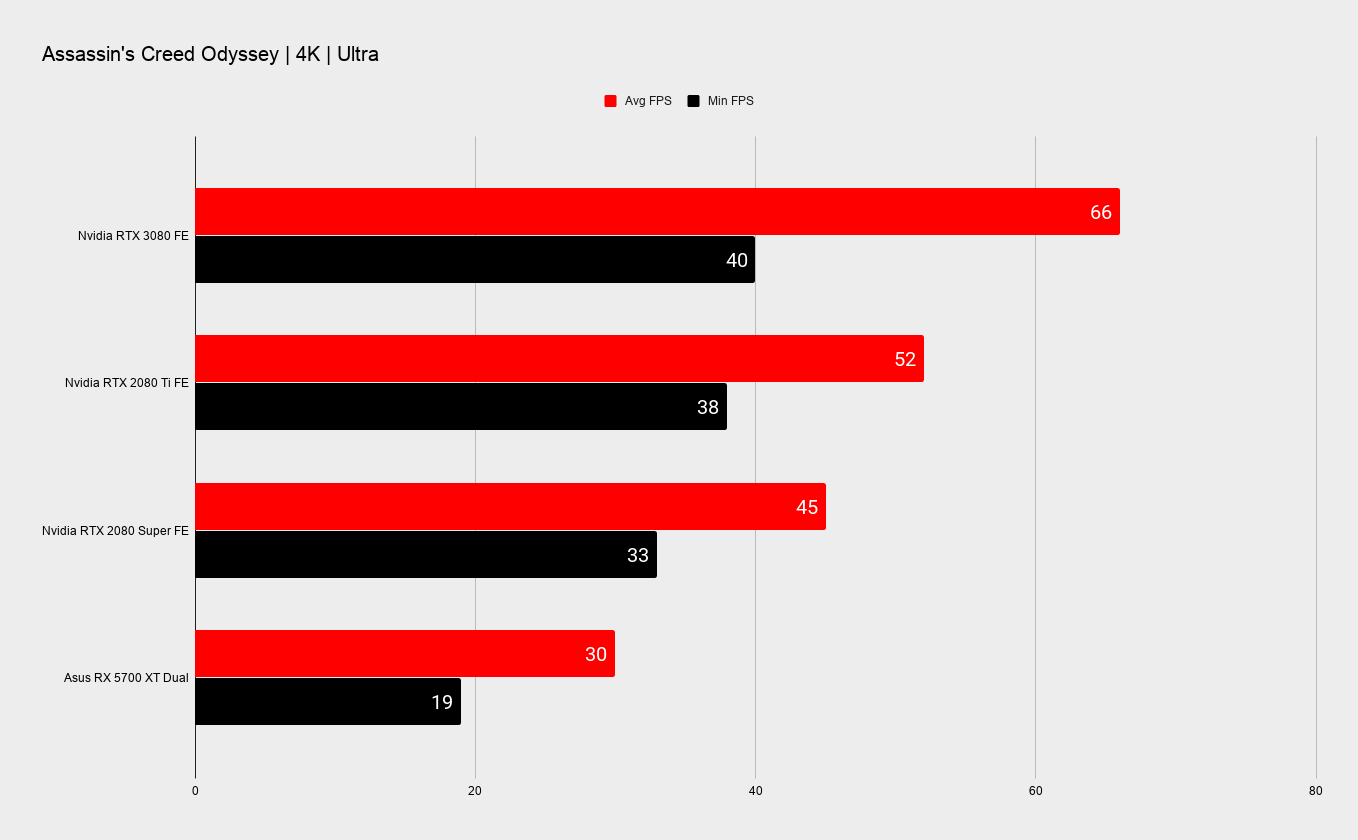

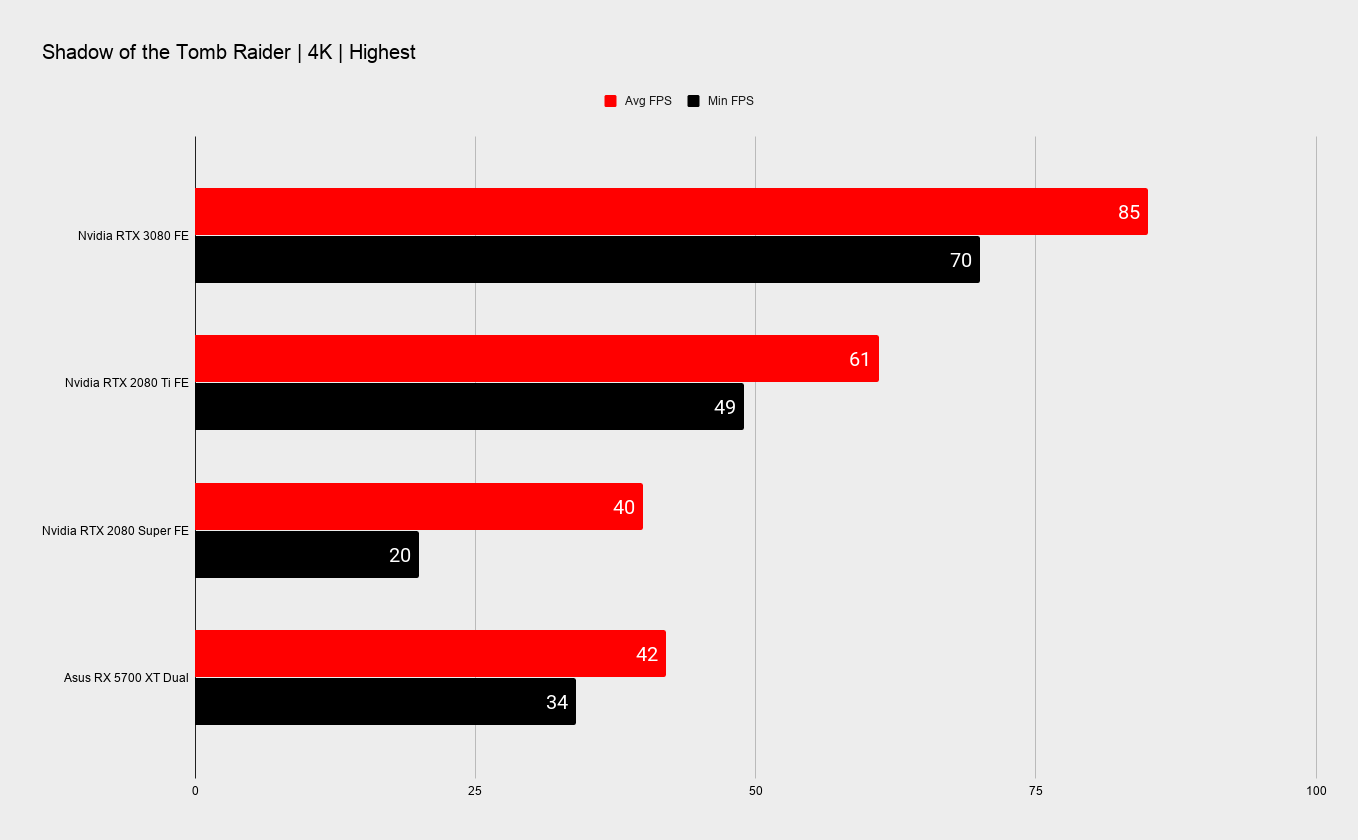

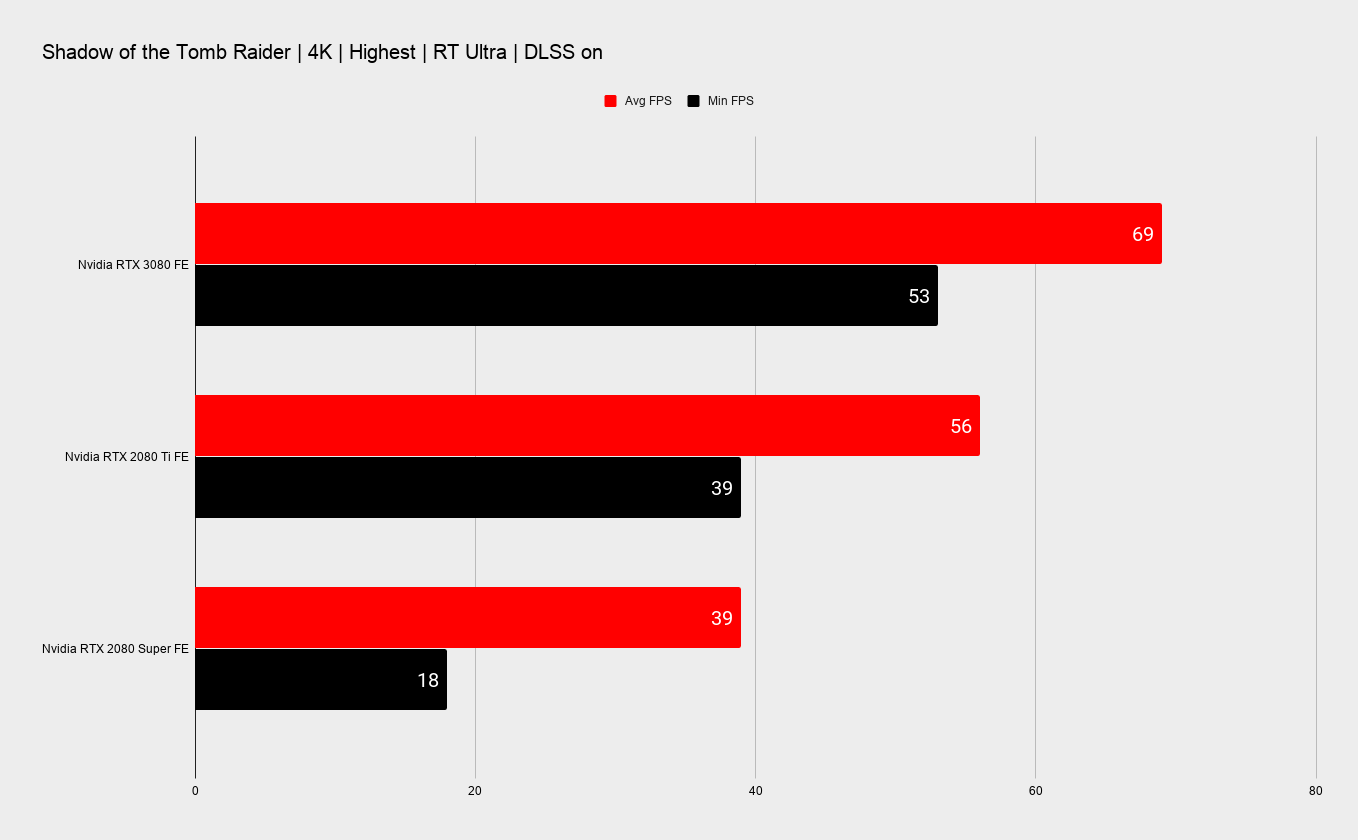

But Nvidia's talk of performance up to twice that of the original RTX 2080 only holds true in a few very specific titles. In my testing only Shadow of the Tomb Raider presents such a situation, but even so, doubling the 4K frame rates of the Super is hugely impressive.

That said, the uplift is still significant over the previous generation across the board: my 1440p gains average out at 50 percent faster frame rates and the 4K performance boost is even higher at 65 percent on average.
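Per-game uplifts can be averaged with either an arithmetic or a geometric mean of the fps ratios. Here is a minimal sketch of both. The fps values are placeholders, not our benchmark data, and the function names are hypothetical.

```python
import math
from typing import Sequence


def uplift_pct(new_fps: float, old_fps: float) -> float:
    """Percentage frame rate gain of new over old."""
    return (new_fps / old_fps - 1.0) * 100.0


def mean_uplift_pct(new: Sequence[float], old: Sequence[float],
                    geometric: bool = False) -> float:
    """Average per-game uplift, from an arithmetic or geometric mean of fps ratios."""
    if len(new) != len(old) or not new:
        raise ValueError("need matching, non-empty fps lists")
    ratios = [n / o for n, o in zip(new, old)]
    if geometric:
        mean_ratio = math.exp(sum(math.log(r) for r in ratios) / len(ratios))
    else:
        mean_ratio = sum(ratios) / len(ratios)
    return (mean_ratio - 1.0) * 100.0


# Placeholder fps values for two hypothetical games, not our benchmark data.
new_fps, old_fps = [150.0, 200.0], [100.0, 100.0]
print(mean_uplift_pct(new_fps, old_fps), mean_uplift_pct(new_fps, old_fps, geometric=True))
```

The geometric mean stops one runaway result from dominating the average, which is why it's often preferred for benchmark suites.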

4K benchmarks


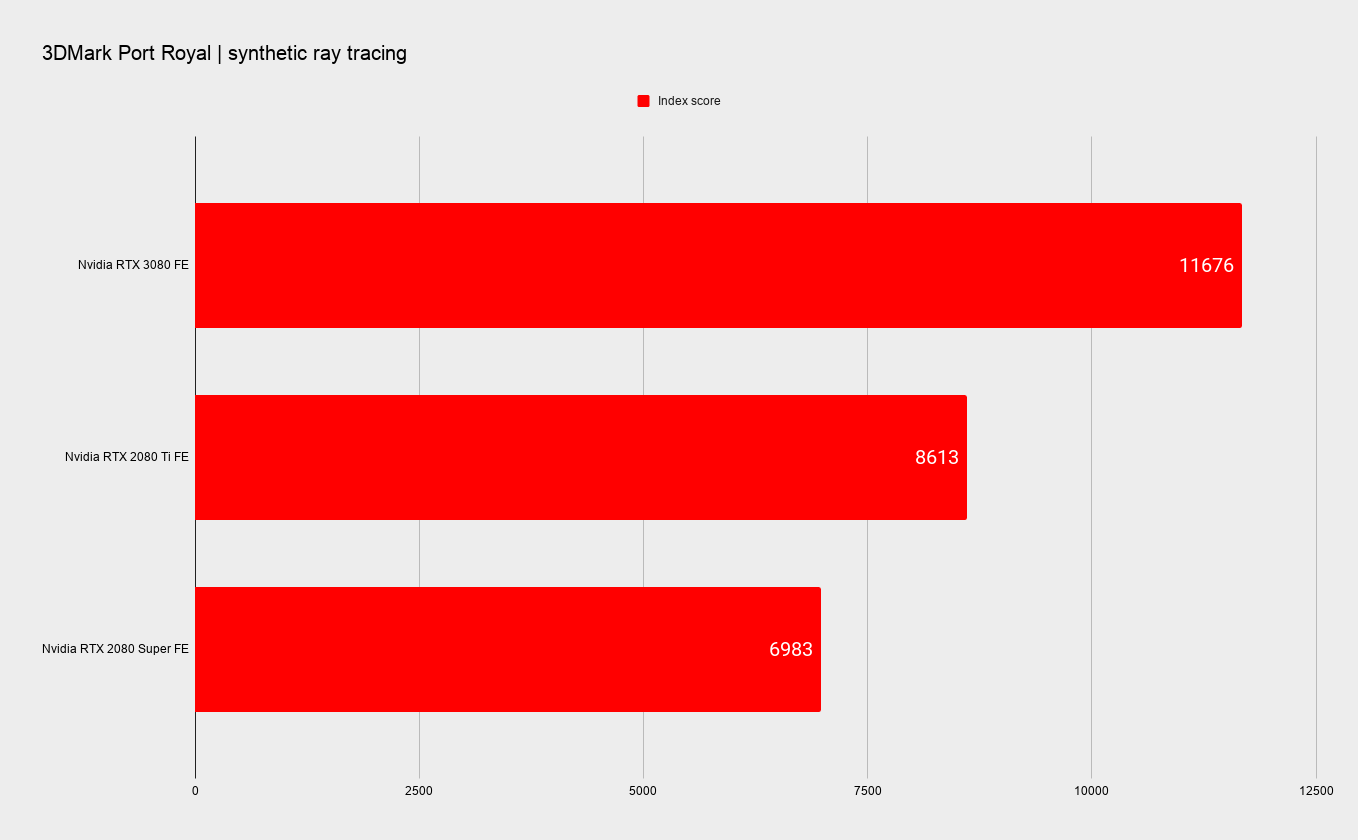

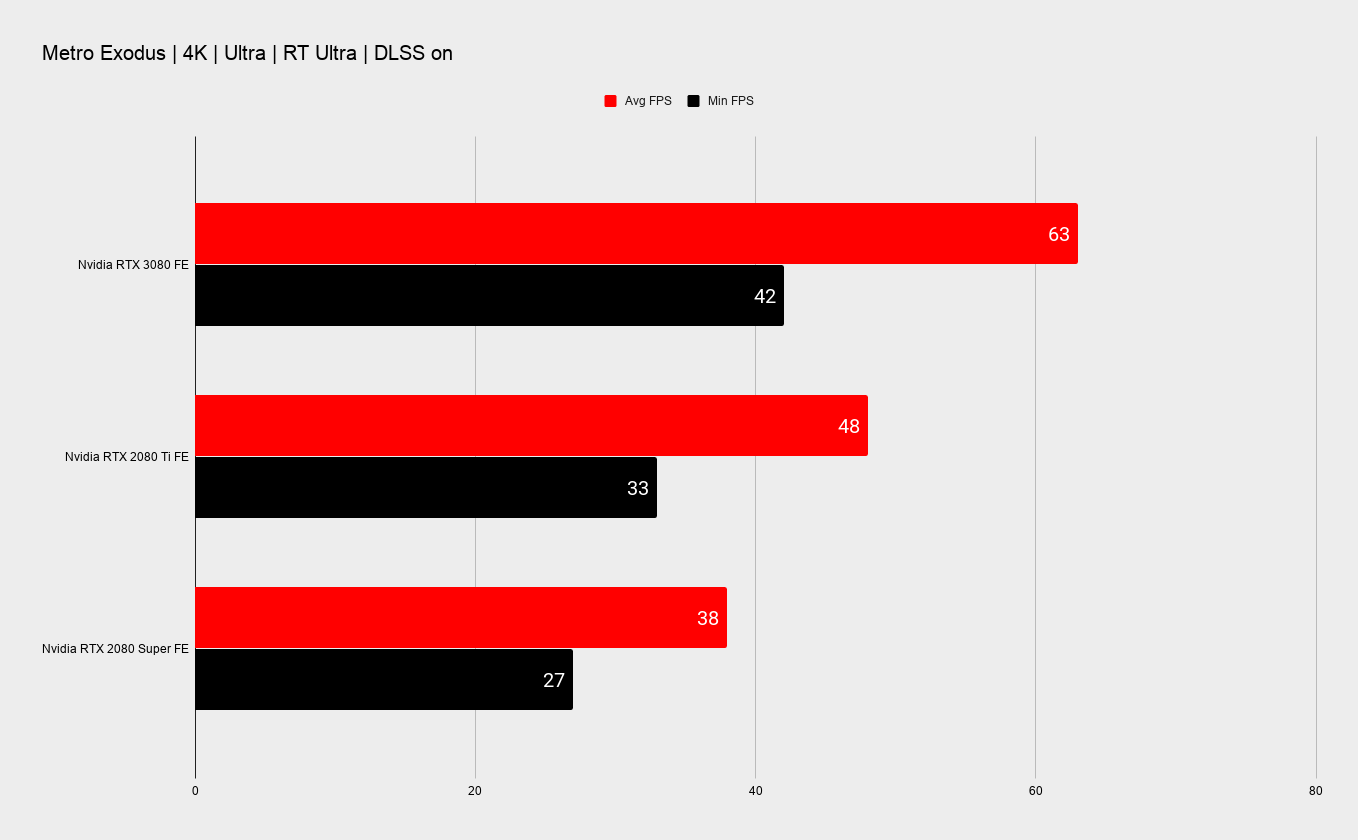

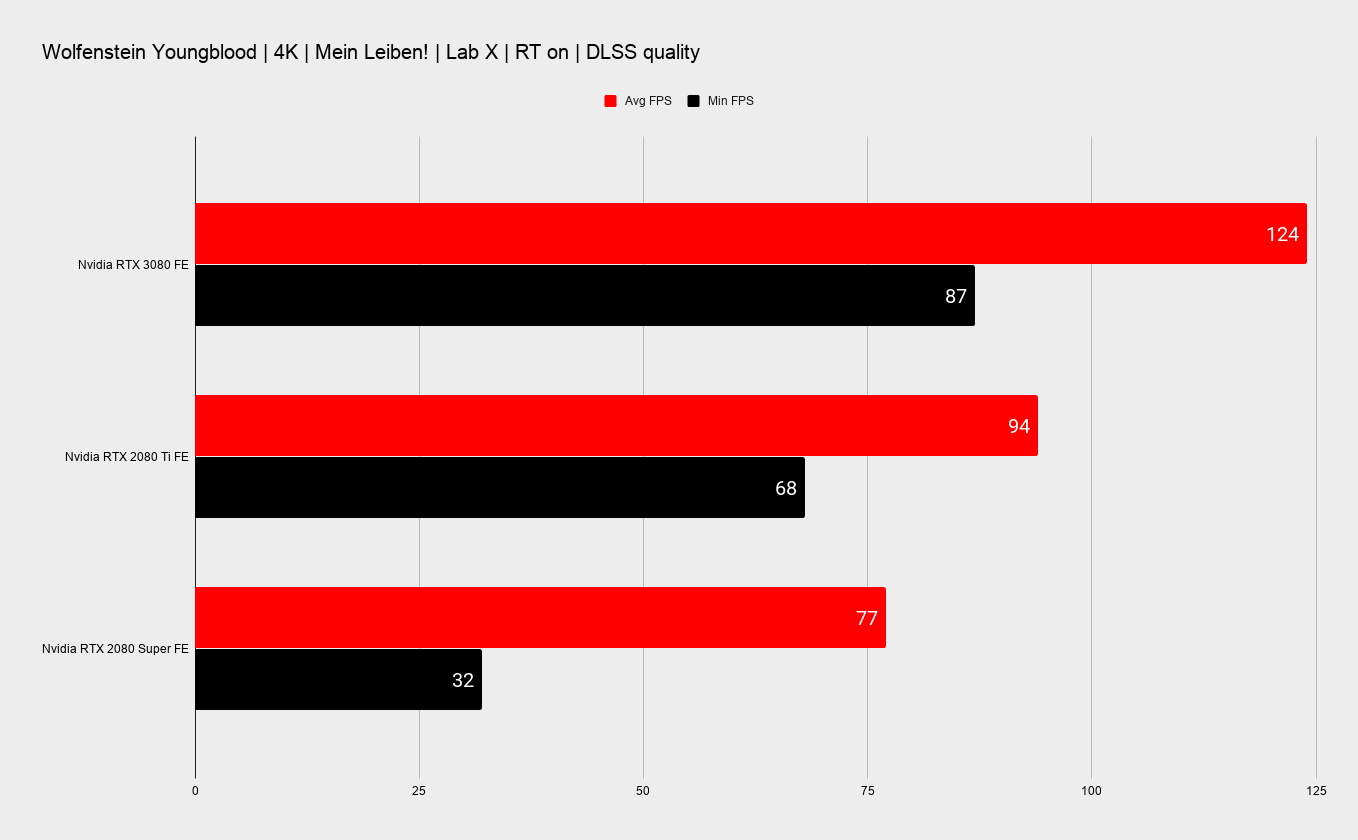

The accelerated second-gen RT Cores are very much in play when you check out the relative ray traced performance of the Turing and Ampere cards. All you really need to know is that you can now get higher ray traced frame rates than the previous generation could offer without the feature turned on. That highlights just where this new architecture has moved the game on in that regard.

Basically, it is safe to go RTX on in-game now.

Ray tracing benchmarks


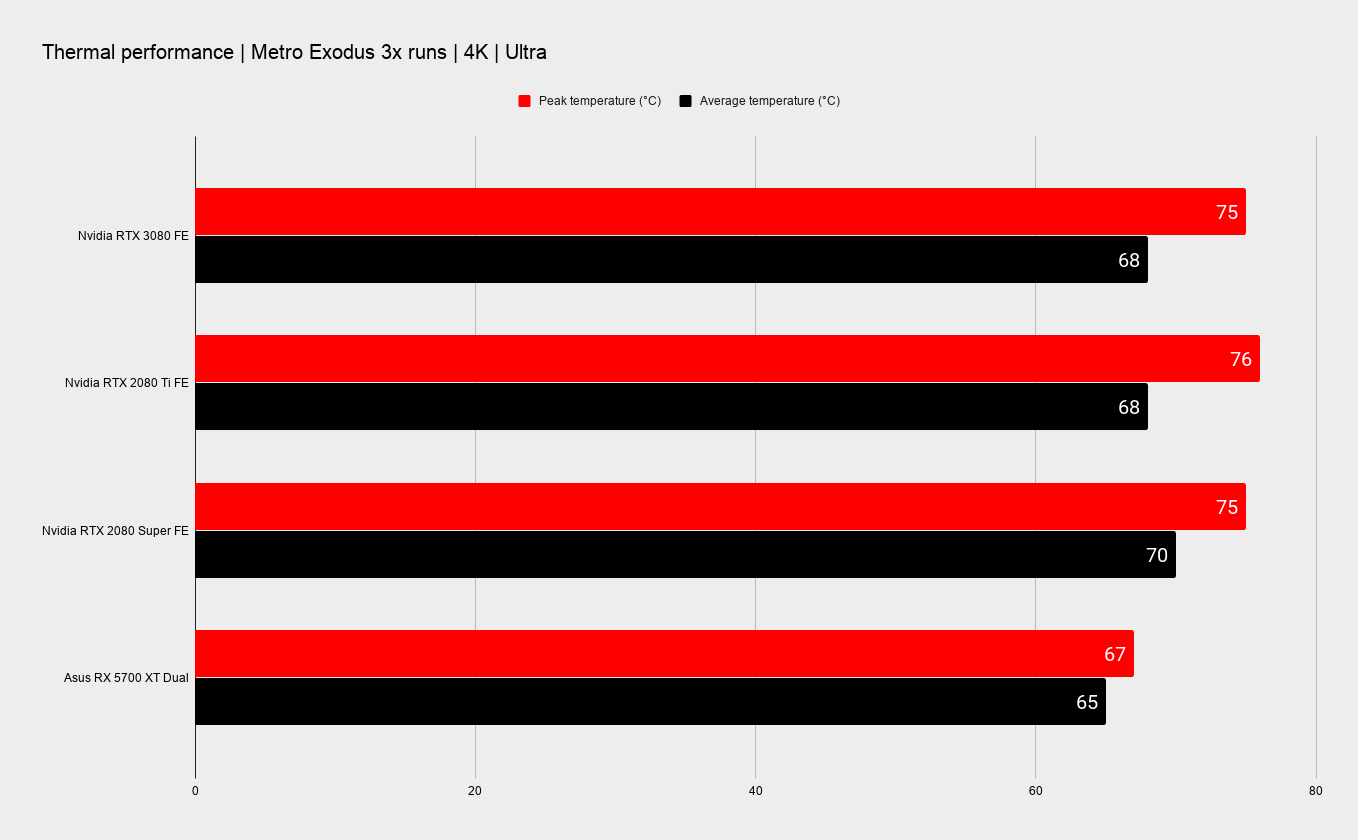

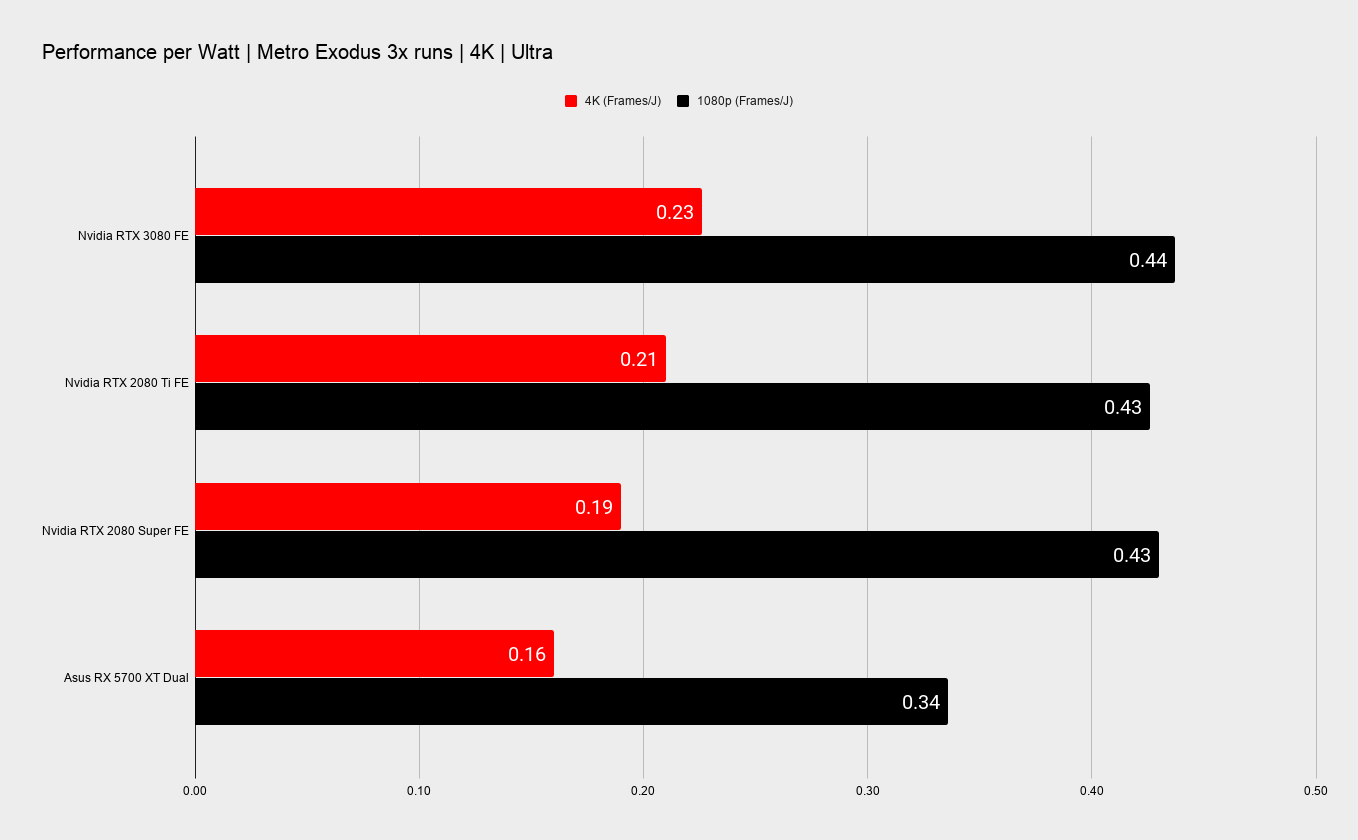

Nvidia has also been keen to talk up the performance per Watt gains that the Samsung 8N process and Ampere architecture efficiencies have enabled in the RTX 30-series. And honestly, I think a lot of that pre-launch noise is because these are still incredibly thirsty graphics cards. The peak wattage I've got out of this Founders Edition card, with its innovative PCB and 12-pin PSU connector, is 368W, well over the 320W TGP Nvidia has been touting.

On average, however, I'm seeing board power of 324W, which sits more in line with the specifications, but is still a hell of a lot of PSU juice.

Yet it is still a more efficient GPU than the card it's replacing, and the top RTX 2080 Ti too. Considering Turing was already a pretty efficient architecture in its own right that's an impressive feat.

In the real world, with the Ampere GPU left to its own devices, chowing down on as much power as your PC can give it, you're only looking at around 19 percent higher performance per Watt compared with the RTX 2080 Super. That's at 4K, and the delta almost vanishes lower down the resolution scale. At 1080p it's just 2 percent.

Thermals and power


CPU - Intel Core i7 10700K

Motherboard - MSI MPG Z490 Gaming Carbon WiFi

RAM - Corsair Vengeance RGB Pro @ 3,200MHz

CPU cooler - Corsair H100i RGB Pro XT

PSU - NZXT 850W

Chassis - DimasTech Mini V2

Monitor - Philips Momentum 558M1RY

Nvidia has been boasting of a 1.9x perf/W boost over Turing, but you can only see that in situations where the previous generation is really pushing its hardest. The green team has shown that when the RTX 2080 is maxing out at 55fps with all the bells and RTX whistles enabled in Control, the RTX 3080 is almost twice as efficient. But that's an outlier.

We've capped both the RTX 2080 Super and the RTX 3080 at 60fps at 1440p in a variety of titles and then the perf/W delta is again a lot smaller. That is until you get to ray traced benchmarks—at that point the redesigned RT Cores and extra FP32 units come into play and you see a far more significant boost in efficiency. In Shadow of the Tomb Raider and Metro Exodus, with ray tracing set to Ultra, you get 38 percent and 46 percent higher perf/W respectively.
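Perf/W is just frame rate divided by board power. Capping both cards at the same frame rate reduces the comparison to a ratio of their power draws. Here is a minimal sketch using hypothetical wattages rather than our measured figures. The function names are illustrative.

```python
def perf_per_watt(fps: float, board_power_w: float) -> float:
    """Frames per second delivered per Watt of board power."""
    return fps / board_power_w


def perf_per_watt_gain_pct(new_fps: float, new_w: float,
                           old_fps: float, old_w: float) -> float:
    """Percentage perf/W improvement of the new card over the old one."""
    return (perf_per_watt(new_fps, new_w) / perf_per_watt(old_fps, old_w) - 1.0) * 100.0


# Hypothetical capped run: both cards locked to 60fps, so only power differs.
print(f"{perf_per_watt_gain_pct(60, 200, 60, 250):.0f}% better perf/W")
```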

And how does that new chip-chiller perform? Remarkably well. Remember those days of your reference card cooler maxing out at 90°C and that being fine? Well, no more. The RTX 3080 peaks at around 75°C in my testing, and averages out at 68°C, despite that huge amount of power flooding through the system.

It's not loud either, even when you really push the card. Plus it's got the 0dB fan feature, which means the fans don't even start spinning until the GPU tops the mid-sixties in terms of temperature.

We'll have to see how well it deals with overclocking, however, because I'm still waiting on release drivers and the new OC tools to really get to grips with that. Right now it's just not stable operating much above its standard clock speed.

Verdict

Nvidia GeForce RTX 3080 verdict

Pick your expletive, because the RTX 3080 Founders Edition is ****ing great. Far from just being the reference version of the 'flagship' Ampere graphics card, because of that cooler and redesigned PCB, it is the ultimate expression of the RTX 3080. And if you end up with another version of this fine-ass GPU then I'm afraid you're likely to feel a little hard done by.

And chances are you might have to make do. The Founders Edition cards are not going to be produced in the same volumes as the third-party versions from the likes of Asus, Palit, and Colorful, to name just a few of those we've seen. This is a whole new generation of card, and it's seriously good. That means RTX 3080 FE stock will likely vanish almost as soon as it's launched, and may take a while to return and level out.

But I'd probably suggest you wait, because I think it's worth it for such a good-looking, impressively performant version of the Ampere GPU. It might not necessarily be the coolest, looking at the vast heat-sinks on some of the AIB offerings, but it's probably the one I'd be happiest having in my rig.

The extreme power demands are interesting however, and likely a stick that the competition will want to use to beat Ampere with. Though I would wager any concerns aren't going to sway the average gamer away from picking Ampere for their powerful new GPU. If you want to be particularly green about the power you use gaming then you're not running a desktop PC with a high-end discrete graphics card.

The performance uplift you get over the previous generation is huge, and almost unprecedented. Maybe we've become used to more iterative generational deltas, especially considering the slight difference between the GTX 1080 Ti and RTX 2080, for example. And we're going to continue beating the Ti drum, because it bears repeating—the fact the $699 RTX 3080 can absolutely smash the $1,200 RTX 2080 Ti is rather staggering.

Of course that maybe says a lot about the market right now compared with two years ago. Back then there was nothing the competition could produce to go up against even the GTX 1080 Ti, but with the AMD RX 6000 on the horizon at the end of October, and suggested to be a fair bit faster than the ol' RTX 2080 Ti, Nvidia couldn't have gone for a minor performance update this time around.

There is potentially more competition in the high-end GPU space again, and with the RTX 30-series lineup announced so far it looks like the green team is going for some shock and awe tactics ahead of the upcoming Radeon launch. So, thanks AMD for pushing Nvidia, because we're getting some great cards being released to loom over Big Navi.

Jen-Hsun and Co. has gone big with the RTX 3080, and the result is an outstanding gaming card that sets a new benchmark for both high-end 4K gaming performance and for ray tracing. And honestly that has us salivating over the potential of both the $499 RTX 3070 and the ludicrous potential of the $1,500 RTX 3090.


Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.