Nvidia shows off new features and software for GTX 1080

Ansel, path-traced audio, and simultaneous multi-projection

After the big announcement of the GTX 1080 and GTX 1070 last night, we were able to check out some demos of the cards running a few of the latest games, showing off what some developers are doing with the technology. We also neglected to talk about some of the other features and software that Nvidia discussed, so let's get to it.

First up on the software side, Nvidia has created a new tool and API called Ansel to help artists capture amazing screenshots. Except "screenshot" doesn't do justice to what Ansel can do. It's a free camera utility that you can position anywhere you want, with support for a variety of filters, HDR capture (EXR), and the option to bump up the resolution of the capture.

That last point might sound pretty tame, but let's talk about the resolutions that are actually supported. We're not talking 4K or 8K or anything mundane like that. How does 61440 x 34560 sound? And if you want to capture 360-degree screenshots for people to view on VR headsets, those are supported too.
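For a sense of scale, here's the arithmetic comparing that maximum capture size with a single 4K frame. It's a minimal sketch: the idea of assembling the capture from 4K-sized tiles, and the `tile_grid` and `megapixels` helpers, are illustrative assumptions, not a description of how Ansel is actually implemented.

```python
import math


def tile_grid(target_w: int, target_h: int, tile_w: int, tile_h: int) -> tuple:
    """Columns and rows of tile_w x tile_h tiles needed to cover the target image.

    Hypothetical helper: assumes the capture is stitched from native-size tiles.
    """
    return math.ceil(target_w / tile_w), math.ceil(target_h / tile_h)


def megapixels(width: int, height: int) -> float:
    """Total pixel count in millions."""
    return width * height / 1_000_000


# Maximum Ansel capture size compared with one 3840 x 2160 (4K) frame
cols, rows = tile_grid(61440, 34560, 3840, 2160)
print(f"{cols} x {rows} grid = {cols * rows} tiles")
print(f"{megapixels(61440, 34560):,.0f} megapixels")
```

That works out to a 16 x 16 grid, or 256 times the pixels of a 4K frame, for a total of roughly 2,123 megapixels (a bit over 2 gigapixels).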

Nvidia had a giant printed canvas (about 15m x 3m) created using Ansel, featuring a 2-gigapixel capture from The Witcher 3, to show off a little of what Ansel can do. Other games that have already announced Ansel support include The Division, The Witness, Lawbreakers, Paragon, No Man's Sky, Unreal Tournament, and Obduction. We'll get to that last one later, as it has some other cool tech.

Next up on the new features is Nvidia VRWorks Audio, a true path-traced audio solution. This is something you obviously need to experience in person, but in principle it sounds (erg…bad pun alert) quite reminiscent of AMD's TrueAudio. We've had various forms of 3D audio over the years (Aureal's A3D, for example, used custom ASICs and HRTFs to do 3D audio way back in the late '90s), but VR has suddenly pushed 3D audio back into the limelight.
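To give a rough idea of what a path-based audio engine produces, the sketch below turns propagation paths into an impulse response: each path from the sound source to the listener becomes a delayed, attenuated "tap." This is not the VRWorks Audio API. The `Path` type, the reflectivity value, and the simple inverse-distance falloff are assumptions for illustration, and the hard part (tracing rays through the scene geometry to find the paths in the first place) is left out.

```python
import math
from dataclasses import dataclass, field

SPEED_OF_SOUND = 343.0  # meters per second in air at roughly 20 degrees C


@dataclass
class Path:
    """One propagation path (hypothetical type): source, bounce points, listener."""
    points: list                                      # (x, y, z) positions in meters
    reflectivity: list = field(default_factory=list)  # amplitude kept at each bounce


def tap(path: Path) -> tuple:
    """Return (delay in seconds, amplitude) for a single path."""
    length = sum(math.dist(a, b) for a, b in zip(path.points, path.points[1:]))
    gain = 1.0 / max(length, 1.0)  # inverse-distance falloff, clamped close to the source
    for r in path.reflectivity:
        gain *= r                  # each bounce keeps only part of the amplitude
    return length / SPEED_OF_SOUND, gain


def impulse_response(paths, sample_rate=48_000, duration_s=0.1):
    """Sum every path's tap into a sampled impulse response."""
    ir = [0.0] * int(sample_rate * duration_s)
    for p in paths:
        delay, gain = tap(p)
        i = round(delay * sample_rate)
        if i < len(ir):
            ir[i] += gain
    return ir


direct = Path([(0, 0, 0), (10, 0, 0)])                                # straight line
echo = Path([(0, 0, 0), (5, 5, 0), (10, 0, 0)], reflectivity=[0.7])  # one wall bounce
ir = impulse_response([direct, echo])
```

Convolving a dry sound with `ir` would place the direct sound and its echo at the right delays and levels. A real engine would trace many more paths and also model frequency-dependent absorption, which this sketch ignores.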

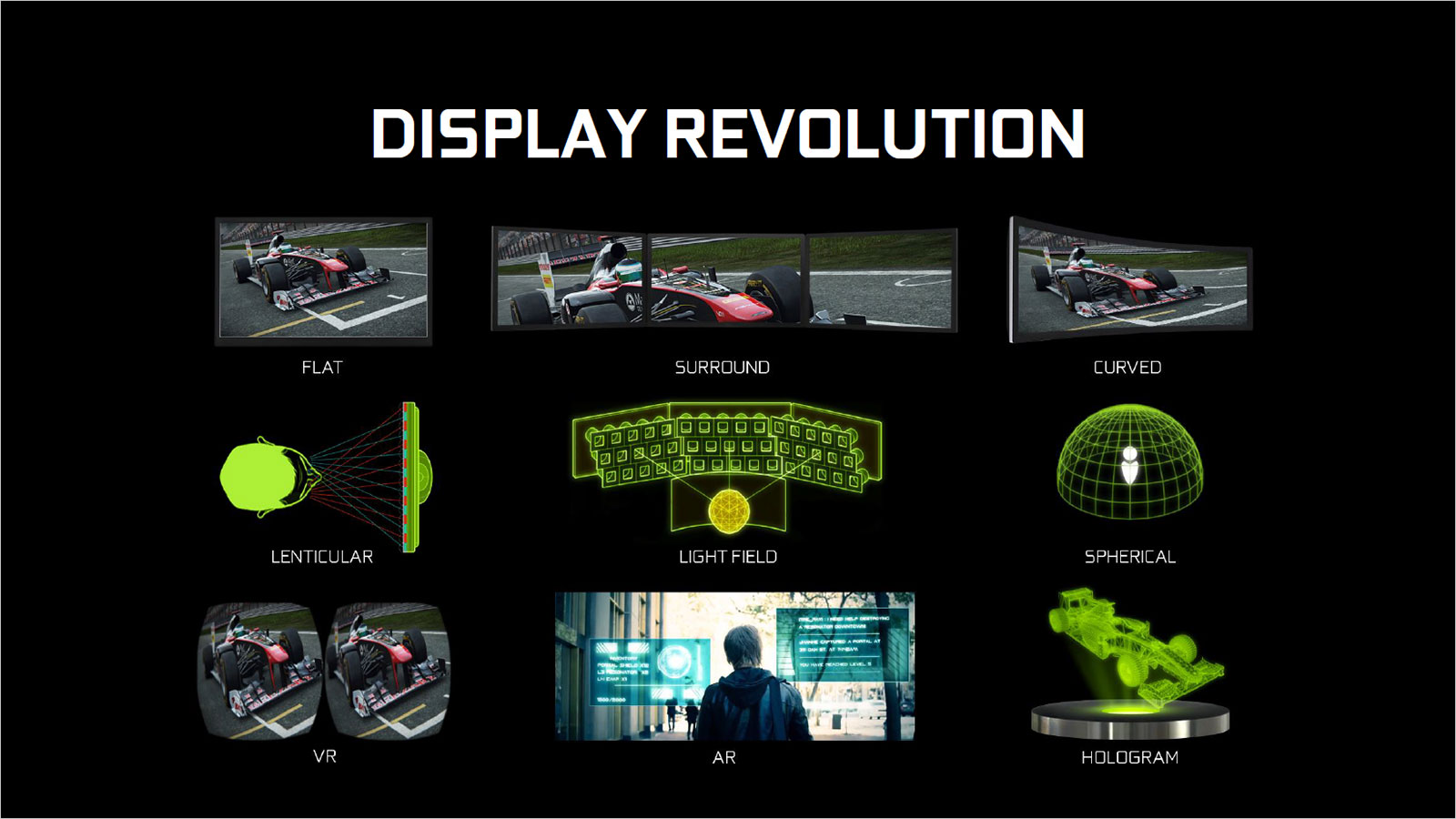

The final item discussed was new display technology. HDR output is now supported, for those of you with ultra-high-end HDTVs, and Nvidia announced that its new Pascal chips support single-pass simultaneous multi-projection with up to 16 independent viewports. This isn't entirely new, as Maxwell 2.0 already supports something similar with its multi-res shading and multi-viewport technologies, but apparently there are some differences at the hardware level that make the new method "better."
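Conceptually, single-pass multi-projection means the scene's geometry is processed once and each vertex is then projected into several viewports, instead of the whole scene being submitted again for every view. The Python below only illustrates that idea on the CPU. It is not Nvidia's API, and the yaw-only viewports and the `project` and `multi_project` helpers are simplifying assumptions.

```python
import math

MAX_VIEWPORTS = 16  # the per-pass viewport limit Nvidia quoted for Pascal


def project(vertex, yaw_deg, focal=1.0):
    """Project a camera-space vertex into a viewport turned yaw_deg around the
    vertical axis (positive yaw turns toward +x). Each viewport looks down +z.
    Returns normalized (x, y), or None if the vertex is behind that viewport."""
    x, y, z = vertex
    a = math.radians(yaw_deg)
    xr = x * math.cos(a) - z * math.sin(a)  # rotate into the viewport's frame
    zr = x * math.sin(a) + z * math.cos(a)
    if zr <= 0:
        return None
    return focal * xr / zr, focal * y / zr


def multi_project(vertex, yaws_deg):
    """One vertex in, one projected position per viewport out."""
    if len(yaws_deg) > MAX_VIEWPORTS:
        raise ValueError(f"at most {MAX_VIEWPORTS} viewports per pass")
    return [project(vertex, yaw) for yaw in yaws_deg]


# A point straight ahead, seen by three viewports at -45, 0, and +45 degrees
print(multi_project((0.0, 0.0, 1.0), [-45.0, 0.0, 45.0]))
```

The straight-ahead point lands at the center of the middle viewport, near the right edge (x of about 1) of the viewport turned -45 degrees, and near the left edge (x of about -1) of the one turned +45 degrees.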

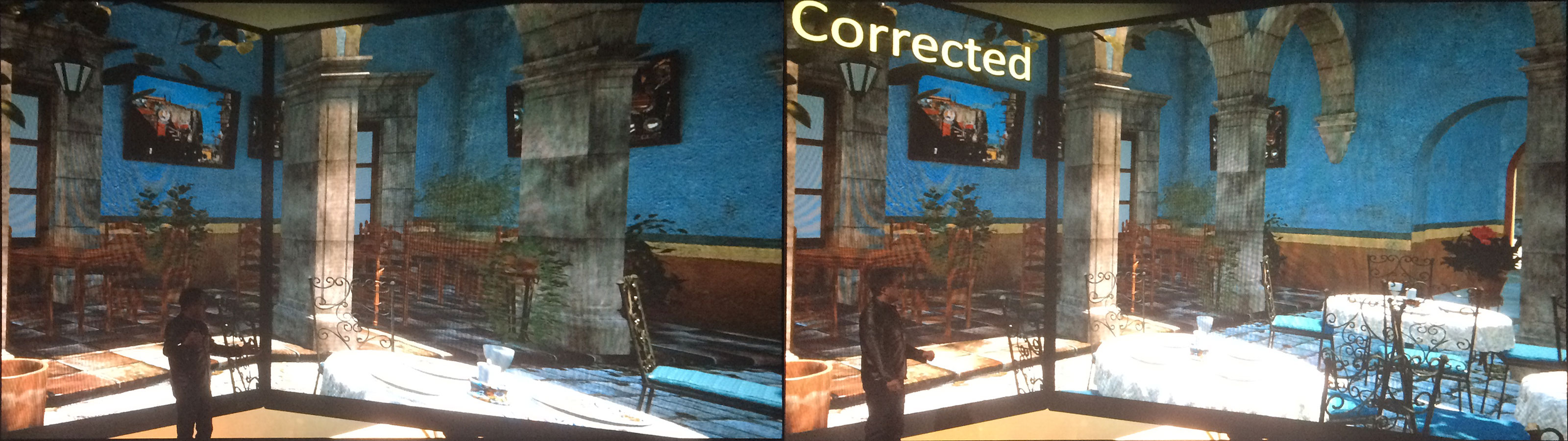

One example of what simultaneous multi-projection can do is perspective-correct surround displays. Normally, if you have a triple-wide display configuration, games treat it as one flat surface. If you angle the two side displays inward, you get a funky break in the view. Multi-projection allows games to adapt to the position of the displays so that things look correct.
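The geometry behind that break is easy to sketch. Assuming the viewer's eye is centered on the middle display and each side display is hinged at the middle display's edge, the function below (a hypothetical helper, not part of any driver API) computes which horizontal angles the right-hand display actually covers.

```python
import math


def right_display_span(width_m, distance_m, inward_deg):
    """Horizontal angles (degrees from straight ahead) covered by the right display.

    Assumes three equal displays of width_m, the eye centered on the middle one at
    distance_m, and the right display hinged at the shared edge and turned toward
    the viewer by inward_deg (0 = flat, the layout games normally assume).
    """
    t = math.radians(inward_deg)
    inner_x, inner_z = width_m / 2, distance_m     # edge shared with the middle display
    outer_x = inner_x + width_m * math.cos(t)      # far edge swings toward the viewer
    outer_z = distance_m - width_m * math.sin(t)
    inner = math.degrees(math.atan2(inner_x, inner_z))
    outer = math.degrees(math.atan2(outer_x, outer_z))
    return inner, outer


flat = right_display_span(0.6, 0.6, 0)     # what a flat projection assumes
angled = right_display_span(0.6, 0.6, 45)  # where the panel physically sits
print(f"flat:   {flat[0]:.1f} to {flat[1]:.1f} degrees")
print(f"angled: {angled[0]:.1f} to {angled[1]:.1f} degrees")
```

With a 0.6m-wide panel viewed from 0.6m, turning it inward by 45 degrees moves its far edge from roughly 56 to roughly 76 degrees off-axis, so a flat projection stretches about 30 degrees of the scene across a panel that physically spans about 50. Giving that display its own viewport, with a frustum matching its physical span, removes the break.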

Another use case for multi-projection is one we've heard before: improving VR performance. Everest VR already supports multi-res shading, and support is coming to Unreal Engine 4 as well. In VR, the GPU renders two viewports of the 3D world, one for each eye, which are then warped so that everything looks correct when you view them through the headset's lenses. The warping process ends up discarding a lot of data, and multi-res shading is able to pre-warp sections of the display so that the GPU renders 33% to 50% fewer pixels.
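Why does the warp discard data? A common way to model the pre-warp is a polynomial radial (barrel) distortion, where an output pixel at radius r samples the rendered image at a somewhat larger radius. The sketch below uses that generic model with made-up coefficients `K1` and `K2` (real headsets ship calibrated values, and this is not any vendor's shader) to estimate how many rendered pixels get squeezed into each output pixel.

```python
K1, K2 = 0.22, 0.24  # illustrative distortion coefficients, not from a real headset


def source_radius(r):
    """Radius in the rendered image sampled by an output pixel at radius r.
    Radii are normalized so 0 is the lens center and 1 is the edge of the view."""
    return r * (1 + K1 * r**2 + K2 * r**4)


def rendered_pixels_per_output_pixel(r):
    """Local area ratio of the warp: radial stretch times tangential stretch.
    Values above 1 mean several rendered pixels collapse into one output pixel."""
    radial = 1 + 3 * K1 * r**2 + 5 * K2 * r**4  # d(source_radius)/dr
    tangential = 1 + K1 * r**2 + K2 * r**4       # source_radius(r) / r
    return radial * tangential


for r in (0.0, 0.5, 1.0):
    print(f"r = {r:.1f}: {rendered_pixels_per_output_pixel(r):.2f} rendered pixels per output pixel")
```

With these coefficients the ratio is 1 at the center, about 1.33 halfway out, and about 4.18 at the edge, so most of the detail rendered near the edges never makes it to the display. Rendering those regions at lower resolution from the start is the waste multi-res shading goes after.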

With Maxwell 2.0, multi-res shading was demonstrated using nine "zones" for each eye: four corners, four sides, and the center (unwarped) area. When discussing the same technique for Pascal and the GTX 1080, Nvidia showed four zones per eye, angled somewhat differently. The bottom line is the same, however: fewer pixels rendered without a visible loss in image quality.
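The savings from a nine-zone layout are simple to estimate. Assuming the grid is separable (every zone in a column shares one horizontal scale and every zone in a row shares one vertical scale) and that all the outer columns and rows use the same scale, the fraction of pixels rendered is the product of two one-dimensional terms. The `center` and `scale` values below are illustrative, not Nvidia's presets.

```python
def pixel_fraction(center: float, scale: float) -> float:
    """Fraction of full-resolution pixels rendered with a 3 x 3 multi-res grid.

    center: fraction of the width (and height) covered by the full-res center zone.
    scale:  per-axis resolution scale used for the outer columns and rows.
    Corner zones end up scaled on both axes, side zones on one axis only.
    """
    per_axis = center + (1 - center) * scale
    return per_axis * per_axis


for center, scale in [(0.6, 0.5), (0.5, 0.5)]:
    saved = 1 - pixel_fraction(center, scale)
    print(f"center {center:.0%}, outer scale {scale:.0%}: {saved:.0%} fewer pixels")
```

These two settings save 36% and about 44% of the pixels, in the same ballpark as the savings Nvidia quoted; a larger center zone or a gentler outer scale trades some of that saving for sharper edges.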

But there are other uses of this technology, which brings us back to Obduction, one of the cooler demos in terms of the technology being used. Obduction is something of a modern take on puzzle games in the vein of Myst—and it comes from the creators of Myst and Riven—only it's rendered in a real-time 3D engine instead of using pre-rendered backdrops. It leverages the simultaneous multi-projection (aka SMP, but not symmetric multi-processing) feature of the 1080 to do something akin to foveated rendering.

The game was running at 4K but only hitting about 50 fps, just a bit below the desired 60 fps for a 60Hz display. At the flip of a switch, the game renders the outer portions of the display at a lower resolution and stretches them, leaving the main section of the display (where you're most likely focused during gaming) at full quality. Frame rates jumped from 45-50 fps without multi-projection to over 60 fps, and while there was a slight loss in quality, it was only really visible if you were stationary and carefully looking for the change. I was able to talk with the developers a bit, and they indicated that their game is one of the first to use multi-res shading in this fashion, and that it will work on both Maxwell 2.0 and Pascal cards.
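Those frame rates translate into a concrete per-frame time budget. The snippet below is just that arithmetic, with hypothetical helper names: going from 45-50 fps to 60 fps means shaving a fixed number of milliseconds off every frame, which is what rendering the periphery at lower resolution has to buy back.

```python
def frame_time_ms(fps: float) -> float:
    """Milliseconds available per frame at a given frame rate."""
    return 1000.0 / fps


def ms_to_cut(current_fps: float, target_fps: float) -> float:
    """Milliseconds that must come off each frame to reach target_fps."""
    return frame_time_ms(current_fps) - frame_time_ms(target_fps)


for fps in (45, 50):
    cut = ms_to_cut(fps, 60)
    print(f"{fps} fps -> 60 fps: cut {cut:.1f} ms ({cut / frame_time_ms(fps):.0%} of the frame)")
```

That's roughly 3.3 to 5.6 ms per frame, or about 17% to 25% of the frame time, assuming the rest of the frame's cost stays the same.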

One final thing to show off before we go dark (meaning we'll be under NDA embargo) is Nvidia's VR Fun House tech demo. It leverages massive amounts of PhysX, HairWorks, and other Nvidia technologies, and it's apparently more than a little demanding. How demanding? Nvidia had to use three (yes, three) GTX 1080 cards to keep Fun House running smoothly at 90 fps. The system was from OriginPC, and it was doing VR SLI on two of the GPUs, with the third GPU handling all the PhysX calculations. I suspect the dedicated PhysX card was overkill and that a lower-end GeForce card would have handled the physics just fine, but it's still an impressive tour de force for Nvidia and Pascal.
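Why split the eyes across GPUs? At 90 fps, the whole frame has to be finished in about 11.1 ms. The sketch below makes the simplest possible assumption, that both eyes cost the same and scale perfectly across two GPUs with no shared work or transfer overhead, which real VR SLI won't quite achieve; `per_eye_budget_ms` is a hypothetical helper.

```python
def per_eye_budget_ms(refresh_hz: float, render_gpus: int) -> float:
    """Time each eye's render may take if the two eyes are split across GPUs.

    Idealized: assumes equal per-eye cost and perfect scaling, and ignores work
    shared between eyes (shadow maps, physics) and GPU-to-GPU copies.
    """
    if render_gpus < 1:
        raise ValueError("need at least one rendering GPU")
    frame_ms = 1000.0 / refresh_hz
    eyes_per_gpu = 2 / min(render_gpus, 2)  # only two eyes to split, so cap at two GPUs
    return frame_ms / eyes_per_gpu


print(f"one GPU, both eyes:   {per_eye_budget_ms(90, 1):.1f} ms per eye")
print(f"VR SLI, one eye each: {per_eye_budget_ms(90, 2):.1f} ms per eye")
```

Under those assumptions, VR SLI doubles the time each eye gets, from about 5.6 ms to about 11.1 ms. A third GPU adds no rendering time in this simple model, since there are only two eyes to divide between cards.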

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.