Laptop makers just aren't interested in discrete Radeon GPUs and I really want AMD to do something about that, even though it won't

You'd think it would hurt, spending all that time and money only for nobody to want the end result.

This month I've been testing: A small mountain of ergonomic keyboards. Some aren't especially ergo at all, whereas others sit in a specialised niche all to themselves. I really love the weird and wonderful ones, even if it does feel like learning how to type all over again.

I've been following the events of the CES since the mid-1990s and there's always something that stands out or sticks in your memory. Take the 2005 event, when Microsoft tried to demonstrate the full abilities of the Xbox 360 and failed rather miserably, or the 2011 one, where 3DTVs were being hyped as the future of entertainment. Yeah, kinda got that one wrong. For this year's event, the biggest thing that I noticed, other than AI being plastered onto absolutely everything, was the total lack of new gaming laptops using a discrete AMD Radeon chip. As in none, zip, zero, nada.

If I'm honest, I don't know what's going on with AMD's graphics division but I am convinced of one thing: It's not interested in massively expanding it. Sure, its revenue stream has been the steadiest of the four sectors (client, data center, gaming, and embedded) since the company restructured its divisions in 2021. It makes a steady profit, too, whereas the others are all over the place.

But that's entirely down to AMD supplying the APU that powers every PlayStation and Xbox out there. While Microsoft and Sony sell their consoles for almost no profit, AMD certainly doesn't when it comes to its chips. So you can't rely on the financial results of the gaming sector to determine how well its graphics division is doing.

Instead, you just have to look at how popular its products are, and excluding the custom processors for consoles and the Steam Deck, you're looking at Radeon graphics cards and discrete laptop chips. The former are well received because they're fast and they're a lot cheaper than the competition. Sure, they don't offer nifty AI features nor are they top-notch when it comes to ray tracing, but they're fine for everything else.

Determining exactly how popular Radeons are isn't something a mere mortal such as myself can do. Like everyone else, I have to rely on reports from market analysts, such as Jon Peddie Research, and figures from Steam's hardware surveys. Averaging the past five months of the latter's findings, AMD's graphics chips account for just 10.1% of all the GPUs recorded, and before anyone thinks it varies a lot, the standard deviation was just 1.5%.

Jon Peddie's findings paint a rosier picture, with AMD holding 17% of the GPU add-in board market during Q2 and Q3 of 2023. That's not great but it's a lot better than 10%, that's for sure.

But that's for graphics cards, and the thought of trying to dig through all of the available data, just to glean what the story is for discrete laptop GPUs, is far too gloomy a prospect. So instead, let's just take stock of all the new gaming laptops announced at CES 2024 that had an AMD Radeon, instead of an Nvidia GeForce, for graphics duties.

There. Done.

Yep, that's right: There were none. Or if there were, they certainly weren't from Asus, Acer, Alienware, Razer, MSI, Gigabyte, Lenovo, or any of the main gaming laptop manufacturers. How on Earth can that possibly happen? If you transfer the popularity of Radeon cards across to the mobile sector, you'd expect to see at least a few here and there.

But for some reason, nobody is using them. AMD's CPUs and APUs, absolutely, but just not its GPUs. Let's see if we can figure out why.

To begin with, think about what a laptop designer wants from a discrete GPU. It needs to be as small as possible, use as little power as possible, maintain performance even when clocked down low, and support all of the latest graphics technology. Space is at a premium inside a laptop, as is power, because the latter ultimately all converts into heat.

A small, power-sipping chip doesn't require a large cooling solution, which saves space and makes the whole laptop cheaper to manufacture.

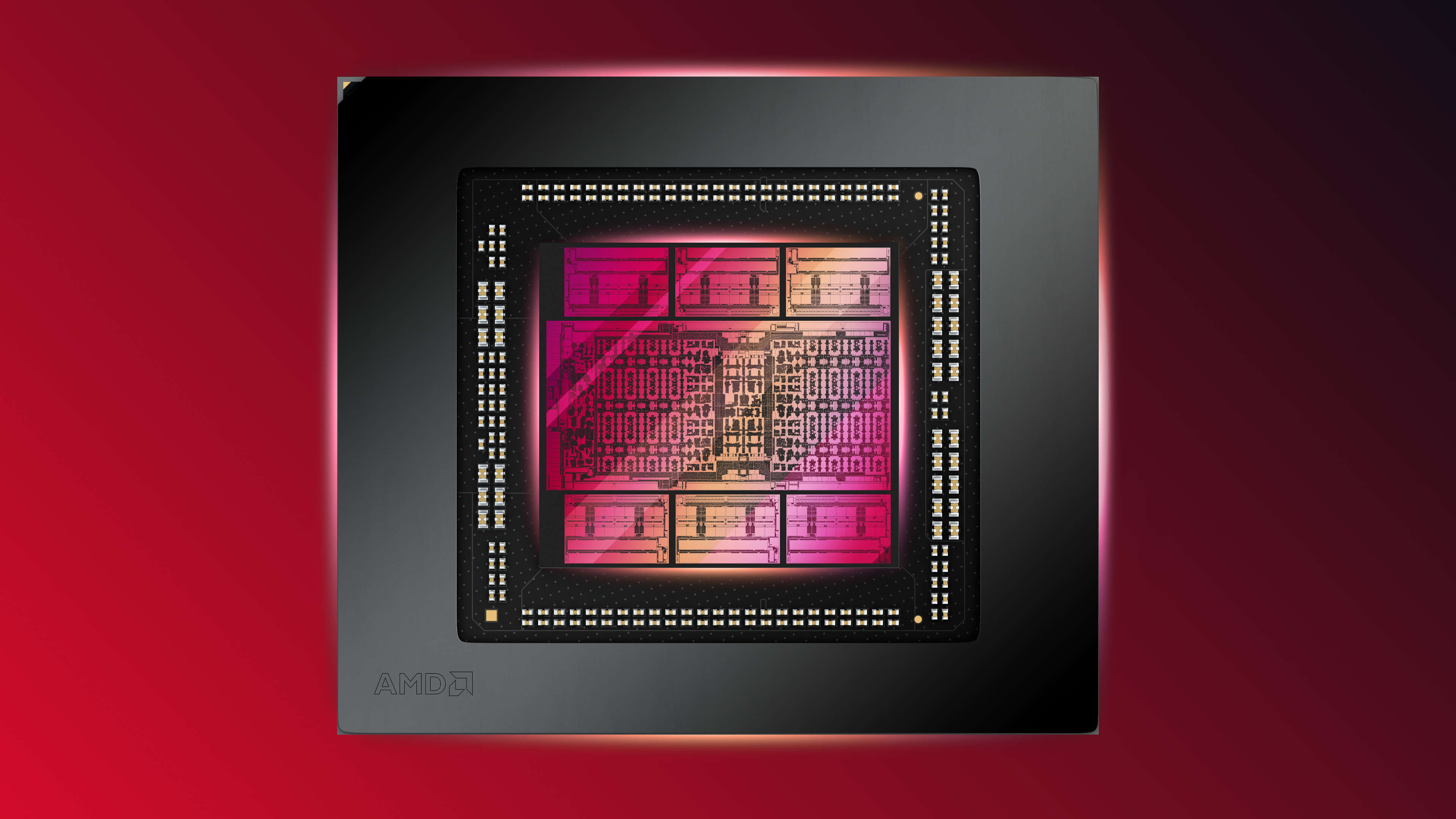

AMD has five laptop models, based on its RDNA 3 architecture, with the range-topping Radeon RX 7900M sporting 72 Compute Units (CU), 64MB of L3 cache, and 16GB of GDDR6. It also has a power limit of up to 180W. It uses the same Navi 31 GPU as found in the Radeon RX 7900 series, albeit with lots of shaders disabled.

Unfortunately, there's no getting around the fact that it's a big chip (well, chiplets). At 529 square millimetres in total, it takes up 40% more area than Nvidia's GeForce RTX 4090 Mobile (which uses the AD103, the same GPU in the RTX 4080 graphics card). That has a typical power limit of 150W, although it can be set higher by laptop vendors.

So AMD's best mobile gaming GPU is bigger and potentially more power-hungry than Nvidia's, and although we haven't tested it (simply because we can't!), I can't see it performing as well, as it has fewer shaders and they're clocked slower. It's therefore not too hard to see why the RX 7900M is not being used in any of the latest gaming laptops.

But what about the other models, the Radeon RX 7600M, 7700S, and 7600S? Again, no laptop maker appears to be interested in those, and I suspect the reasons are the same as for the 7900M: Not small enough, not power efficient enough, not fast enough.

And there's the fact that Nvidia dominates the discrete graphics card market, too. Laptop vendors will naturally want to produce a gaming-focused model that matches the customer's overall awareness of the GeForce brand and, outside of the enthusiast sector, that's all that anyone talks about.

I've lost count of the number of people I've spoken to about AMD and Radeon, only to be met by glazed expressions. But mention GeForce and you're instantly understood.

AMD has the ultra-portable gaming sector almost entirely to itself, with the vast majority of handheld PCs sporting one of the red team's APUs. The only handheld product of note powered by Nvidia is the Nintendo Switch. Why is AMD the top choice here? Easy, the chips are small, fast, use very little power, and provide exactly the right amount of performance needed for such devices.

So clearly AMD has the know-how and resources to make a perfect mobile GPU, just not one that sits by itself in a laptop, rather than being embedded in an APU. Gaming laptops aren't a niche product nor is AMD lacking the money required to make a concerted effort to battle Intel and Nvidia head-on in that sector.

To be blunt, I'm getting a little tired of reading how AMD's next GPU architecture will change everything around or the next Radeon will be the one to beat Nvidia. I don't think it matters, as AMD already has the necessary technology, as evidenced by the capabilities of the latest consoles and handheld PCs.

What it all comes down to is money, of course. AMD isn't averse to spending a few billion dollars when it sees the potential in something (e.g. the purchase of FPGA masters Xilinx) but I suspect it just looks at its gaming division, sees the nice revenue stream from the consoles, and only wants to put enough effort into developing GPU architectures that are best suited for data center monsters, APUs, and other custom projects.

At times, graphics cards and discrete laptop chips just seem to be a handy by-product of it all, not a primary focus for AMD's gaming sector. But for the sake of competition and hauling Nvidia's sticky mitts off the GPU revenue fountain, I really do wish AMD would do something about it all.

Except it won't, of course. It is a business, after all, and has a responsibility to its shareholders to ensure it invests its resources wisely, so it will only make decisions based entirely on maintaining its financial status quo, at the very least. Why rock the boat, when the good ship HMS Gaming Division is chugging along nicely?

It certainly works well for AMD, just not so much for us PC gamers, especially those who love a good gaming laptop.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long-defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?