Intel's team-up with AMD produces the fastest integrated graphics ever

But outside of laptops and NUCs, you're still better off with a dedicated graphics card.

Intel's announcement of a partnership with AMD to produce a single CPU package with integrated Vega graphics made waves in late 2017. Even with the final Hades Canyon NUC in my hands, I'm still surprised this ever happened. Hell may not be frozen over, but temperatures have certainly dipped. It's like finding the fabled unicorn grazing in your backyard.

More critically, while gamers are always hungry for the best graphical performance, Intel has been working to improve its integrated graphics offerings for several years. Teaming up with AMD feels like a slap in the face to the engineers working on Intel's Iris Graphics solutions.

Yet here we are, and we're all curious to see what the new processors can do. Intel has five Kaby Lake-G parts, all of which are the same other than clockspeeds, power levels, and the number of compute units that are enabled in the GPU. That means you get a 4-core/8-thread CPU paired with either an RX Vega M GH (for Graphics High, 24 CUs) or RX Vega M GL (Graphics Low, 20 CUs). Intel's HD Graphics 630 is also available, should the system builder choose to use it.

That brings me to today's testing, with a chip mostly built for laptops sitting in a NUC—Intel's Next Unit of Computing, the diminutive form factor the company created back in 2013. Apple's future MacBook Pro looks like a prime candidate for using Kaby Lake-G when it gets announced, and Apple was a major reason for Intel's Iris Graphics in the first place. With rumors of Apple planning to move to its own in-house processor designs, however, this could be a short-lived relationship. Dell and HP have also announced laptops with KBL-G processors.

Intel's NUC serves as something of a proof of concept, showing what we can expect from the new processors. NUCs are built off mobile solutions, but they're less complex than an actual laptop since they don't need to run from battery. A few extra watts of power use on a system that's plugged in doesn't matter much, but with a laptop it could mean the difference between nine hours of battery life and four hours of mobility. In a sense, the NUC should give us the maximum level of performance we can expect from laptops that use the same processor.

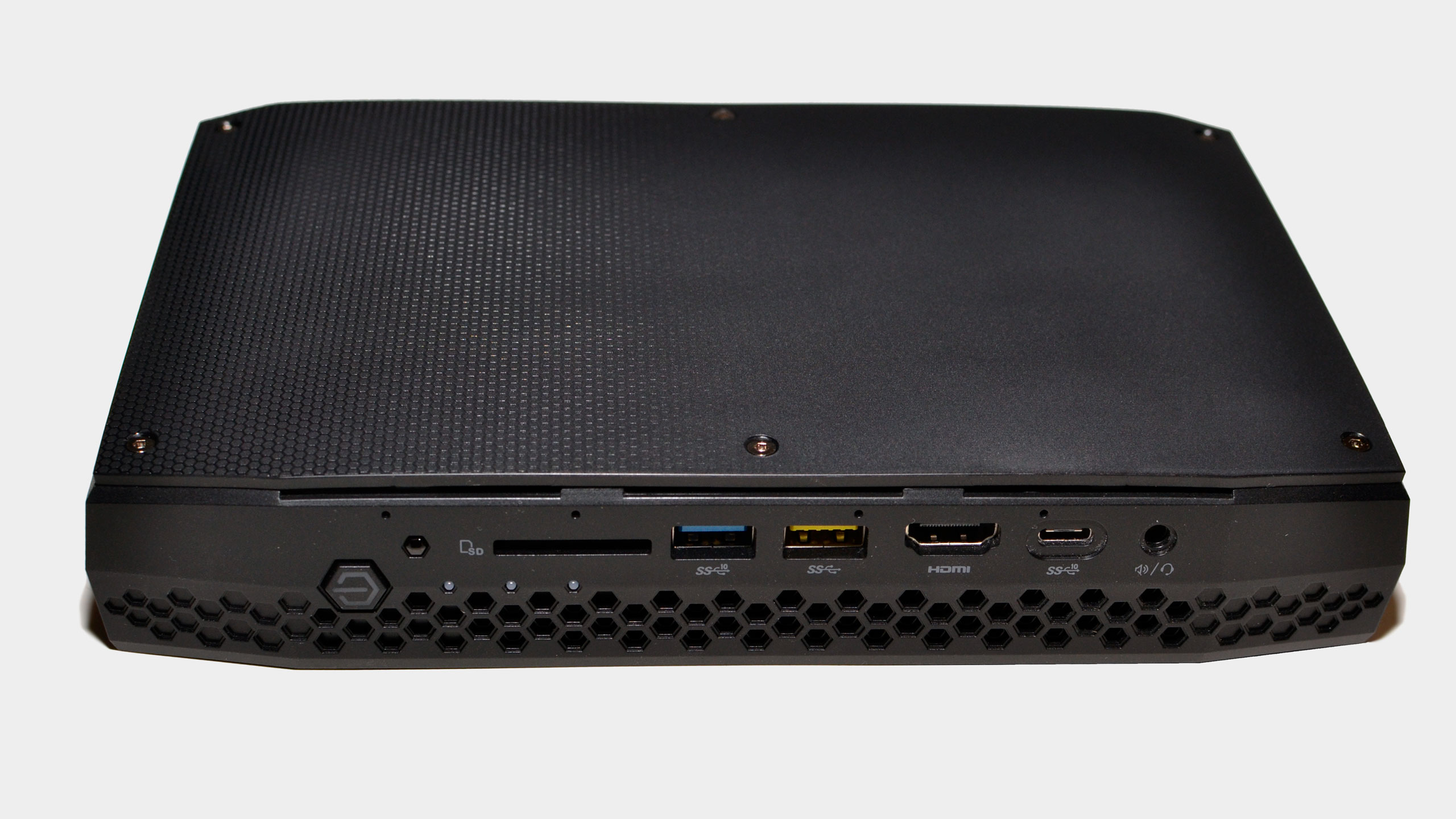

I admit that the Hades Canyon NUC is pretty cool, with Intel's lit-up skull icon on the top. (You can change the color of the skull, eyes, and power button, or turn the lighting off if you want something a bit less conspicuous.) Then you get to the price: $910 is what Intel recommends for the i7-8809G, with the barebones NUC typically going for over $1,000. The i7-8705G model NUC is more palatable at $749 but loses a chunk of graphics muscle. Either way, you still need to add your own memory and storage to get a working system. That's almost the equivalent of a decent gaming notebook, without the display, keyboard, and battery. But assuming you're okay with the cost, let's talk about what you get.

The NUC8i7HVK packs a whopping six 4K-capable outputs into the form factor, coming via two Thunderbolt 3 ports (USB Type-C), two mini-DP 1.2 ports, and two HDMI 2.0a ports. Beyond those, you also get five USB 3.1 Gen1 Type-A ports, one each USB 3.1 Gen2 Type-A and Type-C, an SDXC reader, audio output for speakers or TOSLINK, another audio port for headsets or additional speakers, two gigabit Ethernet ports, and infrared support.

Internally, the NUC supports two M.2 slots, with both NVMe and SATA support, and has two SO-DIMM slots. It also includes Intel's 8265 802.11ac solution, capable of up to 867Mbps, which is good but not as impressive as Intel's latest 2x2 160MHz 802.11ac solutions. Still, I saw throughput of up to 700Mbps during testing.

The box itself is well built and stays cool and quiet during use. Maximum power draw for the higher performance CPU is 100W, much like a decent gaming notebook. With no screen or other parts in the way, the 1.5-inch thick chassis has plenty of room for airflow. Throughout my testing, noise levels and temperatures were never a problem. The CPU cores and Vega graphics share the 100W as needed, and while I have seen system power use go beyond 100W, it's tough to say how much of that is the CPU and how much comes from other items like RAM, storage, and the motherboard. The power brick is rated at up to 230W, regardless.

The compact size means the NUC can potentially go places other PCs fear to tread. Intel had a prototype VR vest that used the NUC along with a 300Wh electric bicycle battery, hooked up to Microsoft's Mixed Reality dev kit and running Arizona Sunshine. It was awesome to be free of the wires you normally contend with, but the vest was quite bulky and still only managed about two hours of use between charges. In other words, like many things VR, it felt more like a publicity stunt for the time being. Putting the NUC in your home theater makes more sense, but similar functionality can be had from smaller and less expensive devices.

Assuming you do have a good use case, though, how does the NUC, and by extension the Intel Kaby Lake-G processor family, perform? This is what we can expect from laptops based off these unicorn processors.

Radeon RX Vega M GH graphics performance

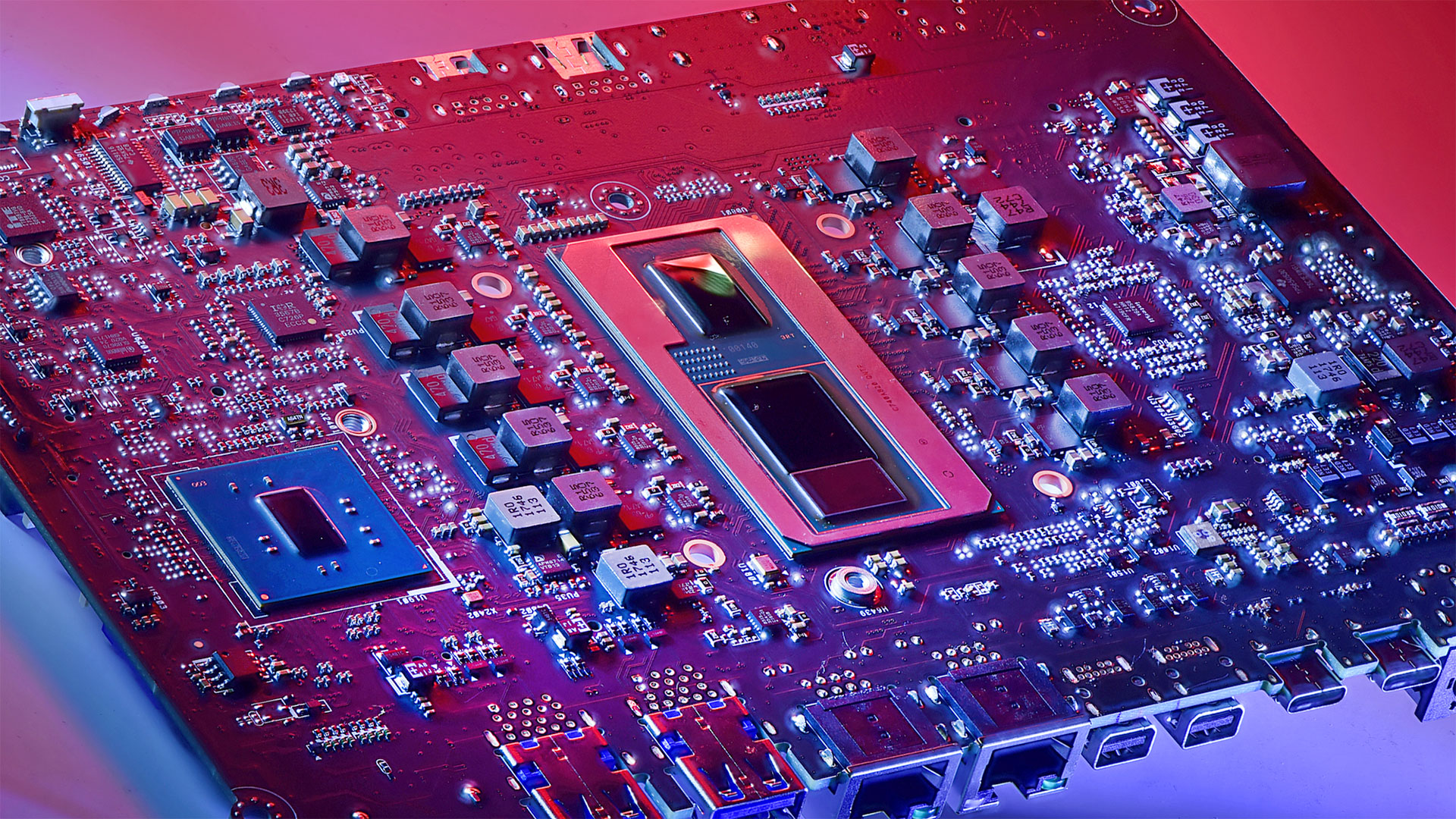

The real star of this show is the integrated Radeon RX Vega M GH, which is the most powerful integrated graphics solution we've ever seen. Much of that is thanks to the inclusion of a 4GB HBM2 stack, as traditionally integrated graphics solutions share system memory and bandwidth, which severely limits maximum performance. With 204.8GB/s of bandwidth, the Vega M shouldn't have any such problems, and in practice it ends up being more like a mainstream dedicated GPU rather than an integrated graphics solution.
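To see where a figure like 204.8GB/s comes from, here is a minimal sketch of the usual peak-bandwidth arithmetic. The bus width and per-pin data rate are assumptions not stated in this review (a single 1024-bit HBM2 stack at 1.6Gb/s per pin), as is the dual-channel DDR4-2400 configuration used for comparison with a typical shared-memory integrated GPU.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s: number of pins times per-pin rate (Gb/s), divided by 8 bits per byte."""
    return bus_width_bits * data_rate_gbps_per_pin / 8


# Assumed (not from the review): one 1024-bit HBM2 stack at 1.6 Gb/s per pin.
hbm2 = peak_bandwidth_gb_s(1024, 1.6)

# Assumed comparison point: dual-channel DDR4-2400 (2 x 64-bit at 2.4 Gb/s per pin),
# which an ordinary integrated GPU would also have to share with the CPU.
ddr4 = peak_bandwidth_gb_s(128, 2.4)

print(f"HBM2 stack:        {hbm2:.1f} GB/s")
print(f"DDR4-2400 (dual):  {ddr4:.1f} GB/s")
print(f"Ratio:             {hbm2 / ddr4:.1f}x")
```

Under those assumptions, the dedicated stack offers several times the bandwidth of system memory, and the GPU doesn't have to share it with the CPU cores.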

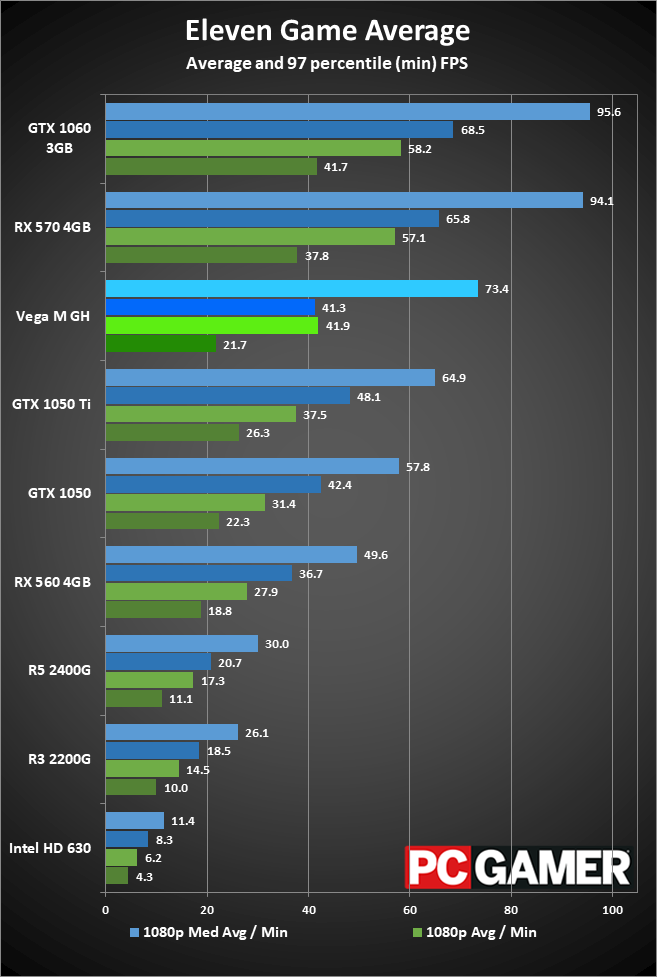

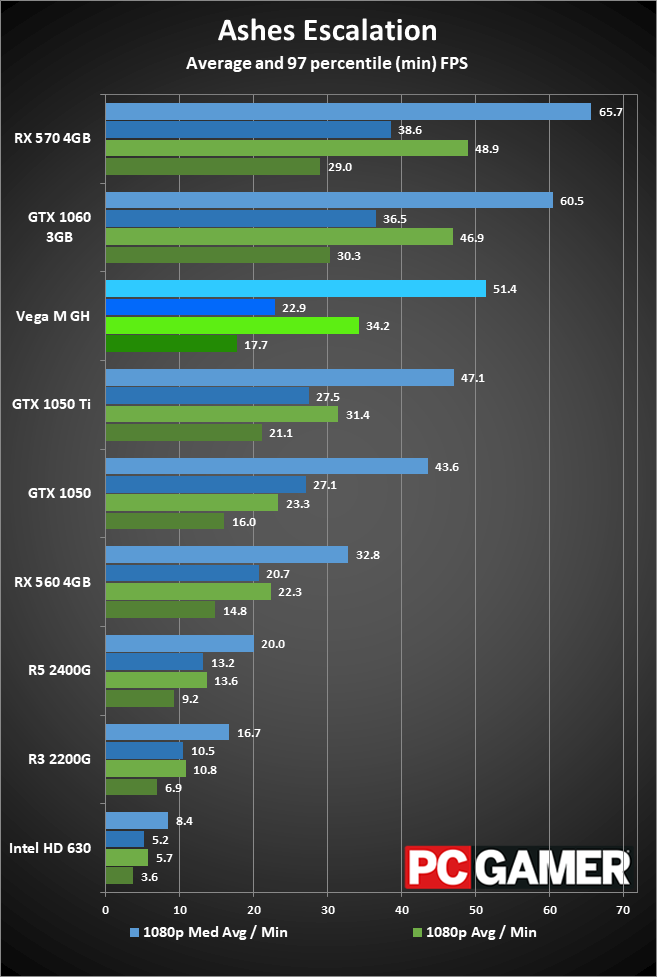

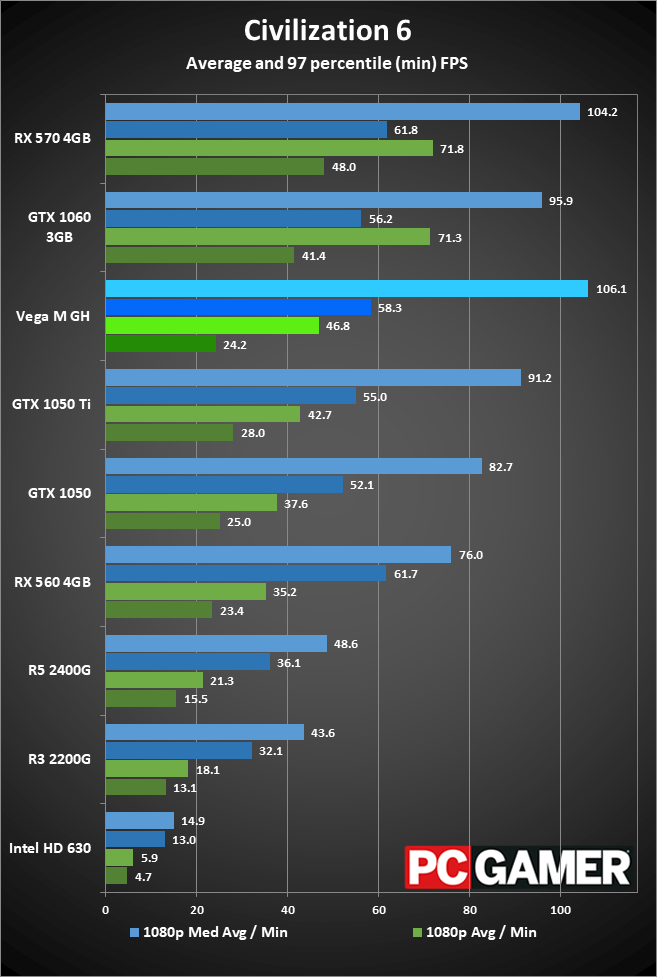

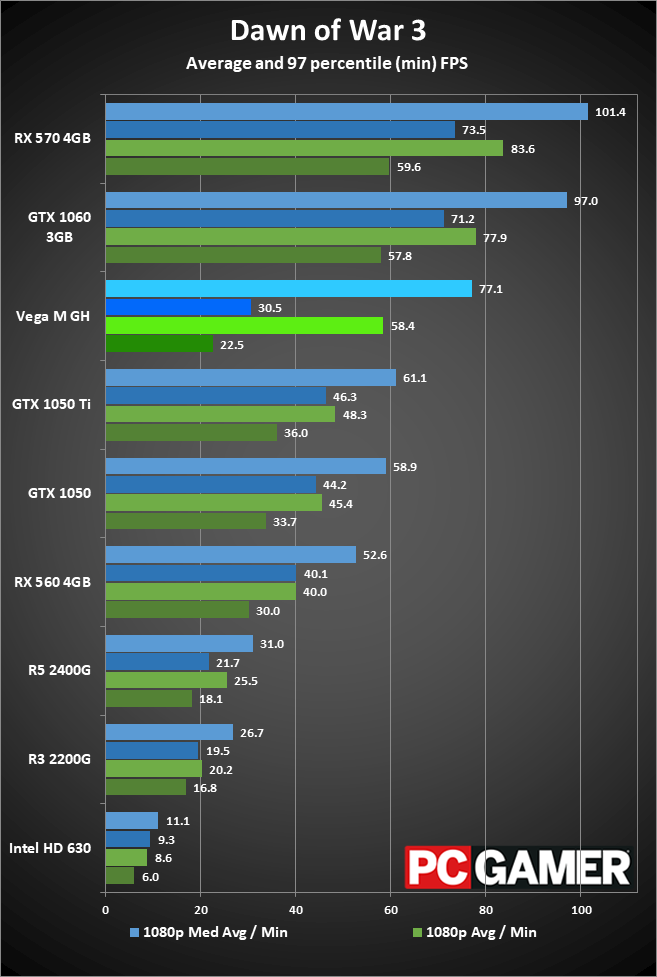

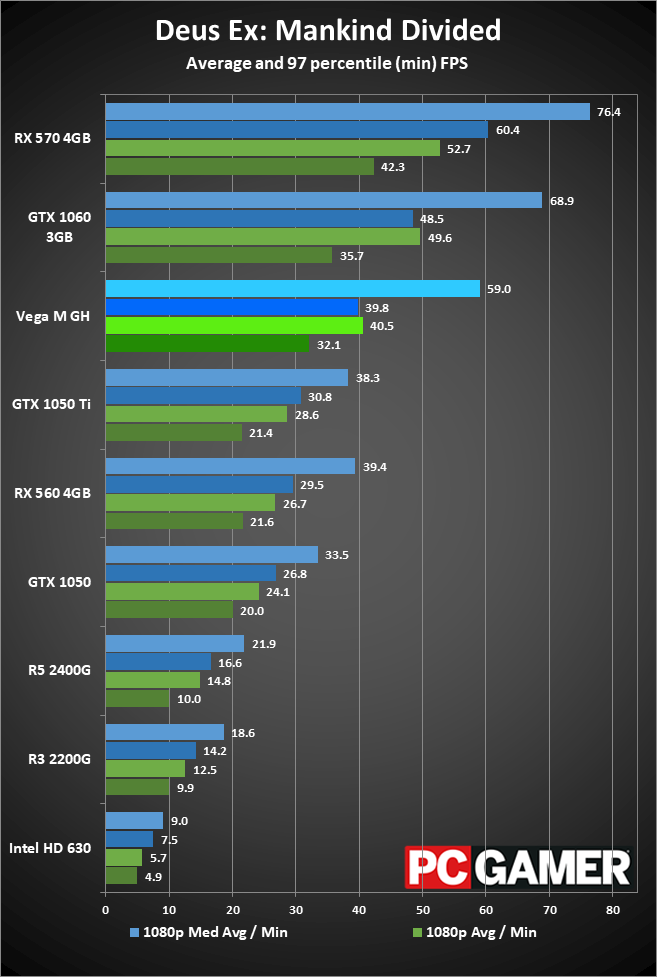

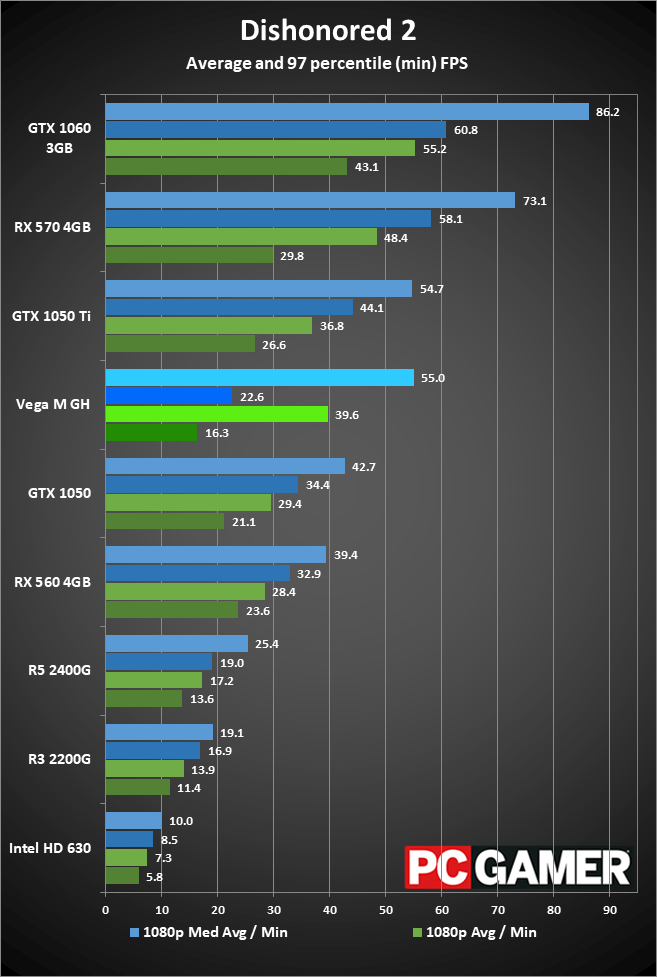

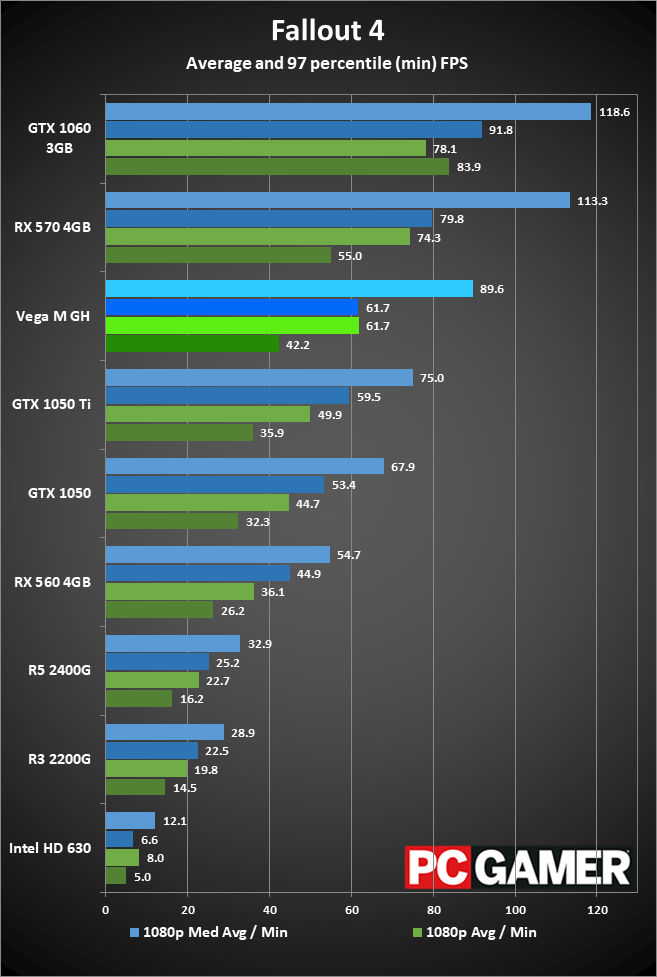

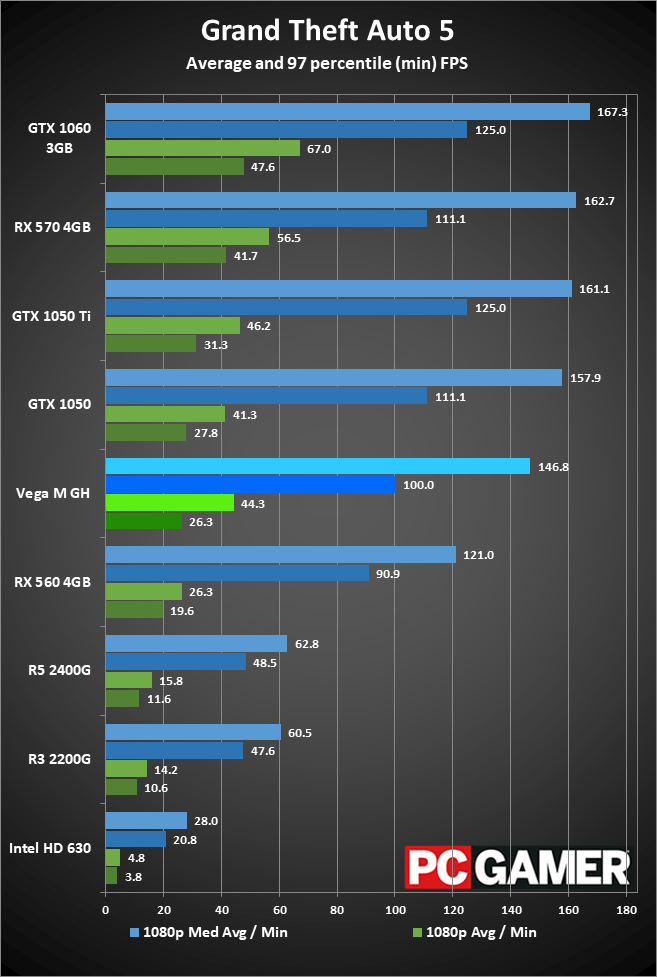

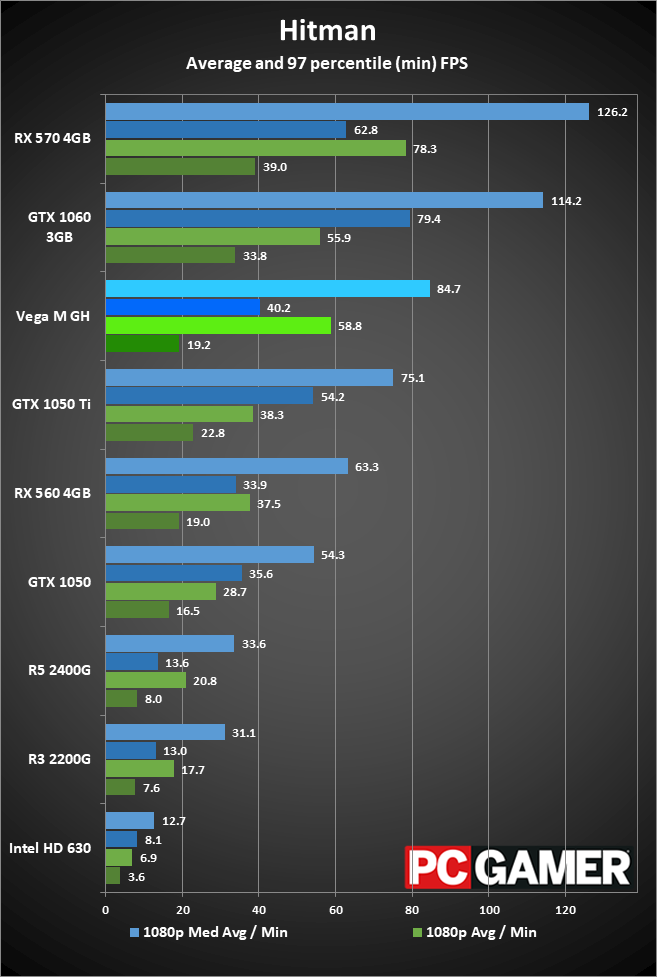

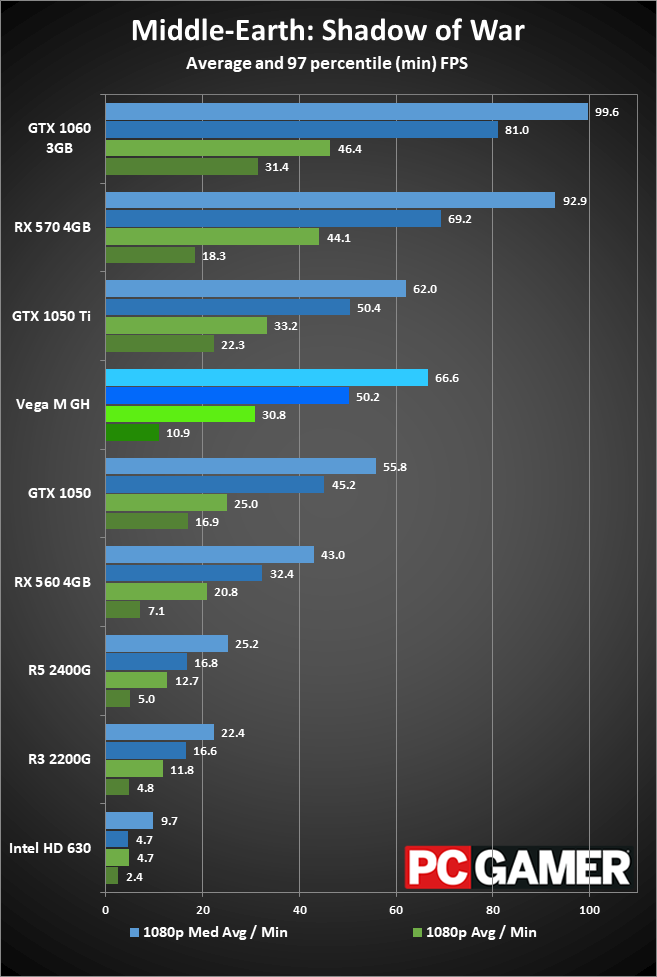

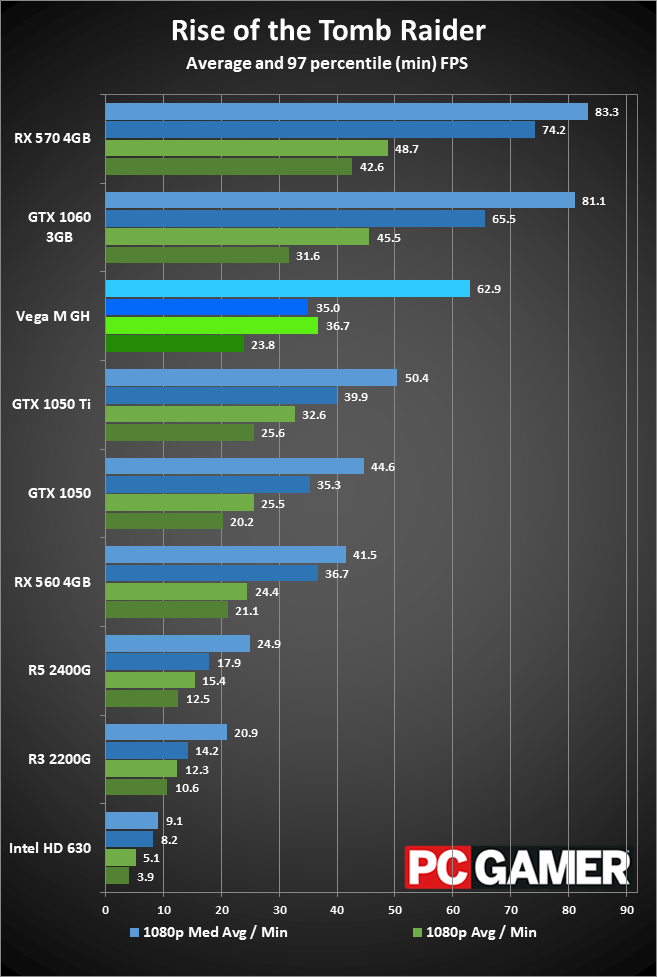

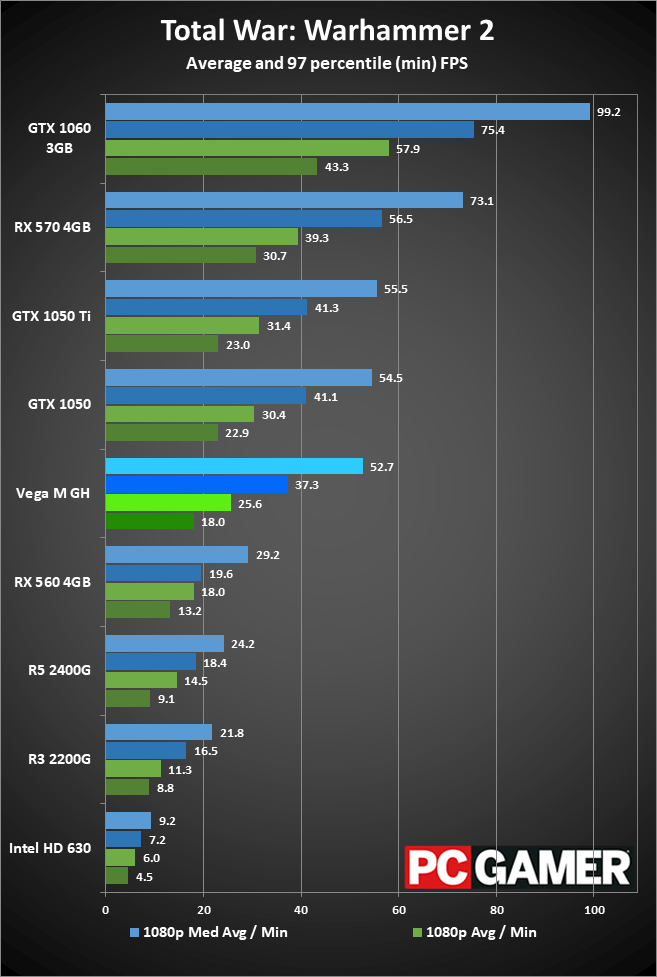

For performance comparisons, I've got Intel's HD Graphics 630 and AMD's Ryzen 5 2400G and Ryzen 3 2200G, plus some budget and midrange graphics cards from AMD and Nvidia. The desktop graphics cards are all running with a faster CPU, but that shouldn't matter much as we're firmly in the realm of GPU limited testing.

Not surprisingly, with 1,536 streaming processors clocked at up to 1190MHz, plus the dedicated 4GB HBM2 stack, gaming performance on the Vega M is more than double what you get from AMD's Ryzen 5 2400G. Obviously they're targeting different markets, but I still hold out hope for a future AMD APU that integrates an HBM2 stack. Finding things to do with all the transistors available on modern process technology can be tricky, and system on chip (SoC) designs are becoming increasingly potent.
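As a rough sanity check on that "more than double" result, the sketch below converts compute unit counts and clocks into theoretical FP32 throughput. It assumes 64 stream processors per compute unit and two floating-point operations per clock (one fused multiply-add). The Ryzen 5 2400G figures (11 CUs at up to 1250MHz) are assumptions that do not appear in this review, and theoretical throughput is only a loose proxy for game performance.

```python
SP_PER_CU = 64           # assumed: stream processors per GCN/Vega compute unit
FLOPS_PER_SP_CLOCK = 2   # assumed: one fused multiply-add per clock


def stream_processors(compute_units: int) -> int:
    """Total stream processors for a given number of compute units."""
    return compute_units * SP_PER_CU


def peak_fp32_tflops(compute_units: int, clock_mhz: float) -> float:
    """Theoretical peak FP32 throughput in TFLOPS."""
    return stream_processors(compute_units) * FLOPS_PER_SP_CLOCK * clock_mhz / 1e6


# Vega M GH: 24 CUs at up to 1190 MHz (figures from the review).
vega_m_gh = peak_fp32_tflops(24, 1190)

# Ryzen 5 2400G: 11 CUs at up to 1250 MHz (assumed, not from the review).
ryzen_2400g = peak_fp32_tflops(11, 1250)

print(f"Vega M GH:     {stream_processors(24)} SPs, {vega_m_gh:.2f} TFLOPS")
print(f"Ryzen 5 2400G: {stream_processors(11)} SPs, {ryzen_2400g:.2f} TFLOPS")
print(f"Ratio:         {vega_m_gh / ryzen_2400g:.2f}x")
```

The on-paper ratio comes out a little above 2x, which lines up with the measured gap. The 2400G also has to feed its GPU from shared system memory.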

What about relative to desktop graphics cards? Here the performance is good, though you're not going to replace a dedicated midrange or higher GPU just yet. The Vega M is faster in most cases than the GTX 1050 Ti, and clearly ahead of the GTX 1050 and RX 560, but it falls well short of the GTX 1060 3GB and RX 570 4GB. That's expected, as the 570 has more compute units (32 compared to 24) and is clocked higher, but it shows that memory bandwidth likely isn't holding back the Vega M. Either way, it's impressive to see an integrated graphics solution perform better than several dedicated desktop GPUs from the same generation.

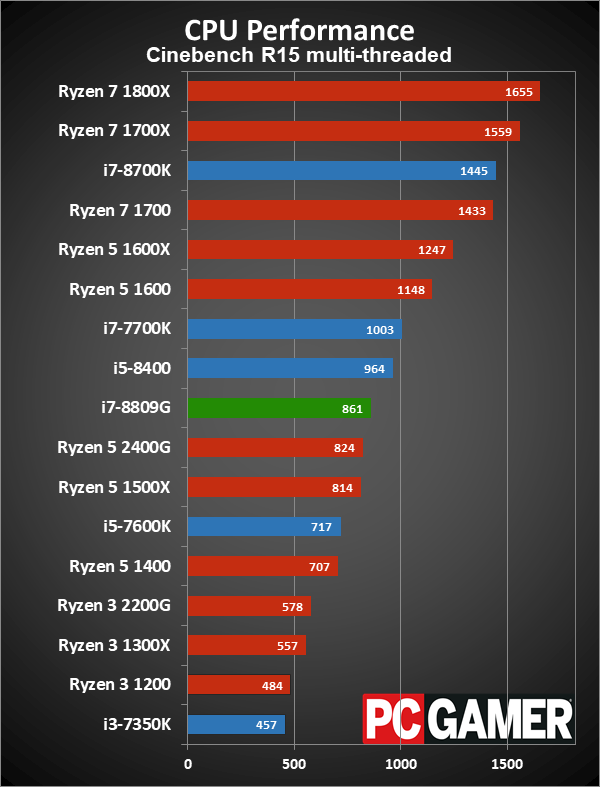

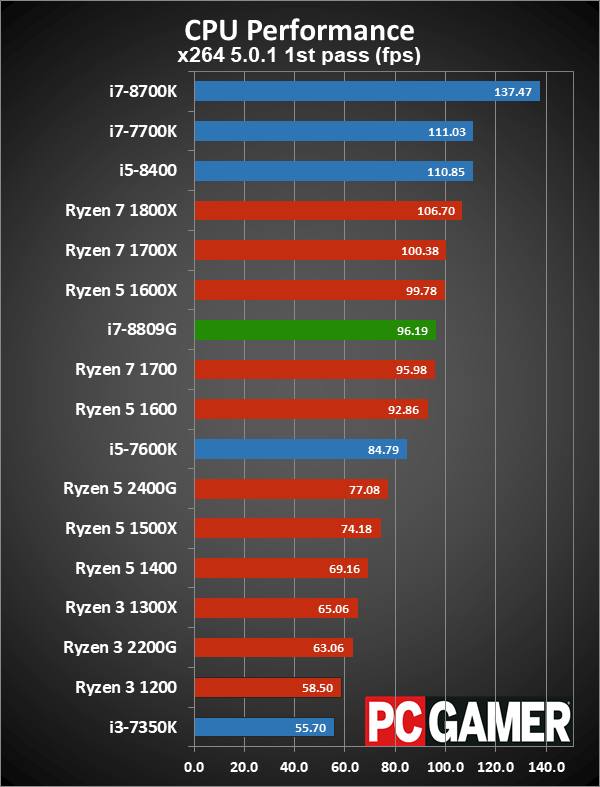

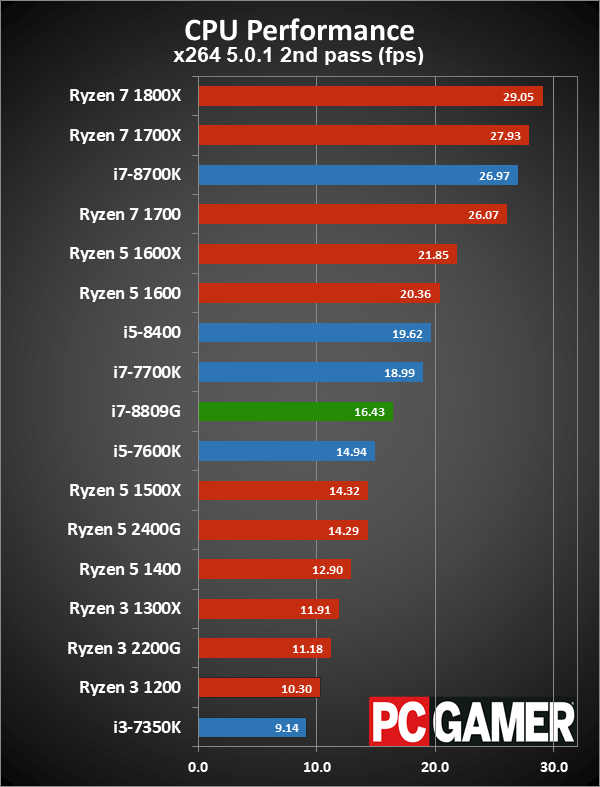

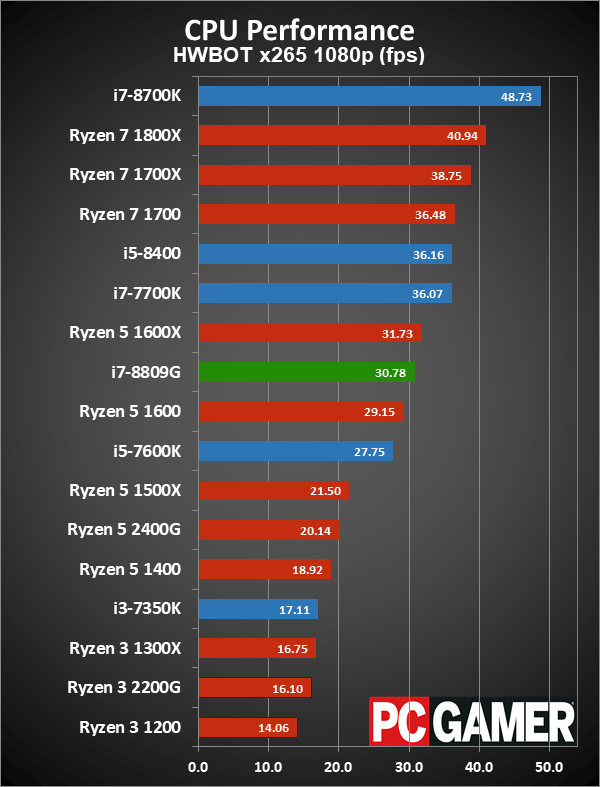

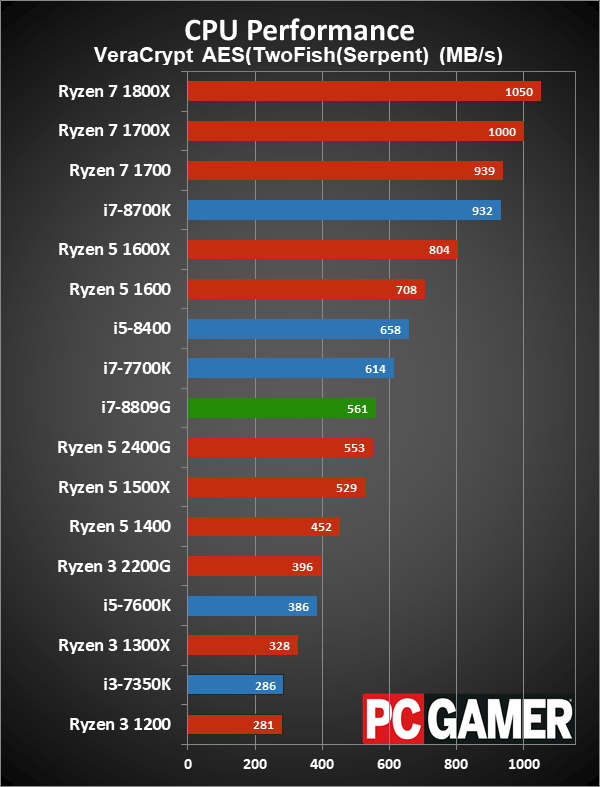

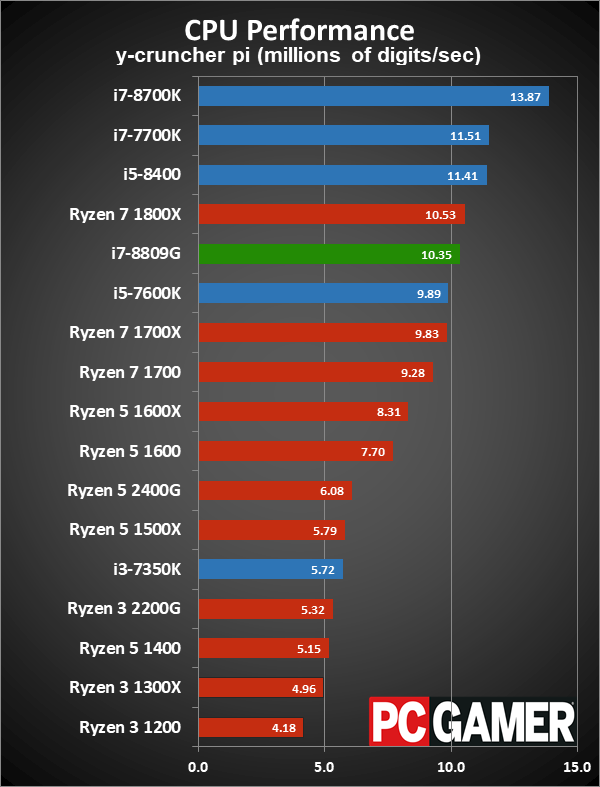

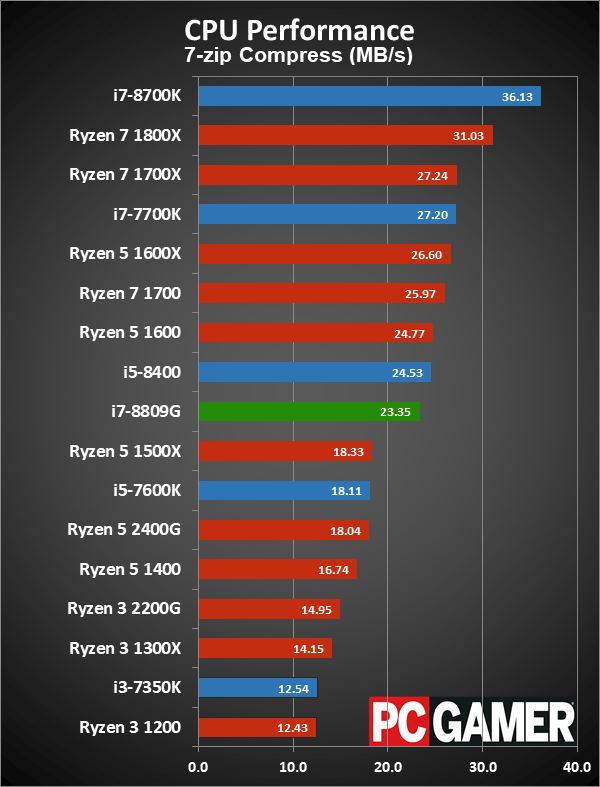

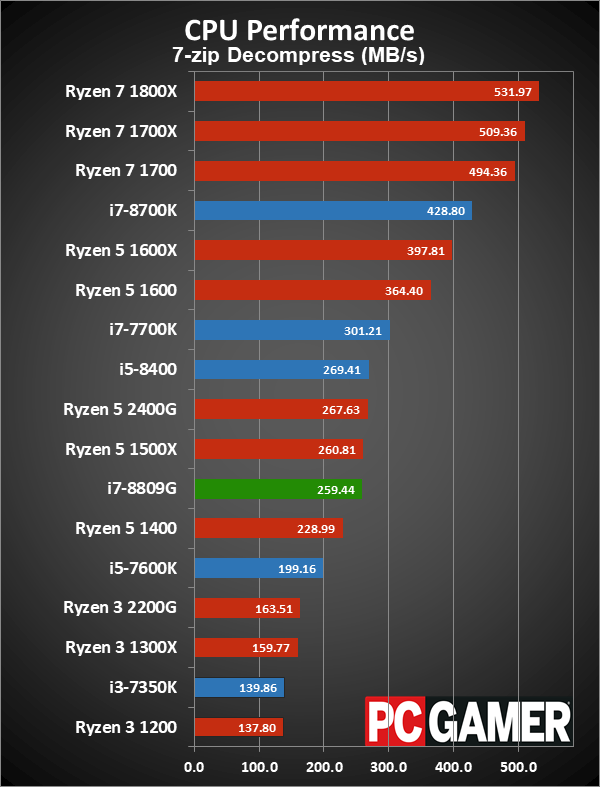

Core i7-8809G CPU performance

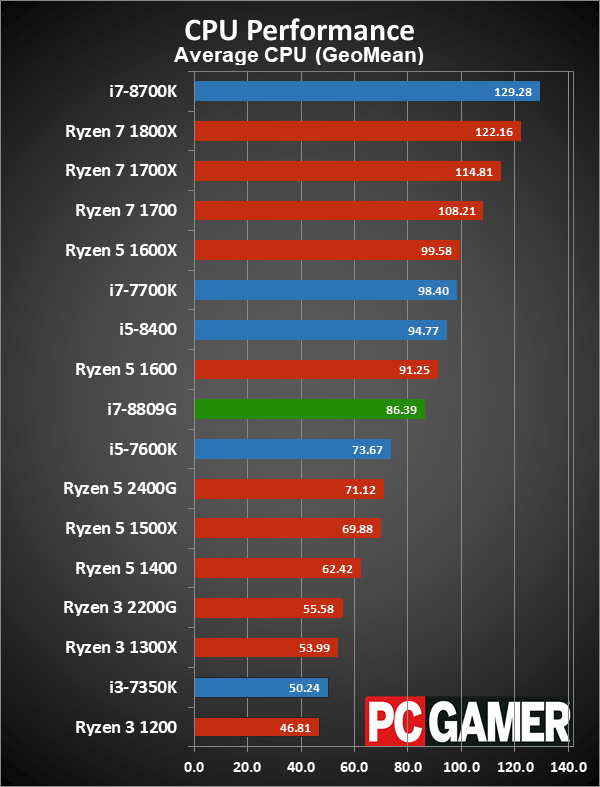

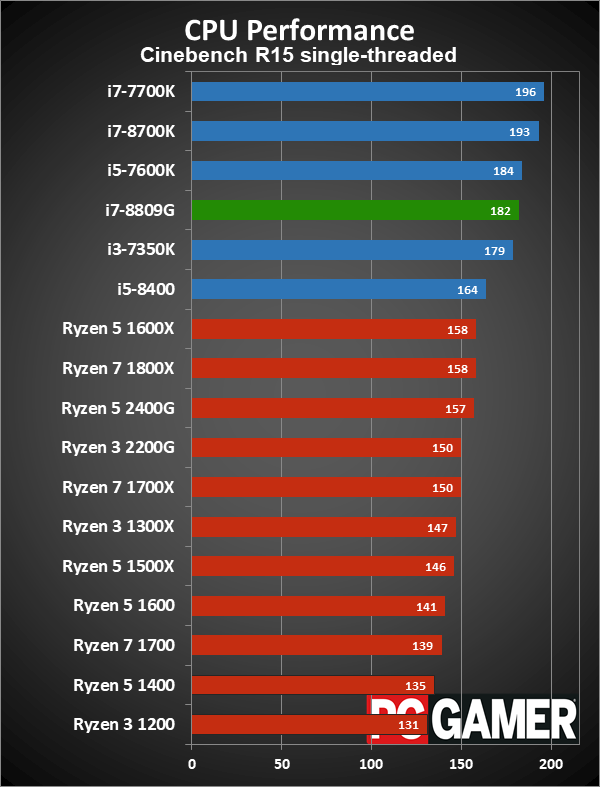

Moving from gaming and graphics workloads to traditional CPU tasks, Kaby Lake-G ends up looking exactly like Kaby Lake. This is a 4-core/8-thread part clocked at up to 4.2GHz, and more importantly, it can sustain all-core turbo clocks of 3.9GHz. That makes it a bit slower than the likes of the i7-7700K, but faster than an i5-7600K. Here are the benchmarks.

In overall CPU performance, the i7-8809G falls just behind the Ryzen 5 1600, and a bit further off the pace of the i5-8400 and i7-7700K. Single-threaded performance is still good, while heavily threaded workloads favor the 6-core and 8-core parts. Most users rarely run tasks that benefit from additional cores and threads, and the i7-8809G proves to be quite capable as a workhorse solution. That also means any less intensive tasks, including streaming video and photo editing, will run fine on the chip.

Overclocking your NUClear warhead

The Core i7-8809G is fully unlocked, on both the CPU and GPU, while lower tier Kaby Lake-G chips are not. Overclocking can be done via the BIOS, or in software, though the latter requires the use of two separate utilities: Intel's XTU for the processor and AMD's WattMan for the GPU. Whatever approach you take, be careful about how much power you try to push through the chip, as noise and temperatures can increase quite a bit as you push clockspeeds.

At stock, the CPU could do 3.9GHz on all cores, and overclocking to 4.2GHz wasn't hard. 4.3GHz and beyond starts to run into potential thermal limits, though you can get a single core up to higher clocks. For the GPU, power use scales quickly with increased GPU clocks: a 10 percent boost to frequencies with a 10 percent increase in power limits yielded a steady 1310MHz, seemingly without incident, but system power use increased by around 30-40W.
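The GPU overclock figure is easy to check against the stock clock. This is a minimal sketch; the helper name is hypothetical, and 1190MHz is the maximum stock GPU clock quoted earlier in this review.

```python
def overclocked_mhz(stock_mhz: float, boost_percent: float) -> float:
    """Clock after applying a percentage boost to the stock frequency."""
    return stock_mhz * (1 + boost_percent / 100)


STOCK_GPU_MHZ = 1190  # maximum stock GPU clock quoted in the review

target = overclocked_mhz(STOCK_GPU_MHZ, 10)
print(f"10% over {STOCK_GPU_MHZ} MHz is about {target:.0f} MHz")
```

That works out to roughly 1309MHz, in line with the steady 1310MHz observed.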

A match made in Hades Canyon

Wrapping things up, there are two elements that I need to discuss. On the one hand is the processor itself, which will likely be found in quite a few laptops in the coming months, and on the other hand we have the latest Intel NUC.

The latter is perhaps easy to write off. Either you love the NUC or you think it's pointless. People who use desktop PCs and like to upgrade their graphics card every year or two are unlikely to be swayed by anything on tap in this latest iteration. Conversely, if you love Intel's tiny boxes but wish they had more gaming potential, the Vega M graphics are a significant improvement over Intel's Iris solutions.

On paper this seems like a dream build for SFF and HTPC enthusiasts. Unfortunately, there are a few missteps. Despite substantially more powerful graphics performance, AMD's Vega M is worse than Intel's HD Graphics 630 when it comes to video decoding support—specifically, Vega M doesn't have VP9 profile 2 support required for YouTube HDR, and it lacks Protected Audio Video Path (PAVP) needed for UHD Blu-ray. This wouldn't be a problem, except all six video ports are routed through the Vega M graphics.

As the perfect HTPC solution, despite providing six 4K outputs, the NUC comes up short. It's still quite capable, and it does better as a gaming and VR box, but as usual you need to be committed to the ultra small form factor. If you're willing to go with a larger mini-ITX build with a dedicated graphics card, you can end up with a more potent rig for less money.

But it's hard not to like this little guy, and it's the only way you're going to get six displays out of such a tiny package. That may be pretty niche, but some people are bound to love it. There are better values for gaming purposes, but fans of Intel's NUC form factor will find plenty of worthwhile upgrades in this latest version.

The processor on its own is a bit harder to nail down. Intel doesn't even list a price for the chip, as it's intended for OEM use and gets integrated into complete laptops and devices like the NUC. Dell's latest XPS 15 with the i5-8305G and Vega M GL graphics starts at $1,499, while models with the i7-8705G (also Vega M GL) start at $1,699. HP's Spectre x360 15-inch with Vega M should be out soon, but all the units currently listed on HP's site are the previous iteration, and I expect the new model to also start at $1,500. In either case, you're not really saving money relative to previous years, but you might end up with a thinner and lighter laptop.

From the manufacturing side, putting the Intel Core with Vega M into a laptop should require a lot less effort (and real estate) than trying to accommodate a CPU plus GPU and GDDR5. That's the major impetus behind Kaby Lake-G, and the Dell XPS 15 and HP Spectre x360 laptops put the chip to good use. Another benefit of the fully integrated design is that Intel can exercise much better control over the clockspeeds and TDP of the CPU and GPU portions of the package. Intel says that thanks to its Dynamic Tuning technology, it can deliver the same gaming performance at 45W instead of 62.5W, which works out to about 28 percent less power. I'll leave the future laptop reviews to Bo, but I do have some thoughts on the Kaby Lake-G processors.

First, let me get this out of the way: calling Kaby Lake-G "8th Gen Core" is just marketing speak. These are 7th Gen Core processors, or even 6th Gen Core, but with a new twist on graphics. The name game for processors gets increasingly confusing over time, and the addition of five new G-series parts doesn't help matters. But naming aside, as an engineering feat you have to be impressed. Getting Intel and AMD to work together couldn't have been easy, and EMIB alleviates the difficulties associated with the silicon interposer normally required for HBM2. Tying everything together with a tidy bow and packaging it up in a working product deserves respect.

All of that is cool on a technological level, but is it something we should rush out and buy? Perhaps not. Initial results for laptop battery life suggest these processors are a step back from previous Intel CPU + Nvidia GPU solutions (with Optimus Technology switchable graphics). AMD's GPUs have traditionally put up solid performance, but only at higher power use compared to Nvidia's solutions. Vega M doesn't appear to be any different, and unless someone can create a laptop that proves otherwise, I would be hesitant to jump onboard this train.

This is a highly integrated processor, and seeing an AMD GPU solution sitting next to an Intel CPU inside the same package is a strange feeling. Even more surreal is opening up the Radeon RX Vega M Settings and seeing all the usual AMD options, only skinned with Intel logos and branding. But desktop gamers have been using dedicated AMD and Nvidia graphics cards running with Intel processors since time immemorial. Cramming the GPU into the same package doesn't actually make things faster, since it's still connected via a PCIe x8 link. At some point that may change, but most gamers will still be better served by going with a traditional separate GPU.

Where do we go from here? I don't think we're going to see products from Intel using graphics from AMD become the new norm, but it does pave the way for potentially faster future Intel graphics solutions. Intel has stated that it's working on dedicated GPUs again, for the first time in over 20 years. Targeting Vega M levels of performance at a minimum would be a good place to start.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.