Intel is bringing its discrete Iris Xe Max graphics card to thin-and-light laptops and 'value desktops'

Mixing and matching Tiger Lake with an Iris Xe Max GPU will deliver higher performance... just not in games.

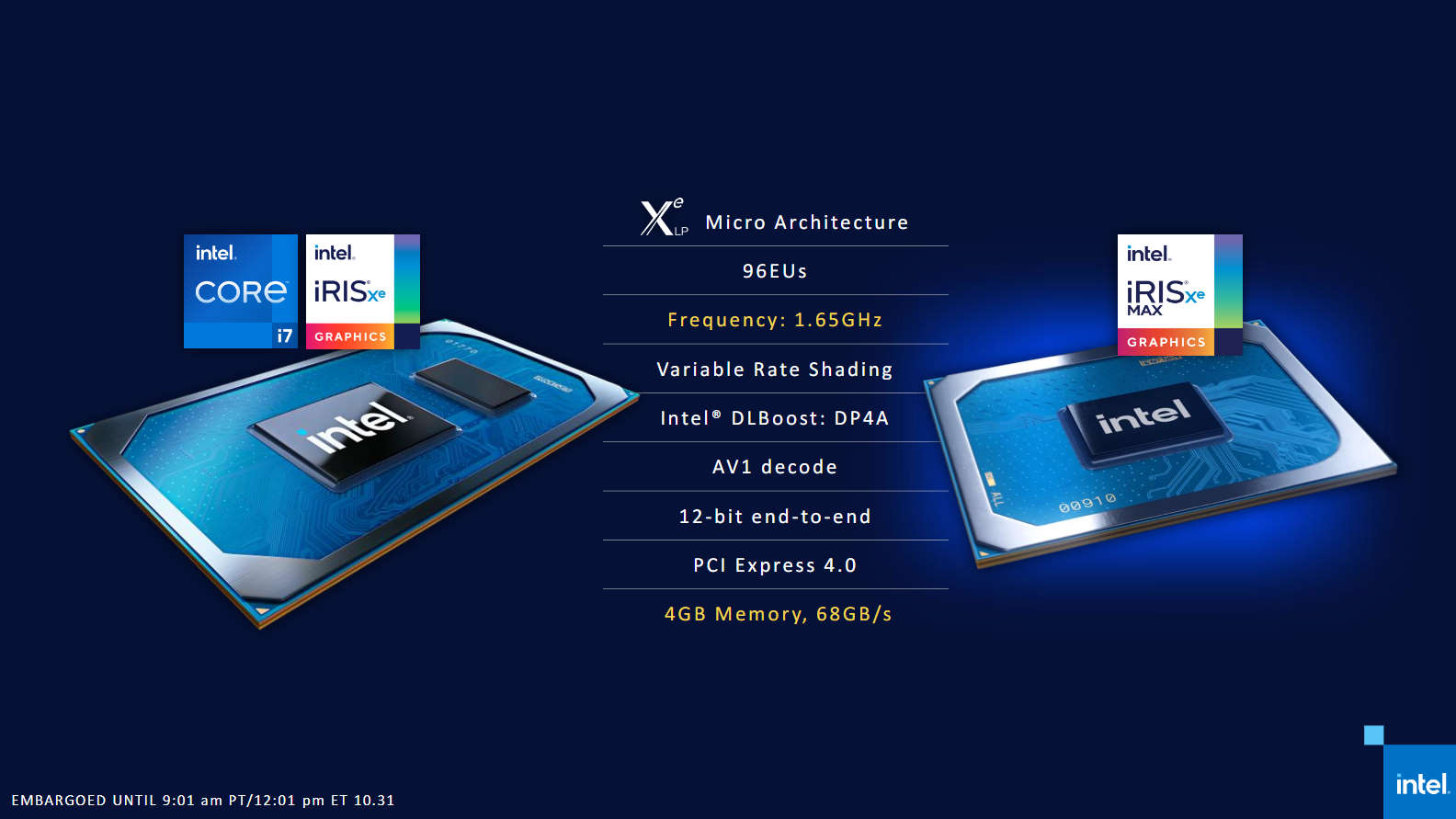

Intel has unveiled its first discrete Xe graphics card, the Intel Iris Xe Max. It's a mobile GPU designed to drop into thin-and-light notebooks to give creators an edge while they're on the go. The Iris Xe Max matches the Iris Xe graphics silicon of the new Tiger Lake processors in terms of raw specs, and Intel promises a further performance boost from pairing the two GPUs together.

This sort of total platform initiative is something AMD has recently championed when users pair its next-gen CPUs and GPUs. The upcoming RX 6000-series cards can potentially offer higher gaming performance when paired up with the Ryzen 5000-series processors.

Sadly, this new Deep Link multi-GPU(ish) feature isn't really going to deliver anything special when it comes to gaming, because Intel is focusing its attention on mobile creators rather than gamers.

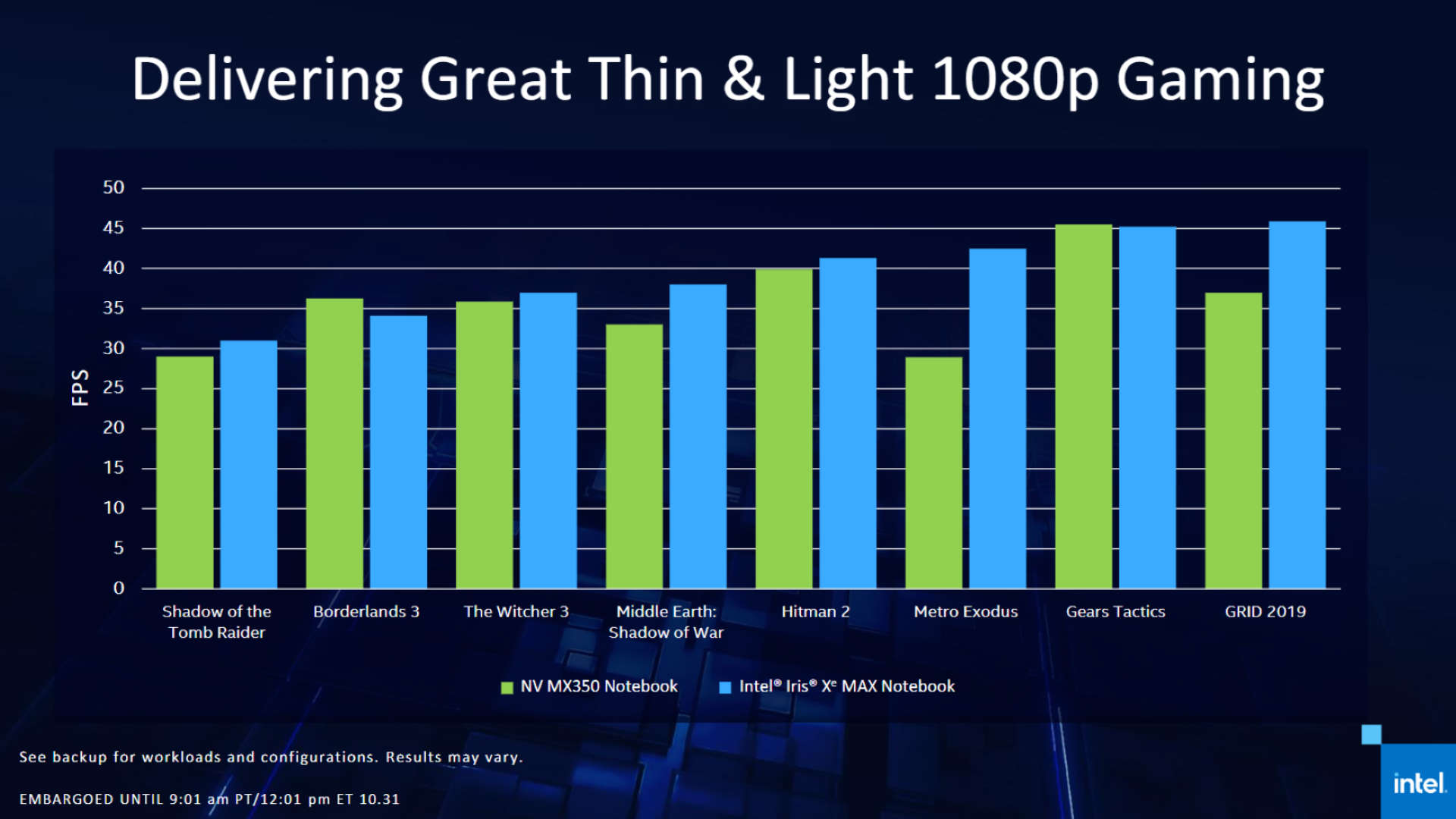

The discrete 10nm SuperFin Iris Xe Max chip does still deliver playable 1080p gaming, topping 30fps in a host of games, though at varying quality settings.

If you want to game at 1080p on these thin-and-light machines, you're going to be bouncing between Low and Medium settings at best.

In our own testing of the Tiger Lake range's top CPU, that was almost achievable with the integrated GPU alone, but this discrete version adds a higher clock speed and a dedicated 4GB of LPDDR4x memory.

That all means the blue team can offer all-Intel thin-and-light laptops with higher gaming performance than a similar-spec machine using Nvidia's recent MX350 GPU, at least according to Intel's few published benchmarks.

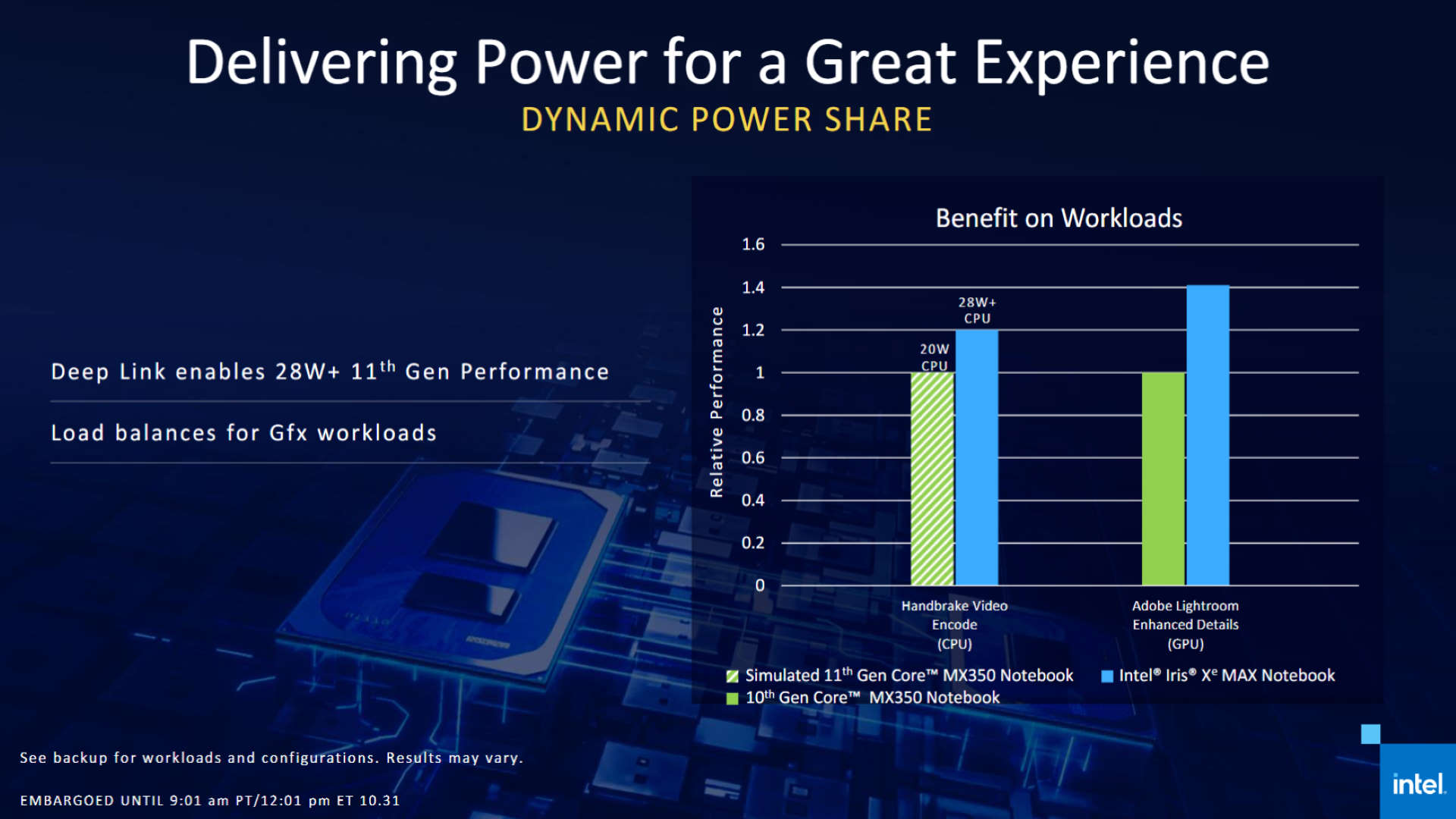

Intel is more focused on getting its Deep Link pairing to deliver extra performance in compute-focused workloads than on chasing higher, smoother frame rates. To be fair, that's not exactly Intel's fault: getting a pair of GPUs to work in unison and display frames at the right time seems tougher than ever.

Nvidia has given up on SLI (only the RTX 3090 comes with the potential for bridging), and AMD isn't really pushing CrossFire these days either. Both of the main graphics card manufacturers have instead passed the onus onto developers to utilise the DirectX 12 multi-adapter features.

Effectively, multi-GPU gaming is dead.

But the compute-based tasks creators need help with are far easier to split across different chips. By utilising both GPUs, and the multiple media encoding engines in such an Iris Xe-based system, Intel has demonstrated improved performance in Adobe Lightroom and even in the CPU-based Handbrake video encoding benchmark, to the tune of 1.4x and 1.2x respectively.

It's a smart play for Intel, dropping its own discrete Xe Max GPUs into laptops that might otherwise have used Nvidia's low-powered MX350. And you can bet that the likes of Acer, Asus, and Dell, who are all bringing such machines to market in November, have been given favourable rates for building all-Intel machines.

What I'm less sure about is Intel's plan to also bring its discrete GPU to market in desktop form at some point in the first half of 2021. That's likely to be an OEM-only venture, and Intel is specifically talking about 'value desktops', so the DIY market needn't worry.

Quite where there is a need for such Iris Xe Max-powered desktop machines I don't really know. I get that a thin-and-light notebook with a little more creative juice makes sense for people working on the go, but in a desktop form factor, creators will surely be happy to spend a little extra upgrade cash on a better GPU than Intel can currently offer.

Dave has been gaming since the days of Zaxxon and Lady Bug on the ColecoVision, and code books for the Commodore VIC-20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.