How many GPUs can you fit in a single system?

We love checking out the latest technology and seeing it put to good use. Most of the time, that entails amazing games, VR, and other experiences, but at the GPU Technology Conference we get to see a different facet of the GPU spectrum. Here, it's all about compute, and unlike gaming PCs where it's sometimes difficult to properly utilize even two GPUs, compute can make use of however many GPUs you can throw at it. None of these are gaming machines, and considering DirectX 11 doesn't allow the use of more than four GPUs, there wouldn't be any gaming benefit to most of the systems we saw. But just how many GPUs can you pack into a single system if you're going after compute?

First up is a desktop workstation courtesy of Velocity Micro. Systems like this were all over the show floor, but the Velocity Micro system caught our eye as it was one of the few to actually use GTX 980 Ti cards; most used GTX Titan X if they used consumer GPUs, while Quadro and Tesla were in the majority of professional servers and workstations. Here, the case is packed with eight GTX 980 Ti cards from EVGA, paired with dual Xeon processors (the show system used older E5-2660 v3 chips, though newer Xeon v4 models are supported). What's interesting is that the EVGA cards use open-air coolers rather than blowers; when asked about this, Velocity Micro said that it was "mainly for show" and that normally they would use reference blowers to help with cooling.

On a related note, we saw a lot of consumer GM200 cards in workstations, which isn't too surprising since there was no high-performance FP64 Quadro card for Maxwell. One of the problems vendors face when using consumer GPUs is that the cards have a relatively short shelf life; in another year, there won't be many GTX Titan X cards around for purchase, assuming a Pascal variant takes its place. Quadro and Tesla are kept in production for much longer, as befits the professional market they cater to. Companies often have to choose between buying faster consumer hardware that will be phased out sooner and paying the higher price for professional cards that are clocked slower but will be around for five or more years. And when you can count on cards being around that long, you can do crazy stuff like this:

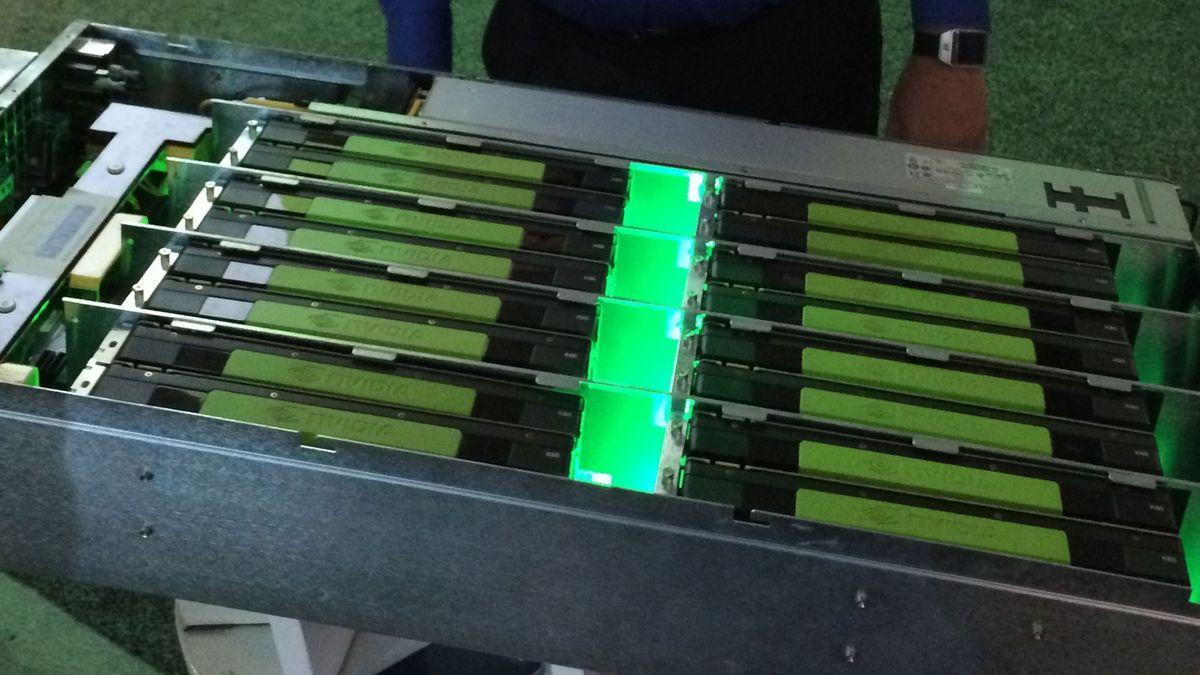

This particular 3U chassis is called the GPUltima and comes from One Stop Systems. Seeing four to eight GPUs in a server is nothing, but sixteen GPUs is another matter entirely. Except these aren't any old GPUs, they're Tesla K80s, which means each card actually has two GK210 GPUs. That makes this the highest GPU density we saw on the show floor, packing 32 GPUs into a 3U chassis. [Ed: There may have been someone doing more than this, but if so I didn't see it.] Except there's a catch, because there's always a catch.

This particular case doesn't contain any processors; it's all just graphics cards along with a few InfiniBand connectors for networking. The GPUltima is designed to be used with a separate server, and One Stop Systems normally couples it to a dual-socket 2U server. That means the total GPU density is "only" 32 GPUs per 5U, and in a 42U rack (with 2U being used for networking gear up top) you could fit eight such systems. That yields 256 GPUs per rack, which is still an impressive number, but not the highest density you'll find. There are companies like Supermicro that make 1U servers that can each house four Tesla K80 cards, so potentially 40 of those 1U servers (plus 2U for networking again) could fit in a single rack, yielding 320 GPUs.
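The rack math above is easy to check in a few lines. Here's a minimal Python sketch, assuming the 42U rack and 2U networking allowance mentioned in the text; the function name and its parameters are ours, made up purely for illustration.

```python
RACK_UNITS = 42        # standard full-height rack, as in the article
NETWORKING_UNITS = 2   # reserved for networking gear at the top of the rack


def gpus_per_rack(units_per_system: int, cards_per_system: int,
                  gpus_per_card: int = 2) -> int:
    """GPUs that fit in one rack; a Tesla K80 card carries two GK210 GPUs."""
    usable_units = RACK_UNITS - NETWORKING_UNITS
    systems = usable_units // units_per_system  # whole systems only
    return systems * cards_per_system * gpus_per_card


# GPUltima: 3U GPU chassis plus a 2U host server, sixteen K80 cards
gpultima_gpus = gpus_per_rack(units_per_system=5, cards_per_system=16)

# 1U servers with four K80 cards each
one_u_gpus = gpus_per_rack(units_per_system=1, cards_per_system=4)

print(f"GPUltima rack: {gpultima_gpus} GPUs")
print(f"1U server rack: {one_u_gpus} GPUs")
```

The integer division is the important bit: a rack only holds whole systems, so any leftover rack units are simply wasted.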

Let's stick with the GPUltima rack and its 128 Tesla K80 cards, though. Each card has 8.74 FP32 TFLOPS, for a combined 1,120 TFLOPS per rack. Or if you want FP64, you'd drop down to 373 TFLOPS. There's a problem with stuffing that many GPUs into a rack, of course: you still need to provide power and cooling. Just how much power does the GPUltima require? We were told that the typical power use under load for the system sits at around 5000W, though peak power use can jump a bit higher. Each 3U chassis comes equipped with three 3000W 240V PSUs (one for redundancy), so a data center needs to be able to supply over 50kW per rack, with 76kW being "safe." The good news is it can also roast marshmallows and hot dogs if you're hungry. (Not really.)
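For anyone who wants to verify those totals, here's a rough sketch of the arithmetic. The FP32 figure and the power numbers come from the text; the 2.91 TFLOPS FP64 figure per card is our assumption, taken from Nvidia's published boost spec rather than from anything we were told on the show floor. Note that the power sums cover only the GPU chassis, so the host servers are left out and the results land below the per-rack figures quoted above.

```python
SYSTEMS_PER_RACK = 8        # GPUltima + host server pairs per rack
CARDS_PER_SYSTEM = 16       # Tesla K80 cards per GPUltima chassis
K80_FP32_TFLOPS = 8.74      # per card, from the article
K80_FP64_TFLOPS = 2.91      # per card; assumption, not stated in the article

cards_per_rack = SYSTEMS_PER_RACK * CARDS_PER_SYSTEM
fp32_per_rack = cards_per_rack * K80_FP32_TFLOPS
fp64_per_rack = cards_per_rack * K80_FP64_TFLOPS

TYPICAL_WATTS_PER_CHASSIS = 5000   # typical load, per the article
PSUS_PER_CHASSIS = 3               # one of the three is redundant
WATTS_PER_PSU = 3000

# GPU chassis only; host servers and networking gear are not counted here
typical_kw = SYSTEMS_PER_RACK * TYPICAL_WATTS_PER_CHASSIS / 1000
installed_psu_kw = SYSTEMS_PER_RACK * PSUS_PER_CHASSIS * WATTS_PER_PSU / 1000

print(f"{cards_per_rack} cards, {fp32_per_rack:.0f} FP32 / "
      f"{fp64_per_rack:.0f} FP64 TFLOPS per rack")
print(f"Typical GPU chassis load: {typical_kw:.0f} kW, "
      f"installed PSU capacity: {installed_psu_kw:.0f} kW")
```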

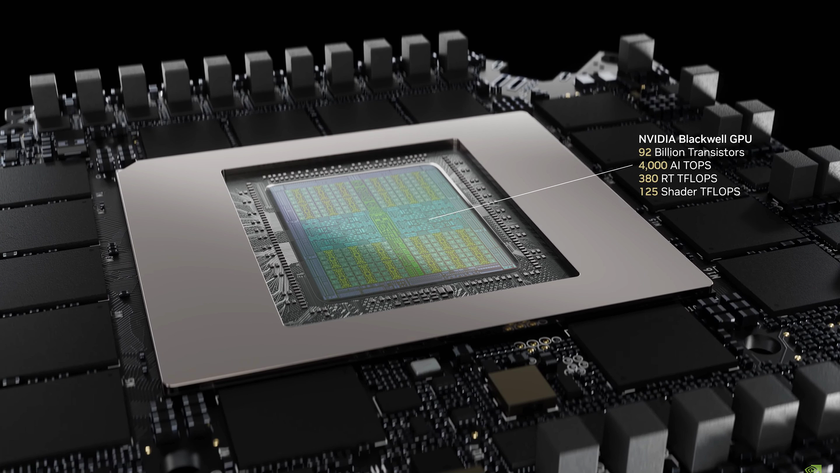

Nvidia's upcoming DGX-1 server with eight Tesla P100 Pascal GPUs can provide up to 170 TFLOPS of performance in a 3U chassis, and with 12 of those in a rack, 2035 TFLOPS per rack. Of course, that's FP16 TFLOPS, so if you need FP32 precision you're looking at 1017 TFLOPS, or 509 TFLOPS for FP64. Using the existing K80, GPUltima is slightly faster on FP32 per rack, but slower on FP64. Perhaps more importantly for the Tesla P100, peak power use should be significantly lower than K80, meaning a full rack of twelve DGX-1 servers should only need around 30-40kW.
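The per-rack DGX-1 numbers follow from the per-GPU peaks. The sketch below assumes Nvidia's announced Tesla P100 figures of 21.2 / 10.6 / 5.3 TFLOPS for FP16 / FP32 / FP64 (those per-GPU values aren't quoted above, so treat them as our assumption) and compares the result against the K80 rack totals from earlier in the article.

```python
# Assumed per-GPU peak throughput for Tesla P100, in TFLOPS
P100_TFLOPS = {"fp16": 21.2, "fp32": 10.6, "fp64": 5.3}
GPUS_PER_DGX1 = 8
DGX1_PER_RACK = 12

# K80-based GPUltima rack totals quoted in the article, in TFLOPS
K80_RACK_TFLOPS = {"fp32": 1120, "fp64": 373}

dgx1_rack = {
    precision: tflops * GPUS_PER_DGX1 * DGX1_PER_RACK
    for precision, tflops in P100_TFLOPS.items()
}

for precision, total in dgx1_rack.items():
    line = f"{precision}: {total:.1f} TFLOPS per DGX-1 rack"
    if precision in K80_RACK_TFLOPS:
        line += f" (K80 rack: {K80_RACK_TFLOPS[precision]})"
    print(line)
```

Each precision step halves the throughput, which is why the FP64 total ends up at a quarter of the headline FP16 number.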

If you're wondering what anyone would do with all of those GPUs, they're looking at things like artificial intelligence, weather systems, and other supercomputing tasks.

Let's end with a fun fact: Did you know there's an estimate for how much computing power it would require to simulate a human? Well, there is, though opinions differ by several orders of magnitude. Some estimate it's around 10^16 FLOPS (Kurzweil), but let's just make it interesting and aim a bit higher, like 10^18 FLOPS. That might not mean too much to you, so let's express it another way: 1 exa-FLOPS (EFLOPS). With up to 2 PFLOPS per rack, DGX-1 would allow us to stuff 1 EFLOPS into 512 racks. Then we just need the right software to make it all work properly. And all of that would only need 15-20MW of power to run, compared with our little brains humming along on just 20W or so of power.
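If you want to play with the exascale numbers yourself, here's a back-of-the-envelope sketch. It uses the FP16 peak per rack and the 30-40kW per-rack estimate from above; the 20W brain figure is the same loose estimate used in the text, and the variable names are ours. The strict minimum works out a little under the 512 racks quoted above, so that figure leaves some headroom.

```python
import math

TARGET_FLOPS = 1e18          # 1 EFLOPS, the "aim high" estimate
RACK_FLOPS = 2.035e15        # twelve DGX-1 servers, FP16 peak
ARTICLE_RACKS = 512          # the figure quoted in the text
RACK_POWER_KW = (30, 40)     # estimated range per DGX-1 rack
BRAIN_WATTS = 20             # rough figure for a human brain

# Smallest whole number of racks that reaches the target at peak throughput
racks_needed = math.ceil(TARGET_FLOPS / RACK_FLOPS)

# Power for the article's 512-rack build, in megawatts
low_mw, high_mw = (ARTICLE_RACKS * kw / 1000 for kw in RACK_POWER_KW)

# How many brains' worth of power the low-end estimate represents
brain_ratio = low_mw * 1e6 / BRAIN_WATTS

print(f"Minimum racks at peak: {racks_needed}")
print(f"Power for {ARTICLE_RACKS} racks: {low_mw:.2f}-{high_mw:.2f} MW")
print(f"Roughly {brain_ratio:,.0f} times the brain's power budget")
```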

In other words, our fastest modern supercomputers might not run Crysis, but they're fully capable of playing Crysis. Which is pretty awesome...and perhaps more than a little bit scary.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
