How Blizzard is using machine learning to combat abusive chat

Getting an AI system to recognize toxicity is far more complicated than just censoring bad words.

Communicating with your teammates can be a pleasant experience, or it can make you feel like you’ve gone through the nine circles of hell. Maybe you were having an off-day and one of your teammates called you a noob. Maybe you politely asked for someone to switch to a healer and they replied with "go kill yourself." Maybe you just said hello and your teammate started demanding sexual favors.

When someone goes too far with what they say, their remarks can linger long after you've exited the game. Abusive chat can have negative effects on players dealing with various forms of mental illness, and telling those players to either stop playing the game or mute the chat can feel like hollow advice.

Aside from relying on those of us who want a more pleasant experience to report bad actors in-game, the only other countermeasure is an AI system that automatically filters out abusive words and phrases. But training an AI system to tell the good from the bad is a complicated task, as a recent GDC panel hosted by Blizzard pointed out, and much of that training is built on player reports, which are not a perfect signal.

Here are a few problems with abusive chat that Blizzard is solving with its AI system.

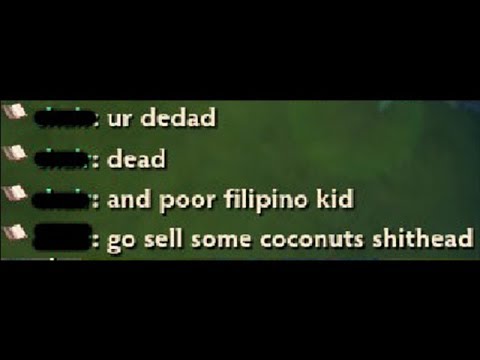

Note: the text and images below contain offensive language.

‘Go make me a sandwich’

The most obvious way gamers still get around chat filters is by misspelling or abbreviating slurs and insults: FU, sh1t, n00b, and so on. To be effective, an AI system needs to understand relationships at the character level. Through an active learning model, Blizzard’s system can account for a plethora of different spellings without having every variant loaded in up front. Instead, it learns them from player reports.
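To make "relationships at the character level" concrete, here is a minimal sketch of one common approach: undo obvious character substitutions, then compare strings by their overlapping character trigrams. This is not Blizzard's method, which the panel describes only as a learned model; the substitution table and the function names are assumptions made up for illustration.

```python
from collections import Counter

# Hypothetical substitution table. A learned model would infer these
# relationships from reported chat instead of having them hard-coded.
LEET_MAP = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)


def normalize(text: str) -> str:
    """Lowercase the text and undo common digit/symbol substitutions."""
    return text.lower().translate(LEET_MAP)


def char_ngrams(text: str, n: int = 3) -> Counter:
    """Count overlapping character n-grams of the normalized, space-padded text."""
    padded = f" {normalize(text)} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def similarity(a: str, b: str, n: int = 3) -> float:
    """Dice overlap of the two n-gram multisets, between 0.0 and 1.0."""
    grams_a, grams_b = char_ngrams(a, n), char_ngrams(b, n)
    total = sum(grams_a.values()) + sum(grams_b.values())
    if total == 0:
        return 0.0
    shared = sum((grams_a & grams_b).values())
    return 2 * shared / total
```

With this sketch, `similarity("n00b", "noob")` is 1.0 because the two normalize to the same string, and a stretched spelling such as "noooob" still shares most of its trigrams with "noob". A fixed table like this is exactly the frontloading the article says Blizzard wants to avoid, which is why the real system learns spellings from reports.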

But words don’t mean as much by themselves as when you put them together.

'Fuck' normally gets censored in text chats, but saying 'fuck yeah' is different from saying 'fuck you.' And a lot of the time, a player doesn't even need to use any naughty words to fire an insulting message. 'Garbage' and 'trash' aren't obscene, but telling someone in chat they are garbage or trash is not nice.

Sometimes people get more creative, as many gamers have shared, and continue to share, on places like Reddit: "This annie has the map awareness of Christopher Columbus," and "You're a neckbeard who beats off to blood elves." The latter insult is the more obvious one, but while the first entirely avoids red-flag words, it's no less hurtful. Unless someone reported that comment, the AI might not recognize it as insulting, as it's hard for an AI system to filter out 'insults' as a general category of remark.

And how would it tell the difference between friends giving each other a hard time and insults from strangers? The things my friends and I say back and forth could be interpreted as insults, but in reality we're just comfortable with one another and can be 'mean' without taking it too far.

There's also the issue of cultural relevance; some words that people use can mean different things in different parts of the world. 'Bollocks' isn't in the US vernacular, for example, while 'fanny' is used in both the US and the UK, with very different meanings on either side of the pond.

All that can be solved, or at least heavily mitigated, by a few things. Blizzard says it utilizes a deep learning system that trains itself to understand sentence structure and context. Working in tandem with another model, the system collects a large amount of text that has been labeled good or bad and feeds it into a machine learning algorithm, which learns the relationships within those texts. If this model is successful, then the AI should be able to properly categorize words and sentences it has never seen before, which is one step closer to autonomous abusive chat reporting.
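The idea of learning from text labeled good or bad can be shown with something far simpler than the deep learning system Blizzard describes. The sketch below is a tiny naive Bayes classifier, swapped in purely for illustration, that looks at single words and at adjacent word pairs so that the same word can count differently depending on its neighbor. The training lines, labels, and class name are all invented for this example.

```python
import math
from collections import Counter, defaultdict


def features(text):
    """Single words plus adjacent word pairs, so context changes the features."""
    words = text.lower().split()
    return words + [f"{a}_{b}" for a, b in zip(words, words[1:])]


class NaiveBayes:
    def __init__(self):
        self.counts = defaultdict(Counter)  # label -> feature counts
        self.docs = Counter()               # label -> number of training lines
        self.vocab = set()

    def train(self, examples):
        for text, label in examples:
            self.docs[label] += 1
            for feat in features(text):
                self.counts[label][feat] += 1
                self.vocab.add(feat)

    def score(self, text, label):
        """Log prior plus add-one-smoothed log likelihood of each feature."""
        total = sum(self.counts[label].values())
        logp = math.log(self.docs[label] / sum(self.docs.values()))
        for feat in features(text):
            logp += math.log((self.counts[label][feat] + 1) / (total + len(self.vocab)))
        return logp

    def predict(self, text):
        return max(self.docs, key=lambda label: self.score(text, label))


# Invented, hand-labeled examples; a real system would use far more data.
TRAINING = [
    ("you are trash", "bad"),
    ("you are garbage", "bad"),
    ("you are a garbage healer", "bad"),
    ("nice shot", "good"),
    ("good game everyone", "good"),
    ("that trash mob dropped loot", "good"),
    ("you are a great healer", "good"),
]

model = NaiveBayes()
model.train(TRAINING)
```

On this toy data, `model.predict("you are garbage")` returns "bad" while `model.predict("trash mob dropped loot")` returns "good", even though "trash" appears in both classes: the surrounding words decide. A model this small knows nothing about sentence structure, sarcasm, or friends teasing each other, which is where the deep learning approach in the panel is meant to help.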

‘You fight like a dairy farmer’

Cultural relevance becomes more complicated when not every player speaks the same language in-game. In certain regions of the world, players often speak multiple languages during a game, so Blizzard has a lot of linguistic and cultural context to consider when programming its AI system.

Also, every language evolves. Words can change their meaning, and new words are created all the time. What wasn’t an insult a year ago could be an insult today. Understanding the meaning of a phrase requires a lot of context. In theory, colloquialisms and slang should be caught by reporting systems. If someone is making racist, sexist, or homophobic remarks, however hard they may be for an AI to decode, other players will report them and they'll be banned.

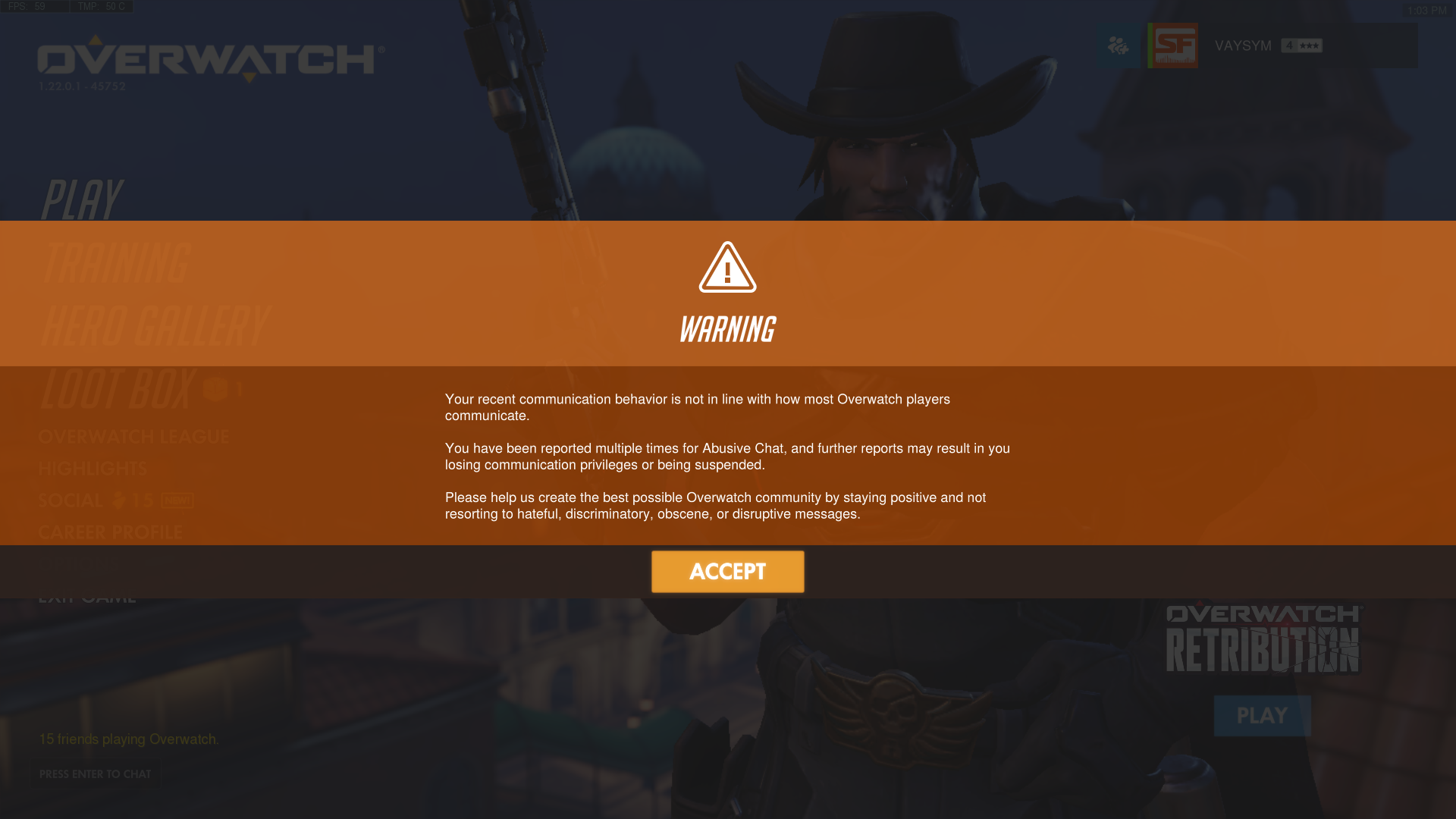

But abusive chat isn’t always reported. Everyone comes into a game with their own barometers of what they consider abuse. For instance, if someone calls me a trash player, I just shrug it off, but if someone directs sexually explicit or misogynistic comments my way, or is being toxic toward another player, I report them. Not everyone does. In cases like this, some AI systems might keep a record of how many times a player was reported, and then silence or suspend them after a certain number of reports.
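The report-counting approach mentioned above is simple enough to sketch. The version below is a guess at the general shape, not any particular game's system: the thresholds are hypothetical, and counting each reporter only once per target is an added assumption meant to blunt repeat reports from a single player.

```python
from collections import defaultdict

# Hypothetical thresholds, not figures from Blizzard or any other studio.
SILENCE_AT = 3
SUSPEND_AT = 6


class ReportLedger:
    def __init__(self):
        # reported player -> set of distinct players who reported them
        self._reporters = defaultdict(set)

    def report(self, reporter: str, target: str) -> str:
        """Record a report and return the action now in effect for the target."""
        if reporter != target:  # ignore self-reports
            self._reporters[target].add(reporter)
        return self.action_for(target)

    def action_for(self, target: str) -> str:
        """Map the number of distinct reporters to an action."""
        count = len(self._reporters.get(target, ()))
        if count >= SUSPEND_AT:
            return "suspend"
        if count >= SILENCE_AT:
            return "silence"
        return "none"
```

A bare counter like this has no way to tell an honest report from a spiteful one, and it never sees abuse that nobody reports.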

There's also the issue of players abusing the report system—that is, reporting other players for abusive chat that never took place. Some Overwatch players, for instance, have expressed concerns about being reported for abusive chat just because someone didn't like the hero they picked.

Blizzard’s solution for both those issues still lies within its active learning system. As the AI reads potentially toxic chat, it puts it into a results table. From there a text selection engine goes through all the information, looking for three specific things: abusive text that players reported but the AI didn’t recognize; text that the AI thinks is abusive but the players aren’t reporting; and text the model might be confused about. In that last case, customer service representatives get involved and go through the text by hand.
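The three cases the text selection engine looks for can be sketched as a small triage function. This is an illustration of the idea only: the `ChatLine` fields, the bucket names, and the 0.3 and 0.7 confidence cut-offs are assumptions, since the panel as reported here gives no such details.

```python
from dataclasses import dataclass


@dataclass
class ChatLine:
    text: str
    model_score: float  # model's estimated probability that the line is abusive
    reported: bool      # whether any player reported the line


# Hypothetical cut-offs: scores between them count as "the model is unsure".
LOW, HIGH = 0.3, 0.7


def triage(line: ChatLine) -> str:
    """Sort one row of the results table into a bucket."""
    if LOW < line.model_score < HIGH:
        return "human_review"      # confusing text goes to customer service
    flagged = line.model_score >= HIGH
    if line.reported and not flagged:
        return "missed_by_model"   # players reported it, the model did not catch it
    if flagged and not line.reported:
        return "unreported_abuse"  # the model caught it, players did not report it
    return "agreement"             # model and players agree


def select_for_training(results):
    """Group the whole results table by bucket."""
    buckets = {}
    for line in results:
        buckets.setdefault(triage(line), []).append(line)
    return buckets
```

In an active learning loop, the disagreement buckets and the hand-reviewed lines are the most useful new training examples, because they are where the current model is wrong or unsure.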

The entire model keeps getting updated as the conversations between players change over time. Simply put, if there is a word or phrase players report in greater and greater numbers, then the AI will learn to classify whatever that is as bad.

Can’t we all just get along?

Blizzard notifies the community whenever it changes its reporting system, and explains the change. For Overwatch, Blizzard made changes to the categories under the player reporting system that feeds into its machine learning system. In Heroes of the Storm, it stepped up the penalties for players who make abusive comments in chat. But, like everything outlined above, some things can still slip through the cracks.

In a more ideal world, toxicity in games wouldn't be an issue. But we don't live in that world, so it's up to developers to create systems that prevent or discourage players from being abusive toward others. There are plenty of variables to take into account on a global scale, linguistic, semantic, and otherwise, but it’s encouraging and comforting to see Blizzard taking a multi-angled approach to dealing with abusive chat.

Ultimately, the player reporting feature is the first line of defense against abusive chat—and it seems to be working. Since starting this project, and changing some aspects of the reporting feature, the company has seen a 59 percent reduction in re-offence rates and a 43 percent reduction in matches with disruptive chat, as Blizzard pointed out in its panel.

I'd expect it to take several years before the AI system is fully fleshed out, but the ultimate goal is to have that system recognize abusive chat automatically, without reports from players. Until then, the best thing any player can do to help make that reality is use the report function early, often, and honestly.