Hitman 3 PC performance proves there might just be some fine wine in AMD's RX 6800 XT

The first big game of 2021 gives us a chance to check out the classic AMD vs. Nvidia head-to-head, and highlights what VRS can deliver in a real game.

The first big game release of 2021 gives a big ol' performance 'W' to AMD, with the latest Radeon driver lifting the AMD RX 6800 XT's Hitman 3 performance 12 to 14 percent ahead of the Nvidia RTX 3080 at every resolution we tested.

We're used to seeing a close benchmark fight between the AMD and Nvidia flagship GPUs, but in general terms the RTX 3080 has taken the win at 4K, despite having a smaller frame buffer to call on. That was the case with Hitman 3 too, until AMD pulled a blinder with its game-ready driver release, delivering performance up to 14 percent higher than the Nvidia graphics card's.

We've been doing some Hitman 3 performance testing to see how well the latest game runs on today's top two graphics architectures, and it's going to make for pleasing reading for the few people who have managed to bag themselves a new AMD RDNA 2 GPU, and maybe less so for the GeForce faithful.

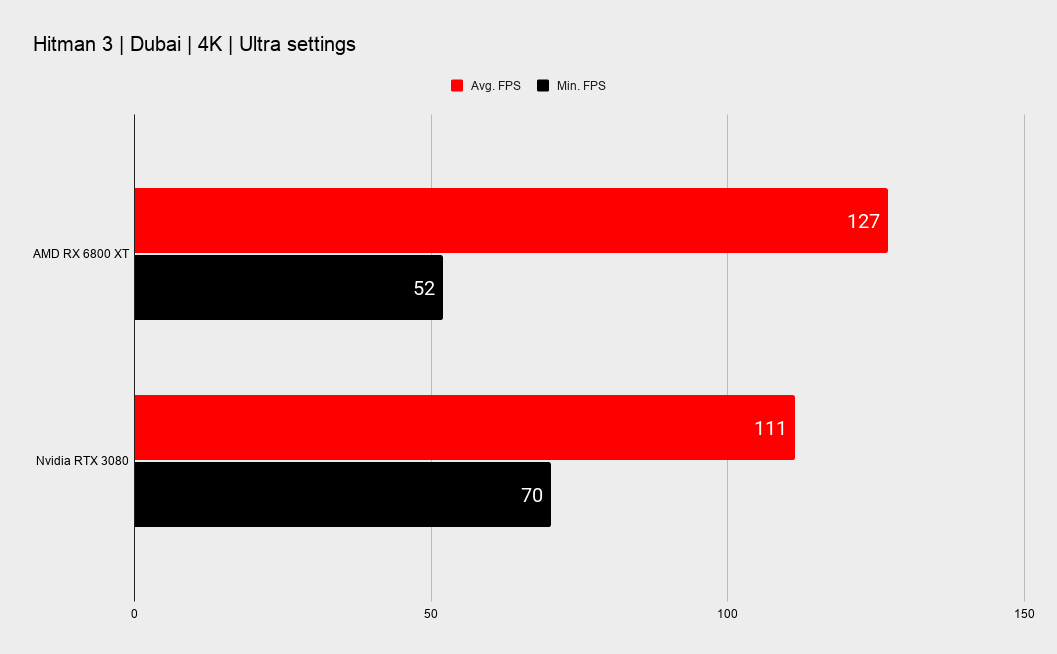

Before we get started, the caveat to these comparative performance metrics is that, with the RX 6800 XT and RTX 3080, Hitman 3 runs at over 100fps even at 4K with all the visual bells and whistle-y things enabled, and even on the more punishing of its two in-game benchmarks. Sure, the engine has been updated since the previous two games, but you're still going to get some spectacular performance.

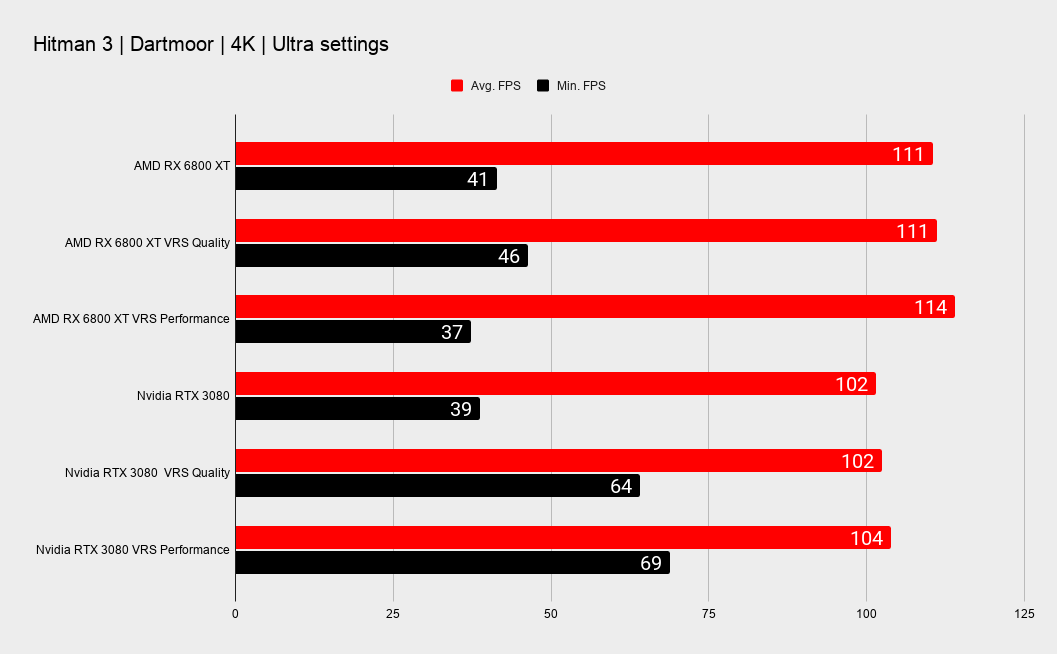

There are two separate benchmarks included with Hitman 3, one set in the Dubai level and the other in the Dartmoor level. Yes, the one that wants to be Skyfall. The Dartmoor test is all about destruction and is arguably more CPU-intensive, eating up 50 percent of your processor's juice on its own as it blows the old house apart, piece by piece.

Variable Rate Shading (VRS) in Hitman 3

The Hitman 3 graphics setting that interested me most is the option to use Variable Rate Shading (VRS). Nvidia graphics silicon has offered support since Turing hit the streets, but it's only with AMD's RDNA 2 architecture that we have competing support from the red team.

VRS is a relatively recent GPU feature, added to DirectX 12 in 2019, which essentially allows a game to shade different parts of a scene at different rates, spending less GPU effort where detail matters least. There are two tiers of VRS support: Tier 1 and Tier 2.

Tier 1 VRS allows game developers to select a different shading rate for each draw call. That means, for example, shading objects in the foreground at the full rate to keep detail high, while shading distant scenery at a lower rate, because detail there matters less than the extra performance.

This is the most basic version of VRS, and as such it offers the smallest performance boost. Because a whole draw call shares one shading rate, it can also cost more visual fidelity for the gains it does deliver.
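
To make the per-draw-call idea a little more concrete, here's a toy Python model. It's a sketch under stated assumptions, not how Hitman 3 or any real engine works: the `DrawCall` type, the pixel counts, the object names and the distance thresholds are all hypothetical, and it simply counts pixel-shader invocations using the basic 1x1, 1x2 and 2x2 coarse-pixel rates.

```python
import math
from dataclasses import dataclass

# Coarse-pixel sizes (width, height): one pixel-shader invocation covers this many pixels.
RATES = {"1x1": (1, 1), "1x2": (1, 2), "2x2": (2, 2)}


@dataclass
class DrawCall:
    name: str
    pixels: int       # screen pixels the object covers (hypothetical input)
    distance: float   # distance from the camera, arbitrary units


def pick_rate(distance: float) -> str:
    """Tier 1-style choice: one rate for the whole draw call.
    The distance thresholds are made-up illustration values."""
    if distance < 10:
        return "1x1"  # foreground: full-rate shading keeps detail high
    if distance < 50:
        return "1x2"  # mid-ground: half-rate shading
    return "2x2"      # far scenery: quarter-rate shading


def invocations(pixels: int, rate: str) -> int:
    """Approximate pixel-shader invocations for an object at a given rate."""
    w, h = RATES[rate]
    return math.ceil(pixels / (w * h))


def frame_cost(draws: list[DrawCall]) -> tuple[int, int]:
    """Return (invocations with per-draw VRS, invocations with VRS off)."""
    with_vrs = sum(invocations(d.pixels, pick_rate(d.distance)) for d in draws)
    without_vrs = sum(d.pixels for d in draws)
    return with_vrs, without_vrs


scene = [
    DrawCall("player", 200_000, 2.0),
    DrawCall("bookshelf", 120_000, 20.0),
    DrawCall("skyline", 800_000, 300.0),
]
print(frame_cost(scene))  # (460000, 1120000)
```

Every pixel of a given object shares one rate in this model, which is exactly the coarseness Tier 2 is meant to get around.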

Tier 2 VRS is way more granular: as well as per draw call, it allows the shading rate to change per primitive, or across the screen via a shading-rate image that sets the rate for each small tile of pixels. There's a lot more to it than that, but it appears as though Hitman 3 is purely using Tier 1, so we'd probably best duck out before we get into the complex developer-level coding stuff. Microsoft does have a decent explainer in its 'Scalpel in a world of sledgehammers' blog post, though.
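
For contrast, here's a similarly hedged toy model of a Tier 2-style screen-space shading-rate image, where the rate is chosen per tile of pixels rather than per object. The 16-pixel tile size, the per-tile 'detail' scores and the thresholds are assumptions for illustration only; real hardware reports its own tile size, and a real game would build the rate image from its own data.

```python
import math

# Assumed tile size: real hardware reports its own shading-rate-image tile size.
TILE = 16
RATES = {"1x1": (1, 1), "1x2": (1, 2), "2x2": (2, 2)}


def rate_for_detail(detail: float) -> str:
    """Map a hypothetical per-tile detail score (0 = flat, 1 = busy) to a rate."""
    if detail >= 0.6:
        return "1x1"
    if detail >= 0.3:
        return "1x2"
    return "2x2"


def build_rate_image(detail_map: list[list[float]]) -> list[list[str]]:
    """One shading rate per screen tile, rather than one per draw call."""
    return [[rate_for_detail(d) for d in row] for row in detail_map]


def invocations(rate_image: list[list[str]]) -> int:
    """Pixel-shader invocations for a screen fully covered by TILE x TILE tiles."""
    total = 0
    for row in rate_image:
        for rate in row:
            w, h = RATES[rate]
            total += math.ceil(TILE * TILE / (w * h))
    return total


# A 32x32-pixel screen is 2x2 tiles; only the busy tile keeps full-rate shading.
detail = [[0.9, 0.1],
          [0.5, 0.05]]
image = build_rate_image(detail)
print(image)               # [['1x1', '2x2'], ['1x2', '2x2']]
print(invocations(image))  # 512, versus 1024 at full rate
```

Because the rate follows screen tiles, a single object can be shaded at full rate where it's detailed and coarsely where it isn't.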

How much impact does enabling VRS in Hitman 3 have?

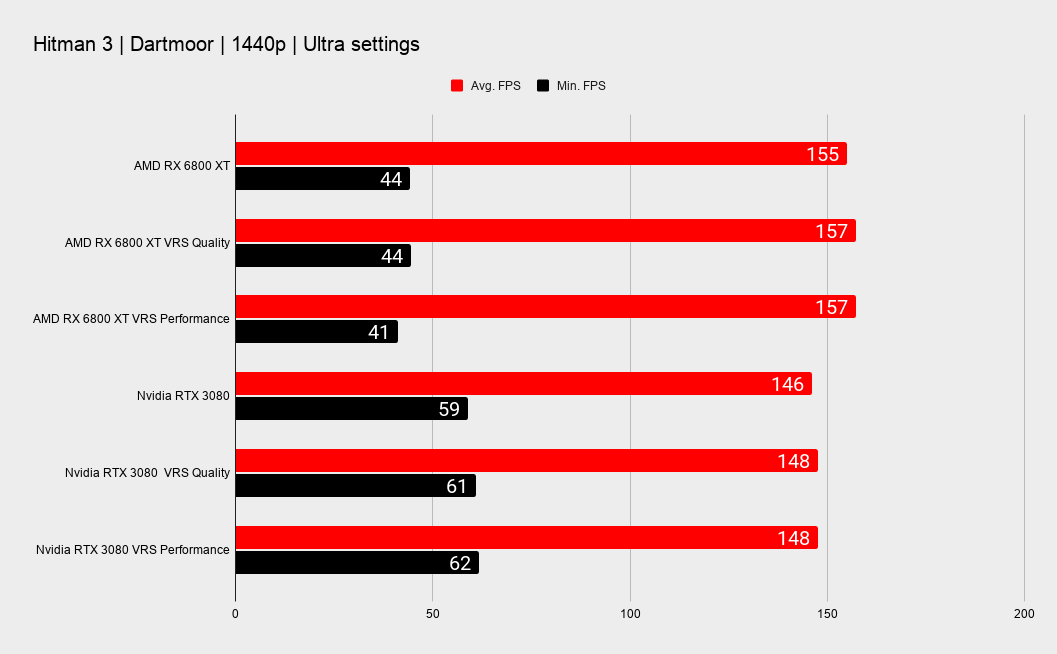

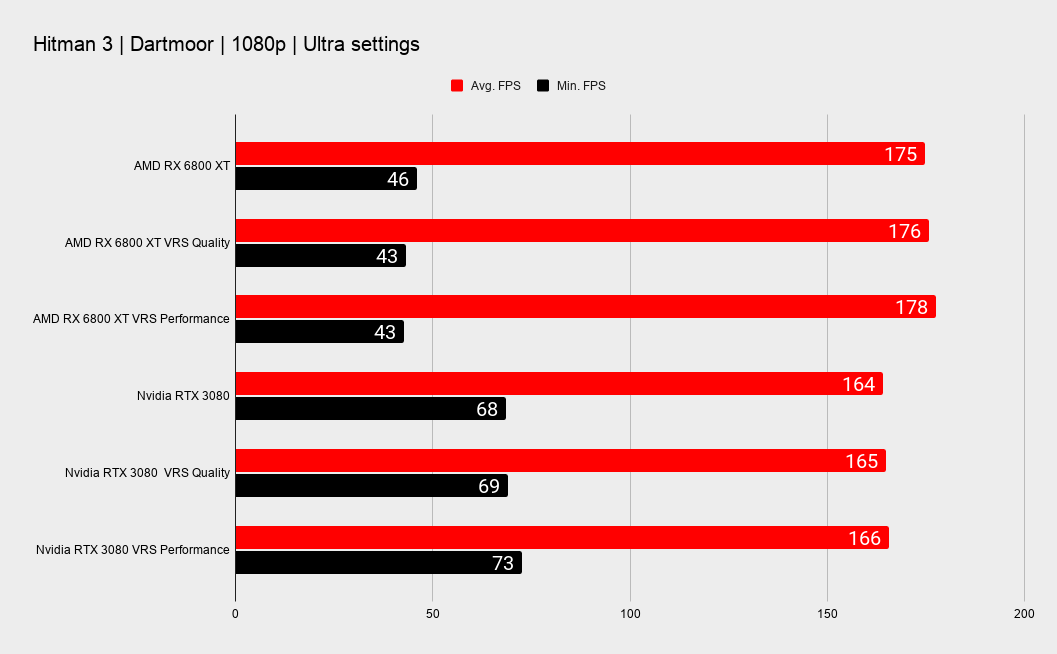

Variable Rate Shading has three settings in Hitman 3: Off, Quality, and Performance. Performance is the more aggressive of the two VRS modes and has the biggest impact on both frame rates and image fidelity, while Quality aims to strike a balance between the two. Both modes do deliver frame rate increases, though in the more CPU-oriented Dartmoor benchmark it's only to the tune of 2fps at best.

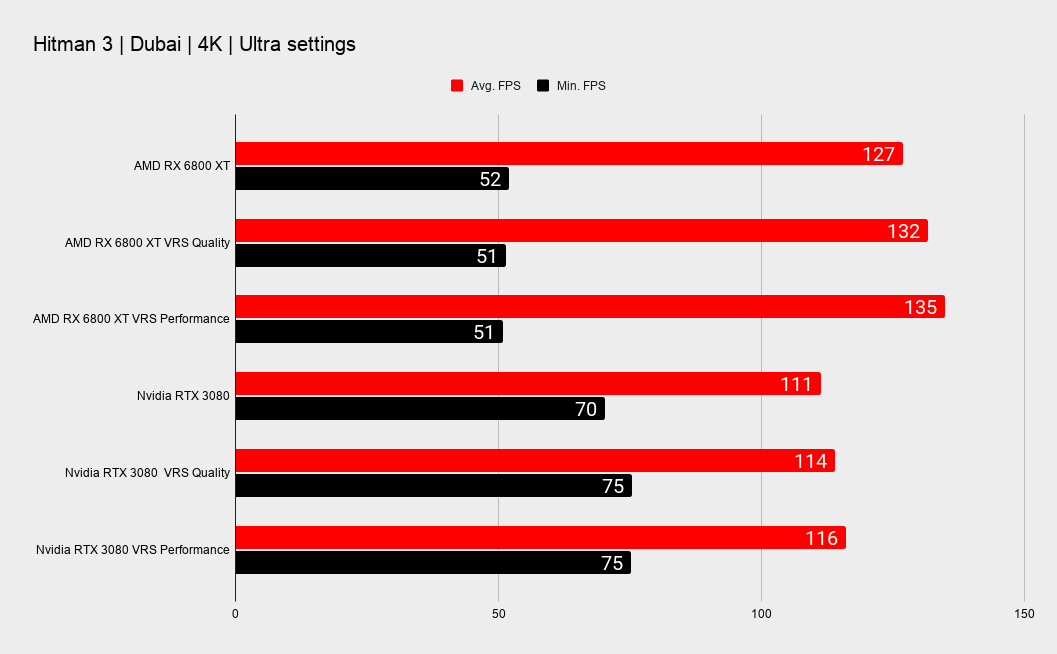

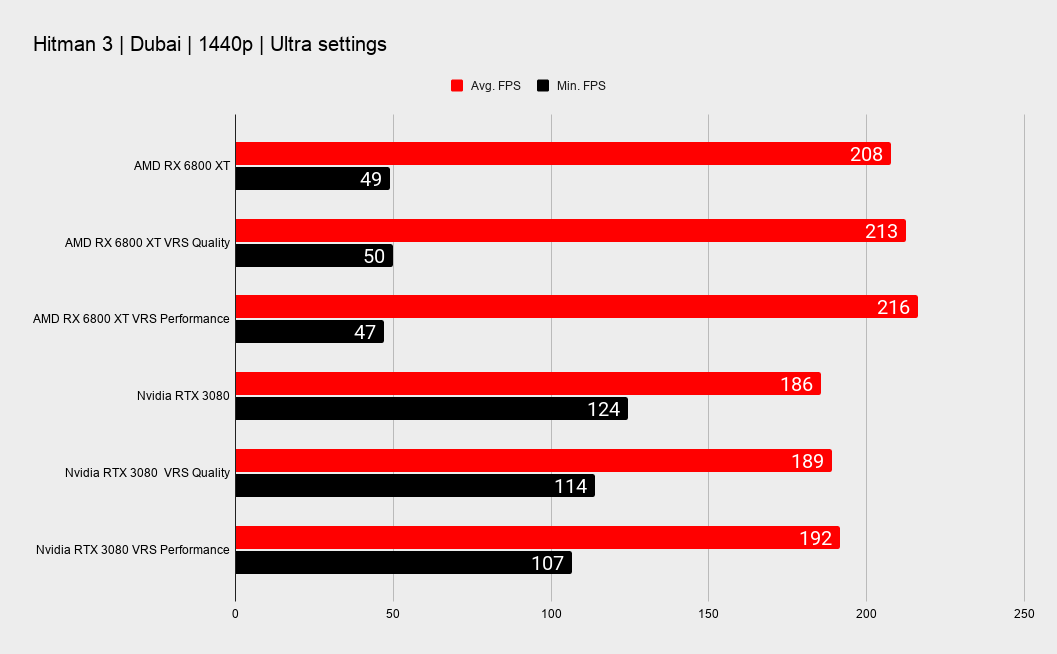

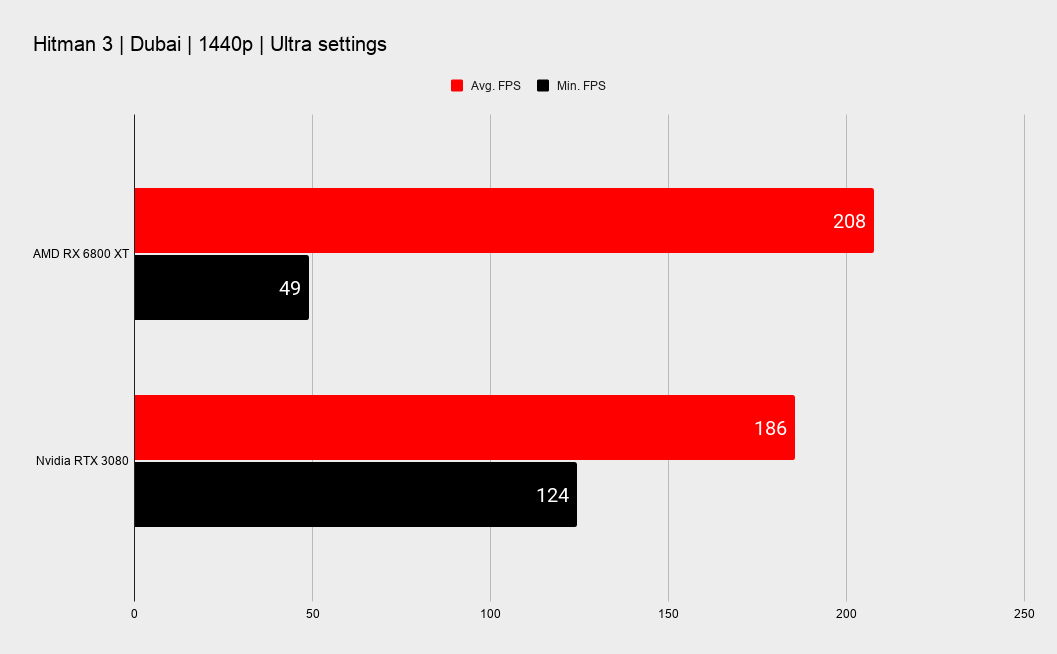

In the Dubai test, which leans more on the GPU, the difference is more pronounced, though still very slight in the grand scheme of things.

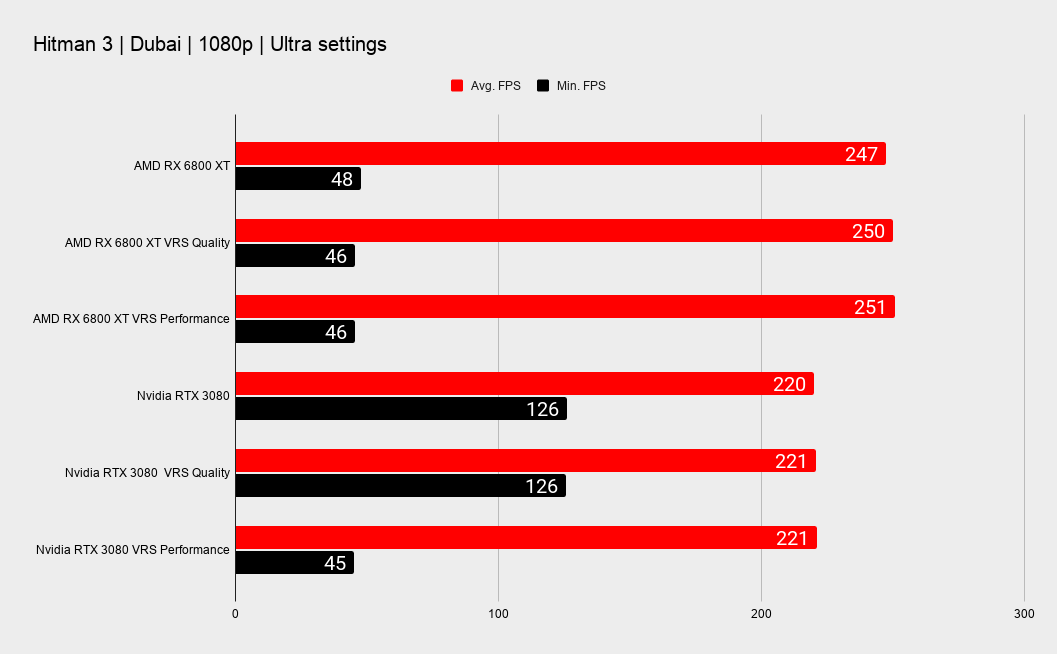

At 4K, VRS gives the AMD GPU a slightly bigger boost than it gives the Nvidia chip, though the gains are essentially the same at 1440p. At 1080p there is again nothing between them, though there is also essentially nothing to be gained from enabling VRS here, because frame rates are already well over 200fps!

On the whole the Performance setting does deliver higher frame rates, but not noticeably more than the Quality setting, and if you peer closely at the screenshots you can see where the image isn't as sharp. In the VRS Performance screenshot above, look at the books on the shelves: they're noticeably less sharp than with VRS set to Quality or Off.

If you're feeling the need for an extra few frames per second out of your graphics card, then enabling VRS Quality could be worth a shot. But you will arguably get more performance out of Hitman 3 by dropping other settings that have just as little impact on fidelity, such as shadows and screen space ambient occlusion.

Not all GPUs are capable of using VRS, either. AMD has only enabled support in its latest RDNA 2 graphics cards, so only the RX 6000-series need apply. For Nvidia it's anything from Turing onwards, so GTX 16-series or RTX 20-series and up.

Hitman 3 PC performance: AMD vs. Nvidia

Now, I will acknowledge this isn't exactly representative of every AMD and Nvidia card available, especially given there are practically no AMD or Nvidia cards available right now. But that's for another rant.

Here we're specifically looking at the latest GPU architectures, in the flagship graphics cards from the two graphics card giants, because they are essentially on par with each other, and their results should indicate which GPUs are likely to win their respective battles further down the stack. And on that front it looks good for anyone sticking their Hitman 3 flag to the Radeon mast.

Across the board the RX 6800 XT performs considerably better than the RTX 3080, and not just a few fps here and there. At 4K, the high-spec resolution where the flagship Ampere GPU has looked the superior silicon so far, the RX 6800 XT comes out 14 percent faster.

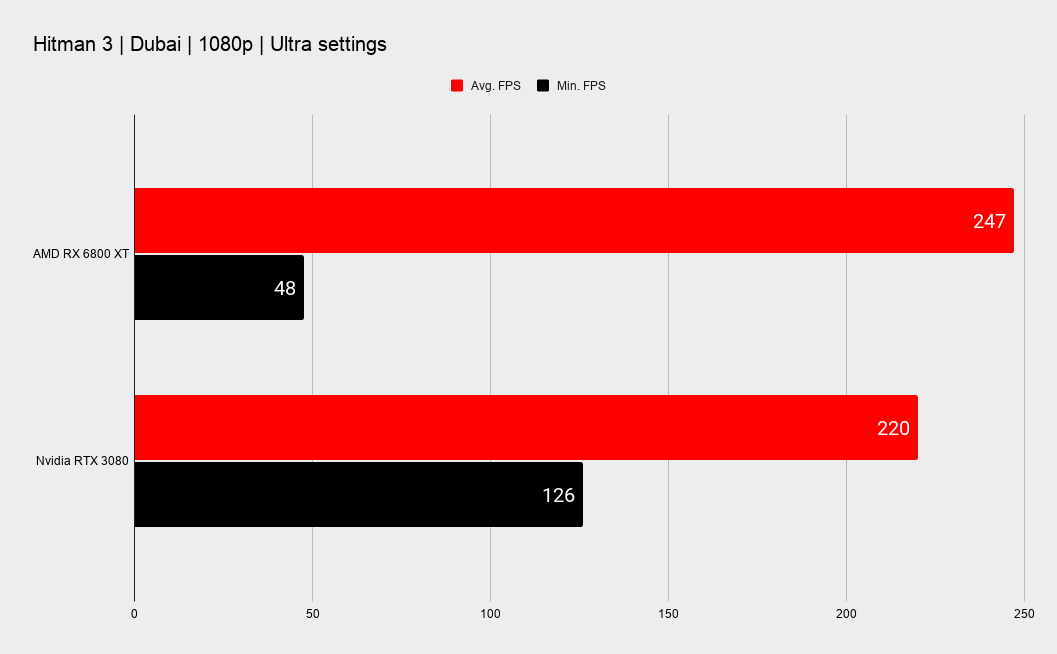

At 1440p the difference is less stark, but only slightly: a 12 percent performance delta between the AMD and Nvidia cards works out at a difference of around 22fps. At the more common 1080p resolution (though arguably a waste of such top-end GPUs), the AMD RDNA 2 card is again some 12 percent faster.
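
The percentages and frame-rate gaps above are tied together by simple arithmetic. This short Python sketch shows the calculation; the function names are hypothetical, and plugging in the rounded 1440p figures (a 12 percent lead worth around 22fps) only gives a rough back-of-the-envelope estimate, not our measured results.

```python
def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster card A is than card B, as a percentage of B's frame rate."""
    return (fps_a / fps_b - 1) * 100


def implied_baseline(delta_fps: float, percent: float) -> float:
    """Recover card B's frame rate from the absolute gap and the percentage it represents."""
    return delta_fps / (percent / 100)


# Rounded 1440p figures from the text: a 12 percent lead worth around 22fps.
base = implied_baseline(22, 12)
print(round(base))                                # 183: roughly the slower card's fps
print(round(base + 22))                           # 205: roughly the faster card's fps
print(round(percent_faster(base + 22, base), 1))  # 12.0
```

Because both inputs are rounded, treat the implied frame rates as ballpark figures only.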

Where Nvidia can claim something of a win is in the minimum frame rates reported by the benchmarks. While the AMD card is demonstrably faster on average, it shows a greater propensity for dropping to a particularly low ebb. These instances are few, though, and the overall benchmarks still feel smooth to my eye.

I also ran some tests using FrameView to visualise frame times on a frame-by-frame basis, and even then the RX 6800 XT drops to a lower 99th percentile frame rate than the Nvidia GPU.
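
FrameView logs a time for every rendered frame, which is what lets you see the dips that an average hides. Assuming you've already pulled those per-frame times (in milliseconds) out of the log, this Python sketch shows one common way to turn them into an average and a 99th percentile frame rate. The nearest-rank percentile method and both sets of frame times are illustrative assumptions, not FrameView's exact calculation or our data; they simply show how one card can be faster on average yet post a lower 99th percentile figure.

```python
import math
from statistics import fmean


def percentile_frame_time(frame_times_ms: list[float], percentile: int = 99) -> float:
    """Nearest-rank percentile of a list of frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    ordered = sorted(frame_times_ms)
    rank = math.ceil(percentile * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def summarise(frame_times_ms: list[float]) -> dict[str, float]:
    """Average fps (total frames / total time) and the 99th percentile frame rate."""
    return {
        "avg_fps": 1000 / fmean(frame_times_ms),
        "p99_fps": 1000 / percentile_frame_time(frame_times_ms, 99),
    }


# Hypothetical frame times, not measured data.
card_a = [8.0] * 98 + [30.0, 30.0]  # quicker most of the time, with two big spikes
card_b = [9.0] * 100                # a touch slower, but perfectly consistent

for name, times in (("A", card_a), ("B", card_b)):
    s = summarise(times)
    print(name, round(s["avg_fps"], 1), round(s["p99_fps"], 1))
# A 118.5 33.3
# B 111.1 111.1
```

A couple of long frames barely move the average, but they land right at the 99th percentile mark and drag that figure down hard.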

It hasn't always looked like this, however. With the launch-day GPU drivers from the two manufacturers, the performance delta was very different: at 1080p AMD still held a fairly considerable lead, but at 1440p there was almost nothing in it, and at 4K Nvidia's RTX 3080 was nudging ahead.

But a recent AMD Radeon driver release has changed the landscape considerably, and Nvidia has assured me it has nothing in the works for its latest driver release that will affect Hitman 3 performance on that scale. That just shows what AMD's driver team is capable of, and highlights where some of that delicious Radeon fine wine might come to the table. There has long been a bit of a stigma attached to AMD's GPU drivers, but the team has played a blinder here. So is this a taste of what's to come with new games coming out this year?

Maybe. Just maybe. At least for a GPU architecture that is right at the heart of the very latest generation of games consoles anyway.

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.