The 2012 source code for AlexNet, the precursor to modern AI, is now on GitHub thanks to Google and the Computer History Museum

One of the first modern neural networks makes its way to GitHub.

AI is one of the biggest and most all-consuming zeitgeists I've ever seen in technology. I can't even search the internet without being served several ads for potential AI products, including the one that's still begging for permission to run my devices. AI may be everywhere we look in 2025, but the kind of neural networks now associated with it are a bit older. This kind of AI was actually being dabbled with as far back as the 1950s, though it wasn't until 2012 that it kicked off the current generation of machine learning with AlexNet, an image recognition neural network whose code has just been released as open source by Google and the Computer History Museum.

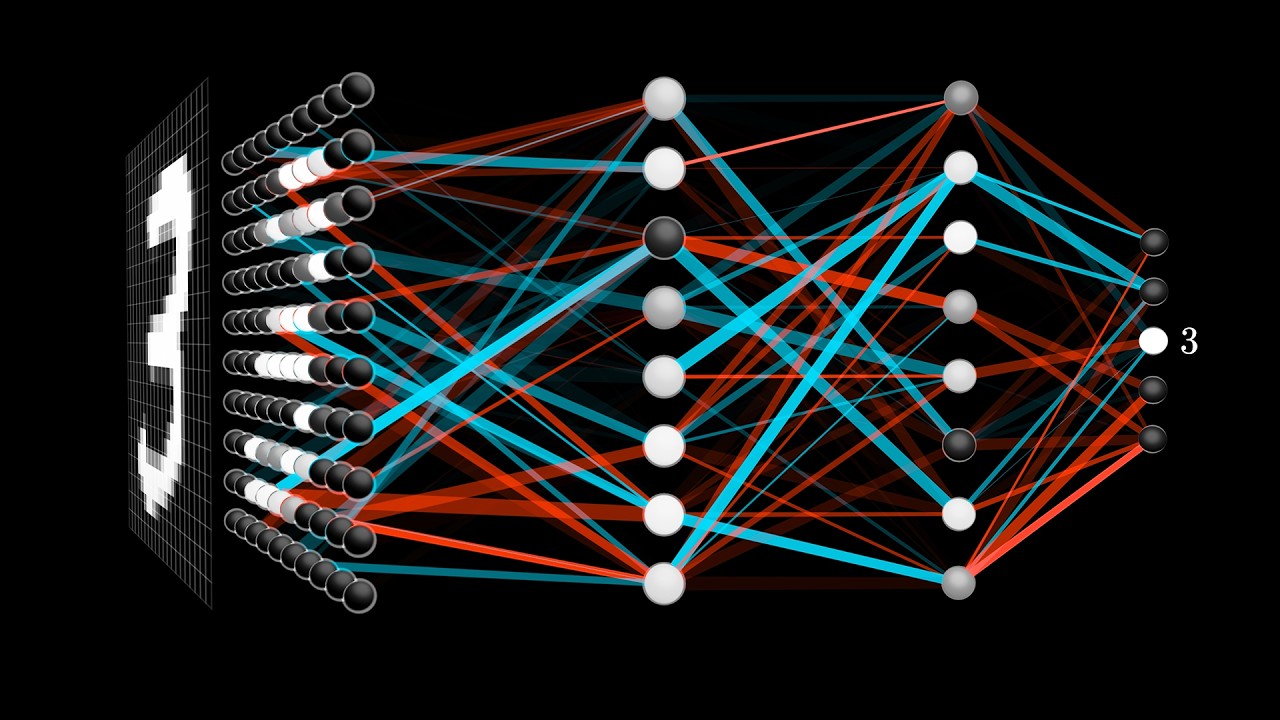

We've seen many different ideas of AI over the years, but generally the term refers to computers or machines with self-learning capabilities. While science-fiction writers have explored the concept since the 1800s, it's far from being fully realised. Today most of what we call AI refers to language models and machine learning, as opposed to independent thought or reasoning by a machine. Deep learning, the technique behind much of it, essentially involves feeding computers large sets of data to train them on specific tasks.

The idea of deep learning isn't new either. In the 1950s, researchers like Frank Rosenblatt at Cornell had already built the perceptron, a simplified neural network based on similar foundational ideas to what we have today. Unfortunately the technology hadn't caught up to the idea, and it was largely dismissed. It wasn't until the 1980s that machine learning really came back into the spotlight.

In 1986, Geoffrey Hinton, David Rumelhart and Ronald J. Williams published a paper on backpropagation, an algorithm that works out how much each weight in a neural network contributed to its error (the cost), so those weights can be adjusted to reduce it. They weren't the first to raise the idea, but they were the first to popularise it. Several researchers, including Frank Rosenblatt, had proposed backpropagation for machine learning as early as the '60s, but it couldn't really be implemented at the time. Many also describe it as a machine learning application of the chain rule, for which the earliest written attribution is to Gottfried Wilhelm Leibniz in 1676.
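To make the chain rule connection a little more concrete, here's a minimal sketch in Python of backpropagation on a toy network with just two weights. It's purely illustrative and assumes a single input, one sigmoid "hidden" unit and a squared-error cost; the function names and numbers are hypothetical choices of ours, not taken from the 1986 paper or from AlexNet's code.

```python
import math


def sigmoid(z: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(w1: float, w2: float, x: float) -> tuple[float, float]:
    """Toy network: x -> h = sigmoid(w1 * x) -> y = w2 * h."""
    h = sigmoid(w1 * x)
    y = w2 * h
    return h, y


def backprop(w1: float, w2: float, x: float, target: float) -> tuple[float, float, float]:
    """Return the cost and its gradients with respect to w1 and w2."""
    h, y = forward(w1, w2, x)
    cost = 0.5 * (y - target) ** 2
    # Chain rule, applied backwards from the cost towards each weight.
    dcost_dy = y - target              # d(cost)/dy
    dcost_dw2 = dcost_dy * h           # dy/dw2 = h
    dcost_dh = dcost_dy * w2           # dy/dh = w2
    dh_dz = h * (1.0 - h)              # derivative of the sigmoid
    dcost_dw1 = dcost_dh * dh_dz * x   # dz/dw1 = x
    return cost, dcost_dw1, dcost_dw2


def train(w1=0.5, w2=0.5, x=1.0, target=2.0, lr=0.5, steps=200):
    """Plain gradient descent: nudge each weight against its gradient."""
    costs = []
    for _ in range(steps):
        cost, g1, g2 = backprop(w1, w2, x, target)
        costs.append(cost)
        w1 -= lr * g1
        w2 -= lr * g2
    return w1, w2, costs
```

Calling `train()` repeatedly runs the forward pass, pushes the error backwards through each step, and adjusts both weights until the output lands near the target. AlexNet does the same thing in principle, just with tens of millions of weights instead of two.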

Despite promising results, computers still weren't fast enough to make this kind of deep learning viable. To bring AI up to the level we see today, researchers needed a heap more data to train their networks on, and far more computational power to process it.

In 2006, professor Fei-Fei Li, now at Stanford University, began building ImageNet. Li envisioned a database that held an image for every English noun, so she and her students began collecting and categorising photographs. They used WordNet, an established database of words and the relationships between them, to organise the images. The task was so huge that it was eventually outsourced to freelancers, and by 2009 ImageNet had become by far the largest dataset of its kind.

Around the same time, Nvidia was developing CUDA, a programming platform for its GPUs. This is the same company that just went hard on AI at GTC 2025, and is even using the tech to help people learn sign language. With CUDA, these powerful chips could be programmed far more easily to tackle tasks other than graphics. This allowed researchers to start implementing neural networks in areas like speech recognition, and actually see success.

In 2011, two of Geoffrey Hinton's students, Ilya Sutskever (who went on to co-found OpenAI) and Alex Krizhevsky, began work on what would become AlexNet. Sutskever saw the potential from their previous work and convinced Krizhevsky to use his mastery of squeezing performance out of GPUs to train this neural network, while Hinton acted as principal investigator. Over the next year, Krizhevsky trained, tweaked, and retrained the system on a single computer with two Nvidia GPUs, using his own CUDA code. In 2012 the three released a paper, which Hinton also presented at a computer vision conference in Florence.

Hinton summarised the experience to CHM as “Ilya thought we should do it, Alex made it work, and I got the Nobel Prize.”

It didn't make much noise at the time, but AlexNet completely changed the direction of modern AI. Before AlexNet, neural networks weren't commonplace in AI research. Now, they're the framework for almost anything touting the name AI, from robot dogs with nervous systems to miracle-working headsets. As computers get more powerful, we're only set to see more of it.

Given how huge AlexNet has been for AI, CHM releasing the source code is not only a wonderful nod, but also a prudent way of making sure this information is freely available to all. To make sure it was done fairly, correctly and, above all, legally, CHM reached out to AlexNet's namesake, Alex Krizhevsky, who put them in touch with Hinton, who was then working at Google after it acquired his startup, DNNresearch. Now considered one of the fathers of machine learning, Hinton was able to connect CHM with the right team at Google, which began a five-year negotiation process before the release.

This may mean the code, available to all on GitHub, is a somewhat sanitised version of AlexNet, but it's also the authentic one. There are several repositories with similar or even identical names around, but they're likely to be homages or reinterpretations. This upload is described as the "AlexNet source code as it was in 2012", so it should serve as an interesting marker along the path to AI, and whatever form it learns to take in the future.

Hope’s been writing about games for about a decade, starting out way back when on the Australian Nintendo fan site Vooks.net. Since then, she’s talked far too much about games and tech for publications such as Techlife, Byteside, IGN, and GameSpot. Of course there’s also here at PC Gamer, where she gets to indulge her inner hardware nerd with news and reviews. You can usually find Hope fawning over some art, tech, or likely a wonderful combination of them both and where relevant she’ll share them with you here. When she’s not writing about the amazing creations of others, she’s working on what she hopes will one day be her own. You can find her fictional chill out ambient far future sci-fi radio show/album/listening experience podcast right here. No, she’s not kidding.
