Nvidia might be considering using sockets for its next AI mega GPUs but that's not going to happen with its GeForce graphics cards

For once, it looks like Nvidia is taking a leaf from AMD's book on how to do chips.

Desktop PCs, workstations, and servers have a lot in common, not least that the CPU is nearly always mounted in a mechanical socket. However, when it comes to big AI servers, especially those using Nvidia chips, there isn't a socket in sight, making upgrades or repairs more complicated. According to one report, though, Nvidia could well change its mind on that.

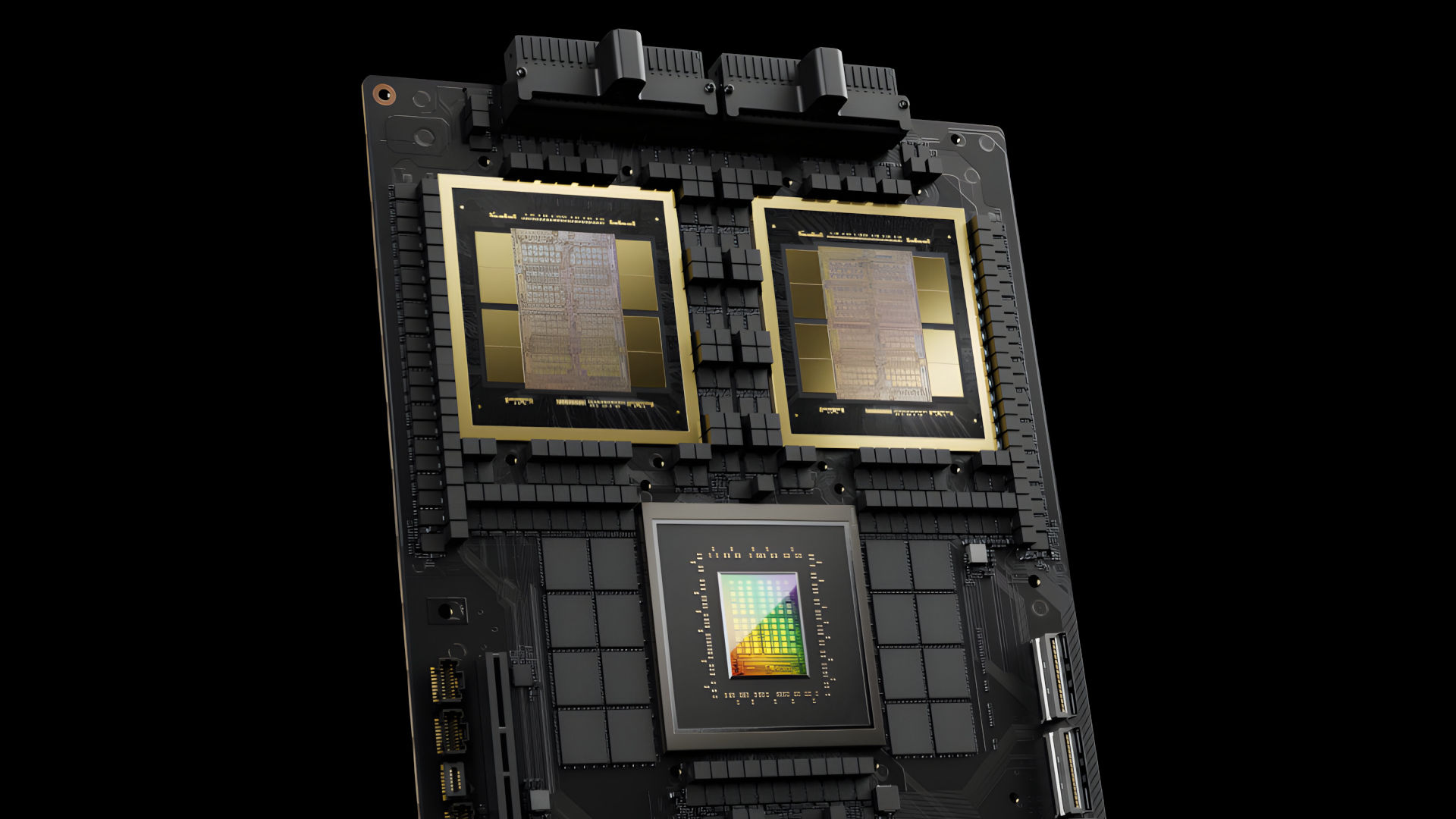

The report in question comes from Trendforce (via Chiphell), which claims that for its next series of Blackwell AI chips, the B300 lineup, Nvidia will switch from using directly mounted processors to a socketed design. Although direct mounting provides the best possible performance, it does make maintenance and general servicing a pain in the neck.

Trendforce also points out that the change would benefit the manufacturers who build Nvidia AI hardware, as it would reduce the amount of surface-mounting machinery required or, at the very least, reduce the time spent on the equipment already used to make Nvidia's systems.

AMD already uses a socket for its Instinct MI300A monster chips, specifically an SH5 socket, which looks suspiciously like its SP5 socket for EPYC server CPUs. Intel, on the other hand, follows Nvidia's line of thinking with its Gaudi 3 AI accelerators, but since there aren't a huge number of companies using that processor, there's no pressure on Intel to make it socketed.

Of course, none of this really means anything to the general consumer, and the one thing you can be certain about is that you're not going to see a socketed GPU any time soon, if ever. One reason for this is that AMD and Nvidia's mega AI accelerators have RAM on the same package as the processing chiplets, so there's no need to worry about replacing the memory when one needs to swap out the accelerator.

Discrete graphics cards have RAM soldered to the circuit board and although there have been consumer GPUs with on-package VRAM in the past (e.g. the Radeon VII), the cost of such systems compared to the use of high-speed GDDR6 makes it uneconomical to do this at scale these days.

You might then wonder why not have the GPU and VRAM both socketed, just as with the CPU and system memory in your desktop PC. Apart from reducing the overall performance of the graphics card's memory system, it would increase the cost of manufacturing the card.

Given how expensive graphics cards are these days, I'm not sure anyone would want to absorb that additional cost just to have the option to upgrade the GPU and RAM while keeping the same circuit board. Memory slots in motherboards follow an agreed standard, too, and CPUs are designed around that standard.

Nothing like this exists for GPUs, and I can't see AMD, Intel, and Nvidia ever agreeing on a VRAM socket design. It would also make GPUs unnecessarily complicated.

Every AM4 Ryzen processor has a dual-channel, 128-bit wide memory controller, whereas Nvidia's current RTX 40-series GPUs range from 96 bits through to 384 bits. Accommodating all that in a socket system is just too complex and thus too expensive.
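To put rough numbers on that spread, here is a minimal Python sketch. It assumes each GDDR memory package connects over a 32-bit interface, which is the common arrangement but not something the report itself states. The function name `memory_packages` is purely illustrative, and the 192-bit entry is just a midpoint added for comparison alongside the 96-bit and 384-bit extremes.

```python
# Rough sketch: how many memory packages a GPU needs for a given bus width.
# Assumption: every GDDR package uses a 32-bit interface (typical, but not
# taken from the report).
GDDR_PACKAGE_WIDTH_BITS = 32


def memory_packages(bus_width_bits, package_width_bits=GDDR_PACKAGE_WIDTH_BITS):
    """Return the number of memory packages needed to fill the bus."""
    if bus_width_bits <= 0 or bus_width_bits % package_width_bits != 0:
        raise ValueError("bus width must be a positive multiple of the package width")
    return bus_width_bits // package_width_bits


if __name__ == "__main__":
    # 96 and 384 bits are the extremes quoted above; 192 is an illustrative midpoint.
    for width in (96, 192, 384):
        print(f"{width}-bit bus: {memory_packages(width)} memory packages")
```

Under that assumption, a single socket standard would have to cope with anything from three to twelve memory packages, whereas an AM4 board only ever has to wire up the same two channels.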

Perhaps one day, in the dim and distant future, we'll get discrete GPUs in a socket but for now, it's only the humble CPU and cash-cow AI chips that are.

Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?