Samsung and SK Hynix spend billions more on DRAM production to feed the never-ending hunger for AI servers

This could potentially be good news for PC gaming but not while the tech world loves AI.

For as long as it has existed, the DRAM industry has cycled between ramping up production and scaling it back to boost profits. But now the two largest manufacturers are set to spend billions of dollars to significantly increase RAM chip output, all to meet the never-ending demand for AI computers.

Samsung and SK Hynix manufacture all kinds of DRAM, be it DDR4 or DDR5 to go into your PC's motherboard, as well as GDDR6 (and now GDDR7) for your graphics card. But when it comes to the mega-processors, like AMD's Instinct MI300 and Nvidia's H100, the memory of choice is so-called High Bandwidth Memory (HBM).

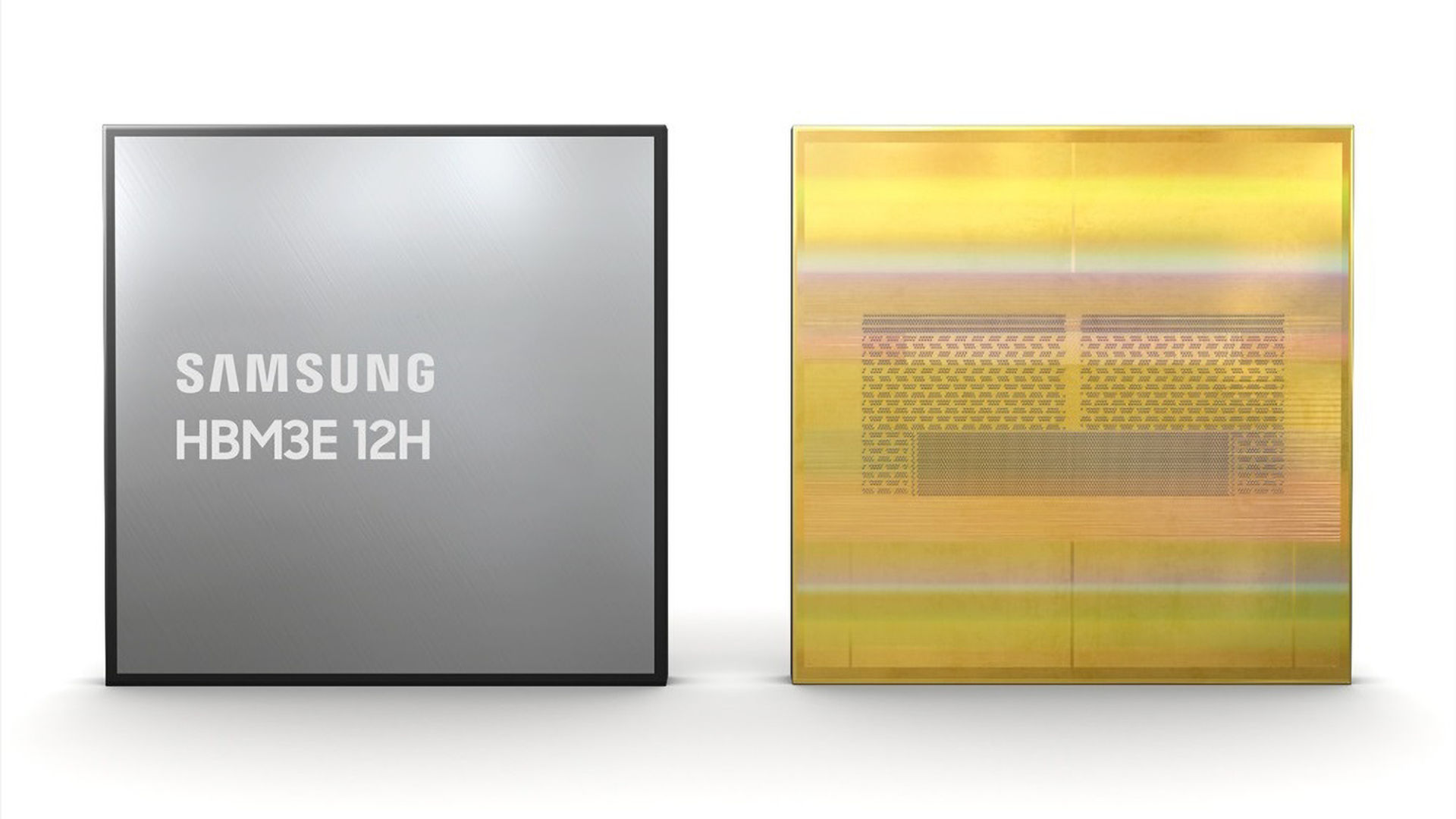

What makes HBM special is that it comprises multiple DRAM chips all stacked together, with power and data transmitted through wires that run vertically through the die (called 'through-silicon vias' or TSVs, for short). Having lots of memory accessed in parallel massively boosts the read/write bandwidth.
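To put a rough number on that parallelism, here is a minimal sketch of the usual peak-bandwidth arithmetic: interface width multiplied by per-pin data rate. The figures are illustrative assumptions rather than anything from the report: a 1024-bit interface at 6.4 Gb/s per pin for one HBM3 stack, and a 32-bit interface at 16 Gb/s per pin for a single GDDR6 chip. The function name `peak_bandwidth_gbs` is made up for this example.

```python
def peak_bandwidth_gbs(bus_width_bits: int, pin_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s.

    Each data pin carries pin_rate_gbps gigabits per second,
    so total gigabits = width * rate, and dividing by 8 gives gigabytes.
    """
    return bus_width_bits * pin_rate_gbps / 8


# Assumed, illustrative figures (not taken from the article):
hbm3_stack = peak_bandwidth_gbs(bus_width_bits=1024, pin_rate_gbps=6.4)
gddr6_chip = peak_bandwidth_gbs(bus_width_bits=32, pin_rate_gbps=16.0)

print(f"One HBM3 stack: {hbm3_stack:.1f} GB/s")
print(f"One GDDR6 chip: {gddr6_chip:.1f} GB/s")
```

Under those assumptions, each HBM pin is slower than a GDDR6 pin, but the much wider interface more than makes up for it.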

Samsung's HBM3 Icebolt memory stacks up to 12 layers of its latest 10 nm-class DRAM dies, for a total of 16 or 24 GB per stack. The AMD Instinct MI300 has eight HBM3 modules (128 GB of GPU memory), which means there could be as many as 96 DRAM dies in just one accelerator. That is why Samsung and SK Hynix are spending a bucketload of cash on increasing output, according to a report by The Korean Economic Daily.
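The die count is simple multiplication, sketched below using the article's own numbers: eight modules, 128 GB in total, and 12 layers per module. Applying the 12-layer figure to every module is an assumption; a stack with fewer layers would lower the total. The variable names are just for illustration.

```python
STACKS = 8            # HBM3 modules on the accelerator (from the article)
TOTAL_GB = 128        # total GPU memory (from the article)
DIES_PER_STACK = 12   # assumed: every module is a full 12-layer stack

gb_per_stack = TOTAL_GB / STACKS        # capacity of each module
total_dies = STACKS * DIES_PER_STACK    # DRAM dies in one accelerator

print(f"{gb_per_stack:.0f} GB per stack, {total_dies} DRAM dies per accelerator")
```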

Now, at this point, you might be thinking something along the lines of "Well, that's all interesting but that's just for AI, not PC gaming." But here's the thing: the DRAM dies used for HBM can theoretically also be used in other applications, such as system RAM or graphics card VRAM.

HBM has been in the latter before: AMD was the first manufacturer to use HBM with a GPU, in its Radeon R9 Fury series, and then again with the RX Vega 64 and its siblings. The last gaming graphics card to sport HBM was the Radeon VII and, sadly, we've not seen it since, as the outright speed of GDDR6X and GDDR7 offers sufficient bandwidth.

But if the market becomes awash with spare dies for HBM modules, we may see it return to the high-end market, although it would just be for Nvidia cards, as AMD is backing out of that sector for the time being.

It's also possible that, should the AI bubble pop, Samsung and SK Hynix could end up with a veritable mountain of DRAM dies that are no longer in demand and could be repurposed for other products. I don't know how well memory intended for HBM modules will work as, say, standard system RAM, but you never know: we could be looking at a return to very low memory prices again.

However, as the tech world is still utterly hell-bent on AI-ing everything, it's unlikely that PC gaming will see any benefit from increased DRAM production just yet.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long-defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open world grindy RPGs, but who isn't these days?