Will there ever come a point with AI where there are no traditionally rendered frames in games? Perhaps surprisingly, Jen-Hsun says 'no'

He then goes on to speak rather poetically about inspirational pixels.

The DLSS 4 Multi Frame Generation feature of the new RTX Blackwell cards has created a situation where one frame can be generated using traditional GPU computation, while the subsequent three frames can be generated entirely by AI. That's a hell of an imbalance, so does one of the people responsible for making this AI voodoo a reality think we'll get to a point where there are no traditionally rendered frames?

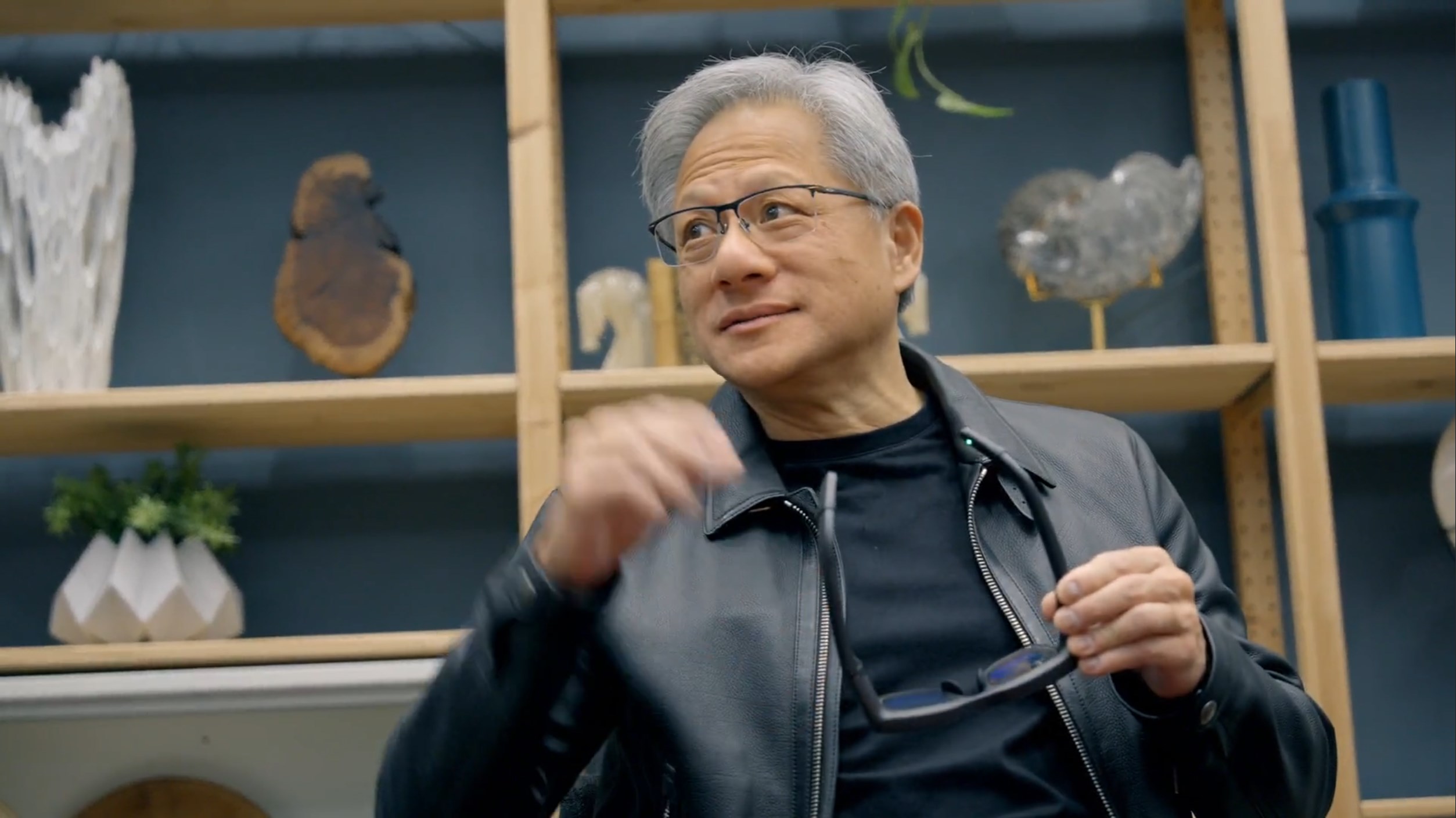

Jen-Hsun Huang, Nvidia's CEO, and one of the biggest proponents of AI in just about damned near everything, says: no.

During a Q&A session today at CES 2025, Jen-Hsun was asked whether we're likely to get purely AI-generated game frames as the entirety of a game pipeline. He was unequivocal in his assertion to the contrary, stating that it's vital for AI to be given grounding, to be given context, in order to build out its world. In other words, AI still needs something to build from.

In gaming terms Huang suggests that it works in the same way as we give context to ChatGPT.

"The context could be a PDF, it could be a web search… and the context in video games has to not only be relevant story-wise, but it has to be world and spatially relevant. And the way you condition, the way you give it context is you give it early pieces of geometry, or early pieces of textures it could up-res from."

He then brings it back around to DLSS 4 and Multi Frame Generation, and the example of one rendered 4K frame and three further 4K game frames.

"Out of 33 million pixels," says Huang, "we render two [million]. Isn't that a miracle? We literally rendered two and we generated 31. The reason why that's a big deal is because those two million pixels have to be rendered precisely and from that conditioning we can generate the other 31.

"Those two million pixels can be rendered beautifully using tons of computation because the computing that we would have applied to 33 million pixels we now channel it directly at two. And so those two million pixels are incredibly beautiful and they inspire and form the other 31."
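
Huang's numbers are easy enough to sanity-check. Here's a quick back-of-the-envelope sketch in Python, with one big assumption that isn't in the quote itself: that the single traditionally rendered frame is drawn at 1920x1080 and upscaled to 4K, which is the only way "two million" rendered pixels squares with a 4K output. The function and constant names are made up for illustration.

```python
# Back-of-the-envelope check of the pixel counts in Huang's quote.
# Assumption (not stated in the article): the one traditionally rendered
# frame is drawn at 1920x1080 and then upscaled to a 3840x2160 output.

OUTPUT_W, OUTPUT_H = 3840, 2160   # 4K output resolution
RENDER_W, RENDER_H = 1920, 1080   # assumed internal render resolution
FRAMES_PER_GROUP = 4              # one rendered frame plus three generated frames


def pixel_budget(out_w, out_h, render_w, render_h, frames):
    """Return (displayed, rendered, generated) pixel counts for one frame group."""
    displayed = out_w * out_h * frames   # every pixel that reaches the screen
    rendered = render_w * render_h       # pixels computed the traditional way
    generated = displayed - rendered     # everything else is AI output
    return displayed, rendered, generated


displayed, rendered, generated = pixel_budget(
    OUTPUT_W, OUTPUT_H, RENDER_W, RENDER_H, FRAMES_PER_GROUP
)

print(f"displayed: {displayed / 1e6:.1f} million")
print(f"rendered:  {rendered / 1e6:.1f} million")
print(f"generated: {generated / 1e6:.1f} million")
```

Under that assumption the totals come out at roughly 33.2 million displayed, 2.1 million rendered, and 31.1 million generated pixels, which lines up with the 33, two, and 31 in the quote. Put another way, one pixel in every 16 is rendered traditionally.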

And that's kinda the most lovely way I've ever heard someone speak about upscaling and frame generation, as inspiration for AI-generated pixels. Aww.

Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.