The Last of Us Part 2 proves that 8 GB of VRAM can be enough, even at 4K with maximum settings, so why aren't more games using the same clever asset-streaming trick?

Any performance issues you have in TLOU2 aren't down to a lack of GPU memory

When Sony's masterpiece The Last of Us Part 1 appeared on the humble PC two years ago, I hoped it would become a watershed moment in the history of console ports. Well, it was, but for all the wrong reasons—buggy and unstable, it hogged your CPU and GPU like nothing else, and most controversially of all, it tried to eat up way more VRAM than your graphics card has. It's fair to say that TLOU1's watershed moment cemented the whole '8 GB of VRAM isn't enough' debate.

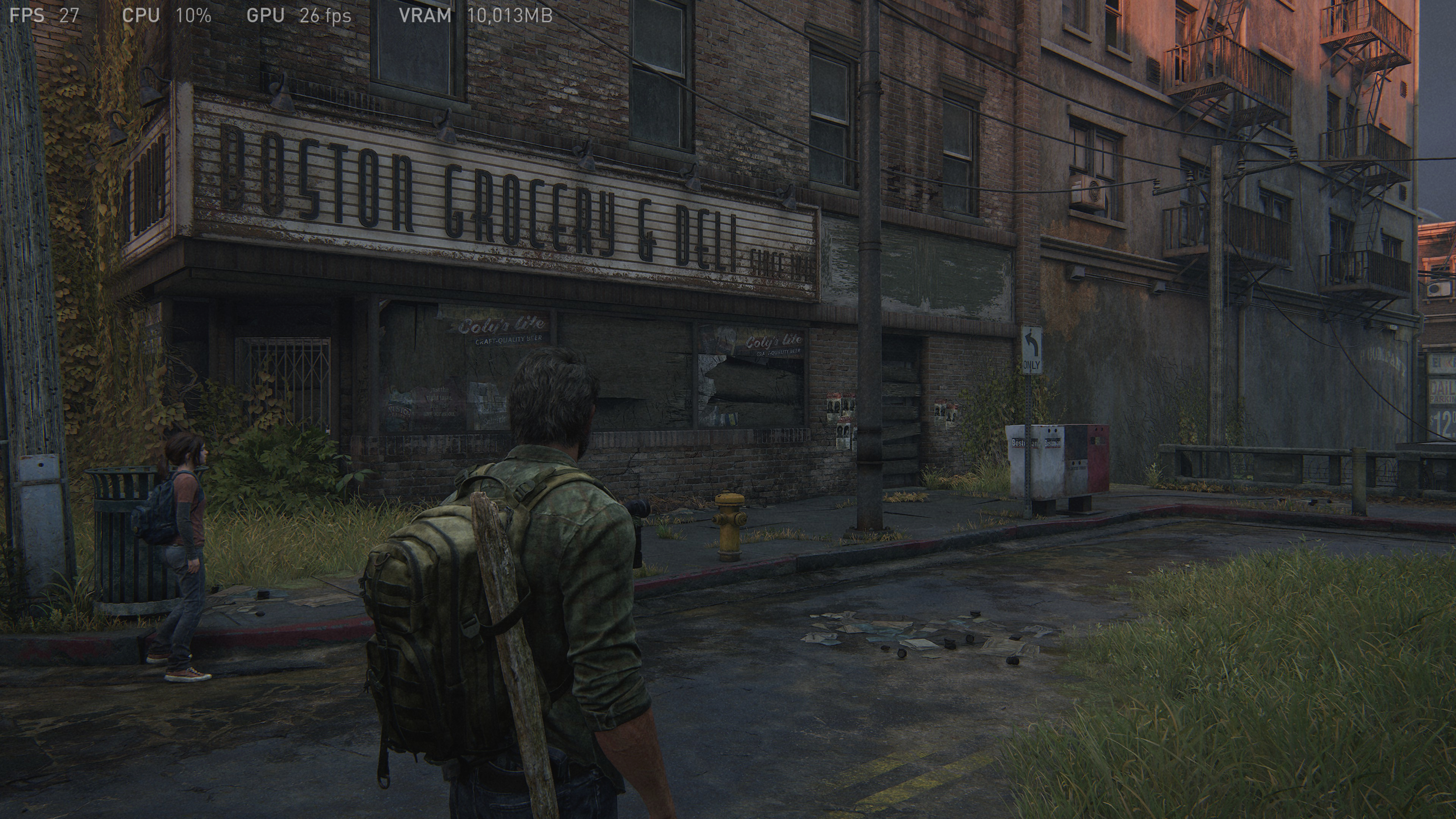

Most of those issues were eventually resolved via a series of patches, but like so many big-budget, mega-graphics games, if you fire it up at 4K on Ultra settings, the game will happily let you use more VRAM than you actually have. The TLOU1 screenshot below is from a test rig using an RTX 3060 Ti, with 8 GB of memory, showing the built-in performance HUD; I've confirmed that VRAM usage figure with other tools and the game is indeed trying to use around 10 GB of VRAM.

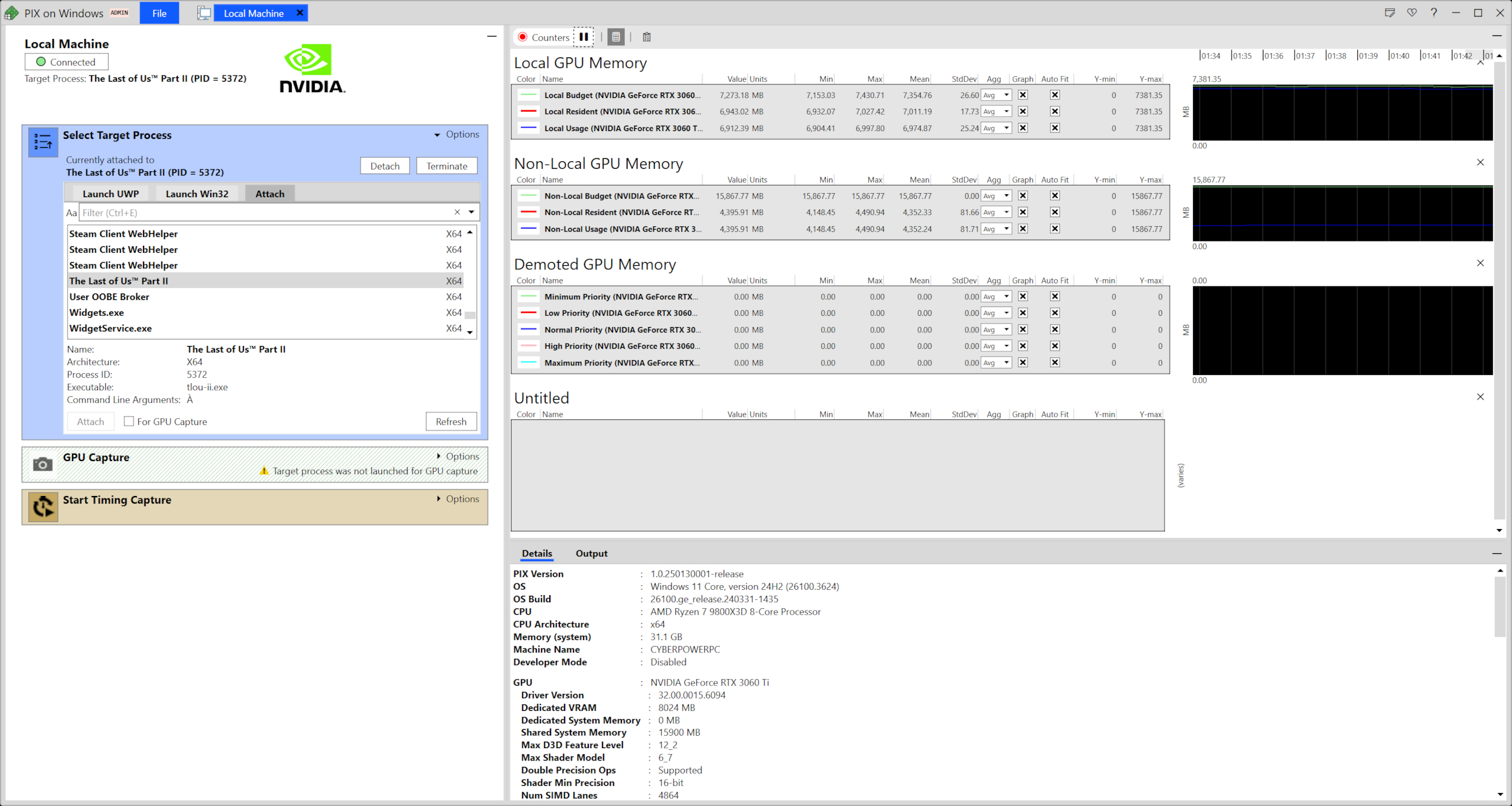

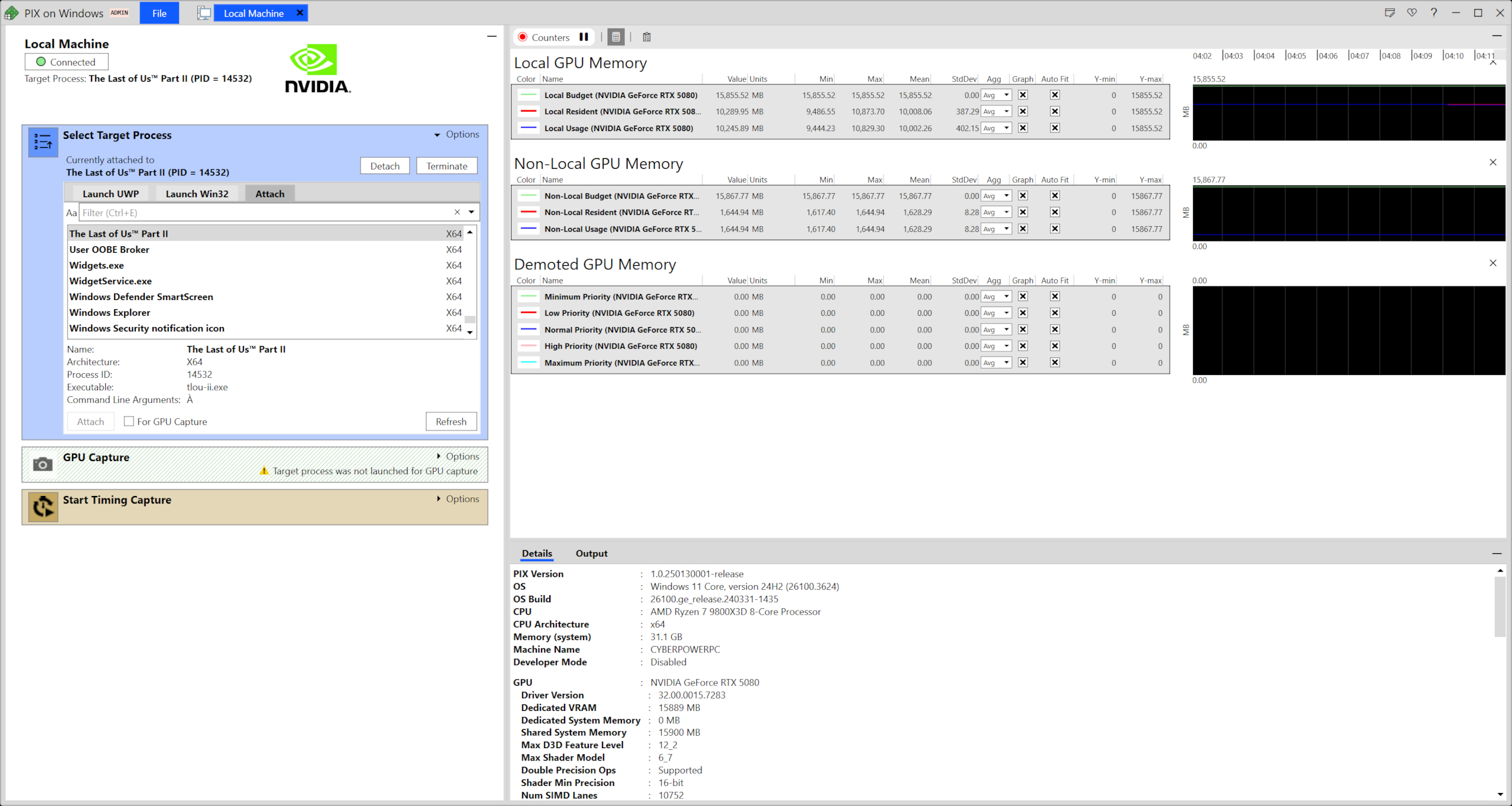

So when I began testing The Last of Us Part 2 Remastered a couple of weeks ago, the first thing I monitored after progressing through enough of the game was the amount of graphics memory it was trying to allocate and actually use. To do this, I used Microsoft's PIX on Windows, a tool for developers that lets them analyse in huge detail exactly what's going on underneath their game's hood, in terms of threads, resources, and performance.

To my surprise, I discovered two things: (1) TLOU2 doesn't over-eat VRAM like Part 1 did and (2) the game almost always uses 80% to 90% of the GPU's memory, irrespective of what resolution and graphics settings are being used. You might find that a little hard to believe but here's some evidence for you:

The PIX screenshots below show the amount of GPU local and non-local memory being used in TLOU2, in a CyberPowerPC Ryzen 7 9800X3D rig, using RTX 5080 and RTX 3060 Ti graphics cards. The former has 16 GB of VRAM, whereas the latter has 8 GB of VRAM. In both cases, I ran the game at 4K using maximum quality settings (i.e. the Very High preset, along with 16x anisotropic filtering and the highest field of view), along with DLAA and frame generation enabled (DLSS for the 5080, FSR for the 3060 Ti).

Note that in both cases, the amount of local memory being used doesn't exceed the actual amount of VRAM on each card, even though they're both running with the same graphics settings applied. Of course, that's how any game should handle memory, but after the TLOU1 debacle, it was good to see it all resolved for Part 2.

If you look carefully at the PIX screenshots, you'll notice that the RTX 3060 Ti uses more non-local memory than the RTX 5080, specifically 4.25 GB versus 1.59 GB. Non-local, in this instance, refers to system memory, and what's using that chunk of RAM on the GPU's behalf is the game's asset streaming system. Since the 3060 Ti only has 8 GB of VRAM, its streaming buffer needs to be larger than the RTX 5080's.
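To make the idea concrete, here's a minimal Python sketch of a budget-capped asset pool. It is not the game's actual implementation: the `ResidencyManager` class, the least-recently-used eviction policy, and the asset names and sizes are all assumptions made purely for illustration. The point it shows is that the same set of requests can be served under two different VRAM budgets, with the smaller budget simply pushing more of the working set into system memory.

```python
from collections import OrderedDict


class ResidencyManager:
    """Toy model of a budget-capped asset pool (hypothetical, not the game's code)."""

    def __init__(self, vram_budget_mb):
        self.vram_budget_mb = vram_budget_mb
        self.vram = OrderedDict()  # name -> size in MB, least recently used first
        self.system = {}           # name -> size in MB, staged in system memory

    def vram_used_mb(self):
        return sum(self.vram.values())

    def system_used_mb(self):
        return sum(self.system.values())

    def request(self, name, size_mb):
        """Make an asset resident in VRAM without ever exceeding the budget."""
        if size_mb > self.vram_budget_mb:
            raise ValueError(f"{name} is larger than the whole VRAM budget")
        if name in self.vram:
            self.vram.move_to_end(name)  # mark as most recently used
            return
        self.system.pop(name, None)      # promote it if it was staged in system RAM
        # Evict least recently used assets to system memory until the new one fits.
        while self.vram_used_mb() + size_mb > self.vram_budget_mb:
            evicted, evicted_size = self.vram.popitem(last=False)
            self.system[evicted] = evicted_size
        self.vram[name] = size_mb


def simulate(vram_budget_mb):
    """Replay the same made-up request sequence against a given VRAM budget."""
    manager = ResidencyManager(vram_budget_mb)
    # Hypothetical asset sizes in MB; identical requests for every budget.
    requests = [("city", 3000), ("forest", 2500), ("interior", 2000), ("city", 3000)]
    for name, size_mb in requests:
        manager.request(name, size_mb)
    return manager.vram_used_mb(), manager.system_used_mb()


print("small budget (VRAM MB, system MB):", simulate(6000))
print("large budget (VRAM MB, system MB):", simulate(12000))
```

In this toy run, both budgets end up holding the same 7,500 MB of assets in total. The smaller one keeps 2,500 MB of that in system memory, while the larger one keeps everything in VRAM, which is the same pattern as the local and non-local split seen in PIX.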

During the gameplay loop I carried out to collate this information, the RTX 5080 averaged 9.77 GB of local memory usage and 1.59 GB of non-local usage, for a total of 11.36 GB. In the case of the RTX 3060 Ti, the figures were 6.81 and 4.25 GB respectively, with that totalling 11.06 GB.
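As a quick check of those figures, the short Python snippet below adds up the averages quoted above and works out how much of each card's total sits in system memory. The numbers are taken straight from the text; the `summarise` helper and the dictionary layout are just illustrative names.

```python
# Average memory usage in GB, as quoted in the article.
cards = {
    "RTX 5080": {"local": 9.77, "non_local": 1.59},
    "RTX 3060 Ti": {"local": 6.81, "non_local": 4.25},
}


def summarise(card):
    """Return the total usage and the share of it held in system (non-local) memory."""
    total = card["local"] + card["non_local"]
    return {
        "total_gb": round(total, 2),
        "non_local_share": round(card["non_local"] / total, 2),
    }


for name, card in cards.items():
    print(name, summarise(card))
```

The totals come out at 11.36 GB and 11.06 GB, matching the figures above, with roughly 14% of the RTX 5080's total held in system memory compared to roughly 38% for the RTX 3060 Ti.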

Why aren't they exactly the same? Well, the 3060 Ti was using FSR frame generation, whereas the 5080 was running DLSS frame gen, so the difference of a few hundred MB in memory usage between the two cards can be partially explained by this. The other possible reason is that the gameplay loops weren't identical, so during the recordings, the two setups weren't pooling exactly the same assets.

Not that it really matters, as the point I'm making is that TLOU2 is an example of a game that's correctly handling VRAM by not trying to load up the GPU's memory with more assets than it can possibly handle. It's what all big AAA mega-graphics games should be doing, and the obvious question to ask here is: why aren't they?

Well, another aspect of TLOU2 I monitored might explain why: the scale of the CPU workload. One of the test rigs I used in my performance analysis of The Last of Us Part 2 Remastered was an old Core i7 9700K with a Radeon RX 5700 XT. Intel's old Coffee Lake Refresh CPU is an eight-core, eight-thread design, and no matter the settings I used, TLOU2 had the CPU core utilization pinned at 100% across all cores, all the time.

Even the Ryzen 7 9800X3D in the CyberPowerPC test rig was kept busy, with its sixteen logical cores (i.e. eight physical cores, each handling two threads) being utilized heavily. Not to the same extent as the 9700K, but far more than in any other game I've tested of late.

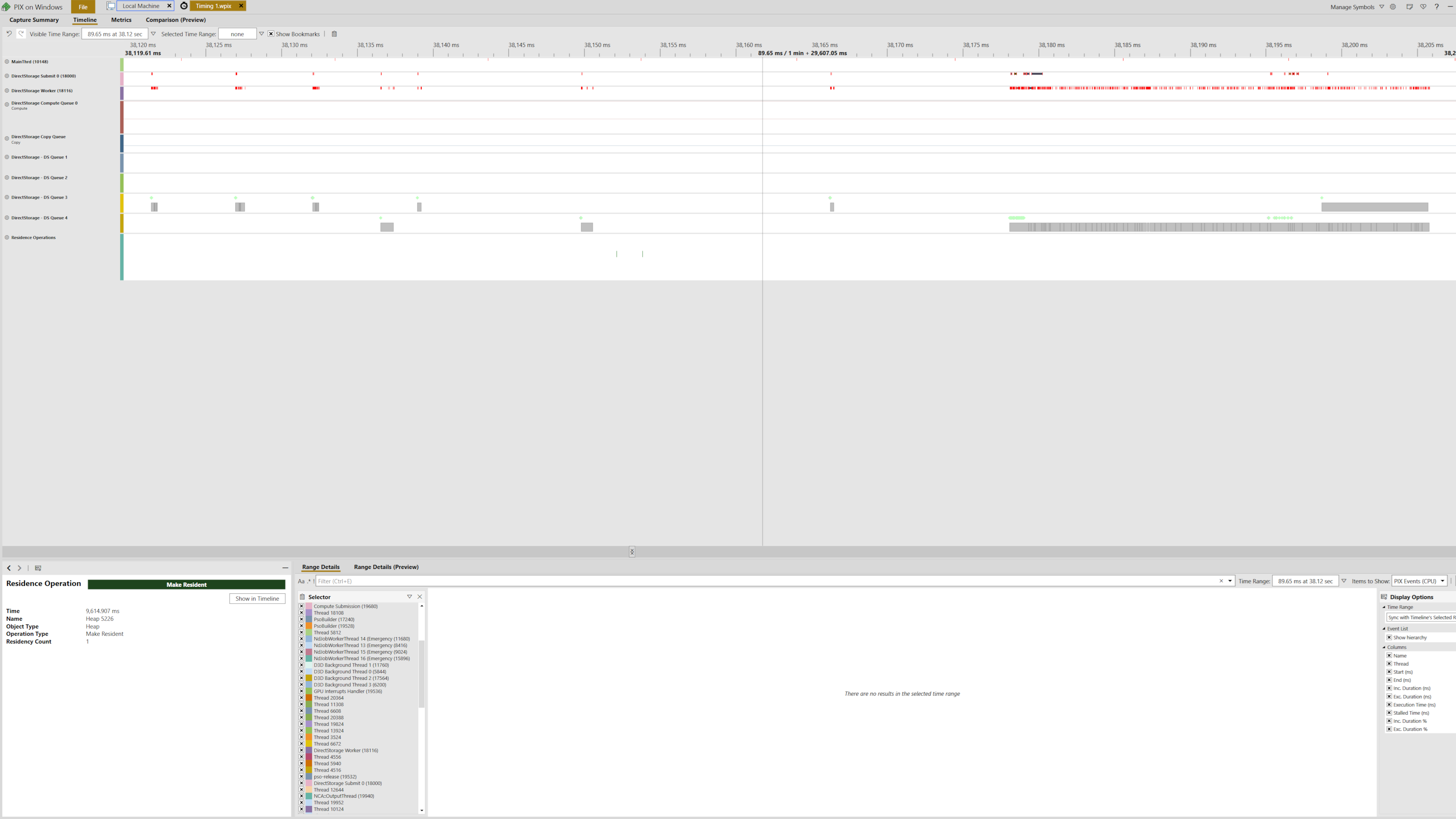

TLOU2 generates a lot of threads to manage various tasks in parallel, such as issuing graphics commands and compiling shaders, but there are at least eight threads that are dedicated to DirectStorage tasks. At this point, I hasten to add that all modern games generate way more threads than you ever normally notice, so there's nothing especially noteworthy about the number that TLOU2 is using for DirectStorage.

The above PIX screenshot shows these particular threads across 80 milliseconds' worth of rendering (basically a handful of frames) and while many of the threads are idle in this period, two DirectStorage queues and the DirectStorage submit threads are relatively busy pulling up assets (or possibly 'sending them back', so to speak).

Given that it's not possible to disable the use of DirectStorage and the background shader compilation in TLOU2, it's hard to tell just how much these workloads contribute to the heavy demand on the CPU's time, but I suspect that none of it is trivial.

However, I recognise the biggest programming challenge is just making all of this work smoothly and correctly synchronise with the primary threads, and that's possibly why most big game developers leave it to the end user to worry about VRAM usage rather than creating an asset management system like TLOU2's.

The Last of Us Part 1, like so many other games, includes a VRAM usage indicator in its graphics menu. This is relatively easy to implement, although making it 100% accurate is harder than you might think.
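To illustrate why such an indicator is easy to build but hard to get right, here's a rough Python sketch of how one might be estimated from the graphics settings. Everything in it is an assumption for illustration: the function names, the number and format of render targets, the texture pool sizes, and the 90% warning threshold are invented, and no real game's menu is known to work this way. A real figure also depends on things a menu can't easily predict, such as what other applications are holding in VRAM.

```python
MIB = 1024 ** 2


def render_target_mib(width, height, bytes_per_pixel, count=1):
    """Size of `count` full-resolution render targets, in MiB."""
    return width * height * bytes_per_pixel * count / MIB


def estimate_vram_mib(width, height, texture_pool_mib, target_count=6, bytes_per_pixel=8):
    """Hypothetical estimate: a texture pool plus a handful of render targets."""
    return texture_pool_mib + render_target_mib(width, height, bytes_per_pixel, target_count)


def indicator(estimate_mib, vram_mib):
    """Turn an estimate into the kind of label a settings menu might show."""
    fraction = estimate_mib / vram_mib
    if fraction > 1.0:
        return "over budget"
    if fraction > 0.9:
        return "near limit"
    return "ok"


# Made-up texture pool sizes for two presets at 4K on an 8 GiB card.
for preset, pool_mib in [("lower preset", 5000), ("higher preset", 9000)]:
    estimate = estimate_vram_mib(3840, 2160, pool_mib)
    print(preset, round(estimate), "MiB ->", indicator(estimate, 8192))
```

An estimate like this only describes what the game plans to allocate, which is why a menu indicator can read 'ok' while a tool such as PIX tells a different story.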

At the risk of this coming across as flamebait, let us consider for a moment whether TLOU2's asset management system is a definitive answer to the '8 GB of VRAM isn't enough' argument. In some ways, 8 GB is enough memory because I didn't run into it being a limit in TLOU2 (and I've tested a lot of different areas, settings, and PC configurations to confirm this).

Just as in The Last of Us Part 2, any game doing the same thing will also need to stream more assets across the PCIe bus on an 8 GB graphics card compared to a 12 or 16 GB one, but if that's handled properly, it shouldn't affect performance to any noticeable degree. The relatively low performance of the RTX 3060 Ti at 4K Very High has nothing to do with the amount of VRAM but is instead down to the number of shaders, TMUs, and ROPs, plus the memory bandwidth.
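For a sense of scale on the PCIe point, the back-of-the-envelope Python calculation below works out the theoretical one-way bandwidth of a PCIe 4.0 x16 link and what that allows per frame. The link type and the 60 fps target are assumptions chosen for illustration rather than measurements from the test rigs, and real-world throughput will be lower than the theoretical peak.

```python
def pcie_bandwidth_gb_s(transfer_rate_gt_s=16.0, lanes=16, encoding=128 / 130):
    """Theoretical one-way bandwidth in GB/s (defaults describe PCIe 4.0 x16)."""
    # Each transfer carries one bit per lane; 128b/130b encoding leaves ~98.5% as payload.
    return transfer_rate_gt_s * lanes * encoding / 8


def per_frame_budget_mb(bandwidth_gb_s, fps):
    """Upper bound on data that could cross the bus during a single frame, in MB."""
    return bandwidth_gb_s * 1000 / fps


bandwidth = pcie_bandwidth_gb_s()
print(f"PCIe 4.0 x16: {bandwidth:.1f} GB/s")
print(f"Per frame at 60 fps: {per_frame_budget_mb(bandwidth, 60):.0f} MB")
```

That's about 31.5 GB/s, or roughly 525 MB per frame at 60 fps in the ideal case, so the bus has plenty of headroom for streaming a few extra gigabytes of assets over the course of many frames, provided the transfers are scheduled sensibly.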

If you've read this far, you may be heading towards the Comments section to fling various YouTube links my way showing TLOU2 stuttering or running into other performance problems on graphics cards with 8 GB of VRAM or less. I'm certainly not going to say that those analysis pieces are all wrong and I'm the only one who's right.

Anyone who's been in PC gaming long enough will know that PCs vary so much—in terms of hardware and software configurations and environments—that one can always end up having very different experiences.

Justin, who reviewed The Last of Us Part 2, used a Core i7 12700F with an RTX 3060 Ti, and ran into performance issues at 1080p with the Medium preset. I used a Ryzen 5 5600X with the same GPU and didn't run into any problems at all. Two fairly similar PCs, two very different outcomes.

Coping with that kind of variation is one of the biggest headaches that PC game developers have to deal with, and it's probably why we don't see TLOU2's cool asset management system in heavy use: figuring out how to make it work flawlessly on every possible PC configuration that can run the game is going to be very time-consuming. That's a shame because while it's not a flawless technique (it occasionally fails to pull in assets at the right time, for example, leading to some near-textureless objects), it does a pretty damn good job of getting around any VRAM limitations.

I'm not suggesting that 8 GB is enough, period, because it's not. As ray tracing and neural rendering become more prevalent, along with the potential for running AI NPCs on the GPU, the amount of VRAM a GPU has will become an increasingly important commodity. Asset streaming is also effectively useless if your entire view in a 3D world is packed full of objects and hundreds of ultra-complex materials, because those resources need to be in VRAM right then and there.

But I do hope that some game developers take note of The Last of Us Part 2 and try to implement something similar. With both AMD and Nvidia still producing 8 GB graphics cards and mobile chips (albeit in the entry-level sector), that amount of VRAM is still going to be a limiting factor for many more years to come, and the argument over whether it's enough will continue to run for just as long.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open world grindy RPGs, but who isn't these days?
