Patent document shows AMD started researching the use of neural networks in ray-traced rendering at least two years ago

Just because it's patented doesn't mean it'll ever be used, but you never know.

GPU companies, like AMD and Nvidia, spend huge sums of money every year on researching rendering techniques, either to improve the performance of their chips or as part of a future architecture design. One recently approved patent shows that AMD began exploring the use of neural networks in ray tracing at least two years ago, right around when the Radeon RX 7000-series was about to be announced.

Under the unassuming name of 'United States Patent Application 20250005842', AMD submitted a patent application for neural network-based ray tracing in June 2023, with the rubber stamp of approval hitting in January of this year. The document was unearthed by an AnandTech forum user (via DSOGaming and Reddit) along with a trove of other patents, covering procedures such as BVH (bounding volume hierarchy) traversal based on work items and BVH lossy geometry compression.

The neural network patent caught my attention the most, though, partly because of when it was submitted for approval and partly because the procedure undoubtedly requires the use of cooperative vectors, a recently announced extension to Direct3D and Vulkan that lets shader units directly access matrix or tensor units to process little neural networks.

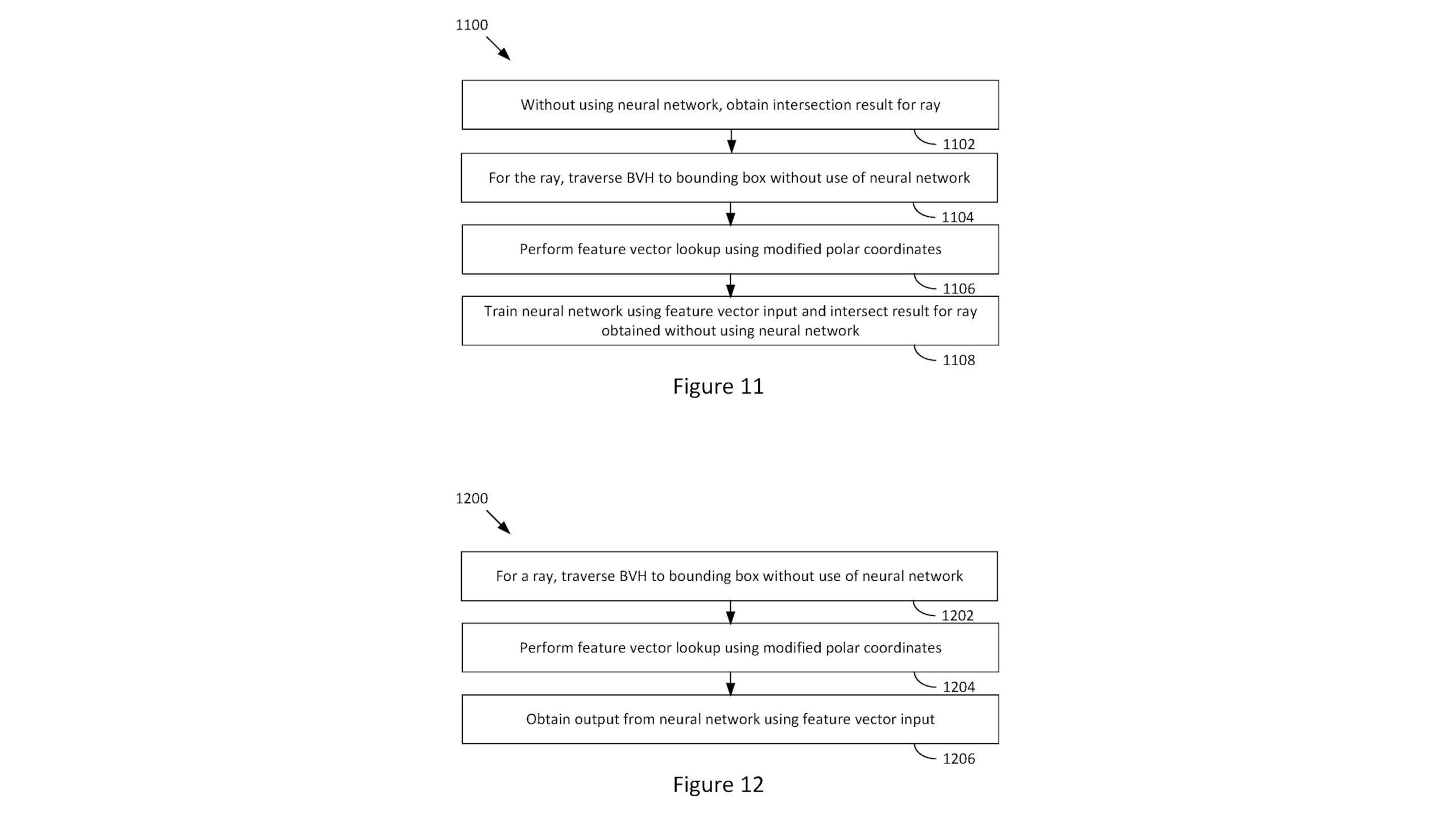

What the process actually does is determine if a ray traced from the camera in a 3D scene is occluded by an object that it intersects. It starts off as a BVH traversal, working through the geometry of the scene, checking to see if there's any intersection between the ray and a box. Where there's a positive result, the process then "perform(s) a feature vector lookup using modified polar coordinates." Feature vectors are a machine-learning thing: a list of numbers describing the properties of the objects or activities being examined.
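To make the ray-versus-box check a little more concrete, here's a minimal sketch of the standard 'slab' intersection test that BVH traversal typically relies on. The patent doesn't spell out its "modified polar coordinates" in anything I can quote here, so `polar_angles` is purely a hypothetical stand-in using ordinary spherical angles, and both function names are mine, not AMD's.

```python
import math

def ray_box_hit(origin, direction, box_min, box_max):
    """Slab test: return (t_near, t_far) if the ray hits the box, else None."""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray runs parallel to this pair of planes, so it must start between them.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None  # The per-axis intervals no longer overlap: a miss.
    return t_near, t_far

def polar_angles(point, centre):
    """Hypothetical stand-in for the patent's 'modified polar coordinates':
    plain spherical angles of a point relative to the box centre."""
    x, y, z = (p - c for p, c in zip(point, centre))
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        return 0.0, 0.0
    theta = math.acos(max(-1.0, min(1.0, z / r)))  # Polar angle, 0 to pi.
    phi = math.atan2(y, x)                          # Azimuth, -pi to pi.
    return theta, phi

# A ray fired along +z at a unit box centred on the origin.
hit = ray_box_hit((0.0, 0.0, -5.0), (0.0, 0.0, 1.0),
                  (-1.0, -1.0, -1.0), (1.0, 1.0, 1.0))
if hit is not None:
    entry = tuple(o + hit[0] * d
                  for o, d in zip((0.0, 0.0, -5.0), (0.0, 0.0, 1.0)))
    print(hit, polar_angles(entry, (0.0, 0.0, 0.0)))
```

In a scheme like the one described, angles of this sort would presumably be used as the key for fetching a stored feature vector, though how AMD actually indexes them is an assumption on my part.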

The shader units then run a small neural network, with the feature vectors as the input, and a yes/no decision on the ray being occluded as the output.
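For a sense of just how little a 'small neural network' can be, here's a toy sketch of the final step: a feature vector goes in, and a yes/no occlusion answer comes out. Everything about it is an assumption for illustration, including the two-layer layout, the hand-picked weights in `PARAMS`, and the 0.5 threshold. A real network would be trained on the scene, and the patent's actual design may look nothing like this.

```python
import math

def dense(weights, bias, v):
    """One fully connected layer: a matrix-vector product plus a bias."""
    return [sum(w * x for w, x in zip(row, v)) + b
            for row, b in zip(weights, bias)]

def relu(v):
    return [max(0.0, x) for x in v]

def is_occluded(features, params, threshold=0.5):
    """Run a tiny two-layer network and threshold its output probability."""
    hidden = relu(dense(params["w1"], params["b1"], features))
    logit = dense(params["w2"], params["b2"], hidden)[0]
    probability = 1.0 / (1.0 + math.exp(-logit))  # Sigmoid squashes to 0..1.
    return probability >= threshold

# Hand-picked, purely illustrative weights (a trained network would learn these).
PARAMS = {
    "w1": [[1.0, -1.0], [-1.0, 1.0]],
    "b1": [0.0, 0.0],
    "w2": [[2.0, -2.0]],
    "b2": [0.0],
}

print(is_occluded([1.0, 0.0], PARAMS))  # True
print(is_occluded([0.0, 1.0], PARAMS))  # False
```

The multiply-and-add work inside `dense` is the sort of thing matrix units are built to chew through, which is presumably where cooperative vectors would come in.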

All of this might not sound like very much and, truth be told, it might not be, but the point is that AMD was clearly invested in researching 'neural rendering' long before Nvidia made a big fuss about it with the launch of its RTX 50-series GPUs. Of course, this is normal in GPU research: it takes years to go from an initial chip design to having the finished product on a shelf, and if AMD only started doing such research now, it'd be ridiculously far behind Nvidia.

And don't be fooled by the submission date of the patent, either. June 2023 is simply when the US Patent Office received the application, and there's no way AMD cobbled it all together over a weekend and sent it off the following week. In other words, Team Red has been studying this for many years.


Back in 2019, an AMD patent for a hybrid ray tracing procedure surfaced, which was submitted in 2017. While it's not easy to determine whether it was ever utilized as described, the RDNA 2 architecture used a very similar setup and it launched in late 2020.


Patent documents don't ever concern themselves with actual performance, so there's no suggestion that you're going to be seeing a Radeon RX 9070 XT running little neural networks to improve ray tracing any time soon, mostly because it's down to game developers to implement this, even if it is faster. But all of this shows that AI is very much going to be part and parcel of 3D rendering from now on, even if this and other AI-based rendering patents never get implemented in hardware or games.

Making chips with billions more transistors and hundreds more shader units is getting disproportionately more expensive compared to the actual gains in real-time performance. AI offers a potential way around the problem, which is why RDNA 4 GPUs sport dedicated matrix units to handle such things.

At the end of the day, it's all just a bunch of numbers being turned into pretty colours on a screen, so if AI can make games run faster or look better, or better still, do both, then it's not hard to see why the likes of AMD are spending so much time, effort, and money on researching AI in rendering.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long-defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open world grindy RPGs, but who isn't these days?
