Nvidia testing cooling solutions up to 600W for its Blackwell graphics cards suggests power levels in line with the previous GeForce generations

Looks like RTX super-chonk coolers are chonk for life. Or one more generation, at least.

If you've been wondering whether Nvidia's next generation of GeForce cards will need even bigger coolers than those we currently see, then fear not. It seems the chip giant has been experimenting with heatsink and fan designs to cope with power levels no greater than those seen with Ada Lovelace and Ampere GPUs.

That's according to a report by Benchlife.info, which says that information from a cooling module factory points to Nvidia testing four designs at the moment, ranging from 250 W up to 600 W. That last figure might seem ridiculously big, but it's no different to how coolers were designed for the current RTX 40-series cards, after the big power hikes seen with Ampere GPUs (RTX 30-series). It's also worth noting that 600 W is the limit of the much-maligned 12VHPWR (16-pin) power connector Nvidia has been using for its cards this generation.

Modern graphics cards require a lot of energy to power the thousands of shaders inside them, and that also means lots of heat. To keep that in check, many sport huge coolers, but what you don't want is a design that can only just manage a GPU's maximum power consumption.

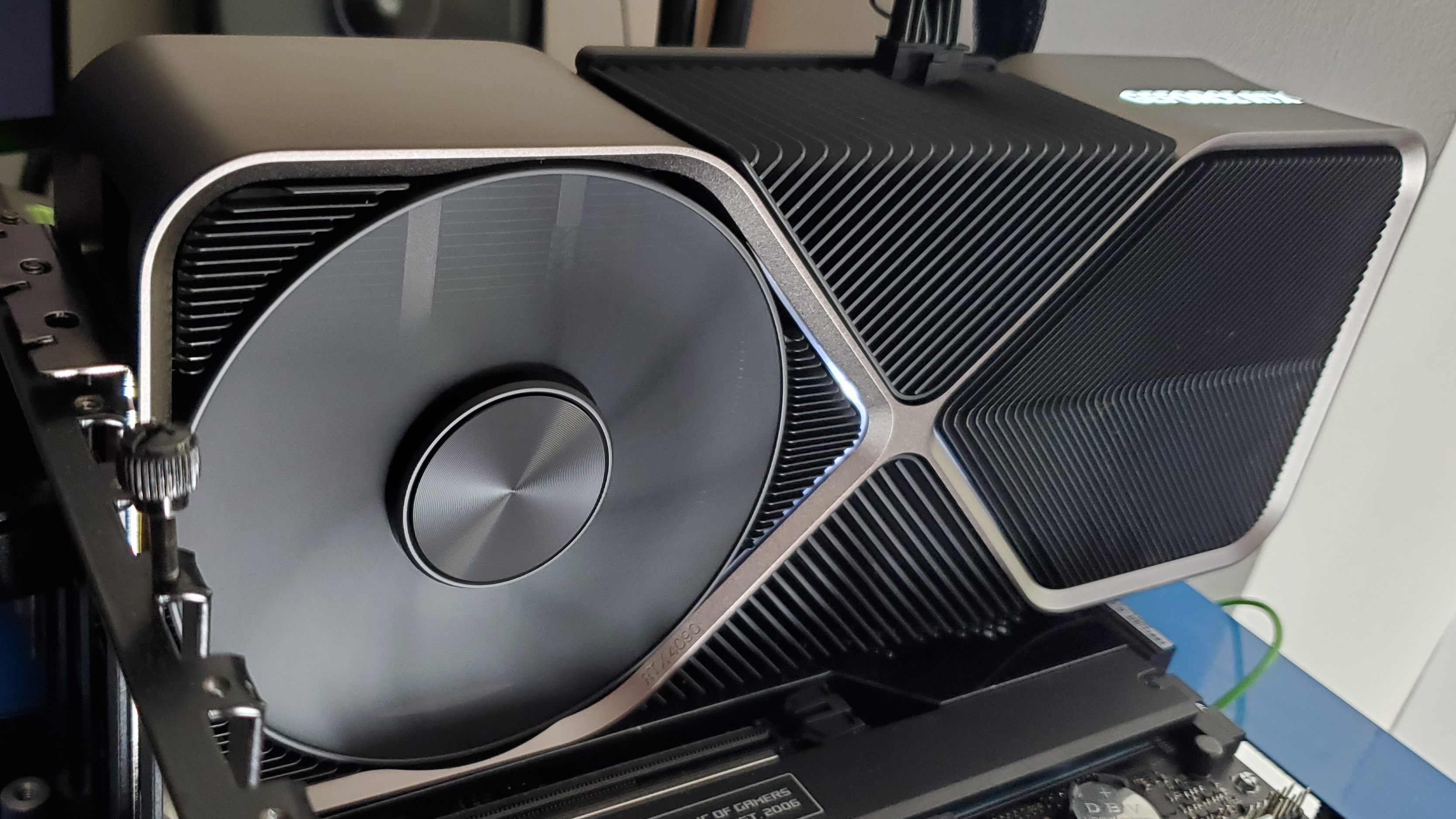

Take the GeForce RTX 4090 as an example—the entire card has a total board power limit of 450 W. It doesn't always get anywhere near that level but it can use more than that, as we discovered in our RTX 4090 review. If its cooler could only cope with 450 W of heat at best, then at those times when it goes over the limit, the chip's temperature would rapidly increase to the point where it just shuts down.

In other words, coolers always need to have a certain amount of headroom to them, to ensure they don't become saturated with heat and become unable to shift it all quickly enough.
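To put a rough number on that headroom, here's a minimal Python sketch. It pairs the RTX 4090's 450 W board power limit with the 600 W top-end cooler figure from the report; treating those two as a matched pair is an assumption for illustration, and `cooler_headroom` is a made-up helper name, not anything Nvidia or its cooler suppliers use.

```python
def cooler_headroom(cooler_capacity_w: float, board_power_w: float) -> float:
    """Return spare cooling capacity as a fraction of the board power limit."""
    if board_power_w <= 0:
        raise ValueError("board_power_w must be positive")
    return (cooler_capacity_w - board_power_w) / board_power_w


# Assumed pairing: 600 W cooler design against a 450 W board power limit.
headroom = cooler_headroom(600, 450)
print(f"Headroom: {headroom:.0%}")  # prints "Headroom: 33%"
```

In this hypothetical case, the card could overshoot its rated limit by about a third before the cooler runs out of capacity.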

This all suggests that Blackwell cards won't be sucking up any more power than Ada Lovelace ones, which is good news, but it also suggests that we're not going to be seeing Nvidia reducing the size of its cards either. And that's going to be especially true of third-party vendors (e.g. Asus, Gigabyte, and MSI), who typically use even larger coolers than Nvidia's reference designs.

As to why 250 W is the lowest power limit that Nvidia is testing for, it might be looking at boosting the performance of its entry-level models. The RTX 4060 has a total board power limit of just 115 W and while it's very good at sipping energy, its overall performance compared to the previous generation wasn't a huge step forward. If the RTX 5060 is a lot more potent, then its power consumption will undoubtedly be higher than the RTX 4060's.

Then again, Nvidia could still end up releasing a 600 W RTX 5090 and set new records for gaming den temperatures. As much as I love the raw capabilities of today's GPUs, I do wish they weren't so power-hungry—my office turns into a sauna in the summer months!

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long-defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open world grindy RPGs, but who isn't these days?