Micron expects GDDR7 will improve ray tracing and rasterization performance by more than 30%, compared to previous gen VRAM

Does 30% more memory bandwidth mean 30% more fps? Let's put it to the test.

RAM chip manufacturer Micron has recently been making some interesting claims about its next generation of ultra-fast memory for graphics cards, GDDR7. Compared to what's currently being used (GDDR6X and GDDR6), Micron says its forthcoming tech is "expected to achieve greater than 30% improvement in frames per second for ray tracing and rasterization workloads."

That's a remarkable performance boost, no matter how you look at it, and it's normally the preserve of the significant architectural changes a new GPU design brings in. However, while it's certainly true that the data transfer rate and bandwidth of GDDR7 will be at least 30% greater than the fastest GDDR6/6X on offer right now, it's a different story altogether when it comes to actual games and applications.

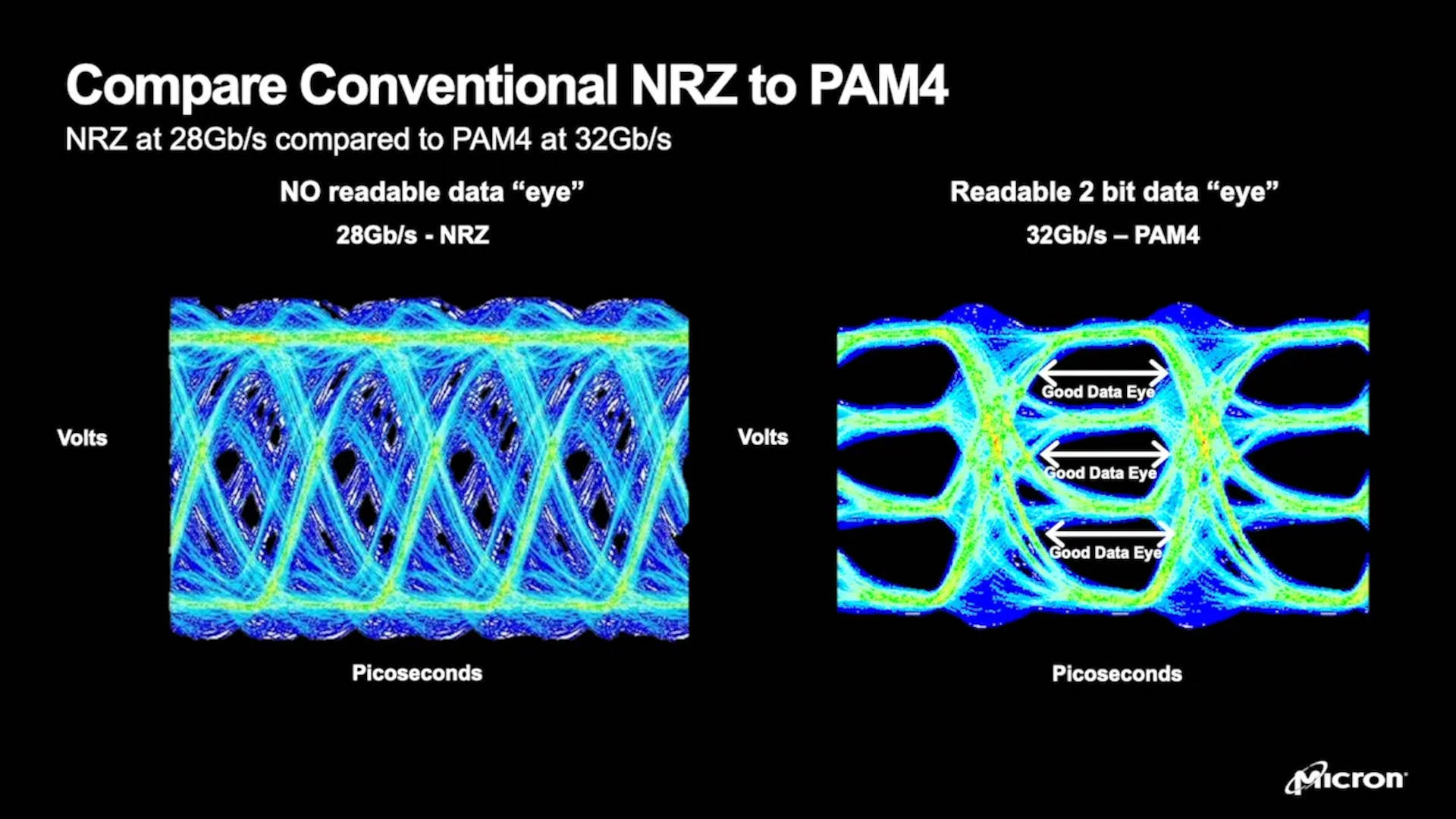

Micron supplies all the GDDR6X chips used in Nvidia's graphics cards and the fastest it offers is rated to 24 GT/s (24 billion transfers per second). It also sells 18 GT/s GDDR6, though Samsung has 20 GT/s GDDR6 for sale. When it comes to GDDR7, though, only Micron is providing any specifications at the moment and the two models available for sampling are rated at 28 and 32 GT/s.

Take 24, increase it by 30%, and you get a value of 31.2 so it's obvious where Micron is getting its performance claims from. But let's say you could take that GDDR7 and add it to a current graphics card (ignoring the fact that it actually wouldn't work because the GPU can't use it)—would games and benchmarks be 30% faster, like Micron says?
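To make that arithmetic concrete, here is a minimal Python sketch. The transfer rates are the ones quoted above; the 256-bit bus width is purely an illustrative assumption to turn rates into bandwidth figures, and the function names are made up for this example.

```python
def uplift(new_rate, old_rate):
    """Fractional increase of new_rate over old_rate."""
    return new_rate / old_rate - 1.0


def bandwidth_gb_s(rate_gt_s, bus_width_bits):
    """Peak bandwidth in GB/s: transfers per second times bytes per transfer."""
    return rate_gt_s * bus_width_bits / 8


GDDR6X_FASTEST = 24.0         # GT/s, fastest GDDR6X quoted in the article
GDDR7_SAMPLES = (28.0, 32.0)  # GT/s, the two GDDR7 parts being sampled
BUS_WIDTH_BITS = 256          # assumption for illustration, not from the article

# 24 GT/s plus 30% is the figure the marketing claim appears to rest on.
print(f"24 GT/s + 30% = {GDDR6X_FASTEST * 1.30:.1f} GT/s")

base = bandwidth_gb_s(GDDR6X_FASTEST, BUS_WIDTH_BITS)
print(f"GDDR6X at 24 GT/s: {base:.0f} GB/s")

for rate in GDDR7_SAMPLES:
    gain = uplift(rate, GDDR6X_FASTEST)
    bw = bandwidth_gb_s(rate, BUS_WIDTH_BITS)
    print(f"GDDR7 at {rate:.0f} GT/s: {bw:.0f} GB/s ({gain:+.1%} vs GDDR6X)")
```

Only the 32 GT/s part clears the 30% mark on paper; the 28 GT/s part is roughly 17% faster than 24 GT/s GDDR6X.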

I ran through a few tests on an RTX 4080 Super, changing the clocks on its VRAM across the widest range I could manage. Everything else remained the same, so the performance differences are purely from altering the memory clocks. First up were two 3DMark tests, Steel Nomad and Speed Way. The former uses traditional rasterisation techniques for all the graphics, whereas the latter shoehorns in a fair amount of ray tracing.

It wasn't possible to achieve a 30% difference in clock speeds but 18% is big enough to extrapolate how much difference faster VRAM would make. As you can see above, the jump of 18% between the slowest and fastest VRAM speeds only produced a 5% and 7% gain in frame rates in the 3DMark tests.

It was a similar story in two games I checked out, Cyberpunk 2077 and Returnal, both at 4K. With no ray tracing enabled, the biggest improvements were in the minimum frame rates with 12% for Cyberpunk 2077 and 11% for Returnal. Enabling ray tracing (path tracing for CP2077), along with DLSS Balanced and Frame Generation to get some playable frame rates, dropped these a little—11% and 7% gains for the two games.

For an 18% increase in VRAM speed, the biggest gain I saw was 12%, which was pretty good. However, that was only for the minimum frame rates, and the averages only improved by 6% at best. This implies that if the RTX 4080 Super could be equipped with 30% faster VRAM, one certainly wouldn't see that kind of an increase in the average frame rates of those games and tests.
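As a rough sketch of that extrapolation, the Python snippet below scales each measured gain from an 18% memory speed increase up to a hypothetical 30% one. It assumes frame rate gains scale linearly with VRAM speed, which is a simplification rather than something the tests establish, and the labels and function name are just for illustration.

```python
TESTED_VRAM_INCREASE = 0.18  # widest memory clock range achieved in testing
TARGET_VRAM_INCREASE = 0.30  # the uplift behind the marketing claim

# Gains quoted in the article for the 18% memory speed increase.
observed_gains = {
    "3DMark, smaller gain": 0.05,
    "3DMark, larger gain": 0.07,
    "Cyberpunk 2077, no RT, minimum fps": 0.12,
    "Returnal, no RT, minimum fps": 0.11,
    "Cyberpunk 2077, path tracing": 0.11,
    "Returnal, ray tracing": 0.07,
    "Best average fps gain reported": 0.06,
}


def project(gain, tested=TESTED_VRAM_INCREASE, target=TARGET_VRAM_INCREASE):
    """Linearly extrapolate a measured gain to a larger VRAM speed increase.

    Assumption: the ratio of fps gain to memory speed gain stays constant.
    """
    return gain / tested * target


projections = {name: project(gain) for name, gain in observed_gains.items()}

for name, projected in projections.items():
    scaling = observed_gains[name] / TESTED_VRAM_INCREASE
    print(f"{name}: scaling {scaling:.0%}, projected gain at +30% VRAM: {projected:.1%}")
```

Even the best case, the 12% gain in minimum frame rates, projects to about 20% under this assumption, and the best average gain projects to about 10%.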


Yes, it's only a tiny fraction of all the games one could possibly test, but given how graphically demanding they were, they were good candidates for any hardware changes that provide a performance boost.

So does that mean Micron is lying through its back teeth over these claims? Not really, because I strongly suspect that there are some "ray tracing and rasterization workloads" that will show a significant increase in frame rates when using faster VRAM, and once GDDR7 is out in the real world, there's bound to be at least one game that's used as a marketing tool to highlight the gains that GDDR7 brings.


But it's worth remembering that the vast majority of developers are not going to make a game that has 100% memory-limited rendering performance. It's going to depend far more on the number of shader units and the architecture underlying it all.
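One way to picture this is an Amdahl's-law-style toy model, sketched below in Python. It assumes a fixed fraction of each frame's time is limited by memory bandwidth and that only that fraction speeds up with faster VRAM. That is a deliberate simplification of how GPUs behave, and the function names are hypothetical.

```python
def speedup(memory_bound_fraction, memory_speedup):
    """Overall frame rate speedup when only the memory-bound share gets faster.

    memory_bound_fraction: share of frame time limited by VRAM bandwidth (0 to 1).
    memory_speedup: how much faster the memory is, e.g. 1.30 for +30%.
    """
    remaining_time = (1.0 - memory_bound_fraction) + memory_bound_fraction / memory_speedup
    return 1.0 / remaining_time


def implied_memory_bound_fraction(observed_speedup, memory_speedup):
    """Invert the model: what memory-bound share would explain a measured gain?"""
    return (1.0 - 1.0 / observed_speedup) / (1.0 - 1.0 / memory_speedup)


# A workload that is 100% memory-limited would track VRAM speed exactly.
print(f"Fully memory-bound, +30% VRAM: {speedup(1.0, 1.30) - 1.0:.1%} faster")

# The best average gain reported was 6% from 18% faster VRAM.
fraction = implied_memory_bound_fraction(1.06, 1.18)
print(f"Implied memory-bound share of frame time: {fraction:.0%}")
print(f"Same workload, +30% VRAM: {speedup(fraction, 1.30) - 1.0:.1%} faster")
```

Under this model, a game only gets the full 30% if essentially all of its frame time is waiting on memory, which is the scenario developers are unlikely to ship.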

At this point, you might be wondering what's the point of having GDDR7. The answer lies in the fact that current GPUs are partially designed around the best available memory technology—there's no point in making a GPU with a gazillion shaders when it wouldn't be physically and financially possible to supply it with enough VRAM bandwidth to feed all those shaders.

However, GPUs of the future will have more shaders than those we can currently buy, and the more powerful models will require faster VRAM to keep them fed with data. The next RTX 5090 could well be 30% faster in games than the current RTX 4090, but it won't really be down to GDDR7 being 30% faster than GDDR6X. It'll be down to the usual things: More shaders, higher clock speeds, and more cache.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long-defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open world grindy RPGs, but who isn't these days?