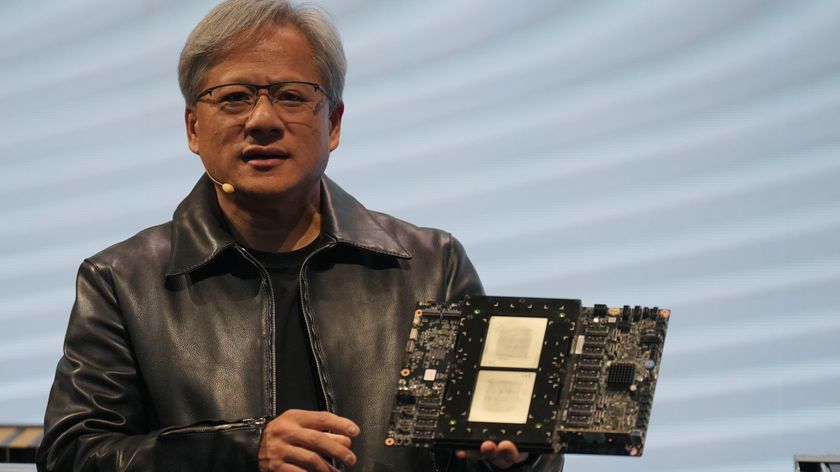

Jen-Hsun reckons Nvidia has driven the 'cost of computing down by 1,000,000 times'

Comparing the relentless performance increase of the GPU to the original economic ethos behind Moore's Law.

Jen-Hsun Huang has claimed that Nvidia, through its relentless pursuit of ever faster, ever more powerful GPUs, has driven the "cost of computing down by one million times" over the past 20 years.

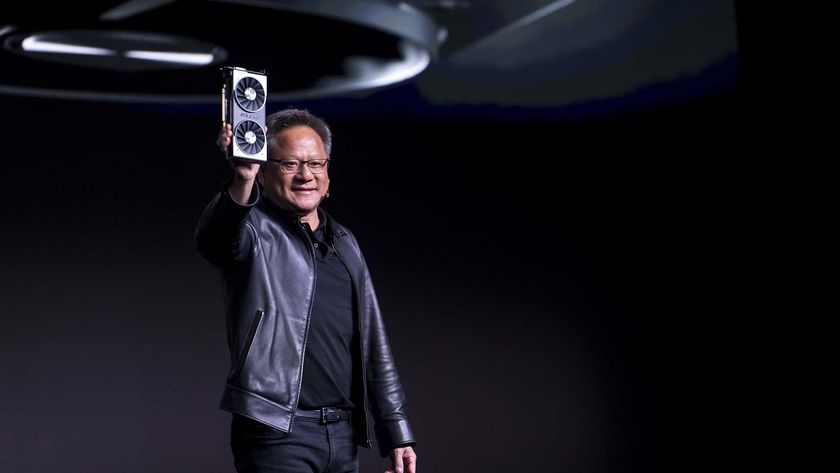

When you look at the rising costs of modern graphics cards compared with their forebears, that's maybe hard to fathom. To most of us, eyeing up the objects of our silicon desires, it sure looks like the cost of a GPU has just been steadily rising. But when you look at just what the graphics chips of today are capable of, the level of raw computational power at the disposal of even a lowly RTX 4060 would have seemed borderline mythical 20 years back.

A GeForce 6800 Ultra from 2005 delivered a whopping 6.4 GFLOPS, while the RTX 4060 at the bottom of the Ada Lovelace generation comes with 15,100 GFLOPS of processing grunt. That's a whole world of difference between a $499 card of 20 years ago and a $299 GPU of today.

And that's not even a card anywhere near the top of the stack, nor close to what you'll get from Nvidia's most powerful enterprise GPUs.
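For a rough sense of scale, the two data points above can be turned into dollars per GFLOPS. The snippet below is a back-of-the-envelope sketch in Python, not Nvidia's methodology: it uses only the figures quoted here, treats launch price as the "cost", ignores inflation, and the function names are made up for illustration.

```python
# Figures as quoted in the article. Treating launch price as "cost" and
# ignoring inflation are simplifying assumptions, not Nvidia's method.
CARDS = {
    "GeForce 6800 Ultra": {"gflops": 6.4, "price_usd": 499},
    "GeForce RTX 4060": {"gflops": 15_100, "price_usd": 299},
}


def dollars_per_gflops(gflops: float, price_usd: float) -> float:
    """Price paid for each GFLOPS of quoted throughput."""
    return price_usd / gflops


def cost_reduction_factor(old: dict, new: dict) -> float:
    """How many times cheaper a GFLOPS is on the new card than the old one."""
    return dollars_per_gflops(**old) / dollars_per_gflops(**new)


old_card = CARDS["GeForce 6800 Ultra"]
new_card = CARDS["GeForce RTX 4060"]

raw_speedup = new_card["gflops"] / old_card["gflops"]
factor = cost_reduction_factor(old_card, new_card)

print(f"6800 Ultra: ${dollars_per_gflops(**old_card):.2f} per GFLOPS")
print(f"RTX 4060:   ${dollars_per_gflops(**new_card):.4f} per GFLOPS")
print(f"Raw throughput increase: {raw_speedup:,.0f}x")
print(f"Cost per GFLOPS reduction: {factor:,.0f}x")
```

On these numbers the price of a GFLOPS drops by a factor in the thousands, which says plenty about two consumer cards but is a much narrower comparison than the "cost of computing" across Nvidia's whole stack.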

Jen-Hsun, at today's morning-after-keynote Q&A session, compared what Nvidia has done in developing more powerful graphics silicon, pushing down the relative price of GPU computational power, to the impact of Moore's Law.

"The reason Moore's Law was so important in the history of the chip is that it drove down computing costs," Huang remarks. "In the course of the last 20 years we've driven the marginal cost of computing down by one million times.

"So much that machine learning became logical: 'just have the computer go figure it out.'"


Basically, there's so much computational power available for such relatively little cash that you might as well just start throwing it at AI to solve all our problems. Or, you know, draw us a picture of a goldfish when you absolutely, positively just have to have a freshly generated picture of a fish.

There's no getting away from it, the graphics card, and its constituent component, the GPU, have become the most important pieces of silicon of the modern era. There's also no getting away from the fact that Nvidia is responsible for some of the most important silicon of our time, however you feel about the rise and rise of artificial intelligence and its potential impact on the world and humanity.

Does Jen-Hsun's maths add up? I don't know; he didn't show his workings. What is true is that since the birth of the GPU as we know it, the cost of a given level of performance has only been going in one direction: down.
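One thing we can check is what a million-fold drop over 20 years would imply as a steady yearly rate. The Python sketch below is illustrative arithmetic only: the two-year doubling cadence used for the Moore's Law comparison is the commonly quoted version, assumed here rather than taken from Huang's remarks, and the function names are made up for illustration.

```python
import math


def implied_annual_factor(total_factor: float, years: float) -> float:
    """Constant yearly improvement that compounds to total_factor over years."""
    return total_factor ** (1 / years)


def doubling_time_years(total_factor: float, years: float) -> float:
    """Years per doubling needed to reach total_factor within the given span."""
    return years / math.log2(total_factor)


CLAIMED_FACTOR = 1_000_000  # Huang's "one million times"
YEARS = 20                  # "the last 20 years"

annual = implied_annual_factor(CLAIMED_FACTOR, YEARS)
doubling = doubling_time_years(CLAIMED_FACTOR, YEARS)

# Assumption: Moore's Law taken as one doubling every two years.
moore_factor = 2 ** (YEARS / 2)

print(f"Implied yearly improvement: {annual:.3f}x")
print(f"Implied doubling time: {doubling:.2f} years")
print(f"Two-year doubling over {YEARS} years: {moore_factor:,.0f}x")
```

A million-fold improvement in 20 years works out to roughly a halving of cost every year, against the roughly thousand-fold gain a two-year doubling would deliver over the same stretch.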


Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved on to PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.