It's on: our first look at Intel Battlemage comes from Lunar Lake's new graphics silicon with its redesigned Xe2 architecture

The Xe2 GPU architecture will power everything from low-power mobile chips to desktop graphics cards.

Battlemage is coming. At least that's what Intel says at an event dedicated to its new mobile processors, Lunar Lake. These new mobile chips give us a good idea of what to expect with Intel's second graphics card generation—Lunar Lake and the upcoming Battlemage graphics cards share the same GPU architecture.

It's called Xe2—with a little floating 'e' that our website won't display properly. This new architecture will be found across the entire Intel product stack, which means no more Xe-HPG, Xe-LPG, or any of that nomenclature—it's Xe2 all the way down.

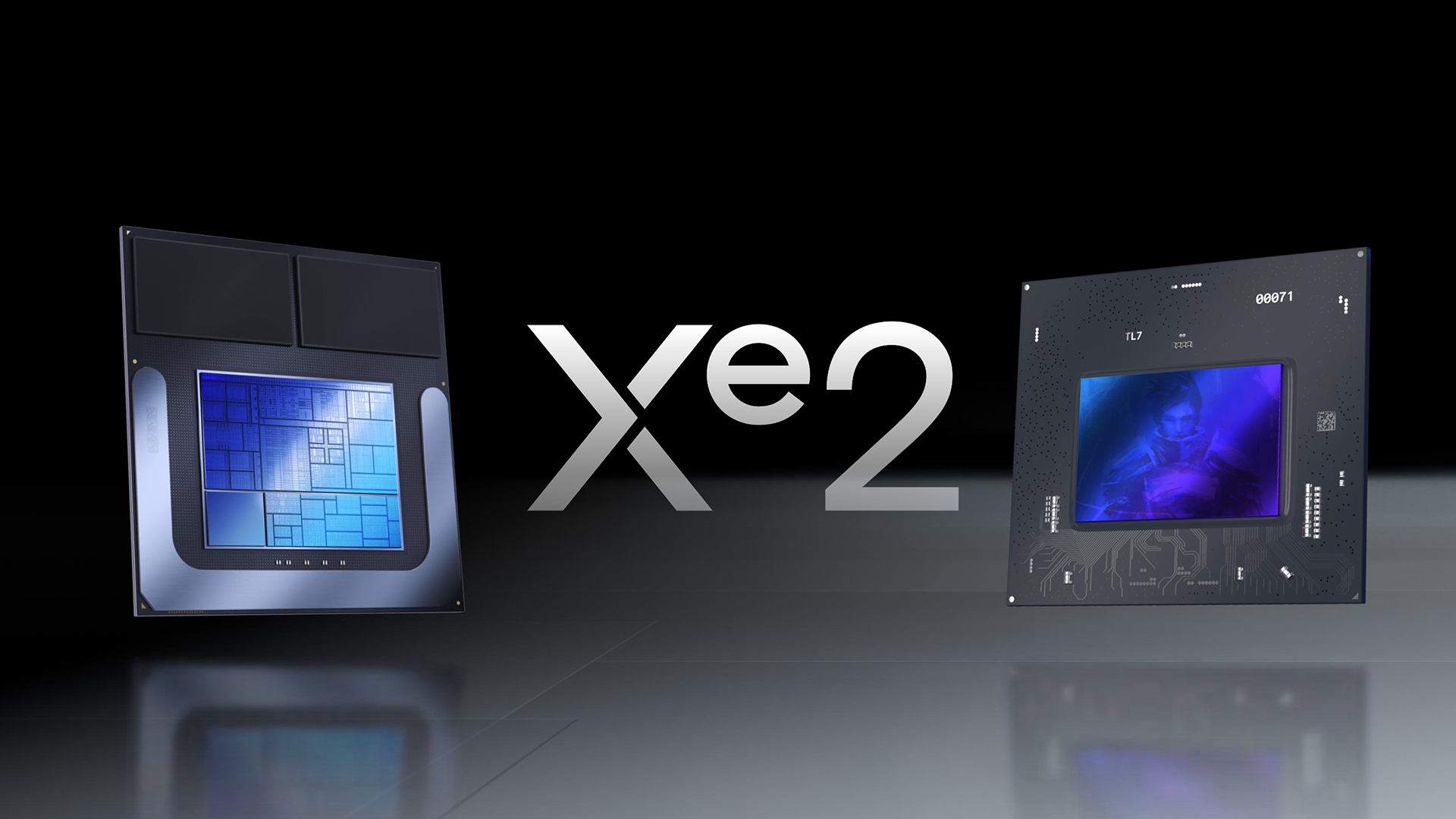

You can see the new Lunar Lake processor on the left in the picture above. On the right, our first official image of the upcoming Battlemage GPU.

"What I'm confirming to you today is that the Xe2 architecture is going to be used in both," Intel graphics spokesperson Tom Petersen says during a Lunar Lake graphics briefing.

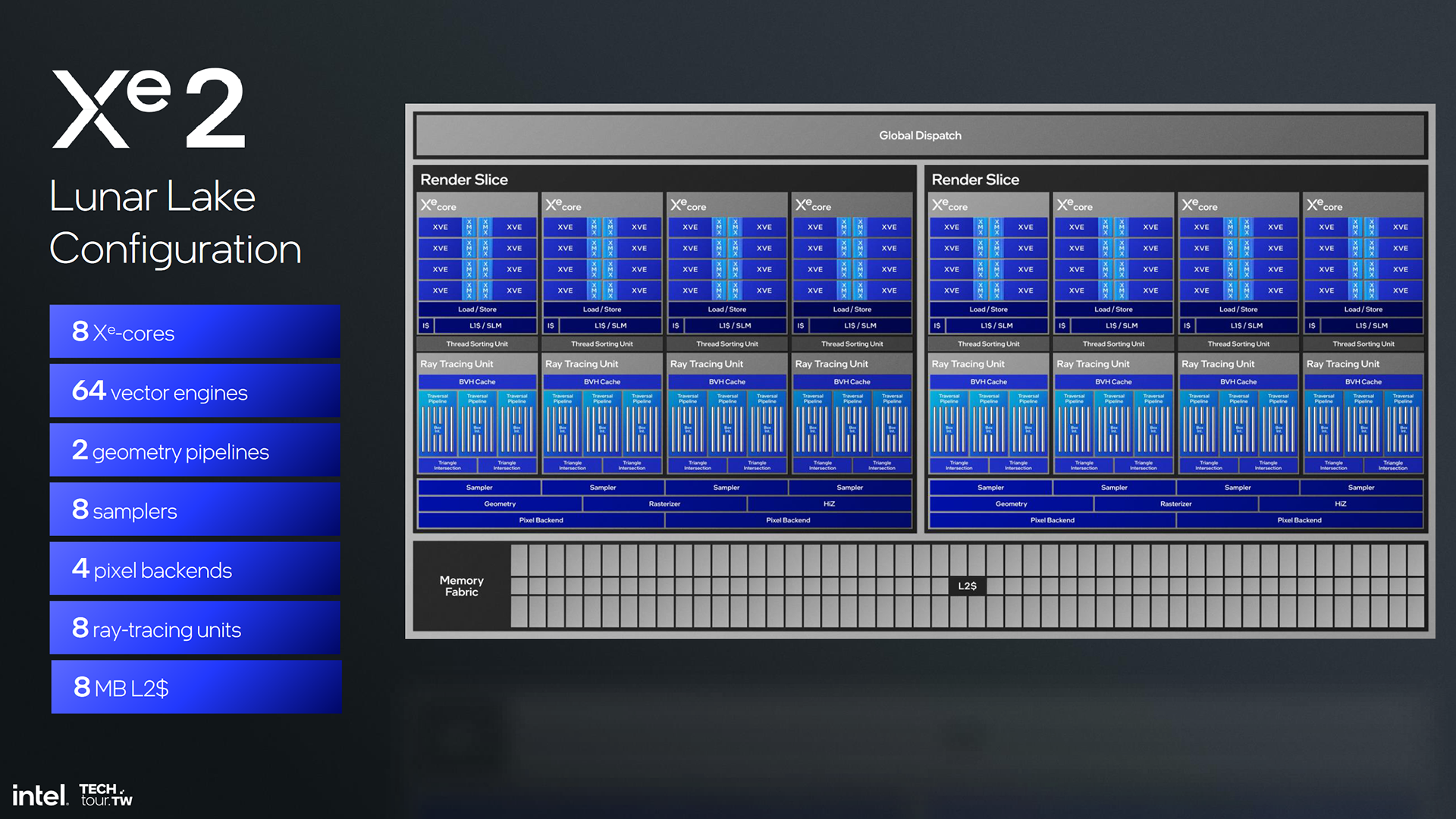

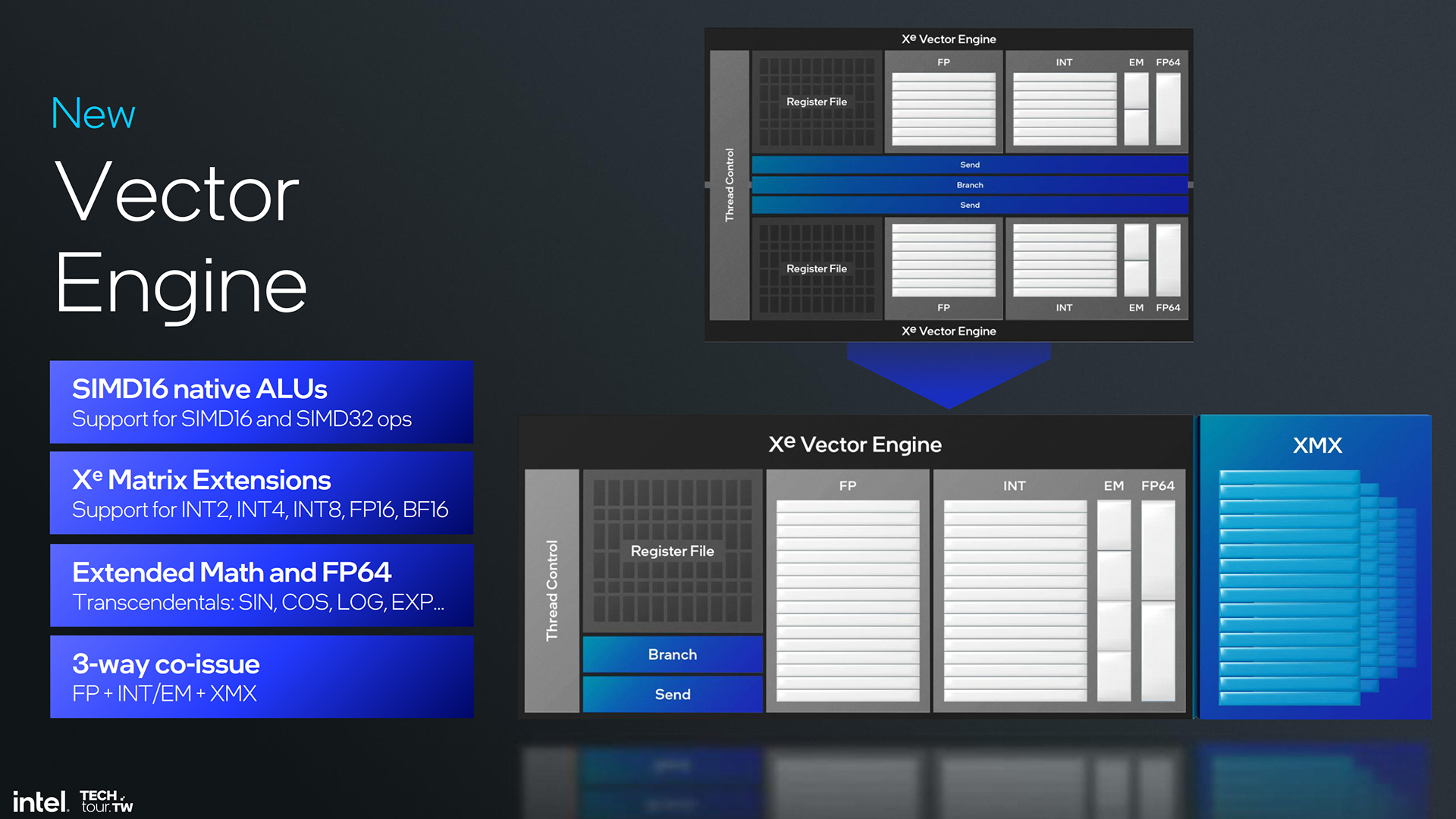

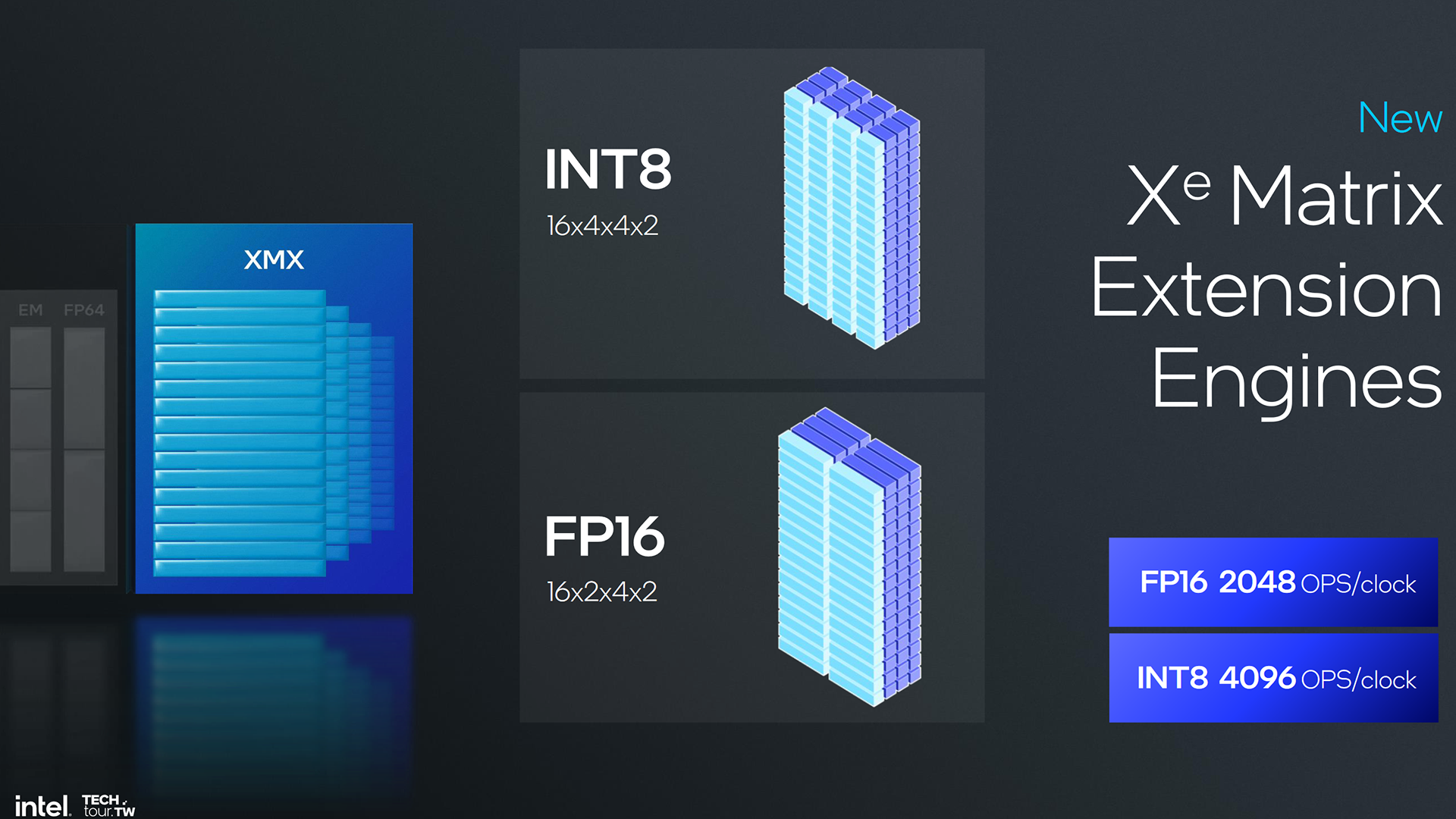

An Xe2 GPU is divided into Render Slices, each Render Slice into four Xe-cores, and each Xe-core into eight Vector Engines.

Lunar Lake comes with eight Xe-cores. That's not many for a gaming GPU, but for a sense of scale in a discrete card, look to the Arc A770, which has 32. Take the Xe2 improvements listed here, multiply them across a core count like that, and you'll have a rough idea of Battlemage's potential.
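To put rough numbers on that hierarchy, here's a back-of-the-envelope sketch in Python. It uses only the figures Intel gave for Xe2: four Xe-cores per Render Slice, eight Vector Engines per Xe-core, and 16 lanes per Vector Engine (the SIMD16 width Intel quotes). The `Xe2Config` class and its properties are our own hypothetical names. The 32-core configuration is also hypothetical. It borrows the A770's core count purely for scale and is not a Battlemage spec.

```python
from dataclasses import dataclass

# Xe2 figures as reported from Intel's briefing.
XE_CORES_PER_SLICE = 4
VECTOR_ENGINES_PER_CORE = 8
LANES_PER_ENGINE = 16  # SIMD16

@dataclass
class Xe2Config:
    """Hypothetical helper describing an Xe2 GPU by its Xe-core count."""
    name: str
    xe_cores: int

    @property
    def render_slices(self) -> int:
        # Assumption: configurations are built from whole Render Slices.
        if self.xe_cores % XE_CORES_PER_SLICE:
            raise ValueError("Xe-core count must fill whole Render Slices")
        return self.xe_cores // XE_CORES_PER_SLICE

    @property
    def vector_engines(self) -> int:
        return self.xe_cores * VECTOR_ENGINES_PER_CORE

    @property
    def lanes(self) -> int:
        return self.vector_engines * LANES_PER_ENGINE

lunar_lake = Xe2Config("Lunar Lake", 8)
# Hypothetical: an Xe2 part with the A770's 32 Xe-cores. Not a real spec.
hypothetical = Xe2Config("Hypothetical 32-core Xe2", 32)

for cfg in (lunar_lake, hypothetical):
    print(f"{cfg.name}: {cfg.render_slices} Render Slices, "
          f"{cfg.vector_engines} Vector Engines, {cfg.lanes} lanes")
```

Lunar Lake works out to two Render Slices, 64 Vector Engines, and 1,024 lanes. The hypothetical 32-core part works out to four times that. Lane counts say nothing about clock speeds, memory bandwidth, or how well those lanes are kept busy, so treat this as a measure of scale, not performance.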

The new Xe2 architecture has been built to be scalable like that, Petersen says.


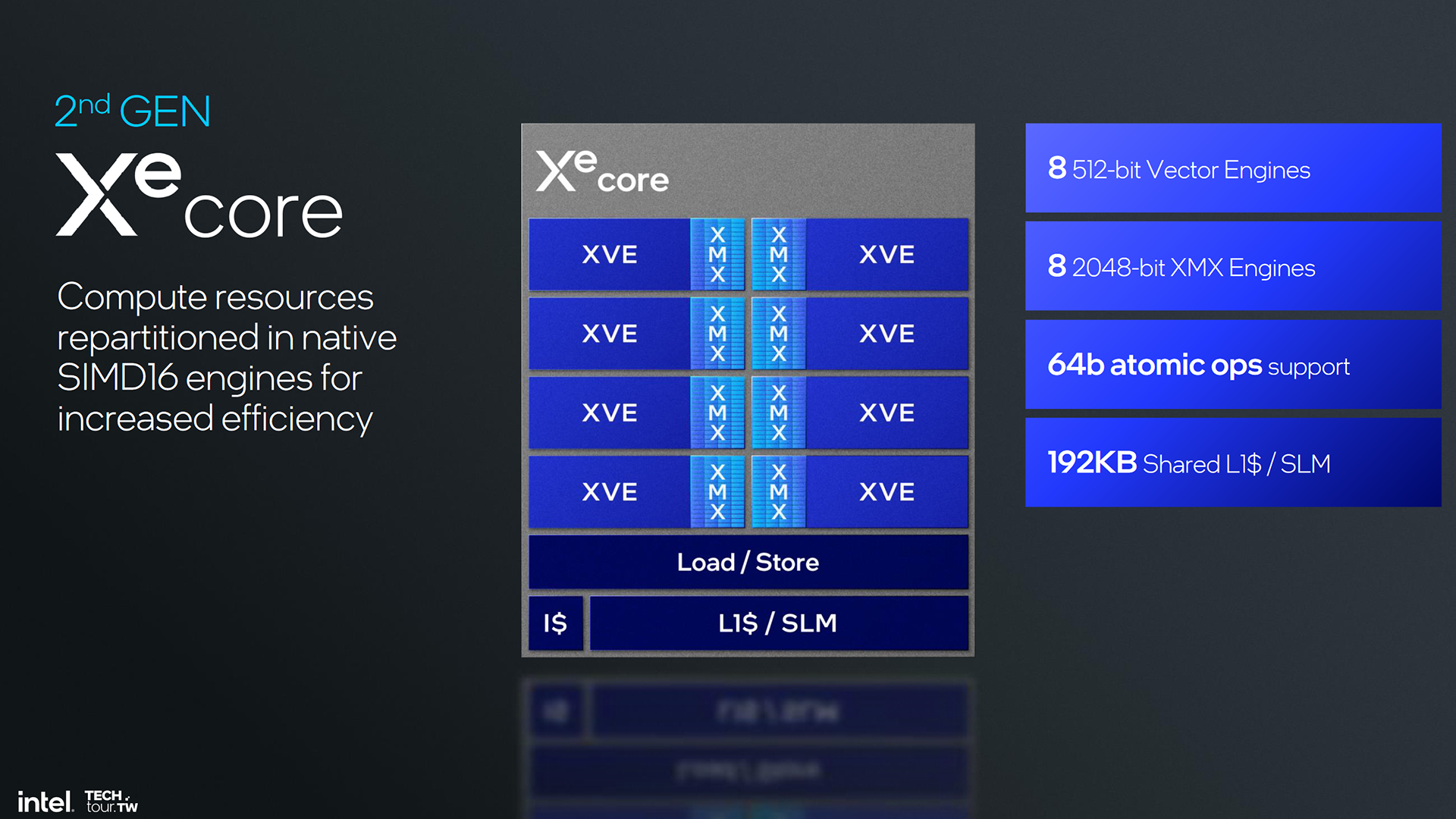

The Xe-cores in Xe2 have been improved for higher performance, better utilisation, and greater compatibility with games. That last point is particularly important, going off Intel's previous form.

"Most often you'll see games running right out of the box," Petersen says.

I sure hope that's the case. Clunky, inconsistent behaviour has been the thorn in the side of Intel's first generation of discrete cards, Alchemist. Getting a grip on that would be a big improvement.

As Petersen explains: "It's been a journey. And we've learned a lot about CPU limitations, about shader compiling, about DX9, and all of that effort has gone forward into Xe2."

Some of the lessons learned have led to "correcting" some mistakes of Alchemist to improve compatibility and efficiency. The shift to SIMD16, rather than SIMD8, in each Vector Engine is one step towards that, says Petersen.

"Our prior generation was SIMD8, which means, basically, there's eight lanes of computation for every instruction. We've moved that architecture to be 16 wide, which has a lot of efficiency benefits."

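As a simplified illustration of what the move from eight to 16 lanes means, the sketch below counts how many instruction issues it takes to run one instruction across a batch of work items at SIMD8 versus SIMD16. This is a toy model of our own, not Intel's description of its hardware. It ignores clocks, scheduling, and branch divergence, and the helper names are hypothetical.

```python
import math

def instruction_issues(work_items: int, simd_width: int) -> int:
    """Issues needed to run one instruction over every work item, assuming
    each issue processes `simd_width` items in parallel (toy model)."""
    return math.ceil(work_items / simd_width)

def lane_utilisation(work_items: int, simd_width: int) -> float:
    """Fraction of the issued lanes that carry real work."""
    lanes_issued = instruction_issues(work_items, simd_width) * simd_width
    return work_items / lanes_issued

for width in (8, 16):  # Alchemist-style SIMD8 vs Xe2-style SIMD16
    full = instruction_issues(64, width)
    partial = instruction_issues(20, width)
    print(f"SIMD{width}: 64 items -> {full} issues, 20 items -> {partial} issues, "
          f"{lane_utilisation(20, width):.1%} of lanes busy")
```

For a full batch of 64 items, SIMD16 needs half as many issues as SIMD8. A partly filled batch of 20 items leaves more lanes idle at SIMD16 (62.5% busy versus about 83% at SIMD8), which suggests the real-world gain depends on how well the compiler and driver keep those wider lanes fed.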
Another is hardware support for execute indirect, a command commonly used in game engines including Unreal Engine 5, which Xe2 now handles natively in its Command Front End. On Alchemist, execute indirect was emulated in software, which led to slowdowns.
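For anyone wondering what execute indirect actually does: in DirectX 12, `ExecuteIndirect` lets the GPU read draw or dispatch arguments, and optionally the number of commands, from a buffer in GPU memory. That means GPU-side work, such as a culling pass, can decide what gets drawn without a round trip to the CPU. The Python below is only a toy model of that flow. The function names are hypothetical, and it says nothing about how Intel's Command Front End is actually built.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class DrawArgs:
    # Same four fields as a D3D12 non-indexed draw argument record:
    # vertex count per instance, instance count, start vertex, start instance.
    vertex_count: int
    instance_count: int
    start_vertex: int
    start_instance: int

def gpu_culling_pass(objects: List[Tuple[str, int, bool]]) -> Tuple[List[DrawArgs], int]:
    """Hypothetical GPU-side pass: writes one argument record per visible
    object and returns the argument buffer plus the draw count."""
    args = []
    start = 0  # assumption: all objects share one vertex buffer, back to back
    for _name, vertex_count, visible in objects:
        if visible:
            args.append(DrawArgs(vertex_count, 1, start, 0))
        start += vertex_count
    return args, len(args)

def execute_indirect(argument_buffer: List[DrawArgs], count: int, max_commands: int) -> List[str]:
    """Toy command front end: issues min(count, max_commands) draws straight
    from the argument buffer, so the CPU never needs the count in advance."""
    issued = []
    for args in argument_buffer[:min(count, max_commands)]:
        issued.append(f"draw {args.vertex_count} vertices from {args.start_vertex}")
    return issued

scene = [("rock", 300, True), ("tree", 900, False), ("hut", 1200, True)]
arg_buffer, draw_count = gpu_culling_pass(scene)
print(execute_indirect(arg_buffer, draw_count, max_commands=8))
```

Here the culled tree never reaches the draw list, and the CPU only recorded a single command. Emulating that in software, as Alchemist did, means extra work to read the buffer back and turn it into ordinary draws.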

"Think about Xe2 as the next generation of GPU architecture designed to be more compatible with games and higher utilisation."

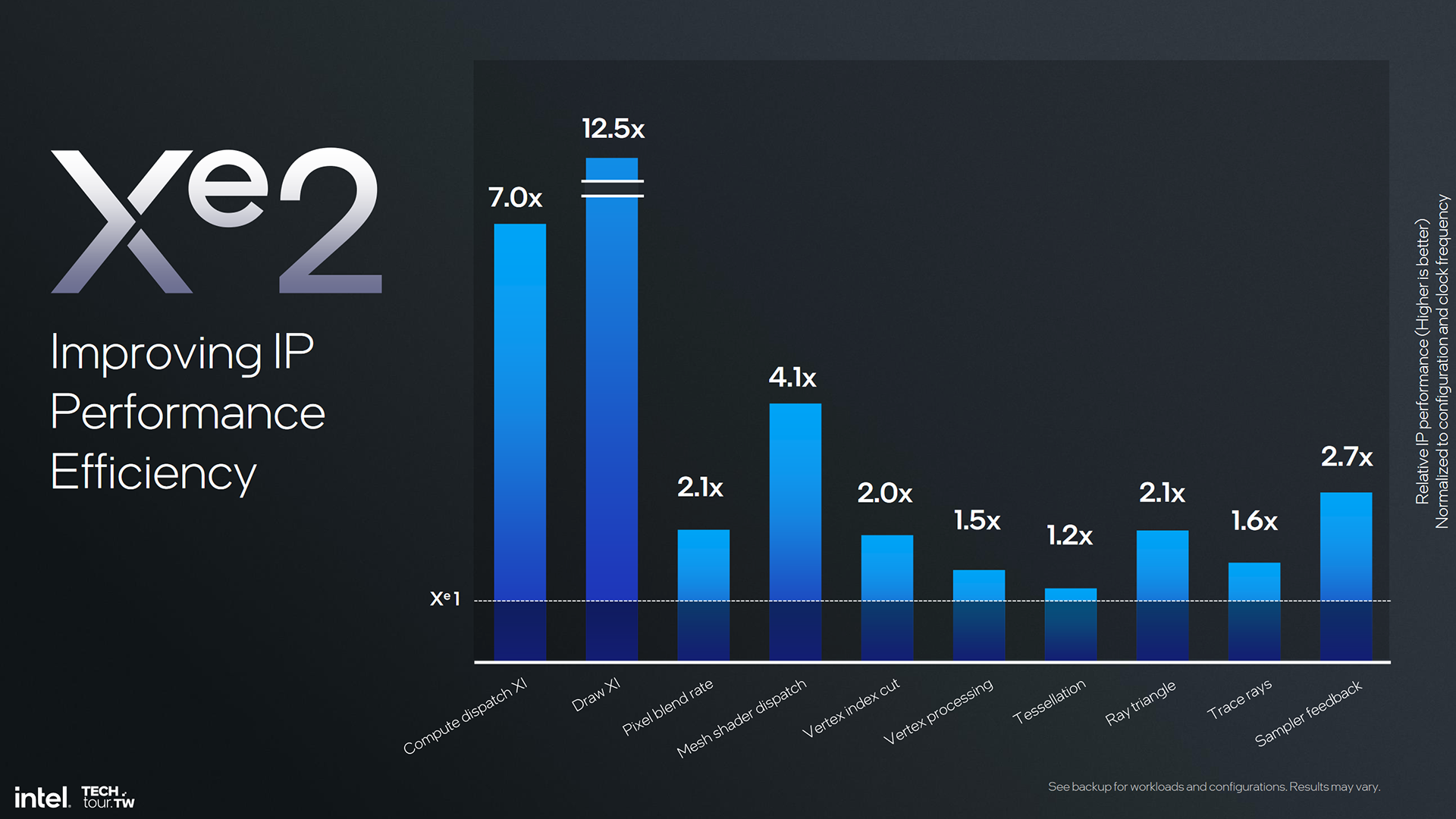

The higher utilisation point gets my attention. Alchemist never did manage to take full advantage of its rather sizeable silicon for the money. Think back to the lead-up to the Arc A770, which at one time was intended to compete with the mid-range of Nvidia's lineup. It couldn't make that stick, however, and ended up an entry-level contender.

If utilisation has improved with Xe2, a Battlemage GPU of similar size and spec could perform a lot better than its Alchemist equivalent.

The Xe2 architecture's Render Slice includes improvements to deliver 3x mesh shading performance, 3x vertex fetch throughput, and 2x throughput for sampling without filtering. Bandwidth requirements should be lower, and commands are more in line with what games often use.

Some of the changes appear small and fiddly on a technical level, but together they should mean Intel's next Arc cards, and Lunar Lake laptops, trip up on the latest games far less often than the first-gen Alchemist GPUs did.

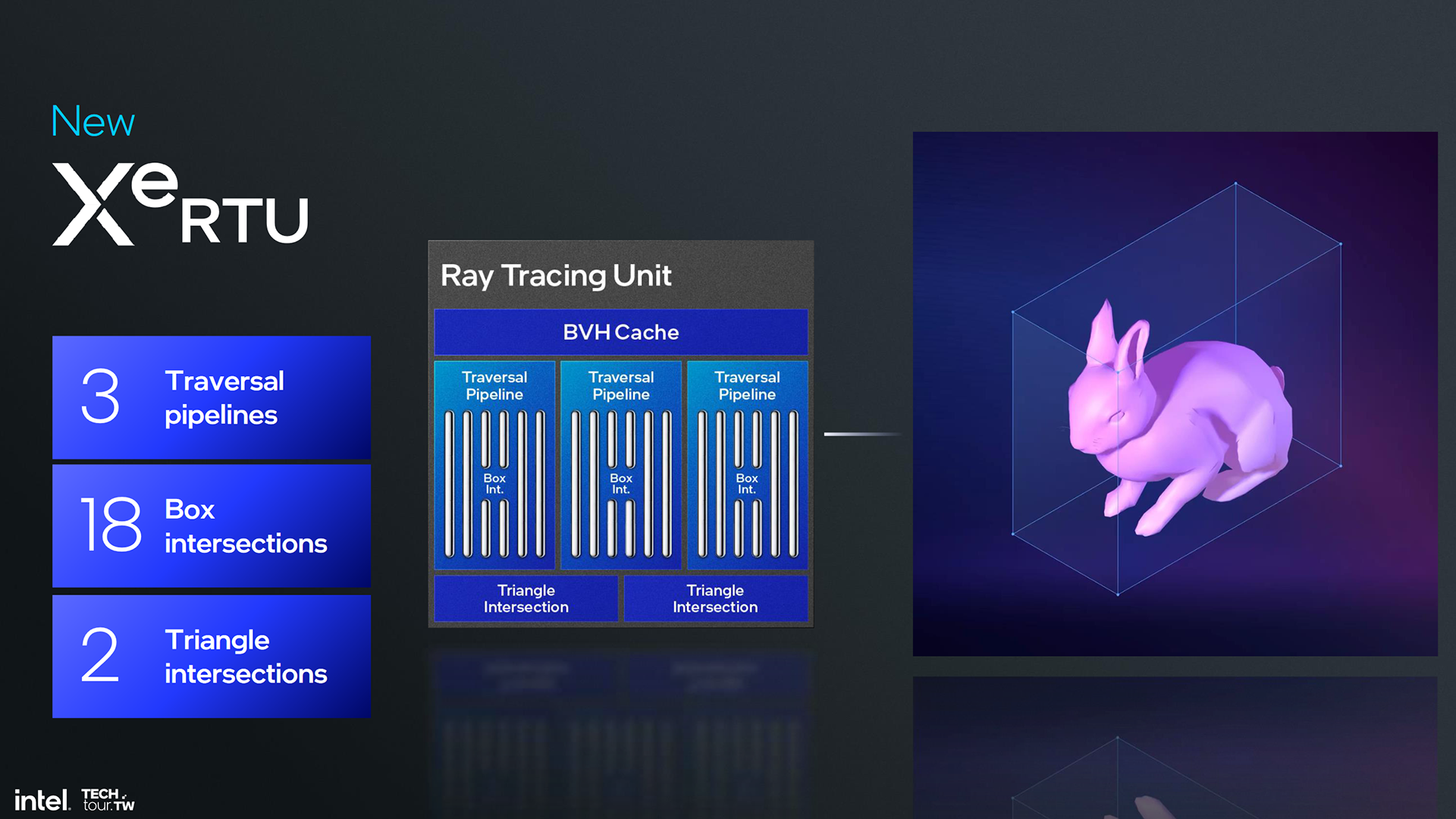

One of the more significant overhauls is to the Ray Tracing Unit (RTU) in Xe2. The new unit has more capacity for resolving BVH (bounding volume hierarchy) queries, which are used to speed up real-time ray tracing.
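For a sense of what a BVH query involves, here's a generic, textbook-style traversal in Python. Each node stores an axis-aligned bounding box, and a ray that misses a node's box skips everything beneath it. The scene is a made-up set of four boxes, and the code is an illustration of the general technique only. It is not a description of how Intel's RTU works; hardware units perform these box tests in fixed-function logic.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class Node:
    box_min: Vec3
    box_max: Vec3
    children: List["Node"] = field(default_factory=list)
    primitive_id: Optional[int] = None  # set on leaf nodes only

def ray_hits_box(origin: Vec3, direction: Vec3, box_min: Vec3, box_max: Vec3) -> bool:
    """Slab test: does the ray (t >= 0) pass through the axis-aligned box?"""
    t_near, t_far = 0.0, math.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this pair of slabs: it must start between them.
            if o < lo or o > hi:
                return False
            continue
        t1, t2 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
        if t_near > t_far:
            return False
    return True

def traverse(root: Node, origin: Vec3, direction: Vec3) -> List[int]:
    """Return IDs of leaf boxes the ray hits, pruning subtrees whose box it misses."""
    hits, stack = [], [root]
    while stack:
        node = stack.pop()
        if not ray_hits_box(origin, direction, node.box_min, node.box_max):
            continue  # miss: nothing under this node needs testing
        if node.primitive_id is not None:
            hits.append(node.primitive_id)
        else:
            stack.extend(node.children)
    return hits

def leaf(i: int, x0: float) -> Node:
    return Node((x0, 0.0, 0.0), (x0 + 1.0, 1.0, 1.0), primitive_id=i)

# Made-up scene: four unit boxes along x, grouped into two subtrees.
left = Node((0.0, 0.0, 0.0), (2.0, 1.0, 1.0), [leaf(0, 0.0), leaf(1, 1.0)])
right = Node((3.0, 0.0, 0.0), (5.0, 1.0, 1.0), [leaf(2, 3.0), leaf(3, 4.0)])
root = Node((0.0, 0.0, 0.0), (5.0, 1.0, 1.0), [left, right])

print(traverse(root, (0.5, 0.5, -1.0), (0.0, 0.0, 1.0)))  # [0]
print(traverse(root, (2.5, 0.5, -1.0), (0.0, 0.0, 1.0)))  # []
```

The second ray passes through the gap between the two groups, so both subtrees are rejected after a single box test each and no leaf is ever examined. Doing more of these box tests per clock is the kind of work a beefier RTU can take on.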

Petersen signs off by explaining some of these decisions. He says Intel aimed for its GPUs to be DirectX compliant, which they are, but underestimated how much they also needed to be "similar to the dominant architecture" in other ways.

So, basically, if you can't beat 'em, join 'em. The dominant architecture in this case is surely that of the market leader, Nvidia.

I don't mind how Intel gets it done, provided the end result is a graphics card I can reliably game on. Speaking of which, Intel's drivers have improved quite a bit since Arc first came out, and Battlemage shouldn't lose any of that progress.

On that note, Intel confirms that Lunar Lake and Battlemage will use the same drivers.

"This will get the same gaming drivers, the same gaming features, on the same release schedule that discrete graphics products do," says Robert Hallock, Intel technical marketing director.

Intel showed off Lunar Lake running F1 24 with XeSS on the game's release day, which Petersen used as evidence that Intel has come on leaps and bounds with day-one driver support.

As for when we'll see Battlemage launch, Intel won't confirm that key detail.

"Xe2, it's definitely coming," Petersen said, though likely in reference to Lunar Lake, which arrives sometime in Q3 of this year. That's sure to be the first time we can get our hands on Xe2 for ourselves and bash it through some benchmarks.


Jacob earned his first byline writing for his own tech blog. From there, he graduated to professionally breaking things as hardware writer at PCGamesN, and would go on to run the team as hardware editor. He joined PC Gamer's top staff as senior hardware editor before becoming managing editor of the hardware team, and you'll now find him reporting on the latest developments in the technology and gaming industries and testing the newest PC components.