Ghost Recon Breakpoint system requirements, settings, benchmarks, and performance analysis

Get better framerates in Breakpoint via FPS boost microtransactions.

Ghost Recon Breakpoint is a bit of a perplexing game. The latest installment of the series feels less like Ghost Recon and more like yet another take on an open world Ubisoft game full of microtransactions. It's not just the world and the game mechanics that feel familiar, however. The AnvilNext 2.0 engine is back, with a few tweaks that, oddly enough, make the game run quite a bit faster at maximum quality compared to Ghost Recon Wildlands. 4K ultra at more than 60 fps is actually possible, though it still requires a powerful graphics card.

Also typical of AnvilNext 2.0 is that CPU requirements are higher than in other games, and while officially you only need a Ryzen 3 1200 or Core i5-4460, you're not going to get anywhere near 144 fps with such a processor—and even 60 fps is going to be a stretch. Minimum fps is definitely a concern, and if you like buttery smooth framerates, a FreeSync or G-Sync compatible gaming monitor is highly recommended. If your PC hardware is looking a bit long in the tooth, whether for Ghost Recon Breakpoint or some other game, stay on the lookout for some good Black Friday deals. But let's get back to Breakpoint.

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Ghost Recon Breakpoint on a bunch of different AMD and Nvidia GPUs, multiple CPUs, and several laptops. See below for the full details, along with our Performance Analysis 101 article. Thanks, MSI!

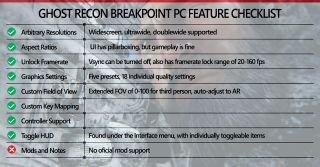

Ghost Recon Breakpoint's PC feature checklist is pretty typical of recent Ubisoft games. Resolution and aspect ratio support is good, you can sort of adjust field of view (the range is a bit limited) and tweak 18 advanced graphics settings (or choose from one of five presets), and the only missing feature is mod support. But you weren't really expecting Ubisoft to embrace mods, were you? Not when it has such amazing microtransaction potential! I'm just waiting for Ubisoft to start including microtransactions for improved performance and settings options. (That's a joke, Ubisoft. No more MTX, please.)

One final note before continuing: Ghost Recon Breakpoint is officially promoted by AMD. It's part of AMD's Raise the Game bundle if you purchase a qualifying AMD graphics card right now. That means the game could potentially favor AMD hardware, and it also supports AMD's FidelityFX filter, which improves image quality by undoing some of the blurriness introduced by temporal anti-aliasing. The good news is that FidelityFX is completely open, so you don't need an AMD GPU to enable the feature. And as we'll see in a moment, AMD doesn't seem to have much of a performance advantage over Nvidia; in fact, Nvidia's GPUs win nearly every meaningful match-up right now.

Ghost Recon Breakpoint system requirements

In terms of hardware, let me start with the official system requirements. Ubisoft goes above and beyond most games in listing five different sets of system requirements. From 1080p low all the way up to 4K ultra, Ubisoft has recommendations. It doesn't specify what sort of performance you'll get from the recommended hardware, but that's why I'm here with the benchmarks.

Minimum – Low Setting | 1080p

- OS: Windows 7/8.1/10

- CPU: AMD Ryzen 3 1200 / Intel Core i5-4460

- RAM: 8GB

- GPU: Radeon R9 280X / GeForce GTX 960 (4GB)

Recommended – High Setting | 1080p

- OS: Windows 7/8.1/10

- CPU: AMD Ryzen 5 1600 / Intel Core i7-6700K

- RAM: 8GB

- GPU: Radeon RX 480 / GeForce GTX 1060 6GB

Ultra – Ultra Setting | 1080p

- OS: Windows 10

- CPU: AMD Ryzen 7 1700X / Intel Core i7-6700K

- RAM: 16GB

- GPU: Radeon RX 5700 XT / GeForce GTX 1080

Ultra 2K – Ultra Setting | 2K

- OS: Windows 10

- CPU: AMD Ryzen 7 1700X / Intel Core i7-6700K

- RAM: 16GB

- GPU: Radeon RX 5700 XT / GeForce GTX 1080

Elite – Ultra Setting | 4K

- OS: Windows 10

- CPU: AMD Ryzen 7 2700X / Intel Core i7-7700K

- RAM: 16GB

- GPU: AMD Radeon VII / GeForce RTX 2080

There are obviously some oddities with the above specs, like the fact that the Ultra and Ultra 2K recommendations are identical. Based on my testing, I'd say the 1080p ultra recommendation should be a GTX 1070 or RX Vega 56, and 1440p ultra should be a GTX 1080 or RX 5700, but I'll get to that in a moment. I'd also venture a guess that the minimum specs will only manage 30 fps or more, not 60.

I can confirm that Breakpoint really doesn't like some 2GB graphics cards, which is why the 960 4GB is listed as the minimum spec. On a GTX 1050, performance in the built-in benchmark waffled between nearly 50 fps average on a good run and 25 fps on a subsequent run, and 1080p medium was effectively unplayable. A 1060 3GB, on the other hand, did remarkably well, handling up to 1080p very high at more than 60 fps. Finally, that 4K ultra recommendation isn't coming anywhere near 60 fps, especially not on the Radeon VII. The 2080 almost gets there, and dropping a few settings should do the trick, but as we'll see in the benchmarks, the Radeon VII struggles quite a bit.

Image gallery: Ghost Recon Breakpoint preset comparisons (Low, Medium, High, Very High, Ultra, and Ultra + temporal injection)

Ghost Recon Breakpoint settings overview

Ghost Recon Breakpoint has five presets along with 18 individual advanced settings that can be tweaked, not including resolution or resolution scaling. Here's the thing: on most graphics cards, the difference between minimum and maximum quality isn't very large, and some of the settings behave in unexpected ways. You can nearly double performance going from ultra to minimum on GPUs like the 1060 3GB, but part of that is due to its limited VRAM. On a GTX 1070, the low preset is only 70 percent faster than ultra, and on the Vega cards it's only 60 percent faster. Everything beyond the high preset also looks pretty much the same, as you can see in the above image gallery.
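Percentages like "70 percent faster" are ratios of framerates, and the direction matters: a 70 percent gain going from ultra down to low is not the same as a 70 percent loss going the other way. Here's a minimal Python sketch of that arithmetic; the helper names and the fps values are hypothetical, chosen for illustration rather than taken from my results.

```python
def percent_faster(fast_fps: float, slow_fps: float) -> float:
    """How much faster fast_fps is than slow_fps, as a percentage."""
    if slow_fps <= 0:
        raise ValueError("slow_fps must be positive")
    return (fast_fps / slow_fps - 1.0) * 100.0


def percent_slower(slow_fps: float, fast_fps: float) -> float:
    """How much slower slow_fps is than fast_fps, as a percentage."""
    if fast_fps <= 0:
        raise ValueError("fast_fps must be positive")
    return (1.0 - slow_fps / fast_fps) * 100.0


# Hypothetical framerates for one GPU, not measured results.
ultra_fps = 50.0
low_fps = 85.0

print(f"low is {percent_faster(low_fps, ultra_fps):.0f}% faster than ultra")
print(f"ultra is {percent_slower(ultra_fps, low_fps):.0f}% slower than low")
```

So a card that runs 70 percent faster at low only loses about 41 percent of its framerate going back up to ultra.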

The one setting I want to call out in advance is anti-aliasing. There are two settings: anti-aliasing and temporal injection. The latter can't be enabled if AA is off, but on many GPUs it appears temporal injection gives a decent 15 percent boost to performance. If you turn off AA (and thus temporal injection), the low preset actually runs about as fast as the medium preset with AA and injection enabled.

What is this so-called temporal injection, though? The description says, "Temporal Injection represents the technology of using samples from many frames to provide crisp high resolution images while keeping smooth framerate." That's fine, except it's basically a description of temporal AA.

Looking at some still images, it seems temporal injection is actually lowering the rendering resolution and then doing some form of image upscaling—a sort of cheap take on DLSS. (You can see the comparison below, or here are the full size images: 1440p ultra, 1440p ultra + temporal injection.) That would explain the performance boost, and I asked for additional details from Ubisoft. Here's what it had to say:

"Temporal Injection is a system that draws less pixels (causing the performance boost) and uses a temporal upscaling shader that temporally reconstructs a high resolution image. It can produce slightly blurry backgrounds in some cases, which may show up more when using a large screen or a low resolution. On high resolution (1440p or greater), however, the effect becomes barely noticeable and is a must have to achieve great performances."

My own experience more or less agrees with that. At 1080p, temporal injection is more noticeable than at 1440p or 4K (and it's possibly even worse at 720p). But it doesn't appear to be doing a strict lower resolution rendering and then upscaling. It seems to affect foliage and people the most, and distance may also play a role.

Regardless, I started testing with both AA and temporal injection enabled, and to keep the results consistent across all hardware, I left temporal injection enabled for all the testing that follows. It improves performance, and you can always turn it off if you prefer. It's the wrong choice as far as image quality goes, but the right choice if you're trying to boost performance, and it's almost required at 1440p and 4K. Anyway, I'm not going to rerun several hundred benchmarks at this point. Sorry. (Subtract 10-15 percent from the results for an estimate of the non-TI numbers.)
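If you want to apply that rule of thumb to a specific chart result, it's simple arithmetic. This is a rough sketch that assumes the flat 10-15 percent penalty holds for every GPU and resolution, which won't be exactly true; the function name and the 70 fps input are hypothetical.

```python
def estimate_without_ti(fps_with_ti: float,
                        min_drop: float = 0.10,
                        max_drop: float = 0.15) -> tuple[float, float]:
    """Estimate the (low, high) fps range with temporal injection disabled.

    Assumes a flat 10-15 percent drop from the TI-enabled result.
    """
    if not 0.0 <= min_drop <= max_drop < 1.0:
        raise ValueError("drops must satisfy 0 <= min_drop <= max_drop < 1")
    return fps_with_ti * (1.0 - max_drop), fps_with_ti * (1.0 - min_drop)


# Hypothetical chart value: 70 fps with temporal injection enabled.
low, high = estimate_without_ti(70.0)
print(f"without TI: roughly {low:.1f}-{high:.1f} fps")
```

In other words, a card sitting at 70 fps with TI enabled could easily dip under 60 fps with it turned off.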

Moving on to the other settings, let's start with the ones that basically don't matter, and there are a lot of them. All of the following showed less than a 3 percent difference in performance between their maximum and minimum settings: Anisotropic Filtering, Bloom, FidelityFX, Fob Background Blur, Long Range Shadows, Motion Blur, Screen Space Shadows, Subsurface Scattering, and Texture Quality. You can safely leave those alone if you're looking for higher framerates.

That's basically half of the settings, and many more only cause a 3-5 percent change in performance: Ambient Occlusion, Grass Quality, Level of Detail, Screen Space Reflections, Terrain Quality, and Volumetric Fog. If you're keeping score, that only leaves three settings that cause more than a five percent change in performance, and I already covered two of those at the start.

That leaves Sun Shadows (detailed shadows cast by the sun) as the only remaining setting, and dropping this from ultra to low can boost performance by 9-10 percent. Needless to say, turning this down also tends to cause the most visible change in image quality, as dynamic shadows are pretty noticeable.

Sometimes tweaks to the settings may stack up to slightly larger improvements, so I checked that as well. Going from the ultra preset to the minimum setting on the nine settings that caused less than a 3 percent change in performance yielded a 2.4 percent improvement in framerates. Yeah, those settings really don't matter. In contrast, dropping the seven settings that give modest fps increases from ultra to high boosts performance by around 22 percent, nearly the same as going from the ultra to high preset.
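Why don't small per-setting gains add up to much? One simplified way to think about it is to sum the frame time each change saves and convert back to fps. This sketch assumes a GPU-bound scene where each setting's savings are independent and simply add together; real settings can overlap, and a CPU limit can hide the gains entirely. The function and the example gains are hypothetical.

```python
def combined_gain_pct(single_gains_pct: list[float]) -> float:
    """Estimate the combined fps gain from several setting changes.

    Each entry is the percent fps gain measured when changing one setting
    on its own. A gain of g percent removes 1 - 1/(1 + g/100) of the
    frame time; this model assumes those savings are independent and add up.
    """
    saved_fraction = sum(1.0 - 1.0 / (1.0 + g / 100.0) for g in single_gains_pct)
    if saved_fraction >= 1.0:
        raise ValueError("combined savings would exceed the whole frame time")
    return (1.0 / (1.0 - saved_fraction) - 1.0) * 100.0


# Nine hypothetical settings worth about 0.3 percent each.
print(f"{combined_gain_pct([0.3] * 9):.1f}%")
# Two hypothetical settings worth 10 percent each.
print(f"{combined_gain_pct([10.0, 10.0]):.1f}%")
```

Under this model, gains compound slightly rather than simply adding (two 10 percent gains give about 22 percent, not 20), but a pile of sub-1 percent changes still barely moves the needle.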

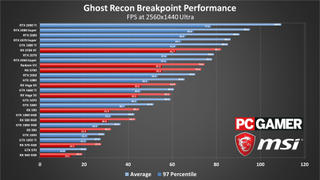

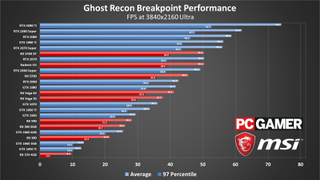

Ghost Recon Breakpoint graphics card benchmarks

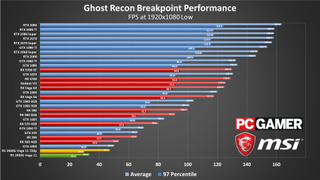

Ghost Recon Breakpoint includes its own benchmark tool, which was used for all of the benchmark data. Each GPU is tested multiple times and I use the best result, though outside of cards with less than 3GB VRAM, the variability between runs was largely meaningless (1 percent margin of error). The built-in benchmark doesn't represent every aspect of the game. Some areas will perform better, some worse, but it does give a good baseline summary of what sort of performance you can expect.

One thing to note is that there are a few very light scenes in the benchmark (e.g., the first several seconds at the snowy train tracks), which may artificially boost overall performance. As noted earlier, performance is actually better than in Ghost Recon Wildlands, but some of that may simply be due to differences in the benchmark sequence, plus the new temporal injection setting. Pay attention to the 97th percentile minimums (calculated as the average fps of the highest 3 percent of frametimes), which give a fair representation of worst-case performance (e.g., in a firefight).
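For anyone who wants to compute a similar metric from their own frametime logs, here's a minimal Python sketch. It sorts the frametimes, keeps the slowest 3 percent, and converts their average back to fps. The function names and sample data are hypothetical, and it assumes "average fps" means total frames divided by total time (1000 / mean frametime) rather than a mean of per-frame fps values; the exact averaging behind my charts isn't spelled out here.

```python
def average_fps(frametimes_ms: list[float]) -> float:
    """Average fps over a run: total frames divided by total time."""
    if not frametimes_ms:
        raise ValueError("need at least one frametime")
    return 1000.0 * len(frametimes_ms) / sum(frametimes_ms)


def p97_minimum_fps(frametimes_ms: list[float]) -> float:
    """Average fps of the slowest 3 percent of frames (the highest frametimes)."""
    if not frametimes_ms:
        raise ValueError("need at least one frametime")
    slowest_first = sorted(frametimes_ms, reverse=True)
    # Ceiling of 3 percent of the frame count using integer math,
    # which is always at least one frame for a non-empty run.
    count = (len(slowest_first) * 3 + 99) // 100
    return average_fps(slowest_first[:count])


# Hypothetical run: 97 smooth 10 ms frames plus three 25 ms hitches.
frames = [10.0] * 97 + [25.0] * 3
print(f"average: {average_fps(frames):.1f} fps")
print(f"97th percentile minimum: {p97_minimum_fps(frames):.1f} fps")
```

Three brief hitches barely dent the average, but they dominate the 97th percentile figure, which is why that number is the better indicator of stutter.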

All of the GPU testing is done using an overclocked Intel Core i7-8700K with an MSI MEG Z390 Godlike motherboard, using MSI graphics cards, unless otherwise noted. AMD Ryzen CPUs are tested on MSI's MEG X570 Godlike, except the 2400G which uses an MSI B350I board since I need something with a DisplayPort connection (which most of the X370/X470/X570 boards omit). MSI is our partner for these videos and provides the hardware and sponsorship to make them happen, including three gaming laptops (the GL63, GS75, and GE75).

For these tests, I've checked all five presets at 1080p (yes, some of the results on different presets are nearly identical), plus 1440p and 4K using the ultra preset. Anti-aliasing and temporal injection were left on for all of the tests, as the combination improves performance (at the cost of image fidelity). Minimum fps also tended to fluctuate quite a bit, and I'd sometimes get a stall of several seconds during a benchmark run, so I ran multiple tests at each setting for each component and am reporting the best result.
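The "run it several times and keep the best result" approach is easy to reproduce. Here's a short sketch, assuming you've recorded the average fps of each run; it reports the best run along with the spread between best and worst, so unstable cases (like the 2GB cards) stand out. The 1 percent threshold mirrors the margin of error mentioned above, while the function name and sample numbers are hypothetical.

```python
def summarize_runs(avg_fps_runs: list[float],
                   tolerance_pct: float = 1.0) -> tuple[float, float, bool]:
    """Return (best fps, spread in percent, whether the spread is within tolerance)."""
    if not avg_fps_runs:
        raise ValueError("need at least one run")
    best = max(avg_fps_runs)
    worst = min(avg_fps_runs)
    if best <= 0:
        raise ValueError("fps values must be positive")
    spread_pct = (best - worst) / best * 100.0
    return best, spread_pct, spread_pct <= tolerance_pct


# Hypothetical runs: a stable card, then an erratic 2GB card.
for runs in ([101.0, 100.2, 100.6], [48.0, 25.0, 35.0]):
    best, spread, stable = summarize_runs(runs)
    status = "stable" if stable else "unstable, rerun"
    print(f"best {best:.1f} fps, spread {spread:.1f}% ({status})")
```

Reporting the best run favors each card slightly; a median would be more conservative, but when the spread is around 1 percent the choice barely matters.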

I used the latest AMD and Nvidia drivers available at the time for testing: AMD 19.9.3 and Nvidia 436.48, both of which are game ready for Ghost Recon Breakpoint. I also tried to test Intel and AMD integrated graphics at 720p low, but the Intel GPU refused to run the game. (I'm told an updated driver is in the works.) That was using the most recent 26.20.100.7212 drivers, and it would crash to desktop while attempting to load the main menu.

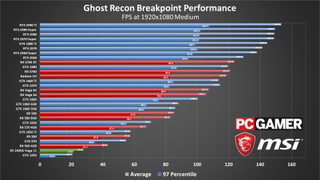

Ghost Recon Breakpoint can look a bit washed out at low quality, which again makes the relatively middling performance concerning. The GTX 1050 and RX 560 fail to clear 60 fps, but more critically, the GTX 1050's performance was prone to serious fluctuations. At one point, performance dropped to around 25 fps in the benchmark, and I ended up having to restart the PC to "fix" things. A second run right after the first test (shown in the chart) got 35 fps. I would be extremely cautious about buying Ghost Recon Breakpoint if you don't have a dedicated GPU with at least 4GB of VRAM (or the 1060 3GB).

Minimum fps tends to be more variable than in other games, which is pretty typical of the AnvilNext 2.0 engine. You'll need at least a GTX 1650 to get a steady 60 fps (i.e., minimums above 60). The GTX 970 also performs quite poorly, dropping below even the GTX 1050 Ti.
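Throughout these charts I use two thresholds: averaging 60 fps, and keeping minimums (the 97th percentile figure) at or above 60 for a steady 60. As a small hypothetical helper, with made-up numbers in the example, that classification looks like this:

```python
def rate_for_target(avg_fps: float, p97_min_fps: float, target: float = 60.0) -> str:
    """Classify a result against an fps target.

    "steady" means the 97th percentile minimum reaches the target,
    "average only" means just the average fps does.
    """
    if p97_min_fps >= target:
        return "steady"
    if avg_fps >= target:
        return "average only"
    return "below target"


# Hypothetical (average, minimum) results for three cards at one setting.
for avg, low in [(82.0, 64.0), (71.0, 52.0), (47.0, 35.0)]:
    print(avg, low, rate_for_target(avg, low))
```

The same helper works for a 144Hz display by passing target=144.0.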

As for integrated graphics, Intel is out of the picture, but AMD's Vega 11 did pretty well at 720p low, and even managed a playable 33 fps at 1080p low. It's not going to be the best experience for a shooter, but it's better than crashing to desktop. Interestingly, Vega 11 actually did better than the GTX 1050 at medium quality, though that's not saying much as it stayed below 30 fps.

CPU limits are also present, at least on the fastest GPUs. The RTX 2070 and above all end up with a tie at around 160 fps, with a bit of fluctuation. Meanwhile, AMD's GPUs seem to hit a lower ceiling of around 130 fps. The result is that AMD's GPUs underperform compared to Nvidia, despite this being an AMD promoted game. The RX 5700 XT ends up being AMD's fastest GPU at 1080p low, beating even the Radeon VII. However, Nvidia's 1080, 1660 Ti, and RTX 2060 all outperform it.
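A simplified way to picture that CPU ceiling: each frame has to wait for both the CPU and the GPU, so the delivered framerate is roughly capped by whichever is slower. This sketch assumes the two limits are independent and ignores pipelining and driver overhead. The 160 fps ceiling echoes the chart, but the GPU-only throughputs and card names are made up.

```python
def delivered_fps(cpu_limit_fps: float, gpu_limit_fps: float) -> float:
    """Roughly the framerate you'd see: the slower of the CPU and GPU limits."""
    if cpu_limit_fps <= 0 or gpu_limit_fps <= 0:
        raise ValueError("limits must be positive")
    # Working in frame times: the longer of the two per-frame costs wins.
    cpu_ms = 1000.0 / cpu_limit_fps
    gpu_ms = 1000.0 / gpu_limit_fps
    return 1000.0 / max(cpu_ms, gpu_ms)


cpu_ceiling = 160.0  # roughly where the fastest GPUs tie at 1080p low
# Hypothetical GPU-only throughputs for three cards at 1080p low.
for name, gpu_fps in [("card A", 140.0), ("card B", 190.0), ("card C", 240.0)]:
    print(name, round(delivered_fps(cpu_ceiling, gpu_fps)))
```

Cards B and C end up tied even though C is much faster, which is the same pattern as the RTX 2070 and above clustering around 160 fps. In this model, a lower ceiling like the roughly 130 fps AMD's cards hit simply acts as a smaller cap.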

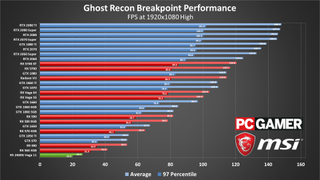

Performance with the medium and high presets is very similar on most of the GPUs. The only major item to note is that the GTX 1050 really struggled at the medium preset because it only has 2GB VRAM, so I didn't bother going any higher. Performance drops around 11 percent on average going from low to medium, but just 3-4 percent on average going from medium to high. Individual GPUs may show slightly smaller or larger deltas, but in general the medium and high presets perform about the same.

Nvidia GPUs continue to dominate the top of the charts. That's partly because there are simply more of them to begin with, but the best AMD GPU is the RX 5700 XT and that fails to match the RTX 2060. I don't know if it's a driver problem or just the way Breakpoint runs, though it's interesting to note that the 10-series Nvidia GPUs also perform worse relative to the newer Nvidia cards. My best guess, other than drivers, is that the Turing architecture's concurrent FP and INT support is more beneficial here than in other games.

As long as you have a midrange GPU with at least 4GB VRAM (e.g., RX 570 or GTX 1650), you can still break 60 fps, and the 1060 3GB and above also keep minimums above 60. 144 fps is also possible on the RTX 2070 Super and above, but minimums sit around 100 fps, and there's still a clear CPU limit for the fastest graphics cards. A FreeSync or G-Sync compatible 144Hz display would be your best bet for a smooth and tearing-free experience.

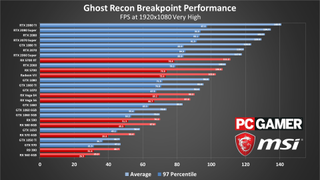

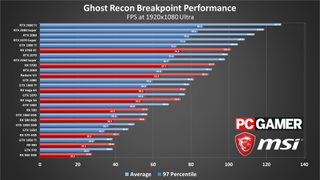

The very high and ultra presets finally start to move beyond CPU bottlenecks on the fastest GPUs, though the CPU is still a factor. The various RTX cards remain pretty tightly clustered at the top of the chart, and the 2080 Ti is only 30 percent faster than the 2060, even though it has twice the computational power and nearly twice the bandwidth. AMD's lower maximum fps also becomes a bit less of a factor, with the RX 5700 XT moving ahead of the 2060 at very high, and the 5700 also pulling ahead at 1080p ultra. That's still not a great showing, however.

Cards with 4GB or more VRAM still do okay with very high, but the 1650 and 570 both fall below 60 fps now. You'll need an RX 580 8GB or faster to average 60, and the GTX 1660 or higher to keep minimums above 60. Move up to ultra and you'll need at least a GTX 1660 to average 60 fps, with the GTX 1080 and above mostly keeping minimums above that mark.

I say "mostly" because the Radeon VII continues to deliver abnormally poor performance. Its 16GB of HBM2 isn't really helping here, which suggests drivers are to blame. Given this is the third major AMD-promoted game to launch in the past month, not to mention the relatively small number of Radeon VII owners, my bet is that AMD is more focused on improving performance on the new RX 5700 cards right now.

1440p ultra as usual requires a lot of GPU horsepower to maintain smooth framerates. The Vega 56 and GTX 1070 nearly get there, with the GTX 1660 Ti and Vega 64 just barely averaging 60 fps or more, but only the RX 5700 XT and above can keep minimums above 60. Also remember that I tested with temporal injection enabled. Turn that off and performance drops 10-15 percent, meaning only the 2080 Super and 2080 Ti are likely to stay above 60.

Alternatively, dropping to the high preset while keeping the resolution at 1440p should boost performance by around 30-40 percent. That should get the GTX 1660 and faster GPUs into the 60 fps range, and the difference in image fidelity isn't that noticeable. At least at 1440p, the GPU rankings start to look more or less as expected. Some cards still underperform, like the Radeon VII, or perhaps it's just that Turing overperforms. Architectural differences could also play a role in the relatively strong showing of AMD's RX 5700 series parts here.

What about 4K ultra—is it possible to hit reasonable framerates in Breakpoint? Somewhat surprisingly, yes, it is. Okay, so only the RTX 2080 Super and RTX 2080 Ti average 60 fps or more, and that's with temporal injection enabled, but it's still better than the 50 fps the 2080 Ti gets in Ghost Recon Wildlands at 4K ultra. Minimum fps continues to be a concern, but 4K is certainly within reach of quite a few GPUs. Drop to 4K high or even medium and the RX 5700 and faster should all be pretty smooth, particularly with a FreeSync or G-Sync compatible display.

Ghost Recon Breakpoint CPU benchmarks

Given the apparent CPU bottleneck at lower settings with faster GPUs, you might expect there to be a relatively large gap in performance between the fastest and slowest CPUs. I've used my usual selection of relatively modern processors, and again I want to point out that the Core i3-8100 tends to perform quite similarly to the i7-4770K, and the i5-8400 is generally close to the i7-7700K / i7-6700K in performance. All of the CPUs were tested with the MSI RTX 2080 Ti Duke 11G OC to put as much emphasis on the CPU as possible, and using a slower GPU would make the charts look more like the 1440p and 4K ultra charts. Here are the results:

First, let me just point out that AnvilNext 2.0 has always been pretty demanding on the CPU side, and it doesn't usually scale well to ultra high framerates (e.g., look at Assassin's Creed Odyssey). Whereas Wolfenstein Youngblood can reach into the 300+ fps range, the fastest CPU in Breakpoint gets 173 fps, and more demanding areas can end up in the 120-130 fps range.

Having more cores and threads also helps, along with clockspeed, so while Intel claims the top two spots at 1080p low, one of those CPUs is overclocked. At stock, the 8700K falls behind AMD's 3900X and 3700X.

It's actually a bit surprising how close the Ryzen 5 3600 is to the Ryzen 7 3700X, considering it has two fewer cores and gives up a couple hundred MHz in clockspeed—it's effectively tied with the stock 8700K. AMD's previous gen Ryzen 5 2600 on the other hand doesn't do nearly as well. Still, everything from the i5-8400 and up keeps minimum fps above 60, which is more than enough for most PC gamers.

The CPU standings mostly remain the same at higher quality settings. The i5-8400 moves ahead of the 2600 in many of the charts, but with worse minimum fps. Interestingly, the stock 8700K also pulls ahead of all the Ryzen CPUs at 1440p and 4K, where normally we see a tie between all the processors. I'm not sure why, but the Intel chips seem to eke out just a bit higher fps at the highest resolutions.

Still, the only CPU that really doesn't do very well is the i3-8100, which stutters a bit even at 1080p and minimum quality. If you want to maintain 60 fps in Breakpoint, I recommend getting at least a 6-core processor.

Ghost Recon Breakpoint laptop benchmarks

Considering the GPU and CPU benchmarks so far, Ghost Recon Breakpoint is likely to struggle a bit on laptops that don't have a 6-core processor. That includes the GL63, which packs an RTX 2060 but pairs it with a 4-core/8-thread Core i5-8300H. The other two laptops have 6-core/12-thread Core i7-8750H processors, giving them a clear advantage at lower settings.

Generally speaking, the RTX 2060 and RTX 2070 Max-Q deliver similar performance, so most of the difference between the GL63 and GS75 can be attributed to the CPU. The GL63 can't keep minimum fps above 60 even at 1080p low, and shows almost no change in minimum fps up through the very high preset. Average fps on the RTX 2060 even comes out ahead of the GS75 at the very high and ultra presets, but the weaker CPU is still holding it back.

The GE75 also struggles a bit with minimum fps, though at least it stays above 60 at all five tested presets. It's not enough to fully benefit from the 144Hz display, and it's only at very high and ultra that it starts to put some distance between itself and the other two laptops. This is the price gamers pay for going with a laptop instead of a desktop: higher cost, lower performance, but you can pack it up and take it to a LAN party or a friend's house more easily.

Final thoughts

Desktop PC / motherboards / Notebooks

MSI MEG Z390 Godlike

MSI Z370 Gaming Pro Carbon AC

MSI MEG X570 Godlike

MSI X470 Gaming M7 AC

MSI Trident X 9SD-021US

MSI GE75 Raider 85G

MSI GS75 Stealth 203

MSI GL63 8SE-209

Nvidia GPUs

MSI RTX 2080 Ti Duke 11G OC

MSI RTX 2080 Super Gaming X Trio

MSI RTX 2080 Duke 8G OC

MSI RTX 2070 Super Gaming X Trio

MSI RTX 2070 Gaming Z 8G

MSI RTX 2060 Super Gaming X

MSI RTX 2060 Gaming Z 8G

MSI GTX 1660 Ti Gaming X 6G

MSI GTX 1660 Gaming X 6G

MSI GTX 1650 Gaming X 4G

AMD GPUs

MSI Radeon VII Gaming 16G

MSI Radeon RX 5700 XT

MSI Radeon RX 5700

MSI RX Vega 64 Air Boost 8G

MSI RX Vega 56 Air Boost 8G

MSI RX 590 Armor 8G OC

MSI RX 580 Gaming X 8G

MSI RX 570 Gaming X 4G

MSI RX 560 4G Aero ITX

Thanks again to MSI for providing the hardware for our testing of Ghost Recon Breakpoint. If I'm being kind, this is a game that really benefits from a faster CPU and a powerful graphics card. Alternatively, the game looks like it was released prematurely, with clearly less than fully optimized performance, plenty of bugs, and a story that's likely to disappoint long-time fans of the Ghost Recon franchise. It's still fun, sure, and performance is at least equal to or better than Wildlands, but that's a pretty low bar to clear.

For AMD, this is yet another less than stellar showing. That's particularly damning considering AMD is promoting Ghost Recon Breakpoint, potentially giving a free copy to purchasers of the RX 5700 series graphics cards. But since you get a choice—Ghost Recon Breakpoint or Borderlands 3—unless you already own Borderlands 3, I'd pass on Breakpoint. Maybe it will get better with future patches and/or driver updates, but right now our review found the game severely lacking.

October is going to be a busy month for major game launches. With Ghost Recon Breakpoint now behind us, we can look forward to The Outer Worlds, Call of Duty, and Red Dead Redemption 2. Check back later this month for more benchmarks and performance analysis.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
