Forget the CoD: Modern Warfare 2 best settings, it's all about the upscalers

The myriad settings make minimal difference, but turning on one of the many upscalers absolutely will.

Call of Duty: Modern Warfare 2 is here. Again. Just 13 short years since the first of its name graced our desktops, it's time to don the camo, break out your weird character-combo pseudonyms, and murder each other in the name of Activision. I mean, that's why we all go nuts for CoDs, right?

But how to get the game running right? Unfortunately, I can't give you any help when it comes to dealing with the myriad crashing issues the game seems to be suffering under already, nor am I able to solve the problems with network connections.

We're not going to pretend that simply verifying your game files, restarting your router, or rolling back to a previous GPU driver will suddenly make your problems go away. By all means give them a go, but none of those 'solutions' worked for us.

I've still been struggling with one of my office PCs steadfastly refusing to connect to Activision's servers, and the reason? Well, the nonsensical "Hueneme Concord" error is what the game trots out, and I've had no joy fixing the issue. Luckily our standard test rig hasn't had any such issue, despite being on the exact same super-fast, low-latency network.

Sigh.

What I can help with, however, is the thorny issue of in-game graphics settings. There are plenty to choose from, as well as a handful of simple presets that mean you don't have to worry about individually tweaking a setting here or there.

And the good news is that CoD: MW2 is actually pretty straightforward in terms of what you need to do to get great performance. The path to the highest frame rates, as is increasingly the case, is all about the upscaler you use.


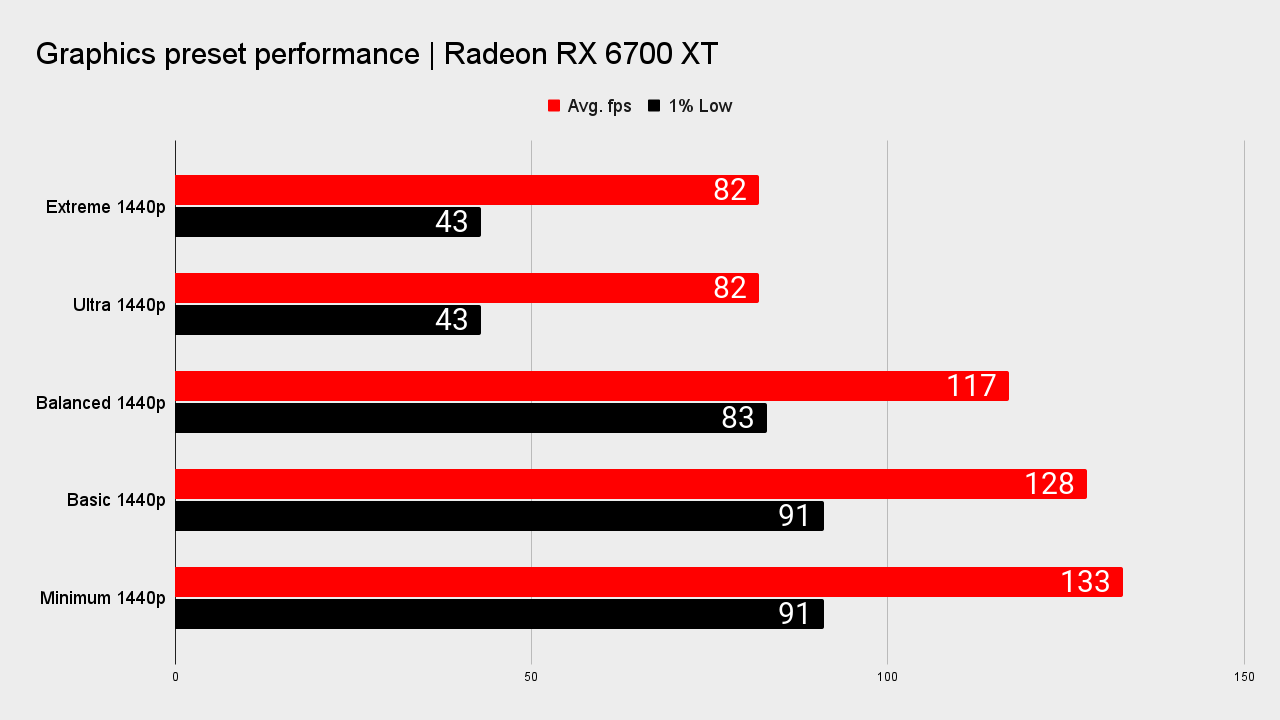

But to start with, you can play around with the basic in-game presets. We've tested with a Radeon RX 6700 XT GPU, which is a very capable graphics card in its own right, but nowhere near the top of today's stack. At 1440p it will deliver over 80 fps in the highest graphical preset, but it will still give us an idea of which settings are worth tweaking: the ones that actually have an impact on frame rates without much of an impact on visual fidelity.

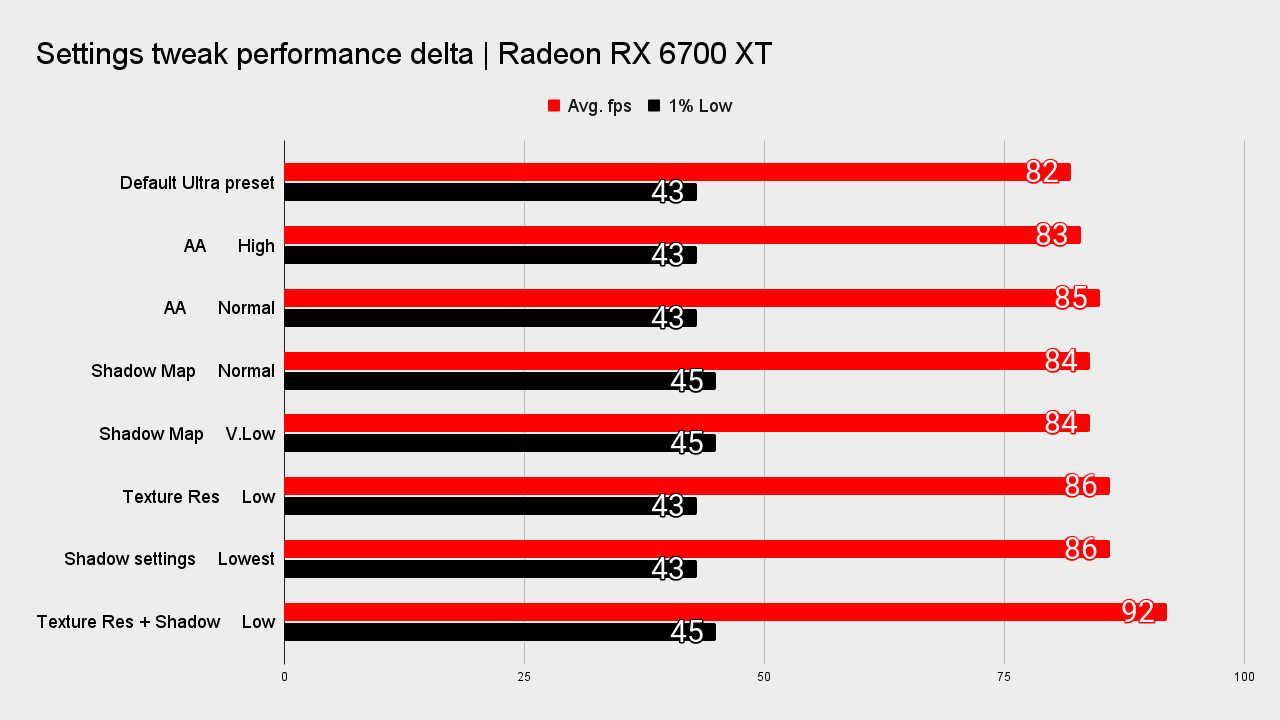

Strangely you'll get the same figure for the setting below Extreme, noted as Ultra. I noticed the same thing while testing the RTX 4090 with the new Modern Warfare 2—no difference in performance at all until you hit Balanced. Texture resolution is pushed up for Extreme over Ultra, as is Particle Lighting and the Spot light shadow cache. But none of that makes any difference to the frame rates you'll see.

Dropping to Balanced and then Basic at native 1440p will net you a 43% and 56% performance increase over Ultra settings, respectively. Since tweaking the individual settings by hand won't deliver much of a performance boost (as the lack of a frame rate delta between Extreme and Ultra shows), you might as well play around with these presets instead.

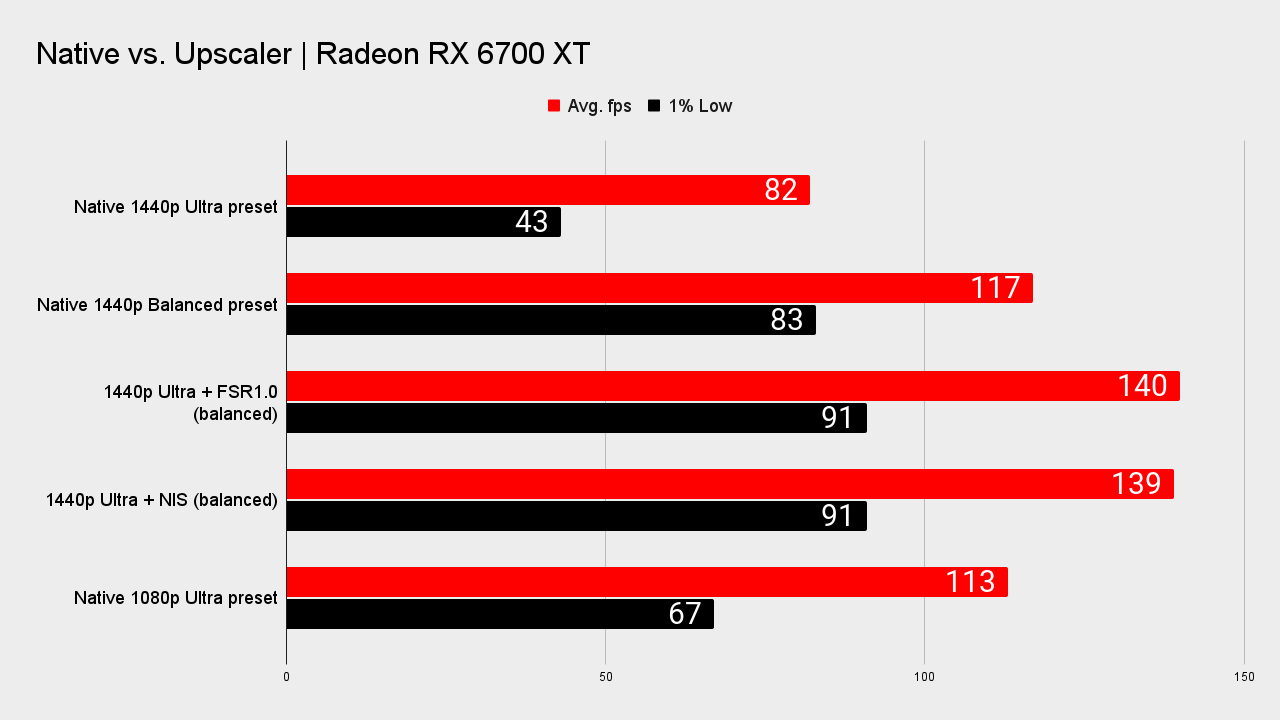

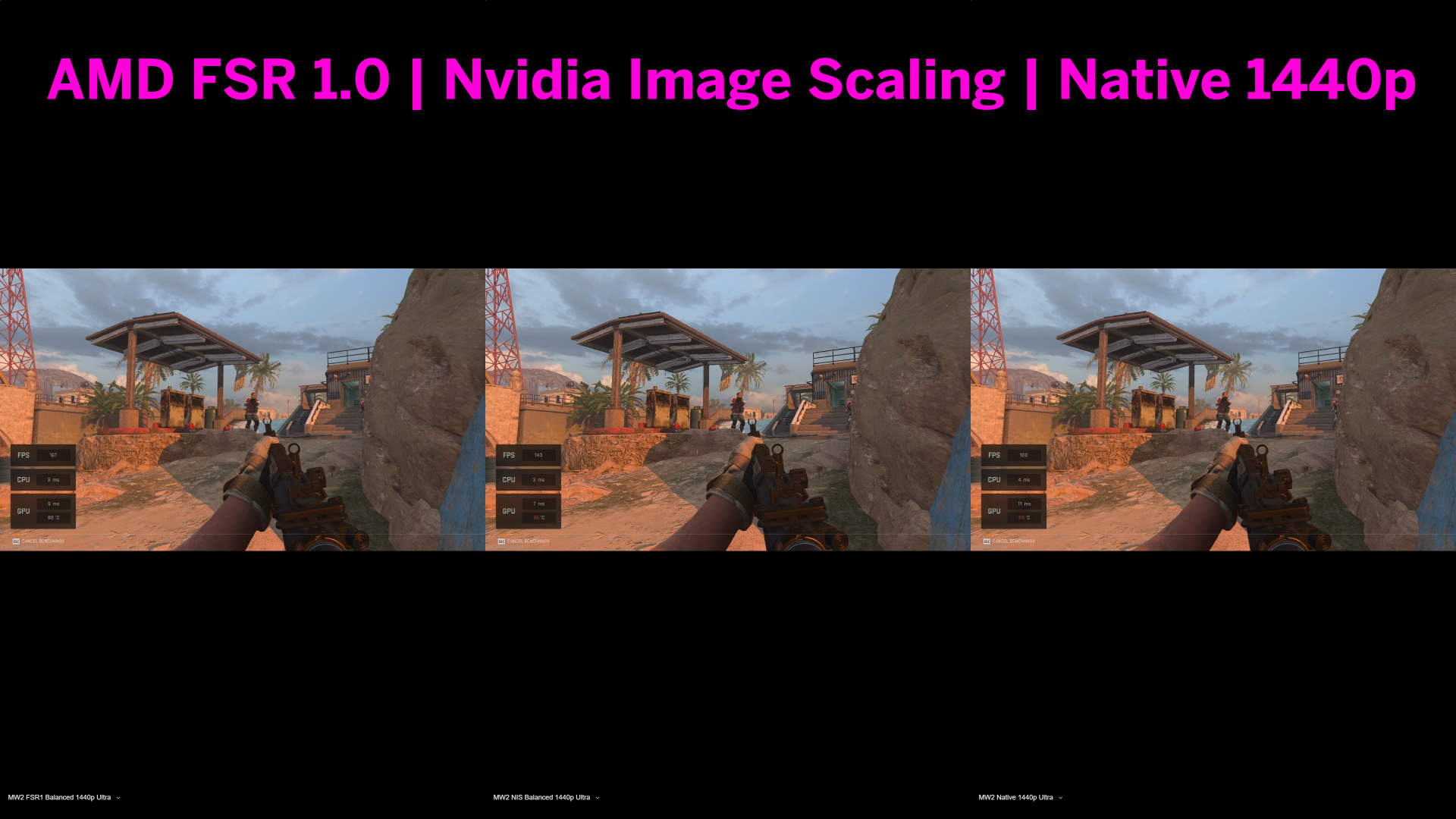

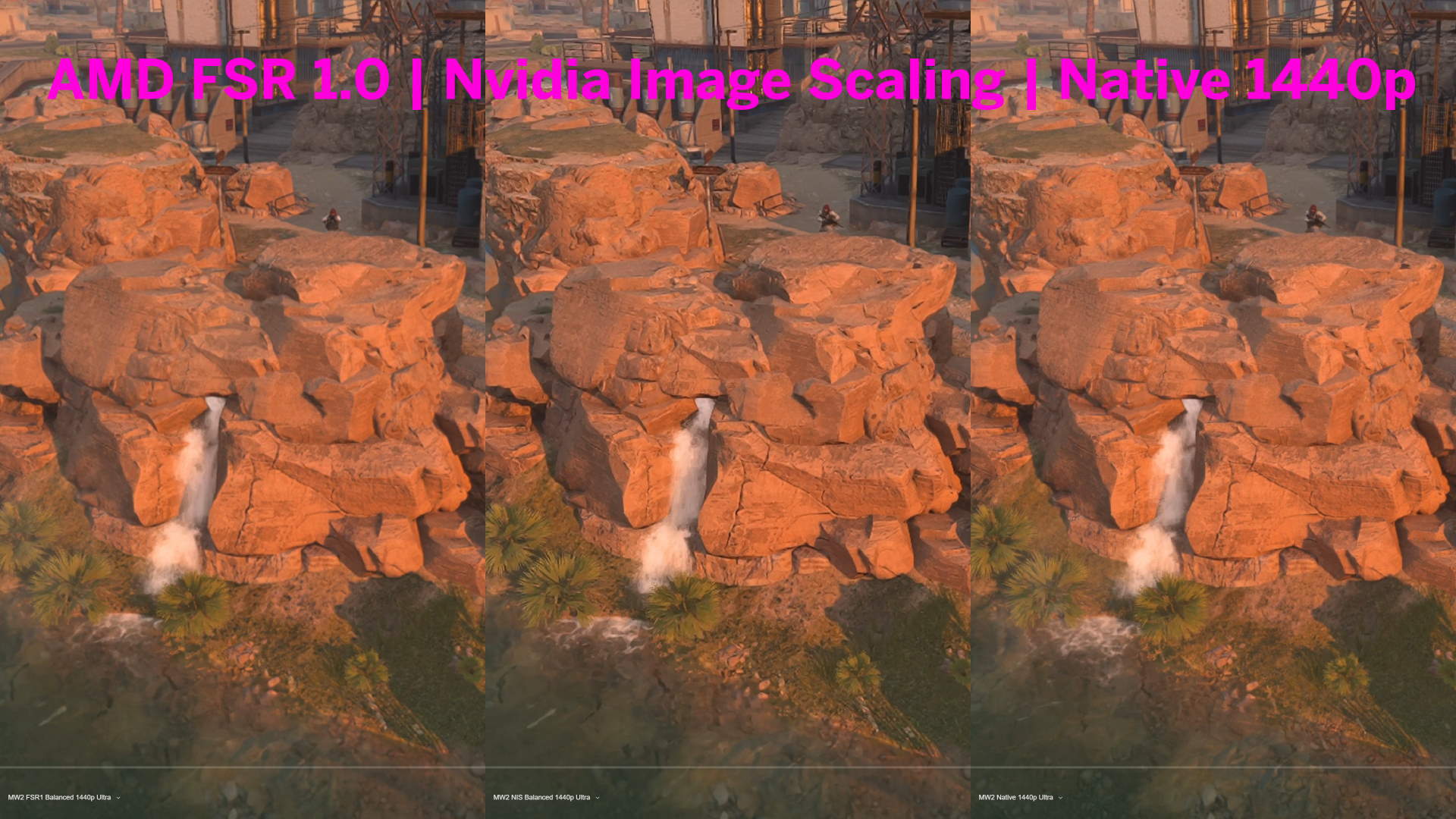

What makes more of a difference, to the tune of a 71% frame rate increase at 1440p Ultra settings, is the move from native rendering to AMD's FSR 1.0. Granted, I'm using an AMD card here, but you will see around the same boost in performance from the Nvidia Image Scaling feature instead. That's available on AMD cards too, while the DLSS preset is reserved for GeForce GPUs alone.
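To put those percentages side by side, here is a rough Python sketch that turns the uplift figures quoted above into projected frame rates. The baseline of 80 fps is an assumption for illustration only (the testing above says just "over 80 fps" at the top preset), and the names `projected_fps` and `rank_options` are made up for this example.

```python
# Uplift figures quoted in the article, all relative to native 1440p Ultra.
UPLIFT_OVER_ULTRA = {
    "Ultra (native)": 0.00,
    "Balanced (native)": 0.43,
    "Basic (native)": 0.56,
    "Ultra + FSR 1.0": 0.71,
}

# ASSUMPTION: placeholder baseline; the article only states "over 80 fps".
ASSUMED_ULTRA_FPS = 80.0


def projected_fps(baseline_fps: float, uplift: float) -> float:
    """Scale a baseline frame rate by a fractional uplift (0.43 means +43%)."""
    return baseline_fps * (1.0 + uplift)


def rank_options(baseline_fps: float, uplifts: dict[str, float]) -> list[tuple[str, float]]:
    """Return (option, projected fps) pairs, fastest first."""
    projected = {name: projected_fps(baseline_fps, u) for name, u in uplifts.items()}
    return sorted(projected.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, fps in rank_options(ASSUMED_ULTRA_FPS, UPLIFT_OVER_ULTRA):
        print(f"{name:<18} ~{fps:.0f} fps")
```

Whatever baseline you plug in, the ordering stays the same: Ultra with FSR 1.0 lands roughly 10% ahead of the Basic preset at native resolution (1.71 / 1.56), which is the whole argument for reaching for the upscaler first.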

Sadly, we couldn't enable Intel's XeSS feature in MW2 despite its presence in the settings menu. It may be restricted to Intel GPUs for now.

But FSR 1.0 and NIS do a grand job of delivering a huge improvement in gaming frame rates, with a minimal impact on image quality. Sure, because we aren't talking about the impressive FSR 2.0 upgrade or actual DLSS, there is some loss of clarity if you zoom in on some still images.

But in motion, you'd be hard pressed to tell me which was native, which was AMD, and which was Nvidia's GPU-agnostic upscaler. Personally, I'd say I prefer the look of FSR 1.0 over Nvidia Image Scaling, which is just too oversharpened for me, but in a multiplayer shootout you're looking for frame rates over such fine fidelity margins.

And when you can get higher frame rates from enabling either FSR or NIS at the top graphics preset than from dropping down to a lower setting, why would you bother?

When you can actually get it into a game, Call of Duty: Modern Warfare 2 feels pretty slick as game engines go. And all those little graphics settings knobs aren't really worth twisting on their own because nothing will deliver the jump in frame rates that a good upscaler can.

It's just a shame there are still so many niggles preventing players from actually booting the game they paid for.


Dave has been gaming since the days of Zaxxon and Lady Bug on the Colecovision, and code books for the Commodore Vic 20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.