Fallout 4 Graphics Revisited: Patch 1.3

War, war never changes…

…the hardware and software you're using, on the other hand, can change rapidly, often rendering older results meaningless. This is the case with Fallout 4, which we initially benchmarked right after launch. Two and a half months later, we've just received the official 1.3 patch, which was in open beta for the past week or two. Unlike the beta, the official release is intended to be ready for general consumption, and that matters because there have been rumblings that Fallout 4 performance has gotten worse with the patch.

Let's just cut straight to the point: That's bollocks. We've got the same performance sequence we used in our initial testing, only now we're running the latest AMD and Nvidia drivers. After dozens more repeat benchmark runs, we can comfortably say that almost everyone will see some healthy improvements to performance compared to the state of the game back in November. But there's more to the story than just driver updates and bug fixes.

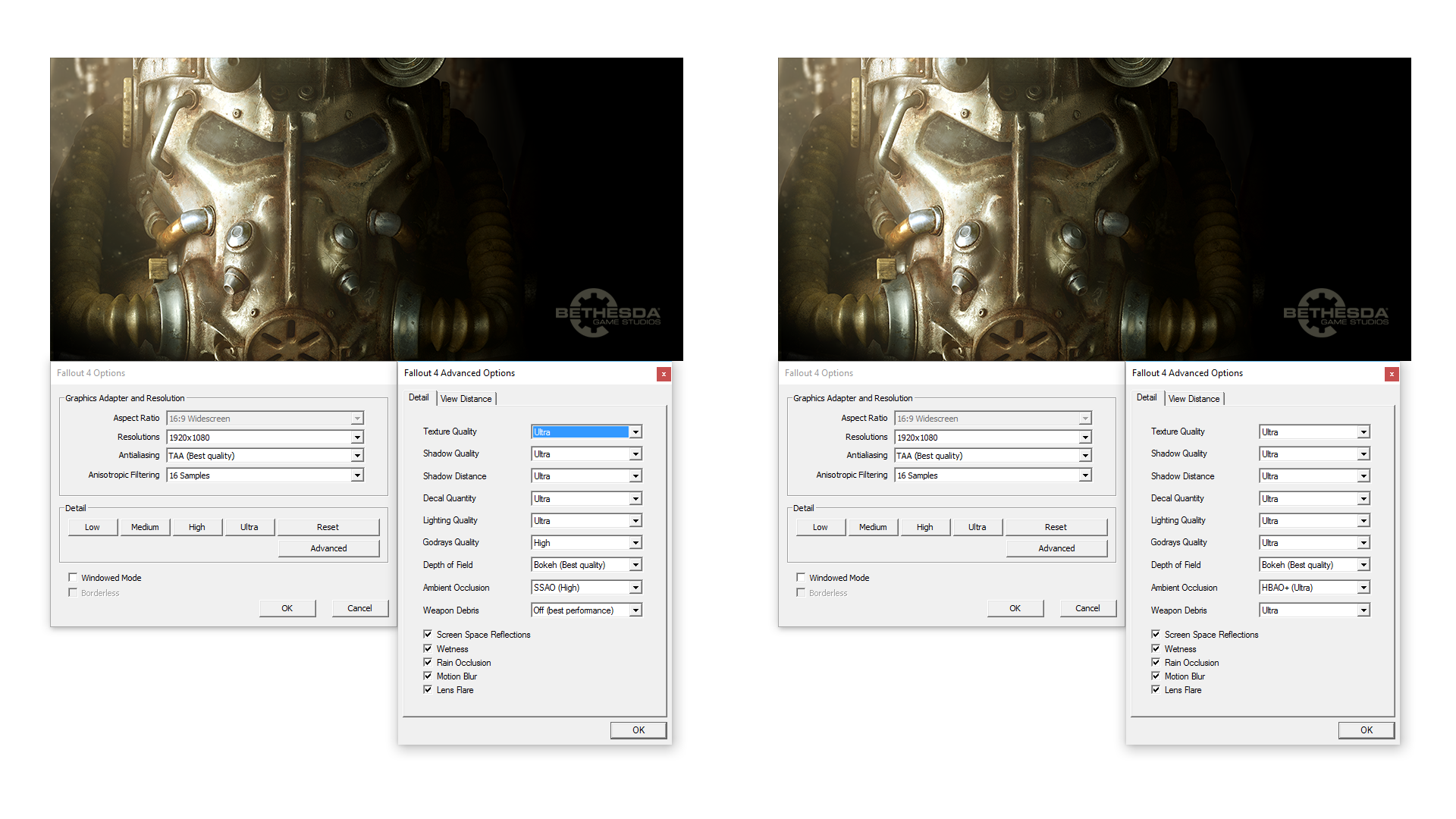

Fallout 4 version 1.3 adds two new graphics enhancements, both courtesy of Nvidia's GameWorks libraries. Now, before any AMD fans get too bent out of shape, let's be clear that the new features are optional, and none of the graphics presets enable them. If you go into the options and click Low/Medium/High/Ultra, you won't even see the new enhancements. Instead, you'll need to open the Advanced menu, where you can set Ambient Occlusion to HBAO+, and if you have an Nvidia GPU, you can also set Weapon Debris to one of four options (Off/Medium/High/Ultra). Weapon Debris appears to leverage some PhysX libraries (or at least something Nvidia isn't enabling for other GPU vendors), while HBAO+ will work on all DX11 GPUs.

What does Fallout 4 look like with the image quality maxed out compared to the Ultra preset? The shadows are improved and there's more foliage in some areas, but outside of pixel hunting you likely won't notice a sizeable change in the way things look.

Besides the graphics updates, we've also noticed that the game now behaves much better when you disable V-Sync (iPresentInterval=0) or use a display with a 144Hz refresh rate. Entering and exiting power armor no longer occasionally leaves the player stuck, at least in our experience, and while picking locks at high frame rates is a bit iffy (you'll break a lot of bobby pins), the main concern with disabling V-Sync is the usual screen tearing.
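If you'd rather make that change by script than by hand, here is a minimal Python sketch that sets iPresentInterval=0. It assumes the key lives in a [Display] section, which is where we'd expect it in Fallout4Prefs.ini; treat both the file and section names as assumptions, since only the setting itself is named above. The function name disable_vsync is hypothetical.

```python
import configparser
import io


def disable_vsync(ini_text: str) -> str:
    """Return ini_text with iPresentInterval set to 0 in the [Display] section.

    Assumption: the key lives under [Display] (as in Fallout4Prefs.ini).
    """
    # strict=False tolerates duplicate keys; interpolation=None leaves '%' alone
    parser = configparser.ConfigParser(strict=False, interpolation=None)
    parser.optionxform = str  # keep key case intact, e.g. "iPresentInterval"
    parser.read_string(ini_text)
    if not parser.has_section("Display"):
        parser.add_section("Display")
    parser.set("Display", "iPresentInterval", "0")  # 0 = V-Sync off, 1 = on
    out = io.StringIO()
    # Write "key=value" without spaces, matching the game's own ini style
    parser.write(out, space_around_delimiters=False)
    return out.getvalue()


# Example: read the file's text, pass it through disable_vsync, write it back.
print(disable_vsync("[Display]\niPresentInterval=1\n"))
```

Note that configparser drops comments when it rewrites a file, so back up the original ini before overwriting it.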

Let's talk performance

Before we get to the new performance numbers, let's quickly recap the launch. Fallout 4 showed clear favoritism for Nvidia GPUs, but at the time AMD hadn't released an optimized driver for the game. That came out about a week after our initial benchmarking, and it dramatically improved the situation for AMD graphics cards. Since then, we've seen the Crimson 15.12 and 16.1 drivers, but Fallout 4 performance has mostly stayed the same. Nvidia meanwhile has gone from their Fallout 4 Game Ready 358.91 driver to the current 361.75 driver, and they've also shown some performance improvements during the past few months. CPUs were also a potential bottleneck at launch, particularly for AMD graphics cards, but the optimized drivers appear to have largely addressed that area.

| Component | Maximum PC Graphics Test Bed |
|---|---|
| CPU | Intel Core i7-5930K: 6-core HT, OC'ed @ 4.2GHz; Core i3-4350 (simulated): 2-core HT @ 3.6GHz |
| Mobo | Gigabyte GA-X99-UD4 |
| GPUs | AMD R9 Fury X (Reference), AMD R9 390 (Sapphire), AMD R9 380 (Sapphire), AMD R9 285 (Sapphire), Nvidia GTX 980 Ti (Reference), Nvidia GTX 980 (Reference), Nvidia GTX 970 (Asus), Nvidia GTX 950 (Asus) |
| SSD | Samsung 850 EVO 2TB |
| PSU | EVGA SuperNOVA 1300 G2 |
| Memory | G.Skill Ripjaws 16GB DDR4-2666 |
| Cooler | Cooler Master Nepton 280L |
| Case | Cooler Master CM Storm Trooper |
| OS | Windows 10 Pro 64-bit |
| Drivers | AMD Crimson 16.1; Nvidia 361.75 |

We're using the same hardware as before, though we've changed our low-end CPU choice from parts that don't actually exist to a simulated Core i3-4350. Many gamers wouldn't be caught dead running such a "low-end" processor, but you might be surprised just how much performance even a Core i3 part can offer. We've also trimmed our list of GPUs slightly this round, dropping the GTX Titan X and GTX 960, as their scores aren't all that different from the other parts we're testing. And with that out of the way, let's dive right back into the radioactive waters and hope our Rad-X can keep us healthy…


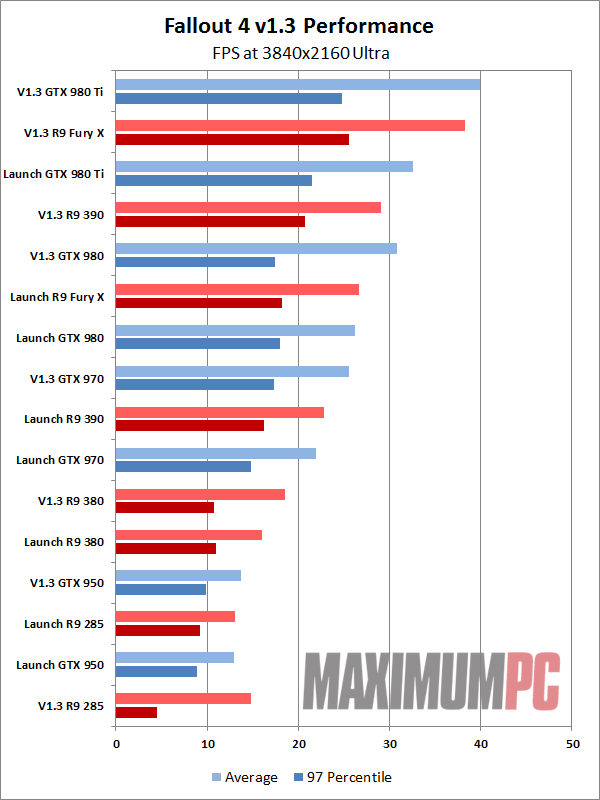

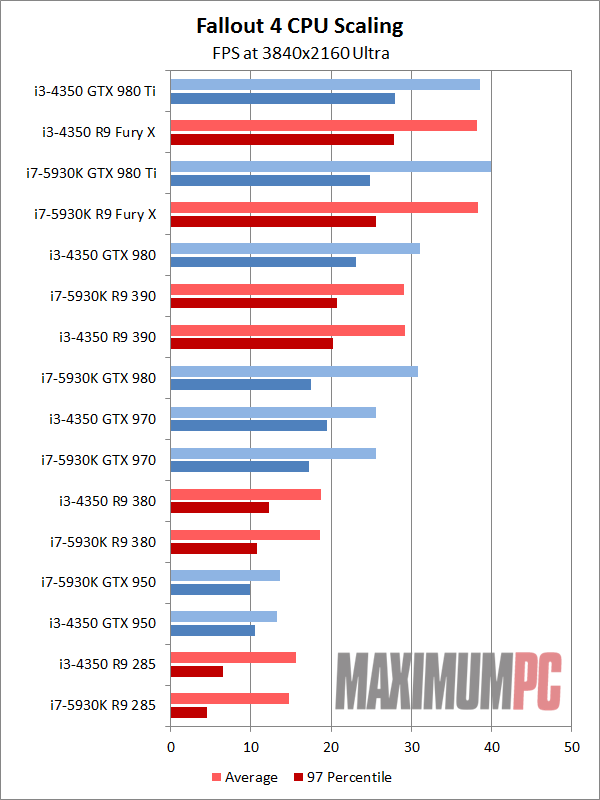

Running at 4K resolutions, particularly at Ultra quality, is generally the domain of multi-GPU setups, and that remains the case with Fallout 4. Sure, a single GTX 980 Ti can break 30 fps most of the time, and paired with a G-Sync display it's certainly playable, but it can definitely feel choppy. The good news is that nearly all of our GPUs show decent performance improvements since launch, and AMD in particular looks much more reasonable here.

With the 1.3 patch in place, we now have two GPUs comfortably breaking the 30 fps mark, and even the GTX 980 just squeaks by. The 97th percentile frame rates are all below 30 fps, however, so you can expect occasional stuttering, especially when you're outside and cross area boundaries. Fallout 4 doesn't demand ultra-high frame rates, though, and with a bit of tweaking (say, the High preset, or just disabling TAA) you can definitely play at 4K on the R9 390, GTX 980, R9 Fury X, and GTX 980 Ti.
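For readers wondering how an average can clear 30 fps while the 97th percentile doesn't, here is a minimal Python sketch of both metrics computed from a list of per-frame render times in milliseconds. It assumes the 97th percentile frame rate is the fps equivalent of the nearest-rank 97th-percentile frame time (the frame time that all but the slowest 3 percent of frames meet); the exact method behind the charts here may differ, and fps_summary is a hypothetical name.

```python
def fps_summary(frame_times_ms: list[float]) -> tuple[float, float]:
    """Return (average fps, 97th percentile fps) for a list of frame times in ms.

    Assumption: "97th percentile fps" = 1000 / nearest-rank 97th-percentile frame time.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    # Average fps = frames rendered / seconds elapsed (not the mean of per-frame fps)
    avg_fps = len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)
    # Nearest rank: the ceil(0.97 * n)-th smallest frame time, using integer math
    ordered = sorted(frame_times_ms)
    rank = (97 * len(ordered) + 99) // 100
    p97_ms = ordered[rank - 1]
    return avg_fps, 1000.0 / p97_ms


# Hypothetical capture: mostly 20 ms frames (50 fps) plus a few 50 ms hitches
avg, p97 = fps_summary([20.0] * 96 + [50.0] * 4)
print(f"average {avg:.1f} fps, 97th percentile {p97:.1f} fps")
# prints: average 47.2 fps, 97th percentile 20.0 fps
```

Because the average is total frames over total time, a handful of slow frames barely moves it, whereas once more than 3 percent of frames are slow, the 97th percentile reports those slow frames directly.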

What's interesting is how far the gap between AMD and Nvidia GPUs has narrowed. The 980 Ti and 980 used to hold double-digit percentage leads over the Fury X and 390; with the patch and updated drivers, the cards are now basically tied (Nvidia leads by 4–6 percent, but AMD now has better 97th percentile results). The 970 was also more or less tied with the 390 before, but now the 390 holds a sizeable 12 percent advantage. It's just unfortunate that it took a couple of weeks after launch to narrow the gap. We could point out how badly AMD dominates Nvidia in the $200 market, but with sub-20 fps results at 4K, we'll save that for the lower resolutions.

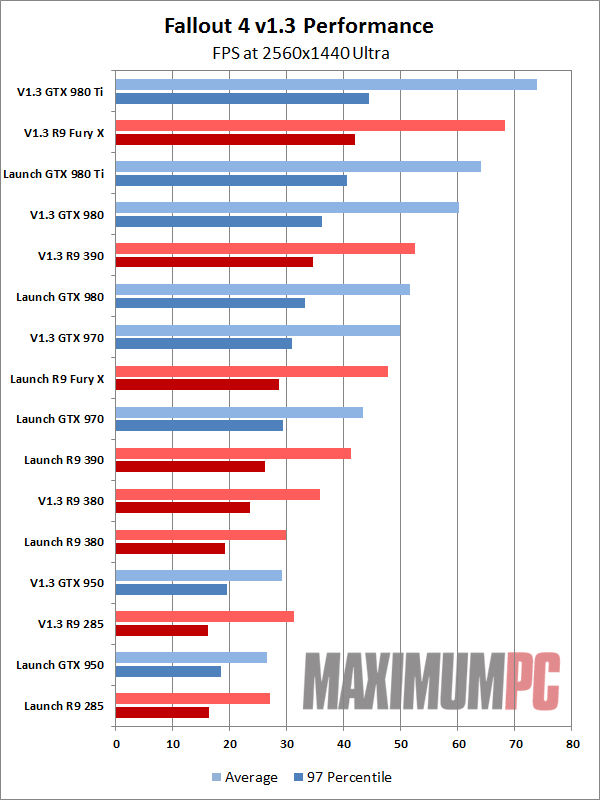

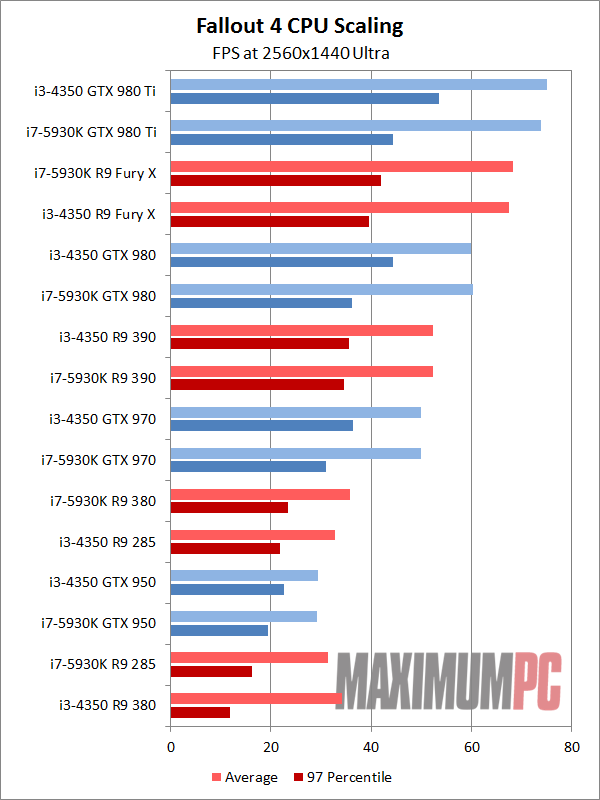

If the changes at 4K were helpful to AMD, at QHD they're almost a night-and-day difference. The 980 Ti used to lead the Fury X by 30–40 percent; now it's down to less than 10 percent. The 980 still beats the 390 by 5–15 percent, as it should given their respective prices, while the 970 has gone from leading the 390 by 5–15 percent to trailing it by 5–10 percent. And these aren't just meaningless numbers: at 1440p Ultra, all of these GPUs are certainly playable, particularly if you pair them with a G-Sync/FreeSync display. The 97th percentile frame rates are above 30 fps for all of these cards, and if you're shooting for even higher frame rates, you can always drop the quality settings a notch.

For the lower priced cards like the GTX 950 and R9 380, 1440p Ultra still proves to be (mostly) insurmountable. The R9 380 4GB card is easily ahead of the others, however, and it leads the GTX 950 by almost 20 percent. Of course, it also costs 35 percent more than the GTX 950 2GB, so it's not really a decisive victory.
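To put the price argument in numbers, here is a small Python sketch that uses only the round figures quoted above (an almost 20 percent performance lead and a 35 percent higher price). The helper names lead_pct and perf_per_dollar_ratio are hypothetical, and real street prices and frame rates will vary.

```python
def lead_pct(a: float, b: float) -> float:
    """Percent by which value a leads value b (e.g. fps of card A vs. card B)."""
    return (a / b - 1.0) * 100.0


def perf_per_dollar_ratio(perf_lead_pct: float, price_premium_pct: float) -> float:
    """Frames per dollar of the faster, pricier card relative to the cheaper one.

    A result below 1.0 means the cheaper card delivers more fps per dollar.
    """
    return (1.0 + perf_lead_pct / 100.0) / (1.0 + price_premium_pct / 100.0)


print(f"Lead of a 60 fps card over a 50 fps card: {lead_pct(60.0, 50.0):.0f}%")
# prints: Lead of a 60 fps card over a 50 fps card: 20%

# Round numbers from the text: R9 380 ~20% faster and ~35% pricier than the GTX 950
ratio = perf_per_dollar_ratio(20, 35)
print(f"R9 380 fps per dollar relative to GTX 950: {ratio:.2f}")
# prints: R9 380 fps per dollar relative to GTX 950: 0.89
```

At those figures the R9 380 delivers roughly 0.89 times the frames per dollar of the GTX 950; put the other way, the GTX 950 offers about 12.5 percent more frames per dollar, which is why the 380's lead isn't a decisive victory.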

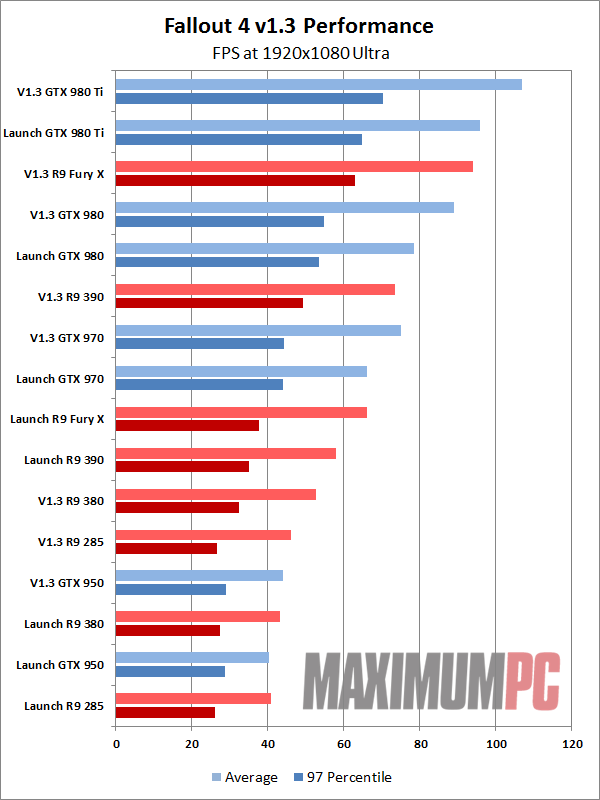

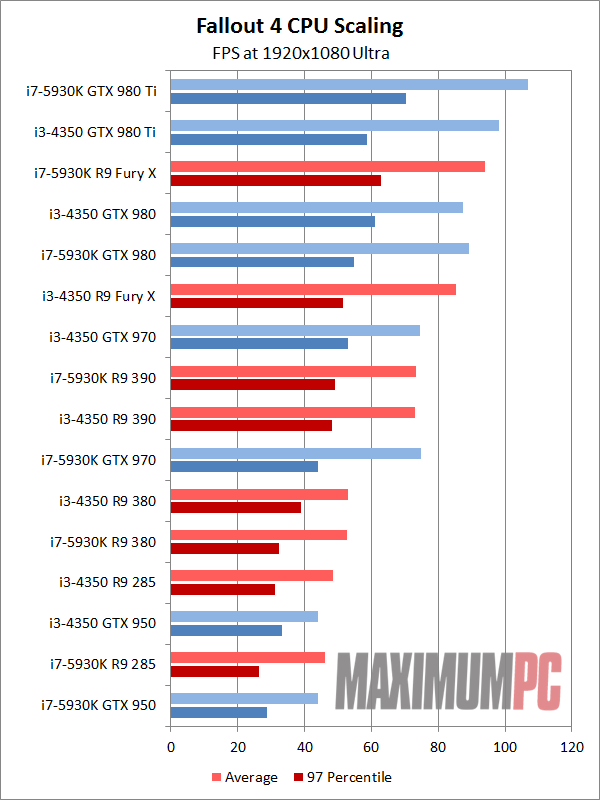

1080p Ultra isn't really where the highest-end cards are designed to run, though the 980 Ti still easily claims the top spot. AMD for its part shows 20–40 percent improvements compared to launch performance, with the Fury X benefiting the most. This is why game-specific driver optimizations matter: the sooner you get them into the hands of gamers, the more likely those gamers are to recommend your hardware. If you're a day-0 gamer who pre-orders games, AMD's track record doesn't look so good.

Nvidia still shows better scaling overall, suggesting the CPU is perhaps more of a bottleneck on AMD GPUs in this title; we'll get to that further down the page. Having 4GB of VRAM also looks to be a big boost to performance here, with the R9 380 outpacing the R9 285 by a solid 15 percent or more, whereas prior to the driver and game updates the gap was mostly equal to the difference in their core clocks.

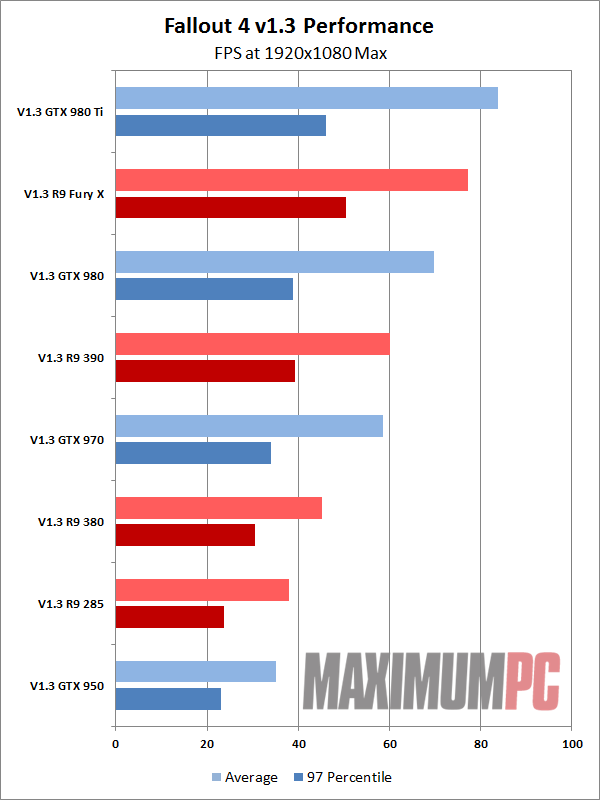

But what happens if you enable the new HBAO+ ambient occlusion and max out all of the other settings? (Note that the Weapon Debris option is only available with Nvidia GPUs, so we left it off.) Interestingly, if you compare the 1080p Ultra numbers to our 1080p Max results, the gap between AMD and Nvidia narrows again. All of the GPUs we tested remain "playable" (meaning averages above 30 fps), though it's now Nvidia that appears to have more stuttering and low frame rates.

How's your CPU?

To be frank, I wasn't actually going to retest CPU performance, but there was a small snafu. The last testing I had done involved Rise of the Tomb Raider, running with a simulated i3-4350. I ran all of these Fallout 4 benchmarks using that configuration before realizing I was missing four cores and 600MHz of CPU clock speed. Here's the catch, though: the simulated i3-4350 still has the full 15MB L3 cache, whereas a real i3-4350 only has 4MB of L3, and of course the simulated part also gets quad-channel DDR4-2666 instead of some form of DDR3 memory. You might be wondering how I could have missed the missing CPU performance, especially in light of our earlier findings. Take a look at the charts, though:

Previously, we simulated much slower parts and ran with multiple core configurations. This time, simulating a real Core i3 SKU largely eliminates the performance gap. In fact, some oddities show up, with the "Core i3" part often beating the real Core i7. Our best guess is that by devoting the whole 15MB L3 to just two cores, more of each core's data fits in cache, which particularly helps our 97th percentile results.
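The per-core arithmetic behind that guess, assuming for simplicity that the L3 is divided evenly among active cores (in reality it's shared rather than partitioned, so this is only a rough illustration):

$$
\frac{15\,\text{MB}}{2\ \text{cores}} = 7.5\,\text{MB/core (simulated i3)},\qquad
\frac{15\,\text{MB}}{6\ \text{cores}} = 2.5\,\text{MB/core (i7-5930K)},\qquad
\frac{4\,\text{MB}}{2\ \text{cores}} = 2\,\text{MB/core (real i3-4350)}
$$

By that rough measure, the simulated part has three times the L3 per core of the hex-core i7 it's carved from, and nearly four times that of a real i3-4350.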

Given that we're testing with FRAPS, which is prone to wider variations between runs, we wouldn't read too much into these charts, but overall there looks to be very little difference in performance between our two processor configurations. Average frame rates are mostly within the margin of error (less than five percent), and only the two fastest GPUs (980 Ti and Fury X) appear to benefit from the hex-core i7-5930K; even then, only at 1920x1080 Ultra do they outperform the simulated i3-4350. Crazy!
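Here is a minimal Python sketch of that kind of margin-of-error check: average the repeated runs for each configuration, then ask whether the two means differ by less than 5 percent. The function name, the choice of the slower result as the baseline, and the sample numbers are all hypothetical.

```python
from statistics import mean


def within_margin(runs_a: list[float], runs_b: list[float], margin_pct: float = 5.0) -> bool:
    """True if the mean fps of two sets of benchmark runs differ by less than margin_pct.

    The difference is measured relative to the slower configuration.
    """
    a, b = mean(runs_a), mean(runs_b)
    slower, faster = sorted((a, b))
    # Percent lead of the faster configuration over the slower one
    return (faster / slower - 1.0) * 100.0 < margin_pct


# Hypothetical FRAPS averages from three runs per CPU configuration
print(within_margin([60.0, 62.0, 61.0], [63.0, 62.0, 64.0]))  # True: about 3.3 percent apart
```

Averaging several runs before comparing is what keeps FRAPS's run-to-run swings from masquerading as a real CPU difference.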

I suspect the remaining cases where the simulated Core i3 "wins" come down to the cache differences, because that really shouldn't happen with a real Core i3: compared with a true i3-4350, our i7-5930K has a faster core clock, three times as many cores, and more than three times as much L3 (15MB vs. 4MB). But even if you have an actual Core i3 processor, unless you're running dual GPUs, the CPU very likely won't be a significant bottleneck.

Prepare for cryogenic sleep…

And that wraps up our return to the post-apocalyptic wastes. Things have improved, and if you like open-world adventures, Fallout 4 is awesome. You don't even want to know how many hours I've spent playing the game, let alone benchmarking it. But I digress. The short summary is that Nvidia continues to hold on to the performance crown, but AMD users no longer need to feel betrayed. In the midrange $200 GPU market, AMD even holds the lead, and with a few tweaks to the settings you should be able to happily run around soaking up rads until your eyes rot out.

We'll continue to use Fallout 4 as one of our GPU and CPU benchmarks, because it's a popular title and can be reasonably taxing. But unless something really dramatic happens (like a DX12 patch, which is highly unlikely), this is going to be our last detailed look at Fallout 4 performance. Meanwhile, if you heard rumors that Nvidia was intentionally crippling performance on older Kepler GPUs, we did run a quick test with a GTX 770 using both older and newer drivers and found no noteworthy changes, so you can hopefully rest easy.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.