Don't rely on Bing, ChatGPT, or Bard for vital PC building questions

Some free LLMs are better for answering your pressing PC building questions than others.

Anyone who's been following the text-to-text generation trend knows that it's sometimes difficult to get a truthful answer out of these early AI chatbots. We're looking to large language models (LLMs) to simplify our lives by pulling together research from all across the web, but when some AIs have been caught straight up lying, even spiralling into a meltdown when confronted about their inaccuracies, it's hard to know where to turn for authentic information.

I wanted to pit the top free language models against one another with questions we on the PCG hardware team could confidently answer as pro PC builders. So once I got access to Bard, Google's new chatbot, you can bet I gave it a hard time with ambiguous questions that require a solid knowledge base to answer.

Here's the big one: "What PSU do I need for an RTX 4090?"

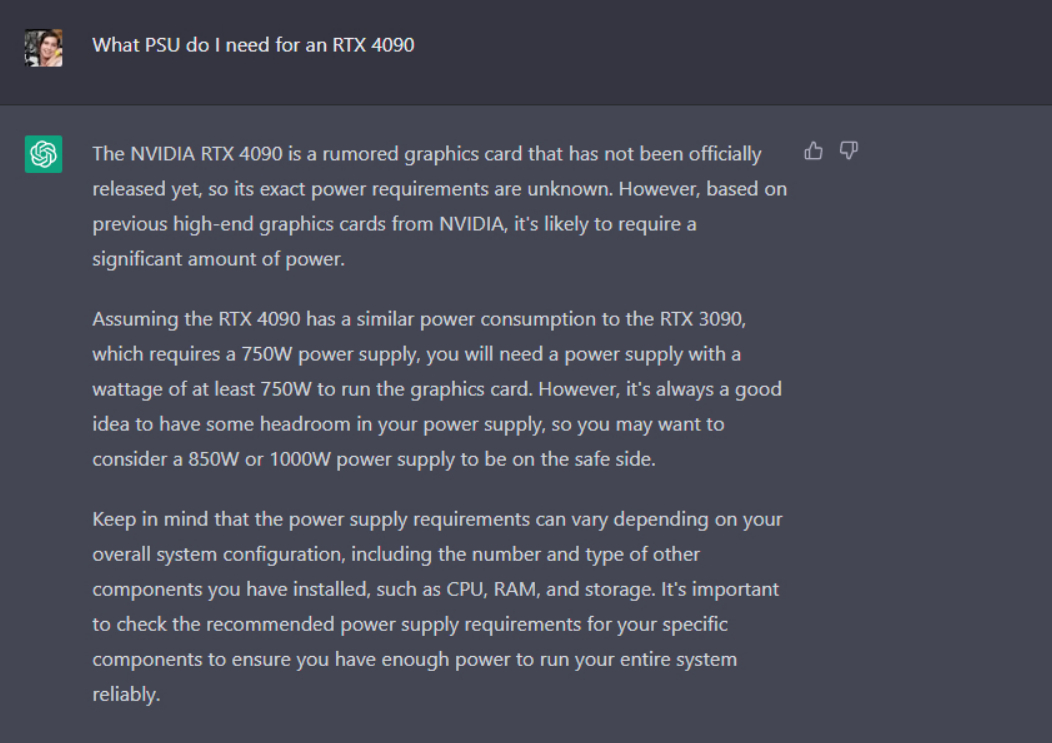

First up, I went back to the one that started it all. OpenAI's free version of ChatGPT is currently running GPT-3.5, which is admittedly trained on a somewhat outdated dataset. Still, I thought I'd give it a go. The answer came slowly, and was a little all over the place.

"The Nvidia RTX 4090 is a rumoured graphics card that has not been officially released yet." So far, off to a bad start. After noting that the card is "likely to require a significant amount of power", it then goes on to assume it will have a similar power consumption to the RTX 3090. Oh, what a mistake to make.

Should you pair your RTX 4090 with a 750W power supply, you would be sorely hard-done-by. With a TDP of 450W for the card alone, you want at least 1000W, and then some, to keep an RTX 4090 and all the other components you'd pair with it fed. The exact figure depends on which AIB (add-in board) partner card you've opted for.

Palit recommends a whopping 1,200W power supply for its RTX 4090 GameRock OC, for example.
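For a rough feel of where a figure like 1000W comes from, here's a minimal back-of-the-envelope sketch. Only the 450W GPU TDP comes from the numbers above; the function name `recommend_psu_watts`, the 250W CPU draw, the 100W allowance for everything else, and the 25% headroom are all illustrative assumptions, not manufacturer guidance.

```python
import math


def recommend_psu_watts(gpu_tdp_w, cpu_w, other_w=100, headroom=0.25, step=50):
    """Rough PSU estimate: summed component draw plus headroom,
    rounded up to the next `step` watts.

    All defaults are illustrative assumptions, not vendor figures.
    """
    load = gpu_tdp_w + cpu_w + other_w      # estimated peak system draw
    target = load * (1 + headroom)          # leave room for spikes and ageing
    return math.ceil(target / step) * step  # round up to a common PSU size


# 450W GPU TDP (from the article) plus an assumed 250W CPU
print(recommend_psu_watts(450, 250))  # 1000
```

Treat the output as a sanity check only. The board partner's own recommendation, like Palit's 1,200W, should always win.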

At this point you already know ChatGPT has lost, so we'll ignore its little cop-out disclaimer at the bottom: "It's important to check the recommended power supply requirements for your specific components"...

That's kinda what my intentions were. Moving on.

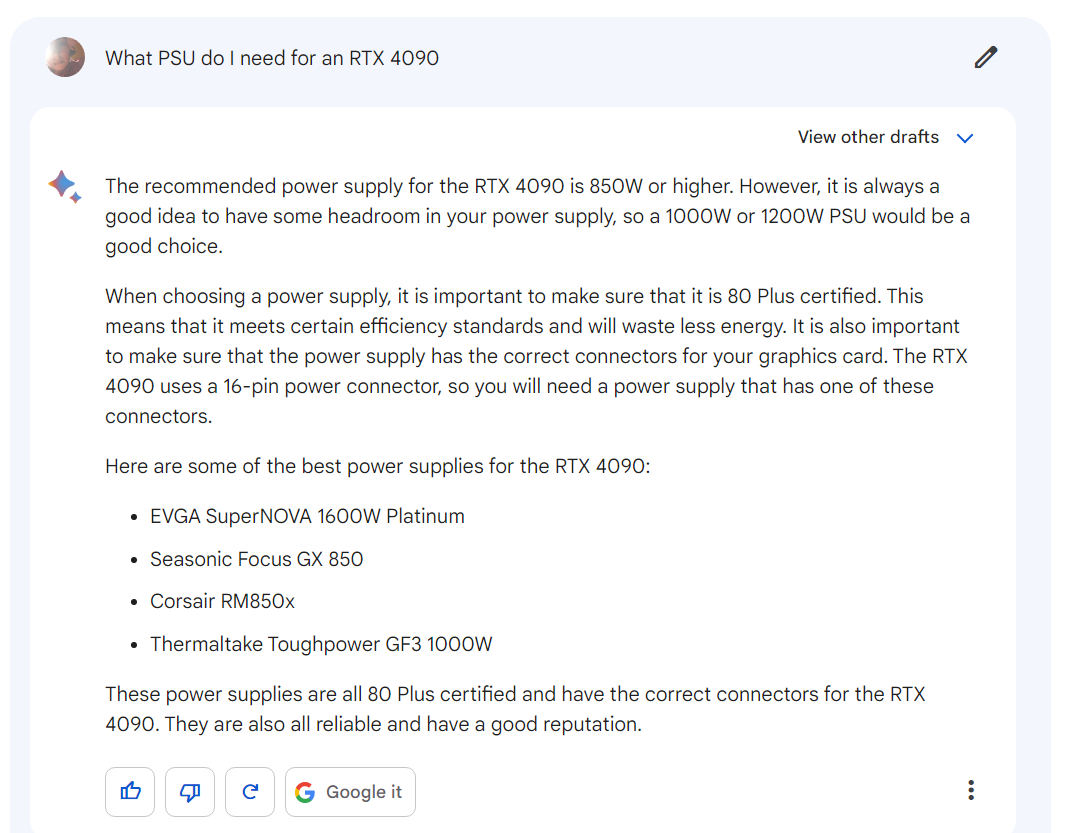

Turning to Bard, we're looking at a much clearer and safer answer. "The recommended power supply for the RTX 4090 is 850W or higher. However, it is always a good idea to have some headroom in your power supply, so a 1000W or 1200W PSU would be a good choice."

Not only does it know the graphics card has already been released, it even gives some trusted options for PSUs to pair it with. Sadly, after recommending a 1000W power supply it points to some 850W options that certainly wouldn't keep up with an entire system built around the RTX 4090, but we're nearly on the right track.

Bard is built on a lightweight and optimised version of LaMDA, a model with up to 137 billion parameters in its largest form, and Google seems to have put its own rigorous algorithms to work checking each source's trustworthiness. That doesn't stop it getting a little bit muddled up in the output, however.

"The RTX 4090 uses a 16-pin power connector, so you will need a power supply that has one of these connectors," it says. That's only partially true: it is possible to use an adapter to connect the card to a PSU without a native 16-pin connector, which Bard seems to have glossed over.

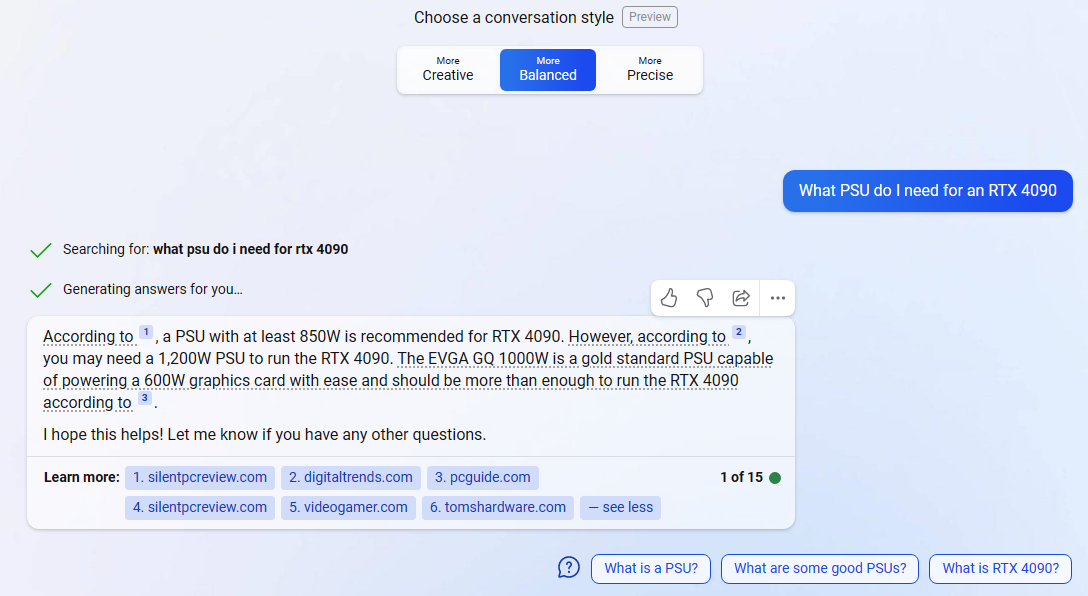

So now it comes down to Bing, which uses the latest GPT-4 model that is otherwise only available to ChatGPT users who pay for the Plus subscription. With Bing you can access it for free as long as you sign in with your Microsoft account, open the page in Microsoft Edge, and promise Microsoft your firstborn child.

Bing supplies a single, definitive answer: the EVGA GQ 1000W, as recommended by PC Guide. And while PC Guide's article hasn't been updated since December 2022, that's still a solid recommendation, helped into the results no doubt by the incessant littering of RTX 4090 as a keyword in its intro copy.

Its answer cites several trustworthy sources, and although the initial answer doesn't go into as much detail, you can ask it to be more precise. That way it gives a little more context around other manufacturers' recommendations.

The takeaway here is that none of the free options can give a full and completely accurate answer with all the context. LLMs should only get better as time goes on, so you can bet the answers will become fuller and more accurate.

Right now, though, just double check the recommended specs before asking an AI to help you build your PC. Or head over to our best power supply guide for the full 2023 picture.

Screw sports, Katie would rather watch Intel, AMD and Nvidia go at it. Having been obsessed with computers and graphics for three long decades, she took Game Art and Design up to Masters level at uni, and has been rambling about games, tech and science—rather sarcastically—for four years since. She can be found admiring technological advancements, scrambling for scintillating Raspberry Pi projects, preaching cybersecurity awareness, sighing over semiconductors, and gawping at the latest GPU upgrades. Right now she's waiting patiently for her chance to upload her consciousness into the cloud.