What you need to get Destiny 2 running at 60 fps in 4K

We've tested the settings required on various GPUs to get butter smooth framerates at maximum resolution.

With the Destiny 2 beta finally bringing Bungie's shared-world shooter to PC, there's a massive range of hardware the game needs to support. The good news is that Destiny 2 isn't some lackadaisical port—it's legit, as promised—and Bungie has worked with Nvidia to ensure the game gets the proper PC treatment. There are plenty of knobs and dials to fiddle with, including resolution, field of view, and more than 15 graphics settings you can tweak. Destiny 2 is also one of the first games to include native support for HDR (High Dynamic Range) displays, which is something we'll look at in a separate article.

For console gamers looking to make the leap to PC, one of the major questions with Destiny 2 is going to be what sort of hardware you need to comfortably run the game at 4K and more than 60 fps. Sure, you could just throw a pair of GTX 1080 Ti cards in SLI at it, but that's no small investment. What if you're hoping to keep things a bit more sane—what chance do builds with more modest components have of running Destiny 2 at 4K?

To find out, I gathered all the high-end MSI Gaming X graphics cards we use for our performance analysis articles and set my sights on 4K. Then I tweaked settings until the game stayed comfortably above 60 fps—or until I was at the minimum settings.

- MSI GTX 1080 Ti Gaming X 11G

- MSI GTX 1080 Gaming X 8G

- MSI GTX 1070 Gaming X 8G

- MSI GTX 1060 6GB Gaming

- MSI RX Vega 64 8G

- MSI RX Vega 56 8G

- MSI RX 580 Gaming X 8G

- MSI Aegis Ti3 VR7RE SLI-014US

Full details of our test equipment and methodology are available in our Performance Analysis 101 article.

What if you're not interested in 4K gaming? Don't worry: I'll still be giving Destiny 2 the full performance analysis treatment, but I figured we could start with high-end configurations while I continue to work my way through a bunch of other settings. I'll be back later this week with the rest of the benchmarks, including tests at lower quality settings, lower resolutions, and on budget hardware. For now, let's see what the top graphics cards can do, all paired with a modestly overclocked 4.8GHz Core i7-7700K courtesy of MSI's Aegis Ti3 desktop.

Destiny 2 benchmarks

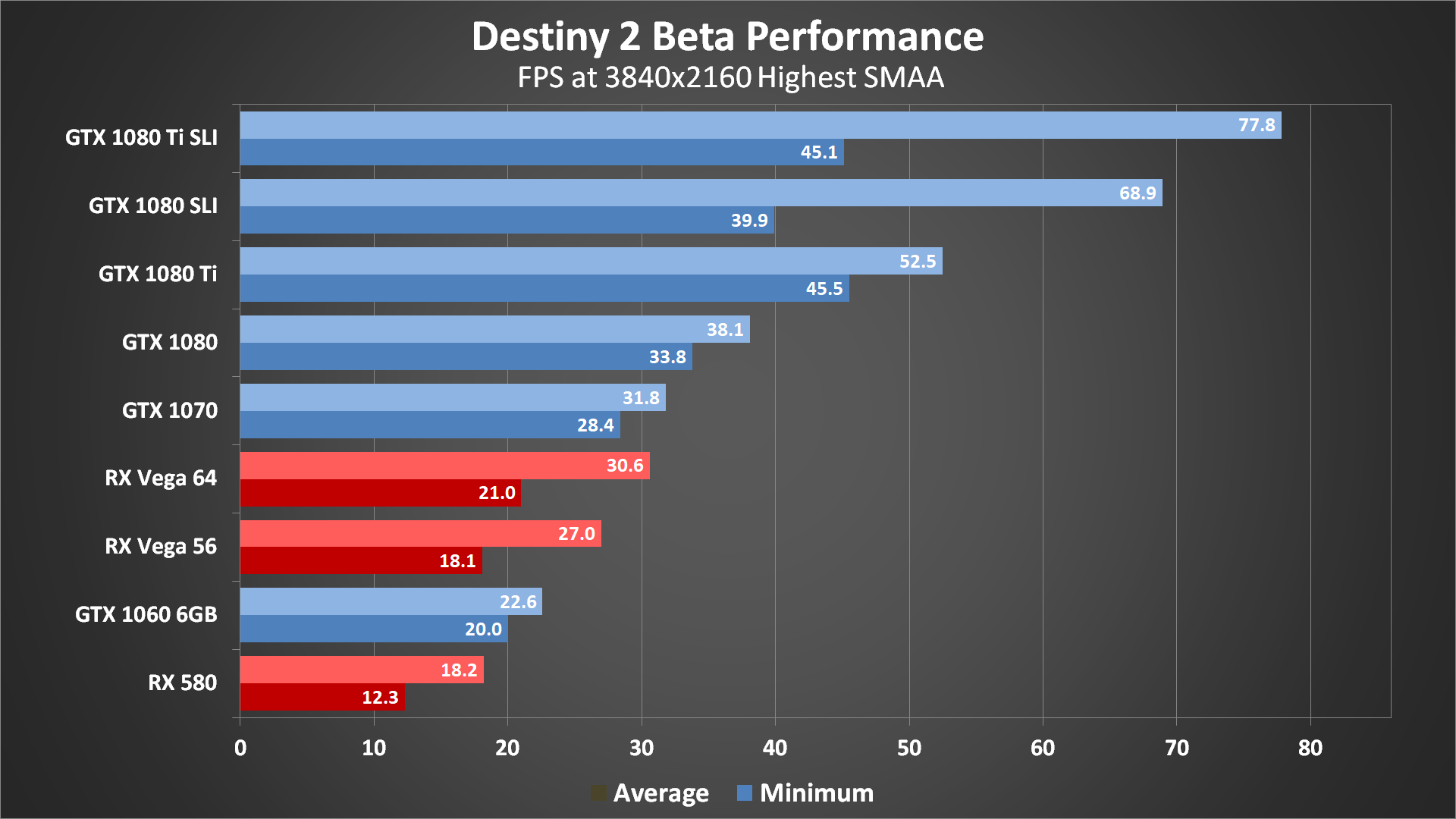

Let's start with the whole enchilada and see how the cards manage the maximum-quality 'Highest' preset at 4K. One quick note: I've turned anti-aliasing down from MSAA (Multi-Sample Anti-Aliasing) to SMAA (Subpixel Morphological Anti-Aliasing), because MSAA proves far too demanding for any current graphics card, at least with Destiny 2 at 4K. Plus, at such a high resolution the need for AA is diminished: the pixels are smaller, so it's harder to see the jaggies. SMAA is typically a minor performance hit, slightly more than the nearly free FXAA (Fast Approximate Anti-Aliasing), with quality that is nearly equal to 4xMSAA.

So obviously there are a few caveats here. Bungie is receiving active 'help' from Nvidia with Destiny 2, and that can mean a lot of different things. Whether it's better optimizations for Nvidia hardware, or the use of features and technologies where Nvidia GPUs are more adept, or even just a better understanding of the game code so that Nvidia can optimize its drivers better, the net result is that the beta currently runs far, far better on Nvidia's 10-series GPUs than on AMD's RX 500/Vega GPUs.


When I looked at a broad selection of games, overall the GTX 1080 and Vega 64 were relatively close, with the 1080 taking a slight lead of around five percent. GTX 1070 and Vega 56 were a similar story, except Vega 56 ended up being slightly faster overall, by about three percent. But if I look for the games where Nvidia's lead is the greatest, like in Dishonored 2, the 1080 is sometimes over 25 percent faster than the Vega 64, and the 1070 can lead the Vega 56 by over 10 percent.


Right now, Destiny 2 looks very much like another game where Nvidia GPUs will have a major performance lead. The 1080 is 25 percent faster than the Vega 64, the 1070 is 18 percent faster than the Vega 56, and the 1060 6GB is 24 percent faster than the RX 580 8GB. And if you want to add insult to injury, the GTX 1080 Ti, which currently sells for the same price as the Vega 64, is a massive 72 percent faster than the Vega 64. Those are all average framerates, but looking at the minimums (97th percentile averages), things become even worse. For 'average minimum' fps, the 1080 leads the Vega 64 by 61 percent, the 1070 leads the Vega 56 by 57 percent, the 1060 is 63 percent ahead of the 580, and the 1080 Ti is 117 percent faster (yes, more than twice as fast) than the Vega 64.
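The percentages above are relative framerate comparisons. As a rough sketch of the arithmetic (not PC Gamer's actual tooling), "X percent faster" means (A / B - 1) * 100, so 117 percent faster works out to 2.17 times the framerate. The `percentile_min_fps` helper assumes the 97th percentile "average minimum" is the average fps across the slowest 3 percent of frames; that definition is an illustrative assumption (the real method is described in the Performance Analysis 101 article), and all function names here are hypothetical.

```python
import math


def percent_faster(fps_a: float, fps_b: float) -> float:
    """How much faster A is than B, in percent: (A / B - 1) * 100."""
    return (fps_a / fps_b - 1.0) * 100.0


def average_fps(frametimes_ms: list[float]) -> float:
    """Average fps over a run: frames rendered divided by elapsed seconds."""
    return len(frametimes_ms) / (sum(frametimes_ms) / 1000.0)


def percentile_min_fps(frametimes_ms: list[float], pct: float = 97.0) -> float:
    """Assumed 'average minimum': average fps over the slowest (100 - pct)% of frames.

    This is an illustrative definition, not necessarily the one PC Gamer uses.
    """
    ordered = sorted(frametimes_ms)  # shortest (fastest) frames first
    # Index where the slowest (100 - pct)% of frames begins; keep at least one frame.
    cutoff = min(math.floor(len(ordered) * pct / 100.0), len(ordered) - 1)
    return average_fps(ordered[cutoff:])
```

For example, `percent_faster(2.17, 1.0)` comes out at roughly 117, and a made-up run of 97 frames at 10 ms plus 3 frames at 25 ms averages about 95.7 fps overall while its slowest 3 percent average 40 fps. That gap is why occasional long frames drag the minimums down much harder than the averages.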

Something important to note with the above tests is that the only way to consistently get above 60 fps at 4K and nearly maxed-out settings is to have GTX 1080 SLI or 1080 Ti SLI; anything less and you're going to come up short. SLI isn't without issues, however: minimum framerates don't scale all that much, so even though 1080 Ti SLI averages well above 60 fps, there are periodic dips into the 40-50 fps range.

Recommended 4K gaming settings for each GPU

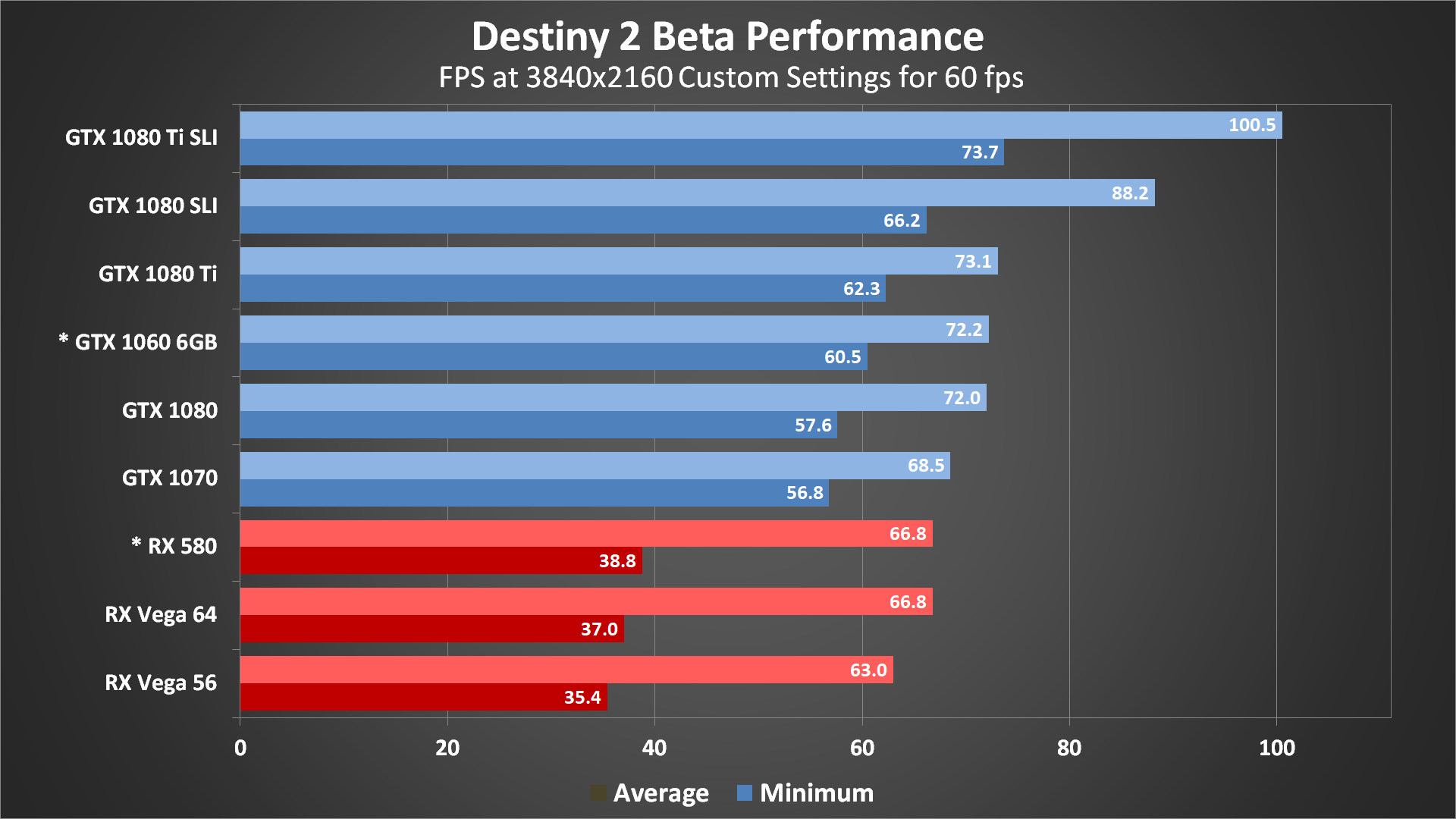

Given the above, rather than testing every card at the same settings for comparison, I tried to find the best settings possible for each card that would still average over 60 fps. While the generic presets are useful for initial performance tuning, I dug a bit deeper to see which settings affect framerates the most. The answer: besides MSAA (which I didn't use for any of the tests; even the mighty 1080 Ti only manages 49 fps at 1440p with the Highest preset), the best places to look for improved performance are Screen Space Ambient Occlusion (SSAO), Shadow Quality, and Depth of Field (DoF). Every other setting (besides Render Resolution) has very little impact on performance individually, though in aggregate they can add up to slightly more.

Getting into the settings I selected for each GPU, here's what I ended up using. (The three fastest configurations all used the same settings.)

- GTX 1080 Ti SLI: use the highest preset and then set DoF to High.

- GTX 1080 Ti: use the highest preset and then set DoF to High.

- GTX 1080 SLI: use the highest preset and then set DoF to High.

- GTX 1080: use the high preset, then turn off SSAO and DoF.

- GTX 1070: use the medium preset, then turn off SSAO and DoF.

- GTX 1060 6GB: use the medium preset, turn off SSAO and DoF, and set resolution scaling to 75.

- RX Vega 64: use the medium preset, then turn off SSAO and DoF.

- RX Vega 56: use the low preset, then set Texture Quality and Shadow Quality to lowest.

- RX 580: use the medium preset, then turn off SSAO and DoF, and set resolution scaling to 75.
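The hand tuning behind the list above can be thought of as walking down a ladder of configurations and stopping at the first one that averages at least 60 fps. The sketch below formalizes that idea under stated assumptions: the rungs mirror the per-GPU recipes listed above, but the dict keys are hypothetical labels rather than Destiny 2's real config names, the ordering of the last two rungs is a judgment call, and `measure_avg_fps` is a hypothetical stand-in for running the benchmark sequence by hand.

```python
from typing import Callable, Optional

# Hypothetical ladder of configurations, ordered from highest to lowest quality.
# Keys are illustrative labels, not Destiny 2's actual settings identifiers.
LADDER: list[dict] = [
    {"preset": "Highest", "dof": "High"},
    {"preset": "High", "ssao": "Off", "dof": "Off"},
    {"preset": "Medium", "ssao": "Off", "dof": "Off"},
    {"preset": "Low", "texture_quality": "Lowest", "shadow_quality": "Lowest"},
    {"preset": "Medium", "ssao": "Off", "dof": "Off", "render_resolution": 75},
]


def pick_settings(
    measure_avg_fps: Callable[[dict], float], target_fps: float = 60.0
) -> Optional[dict]:
    """Return the highest-quality rung whose measured average fps meets the target.

    measure_avg_fps is a hypothetical callback standing in for a manual benchmark run.
    """
    for config in LADDER:
        if measure_avg_fps(config) >= target_fps:
            return config
    return None  # no rung reaches the target on this hardware
```

The greedy top-down order means the first passing rung is also the best-looking one the card can sustain, which matches the goal of finding "the best settings possible" rather than merely any playable configuration.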

All of the cards reached average framerates of 60 fps or higher using the above settings, but note that the RX 580 and GTX 1060 technically aren't running 4K anymore. The 75 percent resolution scaling means those cards are rendering at 2880x1620 and upscaling that to 4K, because it's the only way they'll get above 60 fps. Using the above settings, here's what 4K performance looks like:
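The 2880x1620 figure follows from applying the 75 percent scale to each axis, which means far fewer pixels than the percentage suggests. A minimal sketch of that arithmetic, assuming per-axis scaling (consistent with the resolution quoted above); the function names are hypothetical, and 2560x1440 is included for comparison.

```python
def scaled_resolution(width: int, height: int, scale_percent: int) -> tuple[int, int]:
    """Internal render size when resolution scaling applies per axis."""
    return width * scale_percent // 100, height * scale_percent // 100


def pixel_fraction(width: int, height: int, ref_width: int = 3840, ref_height: int = 2160) -> float:
    """Share of native 4K's pixel count that actually gets rendered."""
    return (width * height) / (ref_width * ref_height)


render_w, render_h = scaled_resolution(3840, 2160, 75)
print(f"75% scaling renders {render_w}x{render_h}, "
      f"{pixel_fraction(render_w, render_h):.2%} of 4K's pixels")
print(f"2560x1440 is {pixel_fraction(2560, 1440):.2%} of 4K's pixels")
```

This prints 2880x1620 at 56.25 percent of 4K's pixels, and 44.44 percent for 2560x1440: a 75 percent scale on each axis cuts the pixel workload by almost half.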

Note: GPUs were not tested at identical settings—do not compare GPUs without reading the text explanations!

As you can see, my quest for 4K 60 fps gaming in Destiny 2 is mostly a success, though the need for 4K gaming is still debatable. I'd much rather have a 2560x1440 144Hz display than a 4K 60Hz display, and a big benefit is that the lower resolution is far less taxing: in most cases, performance is nearly double what you get at 4K. I also generally prefer higher quality settings at 1440p over the reduced quality settings some of the cards need to reach 60 fps at 4K.

One problem that remains is AMD's minimum fps: all of the AMD GPUs are still dipping below 40 fps on a fairly regular basis. I'll get into more of the details with the full performance analysis, but there's a lot of noticeable stuttering on the AMD GPUs right now. Several of the Nvidia cards don't quite maintain 60 fps either, though a few more tweaks could get them there.

Initial thoughts on Destiny 2 performance

With the open beta running a couple of months before the official launch date, we can't draw any firm conclusions about Destiny 2's final performance. Nvidia cards are doing substantially better than AMD cards right now, but the gap could narrow as Bungie further optimizes the game. Part of the purpose of an open beta is to gather more data about the game's performance and track down additional bugs. Hopefully between game optimizations and driver tuning, AMD's GPUs will do better come October.

So far, though, Bungie has delivered on its big promise to take the PC seriously. The studio talked up the PC version quite a bit in the past few months, and since we'll be waiting nearly two months after the console release to play it, it's a huge relief to see it in such great shape. It shows signs of becoming the definitive version of the game, and it even looks nice and runs well on some older, lower-end rigs without sacrificing too much visual quality.

But if you have the graphics horsepower to run it at 4K and maximum quality, Destiny 2 is looking fantastic on PC. Cloth ripples in the wind, there are tons of pyrotechnics going off during battles, and outside of the initial level loading screen, you can traverse the large maps without any stalls. I'm not much of a multiplayer gamer, though the co-op mission was good and I've played through that several times now while looking for good areas to benchmark.

If you're curious about the actual benchmark sequence, I used the first part of the single-player mission, where you start in an area with lots of fire and debris. I'm not sure if the single-player missions are as taxing as the multiplayer stuff will end up being, but the first minute or so of the mission had framerates that were consistently lower than most of the multiplayer areas I checked. Plus, there's the huge benefit of not having to queue up and wait for other players, not to mention the risk of being shot (repeatedly) while running benchmarks.

I'll be back later this week with a look at additional GPUs, many test settings besides 4K, and a bunch of CPUs thrown in for good measure. There were rumblings about Destiny 2 scaling well with multi-core CPUs, being able to 'use all the cores' of Intel's Core i9. That's something I look forward to seeing, though I suspect faster CPUs will mostly be of benefit at lower resolutions—at 4K, GPUs are almost always the overwhelming performance bottleneck.
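The intuition that faster CPUs matter less at 4K can be sketched with a deliberately simplified model: each frame takes as long as the slower of the CPU and GPU work, GPU time scales linearly with pixel count, and CPU time doesn't depend on resolution. Those are simplifying assumptions rather than a description of Destiny 2's engine, `modeled_fps` is a hypothetical name, and the millisecond inputs below are made-up illustration values, not measurements from this testing.

```python
def modeled_fps(cpu_ms: float, gpu_ms_1080p: float, width: int, height: int) -> float:
    """Toy model: frame time is the slower of CPU and GPU work per frame.

    Assumes GPU time scales linearly with pixel count (relative to 1920x1080)
    and CPU time is independent of resolution; real games are messier.
    """
    gpu_ms = gpu_ms_1080p * (width * height) / (1920 * 1080)
    return 1000.0 / max(cpu_ms, gpu_ms)


# Hypothetical inputs: a slower CPU (5 ms/frame) vs a faster one (4 ms/frame),
# both paired with a GPU that needs 4 ms per frame at 1080p.
for cpu_ms in (5.0, 4.0):
    print(f"CPU {cpu_ms} ms: 1080p {modeled_fps(cpu_ms, 4.0, 1920, 1080)} fps, "
          f"4K {modeled_fps(cpu_ms, 4.0, 3840, 2160)} fps")
```

With these inputs, the faster CPU lifts the modeled 1080p result from 200 to 250 fps, while both CPUs land at 62.5 fps at 4K because the GPU's 16 ms per frame dominates.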

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.